Design of Robust Sensing Matrix for UAV Images Encryption and Compression

Abstract

1. Introduction

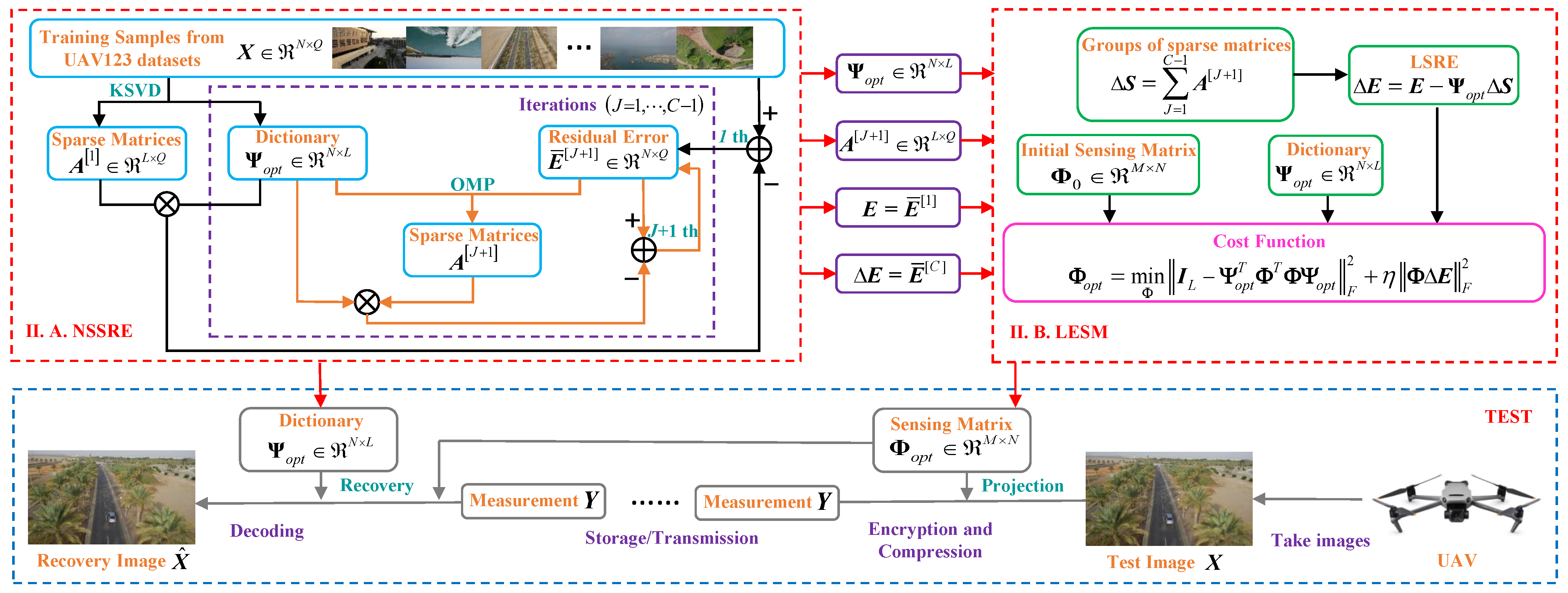

- A new structure, denoted NSSRE, is proposed in which the sparse representation error (SRE) is reduced by eliminating groups of sparse representations. The SRE is thereby reduced to the LSRE, so that the excess error projected into the measurements is effectively controlled.

- By using the LSRE to minimize the projected energy, a more robust sensing matrix, named LESM, is designed to build an optimal compressed sensing (CS) system.

- A new CS framework based on the robust sensing matrix LESM is established to compress and encrypt UAV images, improving both security and transmission speed. Because it accounts for the SRE in the images, the framework achieves more accurate image recovery.
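
To make the role of the SRE concrete, the sketch below simulates the standard CS model in which each signal is only approximately sparse in a dictionary, X = ΨΘ + E, and is measured as Y = ΦX. The residual E plays the role of the SRE, and its projection ΦE adds directly to the measurements, which is why a sensing matrix that keeps the projected energy ‖ΦE‖_F² small is more robust. The dimensions, the random Gaussian Φ and Ψ, the synthetic E, and the helper names `measure` and `projected_sre_energy` are illustrative assumptions, not the paper's settings.

```python
import numpy as np


def measure(Phi, Psi, Theta, E):
    """Measurements Y = Phi X of signals X = Psi Theta + E.

    Psi: N x L dictionary, Theta: L x P sparse coefficients,
    E: N x P sparse representation error (SRE), Phi: M x N sensing matrix.
    """
    X = Psi @ Theta + E
    return Phi @ X


def projected_sre_energy(Phi, E):
    """Energy of the SRE after projection into the measurements, ||Phi E||_F^2."""
    return float(np.linalg.norm(Phi @ E, "fro") ** 2)


rng = np.random.default_rng(0)
N, L, M, P, K = 64, 100, 20, 200, 4      # hypothetical sizes; K = sparsity level
Psi = rng.standard_normal((N, L))
Psi /= np.linalg.norm(Psi, axis=0)       # unit-norm atoms
Theta = np.zeros((L, P))
for p in range(P):
    support = rng.choice(L, K, replace=False)
    Theta[support, p] = rng.standard_normal(K)
E = 0.05 * rng.standard_normal((N, P))   # synthetic stand-in for the SRE
Phi = rng.standard_normal((M, N)) / np.sqrt(M)

Y = measure(Phi, Psi, Theta, E)
# Y splits into the projected sparse part plus the projected SRE Phi E,
# so any SRE energy that Phi does not suppress lands in the measurements.
assert np.allclose(Y, Phi @ Psi @ Theta + Phi @ E)
print(Y.shape, projected_sre_energy(Phi, E))
```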

2. Preliminaries

3. Proposed LESM Sensing Matrix Design

3.1. The NSSRE Structure on SRE

3.2. The LESM Algorithm for Sensing Matrix

3.3. The Algorithm of Optimal CS System

| Algorithm 1 The proposed CS system |
|---|
| Stage 1: Dictionary learning and SRE reduction (NSSRE structure, shown in Figure 1, top left): |
| Input: the training sample sequence , the initial DCT dictionary [39] . |
| Initialization: the parameter is initialized as a zero matrix, and set . |
| Start: |
| Step 1: Obtain the sparse representation pair from the training samples using the K-SVD algorithm [34]. |
| Step 2: Calculate the SRE ; the residual is initialized as . |
| Repeat Steps 3–5 until : |
| Step 3: Calculate by applying the OMP algorithm [16] to the residual error with the optimal dictionary . |
| Step 4: Calculate the residual error . |
| Step 5: Calculate the parameter . |
| Output: the optimal dictionary , the LSRE , and the groups of sparse matrices. |
| Stage 2: Sensing matrix design (LESM algorithm, shown in Figure 1, top right): |
| Input: the initial sensing matrix , the optimal dictionary , the LSRE . |
| Step 1: Construct the optimization model (18) and obtain the sensing matrix given by (30). |
| Output: the optimal dictionary and the robust sensing matrix . |
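
Stage 1 of Algorithm 1 reduces the SRE by repeatedly sparse-coding whatever error is left over. The sketch below shows that loop under stated assumptions: the dictionary is taken as already learned (in the paper by K-SVD [34]; here a random unit-norm dictionary and a first OMP pass stand in for it), and the stopping rule, which is elided above, is assumed to be either a fixed number of groups `n_groups` or a Frobenius-norm tolerance `tol`. The names `omp`, `omp_matrix` and `nssre` are hypothetical, and the OMP routine is a plain textbook implementation of [16], not the authors' code.

```python
import numpy as np


def omp(D, x, k):
    """Orthogonal matching pursuit: a k-sparse code of vector x in dictionary D.

    Assumes the columns (atoms) of D have unit norm.
    """
    residual = x.astype(float).copy()
    support = []
    sol = np.zeros(0)
    for _ in range(k):
        # Select the atom most correlated with the current residual.
        j = int(np.argmax(np.abs(D.T @ residual)))
        if j not in support:
            support.append(j)
        # Least-squares fit on the selected atoms, then refresh the residual.
        sol, *_ = np.linalg.lstsq(D[:, support], x, rcond=None)
        residual = x - D[:, support] @ sol
    coef = np.zeros(D.shape[1])
    coef[support] = sol
    return coef


def omp_matrix(D, X, k):
    """Apply OMP to every column of X."""
    return np.column_stack([omp(D, X[:, p], k) for p in range(X.shape[1])])


def nssre(D, X, Theta0, k, n_groups=3, tol=1e-6):
    """Hypothetical NSSRE-style loop (not the authors' code).

    Starts from the SRE E = X - D Theta0 and repeatedly eliminates one more
    group of sparse representations from it. Because each OMP pass ends with
    a least-squares fit, the norm of every residual column can only shrink.
    Returns the reduced error and the list of coefficient groups.
    """
    E = X - D @ Theta0
    groups = [Theta0]
    for _ in range(n_groups):
        if np.linalg.norm(E, "fro") < tol:   # assumed stopping rule
            break
        Theta_k = omp_matrix(D, E, k)        # sparse-code the current residual
        E = E - D @ Theta_k                  # residual after this group
        groups.append(Theta_k)
    return E, groups


rng = np.random.default_rng(1)
D = rng.standard_normal((16, 32))
D /= np.linalg.norm(D, axis=0)
X = rng.standard_normal((16, 50))
Theta0 = omp_matrix(D, X, 3)                 # stands in for the K-SVD codes
E_low, groups = nssre(D, X, Theta0, k=3, n_groups=2)
print(np.linalg.norm(X - D @ Theta0), np.linalg.norm(E_low))
```

The signals are still represented exactly by the accumulated groups plus the remaining error, X = D(Θ₀ + Θ₁ + …) + E, so the loop trades a larger number of active atoms for a smaller residual error.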

4. Experiment

- After the sensing matrix design, the cost function, measurement error and averaged mutual coherence all remain low. In particular, the proposed algorithm yields the smallest measurement error, which means that little of the projected error remains in the measurements.

- The mutual coherence for real images is usually large. This is consistent with the theoretical reason for using the averaged mutual coherence, rather than the mutual coherence, as the criterion for designing the sensing matrix.

- By taking the SRE into account, a more robust sensing matrix can be designed, which yields better recovery performance for algorithm , algorithm and algorithm . These experimental results agree with the theoretical analysis above.

- As predicted by the preceding theoretical analysis, the PSNR of algorithm is lower than that of algorithm . However, algorithm has lower computational complexity.

- Algorithms and both account for the influence of the SRE, which is calculated directly from the training samples. However, algorithm obtains the SRE by eliminating one group of sparse representations, whereas algorithm obtains the LSRE by eliminating several groups. The experimental results are listed in Table 2, where the PSNRs and SSIMs of algorithm are higher than those of algorithm . Algorithm reduces the SRE so that it comes closer to the true error.

- In terms of PSNR and SSIM, algorithm achieves the best recovery performance for every image category in the UAV123 dataset. Its SREs are also the lowest.

- For both compression ratios, with and , algorithm achieves the best recovery performance. For most of the sensing matrices, and especially for algorithm , the PSNRs, SREs and SSIMs at the higher compression ratio () are better than those at the lower one.

- Algorithm achieves the best recovery performance at every noise level. Moreover, the more noise an image contains, the larger the improvement in the recovery results. Based on the “average” statistics over the eleven images, the PSNR gain of the best algorithm over the second-best one is 0.44 dB at SNR = 20 dB, 0.25 dB at SNR = 30 dB and 0.21 dB without noise . The corresponding SSIM gains of the best algorithm over the second-best one are at SNR = 20 dB, at SNR = 30 dB and without noise .

- The SRE reduction ratio of the best algorithm relative to the second-best one is at SNR = 20 dB, at SNR = 30 dB and without noise . In addition, the more noise there is, the larger the SREs of the recovery results become.

- For images with heavier noise, the performance of algorithm is similar to that of algorithm and better than that of algorithm . Hence, algorithm is less robust to heavy noise.
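
The quantities compared in the first bullets above (cost function, measurement error, mutual coherence and averaged mutual coherence) can be illustrated with a generic robust design. The sketch below minimizes f(Φ) = ‖I − ΨᵀΦᵀΦΨ‖_F² + λ‖ΦE‖_F² by gradient descent with backtracking. This objective is similar in spirit to the robust designs in the literature the paper builds on (e.g., [28]), but it is a stand-in, not the paper's model (18) or its closed-form solution (30). The averaged mutual coherence is computed as the mean of the off-diagonal normalized Gram entries that reach the Welch bound, which is one common definition and an assumption here. The value of λ, the step size, the iteration count, the synthetic E and all function names are hypothetical.

```python
import numpy as np


def cost(Phi, Psi, E, lam):
    """Generic robust cost ||I - Psi^T Phi^T Phi Psi||_F^2 + lam ||Phi E||_F^2."""
    A = Phi @ Psi
    R = np.eye(A.shape[1]) - A.T @ A
    return float(np.sum(R ** 2) + lam * np.sum((Phi @ E) ** 2))


def grad(Phi, Psi, E, lam):
    """Gradient of cost() with respect to Phi."""
    A = Phi @ Psi
    R = np.eye(A.shape[1]) - A.T @ A              # symmetric residual I - G
    return -4.0 * A @ R @ Psi.T + 2.0 * lam * Phi @ E @ E.T


def design_sensing_matrix(Phi0, Psi, E, lam=0.5, iters=200, step=1e-2):
    """Gradient descent with backtracking; only cost-decreasing steps are kept."""
    Phi, f = Phi0.copy(), cost(Phi0, Psi, E, lam)
    for _ in range(iters):
        g = grad(Phi, Psi, E, lam)
        t = step
        while t > 1e-12:
            cand = Phi - t * g
            f_cand = cost(cand, Psi, E, lam)
            if f_cand < f:
                Phi, f, step = cand, f_cand, 2.0 * t   # accept; try a larger step next
                break
            t /= 2.0
        else:
            break                                      # no decrease found: stop
    return Phi


def coherence_stats(Phi, Psi):
    """Mutual coherence and averaged mutual coherence of Phi Psi.

    Averaged coherence here is the mean of the off-diagonal |g_ij| that reach
    the Welch bound (an assumed definition; requires more atoms than rows).
    """
    A = Phi @ Psi
    A = A / np.linalg.norm(A, axis=0)                  # unit-norm columns
    M, L = A.shape
    G = np.abs(A.T @ A)
    off = G[~np.eye(L, dtype=bool)]
    welch = np.sqrt((L - M) / (M * (L - 1)))
    big = off[off >= welch]
    mu_av = float(big.mean()) if big.size else 0.0
    return float(off.max()), mu_av


rng = np.random.default_rng(2)
N, L, M, P = 32, 48, 12, 100                  # hypothetical sizes
Psi = rng.standard_normal((N, L))
Psi /= np.linalg.norm(Psi, axis=0)
E = 0.1 * rng.standard_normal((N, P))         # synthetic stand-in for the (L)SRE
Phi0 = rng.standard_normal((M, N)) / np.sqrt(M)
Phi = design_sensing_matrix(Phi0, Psi, E)
for name, Phi_i in (("random", Phi0), ("designed", Phi)):
    mu, mu_av = coherence_stats(Phi_i, Psi)
    meas_err = float(np.sum((Phi_i @ E) ** 2))  # measurement error ||Phi E||_F^2
    print(name, cost(Phi_i, Psi, E, 0.5), meas_err, mu, mu_av)
```

The λ term is what makes the design "robust": without it the matrix is tuned only for coherence, and any SRE that it amplifies passes straight into the measurements.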

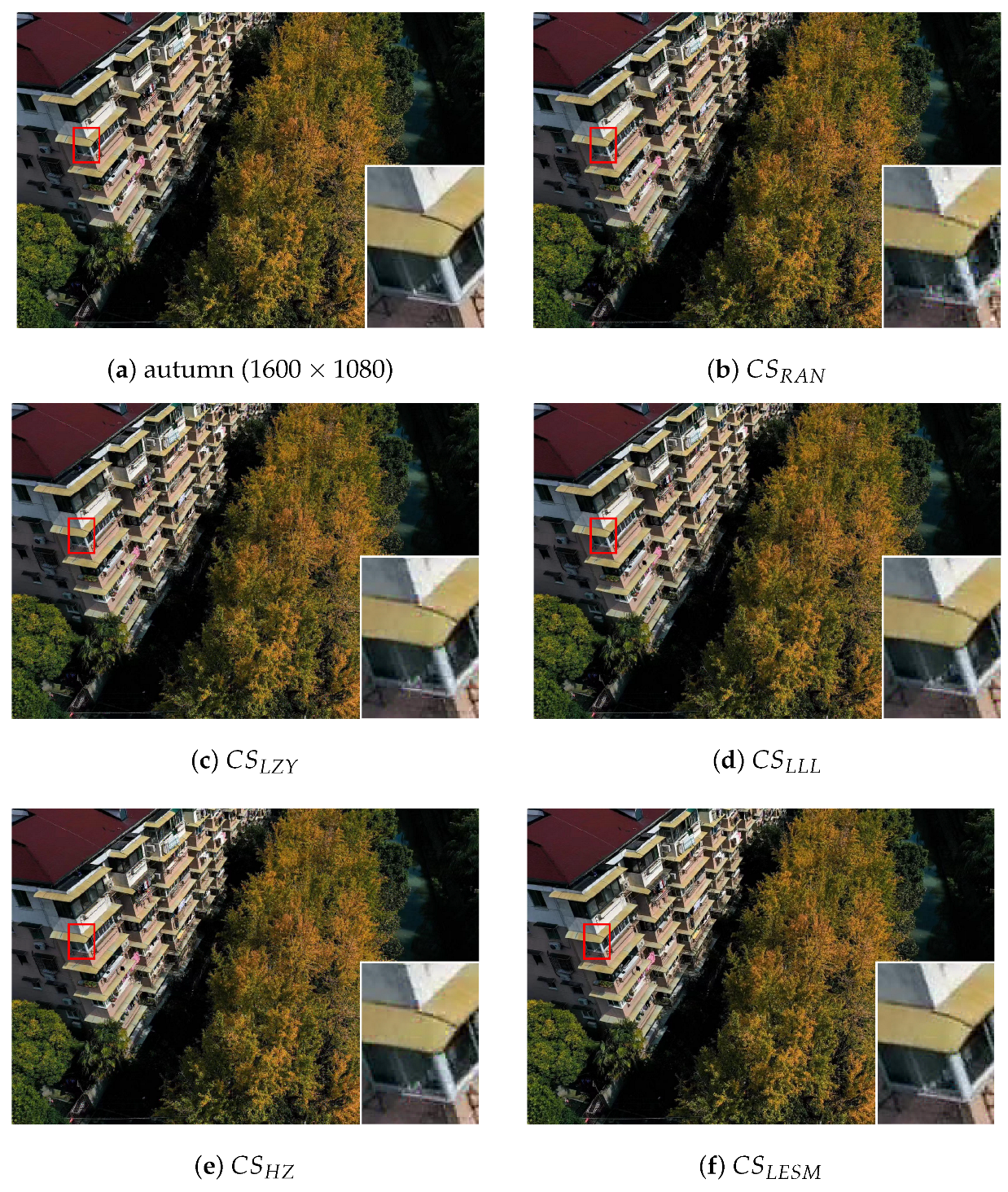

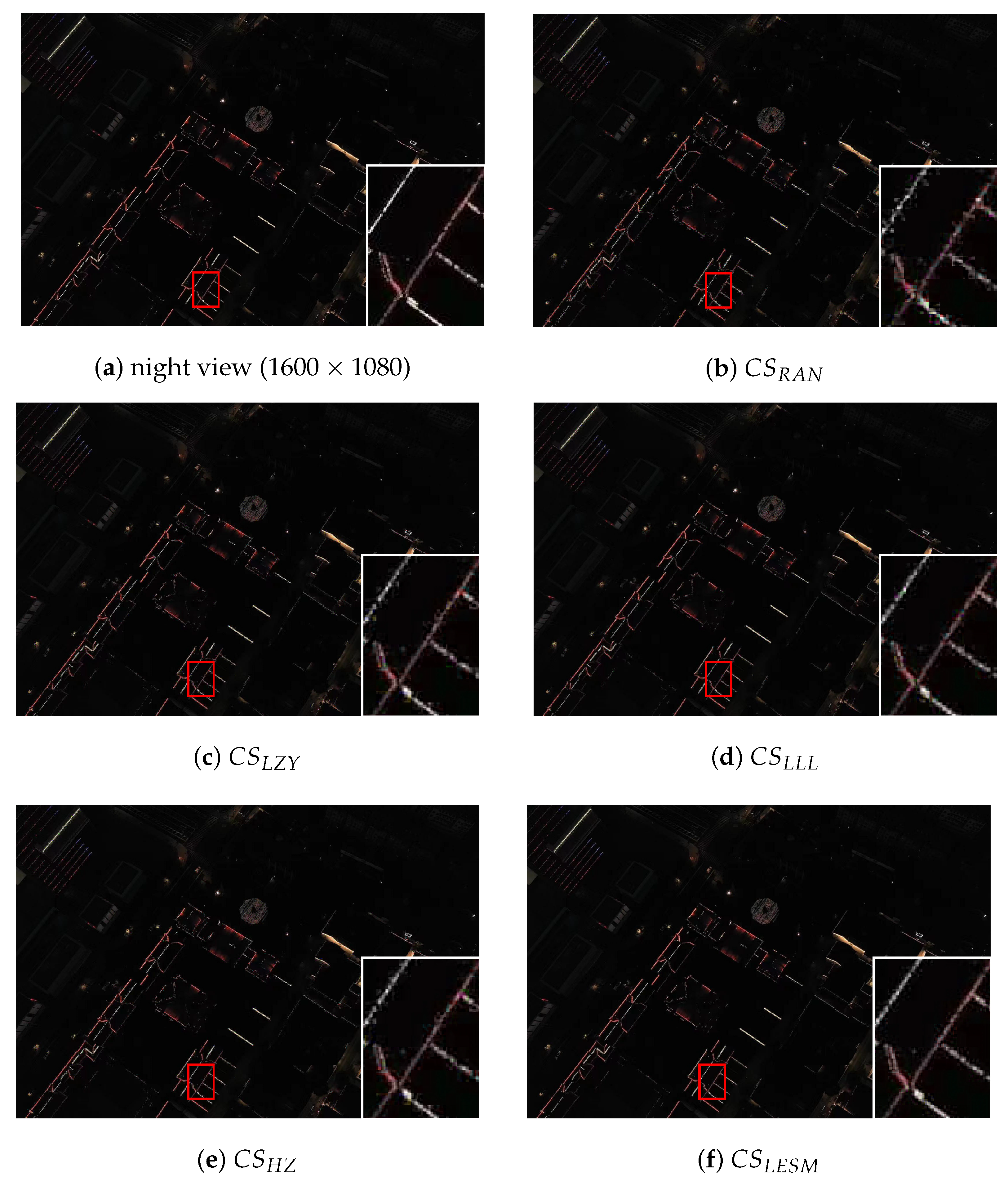

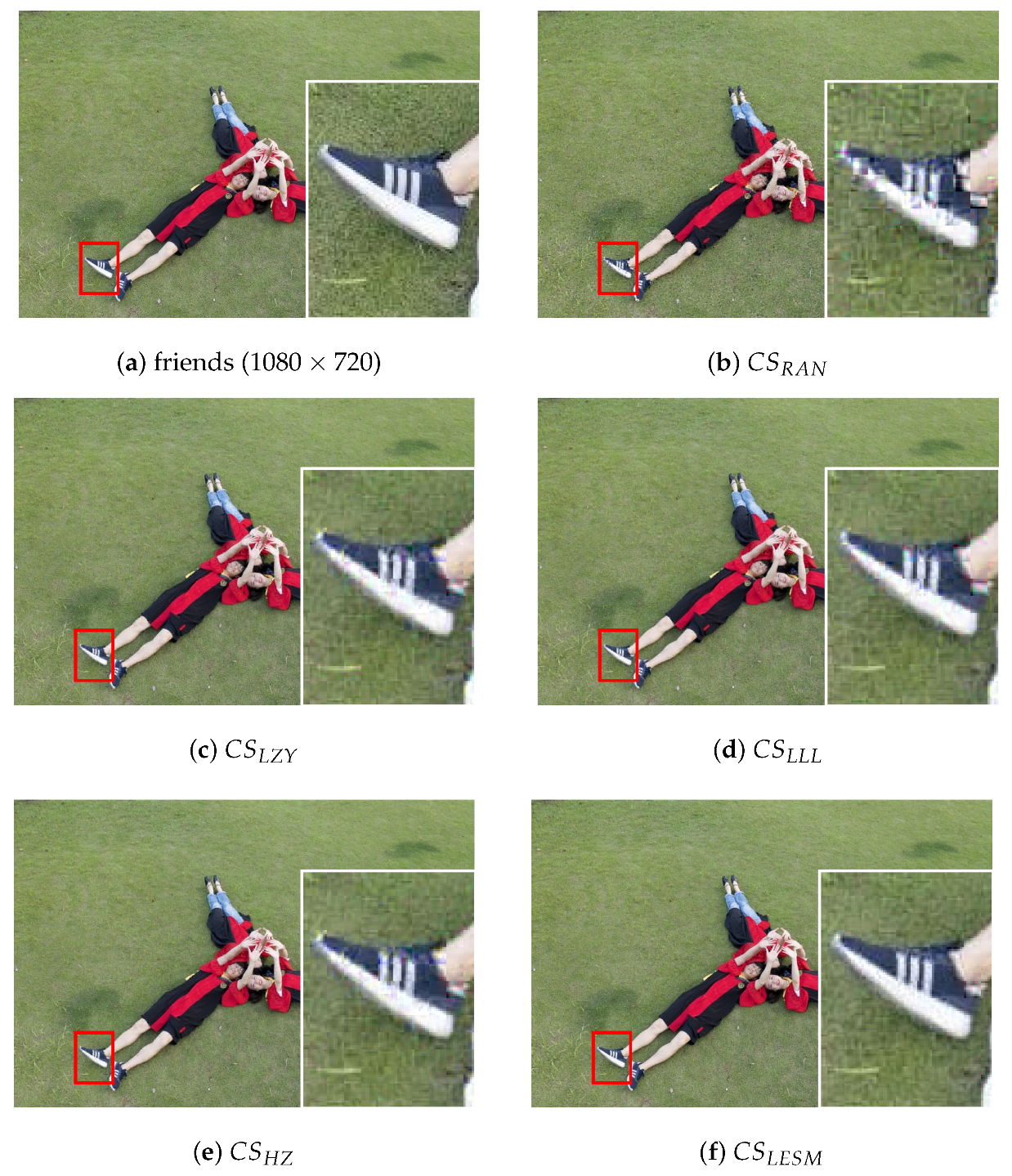

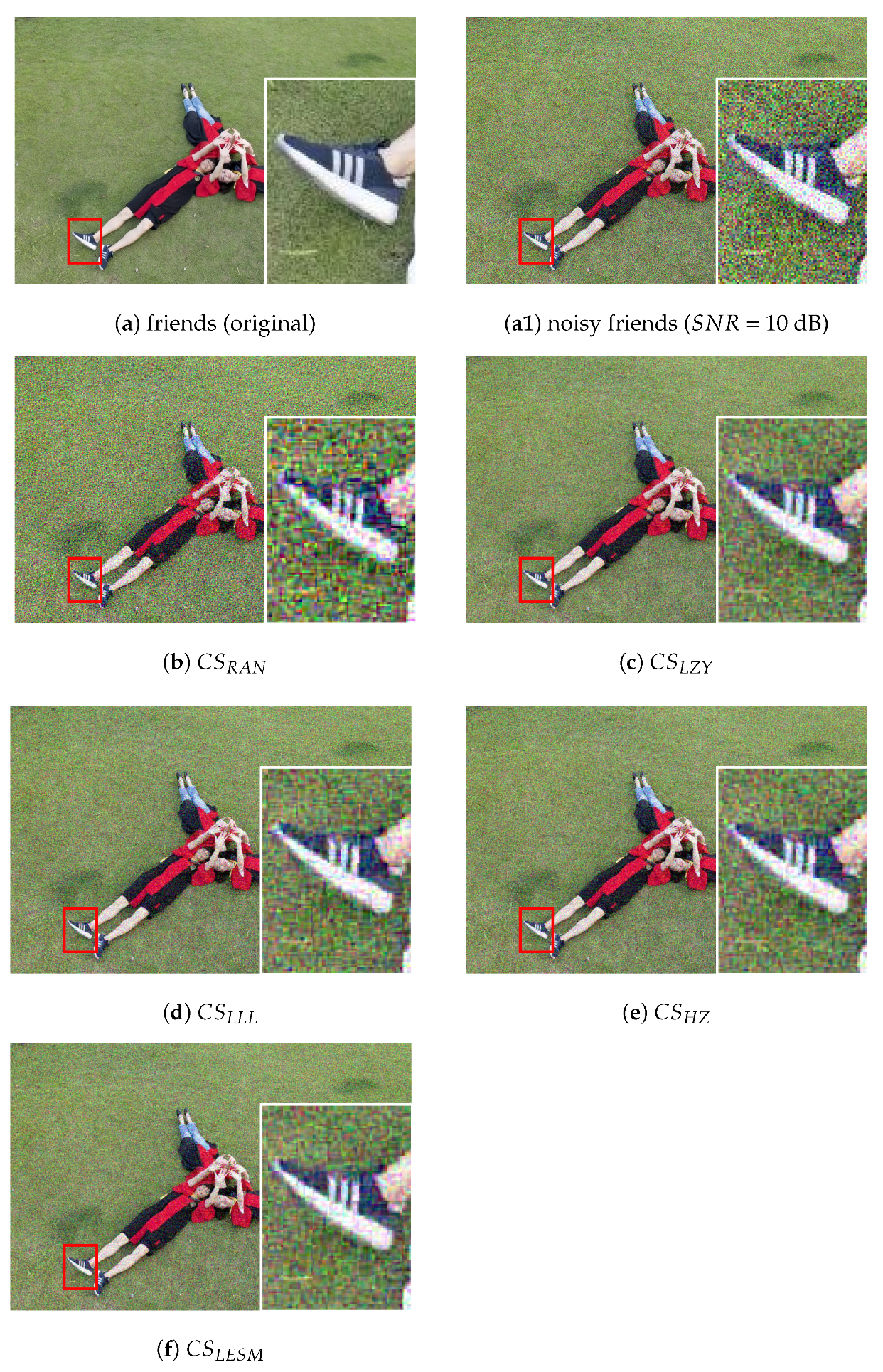

- Without extra noise, the PSNRs and SSIMs in Table 4 and the visual quality in Figure 3, Figure 4 and Figure 5 show that the sensing matrix algorithms , , and , which take the SRE into account, achieve better recovery performance than , and . Compared with algorithm , the recovery accuracy of is worse than . The proposed algorithm obtains the best recovery results in all three scenarios. All the experimental results are consistent with the theoretical analysis.

- With extra noise added (SNR = 10 dB), the PSNRs and SSIMs in Table 4 and the visual quality in Figure 6, Figure 7 and Figure 8 show that the sensing matrix algorithms , , and achieve similar recovery performance. The proposed algorithm obtains the best recovery results in all three scenarios.

- Compared with the original images in Figures 3a, 4a, 5a, 6a, 7a and 8a and with the other recovery results in Figures 3b–e, 4b–e, 5b–e, 6b–e, 7b–e and 8b–e, the proposed sensing matrix recovers the finest details. For instance, the corner of the building in “Autumn”, the colorful light lines in “Night View” and the logo on the shoes in “Friends” are the clearest.

- The “Night View” images are inherently noisy because of poor lighting, so the recovery results are similar with or without extra noise. This noisier scene indicates that recovery accuracy can be improved by reducing the sparse representation error.

- With the proposed sensing matrix, experiments on both the UAV123 dataset and our own images show superior recovery accuracy. The more noise an image contains, the more pronounced the improvement in the recovery results.
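
The comparisons above are reported as PSNR at given noise levels (SNR = 20, 30 or 10 dB, or no added noise). The sketch below computes both quantities, assuming 8-bit images (peak value 255) and additive white Gaussian noise scaled to the target SNR relative to the signal's mean power. Where the noise is injected (into the images or into the measurements) is not specified in this section, and `psnr` and `add_awgn` are hypothetical helper names. SSIM is omitted; a library routine such as scikit-image's `skimage.metrics.structural_similarity` would normally be used for it.

```python
import numpy as np


def psnr(ref, rec, peak=255.0):
    """Peak signal-to-noise ratio in dB: 10 log10(peak^2 / MSE)."""
    mse = np.mean((np.asarray(ref, float) - np.asarray(rec, float)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(peak ** 2 / mse))


def add_awgn(signal, snr_db, rng=None):
    """Add white Gaussian noise at a target SNR in dB.

    The noise variance is mean(signal^2) / 10^(snr_db / 10); snr_db = inf
    returns an unchanged copy (the 'no noise' case).
    """
    signal = np.asarray(signal, float)
    if np.isinf(snr_db):
        return signal.copy()
    rng = np.random.default_rng() if rng is None else rng
    noise_var = np.mean(signal ** 2) / 10.0 ** (snr_db / 10.0)
    return signal + rng.normal(0.0, np.sqrt(noise_var), signal.shape)


ref = np.zeros((4, 4))
print(psnr(ref, ref + 1.0))   # MSE = 1, so PSNR = 20 log10(255), about 48.13 dB
clean = np.full((64, 64), 128.0)
noisy = add_awgn(clean, snr_db=20, rng=np.random.default_rng(3))
print(psnr(clean, noisy))
```

Because PSNR is a log-ratio of mean squared errors, a 0.44 dB gain for a single image (the size of the average gain reported at the 20 dB noise level) means the MSE is 10^(−0.044) ≈ 0.90 times that of the competing result, assuming both use the same peak value.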

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Xie, S.; Chen, Q.; Yang, Q. Adaptive Fuzzy Predefined-Time Dynamic Surface Control for Attitude Tracking of Spacecraft with State Constraints. IEEE Trans. Fuzzy Syst. 2022, 1–13.
2. Xie, S.; Chen, Q.; He, X. Predefined-Time Approximation-Free Attitude Constraint Control of Rigid Spacecraft. IEEE Trans. Aerosp. Electron. Syst. 2022, 1–11.
3. Rangappa, N.; Prasad, Y.R.V.; Dubey, S.R. LEDNet: Deep Learning-Based Ground Sensor Data Monitoring System. IEEE Sens. J. 2022, 22, 842–850.
4. Tao, M.; Chen, Q.; He, X.; Xie, S. Fixed-Time Filtered Adaptive Parameter Estimation and Attitude Control for Quadrotor UAVs. IEEE Trans. Aerosp. Electron. Syst. 2022, 58, 4135–4146.
5. Chen, Q.; Tao, M.; He, X.; Tao, L. Fuzzy Adaptive Nonsingular Fixed-Time Attitude Tracking Control of Quadrotor UAVs. IEEE Trans. Aerosp. Electron. Syst. 2021, 57, 2864–2877.
6. Cambareri, V.; Mangia, M.; Pareschi, F.; Rovatti, R.; Setti, G. On Known-Plaintext Attacks to a Compressed Sensing-Based Encryption: A Quantitative Analysis. IEEE Trans. Inf. Forensics Secur. 2015, 10, 2182–2195.
7. Xu, B.; Xie, Z.; Zhang, Z.; Han, T.; Liu, H.; Ju, M.; Liu, X. Joint Compression and Encryption of Distributed Sources Based on Wavelet Transform and Semi-Tensor Product Compressed Sensing. IEEE Sens. J. 2022, 22, 16451–16463.
8. Li, L.; Fang, Y.; Liu, L.; Peng, H.; Kurths, J.; Yang, Y. Overview of Compressed Sensing: Sensing Model, Reconstruction Algorithm, and Its Applications. Appl. Sci. 2020, 10, 5909.
9. Candès, E.J.; Romberg, J.; Tao, T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 2006, 52, 489–509.
10. Zhu, Z.; Li, G.; Ding, J.; Li, Q.; He, X. On Collaborative Compressive Sensing Systems: The Framework, Design and Algorithm. SIAM J. Imaging Sci. 2017, 11, 1717–1758.
11. Jalali, S. Toward Theoretically Founded Learning-Based Compressed Sensing. IEEE Trans. Inf. Theory 2020, 66, 387–400.
12. Chen, Z.; Guo, W.; Feng, Y.; Li, Y.; Zhao, C.; Ren, Y.; Shao, L. Deep-Learned Regularization and Proximal Operator for Image Compressive Sensing. IEEE Trans. Image Process. 2021, 30, 7112–7126.
13. Sarangi, P.; Pal, P. Measurement Matrix Design for Sample-Efficient Binary Compressed Sensing. IEEE Signal Process. Lett. 2022, 29, 1307–1311.
14. Candès, E.J.; Wakin, M.B. An introduction to compressive sampling. IEEE Signal Process. Mag. 2008, 25, 21–30.
15. Bai, H.; Li, G.; Li, S.; Li, Q.; Jiang, Q.; Chang, L. Alternating Optimization of Sensing Matrix and Sparsifying Dictionary for Compressed Sensing. IEEE Trans. Signal Process. 2015, 63, 1581–1594.
16. Pati, Y.C.; Rezaiifar, R.; Krishnaprasad, P.S. Orthogonal matching pursuit: Recursive function approximation with applications to wavelet decomposition. In Proceedings of the 27th Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 1–3 November 1993; Volume 1, pp. 40–44.
17. Candès, E.J.; Tao, T. Decoding by linear programming. IEEE Trans. Inf. Theory 2005, 51, 4203–4215.
18. Wainwright, M.J. Sharp Thresholds for High-Dimensional and Noisy Sparsity Recovery Using ℓ1-Constrained Quadratic Programming (Lasso). IEEE Trans. Inf. Theory 2009, 55, 2183–2202.
19. Donoho, D.L.; Elad, M. Optimally sparse representation in general (nonorthogonal) dictionaries via ℓ1 minimization. Proc. Natl. Acad. Sci. USA 2003, 100, 2197–2202.
20. Li, B.; Zhang, L.; Kirubarajan, T.; Rajan, S. Projection matrix design using prior information in compressive sensing. Signal Process. 2017, 135, 36–47.
21. Strohmer, T.; Heath, R.W., Jr. Grassmannian frames with applications to coding and communication. Appl. Comput. Harmon. Anal. 2003, 14, 257–275.
22. Elad, M. Optimized Projections for Compressed Sensing. IEEE Trans. Signal Process. 2007, 55, 5695–5702.
23. Duarte-Carvajalino, J.M.; Sapiro, G. Learning to sense sparse signals: Simultaneous sensing matrix and sparsifying dictionary optimization. IEEE Trans. Image Process. 2009, 18, 1395–1408.
24. Li, G.; Zhu, Z.; Yang, D.; Chang, L.; Bai, H. On Projection Matrix Optimization for Compressive Sensing Systems. IEEE Trans. Signal Process. 2013, 61, 2887–2898.
25. Hong, T.; Bai, H.; Li, S.; Zhu, Z. An efficient algorithm for designing projection matrix in compressive sensing based on alternating optimization. Signal Process. 2016, 125, 9–20.
26. Bai, H.; Li, S.; He, X. Sensing Matrix Optimization Based on Equiangular Tight Frames with Consideration of Sparse Representation Error. IEEE Trans. Multimed. 2016, 18, 2040–2053.
27. Bai, H.; Hong, C.; Li, S.; Zhang, Y.D.; Li, X. Unit-norm tight frame-based sparse representation with application to speech inpainting. Digit. Signal Process. 2022, 123, 103426.
28. Li, G.; Li, X.; Li, S.; Bai, H.; Jiang, Q.; He, X. Designing robust sensing matrix for image compression. IEEE Trans. Image Process. 2015, 24, 5389–5400.
29. Li, G.; Zhu, Z.; Wu, X.; Hou, B. On joint optimization of sensing matrix and sparsifying dictionary for robust compressed sensing systems. Digit. Signal Process. 2018, 73, 62–71.
30. Hong, T.; Zhu, Z. An efficient method for robust projection matrix design. Signal Process. 2018, 143, 200–210.
31. Jiang, Q.; Li, S.; Chang, L.; He, X.; de Lamare, R.C. Exploiting prior knowledge in compressed sensing to design robust systems for endoscopy image recovery. J. Frankl. Inst. 2022, 359, 2710–2736.
32. Chen, Q.; Xie, S.; He, X. Neural-Network-Based Adaptive Singularity-Free Fixed-Time Attitude Tracking Control for Spacecrafts. IEEE Trans. Cybern. 2021, 51, 5032–5045.
33. Chen, Q.; Ye, Y.; Hu, Z.; Na, J.; Wang, S. Finite-Time Approximation-Free Attitude Control of Quadrotors: Theory and Experiments. IEEE Trans. Aerosp. Electron. Syst. 2021, 57, 1780–1792.
34. Aharon, M.; Elad, M.; Bruckstein, A. K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 2006, 54, 4311–4322.
35. Zhu, Z.; Wakin, M.B. Approximating Sampled Sinusoids and Multiband Signals Using Multiband Modulated DPSS Dictionaries. J. Fourier Anal. Appl. 2015, 23, 1263–1310.
36. Hong, T.; Zhu, Z. Online learning sensing matrix and sparsifying dictionary simultaneously for compressive sensing. Signal Process. 2018, 153, 188–196.
37. Blumensath, T.; Davies, M.E. Normalized Iterative Hard Thresholding: Guaranteed Stability and Performance. IEEE J. Sel. Top. Signal Process. 2010, 4, 298–309.
38. Jiang, Q.; Li, S.; Zhu, Z.; Bai, H.; He, X.; de Lamare, R.C. Design of Compressed Sensing System with Probability-Based Prior Information. IEEE Trans. Multimed. 2020, 22, 594–609.
39. Liu, H.; Yuan, H.; Liu, Q.; Hou, J.; Zeng, H.; Kwong, S. A Hybrid Compression Framework for Color Attributes of Static 3D Point Clouds. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 1564–1577.
40. Mueller, M.; Smith, N.; Ghanem, B. A Benchmark and Simulator for UAV Tracking. Available online: https://cemse.kaust.edu.sa/ivul/uav123 (accessed on 13 November 2022).
41. Gao, W.; Kwong, S.; Zhou, Y.; Yuan, H. SSIM-Based Game Theory Approach for Rate-Distortion Optimized Intra Frame CTU-Level Bit Allocation. IEEE Trans. Multimed. 2016, 18, 988–999.

| Algorithm | | | | |
|---|---|---|---|---|
| | 80.3562 | 43.9250 | 0.3571 | 0.9387 |
| | 82.2070 | 0.4838 | 0.3609 | 0.9443 |

| Image | PSNR (M = 20) | SRE (M = 20) | SSIM (M = 20) | PSNR (M = 24) | SRE (M = 24) | SSIM (M = 24) |
|---|---|---|---|---|---|---|
| Bike | | | | | | |
| Bird | | | | | | |
| Boat | | | | | | |
| Building | | | | | | |
| Car | | | | | | |
| Group | | | | | | |
| Person | | | | | | |
| Truck | | | | | | |
| Wakeboard | | | | | | |
| Game | | | | | | |
| UAV | | | | | | |
| Average | | | | | | |

| Image | PSNR (SNR = 20 dB) | SRE (SNR = 20 dB) | SSIM (SNR = 20 dB) | PSNR (SNR = 30 dB) | SRE (SNR = 30 dB) | SSIM (SNR = 30 dB) | PSNR (SNR = ∞) | SRE (SNR = ∞) | SSIM (SNR = ∞) |
|---|---|---|---|---|---|---|---|---|---|
| Bike | | | | | | | | | |
| Bird | | | | | | | | | |
| Boat | | | | | | | | | |
| Building | | | | | | | | | |
| Car | | | | | | | | | |
| Group | | | | | | | | | |
| Person | | | | | | | | | |
| Truck | | | | | | | | | |
| Wakeboard | | | | | | | | | |
| Game | | | | | | | | | |
| UAV | | | | | | | | | |
| Average | | | | | | | | | |

| Image | PSNR (SNR = ∞) | SRE (SNR = ∞) | SSIM (SNR = ∞) | PSNR (SNR = 10 dB) | SRE (SNR = 10 dB) | SSIM (SNR = 10 dB) |
|---|---|---|---|---|---|---|
| Autumn | | | | | | |
| Night View | | | | | | |
| Friends | | | | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jiang, Q.; Bai, H.; He, X. Design of Robust Sensing Matrix for UAV Images Encryption and Compression. Appl. Sci. 2023, 13, 1575. https://doi.org/10.3390/app13031575
