Abstract

Neurodegeneration and impaired neuronal transmission in the brain are at the root of Alzheimer’s disease (AD) and dementia. To date, no successful treatments for dementia or AD have been found, so preventive measures such as early diagnosis are essential. This research aimed to evaluate how accurately biomarkers of dementia can be identified in the Open Access Series of Imaging Studies (OASIS) database using effective machine learning methods. In most parts of the world, AD is the main cause of dementia. At advanced stages, patients can do almost nothing without assistance. The number of cases is increasing owing to population growth and the length of the diagnostic period. Diagnosis currently relies on two approaches: medical history and testing. The main challenge for dementia research is the imbalance of datasets and its impact on accuracy. We propose a system based on reinforcement learning and neural networks that generates samples for, and segments, imbalanced classes. By making a precise diagnosis across all four stages of dementia, the system produces high-resolution disease probability maps. It employs deep reinforcement learning to generate accurate and understandable representations of a person’s dementia risk. To avoid imbalance, classes should be evenly represented in the samples, yet the MRI image data show a significant class imbalance. The deep reinforcement system improved trial accuracy by 6%, precision by 9%, recall by 13%, and F-score by 9–10%, and it also improved diagnostic efficiency.

1. Introduction

Dementia refers to a collection of symptoms produced by brain abnormalities. Around 46.8 million people currently live with dementia worldwide, and this number is expected to rise to 131.5 million by 2050 [1,2]. Dementia takes numerous forms, the most prevalent of which is Alzheimer’s disease (AD). Although significant efforts have been made to decipher the mechanisms of dementia and to create better therapies, precise diagnosis of dementia remains a challenging task. Several computer-aided diagnosis (CAD) systems have been examined in an attempt to develop an effective method of detecting AD [3]. Such systems apply machine learning to imaging modalities including magnetic resonance imaging (MRI), functional MRI (fMRI), structural MRI (sMRI), and positron emission tomography (PET) [4,5]. Dementia associated with AD [6,7] is typically classified into the following stages:

- Mild Cognitive Impairment: Many people experience memory loss as they grow older, whereas others develop dementia.

- Mild Dementia: Individuals with mild dementia frequently experience cognitive impairments that disrupt their daily lives. Symptoms include forgetfulness, insecurity, personality changes, disorientation, and difficulty with everyday tasks.

- Moderate Dementia: The patient’s daily life becomes significantly more complicated, requiring additional attention and support. The symptoms resemble those of mild dementia but are more pronounced. Even combing one’s hair may require assistance. Patients may also show substantial personality changes, such as becoming paranoid or irritable for no apparent reason, and sleep disturbances are possible.

- Severe Dementia: Symptoms worsen further during this stage. Patients often lose the ability to communicate and need full-time care. Bladder function may be impaired, and even simple actions such as holding one’s head up and sitting down become difficult.

Deep learning [8,9] is a subset of artificial intelligence (AI) that uses multiple layers of non-linear processing units for tasks such as feature extraction and transformation. Each successive layer takes the output of the previous layer as its input [10]. Learning algorithms can be supervised (for example, classification) or unsupervised (for example, clustering). Deep learning frameworks are central to semantic video segmentation, image processing, object detection, and facial recognition, among other applications.
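As a minimal illustration of layer stacking, the sketch below feeds an input through dense layers in which each layer consumes the previous layer’s output. The layer sizes, the ReLU non-linearity, and the random weights are arbitrary assumptions chosen for illustration; this is not the network used in this study.

```python
import numpy as np


def relu(z: np.ndarray) -> np.ndarray:
    """Element-wise non-linearity applied after every layer."""
    return np.maximum(z, 0.0)


def forward(x: np.ndarray, layers: list) -> np.ndarray:
    """Pass x through (weights, bias) pairs; each layer takes the previous layer's output."""
    h = x
    for weights, bias in layers:
        h = relu(h @ weights + bias)
    return h


rng = np.random.default_rng(0)
sizes = [16, 8, 4]  # hypothetical: 16 input features -> 8 hidden units -> 4 outputs
layers = [(rng.normal(size=(m, n)), np.zeros(n)) for m, n in zip(sizes[:-1], sizes[1:])]
out = forward(rng.normal(size=(2, 16)), layers)  # a batch of 2 inputs
```

The output has one row per input and one column per unit of the last layer; adding depth only means appending another (weights, bias) pair to the list.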

Deep learning (DL) is a framework for learning multiple levels of representation from data: higher-level attributes are derived from lower-level features to construct a hierarchical representation, so DL depends primarily on representation learning. An image can be represented as a vector of intensity values for every pixel, or by features such as custom shapes and edge clusters, and some of these representations describe the data better than others. Instead of handcrafted features, deep learning techniques use hierarchical feature-extraction algorithms that learn the representations that best reflect the data. Rapid innovations in image analysis have aided the advancement of fast-changing technologies [11,12,13,14,15,16], and image processing has grown in prominence, particularly in the medical field. DL saves time and improves performance compared with previous methods: while conventional approaches can only process single-layer images, deep learning can process multi-layer images with outstanding performance. The most essential characteristic of deep learning is that it discovers by itself the features that would otherwise have to be specified manually, allowing it to process images in a single pass. This study aims to provide software for classifying dementia in MRI scans using deep learning [17,18,19].

1.1. Gap in Previous Work

Prior research found that the primary barrier to early dementia detection was class imbalance, which increased the false-positive rate and decreased precision. This is the main gap observed in previous research. Other causes of low precision include:

- Overlapping features, caused by overlapping pixels, which make it harder for classifiers to fit the data.

- Noisy features, which increase the error rate in early dementia detection.

Suppressing noisy features during feature extraction can therefore make early dementia detection more accurate.

1.2. Contribution of the Present Research

The goal of this study is to reduce class imbalance and the false-positive rate when detecting the early stages of dementia. Deep learning-based active reinforcement learning additionally emphasizes learning features and outlier samples. This emphasis improves the performance metrics of the proposed method and increases the detection of early dementia stages. In brief, this work makes the following contributions:

- I. Class imbalance is reduced through deep reinforcement learning and an iterative policy that balances the instances of each class.

- II. A convolutional neural network with deep reinforcement learning is used to improve segmentation.

- III. Structure-based learning is used to improve classification.

The main problem in medical imaging is the dataset: because MRI images are expensive to acquire, some classes contain few images, which causes class imbalance. Some researchers have addressed this problem with data augmentation and the synthetic minority oversampling technique (SMOTE), which add noise. Our main contribution is instead to use reinforcement learning with a deep convolutional network, which generates instances based on the learning process. The rest of this article is organized as follows: Section 2 reviews related work on dementia classification. Section 3 describes the proposed methodology in detail. Section 4 presents the experimental findings and discussion, and Section 5 draws conclusions.

2. Related Work

Deep learning has received much interest because of its potential for identifying Alzheimer’s disease. Numerous DL algorithms have recently been presented as AD diagnostic aids that support clinicians in making informed treatment decisions. This section reviews works directly related to this study.

De and Chowdhury [20] used 3D diffusion tensor imaging (DTI) to distinguish AD, cognitively normal (CN), early mild cognitive impairment (EMCI), and late mild cognitive impairment (LMCI) subjects for the first time in a direct four-class classification test. Individual VoxCNNs were trained on mean diffusivity (MD) values, EPI intensities, and fractional anisotropy (FA) values extracted from a 3D DTI scan volume. Nawaz et al. [21] proposed Alzheimer’s stage detection based on deep features, using early layers transferred from an AlexNet model to extract CNN deep features. They applied ML techniques such as k-nearest neighbors (KNN), random forest (RF), and support vector machines (SVM) to the extracted deep features. Their evaluation indicates that the deep-feature-based model outperforms handcrafted and deep learning approaches, with an accuracy of 99.21%. Mohammed et al. [22] evaluated multiple machine learning techniques for detecting dementia on the large, publicly available OASIS dataset. They evaluated AD detection using CNN models (ResNet-50 and AlexNet) and hybrid DL and ML techniques (ResNet-50+SVM and AlexNet+SVM). After handling missing data in the OASIS dataset, they used t-distributed stochastic neighbor embedding (t-SNE) to represent the high-dimensional data in a low-dimensional space and evaluated the ML algorithms comprehensively. The random forest method met or exceeded all of these metrics, with an overall accuracy of 94%. Ghosh et al. [23] demonstrated the applicability of DL and its accompanying techniques, which could have a far-reaching impact on the diagnosis of dementia stages and inform future treatments and image retrieval. In the study databases, MRI scans were categorized into four degrees of AD severity: no dementia, very mild dementia, mild dementia, and moderate dementia. Each patient’s dementia stage was classified into these four categories with a CNN, and the CNN-based model accurately diagnosed and predicted the different stages of AD. 
Abol Basher et al. [24] diagnosed AD from slice-wise volumetric features extracted from structural MRI of the right and left brain hemispheres. The method uses both CNN and DNN models, which were trained and tested on the volumetric features of the images. With this method, the researchers obtained weighted average classification accuracies of 94.02% for the right hemisphere and 94.82% for the left, and AUCs of 90.62% and 92.54%, respectively. Herzog and Magoulas [25] used transfer learning architectures for MRI image classification, with a base model followed by fully connected layers. To examine changes in the brain, they used segmented images of brain asymmetry, which was recently shown to be a sign of early-onset dementia, and built the diagnostic strategy on these data. Murugan et al. [26] used a CNN to build a framework for recognizing AD features in MRI scans. Using the four stages of dementia, the framework achieves high-quality disease probability mapping, from local brain anatomy to an understanding of individual AD risk. To maintain a balanced distribution of instances, each class must include the same number of samples, and class imbalance is a severe issue in the MRI image database. Patients’ dementia stages are detected with an MRI-based technique. Al-Shoukry et al. [27] reviewed recent research on AD and explored how DL can aid in the early diagnosis of the condition. Pan et al. [28] proposed two effective sampling procedures for improving data distributions. Adaptive-SMOTE improves on the SMOTE approach by selecting the risk and inner data groups of the minority class and generating new minority samples from the selected data. 
This is done to prevent the classification boundary from expanding and to preserve the distributional properties of the original data. By contrast, Gaussian Oversampling combines the Gaussian distribution with dimensionality reduction, which shortens the tail of the Gaussian distribution. Chen et al. [29] analyzed three modern architectures: VGG16, ResNet-152, and DenseNet-121. A total of 1315 participants were included. They used optimization techniques to train the neural network (NN) despite the limited data, which covered multiple dementia types. Their work yielded a computerized, standardized evaluation of a person’s dementia test result and severity level that exceeded both the reported state of the art and human accuracy, with a screening accuracy of 96.65% and score accuracies as high as 98.54%.
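Adaptive-SMOTE and Gaussian Oversampling both build on oversampling of the minority class. For orientation, the sketch below implements only the classic SMOTE interpolation step, in which a synthetic sample is placed on the segment between a minority sample and one of its k nearest minority neighbours. The neighbour count, the seed, and the function name are assumptions, and the sketch does not reproduce the adaptive group selection of Pan et al. [28].

```python
import numpy as np


def smote(minority: np.ndarray, n_new: int, k: int = 3, seed: int = 0) -> np.ndarray:
    """Classic SMOTE: interpolate between minority samples and their nearest minority neighbours."""
    rng = np.random.default_rng(seed)
    k = min(k, len(minority) - 1)                    # cannot have more neighbours than other samples
    # Pairwise Euclidean distances within the minority class.
    dists = np.linalg.norm(minority[:, None, :] - minority[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)                  # a sample is never its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]    # indices of the k nearest neighbours
    synthetic = np.empty((n_new, minority.shape[1]))
    for t in range(n_new):
        i = rng.integers(len(minority))              # random minority sample
        j = neighbours[i, rng.integers(k)]           # one of its k nearest neighbours
        gap = rng.random()                           # position along the segment, in [0, 1)
        synthetic[t] = minority[i] + gap * (minority[j] - minority[i])
    return synthetic


minority = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
new = smote(minority, n_new=5)
```

Because every synthetic point lies between two existing minority samples, SMOTE cannot leave the convex hull of the minority class; the adaptive variants described above change which samples are interpolated, not this basic step.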

Li et al. [30] analyzed MRI scans from 2146 patients (1,343 for verification and 803 for training). A deep learning algorithm was created and validated to predict the progression of MCI participants to Alzheimer’s disease in a time-to-event analysis setting.

Ucuzal et al. [31] employed deep learning to construct open-source software for the classification of dementia in MRI images. A framework for deep learning is used to develop a model capable of distinguishing between dementia patients and healthy individuals. The findings demonstrate that the proposed technique can identify individuals suspected of having dementia.

Tsang et al. [32] summarized the current state-of-the-art machine learning approaches for dementia in healthcare informatics. The review analyzes and evaluates current scientific methodologies to identify significant challenges and obstacles in handling vast quantities of health data. Although machine learning has shown promise in data analysis for dementia care, there have been few attempts to use advanced machine learning techniques to exploit unified heterogeneous data. Raza et al. [33] presented a novel ML-based method for identifying and monitoring AD-like diseases. DL analysis of MRI scans is used to diagnose AD-like disorders, together with an activity-monitoring framework that records participants’ everyday tasks using body-worn inertial sensors. The activity-monitoring system provides a basis for assisting patients with daily tasks and gauges their sensitivity based on their activity level. Kaka and Prasad [34] proposed a new supervised ML technique for diagnosing AD from MRI using the OASIS and ADNI datasets. They enhanced image contrast, applied fuzzy C-means clustering to segment tissues, extracted features from the segmented brain tissues, and used correlation-based feature selection (CFS) to reduce the dimensionality of the features. Table 1 summarizes important contributions to the literature on DL-based dementia detection.

Table 1.

Study of literature for Deep learning-based Dementia.

Literature Review and Observation

The following analysis identifies problems in existing datasets and argues that the proposed approach significantly mitigates them. This article uses three datasets:

- Kaggle-based tiny dataset

- OASIS Imbalance dataset

- Balanced ADNI dataset

One problem that leads to poor classification performance is a dataset with unbalanced categories. Apart from accuracy, the evaluation scales require the categories to be distributed evenly across the classes. In this article, the OASIS dataset includes three classes that are not evenly distributed [21,24].
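Inverse-frequency class weighting is one common way to counter such imbalance and is shown here only for orientation; it is not the method proposed in this article. The sketch applies the "balanced" weighting formula n_total / (n_classes × n_c), the same formula scikit-learn uses for `class_weight="balanced"`, to the OASIS class counts reported in Section 3.1 (37 converted, 190 demented, 147 non-demented).

```python
# Class counts for the OASIS longitudinal data as reported in Section 3.1.
counts = {"converted": 37, "demented": 190, "non-demented": 147}

n_total = sum(counts.values())
n_classes = len(counts)

# Inverse-frequency ("balanced") weights: rare classes get proportionally larger weights,
# so that every class contributes the same total weight n_total / n_classes to the loss.
weights = {c: n_total / (n_classes * n) for c, n in counts.items()}

# Ratio between the largest and the smallest class.
imbalance_ratio = max(counts.values()) / min(counts.values())
```

With these counts the largest class is roughly five times the size of the smallest, so the "converted" samples receive the largest weight.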

Most medical datasets do not include enough images to meet the requirements of deep learning models, which need large datasets. This issue can be remedied by generating additional images from the same data [24].

When the dataset is small, overfitting arises because too little data is available during the training phase [21].

This article uses the dataset at https://www.kaggle.com/datasets/tourist55/alzheimers-dataset-4-classes-of-images (accessed on 5 October 2022), as well as the OASIS dataset, to address these problems with the proposed approach.

Architectures other than CNNs often require “extensive preprocessing” to extract imaging features such as texture, shape, cortical thickness, and regional characteristics [29].

3. Proposed System

3.1. Dataset

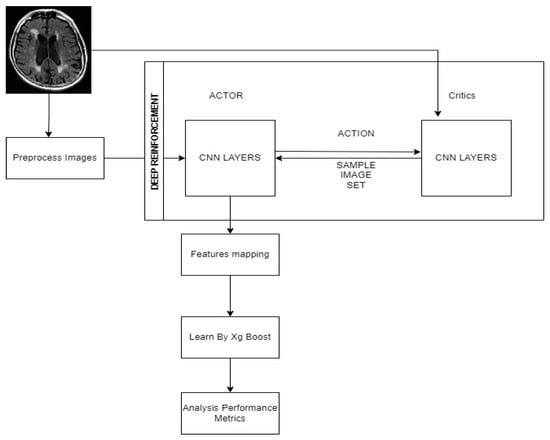

Figure 1 (step 1) depicts the input dementia dataset. The data came from https://www.kaggle.com/datasets/tourist55/alzheimers-dataset-4-class-of-images (accessed on 5 October 2022) and consist of MRI images divided into four groups for training and testing: mild demented, moderate demented, non-demented, and very mild demented. The ADNI dataset was gathered from over 40 radiology centers and comprises 509 cases in total (137 AD cases, 76 MCI converter (MCIc) cases, 134 MCI non-converter (MCInc) cases, and 162 CN cases) [35]. The follow-up period for monitoring conversion to AD was 18 months. ADNI has previously been used in many studies to classify AD and to study conversion to AD. The OASIS longitudinal dataset is available at http://www.oasis-brains.org/longitudinal_facts.html (accessed on 5 October 2022) [36]. It contains 15 features for 374 patients aged between 60 and 90 years, of whom 37 are converted, 190 are demented, and 147 are non-demented. Each class contains many subjects. Figure 1 shows the main idea behind the proposed DRLA technique. A system for diagnosing the disease is trained on samples labeled beforehand (i.e., (Xl, Yl)) and on samples selected and labeled during reinforcement learning. During learning, an actor network uses the learned policy and the current state to choose the most relevant dementia samples from the unlabeled training data (i.e., Xu). An annotator then labels the selected samples. In this way, more and more training data become available to improve the XGBoost classifier. Finally, a critic network is trained to determine whether the actor network’s selection improved the classifier’s performance. 
By training the critic and actor networks with deep reinforcement learning, the method selects and labels the samples most useful for training an effective classifier, thereby improving the classifier’s performance.

Figure 1.

Proposed work architecture.

3.2. CNN Layer

Table 2 summarizes the CNN layers. Convolution operations are carried out on the image with a kernel size of 45 × 45 × 45 and an image size of 8 blocks. Four convolutional layers with strides of 1, 2, and 3 are used, and the first layer has 32 filters with 3 × 3 kernels. The kernels act as feature detectors and are convolved with the image to generate a set of convolved features. Because the kernel size defines a neuron’s receptive field, each neuron is connected only to a local region of the previous volume. Figure 1 shows the final arrangement of the CNN architecture.
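The effect of the stride on feature-map size, and the parameter count of a layer with 32 kernels of size 3 × 3, can be worked out with the standard convolution arithmetic below. The input width of 176 pixels, the single grayscale input channel, and zero padding are assumptions for illustration, not values stated in this paper.

```python
def conv_output_size(width: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution along one axis: floor((W + 2P - K) / S) + 1."""
    if stride < 1:
        raise ValueError("stride must be >= 1")
    return (width + 2 * padding - kernel) // stride + 1


def conv_params(in_channels: int, out_channels: int, kernel: int) -> int:
    """Trainable parameters of a 2D convolution: one kernel per (in, out) pair plus one bias per filter."""
    return out_channels * (in_channels * kernel * kernel) + out_channels


# Hypothetical 176-pixel-wide grayscale input, 3 x 3 kernels, no padding.
sizes_by_stride = {s: conv_output_size(176, kernel=3, stride=s) for s in (1, 2, 3)}
first_layer_params = conv_params(in_channels=1, out_channels=32, kernel=3)
```

Under these assumptions, strides of 1, 2, and 3 give output widths of 174, 87, and 58, and the first layer has 32 × 9 weights plus 32 biases, i.e., 320 parameters.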

Table 2.

Details of CNN Layers.

3.3. Training the Classifier

Steps 2 and 3 in Figure 1 train the classifier. Let $\theta_c$ denote the parameters of the classifier network. At the start of the learning phase, the classifier is pre-trained on a labeled training set. Deep reinforcement learning is then used to choose and annotate samples, and the classifier is trained further to improve its performance.

Let the labeled dementia training samples be $X_l = \{x_i\}_{i=1}^{N_l}$ with labels $Y_l = \{y_i\}_{i=1}^{N_l}$, where $N_l$ is the number of labeled samples. The classifier network is trained by minimizing the cross-entropy loss

$$\mathcal{L}(\theta_c) = -\frac{1}{N_l}\sum_{i=1}^{N_l}\sum_{j=1}^{M} \mathbb{1}(y_i = j)\,\log p(j \mid x_i; \theta_c) \tag{1}$$

where $\mathbb{1}(y_i = j)$ indicates whether the label of the $i$th sample is $j$, $M$ is the number of classes, and $\mathbb{1}(\cdot)$ is the indicator function.

$p(j \mid x_i; \theta_c)$ is the XGBoost classifier output for $x_i$ and the $j$th class, i.e., the probability that $x_i$ belongs to label $j$ as estimated by the classifier with parameters $\theta_c$.
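To make Equation (1) concrete, the sketch below evaluates the mean cross-entropy for predicted class probabilities and integer class labels. It assumes the classifier outputs one probability per class for each sample; the function name and the small epsilon guard are our own choices, not part of the described system.

```python
import numpy as np


def cross_entropy(probs: np.ndarray, labels: np.ndarray) -> float:
    """Mean cross-entropy as in Equation (1).

    probs:  (N_l, M) array; row i holds the predicted probabilities p(j | x_i) for the M classes.
    labels: (N_l,) integer array with the true class index y_i of each sample.
    """
    n, m = probs.shape
    one_hot = np.eye(m)[labels]      # the indicator 1(y_i = j) as a 0/1 matrix
    eps = 1e-12                      # guards against log(0)
    return float(-(one_hot * np.log(probs + eps)).sum() / n)


probs = np.array([[0.7, 0.1, 0.1, 0.1],
                  [0.25, 0.25, 0.25, 0.25]])
loss = cross_entropy(probs, np.array([0, 3]))
```

Only the probability assigned to the true class enters the sum, so the confident correct prediction in the first row costs much less than the uniform prediction in the second.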

3.4. Extreme Gradient (XG) BOOST Learning with Deep Reinforcement

Step 2 of Figure 1 depicts a novel approach for learning a policy that guides the actor network in selecting samples for annotation. The components of this approach are described below.

Here, $\hat{y}_u$ represents the uncertain (predicted) label and $x_u$ represents an unlabeled training sample.

Action: Let θa denote the actor network’s parameters. Since the actor network’s objective is to select training samples for annotation from the unlabeled training data, we define the action as a vector $a = [a_1, \ldots, a_{N_u}]$, where each component corresponds to one unlabeled training instance and $N_u$ is the number of unlabeled samples. A sigmoid activation is applied to every element so that each component lies between 0 and 1.

A policy $a = \mu(S; \theta_a)$ is learned to produce an action in response to a state $S$. Given the action vector, all candidate image samples except those already picked are ranked by their action values, and the top $K$ samples with the greatest values are selected for annotation. The selected samples and the labels assigned by the annotator are denoted by $D_s = \{(x_k, y_k)\}_{k=1}^{K}$, and the enriched labeled training data by $(X_l, Y_l) \cup D_s$.
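The selection step can be sketched as follows: raw actor outputs are squashed by a sigmoid, candidates that were already picked are skipped, and the highest-scoring remaining samples are returned. The logits, the number of samples per round (`k`), and the function names are hypothetical.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    """Maps actor outputs to scores in (0, 1), one per unlabeled sample."""
    return 1.0 / (1.0 + np.exp(-z))


def select_top_k(action: np.ndarray, picked: set, k: int) -> list:
    """Indices of the k highest-scoring unlabeled samples that have not been picked yet."""
    order = np.argsort(-action)                        # descending by action value
    remaining = [int(i) for i in order if int(i) not in picked]
    return remaining[:k]


# Hypothetical actor outputs for five unlabeled images; image 4 was chosen in an earlier round.
action = sigmoid(np.array([0.2, 2.0, -1.0, 1.5, 3.0]))
chosen = select_top_k(action, picked={4}, k=2)
```

Because the sigmoid is monotonic, ranking by action value is equivalent to ranking by the raw actor outputs; the squashing matters only when the scores are fed to the critic as the action.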

State Transition. The classifier is updated on the enriched training data (the labeled data plus the newly annotated samples) by minimizing the cross-entropy loss in Equation (1). Once the selected samples have been annotated and added to the labeled training data, the updated classifier is used to generate the new state matrix S’ according to Equation (2).

Reward. To improve the classifier’s performance, the actor should focus on samples that the classifier is likely to misclassify. To this end, we devise a novel reward mechanism based on the true labels provided by the annotator. For each of the $K$ samples selected in a round, let $\hat{y}_k$ be the label predicted by the classifier and $y_k$ the true label obtained from the annotator.

The reward is defined as

$$r = \frac{1}{K}\sum_{k=1}^{K} \mathbb{1}(\hat{y}_k \neq y_k) \tag{3}$$

If a sample is correctly classified, then $\hat{y}_k = y_k$, so $\mathbb{1}(\hat{y}_k \neq y_k) = 0$ and the sample contributes nothing to the reward.

A large reward, on the other hand, indicates that many of the selected samples were misclassified, so the classifier should pay more attention to samples with incorrect predictions. The actor network is therefore incentivized to choose such image samples.
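A minimal sketch of a reward with this behaviour follows. It assumes the reward is the fraction of selected samples whose predicted label differs from the annotator’s label, so correctly classified samples contribute nothing; the exact form and normalization used in the paper may differ, and the function name is hypothetical.

```python
import numpy as np


def misclassification_reward(predicted: np.ndarray, true: np.ndarray) -> float:
    """Fraction of the selected samples that the classifier got wrong (0 = all correct)."""
    return float(np.mean(predicted != true))


# Hypothetical round: four selected images, two of them misclassified.
reward = misclassification_reward(np.array([0, 1, 2, 3]), np.array([0, 1, 3, 1]))
```

A selection consisting only of correctly classified samples earns zero, which is what pushes the actor toward samples the classifier still gets wrong.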

In state $S$, reinforcement learning seeks to maximize the expected future reward, which is represented by a Q-value function. As in conventional Q-learning, the Q-value function evaluates the state-action pair $(S, a)$ and satisfies the Bellman equation

$$Q(S, a) = \mathbb{E}\left[\, r + \gamma\, Q\big(S', \mu(S')\big) \right]$$

where $\mu$ denotes the actor’s policy and $\gamma$ is the discount factor.

To estimate the Q-value function, we use a critic network with parameters $\theta_q$. Following deep Q-learning, the actor’s greedy policy is obtained by solving

$$\max_{\theta_a} \; Q\big(S, \mu(S; \theta_a); \theta_q\big)$$

We define the estimated Q-value target

$$z = r + \gamma\, Q\big(S', \mu(S'; \theta_a); \theta_q\big)$$

and train the critic network by solving

$$\min_{\theta_q} \; \big(z - Q(S, a; \theta_q)\big)^2$$

Target Networks for Training: To stabilize the training of the actor and critic networks, we use separate target networks to compute the target value $z$ described in Equation (4). This target depends on the next state S’, on the actor, which outputs the action $\mu(S')$, and on the critic, which evaluates $Q(S', \mu(S'))$ in Equation (5).

To calculate the target value $z$, we use a separate target actor network parameterized by $\theta_a'$ and a separate target critic network parameterized by $\theta_q'$. Consequently, Equation (5) is rewritten as

$$z = r + \gamma\, Q'\big(S', \mu'(S'; \theta_a'); \theta_q'\big)$$

Here, $\mu'(\cdot; \theta_a')$ is the target policy computed by the target actor, and $Q'(\cdot; \theta_q')$ is the target critic’s function. The deep deterministic policy gradient (DDPG) algorithm can be used to solve this problem.
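The target computation can be illustrated with toy stand-ins for the target actor and target critic. The sketch assumes the standard DDPG form of the target, r + γQ′(S′, μ′(S′)), a discount factor of 0.9, and simple hand-written functions in place of trained networks; all of these are illustrative assumptions.

```python
import numpy as np


def td_target(reward, next_state, target_actor, target_critic, gamma=0.9):
    """Critic regression target r + gamma * Q'(S', mu'(S')), computed with the target networks."""
    next_action = target_actor(next_state)        # mu'(S'): action proposed by the target actor
    return reward + gamma * target_critic(next_state, next_action)


def critic_loss(q_pred, target):
    """Mean squared error between the critic's estimate Q(S, a) and the target."""
    return float(np.mean((np.asarray(target) - np.asarray(q_pred)) ** 2))


def toy_target_actor(state: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for the target actor: clips state values into [0, 1]."""
    return np.clip(state, 0.0, 1.0)


def toy_target_critic(state: np.ndarray, action: np.ndarray) -> float:
    """Hypothetical stand-in for the target critic: a dot product of state and action."""
    return float(np.dot(state, action))


z = td_target(1.0, np.array([0.5, 2.0]), toy_target_actor, toy_target_critic)
loss = critic_loss(3.0, z)
```

Keeping the target networks separate means the regression target does not shift every time the online critic is updated, which is the stabilizing effect described above.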

The target actor and target critic are updated at the end of each epoch as

$$\theta_a' \leftarrow \tau\,\theta_a + (1 - \tau)\,\theta_a', \qquad \theta_q' \leftarrow \tau\,\theta_q + (1 - \tau)\,\theta_q'$$

where primed symbols denote the parameters of the target networks and $\tau$ is a trade-off factor. Transitions (S, a, S’, r) are stored in a replay buffer so that the critic and actor can be trained on mini-batches; these mini-batches are sampled uniformly from the replay buffer to update the critic and actor networks. Algorithm 1 summarizes the proposed approach.
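Both mechanisms are standard in DDPG. The sketch below shows a soft (Polyak) target update and a fixed-capacity replay buffer with uniform mini-batch sampling; the parameter names, the dictionary-of-arrays representation, and the capacity are assumptions for illustration.

```python
import random
from collections import deque

import numpy as np


def soft_update(target: dict, online: dict, tau: float) -> None:
    """Blend online parameters into the target dict: theta' <- tau * theta + (1 - tau) * theta'."""
    for name, param in online.items():
        target[name] = tau * param + (1.0 - tau) * target[name]


class ReplayBuffer:
    """Fixed-capacity store of (S, a, S', r) transitions; the oldest entries are dropped first."""

    def __init__(self, capacity: int, seed: int = 0):
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, state, action, next_state, reward) -> None:
        self.buffer.append((state, action, next_state, reward))

    def sample(self, batch_size: int) -> list:
        """Uniformly sample a mini-batch of transitions without replacement."""
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self) -> int:
        return len(self.buffer)
```

A small τ makes the target networks trail the online networks slowly; τ = 1 would copy the online parameters outright.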

| Algorithm 1. DRLA (Deep Reinforcement Learning for Active Learning) |
|---|
| Input: labeled training data and unlabeled testing data |
| Output: efficiently classified dementia stages |
| 1. Initialize the labeled training dataset (Xl, Yl) |
| 2. Train the XGBoost classifier and obtain its parameters |
| 3. Determine the state S by preprocessing the images, based on Equation (2) |
| 4. Select unlabeled training samples according to the actor, which is updated during the training phase |
| 5. Obtain labels for the selected samples from the annotator |
| 6. Update the classifier parameters using the enriched labeled data |
| 7. Compute the new state S’ using Equation (2) and the reward r using Equation (3) |
| 8. Save all instances (S, a, S’, r) in the replay buffer |
| 9. Train the classifier with XGBoost |
| 10. Analyze the performance metrics |
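To show how the steps of Algorithm 1 fit together, the sketch below runs a simplified pool-based active-learning loop. It makes two loud substitutions: a nearest-centroid classifier stands in for XGBoost, and an uncertainty score (one minus the highest class probability) stands in for the trained actor network; the critic, the replay buffer, and the policy updates are omitted. It is not the authors’ implementation, and all names and data are hypothetical.

```python
import numpy as np


def train_centroids(x: np.ndarray, y: np.ndarray, n_classes: int) -> np.ndarray:
    """Stand-in classifier: one mean vector per class (replaces XGBoost in this sketch)."""
    return np.stack([x[y == c].mean(axis=0) for c in range(n_classes)])


def predict_proba(centroids: np.ndarray, x: np.ndarray) -> np.ndarray:
    """Softmax over negative squared distances to the class centroids."""
    logits = -((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    logits -= logits.max(axis=1, keepdims=True)              # numerical stability
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)


def active_learning_loop(x_l, y_l, x_u, oracle, n_classes, k=2, rounds=3):
    """Loop following the step order of Algorithm 1, without actor/critic training."""
    picked: set = set()
    rewards = []
    for _ in range(rounds):
        centroids = train_centroids(x_l, y_l, n_classes)    # steps 2/6: (re)train classifier
        state = predict_proba(centroids, x_u)               # steps 3/7: state from class probabilities
        action = 1.0 - state.max(axis=1)                    # stand-in actor: uncertainty score
        ranked = [int(i) for i in np.argsort(-action) if int(i) not in picked]
        chosen = ranked[:k]                                 # step 4: select top-k unpicked samples
        if not chosen:                                      # unlabeled pool exhausted
            break
        picked.update(chosen)
        y_new = oracle[chosen]                              # step 5: annotator supplies true labels
        rewards.append(float(np.mean(state[chosen].argmax(axis=1) != y_new)))  # step 7: reward
        x_l = np.vstack([x_l, x_u[chosen]])                 # enrich the labeled set
        y_l = np.concatenate([y_l, y_new])
    return x_l, y_l, picked, rewards


x_l = np.array([[0.0, 0.0], [5.0, 5.0]])
y_l = np.array([0, 1])
x_u = np.array([[0.5, 0.2], [4.8, 5.1], [2.4, 2.6], [2.6, 2.4], [1.0, 1.0], [4.0, 4.0]])
oracle = np.array([0, 1, 0, 1, 0, 1])
x_final, y_final, picked, rewards = active_learning_loop(x_l, y_l, x_u, oracle, n_classes=2)
```

In the first round the two samples lying midway between the class centroids are the most uncertain and are therefore labeled first; the trained actor in the proposed method replaces this fixed heuristic with a learned scoring policy.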

4. Experimental Results and Discussion

This section presents the experimental results of the proposed approach. The DRL-XGBOOST experiments were performed on the datasets described above, and the results are assessed using accuracy, precision, recall, and F1-score. The experiments were run on Windows 10 with an NVIDIA GeForce graphics card, a 2.70 GHz CPU, and 32 GB of RAM. PyTorch and the Keras API of TensorFlow are used to implement DRL-XGBOOST.

4.1. Metrics Evaluation

The accuracy, precision, recall, and F1-score of the model are considered as follows [35,36,37]:

- Accuracy: the proportion of all predictions that are correct, i.e., how effectively the model predicts both true positive and true negative outcomes. It is calculated using Equation (8): $$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{8}$$

- TP (true positives): the model predicts a normal image and the actual label confirms the prediction.

- TN (true negatives): the model predicts an abnormal image and the actual label confirms the prediction.

- FP (false positives): the model predicts a normal image, but the actual label is abnormal.

- FN (false negatives): the model predicts an abnormal image, but the actual label is normal.

- Precision (PR), also called positive predictive value, takes values in [0, 1]; a precision of 1 means the model produced no false positives. Precision is calculated using Equation (9): $$\text{PR} = \frac{TP}{TP + FP} \tag{9}$$

The recall (REC), also known as sensitivity, measures the classifier’s ability to find all positive samples. It is computed using Equation (10):

$$\text{REC} = \frac{TP}{TP + FN} \tag{10}$$

According to Equation (11), the F1-score is the harmonic mean of precision and recall and indicates how well the two are balanced:

$$F1 = \frac{2 \cdot \text{PR} \cdot \text{REC}}{\text{PR} + \text{REC}} \tag{11}$$
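Equations (8) to (11) can be computed directly from the four counts. The sketch below uses hypothetical counts and returns 0 when a denominator is zero, a convention we assume rather than one stated in the paper.

```python
def binary_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Accuracy, precision, recall, and F1-score from confusion-matrix counts (Equations (8)-(11))."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


m = binary_metrics(tp=40, tn=45, fp=5, fn=10)  # hypothetical counts
```

With these counts, precision is 8/9 and recall is 4/5, so the F1-score is 16/19 (about 0.842), which lies between the two, as a harmonic mean must.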

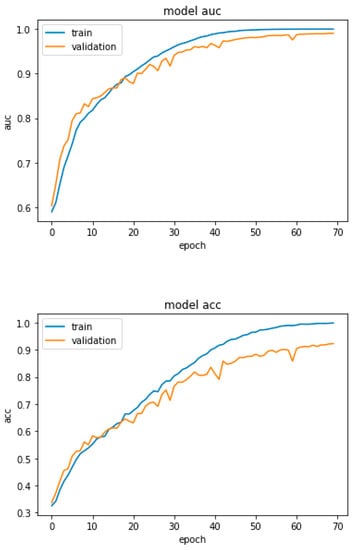

Figure 2 shows the training and validation curves for the accuracy and AUC of the proposed DRL-XGBOOST. Owing to overfitting and class imbalance, the training accuracy of the model is around 95%, whereas the validation accuracy is 94%. The model’s training AUC is approximately 98% and its validation AUC is 90%, as depicted in Figure 2.

Figure 2.

Proposed DRL-XGBOOST Accuracy and AUC.

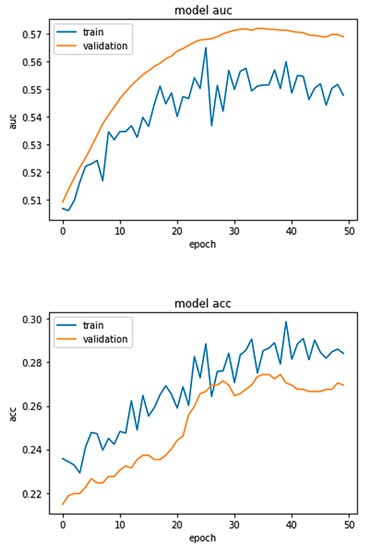

Figure 3 shows the training and validation curves for the accuracy and AUC of the DRL approach. Owing to overfitting and class imbalance, the model’s training accuracy is around 28% and its validation accuracy is 26%. Its training AUC is approximately 56% and its validation AUC approximately 55%, as depicted in Figure 3.

Figure 3.

DRL Approach Accuracy and AUC.

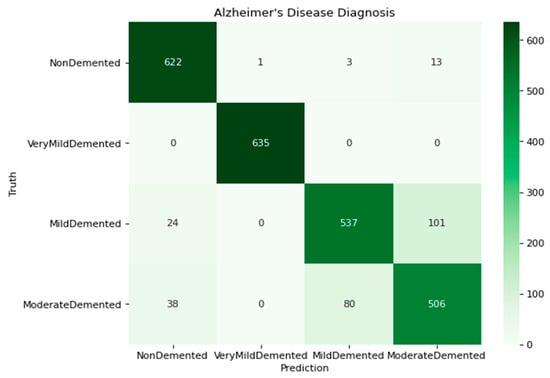

Figure 4 depicts the confusion matrix (contingency table) used to differentiate the stages of dementia and predict AD. It displays the predicted class against the actual class for the four groups with varying degrees of dementia and reflects the training performance of the model. The computation covers (i) 639 ND (Non-Demented) images, (ii) 635 VMD (Very Mild Demented) images, (iii) 662 MD (Mild Demented) images, and (iv) 624 MOD (Moderate Demented) images. The individual class metrics presented in Table 4 are derived from this confusion matrix.

Figure 4.

Confusion matrix of the model with four classes.

Table 3.

Performance analysis with different approaches.

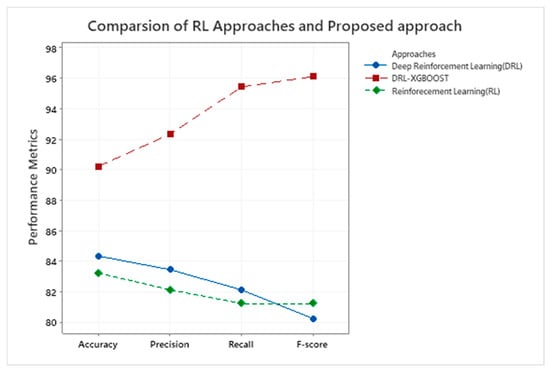

Table 3 compares three techniques, namely RL, DRL, and DRL-XGBOOST, in terms of accuracy, precision, recall, and F1-score. DRL-XGBOOST, with an accuracy of 90.23%, a precision of 92.34%, a recall of 95.45%, and an F1-score of 96.12%, outperforms the other two approaches. Figure 5 compares RL with the proposed approach graphically.

Figure 5.

Performance metrics of the RL technique and DRL-XGBOOST.

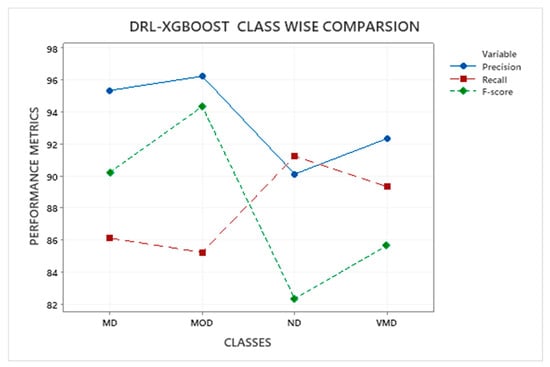

Table 4 presents the performance indices of each class (ND, VMD, MD, and MOD) in terms of precision, recall, F1-score, and support. MOD yields the highest precision, followed by MD, VMD, and ND, whereas the highest recall was found for ND, followed by VMD, MD, and MOD. MOD also has the highest F1-score and support of all the classes.
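Per-class precision, recall, F1-score, and support can all be read off a multi-class confusion matrix: support is the row sum, precision divides the diagonal entry by the column sum, and recall divides it by the row sum. The 4 × 4 matrix below is a hypothetical toy example, not the matrix in Figure 4.

```python
import numpy as np


def per_class_report(cm: np.ndarray, names: list) -> dict:
    """Per-class metrics from a confusion matrix with rows = true class, columns = predicted class."""
    tp = np.diag(cm).astype(float)
    support = cm.sum(axis=1)                 # number of true samples of each class
    predicted = cm.sum(axis=0)               # number of predictions made for each class
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, support, out=np.zeros_like(tp), where=support > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return {
        name: {"precision": float(p), "recall": float(r), "f1": float(f), "support": int(s)}
        for name, p, r, f, s in zip(names, precision, recall, f1, support)
    }


cm = np.array([[8, 1, 1, 0],      # hypothetical counts, rows = ND, VMD, MD, MOD
               [2, 7, 1, 0],
               [0, 1, 8, 1],
               [0, 0, 1, 9]])
report = per_class_report(cm, ["ND", "VMD", "MD", "MOD"])
```

Precision and recall of a class differ whenever the errors in its column (other classes predicted as it) and in its row (it predicted as other classes) differ in number, as for VMD and MD here.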

Table 4.

Performance indices of individual classes.

Figure 6 depicts a class-by-class comparison of DRL-XGBOOST performance in terms of precision, recall, and F1-score, as shown in Table 4.

Figure 6.

DRL-XGBOOST Class Comparison.

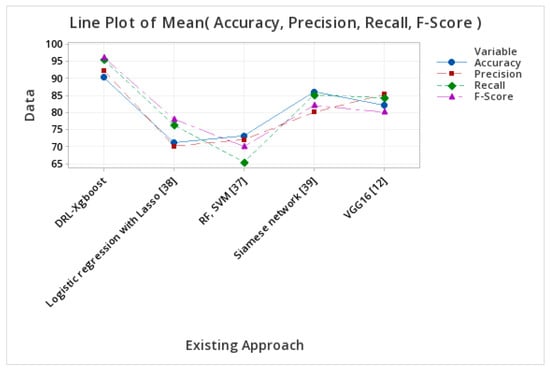

Table 5 lists the accuracy and precision of existing methods. DRL-XGBOOST performs best, with an accuracy of 90.23% and a precision of 92.34%, followed by the Siamese network [37], VGG16 [12], logistic regression with Lasso [38], RF, and SVM [39].

Table 5.

Accuracy and precision of existing approaches.

Figure 7 plots the key metrics of the existing and proposed methods listed in Table 5; the proposed approach achieves the highest performance.

Figure 7.

Performance metrics of different approaches.

Table 6 compares three techniques, namely RL, DRL, and DRL-XGBOOST, on the OASIS dataset. DRL-XGBOOST has the highest accuracy (91.23%), followed by DRL (85.12%) and RL (84.23%). Likewise, DRL-XGBOOST has the highest precision (91.23%), followed by DRL (85%) and RL (80.12%), and the highest recall (94.56%), followed by RL (81.34%) and DRL (80.22%). Finally, DRL-XGBOOST has the highest F-score (95%), followed by RL (82%) and DRL (81.23%).

Table 6.

OASIS dataset comparison.

Table 7 compares three techniques, namely RL, DRL, and DRL-XGBOOST, on the ADNI dataset. DRL-XGBOOST has the highest accuracy (93.23%), followed by DRL (84%) and RL (83.12%). Likewise, DRL-XGBOOST has the highest precision (94.5%), followed by DRL (83%) and RL (82.13%), and the highest recall (96.34%), followed by RL (80.2%) and DRL (80%). Finally, DRL-XGBOOST has the highest F-score (92.12%), followed by DRL (82.12%) and RL (81%).

Table 7.

ADNI dataset comparison.

4.2. Observations about the Experiment

- In the proposed method, we apply deep reinforcement learning with an actor network and a critic network to tackle class imbalance. Both networks contribute to the learning process.

- Figure 4 depicts the proposed approach’s confusion matrix, which includes all four classes of dementia severity and helps to eliminate false positives.

- In Table 3, a comparison is made between the proposed approach and some existing forms of deep reinforcement learning. The proposed method greatly improves all performance indicators considered.

- Through deep reinforcement learning, the actor and critic networks iteratively select image samples, which enhances the performance measures of the proposed approach.

- In the proposed approach, training is updated based on the test results, and active learning continues throughout the testing phase.

- In a typical experiment, the proposed method improved accuracy by 6–7%, precision by 9–10%, recall by 13–14%, and the F1-score by 9–10%. The improvement is attributed to the layer-wise feature mapping, through an activation function, in the reinforcement learning model and to XGBoost, which is used to optimize the features.
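The actor-critic idea in the observations above can be illustrated with a deliberately small sketch. It treats each classification as a one-step episode in which the actor (a linear softmax policy) picks a class label and the critic (a linear value estimate) serves as a baseline. The class-weighted reward (plus or minus the inverse-frequency weight of the true class) is an assumption borrowed from common reward-shaping approaches for imbalanced classification, not the reward used in this study. The toy data, learning rates, and names such as `ActorCritic` and `demo` are hypothetical, and the real system operates on MRI features rather than two hand-made numbers.

```python
# Minimal one-step actor-critic for imbalanced classification (illustrative sketch only).
# Assumptions (hypothetical, not the study's design): linear softmax actor, linear critic,
# and a reward of +w / -w, where w is the inverse-frequency weight of the true class.
import math
import random


def class_weights(labels, n_classes):
    """Inverse-frequency weights scaled so the most frequent class gets 1.0."""
    counts = [labels.count(c) for c in range(n_classes)]
    largest = max(counts)
    return [largest / n if n else 0.0 for n in counts]


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


class ActorCritic:
    def __init__(self, n_features, n_classes, lr_actor=0.1, lr_critic=0.1):
        self.W = [[0.0] * n_features for _ in range(n_classes)]  # actor weights, one row per class
        self.v = [0.0] * n_features                              # critic weights
        self.lr_actor, self.lr_critic = lr_actor, lr_critic

    def policy(self, x):
        return softmax([sum(w * xi for w, xi in zip(row, x)) for row in self.W])

    def value(self, x):
        return sum(w * xi for w, xi in zip(self.v, x))

    def step(self, x, y, weights, rng):
        probs = self.policy(x)
        a = rng.choices(range(len(probs)), weights=probs)[0]  # action = sampled class label
        r = weights[y] if a == y else -weights[y]             # class-weighted reward
        delta = r - self.value(x)  # advantage; the episode ends after one step, so no bootstrap
        for i, xi in enumerate(x):  # critic: move V(x) toward the observed reward
            self.v[i] += self.lr_critic * delta * xi
        for k, row in enumerate(self.W):  # actor: grad of log softmax is (1[k == a] - p_k) * x
            g = (1.0 if k == a else 0.0) - probs[k]
            for i, xi in enumerate(x):
                row[i] += self.lr_actor * delta * g * xi
        return r

    def predict(self, x):
        probs = self.policy(x)
        return max(range(len(probs)), key=probs.__getitem__)


def demo(epochs=50, seed=0):
    """Train on a toy 90:10 dataset; return (accuracy, minority-class recall)."""
    rng = random.Random(seed)
    # Features are [bias, value]; class 0 (majority) is negative, class 1 (minority) positive.
    data = [([1.0, -1.0 - 0.1 * (i % 5)], 0) for i in range(90)]
    data += [([1.0, 1.0 + 0.1 * (i % 5)], 1) for i in range(10)]
    weights = class_weights([y for _, y in data], 2)
    model = ActorCritic(n_features=2, n_classes=2)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            model.step(x, y, weights, rng)
    correct = [model.predict(x) == y for x, y in data]
    minority = [model.predict(x) == 1 for x, y in data if y == 1]
    return sum(correct) / len(correct), sum(minority) / len(minority)


if __name__ == "__main__":
    print(demo())
```

Because a minority-class sample carries a larger reward magnitude, it moves the actor more than a majority-class sample does, which counteracts the 90:10 imbalance; with equal rewards, the expected policy gradient would tend to be dominated by the majority class. Subtracting the critic's estimate does not change the expected update, but it reduces the variance of individual steps.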

5. Conclusions

In this study, a Deep Reinforcement Architecture (DRA) is used to classify dementia. The model that classifies dementia stages is trained and evaluated on data obtained from Kaggle. The most important problem with this dataset is its class imbalance, which is addressed by the XGBoost deep reinforcement method. Compared with the existing models, the new model achieved an overall accuracy of 90.23% and an F1-score of 96%. Table 4 compares machine learning and deep learning approaches. The proposed technique improves the performance measures because features are repeatedly learned and refined through an active learning process.
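As a loose illustration of the active learning process mentioned above, the sketch below shows pool-based uncertainty sampling, one common form of active learning, in which the samples the current model is least confident about are selected for the next round of training. The least-confidence rule, the batch size, the probabilities, and the function name `least_confident` are assumptions; the study does not specify how samples are selected.

```python
# Pool-based active learning by least-confidence sampling (illustrative assumption).


def least_confident(prob_rows, k):
    """Return indices of the k pool samples whose highest class probability is lowest."""
    order = sorted(range(len(prob_rows)), key=lambda i: max(prob_rows[i]))
    return order[:k]


if __name__ == "__main__":
    # Hypothetical predicted probabilities over four dementia-severity classes.
    pool = [
        [0.97, 0.01, 0.01, 0.01],  # confident
        [0.40, 0.35, 0.15, 0.10],  # uncertain
        [0.10, 0.80, 0.05, 0.05],
        [0.30, 0.30, 0.25, 0.15],  # most uncertain
    ]
    print(least_confident(pool, 2))  # → [3, 1]
```

In each round, the selected samples would be labelled (or re-weighted) and added to the training set before the model is retrained, so later rounds focus on the cases the model still finds ambiguous.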

Author Contributions

Conceptualization, A.H. and O.B.; Data Curation, A.H. and O.B.; Formal Analysis, A.H.; Acquisition, A.H.; Methodology, A.H.; Resources, A.H.; Validation, A.H. and O.B.; Investigation, A.H.; Visualization, A.H.; Software, A.H.; Project Administration, A.H.; Project Supervision, A.H.; Writing (Original Draft), A.H. and O.B.; Writing (Review and Editing), A.H. and O.B. All authors have read and agreed to the published version of the manuscript.

Funding

This project was funded by the Deanship of Scientific Research (DSR) at King Abdulaziz University, Jeddah, under grant no. G: 434-830-1443. The authors therefore gratefully acknowledge the DSR for its technical and financial support.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analysis, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- Carrillo, M.C.; Bain, L.J.; Frisoni, G.B.; Weiner, M.W. Worldwide Alzheimer’s disease neuroimaging initiative. Alzheimer’s Dement. 2012, 8, 337–342.

- Miah, Y.; Prima, C.N.E.; Seema, S.J.; Mahmud, M.; Kaiser, M.S. Performance comparison of machine learning techniques in identifying dementia from open access clinical datasets. In Advances on Smart and Soft Computing; Springer: Singapore, 2021; pp. 79–89.

- Khan, M.A.; Kim, Y. Cardiac arrhythmia disease classification using LSTM deep learning approach. Comput. Mater. Contin. 2021, 67, 427–446.

- Sarraf, S.; Anderson, J.; Tofighi, G. Deep AD: Alzheimer’s Disease Classification via Deep Convolutional Neural Networks Using MRI and fMRI. bioRxiv 2016, 070441.

- Mills, S.; Cain, J.; Purandare, N.; Jackson, A. Biomarkers of cerebrovascular disease in dementia. Br. J. Radiol. 2007, 80, S128–S145.

- Tanveer, M.; Richhariya, B.; Khan, R.U.; Rashid, A.H.; Khanna, P.; Prasad, M.; Lin, C.T. Machine Learning Techniques for the Diagnosis of Alzheimer’s Disease. ACM Trans. Multimed. Comput. Commun. Appl. 2020, 16, 1–35.

- Alzheimer’s Disease Fact Sheet. 2019. Available online: https://www.nia.nih.gov/health/alzheimers-disease-fact-sheet (accessed on 5 October 2022).

- Xu, Z.; Deng, H.; Liu, J.; Yang, Y. Diagnosis of Alzheimer’s Disease Based on the Modified Tresnet. Electronics 2021, 10, 1908.

- Liu, P.-H.; Su, S.-F.; Chen, M.-C.; Hsiao, C.-C. Deep Learning and Its Application to General Image Classification. In Proceedings of the 2015 International Conference on Informative and Cybernetics for Computational Social Systems (ICCSS), Chengdu, China, 13–15 August 2015; pp. 7–10.

- Liu, M.; Zhang, D.; Shen, D. Relationship Induced Multi-Template Learning for Diagnosis of Alzheimer’s Disease and Mild Cognitive Impairment. IEEE Trans. Med. Imaging 2016, 35, 1463–1474.

- Salehi, A.W.; Baglat, P.; Sharma, B.B.; Gupta, G.; Upadhya, A. A CNN Model: Earlier Diagnosis and Classification of Alzheimer Disease Using MRI. In Proceedings of the 2020 International Conference on Smart Electronics and Communication (ICOSEC), Trichy, India, 10–12 September 2020; pp. 156–161.

- Jain, R.; Jain, N.; Aggarwal, A.; Hemanth, D.J. Convolutional neural network-based Alzheimer’s disease classification from magnetic resonance brain images. Cogn. Syst. Res. 2019, 57, 147–159.

- Noor, M.B.T.; Zenia, N.Z.; Kaiser, M.S.; Mahmud, M.; Al Mamun, S. Detecting neurodegenerative disease from MRI: A brief review on a deep learning perspective. In International Conference on Brain Informatics; Springer: Cham, Switzerland, 2019.

- Iizuka, T.; Fukasawa, M.; Kameyama, M. Deep-learning-based imaging-classification identified cingulate island sign in dementia with Lewy bodies. Sci. Rep. 2019, 9, 1–9.

- Ge, C.; Qu, Q.; Gu, I.Y.H.; Jakola, A.S. Multiscale Deep Convolutional Networks for Characterization and Detection of Alzheimer’s Disease Using MR images. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019.

- Manaswi, N.K. Understanding and working with Keras. In Deep Learning with Applications Using Python; Apress: Berkeley, CA, USA, 2018; pp. 31–43.

- Pellegrini, E.; Ballerini, L.; Hernandez, M.D.C.V.; Chappell, F.M.; González-Castro, V.; Anblagan, D.; Danso, S.; Muñoz-Maniega, S.; Job, D.; Pernet, C. Machine learning of neuroimaging for assisted diagnosis of cognitive impairment and dementia: A systematic review. Alzheimer’s Dement. Diagn. Assess. Dis. Monit. 2018, 10, 519–535.

- Amoroso, N.; Diacono, D.; Fanizzi, A.; La Rocca, M.; Monaco, A.; Lombardi, A.; Guaragnella, C.; Bellotti, R.; Tangaro, S. Alzheimer’s Disease Neuroimaging Initiative. Deep learning reveals Alzheimer’s disease onset in MCI subjects: Results from an international challenge. J. Neurosci. Methods 2018, 302, 3–9.

- Ahmed, M.R.; Zhang, Y.; Feng, Z.; Lo, B.; Inan, O.T.; Liao, H. Neuroimaging and machine learning for dementia diagnosis: Recent advancements and future prospects. IEEE Rev. Biomed. Eng. 2018, 12, 19–33.

- De, A.; Chowdhury, A.S. DTI Based Alzheimer’s Disease Classification with Rank Modulated Fusion of CNNs and Random Forest. Expert Syst. Appl. 2021, 169, 114338.

- Nawaz, H.; Maqsood, M.; Afzal, S.; Aadil, F.; Mehmood, I.; Rho, S. A Deep Feature-Based Real-Time System for Alzheimer Disease Stage Detection. Multimed. Tools Appl. 2021, 80, 35789–35807.

- Mohammed, B.A.; Senan, E.M.; Rassem, T.H.; Makbol, N.M.; Alanazi, A.A.; Al-Mekhlafi, Z.G.; Almurayziq, T.S.; Ghaleb, F.A. Multi-Method Analysis of Medical Records and MRI Images for Early Diagnosis of Dementia and Alzheimer’s Disease Based on Deep Learning and Hybrid Methods. Electronics 2021, 10, 2860.

- Ghosh, R.; Cingreddy, A.R.; Melapu, V.; Joginipelli, S.; Kar, S. Application of Artificial Intelligence and Machine Learning Techniques in Classifying Extent of Dementia Across Alzheimer’s Image Data. Int. J. Quant. Struct. Relatsh. 2021, 6, 29–46.

- Basher, A.; Kim, B.C.; Lee, K.H.; Jung, H.Y. Volumetric Feature-Based Alzheimer’s Disease Diagnosis From sMRI Data Using a Convolutional Neural Network and a Deep Neural Network. IEEE Access 2021, 9, 29870–29882.

- Herzog, N.J.; Magoulas, G.D. Deep Learning of Brain Asymmetry Images and Transfer Learning for Early Diagnosis of Dementia. In International Conference on Engineering Applications of Neural Networks; Springer: Cham, Switzerland, 2021.

- Murugan, S.; Venkatesan, C.; Sumithra, M.G.; Gao, X.-Z.; Elakkiya, B.; Akila, M.; Manoharan, S. DEMNET: A Deep Learning Model for Early Diagnosis of Alzheimer Diseases and Dementia From MR Images. IEEE Access 2021, 9, 90319–90329.

- Al-Shoukry, S.; Rassem, T.H.; Makbol, N.M. Alzheimer’s Diseases Detection by Using Deep Learning Algorithms: A Mini-Review. IEEE Access 2020, 8, 77131–77141.

- Pan, T.; Zhao, J.; Wu, W.; Yang, J. Learning Imbalanced Datasets Based on SMOTE and Gaussian Distribution. Inf. Sci. 2020, 512, 1214–1233.

- Chen, S.; Stromer, D.; Alabdalrahim, H.A.; Schwab, S.; Weih, M.; Maier, A. Automatic Dementia Screening and Scoring by Applying Deep Learning on Clock-Drawing Tests. Sci. Rep. 2020, 10, 20854.

- Li, H.; Habes, M.; Wolk, D.A.; Fan, Y. A Deep Learning Model for Early Prediction of Alzheimer’s Disease Dementia Based on Hippocampal Magnetic Resonance Imaging Data. Alzheimer’s Dement. 2019, 15, 1059–1070.

- Ucuzal, H.; Arslan, A.K.; Colak, C. Deep Learning Based-Classification of Dementia in Magnetic Resonance Imaging Scans. In Proceedings of the 2019 International Artificial Intelligence and Data Processing Symposium (IDAP), Malatya, Turkey, 21–22 September 2019; pp. 1–6.

- Tsang, G.; Xie, X.; Zhou, S.-M. Harnessing the Power of Machine Learning in Dementia Informatics Research: Issues, Opportunities, and Challenges. IEEE Rev. Biomed. Eng. 2019, 13, 113–129.

- Raza, M.; Awais, M.; Ellahi, W.; Aslam, N.; Nguyen, H.X.; Le-Minh, H. Diagnosis and Monitoring of Alzheimer’s Patients Using Classical and Deep Learning Techniques. Expert Syst. Appl. 2019, 136, 353–364.

- Kaka, J.R.; Prasad, K.S. Alzheimer’s Disease Detection Using Correlation Based Ensemble Feature Selection and Multi Support Vector Machine. Int. J. Comput. Digit. Syst. 2022, 12, 9–20.

- Park, J.H.; Cho, H.E.; Kim, J.H.; Wall, M.M.; Stern, Y.; Lim, H.; Yoo, S.; Kim, H.S.; Cha, J. Machine Learning Prediction of Incidence of Alzheimer’s Disease Using Large-Scale Administrative Health Data. Npj Digit. Med. 2020, 3, 46.

- Barnes, D.E.; Zhou, J.; Walker, R.L.; Larson, E.B.; Lee, S.J.; Boscardin, W.J.; Marcum, Z.A.; Dublin, S. Development and Validation of eRADAR: A Tool Using EHR Data to Detect Unrecognized Dementia. J. Am. Geriatr. Soc. 2020, 68, 103–111.

- Liu, C.-F.; Padhy, S.; Ramachandran, S.; Wang, V.X.; Efimov, A.; Bernal, A.; Shi, L.; Vaillant, M.; Ratnanather, J.T.; Faria, A.V.; et al. Using Deep Siamese Neural Networks for Detection of Brain Asymmetries Associated with Alzheimer’s Disease and Mild Cognitive Impairment. Magn. Reson. Imaging 2019, 64, 190–199.

- Marcus, D.S.; Wang, T.H.; Parker, J.; Csernansky, J.G.; Morris, J.C.; Buckner, R.L. Open Access Series of Imaging Studies (OASIS): Cross-sectional MRI data in young, middle aged, nondemented, and demented older adults. J. Cogn. Neurosci. 2007, 19, 1498–1507.

- Petersen, R.C.; Aisen, P.S.; Beckett, L.A.; Donohue, M.C.; Gamst, A.C.; Harvey, D.J.; Jack, C.R., Jr.; Jagust, W.J.; Shaw, L.M.; Toga, A.W.; et al. Alzheimer’s Disease Neuroimaging Initiative (ADNI): Clinical characterization. Neurology 2010, 74, 201–209.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).