Inter-Rater Variability in the Evaluation of Lung Ultrasound in Videos Acquired from COVID-19 Patients

Abstract

1. Introduction

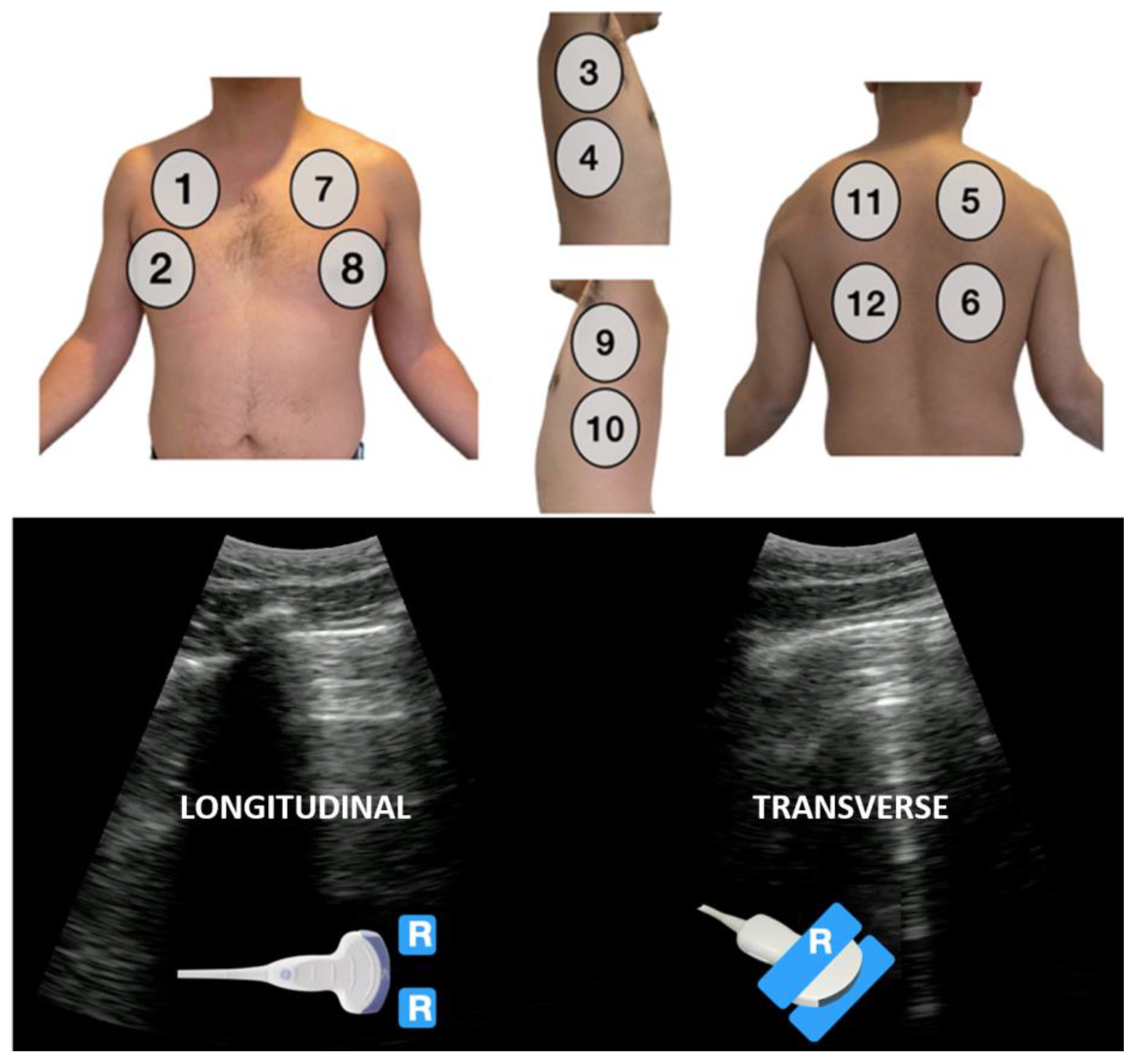

2. Materials and Methods

2.1. Preparation

2.2. Data Analysis

3. Results

3.1. Patients

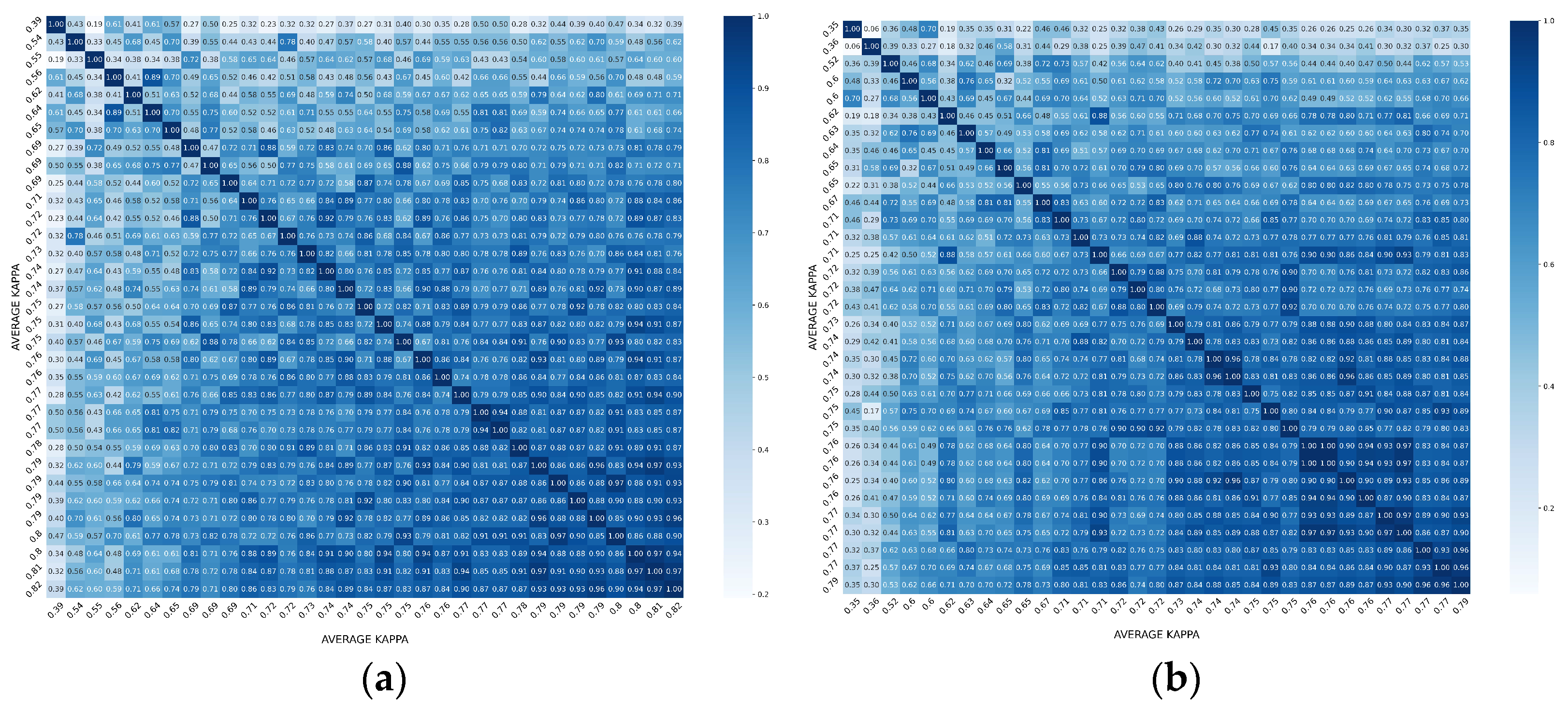

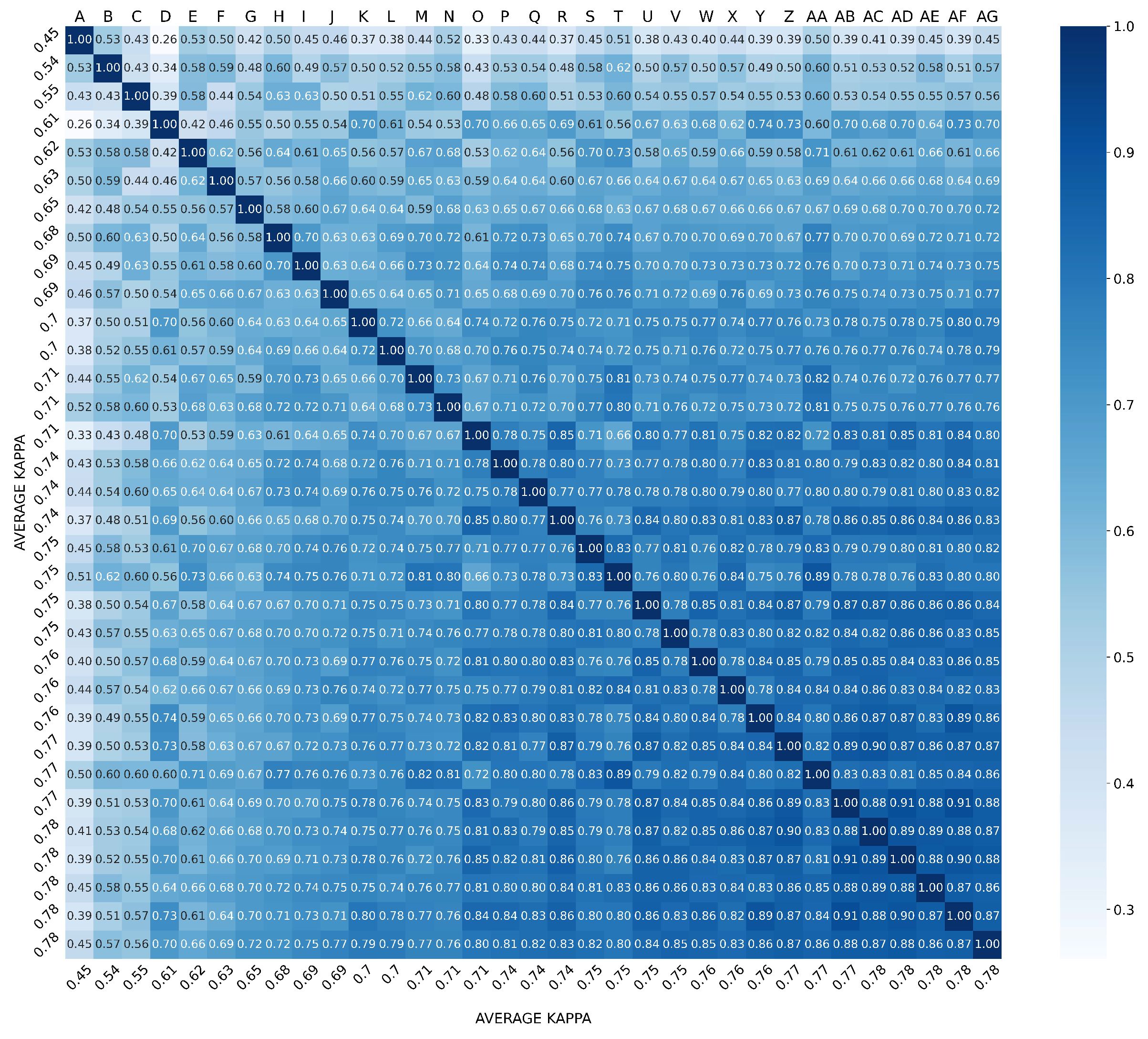

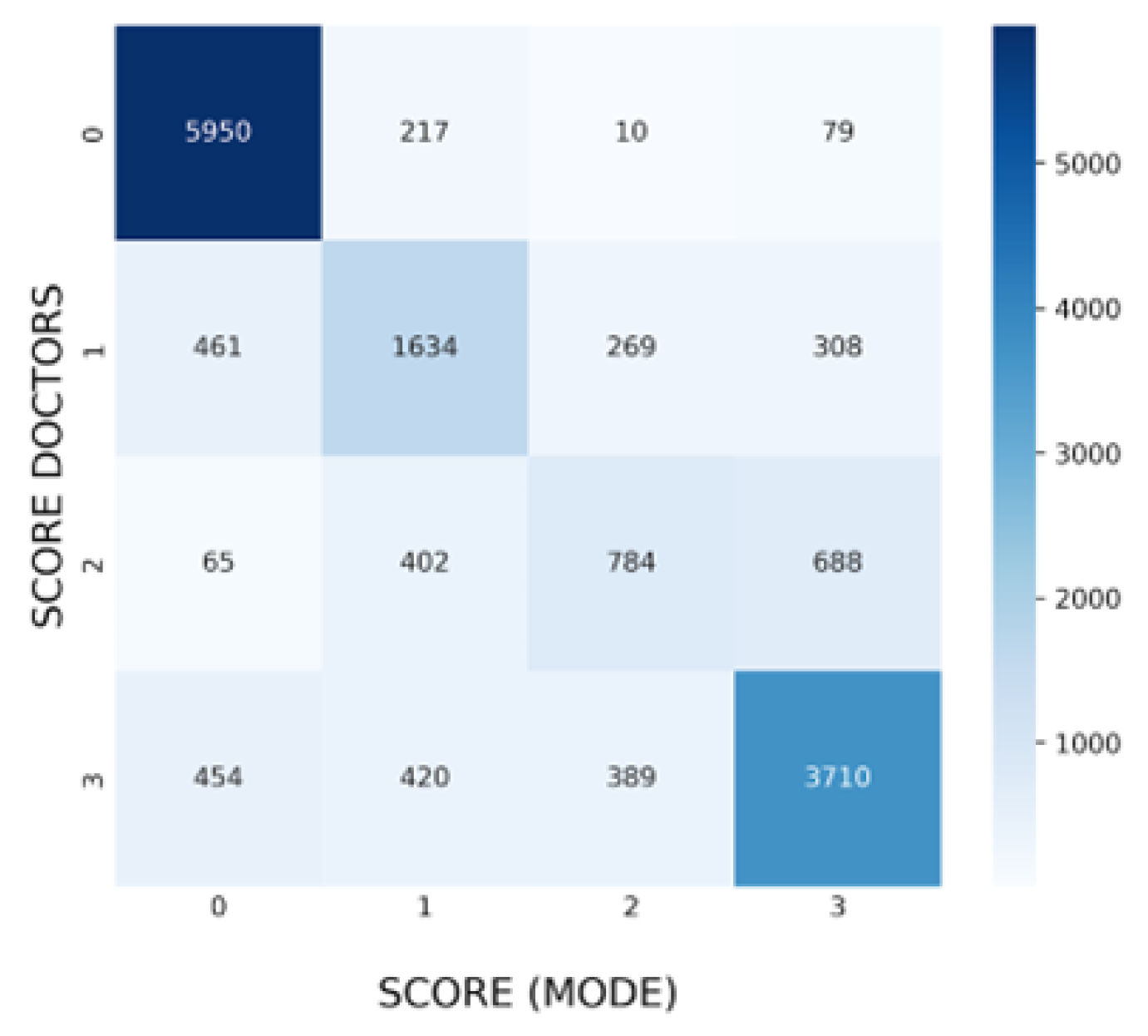

3.2. Overall Agreement between Raters

3.3. Agreement in Specific Findings

3.4. Agreement in Specific Findings

4. Discussion

- (1) Standardization of the terminology to describe artifacts and signs in LUS is essential. Several definitions of each LUS abnormality can be found in the literature, especially for consolidations, but also regarding pleural abnormalities [1], which were not considered in this study. This group believes that the reliability of findings such as consolidations might improve with a more specific and consensus-based definition.

- (2) The use of automatic tools to quickly analyze the acquisitions and obtain some quantitative values, such as the percentage of affected pleura (B-lines < 50% or >50%) and the size of the consolidations, may be helpful to obtain more consistent results among raters.

- (3) The length of the acquired videos (3 s in this study) could be extended to provide more information in some cases.

- (4) Access to additional clinical data about the patients may also help in their evaluation.
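As an illustration of point (2), the following minimal sketch shows how quantitative values from an automatic tool could be mapped to the 0 to 3 score used in this study. It is not the authors' tool: the `VideoFindings` fields and the `lus_score` function are hypothetical names, and two rules are assumptions made only for this example, namely that any consolidation is sufficient for score 3 and that exactly 50% of affected pleura falls into score 3.

```python
from dataclasses import dataclass


@dataclass
class VideoFindings:
    """Hypothetical output of an automatic analysis of one LUS video."""
    b_lines_present: bool        # any B-line detected
    confluent: bool              # B-lines are confluent rather than individual
    pleura_affected_pct: float   # percentage of pleura affected, 0 to 100
    consolidation: bool          # any consolidation detected


def lus_score(f: VideoFindings) -> int:
    """Map quantitative findings to a 0-3 score (illustrative rules only)."""
    if not 0.0 <= f.pleura_affected_pct <= 100.0:
        raise ValueError("pleura_affected_pct must be between 0 and 100")
    if f.consolidation:
        return 3  # assumption: a consolidation alone is enough for score 3
    if not f.b_lines_present:
        return 0  # normal pattern / A-lines
    if not f.confluent:
        return 1  # individual B-lines
    # assumption: the boundary value of exactly 50% is assigned to score 3
    return 3 if f.pleura_affected_pct >= 50.0 else 2


if __name__ == "__main__":
    example = VideoFindings(True, True, 35.0, False)
    print(lus_score(example))
```

Applying one fixed rule of this kind to every video removes the rater's own judgment at the 50% threshold, which is where a quantitative measurement could plausibly reduce disagreement between scores 2 and 3.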

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Demi, L.; Wolfram, F.; Klersy, C.; De Silvestri, A.; Ferretti, V.V.; Muller, M.; Miller, D.; Feletti, F.; Wełnicki, M.; Buda, N.; et al. New International Guidelines and Consensus on the Use of Lung Ultrasound. J. Ultrasound Med. 2022, 42, 309–344.

- Gil-Rodrigo, A.; Llorens, P.; Luque-Hernández, M.-J.; Martínez-Buendía, C.; Ramos-Rincón, J.-M. Lung Ultrasound Integration in Assessment of Patients with Noncritical COVID-19. J. Ultrasound Med. 2021, 40, 2203–2212.

- Torres-Macho, J.; Sánchez-Fernández, M.; Arnanz-González, I.; Tung-Chen, Y.; Franco-Moreno, A.I.; Duffort-Falcó, M.; Beltrán-Romero, L.; Rodríguez-Suaréz, S.; Bernabeu-Wittel, M.; Urbano, E.; et al. Prediction Accuracy of Serial Lung Ultrasound in COVID-19 Hospitalized Patients (Pred-Echovid Study). J. Clin. Med. 2021, 10, 4818.

- Volpicelli, G.; Gargani, L.; Perlini, S.; Spinelli, S.; Barbieri, G.; Lanotte, A.; Casasola, G.G.; Nogué-Bou, R.; Lamorte, A.; Agricola, E.; et al. Lung Ultrasound for the Early Diagnosis of COVID-19 Pneumonia: An International Multicenter Study. Intensive Care Med. 2021, 47, 444–454.

- Calvo-Cebrián, A.; Alonso-Roca, R.; Rodriguez-Contreras, F.J.; Rodríguez-Pascual, M.d.l.N.; Calderín-Morales, M.d.P. Usefulness of Lung Ultrasound Examinations Performed by Primary Care Physicians in Patients With Suspected COVID-19. J. Ultrasound Med. 2021, 40, 741–750.

- Ebrahimzadeh, S.; Islam, N.; Dawit, H.; Salameh, J.-P.; Kazi, S.; Fabiano, N.; Treanor, L.; Absi, M.; Ahmad, F.; Rooprai, P.; et al. Thoracic Imaging Tests for the Diagnosis of COVID-19. Cochrane Database Syst. Rev. 2022, 5, CD013639.

- Caroselli, C.; Blaivas, M.; Marcosignori, M.; Tung Chen, Y.; Falzetti, S.; Mariz, J.; Fiorentino, R.; Pinto Silva, R.; Gomes Cochicho, J.; Sebastiani, S.; et al. Early Lung Ultrasound Findings in Patients With COVID-19 Pneumonia: A Retrospective Multicenter Study of 479 Patients. J. Ultrasound Med. 2022, 41, 2547–2556.

- Camacho, J.; Muñoz, M.; Genovés, V.; Herraiz, J.L.; Ortega, I.; Belarra, A.; González, R.; Sánchez, D.; Giacchetta, R.C.; Trueba-Vicente, Á.; et al. Artificial Intelligence and Democratization of the Use of Lung Ultrasound in COVID-19: On the Feasibility of Automatic Calculation of Lung Ultrasound Score. Int. J. Transl. Med. 2022, 2, 17–25.

- Tung-Chen, Y.; de Gracia, M.M.; Díez-Tascón, A.; Alonso-González, R.; Agudo-Fernández, S.; Parra-Gordo, M.L.; Ossaba-Vélez, S.; Rodríguez-Fuertes, P.; Llamas-Fuentes, R. Correlation between Chest Computed Tomography and Lung Ultrasonography in Patients with Coronavirus Disease 2019 (COVID-19). Ultrasound Med. Biol. 2020, 46, 2918–2926.

- Porcel, J.M. Pleural Diseases and COVID-19: Ubi Fumus, Ibi Ignis. Eur. Respir. J. 2020, 56, 2003308.

- Hernández-Píriz, A.; Tung-Chen, Y.; Jiménez-Virumbrales, D.; Ayala-Larrañaga, I.; Barba-Martín, R.; Canora-Lebrato, J.; Zapatero-Gaviria, A.; Casasola-Sánchez, G.G.D. Importance of Lung Ultrasound Follow-Up in Patients Who Had Recovered from Coronavirus Disease 2019: Results from a Prospective Study. J. Clin. Med. 2021, 10, 3196.

- Tung-Chen, Y.; Gil-Rodrigo, A.; Algora-Martín, A.; Llamas-Fuentes, R.; Rodríguez-Fuertes, P.; Marín-Baselga, R.; Alonso-Martínez, B.; Sanz Rodríguez, E.; Llorens Soriano, P.; Ramos-Rincón, J.-M. The lung ultrasound “Rule of 7” in the prognosis of COVID-19 patients: Results from a prospective multicentric study. Med. Clín. 2022, 159, 19–26.

- Hussain, A.; Via, G.; Melniker, L.; Goffi, A.; Tavazzi, G.; Neri, L.; Villen, T.; Hoppmann, R.; Mojoli, F.; Noble, V.; et al. Multi-Organ Point-of-Care Ultrasound for COVID-19 (PoCUS4COVID): International Expert Consensus. Crit. Care 2020, 24, 702.

- Kumar, A.; Weng, Y.; Graglia, S.; Chung, S.; Duanmu, Y.; Lalani, F.; Gandhi, K.; Lobo, V.; Jensen, T.; Nahn, J.; et al. Interobserver Agreement of Lung Ultrasound Findings of COVID-19. J. Ultrasound Med. 2021, 40, 2369–2376.

- DeSanti, R.L.; Cowan, E.A.; Kory, P.D.; Lasarev, M.R.; Schmidt, J.; Al-Subu, A.M. The Inter-Rater Reliability of Pediatric Point-of-Care Lung Ultrasound Interpretation in Children With Acute Respiratory Failure. J. Ultrasound Med. 2022, 41, 1159–1167.

- Fatima, N.; Mento, F.; Zanforlin, A.; Smargiassi, A.; Torri, E.; Perrone, T.; Demi, L. Human-to-AI Interrater Agreement for Lung Ultrasound Scoring in COVID-19 Patients. J. Ultrasound Med. 2022.

- Šustić, A.; Mirošević, M.; Szuldrzynski, K.; Marčun, R.; Haznadar, M.; Podbegar, M.; Protić, A. Inter-Observer Reliability for Different Point-of-Care Lung Ultrasound Findings in Mechanically Ventilated Critically Ill COVID-19 Patients. J. Clin. Monit. Comput. 2022, 36, 279–281.

- Tung-Chen, Y.; Ossaba-Vélez, S.; Acosta Velásquez, K.S.; Parra-Gordo, M.L.; Díez-Tascón, A.; Villén-Villegas, T.; Montero-Hernández, E.; Gutiérrez-Villanueva, A.; Trueba-Vicente, Á.; Arenas-Berenguer, I.; et al. The Impact of Different Lung Ultrasound Protocols in the Assessment of Lung Lesions in COVID-19 Patients: Is There an Ideal Lung Ultrasound Protocol? J. Ultrasound 2021, 25, 483–491.

- McHugh, M.L. Interrater Reliability: The Kappa Statistic. Biochem. Med. 2012, 22, 276–282.

- Stemler, S. A Comparison of Consensus, Consistency, and Measurement Approaches to Estimating Interrater Reliability. Pract. Assess. Res. Eval. 2019, 9, 4.

- Mateos González, M.; García de Casasola Sánchez, G.; Muñoz, F.J.T.; Proud, K.; Lourdo, D.; Sander, J.-V.; Jaimes, G.E.O.; Mader, M.; Canora Lebrato, J.; Restrepo, M.I.; et al. Comparison of Lung Ultrasound versus Chest X-ray for Detection of Pulmonary Infiltrates in COVID-19. Diagnostics 2021, 11, 373.

- Pellegrino, F.; Carnevale, A.; Bisi, R.; Cavedagna, D.; Reverberi, R.; Uccelli, L.; Leprotti, S.; Giganti, M. Best Practices on Radiology Department Workflow: Tips from the Impact of the COVID-19 Lockdown on an Italian University Hospital. Healthcare 2022, 10, 1771.

- Wang, M.; Luo, X.; Wang, L.; Estill, J.; Lv, M.; Zhu, Y.; Wang, Q.; Xiao, X.; Song, Y.; Lee, M.S.; et al. A Comparison of Lung Ultrasound and Computed Tomography in the Diagnosis of Patients with COVID-19: A Systematic Review and Meta-Analysis. Diagnostics 2021, 11, 1351.

| Score | Finding | Fleiss Kappa (k) | 95% CI | Agreement |
|---|---|---|---|---|
| 0 | Normal/A-lines | 0.74 | [0.71–0.76] | Substantial |
| 1 | Individual B-lines | 0.36 | [0.33–0.39] | Fair |
| 2 | Confluent B-lines < 50% | 0.26 | [0.24–0.29] | Fair |
| 3 | Confluent B-lines > 50% & Consolidations | 0.50 | [0.47–0.53] | Moderate |
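For readers who want to reproduce values of this kind, the sketch below computes Fleiss' kappa from raw ratings and attaches a verbal agreement label. It is a minimal illustration, not the authors' analysis code: the function names are hypothetical, the input layout (one list of scores per video, same number of raters for every video) is an assumption, and the label thresholds follow the commonly used Landis and Koch scale, which is consistent with the labels in the table but is not confirmed by this section.

```python
from collections import Counter


def fleiss_kappa(ratings, categories):
    """Fleiss' kappa for `ratings`, a list with one list of labels per item.

    Every item must be rated by the same number of raters (at least two).
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(item) != n_raters for item in ratings):
        raise ValueError("each item needs the same number (>= 2) of ratings")

    # counts[i][j]: number of raters who assigned category j to item i
    counts = [[Counter(item)[c] for c in categories] for item in ratings]

    # Observed agreement: mean over items of the per-item agreement P_i
    p_items = [
        (sum(n * n for n in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_obs = sum(p_items) / n_items

    # Chance agreement from the overall proportion of each category
    total = n_items * n_raters
    p_cat = [sum(row[j] for row in counts) / total for j in range(len(categories))]
    p_exp = sum(p * p for p in p_cat)

    if p_exp == 1.0:
        raise ValueError("kappa is undefined when only one category is used")
    return (p_obs - p_exp) / (1.0 - p_exp)


def agreement_label(kappa):
    """Verbal label on the Landis and Koch scale (assumed, see lead-in)."""
    if kappa < 0.0:
        return "Poor"
    for upper, label in [(0.20, "Slight"), (0.40, "Fair"),
                         (0.60, "Moderate"), (0.80, "Substantial")]:
        if kappa <= upper:
            return label
    return "Almost perfect"


if __name__ == "__main__":
    # Toy data: 4 videos, 3 raters each, scores 0-3 (not study data)
    toy = [[0, 0, 0], [1, 2, 1], [3, 3, 2], [0, 1, 0]]
    k = fleiss_kappa(toy, categories=[0, 1, 2, 3])
    print(round(k, 3), agreement_label(k))
```

A per-finding value such as those in the table could be obtained by dichotomizing the scores first, for example `fleiss_kappa([[s == 0 for s in item] for item in toy], [True, False])` for score 0 against all other scores. Whether the study used exactly this procedure is an assumption.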


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Herraiz, J.L.; Freijo, C.; Camacho, J.; Muñoz, M.; González, R.; Alonso-Roca, R.; Álvarez-Troncoso, J.; Beltrán-Romero, L.M.; Bernabeu-Wittel, M.; Blancas, R.; et al. Inter-Rater Variability in the Evaluation of Lung Ultrasound in Videos Acquired from COVID-19 Patients. Appl. Sci. 2023, 13, 1321. https://doi.org/10.3390/app13031321


APA Style: Herraiz, J. L., Freijo, C., Camacho, J., Muñoz, M., González, R., Alonso-Roca, R., Álvarez-Troncoso, J., Beltrán-Romero, L. M., Bernabeu-Wittel, M., Blancas, R., Calvo-Cebrián, A., Campo-Linares, R., Chehayeb-Morán, J., Chorda-Ribelles, J., García-Rubio, S., García-de-Casasola, G., Gil-Rodrigo, A., Henríquez-Camacho, C., Hernandez-Píriz, A., ... Tung-Chen, Y. (2023). Inter-Rater Variability in the Evaluation of Lung Ultrasound in Videos Acquired from COVID-19 Patients. Applied Sciences, 13(3), 1321. https://doi.org/10.3390/app13031321