Finding the Least Motion-Blurred Image by Reusing Early Features of Object Detection Network

Abstract

1. Introduction

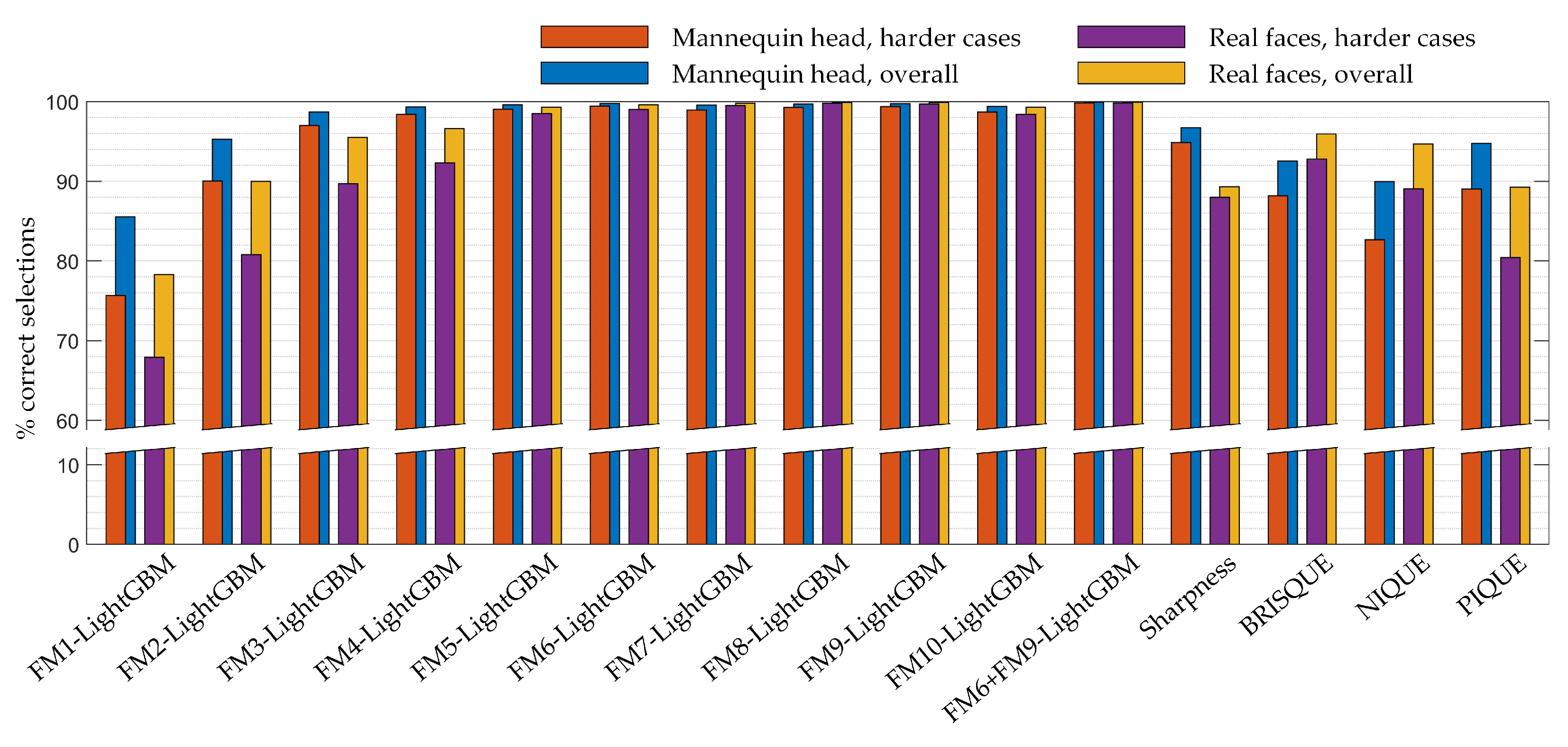

- Proposed a method for video frame quality evaluation by predicting the level of motion blur. The method reuses early features of the convolutional neural network (CNN) that already performs human head detection in the same task (3D head reconstruction).

- Presented a dataset construction method/guide for developing motion blur evaluation methods for video frames or images.

- Performed a comparative evaluation of feature sources (feature maps of the CNN) used for motion blur evaluation.

- Presented comparative results of image quality evaluation methods using mannequin head videos; the results show that the proposed method is effective and performs better than the simple sharpness-based metric and the BRISQUE, NIQE, and PIQE blind image quality metrics in choosing less motion-blurred (better-quality) frames for 3D human head reconstruction applications.

2. Materials and Methods

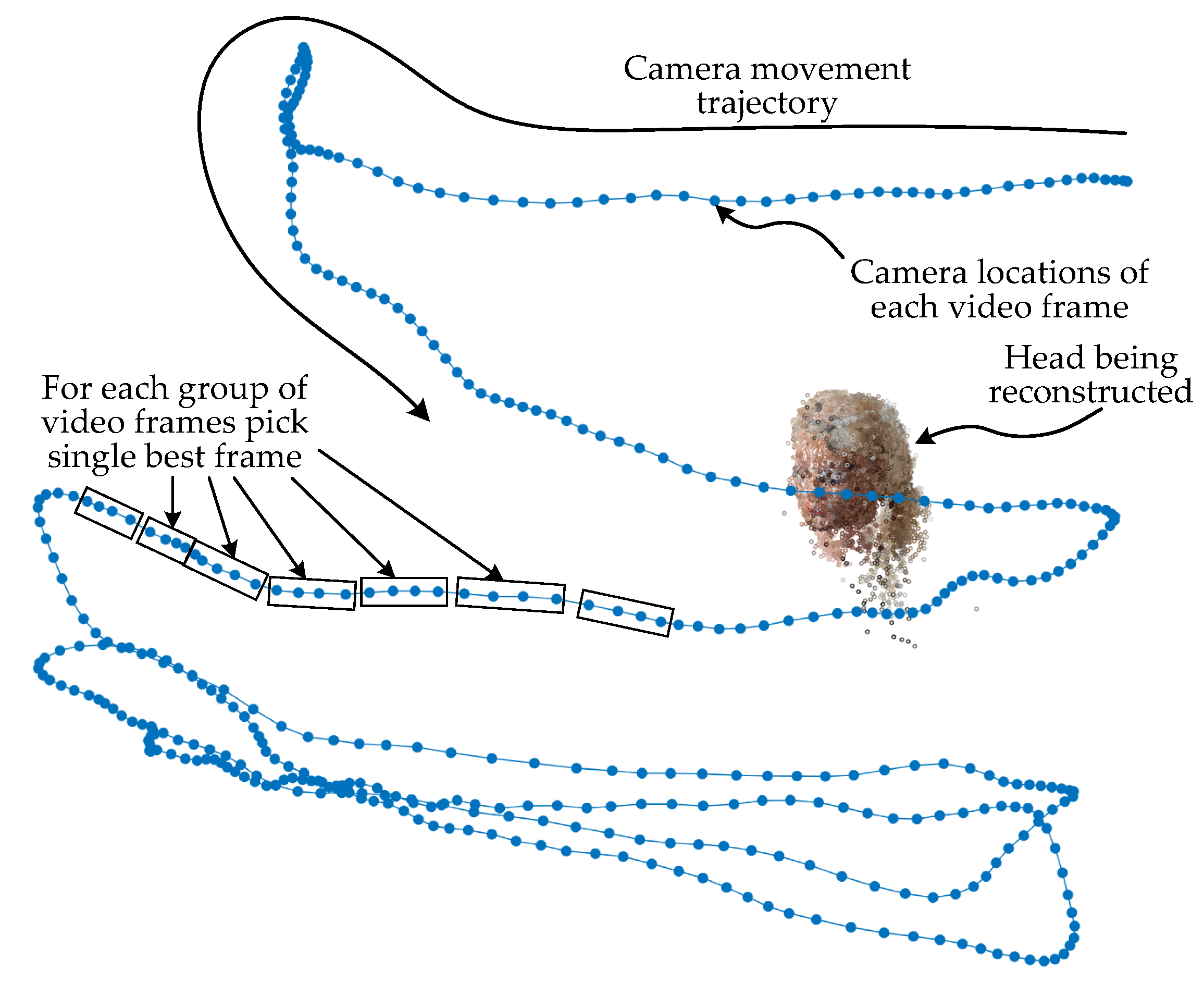

2.1. Motivation and Method for Finding the Least Motion-Blurred Image

2.1.1. Proposed Method

- Detecting the head region (BBox); the BBox found in the head’s background removal step is reused.

- Limiting the feature map of the L-th convolutional layer to the region corresponding to the detected head BBox, which yields a subarray of K-dimensional feature vectors.

- Sampling a subset of 100 feature vectors uniformly from this subarray for testing/evaluation. For training data, a subset of 50 feature vectors is randomly sampled instead.

- Providing the sampled features as inputs to the LightGBM ranker model during inference (image quality assessment). During training, the sampled features are given to the LightGBM ranker model together with the target outputs (ranking information).

- Making a decision: a higher score indicates less motion blur (better quality).
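The steps above can be sketched in Python as follows. This is a minimal sketch, not the authors' implementation: the helper names (`crop_feature_map`, `sample_uniform`, `frame_score`), the `(left, top, right, bottom)` BBox convention, evenly spaced sampling over the flattened subarray, and averaging the per-vector scores into a single frame score are all assumptions made for illustration. `score_fn` stands in for the trained ranker (for example, the `predict` method of a fitted LightGBM ranker).

```python
import numpy as np


def crop_feature_map(fmap, bbox, image_size):
    """Limit an (H, W, K) feature map to the head BBox given in image pixels."""
    h, w, _ = fmap.shape
    img_h, img_w = image_size
    x0, y0, x1, y1 = bbox  # assumed convention: (left, top, right, bottom)
    # Map pixel coordinates onto the coarser feature-map grid.
    c0 = min(max(int(np.floor(x0 * w / img_w)), 0), w - 1)
    r0 = min(max(int(np.floor(y0 * h / img_h)), 0), h - 1)
    c1 = min(max(int(np.ceil(x1 * w / img_w)), c0 + 1), w)
    r1 = min(max(int(np.ceil(y1 * h / img_h)), r0 + 1), h)
    return fmap[r0:r1, c0:c1, :]


def sample_uniform(vectors, n):
    """Pick n K-dimensional vectors at evenly spaced positions (repeats if fewer exist)."""
    flat = vectors.reshape(-1, vectors.shape[-1])
    idx = np.linspace(0, len(flat) - 1, num=n).round().astype(int)
    return flat[idx]


def frame_score(fmap, bbox, image_size, score_fn, n=100):
    """Score one frame; a higher score is read as less motion blur."""
    feats = sample_uniform(crop_feature_map(fmap, bbox, image_size), n)
    # Averaging the per-vector scores is an assumption of this sketch.
    return float(np.mean(score_fn(feats)))


# Toy usage with random features and a stand-in scoring function.
rng = np.random.default_rng(0)
toy_fmap = rng.normal(size=(64, 64, 8))
toy_ranker = lambda x: x.sum(axis=1)  # hypothetical placeholder for a trained ranker
toy_score = frame_score(toy_fmap, (128, 96, 384, 416), (512, 512), toy_ranker)
```

Keeping the ranker behind a plain callable makes it easy to compare different feature maps (different values of L) without changing the sampling code.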

2.1.2. Standard Methods

Image Sharpness as Image Quality Proxy

- Detection of the head region (BBox);

- Determination of region of interest (RoI) parameters: the RoI is a square whose side length equals the shorter edge of the head’s BBox;

- Cropping the RoI from the image and resizing it to px image patch;

- Filtering the cropped and resized patch using a Laplacian of Gaussian (LoG) filter ( filter size, );

- Calculating the variance of the filtered patch;

- Making a decision: a larger variance represents a higher image sharpness, hence less motion blur.
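The sharpness proxy described above can be illustrated with a short NumPy sketch. It assumes the patch has already been cropped and resized to a grayscale array; the kernel size (9) and sigma (1.4) are placeholder values chosen for illustration, not the settings used in the study, and the function names are hypothetical.

```python
import numpy as np


def log_kernel(size=9, sigma=1.4):
    """Build a Laplacian of Gaussian kernel (size and sigma are assumed values)."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    r2 = x ** 2 + y ** 2
    k = (r2 - 2 * sigma ** 2) / sigma ** 4 * np.exp(-r2 / (2 * sigma ** 2))
    return k - k.mean()  # force zero mean so flat regions give zero response


def sharpness(patch, size=9, sigma=1.4):
    """Variance of the LoG-filtered patch, computed over the 'valid' region only."""
    k = log_kernel(size, sigma)
    windows = np.lib.stride_tricks.sliding_window_view(patch.astype(float), k.shape)
    # The kernel is symmetric, so correlation and convolution coincide here.
    response = np.einsum("ijkl,kl->ij", windows, k)
    return float(response.var())


# Toy usage: a noisy patch versus the same patch with simulated horizontal motion blur.
rng = np.random.default_rng(0)
sharp_patch = rng.random((64, 64))
blurred_patch = np.stack(
    [np.convolve(row, np.ones(7) / 7, mode="same") for row in sharp_patch]
)
less_blurred_is_first = sharpness(sharp_patch) > sharpness(blurred_patch)
```

A larger returned value is interpreted as a sharper patch, matching the decision rule in the last step.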

No Reference Image Quality Metrics

- BRISQUE: Blind/Referenceless Image Spatial Quality Evaluator [78]. BRISQUE is a model trained on databases of distorted images; it is "opinion-aware", meaning that the training images are annotated with subjective quality scores.

- NIQE: Natural Image Quality Evaluator [79]. The NIQE model is trained on undistorted images; therefore, it can assess the quality of images with arbitrary distortions and does not rely on human opinions when measuring image quality. However, because it is not trained on the subjective quality scores of viewers, the NIQE score might predict human perception of quality less accurately.

- PIQE: Perception-based Image Quality Evaluator [80]. The PIQE algorithm is unsupervised, meaning that it does not require a trained model. It is also opinion-unaware. PIQE is suitable for measuring the quality of images with arbitrary distortion and, in most cases, performs similarly to NIQE.

- Detection of the head region (BBox);

- Determination of region of interest (RoI) parameters: the RoI is a square whose side length equals the shorter edge of the head’s BBox;

- Cropping the RoI from the image and resizing it to px image patch;

- Providing the patch as input for the BRISQUE, NIQE, or PIQE method;

- Making a decision: a smaller score indicates better perceptual quality.
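The decision rules differ in direction between methods: the ranker score and the LoG variance are "higher is better", whereas BRISQUE, NIQE, and PIQE are "lower is better". The sketch below shows one way to apply these rules to a pair of images and to compute the percentage of correct selections. The method keys, function names, and the tie-breaking rule (ties go to the first image) are assumptions made for illustration.

```python
# Score direction per method, following the decision step of each procedure.
HIGHER_IS_BETTER = {
    "proposed": True,    # ranker score: higher means less motion blur
    "sharpness": True,   # LoG variance: larger means sharper
    "brisque": False,    # smaller score means better perceptual quality
    "niqe": False,
    "piqe": False,
}


def pick_less_blurred(score_a, score_b, method):
    """Return 0 if the first image is judged less blurred, otherwise 1."""
    if HIGHER_IS_BETTER[method]:
        return 0 if score_a >= score_b else 1
    return 0 if score_a <= score_b else 1


def percent_correct(pairs, method):
    """pairs: iterable of (score_a, score_b, index_of_truly_less_blurred_image)."""
    pairs = list(pairs)
    hits = sum(pick_less_blurred(a, b, method) == truth for a, b, truth in pairs)
    return 100.0 * hits / len(pairs)


# Toy usage with made-up scores (not results from the study).
toy_pairs = [(20.0, 35.0, 0), (40.0, 30.0, 1), (10.0, 50.0, 1)]
toy_accuracy = percent_correct(toy_pairs, "brisque")
```

Storing the direction in one table avoids sign mistakes when the same evaluation loop is reused across all compared methods.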

2.2. Evaluation of the Methods Used for Determination of the Less Motion-Blurred Image

2.3. Data Preparation

2.4. Software Used

- MATLAB programming and numeric computing platform (version R2022a, The MathWorks Inc., Natick, MA, USA) for the implementation of the proposed algorithm (except for training the LGBMRanker model) and for data analysis and visualization;

- SSD-based upper-body and head detector (https://github.com/AVAuco/ssd_people, accessed on 15 September 2022) [84] for the detection of heads and as a source of features for the image similarity sorting;

- Python (version 3.9.10) (https://www.python.org, accessed on 15 September 2022) [90], an interpreted, high-level, general-purpose programming language. The NumPy, SciPy, and LightGBM packages were used for the machine learning applications (LGBMRanker tool).

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| CNN | Convolutional Neural Network |
| SSD | Single Shot Detector |
| FM | Feature Map |
| BBox | Bounding Box |
| RoI | Region of Interest |
| LoG | Laplacian of Gaussian |
| AT | Affine Transformation |
| MB | Motion Blur |
| SfM | Structure from Motion |

References

1. Xu, Z.; Wu, T.; Shen, Y.; Wu, L. Three dimentional reconstruction of large cultural heritage objects based on UAV video and TLS data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 985.

2. Matuzevičius, D.; Serackis, A.; Navakauskas, D. Mathematical models of oversaturated protein spots. Elektron. ir elektrotechnika 2007, 73, 63–68.

3. Matuzevičius, D. Synthetic Data Generation for the Development of 2D Gel Electrophoresis Protein Spot Models. Appl. Sci. 2022, 12, 4393.

4. Hamzah, N.B.; Setan, H.; Majid, Z. Reconstruction of traffic accident scene using close-range photogrammetry technique. Geoinf. Sci. J. 2010, 10, 17–37.

5. Caradonna, G.; Tarantino, E.; Scaioni, M.; Figorito, B. Multi-image 3D reconstruction: A photogrammetric and structure from motion comparative analysis. In Proceedings of the International Conference on Computational Science and Its Applications, Melbourne, Australia, 2–5 July 2018; pp. 305–316.

6. Žuraulis, V.; Matuzevičius, D.; Serackis, A. A method for automatic image rectification and stitching for vehicle yaw marks trajectory estimation. Promet-Traffic Transp. 2016, 28, 23–30.

7. Sledevič, T.; Serackis, A.; Plonis, D. FPGA Implementation of a Convolutional Neural Network and Its Application for Pollen Detection upon Entrance to the Beehive. Agriculture 2022, 12, 1849.

8. Genchi, S.A.; Vitale, A.J.; Perillo, G.M.; Delrieux, C.A. Structure-from-motion approach for characterization of bioerosion patterns using UAV imagery. Sensors 2015, 15, 3593–3609.

9. Mistretta, F.; Sanna, G.; Stochino, F.; Vacca, G. Structure from motion point clouds for structural monitoring. Remote Sens. 2019, 11, 1940.

10. Varna, D.; Abromavičius, V. A System for a Real-Time Electronic Component Detection and Classification on a Conveyor Belt. Appl. Sci. 2022, 12, 5608.

11. Matuzevicius, D.; Navakauskas, D. Feature selection for segmentation of 2-D electrophoresis gel images. In Proceedings of the IEEE 2008 11th International Biennial Baltic Electronics Conference, Tallinn, Estonia, 6–8 October 2008; pp. 341–344.

12. Zeraatkar, M.; Khalili, K. A Fast and Low-Cost Human Body 3D Scanner Using 100 Cameras. J. Imaging 2020, 6, 21.

13. Straub, J.; Kading, B.; Mohammad, A.; Kerlin, S. Characterization of a large, low-cost 3D scanner. Technologies 2015, 3, 19–36.

14. Straub, J.; Kerlin, S. Development of a large, low-cost, instant 3D scanner. Technologies 2014, 2, 76–95.

15. Özyeşil, O.; Voroninski, V.; Basri, R.; Singer, A. A survey of structure from motion. Acta Numer. 2017, 26, 305–364.

16. Iglhaut, J.; Cabo, C.; Puliti, S.; Piermattei, L.; O’Connor, J.; Rosette, J. Structure from motion photogrammetry in forestry: A review. Curr. For. Rep. 2019, 5, 155–168.

17. Wei, Y.M.; Kang, L.; Yang, B.; Wu, L.D. Applications of structure from motion: A survey. J. Zhejiang Univ. Sci. C 2013, 14, 486–494.

18. Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. ‘Structure-from-Motion’ photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

19. Jiang, R.; Jáuregui, D.V.; White, K.R. Close-range photogrammetry applications in bridge measurement: Literature review. Measurement 2008, 41, 823–834.

20. Barbero-García, I.; Cabrelles, M.; Lerma, J.L.; Marqués-Mateu, Á. Smartphone-based close-range photogrammetric assessment of spherical objects. Photogramm. Rec. 2018, 33, 283–299.

21. Fawzy, H.E.D. The accuracy of mobile phone camera instead of high resolution camera in digital close range photogrammetry. Int. J. Civ. Eng. Technol. (IJCIET) 2015, 6, 76–85.

22. Vacca, G. Overview of open source software for close range photogrammetry. In Proceedings of the 2019 Free and Open Source Software for Geospatial, FOSS4G 2019, International Society for Photogrammetry and Remote Sensing, Bucharest, Romania, 26–30 August 2019; Volume 42, pp. 239–245.

23. Griwodz, C.; Gasparini, S.; Calvet, L.; Gurdjos, P.; Castan, F.; Maujean, B.; Lillo, G.D.; Lanthony, Y. AliceVision Meshroom: An open-source 3D reconstruction pipeline. In Proceedings of the 12th ACM Multimedia Systems Conference-MMSys ’21, Istanbul, Turkey, 28 September–1 October 2021; ACM Press: New York, NY, USA, 2021.

24. Schönberger, J.L.; Frahm, J.M. Structure-from-Motion Revisited. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

25. Schönberger, J.L.; Zheng, E.; Pollefeys, M.; Frahm, J.M. Pixelwise View Selection for Unstructured Multi-View Stereo. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016.

26. Wu, C. VisualSFM: A Visual Structure from Motion System. Available online: http://ccwu.me/vsfm/ (accessed on 5 December 2022).

27. Moulon, P.; Monasse, P.; Perrot, R.; Marlet, R. OpenMVG: Open multiple view geometry. In Proceedings of the International Workshop on Reproducible Research in Pattern Recognition, Cancún, Mexico, 4 December 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 60–74.

28. Regard3D. Available online: www.regard3d.org/ (accessed on 5 December 2022).

29. OpenDroneMap: A Command Line Toolkit to Generate Maps, Point Clouds, 3D Models and DEMs from Drone, Balloon or Kite Images. Available online: https://github.com/OpenDroneMap/ODM/ (accessed on 5 December 2022).

30. Fuhrmann, S.; Langguth, F.; Goesele, M. MVE-A Multi-View Reconstruction Environment. In Proceedings of the Eurographics Workshop on Graphics and Cultural Heritage, Darmstadt, Germany, 6–8 October 2014; pp. 11–18.

31. Rupnik, E.; Daakir, M.; Deseilligny, M.P. MicMac–a free, open-source solution for photogrammetry. Open Geospat. Data Softw. Stand. 2017, 2, 1–9.

32. Nikolov, I.; Madsen, C. Benchmarking close-range structure from motion 3D reconstruction software under varying capturing conditions. In Proceedings of the Euro-Mediterranean Conference; Springer: Berlin/Heidelberg, Germany, 2016; pp. 15–26.

33. Pixpro. Available online: https://www.pix-pro.com/ (accessed on 5 December 2022).

34. Agisoft. Metashape. Available online: https://www.agisoft.com/ (accessed on 5 December 2022).

35. 3Dflow. 3DF Zephyr. Available online: https://www.3dflow.net/ (accessed on 5 December 2022).

36. Bentley. ContextCapture. Available online: https://www.bentley.com/software/contextcapture-viewer/ (accessed on 5 December 2022).

37. Autodesk. ReCap. Available online: https://www.autodesk.com/products/recap/ (accessed on 5 December 2022).

38. CapturingReality. RealityCapture. Available online: https://www.capturingreality.com/ (accessed on 5 December 2022).

39. Technologies, P. PhotoModeler. Available online: https://www.photomodeler.com/ (accessed on 5 December 2022).

40. Pix4D. PIX4Dmapper. Available online: https://www.pix4d.com/product/pix4dmapper-photogrammetry-software/ (accessed on 5 December 2022).

41. DroneDeploy. Available online: https://www.dronedeploy.com/ (accessed on 5 December 2022).

42. Trimble. Inpho. Available online: https://geospatial.trimble.com/products-and-solutions/trimble-inpho (accessed on 5 December 2022).

43. OpenDroneMap. WebODM. Available online: https://www.opendronemap.org/webodm/ (accessed on 5 December 2022).

44. AG, P. Elcovision 10. Available online: https://en.elcovision.com/ (accessed on 5 December 2022).

45. Trojnacki, M.; Dąbek, P.; Jaroszek, P. Analysis of the Influence of the Geometrical Parameters of the Body Scanner on the Accuracy of Reconstruction of the Human Figure Using the Photogrammetry Technique. Sensors 2022, 22, 9181.

46. Mitchell, H. Applications of digital photogrammetry to medical investigations. ISPRS J. Photogramm. Remote Sens. 1995, 50, 27–36.

47. Barbero-García, I.; Pierdicca, R.; Paolanti, M.; Felicetti, A.; Lerma, J.L. Combining machine learning and close-range photogrammetry for infant’s head 3D measurement: A smartphone-based solution. Measurement 2021, 182, 109686.

48. Barbero-García, I.; Lerma, J.L.; Mora-Navarro, G. Fully automatic smartphone-based photogrammetric 3D modelling of infant’s heads for cranial deformation analysis. ISPRS J. Photogramm. Remote Sens. 2020, 166, 268–277.

49. Lerma, J.L.; Barbero-García, I.; Marqués-Mateu, Á.; Miranda, P. Smartphone-based video for 3D modelling: Application to infant’s cranial deformation analysis. Measurement 2018, 116, 299–306.

50. Barbero-García, I.; Lerma, J.L.; Marqués-Mateu, Á.; Miranda, P. Low-cost smartphone-based photogrammetry for the analysis of cranial deformation in infants. World Neurosurg. 2017, 102, 545–554.

51. Ariff, M.F.M.; Setan, H.; Ahmad, A.; Majid, Z.; Chong, A. Measurement of the human face using close-range digital photogrammetry technique. In Proceedings of the International Symposium and Exhibition on Geoinformation, GIS Forum, Penang, Malaysia, 27–29 September 2005.

52. Schaaf, H.; Malik, C.Y.; Streckbein, P.; Pons-Kuehnemann, J.; Howaldt, H.P.; Wilbrand, J.F. Three-dimensional photographic analysis of outcome after helmet treatment of a nonsynostotic cranial deformity. J. Craniofacial Surg. 2010, 21, 1677–1682.

53. Utkualp, N.; Ercan, I. Anthropometric measurements usage in medical sciences. BioMed Res. Int. 2015, 2015.

54. Galantucci, L.M.; Lavecchia, F.; Percoco, G. 3D Face measurement and scanning using digital close range photogrammetry: Evaluation of different solutions and experimental approaches. In Proceedings of the International Conference on 3D Body Scanning Technologies, Lugano, Switzerland, 9–20 October 2010; p. 52.

55. Galantucci, L.M.; Percoco, G.; Di Gioia, E. New 3D digitizer for human faces based on digital close range photogrammetry: Application to face symmetry analysis. Int. J. Digit. Content Technol. Its Appl. 2012, 6, 703.

56. Jones, P.R.; Rioux, M. Three-dimensional surface anthropometry: Applications to the human body. Opt. Lasers Eng. 1997, 28, 89–117.

57. Löffler-Wirth, H.; Willscher, E.; Ahnert, P.; Wirkner, K.; Engel, C.; Loeffler, M.; Binder, H. Novel anthropometry based on 3D-bodyscans applied to a large population based cohort. PLoS ONE 2016, 11, e0159887.

58. Clausner, T.; Dalal, S.S.; Crespo-García, M. Photogrammetry-based head digitization for rapid and accurate localization of EEG electrodes and MEG fiducial markers using a single digital SLR camera. Front. Neurosci. 2017, 11, 264.

59. Abromavičius, V.; Serackis, A. Eye and EEG activity markers for visual comfort level of images. Biocybern. Biomed. Eng. 2018, 38, 810–818.

60. Abromavicius, V.; Serackis, A.; Katkevicius, A.; Plonis, D. Evaluation of EEG-based Complementary Features for Assessment of Visual Discomfort based on Stable Depth Perception Time. Radioengineering 2018, 27.

61. Leipner, A.; Obertová, Z.; Wermuth, M.; Thali, M.; Ottiker, T.; Sieberth, T. 3D mug shot: 3D head models from photogrammetry for forensic identification. Forensic Sci. Int. 2019, 300, 6–12.

62. Battistoni, G.; Cassi, D.; Magnifico, M.; Pedrazzi, G.; Di Blasio, M.; Vaienti, B.; Di Blasio, A. Does Head Orientation Influence 3D Facial Imaging? A Study on Accuracy and Precision of Stereophotogrammetric Acquisition. Int. J. Environ. Res. Public Health 2021, 18, 4276.

63. Trujillo-Jiménez, M.A.; Navarro, P.; Pazos, B.; Morales, L.; Ramallo, V.; Paschetta, C.; De Azevedo, S.; Ruderman, A.; Pérez, O.; Delrieux, C.; et al. body2vec: 3D Point Cloud Reconstruction for Precise Anthropometry with Handheld Devices. J. Imaging 2020, 6, 94.

64. Heymsfield, S.B.; Bourgeois, B.; Ng, B.K.; Sommer, M.J.; Li, X.; Shepherd, J.A. Digital anthropometry: A critical review. Eur. J. Clin. Nutr. 2018, 72, 680–687.

65. Perini, T.A.; Oliveira, G.L.D.; Ornellas, J.D.S.; Oliveira, F.P.D. Technical error of measurement in anthropometry. Rev. Bras. De Med. Do Esporte 2005, 11, 81–85.

66. Kouchi, M.; Mochimaru, M. Errors in landmarking and the evaluation of the accuracy of traditional and 3D anthropometry. Appl. Ergon. 2011, 42, 518–527.

67. Zhuang, Z.; Shu, C.; Xi, P.; Bergman, M.; Joseph, M. Head-and-face shape variations of US civilian workers. Appl. Ergon. 2013, 44, 775–784.

68. Kuo, C.C.; Wang, M.J.; Lu, J.M. Developing sizing systems using 3D scanning head anthropometric data. Measurement 2020, 152, 107264.

69. Pang, T.Y.; Lo, T.S.T.; Ellena, T.; Mustafa, H.; Babalija, J.; Subic, A. Fit, stability and comfort assessment of custom-fitted bicycle helmet inner liner designs, based on 3D anthropometric data. Appl. Ergon. 2018, 68, 240–248.

70. Ban, K.; Jung, E.S. Ear shape categorization for ergonomic product design. Int. J. Ind. Ergon. 2020, 102962.

71. Verwulgen, S.; Lacko, D.; Vleugels, J.; Vaes, K.; Danckaers, F.; De Bruyne, G.; Huysmans, T. A new data structure and workflow for using 3D anthropometry in the design of wearable products. Int. J. Ind. Ergon. 2018, 64, 108–117.

72. Simmons, K.P.; Istook, C.L. Body measurement techniques: Comparing 3D body-scanning and anthropometric methods for apparel applications. J. Fash. Mark. Manag. 2003, 7, 306–332.

73. Zhao, Y.; Mo, Y.; Sun, M.; Zhu, Y.; Yang, C. Comparison of three-dimensional reconstruction approaches for anthropometry in apparel design. J. Text. Inst. 2019.

74. Psikuta, A.; Frackiewicz-Kaczmarek, J.; Mert, E.; Bueno, M.A.; Rossi, R.M. Validation of a novel 3D scanning method for determination of the air gap in clothing. Measurement 2015, 67, 61–70.

75. Paquette, S. 3D scanning in apparel design and human engineering. IEEE Comput. Graph. Appl. 1996, 16, 11–15.

76. Yao, G.; Huang, P.; Ai, H.; Zhang, C.; Zhang, J.; Zhang, C.; Wang, F. Matching wide-baseline stereo images with weak texture using the perspective invariant local feature transformer. J. Appl. Remote Sens. 2022, 16, 036502.

77. Wei, L.; Huo, J. A Global fundamental matrix estimation method of planar motion based on inlier updating. Sensors 2022, 22, 4624.

78. Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

79. Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “completely blind” image quality analyzer. IEEE Signal Process. Lett. 2012, 20, 209–212.

80. Venkatanath, N.; Praneeth, D.; Bh, M.C.; Channappayya, S.S.; Medasani, S.S. Blind image quality evaluation using perception based features. In Proceedings of the IEEE 2015 Twenty First National Conference on Communications (NCC), Bombay, India, 27 February–1 March 2015; pp. 1–6.

81. Kumar, A.; Kaur, A.; Kumar, M. Face detection techniques: A review. Artif. Intell. Rev. 2019, 52, 927–948.

82. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision, ECCV’2016, Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37.

83. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

84. Marin-Jimenez, M.J.; Kalogeiton, V.; Medina-Suarez, P.; Zisserman, A. LAEO-Net: Revisiting people Looking At Each Other in videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 3477–3485.

85. Santos, A.; Ortiz de Solórzano, C.; Vaquero, J.J.; Pena, J.M.; Malpica, N.; del Pozo, F. Evaluation of autofocus functions in molecular cytogenetic analysis. J. Microsc. 1997, 188, 264–272.

86. Matuzevičius, D.; Serackis, A. Three-Dimensional Human Head Reconstruction Using Smartphone-Based Close-Range Video Photogrammetry. Appl. Sci. 2021, 12, 229.

87. The MathWorks® Image Processing Toolbox; MathWorks: Natick, MA, USA, 2022.

88. Rothe, R.; Timofte, R.; Gool, L.V. Deep expectation of real and apparent age from a single image without facial landmarks. Int. J. Comput. Vis. 2018, 126, 144–157.

89. Rothe, R.; Timofte, R.; Gool, L.V. DEX: Deep EXpectation of apparent age from a single image. In Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCVW), Santiago, Chile, 7–13 December 2015.

90. Van Rossum, G.; Drake, F.L. Python 3 Reference Manual; CreateSpace: Scotts Valley, CA, USA, 2009.

| Layer ID/No. | Layer Name | Feature Map Shape |
|---|---|---|
| [] 1 | | |
| FM1 | Conv 1-1 | |
| FM2 | Conv 1-2 | |
| FM3 | Conv 2-1 | |
| FM4 | Conv 2-2 | |
| FM5 | Conv 3-1 | |
| FM6 | Conv 3-2 | |
| FM7 | Conv 3-3 | |
| FM8 | Conv 4-1 | |
| FM9 | Conv 4-2 | |
| FM10 | Conv 4-3 | |

Results of Selecting a Less Motion-Blurred Image from a Pair [% Correct Selections]

| Method | I1-I2 | I1-I3 | I1-I4 | I1-I5 | I2-I3 | I2-I4 | I2-I5 | I3-I4 | I3-I5 | I4-I5 | Avg. of Hard | Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. FM1-LightGBM | 66.7 | 84.8 | 94.1 | 97.0 | 78.5 | 91.2 | 95.6 | 80.2 | 90.3 | 77.3 | 75.7 | 85.6 |
| 2. FM2-LightGBM | 81.4 | 96.6 | 99.4 | 99.9 | 92.8 | 98.7 | 99.5 | 93.8 | 98.7 | 92.0 | 90.0 | 95.3 |
| 3. FM3-LightGBM | 93.1 | 99.5 | 100 | 100 | 98.9 | 99.9 | 99.9 | 99.0 | 99.6 | 97.1 | 97.0 | 98.7 |
| 4. FM4-LightGBM | 96.8 | 99.9 | 100 | 100 | 99.1 | 99.9 | 100 | 99.4 | 100 | 98.3 | 98.4 | 99.3 |
| 5. FM5-LightGBM | 97.1 | 99.9 | 100 | 100 | 100 | 100 | 99.8 | 100 | 99.6 | 99.0 | 99.6 | |
| 6. FM6-LightGBM | 98.1 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | | | | |
| 7. FM7-LightGBM | 96.3 | 99.9 | 100 | 100 | 99.6 | 100 | 100 | 100 | 99.9 | 99.0 | 99.6 | |
| 8. FM8-LightGBM | 98.2 | 99.9 | 100 | 100 | 99.2 | 100 | 100 | 100 | 99.8 | 99.3 | 99.7 | |
| 9. FM9-LightGBM | 99.9 | 100 | 100 | 99.4 | 99.9 | 100 | 100 | 99.9 | 99.7 | | | |
| 10. FM10-LightGBM | 97.1 | 99.7 | 99.9 | 100 | 98.3 | 99.8 | 99.9 | 99.6 | 99.9 | 99.7 | 98.7 | 99.4 |
| 11. Sharpness | 95.6 | 98.2 | 98.5 | 98.2 | 98.0 | 98.5 | 98.3 | 94.5 | 96.2 | 91.4 | 94.9 | 96.7 |
| 12. BRISQUE | 68.9 | 87.6 | 94.8 | 97.4 | 90.6 | 96.6 | 98.0 | 96.8 | 98.0 | 96.5 | 88.1 | 92.5 |
| 13. NIQE | 96.8 | 98.2 | 98.9 | 99.4 | 97.7 | 97.4 | 98.5 | 66.4 | 76.8 | 69.8 | 82.7 | 90.0 |
| 14. PIQE | 85.6 | 98.3 | 99.4 | 99.9 | 95.7 | 98.9 | 99.2 | 92.3 | 95.7 | 82.5 | 89.0 | 94.8 |

| Feature Map | Metric | FM2 | FM3 | FM4 | FM5 | FM6 | FM7 | FM8 | FM9 | FM10 |
|---|---|---|---|---|---|---|---|---|---|---|
| FM1 | Avg. of Hard | 89.91 | 96.13 | 98.21 | 99.17 | 99.22 | 99.10 | 99.01 | 99.24 | 98.71 |
| FM1 | Overall | 95.33 | 98.28 | 99.25 | 99.66 | 99.68 | 99.63 | 99.57 | 99.69 | 99.42 |
| FM2 | Avg. of Hard | | 96.27 | 98.14 | 99.16 | 99.41 | 99.15 | 99.17 | 99.42 | 98.95 |
| FM2 | Overall | | 98.38 | 99.21 | 99.65 | 99.76 | 99.65 | 99.66 | 99.77 | 99.57 |
| FM3 | Avg. of Hard | | | 98.30 | 99.28 | 99.45 | 99.39 | 99.41 | 99.51 | 99.17 |
| FM3 | Overall | | | 99.29 | 99.70 | 99.78 | 99.75 | 99.76 | 99.80 | 99.65 |
| FM4 | Avg. of Hard | | | | 99.56 | 99.58 | 99.54 | 99.64 | 99.67 | 99.52 |
| FM4 | Overall | | | | 99.82 | 99.83 | 99.81 | 99.86 | 99.87 | 99.81 |
| FM5 | Avg. of Hard | | | | | 99.32 | 99.47 | 99.68 | 99.74 | 99.58 |
| FM5 | Overall | | | | | 99.72 | 99.79 | 99.87 | 99.89 | 99.82 |
| FM6 | Avg. of Hard | | | | | | 99.37 | 99.67 | 99.81 | 99.72 |
| FM6 | Overall | | | | | | 99.74 | 99.86 | 99.92 | 99.89 |
| FM7 | Avg. of Hard | | | | | | | 99.65 | 99.71 | 99.66 |
| FM7 | Overall | | | | | | | 99.86 | 99.88 | 99.86 |
| FM8 | Avg. of Hard | | | | | | | | 99.38 | 99.26 |
| FM8 | Overall | | | | | | | | 99.73 | 99.68 |
| FM9 | Avg. of Hard | | | | | | | | | 99.19 |
| FM9 | Overall | | | | | | | | | 99.66 |

Results of Selecting a Less Motion-Blurred Image from a Pair [% Correct Selections]

| Method | I1-I2 | I1-I3 | I1-I4 | I1-I5 | I2-I3 | I2-I4 | I2-I5 | I3-I4 | I3-I5 | I4-I5 | Avg. of Hard | Overall |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. FM1-LightGBM | 58.7 | 75.1 | 86.2 | 92.2 | 70.4 | 83.6 | 90.9 | 71.8 | 83.4 | 70.9 | 67.9 | 78.3 |
| 2. FM2-LightGBM | 63.7 | 88.4 | 96.4 | 98.9 | 84.3 | 96.9 | 99.1 | 87.9 | 96.9 | 87.5 | 80.8 | 90.0 |
| 3. FM3-LightGBM | 66.7 | 97.0 | 99.7 | 99.9 | 97.3 | 99.8 | 100 | 98.3 | 99.7 | 96.3 | 89.7 | 95.5 |
| 4. FM4-LightGBM | 79.2 | 97.8 | 99.8 | 100 | 97.5 | 99.8 | 99.9 | 97.8 | 99.2 | 94.8 | 92.3 | 96.6 |
| 5. FM5-LightGBM | 95.1 | 99.6 | 99.9 | 100 | 99.3 | 99.9 | 100 | 99.8 | 100 | 99.7 | 98.5 | 99.3 |
| 6. FM6-LightGBM | 96.0 | 99.8 | 100 | 100 | 99.9 | 100 | 100 | 100 | 100 | 100 | 99.0 | 99.6 |
| 7. FM7-LightGBM | 98.1 | 100 | 100 | 100 | 99.9 | 100 | 100 | 100 | 100 | 100 | 99.5 | 99.8 |
| 8. FM8-LightGBM | 100 | 100 | 100 | 99.9 | 100 | 100 | 100 | 100 | 100 | | | |
| 9. FM9-LightGBM | 98.9 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 99.7 | |
| 10. FM10-LightGBM | 94.4 | 99.7 | 100 | 100 | 99.6 | 99.9 | 100 | 99.9 | 100 | 99.8 | 98.4 | 99.3 |
| 11. Sharpness | 82.5 | 88.0 | 89.6 | 90.0 | 90.8 | 91.4 | 91.6 | 90.7 | 90.9 | 87.9 | 88.0 | 89.3 |
| 12. BRISQUE | 81.5 | 95.5 | 98.1 | 99.0 | 95.2 | 98.1 | 99.0 | 97.4 | 98.6 | 97.1 | 92.8 | 95.9 |
| 13. NIQE | 65.0 | 98.3 | 99.9 | 99.9 | 99.8 | 100 | 100 | 98.7 | 99.2 | 86.9 | 87.6 | 94.8 |
| 14. PIQE | 67.9 | 87.7 | 95.8 | 98.3 | 81.3 | 95.2 | 98.2 | 86.4 | 95.5 | 86.2 | 80.4 | 89.2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tamulionis, M.; Sledevič, T.; Abromavičius, V.; Kurpytė-Lipnickė, D.; Navakauskas, D.; Serackis, A.; Matuzevičius, D. Finding the Least Motion-Blurred Image by Reusing Early Features of Object Detection Network. Appl. Sci. 2023, 13, 1264. https://doi.org/10.3390/app13031264
