Abstract

The electric system of an electric vehicle (EV), including the motor, battery, and electronic control system, is one of its core components, and its safety has always been a major concern. This study proposes a novel EV electric-system fault-classification method, Pearson-ShuffleDarkNet37-SE-Fully Connected-Net (PSDSEF), to provide early warning of electric-system failures and to enable timely troubleshooting. First, the raw data were preprocessed, and dimensionality reduction was performed using the Pearson correlation coefficient. Second, data features were extracted with ShuffleNet and with DarkNet37-SE, an improved network based on DarkNet53, in which the inserted squeeze-and-excitation network (SENet) channel attention modules capture more fault-related information. Finally, a fully connected neural network aggregated the predictions of ShuffleNet and DarkNet37-SE and output the classification results. In the experiments, the proposed PSDSEF-based method achieved an accuracy of 97.22%. This outperforms the classical convolutional neural networks it was compared with, the best of which (ResNet101) reached 92.19%. Its training time is also shorter than the average training time of the comparison networks. PSDSEF therefore offers high classification accuracy with a small number of parameters.

1. Introduction

In 2015, 178 parties worldwide signed the Paris Agreement to address climate change after 2020 [1]. In September 2020, China first stated its “zero-carbon” targets of reaching a “carbon peak” and “carbon neutrality” [2], and it has vigorously developed renewable energy to meet this challenge. The automotive industry is one of the major energy-consuming industries, and various countries and organizations have long sought to innovate its propulsion methods. The adoption of electric vehicles (EVs) reduces the consumption and depletion of non-renewable energy sources and has become one of the effective solutions to current energy and environmental problems [3].

According to the Ministry of Public Security of China, the number of EVs had reached 16.2 million by the end of June 2023, accounting for 4.9% of all automobiles. An EV may suffer external impacts or run in high-temperature or otherwise harsh environments. In such cases its electric system, including the motor, battery, and electronic control system, may be damaged. The resulting risks include short circuits, overloads, and battery fires [4]. The damage may even cause accidents such as spontaneous combustion of the vehicle, resulting in economic losses and threats to personal safety [5]. Accurately classifying EV electric-system failures and troubleshooting them in time are therefore of great significance.

EV electric-system fault-classification methods fall into four main types: statistical analysis, physical modeling, machine learning, and synthesis (integrated) methods [6]. Statistical analysis methods analyze historical fault data to classify fault types. They apply only to scenarios that follow statistical or periodic patterns. The driving environment of an EV, the operating environment of its electric system, and the material properties of its battery packs are neither periodic nor uniform, so statistical methods are unsuitable for classifying EV electric-system failures. The physical-modeling approach models the chemical reactions, heat conduction, and current distribution inside the electric system to classify fault types [7]. It requires an accurate internal model of the electric system with precise parameters. A battery system, however, is composed of many groups of individual cells. Because each cell is used to a different degree, each one develops different deviations, and the accuracy of physical methods cannot be well guaranteed.

Current machine learning methods mainly include time series methods [8], image convolutional neural networks [9], and deep neural networks [10]. Time series methods include recurrent neural networks [11], long short-term memory networks [12], bidirectional long short-term memory networks [13], and gated networks [14]. They are mainly applicable to prediction problems with strong time series characteristics. Deep neural networks are mainly applicable to classification and prediction problems with few data dimensions that are not prone to overfitting. Image convolutional neural networks have deep convolution modules, so they are widely used in the image domain and in classification and prediction problems with more data dimensions [15]. For example, DarkNet53 achieved superior performance metrics owing to its excellent feature extraction capability, light weight, and efficiency [16]. ResNet50, Inception V3, and a SoftMax activation layer were integrated to predict the power loss of solar photovoltaic panels [17]. DeepLabv3+ with a ResNet101 backbone predicted the crack width in images of irregular cracks containing noise and shadows, and it predicted cracks in real-world concrete and asphalt [18]. DenseNet201 was applied to extract features distinguishing anterior lumbar slippage from the normal state [19]. NasNetLarge was employed to categorize the lung infection status in chest X-ray images and to discriminate between healthy and virus-infected states [20]. A depthwise-separable Xception model with a residual structure accurately recognized and classified finger veins with fewer parameters [21]. EfficientNetB0 was used to monitor the structure of bridges and to repair faulty components [22]. GoogLeNet learned the information characteristics of known deposits and accurately classified remote sensing images of minerals [23].
An improved ShuffleNet and a Kalman filter were deployed on a UAV to monitor and calculate the distance between people in public areas, helping to prevent the spread of infectious diseases [24]. A multi-fault detection method for P2 diesel hybrid EVs, based on a support vector machine, was proposed to diagnose coupled faults of the generator, motor, and battery [25]. It classifies multiple faults accurately, but only into four categories: no fault, diesel engine fault, motor fault, and power battery fault. It cannot further resolve the specific fault types within these categories [25]. Deep learning and blockchain-based EV fault detection frameworks were proposed for detecting tire pressure, temperature, and battery faults. That study used CNN and LSTM deep learning models to predict the presence of faults with 70% accuracy [26].

In recent years, attention mechanisms have been widely adopted for classification and prediction problems [27]. Multiple attention mechanisms have been proposed for image processing, natural language processing, and data prediction [28]. For example, attention mechanisms were combined with recurrent neural networks to process selected portions of an image at high resolution. This extracted the key features while ignoring irrelevant ones and improved accuracy [29]. Channel attention mechanisms, which exploit the channel structure of images, have also been applied to improve the accuracy of image classification and prediction. For instance, the squeeze-and-excitation network (SENet) channel attention mechanism was added to the YOLOv5 model to classify crop diseases by assigning higher weights to key features [30]. In addition, a two-stage spatial pooling method with an SE channel attention module was proposed and significantly improved classification performance on ImageNet [31].

An integrated approach combines multiple tools to improve prediction or classification accuracy. No single method can be trained quickly and also achieve high accuracy on a wide range of datasets. For each dataset, a network must be tested and matched through numerous iterations. In this study, inspired by ShuffleNet and DarkNet53, a deep fully connected network combines the results of both networks to improve the accuracy of EV electric-system fault classification. Inspired by channel attention, this study also applies the channel attention mechanism to the proposed ShuffleDarkNet37 network. The goal is higher prediction accuracy with only a small increase in training time. The key contributions of this study are summarized as follows:

- The DarkNet53 network has few parameters and high efficiency. It uses 52 convolutional layers for multi-scale feature extraction, which speeds up training convergence, reduces gradient loss during backpropagation, and greatly improves the accuracy and generalization of the model. The proposed Pearson-ShuffleDarkNet37-SE-Fully Connected-Net (PSDSEF) is inspired by DarkNet53. Its DarkNet37 branch removes 16 convolutional layers from DarkNet53, yet PSDSEF as a whole achieves higher classification accuracy than DarkNet53. PSDSEF is lighter than DarkNet53 and reduces memory usage while maintaining accuracy.

- The ShuffleNet network has a simple structure and few parameters while achieving high accuracy. Extensive matching experiments showed the authors that ShuffleNet and the lightweight DarkNet53 network match the EV electric-system fault-classification dataset very effectively. Although ShuffleNet is structurally simple, its results compensate well for the inaccurate results of the lightweight DarkNet53 network.

- Unlike convolutional neural networks (CNNs) without channel attention, the proposed PSDSEF weights the channels of the image feature maps. It focuses on the locally important information carried by individual channels.

- To the best of the authors' knowledge, this study is the first to integrate complex CNNs, lightweight CNNs, deep fully connected networks, and channel attention mechanisms for EV electric-system fault classification.

- There is no existing research utilizing CNNs to classify the electric-system faults of EVs.

2. Proposed PSDSEF

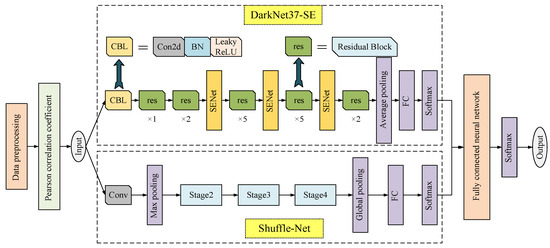

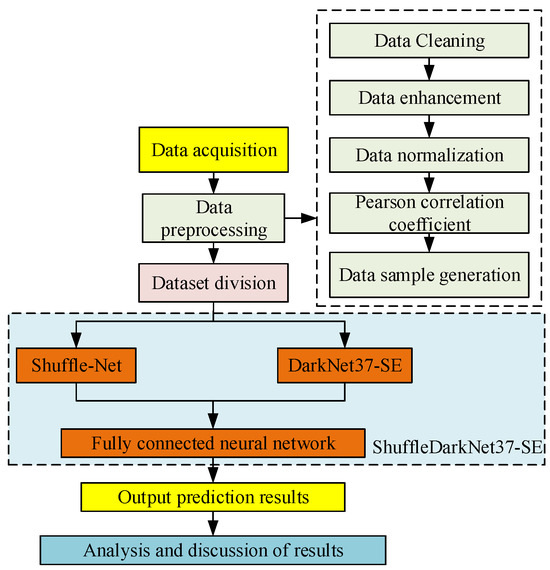

CNNs have achieved excellent results in image recognition. This study proposes a new network, PSDSEF, to obtain high-precision fault classification results for the electric system of EVs. The overall structure of PSDSEF is shown in Figure 1, and its components are described in the following subsections.

Figure 1.

The overall structure of the proposed PSDSEF.

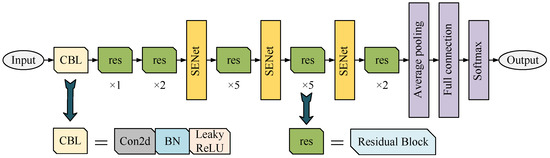

2.1. DarkNet37-SE of Proposed PSDSEF

DarkNet37-SE combines an improved DarkNet53 with SENet. This study targets EV application scenarios, so a lightweight network is especially critical. The proposed DarkNet37 discards part of the DarkNet53 structure. Multiple SE channel attention modules are inserted to improve prediction accuracy and speed while keeping the total number of network parameters nearly unchanged. The structure of DarkNet37-SE is shown in Figure 2, and its parts are elaborated in the following subsections.

Figure 2.

The architecture of DarkNet37-SE of proposed PSDSEF.

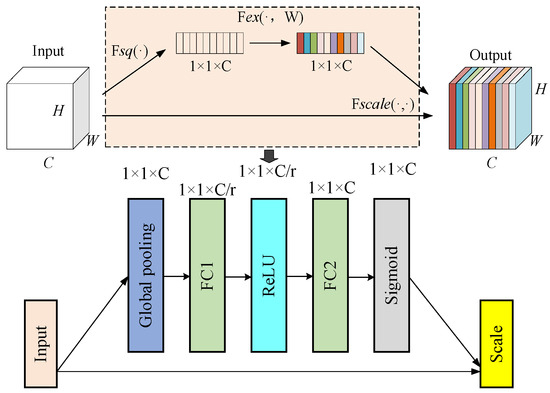

2.1.1. SENet of DarkNet37-SE

SENet was proposed in 2017 [32]. It explicitly models the interdependencies between the channels of convolutional feature maps and assigns a different weight to each channel. This allows a CNN to focus on feature channels with large weights and to suppress those of little use to the current task, thereby improving its representational ability [32]. SENet adds few parameters and little computation. It can be inserted at almost any position in a neural network, often with a significant improvement in performance. The schematic of SENet is shown in Figure 3.

Figure 3.

The specific structure of SENet.

SENet consists of squeeze, excitation, and scale parts. As the schematic shows, the squeeze part first compresses the original H × W × C feature map into a 1 × 1 × C feature vector by global average pooling, so the vector contains global information. The excitation part then reduces the dimension of this vector with a fully connected layer to reduce computation. A rectified linear unit (ReLU) activation follows. A second fully connected layer then restores the vector to the original 1 × 1 × C dimensions, and a sigmoid function produces a normalized weight for each channel. Finally, the scale part multiplies each channel of the original feature map by its weight to obtain the weighted feature map. This completes the recalibration of the original features along the channel dimension.
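The squeeze, excitation, and scale steps can be sketched in NumPy for a single feature map. This is a minimal illustration and not the authors' MATLAB implementation. The reduction ratio `r`, the random weights, and the function name `se_block` are assumptions introduced for the example.

```python
import numpy as np


def se_block(x, w1, b1, w2, b2):
    """Squeeze-and-excitation recalibration of one feature map (illustrative sketch).

    x  : feature map of shape (H, W, C)
    w1 : (C, C // r) weights of the downscaling FC layer, b1 : (C // r,)
    w2 : (C // r, C) weights of the upscaling FC layer,   b2 : (C,)
    Returns the reweighted map (H, W, C) and the channel weights (C,).
    """
    z = x.mean(axis=(0, 1))                   # squeeze: global average pooling -> (C,)
    h = np.maximum(0.0, z @ w1 + b1)          # excitation: FC downscale + ReLU -> (C // r,)
    s = 1.0 / (1.0 + np.exp(-(h @ w2 + b2)))  # FC upscale + sigmoid -> weights in (0, 1)
    return x * s, s                           # scale: broadcast channel weights over H and W


# Illustrative usage with random weights; r = 4 is an assumed reduction ratio.
rng = np.random.default_rng(0)
H, W, C, r = 8, 8, 16, 4
x = rng.standard_normal((H, W, C))
y, s = se_block(x,
                rng.standard_normal((C, C // r)), np.zeros(C // r),
                rng.standard_normal((C // r, C)), np.zeros(C))
print(y.shape, s.shape)  # (8, 8, 16) (16,)
```

Each channel of `y` is the corresponding channel of `x` scaled by one learned weight, so the spatial layout is unchanged and only the relative importance of the channels shifts.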

2.1.2. DarkNet37 of DarkNet37-SE

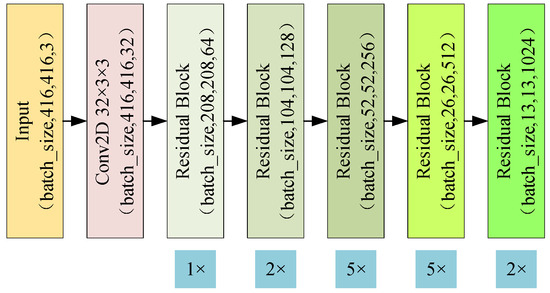

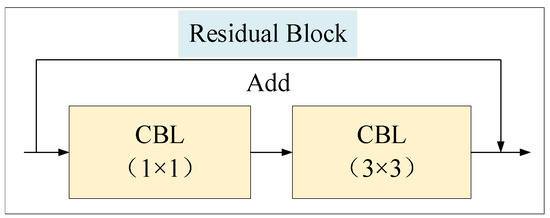

DarkNet53 was proposed in 2018 by Joseph Redmon and Ali Farhadi and performs well in large-scale image classification tasks [33]. DarkNet53 consists of 53 convolutional layers. It makes full use of stacked residual structures to fuse multiscale features with strong generalization, and it has relatively few parameters and layers, so it consumes fewer resources. In this study, DarkNet53 is reduced to DarkNet37, which is composed of 37 convolutional layers. The first 36 are used for feature extraction, and the last produces the final output. The architecture of DarkNet37 is shown in Figure 4, and the construction of its residual block is shown in Figure 5. The output tensor of the convolutional layer is fed into the first residual block. Each residual block contains a series of convolutional layers and batch normalization layers. Their outputs pass through a Leaky ReLU and are added to the block's input tensor, and the result is sent to the next layer. After several residual blocks have been stacked, the SoftMax function produces the classification probabilities.

Figure 4.

Network architecture of Darknet37.

Figure 5.

Structure of residual block.
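A forward pass through one residual block can be illustrated in NumPy. The sketch assumes the usual DarkNet53 layout: a 1 × 1 convolution that halves the channels, then a 3 × 3 convolution that restores them. Each convolution is followed by batch normalization and a Leaky ReLU, and the slope of 0.1 is also an assumption. The exact layer sizes in DarkNet37 are those of Figures 4 and 5 and may differ. Batch normalization is shown in training mode for a batch of one image with unit scale and zero shift, and all function names are hypothetical.

```python
import numpy as np


def conv2d_same(x, w):
    """Stride-1 convolution (cross-correlation, as in CNNs) with zero padding.

    x : (H, W, C_in), w : (k, k, C_in, C_out) with odd k; output is (H, W, C_out).
    """
    k = w.shape[0]
    p = k // 2
    H, W, _ = x.shape
    xp = np.pad(x, ((p, p), (p, p), (0, 0)))
    out = np.zeros((H, W, w.shape[3]))
    for i in range(k):
        for j in range(k):
            out += xp[i:i + H, j:j + W, :] @ w[i, j]  # (H, W, C_in) @ (C_in, C_out)
    return out


def batch_norm(x, eps=1e-5):
    """Training-mode batch normalization for a batch of one image (gamma = 1, beta = 0)."""
    return (x - x.mean(axis=(0, 1))) / np.sqrt(x.var(axis=(0, 1)) + eps)


def leaky_relu(x, slope=0.1):
    return np.where(x > 0, x, slope * x)


def residual_block(x, w1, w2):
    """1x1 conv -> BN -> LeakyReLU -> 3x3 conv -> BN -> LeakyReLU, then add the input."""
    h = leaky_relu(batch_norm(conv2d_same(x, w1)))  # w1: (1, 1, C, C // 2)
    h = leaky_relu(batch_norm(conv2d_same(h, w2)))  # w2: (3, 3, C // 2, C)
    return x + h                                    # skip connection


rng = np.random.default_rng(0)
C = 8
x = rng.standard_normal((10, 10, C))
y = residual_block(x, rng.standard_normal((1, 1, C, C // 2)),
                   rng.standard_normal((3, 3, C // 2, C)))
print(y.shape)  # (10, 10, 8)
```

The skip connection means that a block whose convolution weights are all zero reduces to the identity. This property underlies the easier gradient flow of deep residual stacks.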

In summary, inserting multiple SENet modules into DarkNet37 for feature extraction from the input images yields a lightweight network that preserves classification accuracy. Such a network is practical and easy to deploy on embedded devices for edge computing.

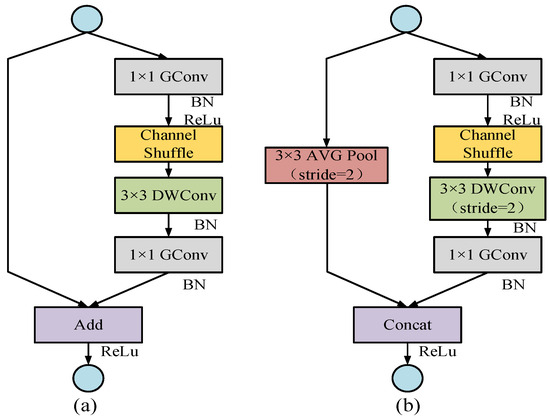

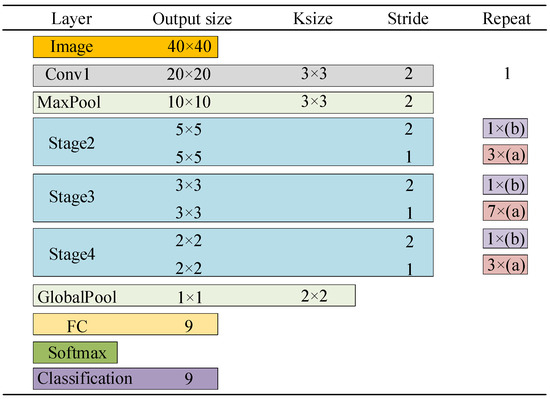

2.2. ShuffleNet of PSDSEF

ShuffleNet is widely used owing to its high computational efficiency and small model size [34]. Its architecture is shown in Figure 6. Stages 2, 3, and 4 are composed mainly of basic ShuffleNet units. The structure of these units, labeled (a) and (b) in Figure 6, is shown in Figure 7. The main ideas of ShuffleNet are pointwise group convolution and channel shuffle.

Figure 6.

The overall network structure of ShuffleNet.

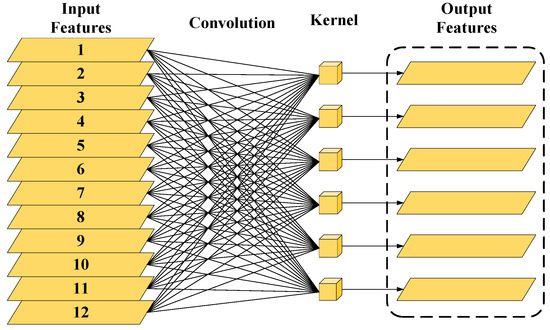

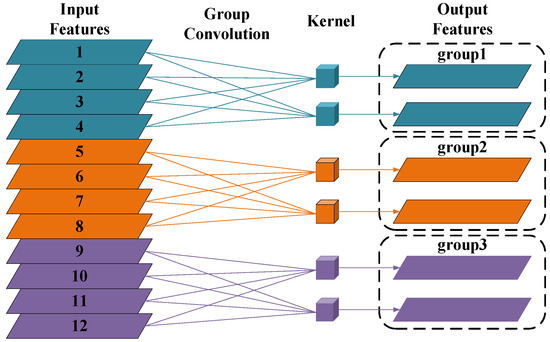

In pointwise group convolution, the channels of the input feature map are divided into several groups. Each group is convolved with its own set of 1 × 1 kernels, so each group generates its own output feature maps. Ordinary convolution and pointwise group convolution are shown in Figure 8 and Figure 9, respectively. In ordinary convolution, every kernel traverses all input channels, which retains most of the original features but incurs a large computational cost. In pointwise group convolution, each kernel acts only on the channels of its own group. This effectively reduces the amount of computation and gives the network a small number of parameters.

Figure 8.

Schematic diagram of ordinary convolution.

Figure 9.

Schematic diagram of pointwise group convolution.
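A 1 × 1 group convolution can be written as a separate matrix product for each channel group. The NumPy sketch below also counts the weights. It shows that splitting the channels into g groups divides the parameters of a 1 × 1 convolution by g. The function name, the group count g = 3, and the channel sizes are illustrative assumptions.

```python
import numpy as np


def pointwise_group_conv(x, weights):
    """1x1 group convolution (illustrative sketch).

    x       : feature map (H, W, C_in), with C_in divisible by the number of groups
    weights : list of g matrices, each of shape (C_in // g, C_out // g)
    Each output group is computed only from its own input group.
    """
    g = len(weights)
    groups = np.split(x, g, axis=-1)                     # g tensors of (H, W, C_in // g)
    outs = [xg @ wg for xg, wg in zip(groups, weights)]  # per-group 1x1 convolution
    return np.concatenate(outs, axis=-1)                 # (H, W, C_out)


C_in, C_out, g = 240, 240, 3
ordinary_params = C_in * C_out                 # one (C_in, C_out) kernel matrix
group_params = g * (C_in // g) * (C_out // g)  # g smaller kernel matrices
print(ordinary_params, group_params)  # 57600 19200

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 4, C_in))
ws = [rng.standard_normal((C_in // g, C_out // g)) for _ in range(g)]
y = pointwise_group_conv(x, ws)
print(y.shape)  # (4, 4, 240)
```

Because each output group depends only on its own input group, zeroing the first input group zeroes the first output group and leaves the others untouched. This is exactly the isolation that channel shuffle is introduced to break.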

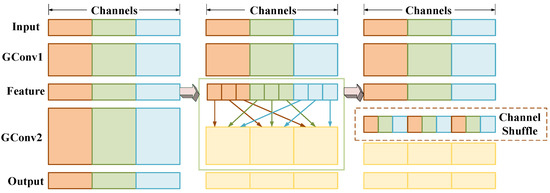

After pointwise group convolution, the feature maps of different groups are not connected, because each output group depends only on its corresponding input group. This loses the original correlation information between channels and limits the features that can be learned. Channel shuffle is therefore applied to establish communication between groups and to let information flow between feature channels. The channel shuffle operation is as follows. Suppose the channels are divided into $g$ groups with $n$ channels in each group. First, reshape the $g \times n$ channels into a matrix with $g$ rows and $n$ columns. Then transpose it into a matrix with $n$ rows and $g$ columns. Finally, flatten it to obtain the uniformly shuffled feature map channels. The channel shuffle is illustrated in Figure 10, where different colors represent different channel groups.

Figure 10.

Graph of channel shuffle.
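The reshape, transpose, and flatten steps of channel shuffle map directly onto array operations. The following is a minimal NumPy sketch, assuming a channels-last (H, W, C) layout. The function name is hypothetical.

```python
import numpy as np


def channel_shuffle(x, g):
    """Channel shuffle for an (H, W, C) feature map split into g groups of n = C // g channels."""
    H, W, C = x.shape
    n = C // g
    x = x.reshape(H, W, g, n)    # channels as a g x n matrix (one row per group)
    x = x.transpose(0, 1, 3, 2)  # transpose to n x g
    return x.reshape(H, W, C)    # flatten back to C channels


# Six channels labelled 0..5 in g = 3 groups: [0, 1], [2, 3], [4, 5]
x = np.arange(6).reshape(1, 1, 6)
print(channel_shuffle(x, 3).ravel())  # [0 2 4 1 3 5]
```

After the shuffle, the next group convolution with three groups receives channels [0, 2], [4, 1], and [3, 5]. Each of these new groups mixes channels that originated in different groups.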

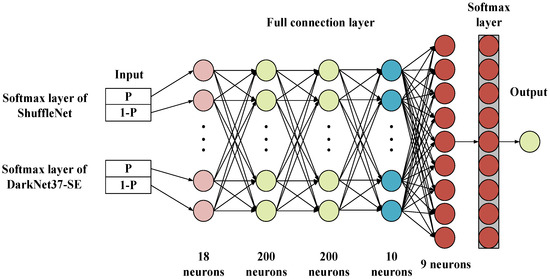

2.3. Fully Connected Layer of PSDSEF

A single CNN has limited feature-extraction ability, and different CNNs extract different features. This study therefore uses a fully connected neural network to aggregate the predictions of ShuffleNet and DarkNet37-SE, combining the strengths of both networks to achieve higher classification accuracy for EV electric-system faults. First, the classification results of ShuffleNet and DarkNet37-SE are merged. Second, the merged results are fed into fully connected layers with 18, 200, 200, 10, and 9 neurons for training. Finally, a SoftMax layer produces the final classification probabilities. The fully connected neural network structure of PSDSEF is shown in Figure 11. The standard SoftMax function is

$$P_j = \frac{e^{z_j}}{\sum_{k=1}^{K} e^{z_k}}, \quad j = 1, 2, \ldots, K$$

where $P_j$ is the probability that the predicted category is $j$, $z_j$ is the output of the $j$th node of the last fully connected layer, and $K$ is the total number of categories. The SoftMax function maps the classification outputs onto a $K$-dimensional vector whose entries lie in [0, 1] and sum to 1. The node with the largest probability is selected as the final classification result.

Figure 11.

Structure of fully connected neural network of PSDSEF.
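The fusion head can be sketched as a forward pass in NumPy. In this sketch, the two branch outputs are assumed to be 9-class probability vectors. They are concatenated into an 18-dimensional vector and passed through fully connected layers whose widths are read from the text as 18, 200, 200, 10, and 9, and SoftMax is applied at the end. The ReLU activations between layers, the random weights, the stand-in branch outputs, and the function names are assumptions for illustration. The source specifies only the layer widths and the final SoftMax.

```python
import numpy as np


def softmax(z):
    """SoftMax over the last axis; subtracting the max avoids overflow in exp."""
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


def fusion_head(p_shuffle, p_dark, params):
    """Merge the two branch outputs and classify them with stacked FC layers."""
    h = np.concatenate([p_shuffle, p_dark], axis=-1)  # 9 + 9 = 18 inputs
    for i, (w, b) in enumerate(params):
        h = h @ w + b
        if i < len(params) - 1:
            h = np.maximum(0.0, h)                    # assumed ReLU between FC layers
    return softmax(h)                                 # probabilities over 9 classes


rng = np.random.default_rng(0)
dims = [18, 18, 200, 200, 10, 9]  # merged input, then FC widths 18, 200, 200, 10, 9
params = [(0.1 * rng.standard_normal((a, b)), np.zeros(b))
          for a, b in zip(dims[:-1], dims[1:])]

p_shuffle = softmax(rng.standard_normal(9))  # stand-in for ShuffleNet class probabilities
p_dark = softmax(rng.standard_normal(9))     # stand-in for DarkNet37-SE class probabilities
probs = fusion_head(p_shuffle, p_dark, params)
print(probs.shape, int(probs.argmax()))      # predicted class = node with largest probability
```

Subtracting the maximum logit before exponentiating leaves the SoftMax result unchanged but keeps `np.exp` from overflowing on large inputs.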

In summary, this study proposes a novel network, PSDSEF, to accurately classify the faults of the EV electric system. PSDSEF combines the lightweight ShuffleNet with the proposed lightweight attention network DarkNet37-SE and outputs the classification results through multiple fully connected layers. This improves prediction accuracy while reducing training time, and thus improves the efficiency of the network.

The overall steps of the proposed PSDSEF for the EV electric-system fault classification are given in Figure 12.

Figure 12.

Process of EV electric-system fault classification based on the proposed PSDSEF.

3. Case Study

The experiments in this study were implemented in MATLAB R2023a on a personal computer with an Intel(R) Core(TM) i7-10700 CPU at 2.90 GHz and 32 GB of RAM.

The proposed PSDSEF was compared with 11 networks: DarkNet53, ResNet50, ResNet101, DenseNet201, NasNetLarge, Xception, EfficientNetB0, Places365GoogLeNet, ShuffleNet, InceptionV3, and MobileNetV2. The model parameters of these networks are shown in Table 1.

Table 1.

Model parameters of the comparison networks.

3.1. Dataset

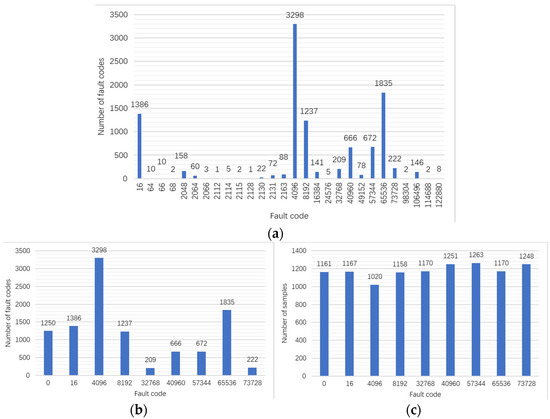

The dataset used in this study comes from the EV-industry vehicle operation big data released by government authorities [35]. It covers 10 electric passenger cars of one brand over a six-month period and consists of 10 CSV files. Each file contains 32 data fields (i.e., 32 columns), whose names and definitions are given in Table 2, where N/A stands for not applicable. The dataset contains 5,458,182 EV operation records in total, including 28 kinds of fault codes. The number of records for each fault code is shown in Figure 13.

Table 2.

Data field names, field definitions, and operations.

Figure 13.

Raw and used fault codes and their corresponding number of faults: (a) raw fault codes; (b) selected fault codes; (c) expanded fault codes.

The fault data were screened, and 8 fault categories with more than 200 records each were selected together with 1 category of normal data. These 9 categories form the original dataset for the experiments in this study. The numbers of records for the selected fault codes are shown in Figure 13b. Classifying these nine codes constitutes the EV electric-system fault-classification problem studied here.

3.2. Data Preprocessing

The flow diagram of the preprocessing operations adopted in this study is shown in Figure 14.

Figure 14.

Preprocessing flow diagram.

The processing of each step is described as follows.

1. Data cleaning. Many problems may occur during data collection, such as malfunctioning vehicle sensors and poor network transmission when data are uploaded, so the collected data may contain outliers and missing values. Inspection of the dataset revealed the following special cases:

   - All vehicles in the dataset are of the same brand and model, so the Vehicle ID and data collection time fields do not affect this study;

   - All vehicles were in the purely electric operating state, so the operating mode field is always “1”;

   - The selected model has 95 individual cells and 34 cell temperature probes, and both fields are fixed values;

   - The maximum-voltage battery number, minimum-voltage battery number, maximum-temperature subsystem number, and minimum-temperature subsystem number are all “1”;

   - The charging and storage device fault code list field is null and contains no usable data;

   - Several columns take a single constant value for certain fault codes: fault codes “8192”, “32,768”, “40,960”, “57,344”, and “73,728” in the “speed” column; fault code “4096” in the “vehicle_state” column; fault codes “8192”, “32,768”, “40,960”, “57,344”, “65,536”, and “73,728” in the “max_alarm_lv” column; fault codes “32,768”, “40,960”, “57,344”, “65,536”, and “73,728” in the “dcdc_stat” column; and fault codes “8192”, “32,768”, “40,960”, “65,536”, and “73,728” in the “gear” column.

   The fields involved in the above cases contribute nothing to feature extraction in this study and were deleted (Table 2), reducing the data dimension from 36 to 21.

2. Data expansion. The numbers of samples in the different categories differ greatly; that is, the training samples are imbalanced, which would cause large deviations in the accuracy of the training results. Two steps were therefore applied together to balance the samples: undersampling the categories with many samples and oversampling those with few. Categories with more than 1250 records were randomly sampled down to 1250 records. Because most fault categories had fewer than 1250 records, linear interpolation was used to expand them:

   $$x_{new} = x_i + \frac{k}{n+1}\left(x_{i+1} - x_i\right), \quad k = 1, 2, \ldots, n$$

   where $x_{new}$ is a newly generated sample; $x_i$ and $x_{i+1}$ are two adjacent original samples; $n$ is the number of new samples inserted between them; and $k$ is the position of the new sample (see Figure 13b,c).

3. Data normalization. The data were normalized to remove differences in magnitude between dimensions while retaining the relationships in the original data. Max-min normalization maps the data values to the range [0, 1]:

   $$x' = \frac{x - x_{min}}{x_{max} - x_{min}}$$

   where $x'$ is the normalized value; $x$ is the original value; and $x_{max}$ and $x_{min}$ are the maximum and minimum values of the column containing the sample.

- 4.

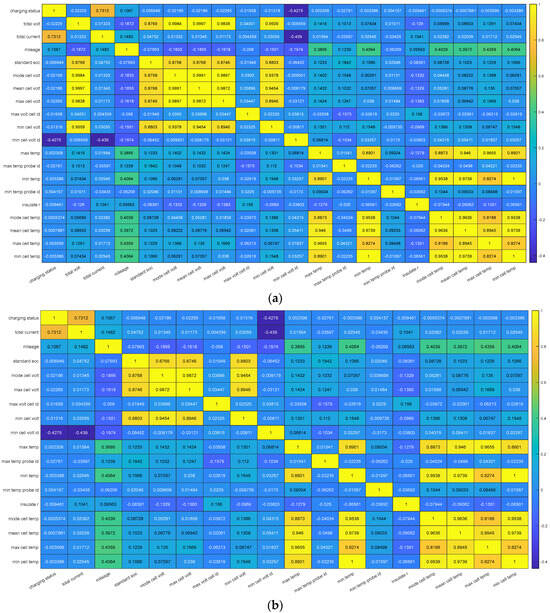

- Correlation analysis. After the above processing steps, the dataset was reduced to 21 columns, including 20 columns of data utilized for feature extraction and the last column of labeled data. Too many variables can lead to the occurrence of data redundancy, which affects the effectiveness of the model. Correlation analysis is applied to derive the correlation between the data to extract the principal characteristics of the data and reduce data dimensionality and redundancy. The Pearson correlation coefficient iswhere is the value of the correlation coefficient between columns and ; is the covariance between and ; and are the standard deviations of and , respectively.

Figure 15a shows the Pearson correlation heat map of the original 20-dimensional input after meaningless numbering fields and fixed values were removed. In the heat map, the correlation coefficients among the total voltage, the battery voltage plurality, and the average cell voltage reach 0.9984 and 0.9997. This study therefore removes the total voltage and average cell voltage columns and retains only the battery voltage plurality column for the subsequent experiments (Table 2). The Pearson correlation heat map of the final data is shown in Figure 15b.

Figure 15.

Pearson correlation coefficient plot: (a) data from the original 20-dimensional input after removing meaningless numbers and fixed values; (b) the final data used.

- 5.

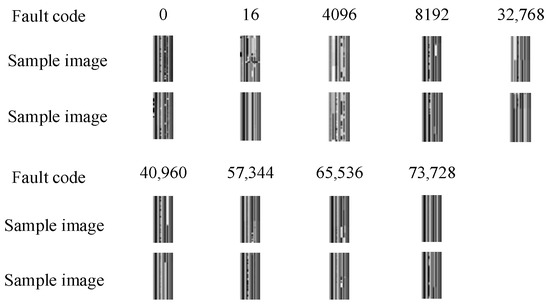

- Data sample generation. Sliding window operation is performed on the data. Every 40 rows in the same fault code are composed of sample data, such that row 1 to row 40 constitutes the first sample data; row 2 to row 41 constitutes the second sample data; by analogy, a total of 10,608 sample data are obtained as shown in Figure 12c. The data samples are converted into pictures of size 40 × 40 × 1 as inputs to the network to visualize the sample data and form the final training dataset; some of the picture samples are shown in Figure 16.

Figure 16. Some samples of the pictures.


6. Dataset division. The above preprocessing steps yielded a total of 10,608 picture samples. These were split into training and test sets at a ratio of approximately 2:1.
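Steps 2 to 5 can be sketched with NumPy for an array whose rows are the time-ordered records of one fault code. This is a minimal sketch under stated assumptions and not the authors' MATLAB code. All function names are hypothetical. Two design choices are ours: sorting the undersampled indices, which keeps time order for the sliding window, and spreading the interpolated rows evenly over all gaps. The 0.99 threshold is an assumed value that illustrates dropping near-duplicate columns such as those with coefficients of 0.9984 and 0.9997. The greedy rule keeps the first column of a correlated pair, whereas the study chose the retained column by inspection. The conversion of each 40-row window into a 40 × 40 × 1 picture is not specified in the text and is not shown.

```python
import numpy as np


def undersample(rows, target, rng):
    """Randomly keep `target` rows of an over-represented class, preserving time order."""
    if len(rows) <= target:
        return rows
    idx = np.sort(rng.choice(len(rows), size=target, replace=False))
    return rows[idx]


def interpolate(x_i, x_next, n):
    """n new rows between two adjacent rows: x_i + k / (n + 1) * (x_next - x_i), k = 1..n."""
    k = np.arange(1, n + 1)[:, None]
    return x_i + k / (n + 1) * (x_next - x_i)


def expand(rows, target):
    """Oversample a small class (at least 2 rows) to `target` rows by linear interpolation."""
    m = len(rows)
    if m >= target:
        return rows
    base, extra = divmod(target - m, m - 1)  # new rows per gap, spread evenly
    parts = []
    for i in range(m - 1):
        parts.append(rows[i:i + 1])
        n = base + (1 if i < extra else 0)
        if n:
            parts.append(interpolate(rows[i], rows[i + 1], n))
    parts.append(rows[-1:])
    return np.vstack(parts)


def min_max(data):
    """Column-wise max-min normalization to [0, 1] (assumes no constant columns remain)."""
    lo, hi = data.min(axis=0), data.max(axis=0)
    return (data - lo) / (hi - lo)


def drop_correlated(data, names, threshold=0.99):
    """Keep a column only if its |Pearson r| with every already-kept column is below threshold."""
    r = np.corrcoef(data, rowvar=False)
    keep = []
    for j in range(data.shape[1]):
        if all(abs(r[j, k]) < threshold for k in keep):
            keep.append(j)
    return data[:, keep], [names[k] for k in keep]


def sliding_windows(rows, size=40):
    """Samples of `size` consecutive rows with stride 1: rows 1-40, 2-41, ..."""
    return np.stack([rows[i:i + size] for i in range(len(rows) - size + 1)])


small = np.array([[0.0, 10.0], [3.0, 13.0]])
print(expand(small, 4))  # rows [0, 10], [1, 11], [2, 12], [3, 13]
print(sliding_windows(np.arange(90.0).reshape(45, 2)).shape)  # (6, 40, 2)
```

A class with m rows yields m − 39 windows of 40 rows, so the window count per fault code depends on how many rows remain after balancing.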

The training set contains 7189 samples and the test set 3419. The dataset has 9 label categories, and the numbers of training and test samples for each category are given in Table 3. The fault codes have the following meanings:

- “0”: the vehicle is operating normally;

- “16”: SOC low alarm;

- “4096”: DC-DC temperature alarm;

- “8192”: brake system alarm;

- “32,768”: drive motor controller temperature alarm;

- “40,960”: drive motor controller temperature alarm and brake system alarm;

- “57,344”: drive motor controller temperature alarm, DC-DC status alarm, and brake system alarm;

- “65,536”: high-voltage interlock status alarm;

- “73,728”: brake system alarm and high-voltage interlock status alarm.

Table 3.

Number of training and test samples for each label.
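The composite fault codes listed above are consistent with a bitmask encoding in which each alarm occupies one bit. For example, 40,960 = 32,768 + 8,192 (drive motor controller temperature plus brake system), and 57,344 = 32,768 + 16,384 + 8,192. Under that assumption, which is inferred from the listed codes rather than stated in the source, the value 16,384 corresponds to the DC-DC status alarm. A code can then be decoded as sketched below. The mapping `ALARM_BITS` and the function `decode` are hypothetical.

```python
# Alarm bits inferred from the fault codes in this section (an assumption, not a documented spec).
ALARM_BITS = {
    16: "SOC low",
    4096: "DC-DC temperature",
    8192: "brake system",
    16384: "DC-DC status",
    32768: "drive motor controller temperature",
    65536: "high-voltage interlock",
}


def decode(code):
    """Return the alarms whose bits are set in `code`; 0 means normal operation."""
    alarms = [name for bit, name in sorted(ALARM_BITS.items()) if code & bit]
    unknown = code & ~sum(ALARM_BITS)  # bits not covered by the table above
    if unknown:
        alarms.append(f"unknown bits {unknown}")
    return alarms or ["normal"]


for code in (0, 16, 40960, 57344, 73728):
    print(code, decode(code))
```

For instance, `decode(57344)` returns `['brake system', 'DC-DC status', 'drive motor controller temperature']`, matching the three alarms the text lists for that code.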

The initial parameter settings of all the compared methods including the proposed PSDSEF are shown in Table 4.

Table 4.

Initial parameters of all the compared methods including PSDSEF.

The cross-entropy loss function is used for the multi-class models:

$$L = -\frac{1}{N}\sum_{i=1}^{N}\sum_{j=1}^{K} y_{ij}\log\left(p_{ij}\right)$$

where $N$ is the total number of samples; $K$ is the number of categories; $y_{ij}$ indicates the true category of the $i$th sample, taking the value 1 if the sample belongs to category $j$ and 0 otherwise; and $p_{ij}$ is the output of the SoftMax function, i.e., the predicted probability that sample $i$ belongs to category $j$.
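A minimal NumPy version of the cross-entropy loss follows. It assumes one-hot labels and SoftMax outputs stored as N × K arrays. The clipping constant `eps` is an added safeguard that the source does not mention, and the function name is hypothetical. As a sanity check, a model that predicts the uniform distribution over K classes has a loss of ln K.

```python
import numpy as np


def cross_entropy(y_true, p_pred, eps=1e-12):
    """Mean categorical cross-entropy.

    y_true : (N, K) one-hot labels, y_ij = 1 if sample i belongs to category j
    p_pred : (N, K) SoftMax probabilities
    eps clips probabilities away from 0 so that log() stays finite.
    """
    p = np.clip(p_pred, eps, 1.0)
    return -np.mean(np.sum(y_true * np.log(p), axis=1))


K = 9
y = np.eye(K)[[0, 3, 8]]               # three samples with labels 0, 3, 8
uniform = np.full((3, K), 1.0 / K)
print(cross_entropy(y, uniform), np.log(K))  # both approx 2.1972
```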

3.3. Evaluation Indicators

A multi-class classification can produce the following outcomes for each category: true positives ($TP$), false positives ($FP$), and false negatives ($FN$). Four evaluation indicators were selected to evaluate the models comprehensively: accuracy ($Acc$), Macro-Precision ($MP$), Macro-Recall ($MR$), and Macro-F1 score ($MF1$), defined as

$$Acc = \frac{\sum_{k=1}^{K} TP_k}{\sum_{k=1}^{K} \left(TP_k + FN_k\right)}$$

$$MP = \frac{1}{K}\sum_{k=1}^{K} \frac{TP_k}{TP_k + FP_k}$$

$$MR = \frac{1}{K}\sum_{k=1}^{K} \frac{TP_k}{TP_k + FN_k}$$

$$MF1 = \frac{1}{K}\sum_{k=1}^{K} \frac{2 P_k R_k}{P_k + R_k}, \quad P_k = \frac{TP_k}{TP_k + FP_k}, \quad R_k = \frac{TP_k}{TP_k + FN_k}$$

where $K$ is the number of categories; $TP_k$ is the number of samples of category $k$ correctly predicted as $k$; $FP_k$ is the number of samples of other categories incorrectly predicted as $k$; and $FN_k$ is the number of samples of category $k$ incorrectly predicted as other categories.
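The four indicators can be computed from a confusion matrix, as in the NumPy sketch below. It computes Macro-F1 as the mean of per-class F1 scores. This is an assumption: it is one common convention, and another takes the harmonic mean of Macro-Precision and Macro-Recall. As an added safeguard not in the source, a class that is never predicted gets a precision of 0 instead of a division by zero. The function name and the 2 × 2 matrix are made-up examples.

```python
import numpy as np


def macro_metrics(cm):
    """Accuracy and macro-averaged precision, recall, and F1 from a K x K confusion matrix.

    cm[i, j] = number of samples of true category i predicted as category j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp                 # predicted as k but belonging to another category
    fn = cm.sum(axis=1) - tp                 # belonging to k but predicted as another category
    precision = tp / np.maximum(tp + fp, 1)  # guard against classes that are never predicted
    recall = tp / np.maximum(tp + fn, 1)
    f1 = np.divide(2 * precision * recall, precision + recall,
                   out=np.zeros_like(tp), where=(precision + recall) > 0)
    accuracy = tp.sum() / cm.sum()
    return float(accuracy), float(precision.mean()), float(recall.mean()), float(f1.mean())


cm = [[2, 1],
      [0, 3]]
print(macro_metrics(cm))  # approx (0.8333, 0.875, 0.8333, 0.8286)
```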

3.4. Experimental Results Analysis

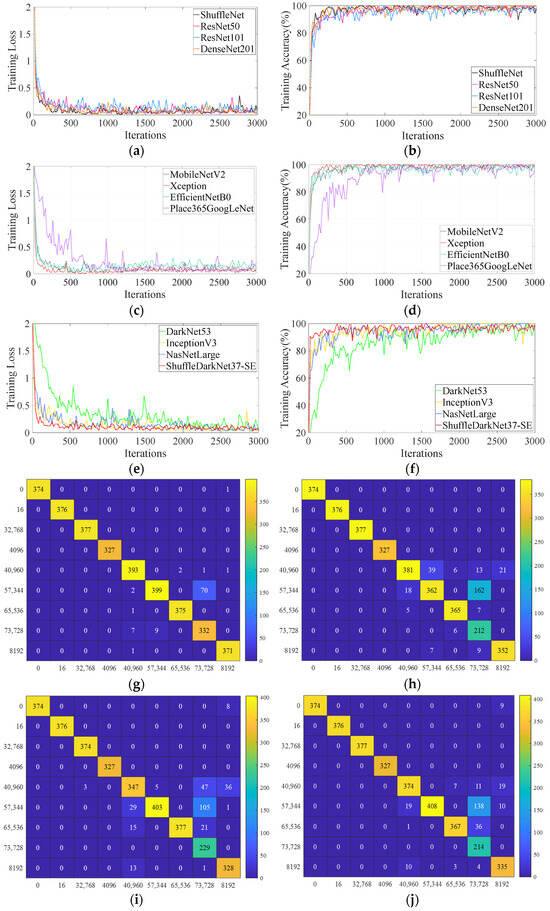

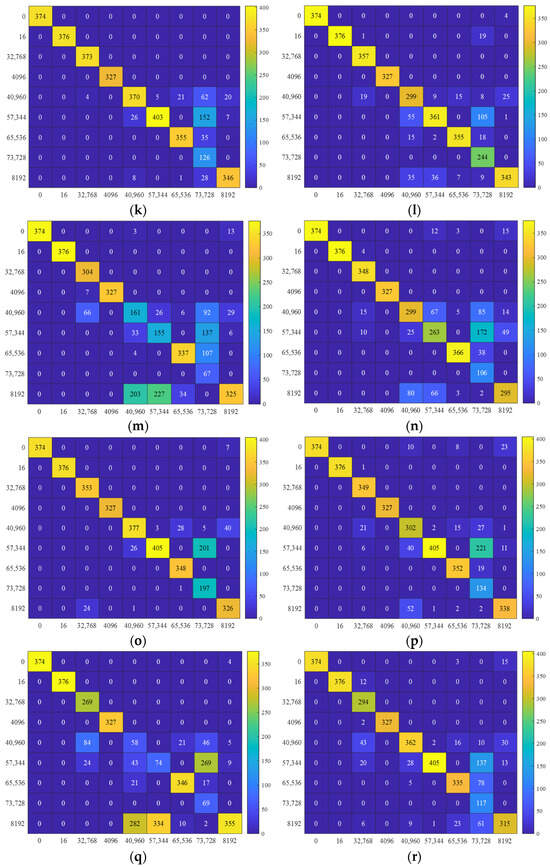

The obtained results of all the compared methods are given in Table 5. The training losses, training accuracy, and confusion matrix of all the compared methods are given in Figure 17.

Table 5.

Experimental results and evaluation indicators.

Figure 17.

Training loss, training accuracy, and confusion matrix of each network: (a) training loss of ShuffleNet, ResNet50, ResNet101, DenseNet201; (b) training accuracy of ShuffleNet, ResNet50, ResNet101, DenseNet201; (c) training loss of MobileNetV2, Xception, EfficientNetB0, Places365GoogLeNet; (d) training accuracy of MobileNetV2, Xception, EfficientNetB0, Places365GoogLeNet; (e) training loss of DarkNet53, InceptionV3, NasNetLarge, PSDSEF; (f) training accuracy of DarkNet53, InceptionV3, NasNetLarge, PSDSEF; (g) confusion matrix of PSDSEF; (h) confusion matrix of DarkNet53; (i) confusion matrix of ResNet50; (j) confusion matrix of ResNet101; (k) confusion matrix of DenseNet201; (l) confusion matrix of NasNetLarge; (m) confusion matrix of Xception; (n) confusion matrix of EfficientNetB0; (o) confusion matrix of Places365GoogLeNet; (p) confusion matrix of ShuffleNet; (q) confusion matrix of InceptionV3; (r) confusion matrix of MobileNetV2.

Eleven classical networks were compared, and Figure 17 and Table 5 show the following. (1) On the EV electric-system fault data, most models converge well; among them, PSDSEF converges faster than the other networks and reaches a lower training loss. (2) The accuracy, Macro-Precision, Macro-Recall, and Macro-F1 score of PSDSEF reach 97.22%, 97.59%, 97.38%, and 97.38%, respectively, exceeding those of all the comparison networks. ResNet101 is the best-performing comparison network, and PSDSEF improves on it by 5.03, 3.63, 4.84, and 5.23 percentage points in these four indicators, respectively. Moreover, the training time of PSDSEF is 10,680 s, which is 5014 s shorter than the average training time of the comparison networks. (3) Training is performed offline, so once a network has been trained, classifying the test set takes little time. Most networks need less than 5 s for testing. PSDSEF needs only 1.62 s, just 0.58 s more than Places365GoogLeNet, the fastest network. (4) The confusion matrices show that the predictions of PSDSEF agree with the true labels more closely than those of any other network, giving it the highest classification accuracy.

The experiments show that PSDSEF classifies better than the comparison networks, achieving higher accuracy with a training time below their average.

3.5. Discussion

The effect of each improved module was verified by ablation experiments, whose outcomes are shown in Table 6.

Table 6. Outcomes of ablation experiments.

Table 6 shows the following: (1) in Improvement 1, DarkNet37 reduces the training time by 26.38% compared with DarkNet53 at the cost of a 3.07% loss in accuracy, and DarkNet37 is more lightweight; (2) Improvement 2 adds multiple SE-Net attention modules to DarkNet37; the accuracy of DarkNet37-SE is 2.19% higher than that of DarkNet37 without the attention network, while its training time is only 187 s (1.78%) longer than that of DarkNet37; compared with DarkNet53, its accuracy is only 0.88% lower and its training time is 3568 s (25.07%) shorter; (3) Improvement 3 aggregates the outputs of ShuffleNet and DarkNet37-SE through multiple fully connected layers before outputting the classification results, achieving an accuracy of 97.22% with a training time of 10,680 s; this accuracy is higher than those of DarkNet53, ShuffleNet, and DarkNet37-SE by 5.79%, 10.73%, and 6.67%, respectively, and the training time is only 14 s longer than that of DarkNet37-SE. Meanwhile, when the outputs of DarkNet37 and ShuffleNet are aggregated without the attention network (i.e., the ShuffleDarkNet37 column in the table), the accuracy is 95.73%, which is 1.49% lower than that of PSDSEF, and the training time is 10,499 s, only 181 s less than that of PSDSEF (i.e., PSDSEF takes 1.72% longer); (4) because training is performed offline, the testing times of the networks differ little, and both the original and the improved networks need only a few seconds to classify the fault categories. Overall, the ablation experiments show that, after the three improvements are introduced, the network requires fewer operations and runs faster than the original DarkNet53 while achieving substantially higher accuracy, so the improved algorithm outperforms the original one.
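The ablation percentages above are measured against different baselines, which is easy to lose track of. The following worked check back-computes the intermediate training times from the differences stated in the text (only the PSDSEF and ShuffleDarkNet37 times are quoted directly; the others are derived here, not read from Table 6) and recomputes each relative change. The 1.72% figure corresponds to the 181 s difference measured against ShuffleDarkNet37's 10,499 s; measured against PSDSEF's 10,680 s, the same difference is about 1.69%.

```python
# Worked check of the ablation percentages.
# Only PSDSEF and ShuffleDarkNet37 times are quoted directly; the others are
# back-computed from the stated differences (derived, not reported values).
psdsef = 10_680
shuffle_darknet37 = 10_499
darknet37_se = psdsef - 14        # PSDSEF is "only 14 s more than DarkNet37-SE"
darknet37 = darknet37_se - 187    # DarkNet37-SE is 187 s slower than DarkNet37
darknet53 = darknet37_se + 3568   # DarkNet37-SE is 3568 s faster than DarkNet53


def pct_change(new: float, base: float) -> float:
    """Relative change of `new` with respect to `base`, in percent."""
    return 100.0 * (new - base) / base


checks = {
    "DarkNet37 vs DarkNet53": pct_change(darknet37, darknet53),
    "DarkNet37-SE vs DarkNet37": pct_change(darknet37_se, darknet37),
    "DarkNet37-SE vs DarkNet53": pct_change(darknet37_se, darknet53),
    "PSDSEF vs ShuffleDarkNet37": pct_change(psdsef, shuffle_darknet37),
    "ShuffleDarkNet37 vs PSDSEF": pct_change(shuffle_darknet37, psdsef),
}
for label, value in checks.items():
    print(f"{label}: {value:+.2f}%")
```

The first four lines reproduce the 26.38%, 1.78%, 25.07%, and 1.72% reported in the discussion, which indicates that the stated time differences are mutually consistent.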

Classifying EV electric-system faults directly from the raw data is a challenging task because the raw data are nonlinear, which makes feature extraction difficult. To classify EV electric-system faults accurately and efficiently, this study proposes a novel method based on PSDSEF. PSDSEF reduces the error introduced by manual feature extraction and does not require physical prior knowledge of the system. Experiments show that PSDSEF performs well in EV electric-system fault classification, improving both prediction accuracy and speed, which validates its feasibility. Compared with other networks, PSDSEF can identify the specific faults of the EV electric system in advance to provide early warning, which can help reduce the economic losses and personal-safety risks caused by EV electric-system failures.

The proposed PSDSEF is effective but still has some limitations.

- In this study, each input sample consists of 40 rows of data that share the same fault code; because these rows may be far apart from one another, the data within a sample can differ considerably, which introduces a certain degree of error.

- Some fault codes in the dataset have too few samples for the model to extract their features well; for example, fault codes “2112” and “2128” each have only one fault sample.
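Both limitations follow from how samples are built. The paper does not state exactly how the 40 rows of a sample are selected, so the following is a hypothetical reconstruction, assuming all rows with the same fault code are pooled in recording order and cut into non-overlapping 40-row windows (leftover rows discarded). The function names `make_samples` and `samples_per_code` are illustrative, not from the paper.

```python
from typing import Dict, List, Sequence, Tuple

Row = Sequence[float]


def make_samples(
    rows: List[Row], codes: List[str], window: int = 40
) -> List[Tuple[str, List[Row]]]:
    """Pool rows by fault code (keeping recording order) and cut fixed-size samples.

    Hypothetical reconstruction: rows sharing a code are pooled even when they
    are not adjacent in the log, and leftover rows (fewer than `window`) are dropped.
    """
    by_code: Dict[str, List[Row]] = {}
    for row, code in zip(rows, codes):
        by_code.setdefault(code, []).append(row)
    samples: List[Tuple[str, List[Row]]] = []
    for code, code_rows in by_code.items():
        # Non-overlapping windows; a code with fewer than `window` rows yields none.
        for start in range(0, len(code_rows) - window + 1, window):
            samples.append((code, code_rows[start:start + window]))
    return samples


def samples_per_code(samples: List[Tuple[str, List[Row]]], codes: List[str]) -> Dict[str, int]:
    """Count samples per fault code, reporting codes with no samples as 0."""
    counts = {code: 0 for code in dict.fromkeys(codes)}
    for code, _ in samples:
        counts[code] += 1
    return counts


# Toy log: codes "A" and "B" alternate, then "C" appears only 10 times.
rows = [[float(i)] for i in range(110)]
codes = ["A" if i % 2 == 0 else "B" for i in range(100)] + ["C"] * 10
samples = make_samples(rows, codes)
print(samples_per_code(samples, codes))
```

In this toy log, the "A" sample contains original rows 0, 2, ..., 78, so neighboring rows within one sample are not neighbors in the log, and the rarely recorded code "C" yields no sample at all, mirroring how sparse fault codes end up with very few training samples.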

4. Conclusions

Accurately and quickly classifying EV electric-system faults before the vehicle fails is essential for prompt troubleshooting and for reducing both the owner's losses and the threat to personal safety. To reduce the uncertainty and error introduced by manual feature extraction, this study uses multiple CNNs with deep representation capability to extract and model data features from large-scale data. An EV electric-system fault-classification method (i.e., PSDSEF) is proposed in this study; its features can be summarized as follows.

- The accuracy, Macro-Precision, Macro-Recall, and Macro-F1 score of PSDSEF for classifying EV electric-system failures are higher than those of all comparison networks.

- First, the Pearson correlation coefficient is computed for the selected dataset and used to reduce the dimensionality of the data; then, maximum–minimum normalization is applied to eliminate the differences in magnitude between dimensions and to simplify the data, which allows features to be extracted more efficiently.

- PSDSEF integrates DarkNet37-SE and the lightweight network ShuffleNet, which automatically extract features using convolution and pooling operations; the classification results of the two networks are then aggregated by a fully connected neural network, combining the advantages of both networks to achieve higher classification accuracy.
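The preprocessing in the second point can be sketched as follows. The text does not specify how the Pearson coefficients are used to discard dimensions, so this sketch assumes a greedy redundancy filter: a column is dropped when its absolute correlation with an already-kept column reaches a hypothetical threshold of 0.95. The surviving columns are then scaled to [0, 1] by maximum–minimum normalization. The column names are illustrative only.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 if either column is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx and sy else 0.0


def drop_correlated(columns: List[List[float]], threshold: float = 0.95) -> List[int]:
    """Greedy filter: keep a column unless |r| with an already-kept column >= threshold."""
    kept: List[int] = []
    for j, col in enumerate(columns):
        if all(abs(pearson(col, columns[k])) < threshold for k in kept):
            kept.append(j)
    return kept


def min_max(col: List[float]) -> List[float]:
    """Scale a column to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(col), max(col)
    if hi == lo:
        return [0.0] * len(col)
    return [(v - lo) / (hi - lo) for v in col]


# Toy columns (hypothetical signals): the second is an exact multiple of the first.
voltage = [1.0, 2.0, 3.0, 4.0, 5.0]
doubled = [2.0, 4.0, 6.0, 8.0, 10.0]
other = [5.0, 1.0, 4.0, 2.0, 3.0]
columns = [voltage, doubled, other]
kept = drop_correlated(columns)  # "doubled" is dropped because r = 1 with "voltage"
normalized = [min_max(columns[k]) for k in kept]
print(kept, normalized)
```

In a train/test setting, the minimum and maximum would normally be computed on the training split only and reused for the test split, so that no test information leaks into preprocessing.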

In future work, the PSDSEF method could be extended to other vehicle brands and models. In addition, if enough samples of other fault codes are collected, they could be added to the dataset to increase the number of fault-code categories. Moreover, better dimensionality-reduction methods could be adopted to further reduce the data dimensions; the resulting lighter data could substantially shorten training time. Furthermore, the structure of PSDSEF could be optimized by appropriately adjusting the number of convolutional layers, and a spatial attention mechanism could be added alongside the channel attention mechanism to improve model performance. Finally, temporal information could be introduced into EV electric-system fault classification, so that the safety of the EV electric system could be related to its usage time and previous states.

Author Contributions

Q.L.: project administration, supervision. S.C.: data curation, formal analysis, investigation, visualization, writing—original draft. L.Y.: conceptualization, funding acquisition, project administration, supervision, methodology, resources, writing—review and editing. L.D.: validation, software. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China under Grant No. 52107081, the Natural Science Foundation of Guangxi Province (China) under Grant No. AA22068071, and the Key Laboratory of AI and Information Processing (Hechi University) of the Education Department of Guangxi Zhuang Autonomous Region under Grant No. 2022GXZDSY006.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

2022 Digital Vehicle Competition of the National Big Data Alliance of New Energy Vehicles, http://www.ncbdc.top/ (accessed on 14 November 2023).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lu, F.; Niu, R.; Zhang, Z.; Guo, L.; Chen, J. A Generative Adversarial Network-Based Fault Detection Approach for Photovoltaic Panel. Appl. Sci. 2022, 12, 1789.

- Wang, C.; Luo, J.; Qing, F.; Tang, Y.; Wang, Y. Analysis of the Driving Force of Spatial and Temporal Differentiation of Carbon Storage in Taihang Mountains Based on InVEST Model. Appl. Sci. 2022, 12, 10662.

- Alkawsi, G.; Baashar, Y.; Abbas, U.D.; Alkahtani, A.A.; Tiong, S.K. Review of Renewable Energy-Based Charging Infrastructure for Electric Vehicles. Appl. Sci. 2021, 11, 3847.

- Xiao, F.; Li, C.; Fan, Y.; Yang, G.; Tang, X. State of Charge Estimation for Lithium-Ion Battery Based on Gaussian Process Regression with Deep Recurrent Kernel. Int. J. Electr. Power Energy Syst. 2021, 124, 106369.

- Jiao, M.; Wang, D.; Yang, Y.; Liu, F. More Intelligent and Robust Estimation of Battery State-of-Charge with an Improved Regularized Extreme Learning Machine. Eng. Appl. Artif. Intell. 2021, 104, 104407.

- Zhao, J.; Ling, H.; Wang, J.; Burke, A.F.; Lian, Y. Data-Driven Prediction of Battery Failure for Electric Vehicles. iScience 2022, 25, 104172.

- Xie, Y.; Wang, X.; Hu, X.; Li, W.; Zhang, Y.; Lin, X. An Enhanced Electro-Thermal Model for EV Battery Packs Considering Current Distribution in Parallel Branches. IEEE Trans. Power Electron. 2022, 37, 1027–1043.

- Lin, W.-H.; Wang, P.; Chao, K.-M.; Lin, H.-C.; Yang, Z.-Y.; Lai, Y.-H. Wind Power Forecasting with Deep Learning Networks: Time-Series Forecasting. Appl. Sci. 2021, 11, 10335.

- Boulila, W.; Sellami, M.; Driss, M.; Al-Sarem, M.; Safaei, M.; Ghaleb, F.A. RS-DCNN: A Novel Distributed Convolutional-Neural-Networks Based-Approach for Big Remote-Sensing Image Classification. Comput. Electron. Agric. 2021, 182, 106014.

- Arslan, M.; Kamal, K.; Sheikh, M.F.; Khan, M.A.; Ratlamwala, T.A.H.; Hussain, G.; Alkahtani, M. Tool Health Monitoring Using Airborne Acoustic Emission and Convolutional Neural Networks: A Deep Learning Approach. Appl. Sci. 2021, 11, 2734.

- Ahmed, S.; Kamal, K.; Ratlamwala, T.A.H.; Mathavan, S.; Hussain, G.; Alkahtani, M.; Alsultan, M.B.M. Aerodynamic Analyses of Airfoils Using Machine Learning as an Alternative to RANS Simulation. Appl. Sci. 2022, 12, 5194.

- Srinivasu, P.N.; SivaSai, J.G.; Ijaz, M.F.; Bhoi, A.K.; Kim, W.; Kang, J.J. Classification of Skin Disease Using Deep Learning Neural Networks with MobileNet V2 and LSTM. Sensors 2021, 21, 2852.

- Liu, Z.-T.; Han, M.-T.; Wu, B.-H.; Rehman, A. Speech Emotion Recognition Based on Convolutional Neural Network with Attention-Based Bidirectional Long Short-Term Memory Network and Multi-Task Learning. Appl. Acoust. 2023, 202, 109178.

- Jiang, C.; Huang, K.; Zhang, S.; Wang, X.; Xiao, J.; Goulermas, Y. Aggregated Pyramid Gating Network for Human Pose Estimation without Pre-Training. Pattern Recognit. 2023, 138, 109429.

- Nahiduzzaman, M.; Islam, M.R.; Hassan, R. ChestX-Ray6: Prediction of Multiple Diseases Including COVID-19 from Chest X-Ray Images Using Convolutional Neural Network. Expert Syst. Appl. 2023, 211, 118576.

- Yin, L.; Cao, X.; Liu, D. Weighted Fully-Connected Regression Networks for One-Day-Ahead Hourly Photovoltaic Power Forecasting. Appl. Energy 2023, 332, 120527.

- Cavieres, R.; Barraza, R.; Estay, D.; Bilbao, J.; Valdivia-Lefort, P. Automatic Soiling and Partial Shading Assessment on PV Modules through RGB Images Analysis. Appl. Energy 2022, 306, 117964.

- Wang, J.; Liu, Y.; Nie, X.; Mo, Y.L. Deep Convolutional Neural Networks for Semantic Segmentation of Cracks. Struct. Control Health Monit. 2022, 29, e2850.

- Khare, M.R.; Havaldar, R.H. Predicting the Anterior Slippage of Vertebral Lumbar Spine Using Densenet-201. Biomed. Signal Process. Control 2023, 86, 105115.

- Punn, N.S.; Agarwal, S. Automated Diagnosis of COVID-19 with Limited Posteroanterior Chest X-Ray Images Using Fine-Tuned Deep Neural Networks. Appl. Intell. 2021, 51, 2689–2702.

- Shaheed, K.; Mao, A.; Qureshi, I.; Kumar, M.; Hussain, S.; Ullah, I.; Zhang, X. DS-CNN: A Pre-Trained Xception Model Based on Depth-Wise Separable Convolutional Neural Network for Finger Vein Recognition. Expert Syst. Appl. 2022, 191, 116288.

- Cheng, M.-Y.; Sholeh, M.N.; Harsono, K. Automated Vision-Based Post-Earthquake Safety Assessment for Bridges Using STF-PointRend and EfficientNetB0. Struct. Health Monit. 2023, 147592172311687.

- Yang, N.; Zhang, Z.; Yang, J.; Hong, Z.; Shi, J. A Convolutional Neural Network of GoogLeNet Applied in Mineral Prospectivity Prediction Based on Multi-Source Geoinformation. Nat. Resour. Res. 2021, 30, 3905–3923.

- Rezaee, K.; Mousavirad, S.J.; Khosravi, M.R.; Moghimi, M.K.; Heidari, M. An Autonomous UAV-Assisted Distance-Aware Crowd Sensing Platform Using Deep ShuffleNet Transfer Learning. IEEE Trans. Intell. Transport. Syst. 2022, 23, 9404–9413.

- Liu, F.; Liu, B.; Zhang, J.; Wan, P.; Li, B. Fault Mode Detection of a Hybrid Electric Vehicle by Using Support Vector Machine. Energy Rep. 2023, 9, 137–148.

- Trivedi, M.; Kakkar, R.; Gupta, R.; Agrawal, S.; Tanwar, S.; Niculescu, V.-C.; Raboaca, M.S.; Alqahtani, F.; Saad, A.; Tolba, A. Blockchain and Deep Learning-Based Fault Detection Framework for Electric Vehicles. Mathematics 2022, 10, 3626.

- Guo, H.; Meng, Q.; Cao, D.; Chen, H.; Liu, J.; Shang, B. Vehicle Trajectory Prediction Method Coupled with Ego Vehicle Motion Trend Under Dual Attention Mechanism. IEEE Trans. Instrum. Meas. 2022, 71, 1–16.

- Liu, L.; Song, X.; Zhou, Z. Aircraft Engine Remaining Useful Life Estimation via a Double Attention-Based Data-Driven Architecture. Reliab. Eng. Syst. Saf. 2022, 221, 108330.

- Pan, M.; Liu, A.; Yu, Y.; Wang, P.; Li, J.; Liu, Y.; Lv, S.; Zhu, H. Radar HRRP Target Recognition Model Based on a Stacked CNN–Bi-RNN With Attention Mechanism. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

- Qi, J.; Liu, X.; Liu, K.; Xu, F.; Guo, H.; Tian, X.; Li, M.; Bao, Z.; Li, Y. An Improved YOLOv5 Model Based on Visual Attention Mechanism: Application to Recognition of Tomato Virus Disease. Comput. Electron. Agric. 2022, 194, 106780.

- Jin, X.; Xie, Y.; Wei, X.-S.; Zhao, B.-R.; Chen, Z.-M.; Tan, X. Delving Deep into Spatial Pooling for Squeeze-and-Excitation Networks. Pattern Recognit. 2022, 121, 108159.

- Zhu, H.; Gu, W.; Wang, L.; Xu, Z.; Sheng, V.S. Android Malware Detection Based on Multi-Head Squeeze-and-Excitation Residual Network. Expert Syst. Appl. 2023, 212, 118705.

- Xing, Z.; Zhang, Z.; Yao, X.; Qin, Y.; Jia, L. Rail Wheel Tread Defect Detection Using Improved YOLOv3. Measurement 2022, 203, 111959.

- Muhammad, K.; Ullah, H.; Khan, S.; Hijji, M.; Lloret, J. Efficient Fire Segmentation for Internet-of-Things-Assisted Intelligent Transportation Systems. IEEE Trans. Intell. Transport. Syst. 2023, 24, 13141–13150.

- 2022 Digital Vehicle Competition of the National Big Data Alliance of New Energy Vehicles. Available online: https://www.ncbdc.top/ (accessed on 14 November 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).