1. Introduction

With rapid socioeconomic development and the spread of 5G technology, urban areas have witnessed a substantial surge in vehicle numbers. Alongside this growth, the emergence of Internet of Vehicles (IoV) technology has significantly improved the driving experience. Integrating the IoV into urban transportation systems has not only provided drivers with real-time traffic information and navigation assistance, but has also opened up new opportunities for advanced vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) communication [1]. However, for vehicles moving at high speed, seamless access to a diverse range of internet-based content is essential. Drivers and passengers increasingly rely on in-vehicle infotainment systems, streaming services, and real-time applications to enhance their travel experiences. Consequently, there is a growing demand for robust and efficient data delivery mechanisms within vehicular networks.

Simultaneously, propelled by the emergence of cutting-edge technologies in artificial intelligence and machine learning, the domain of vehicular networks is undergoing a profound paradigm shift. These technologies are enabling intelligent traffic management, predictive maintenance, and enhanced safety measures. Autonomous vehicles, for example, leverage AI algorithms for real-time decision making, contributing to safer and more efficient transportation. In this backdrop, novel applications are offering innovative solutions to address prevailing challenges. Collaborative traffic optimization algorithms, edge computing for low-latency data processing, and predictive analytics for traffic forecasting are just a few examples of how technology is reshaping urban mobility. As vehicular networks continue to evolve, they hold the promise of not only improving the efficiency of transportation systems but also reducing congestion, emissions, and accidents, ultimately enhancing the quality of life in urban areas.

For time-sensitive tasks, meeting deadlines is critical. Vehicular units often have limited computing and storage capacity, leading to latency or incomplete task execution when processed locally. Mobile Edge Computing (MEC) addresses this by integrating resources and intelligence at the network’s edge. MEC brings cloud-like capabilities closer to data sources, allowing vehicles to offload tasks to edge servers. This enhances the driving experience and supports complex computations. AI and ML models on edge servers enable applications like predictive maintenance and intelligent traffic management. MEC reduces data transmission latency, ensuring real-time safety alerts and traffic information. It creates a responsive vehicular network, adapting to urban traffic dynamics. As vehicles become smarter and more connected, MEC with AI-driven edge computing promises safer and efficient urban mobility.

In this rapidly evolving landscape, traffic stream forecasting plays a pivotal role in improving the efficiency of task offloading and content caching. It reveals how task volumes are distributed across different regions, which aids more effective resource allocation [2]. As the computational demands on vehicular terminals continue to grow, efficient computation offloading strategies become increasingly crucial. This is the focus of our paper, which builds on the evolution of task-level offloading strategies [3]. By integrating current trends in AI- and machine-learning-driven edge/fog computing, we propose an optimization approach called TOCC. This method jointly optimizes task offloading and content caching based on traffic flow prediction, and can thereby meet the growing demand of intelligent applications. The approach supports a safer and more efficient urban mobility experience, especially given the rapid evolution of IoV technology and the advances brought about by 5G.

Specifically, this work makes the following main contributions:

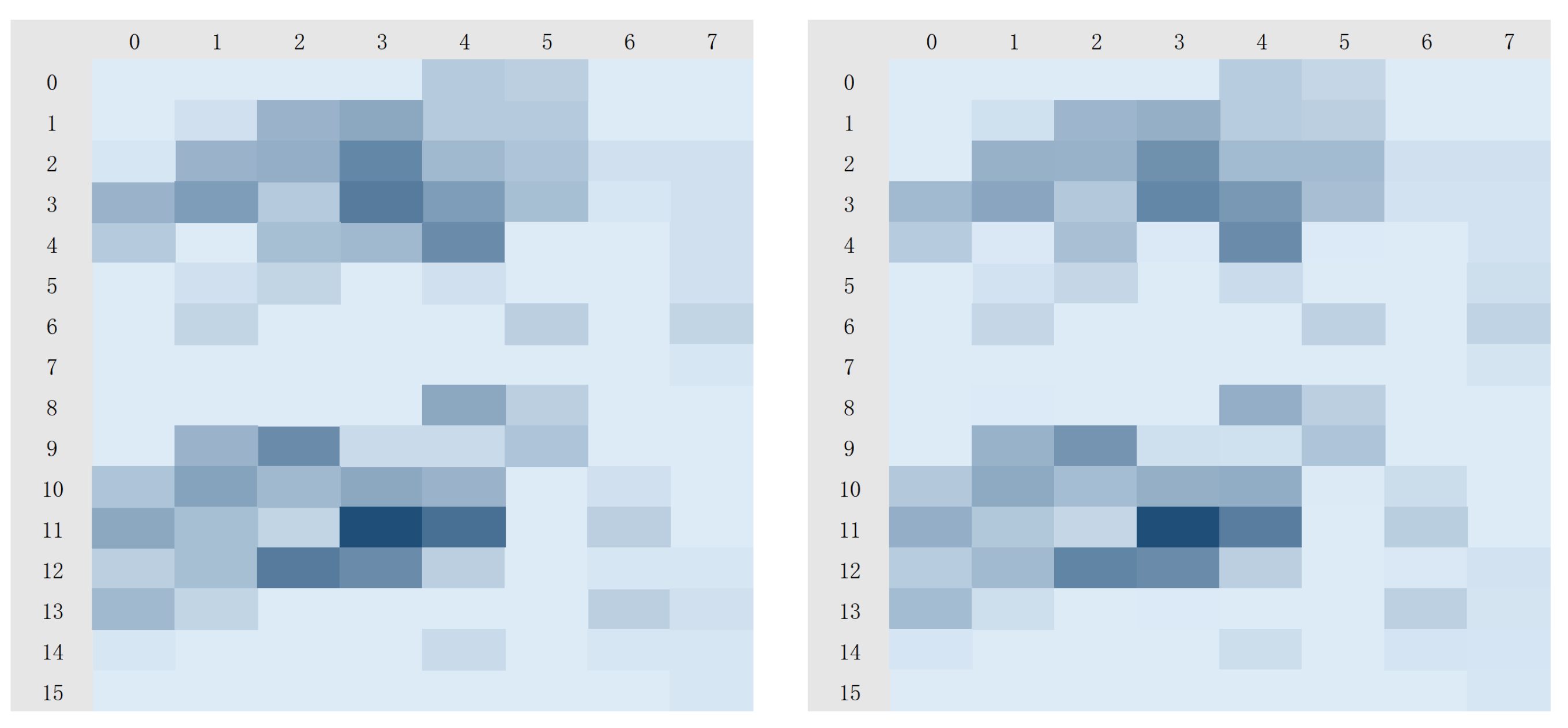

By preprocessing the dataset, we enhance its adaptability to ensure alignment with the operational requirements of the open-source prediction tool. The open-source prediction tool FOST supports mainstream deep learning models such as RNN, MLP, and GNN for predicting the preprocessed BikeNYC dataset. Its fusion module automatically selects and integrates predictions generated by different models, enhancing the overall robustness and accuracy of predictions.

We use the predicted traffic stream and an enhanced multi-objective evolutionary algorithm (MOEA/D) to decompose the multitask offloading and content caching problem into individual optimization problems. This decomposition helps us to obtain a set of Pareto optimal solutions, which is achieved through the Tchebycheff weight aggregation approach.

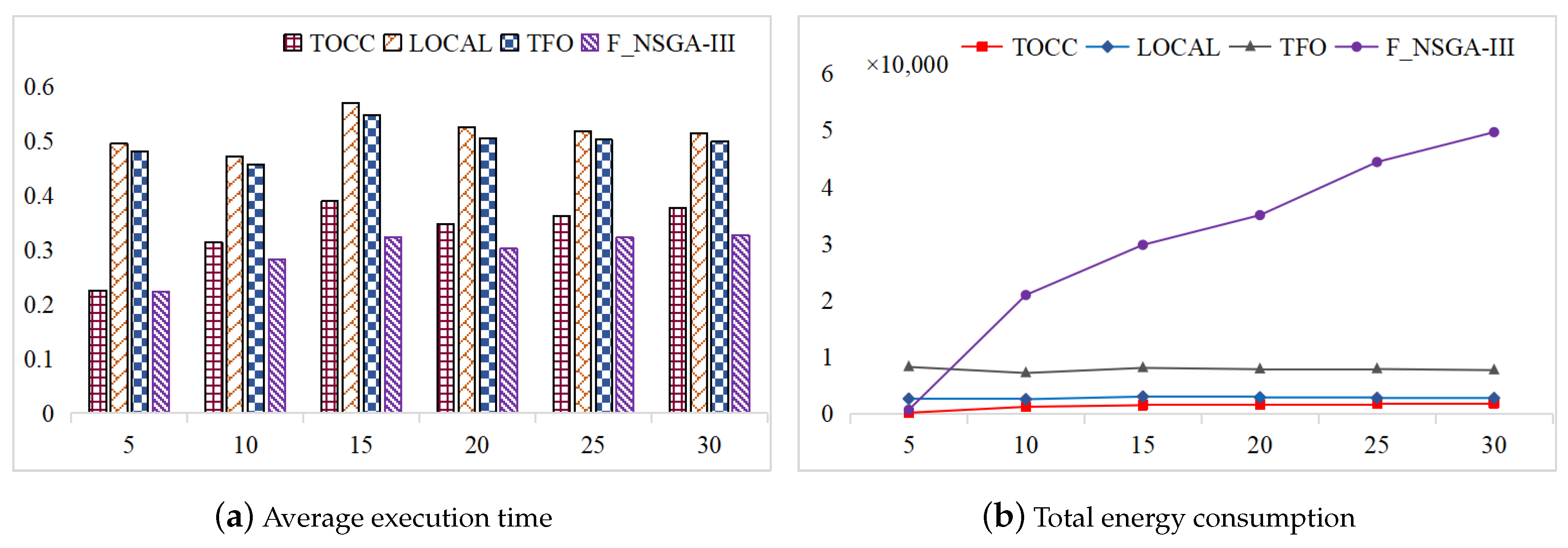

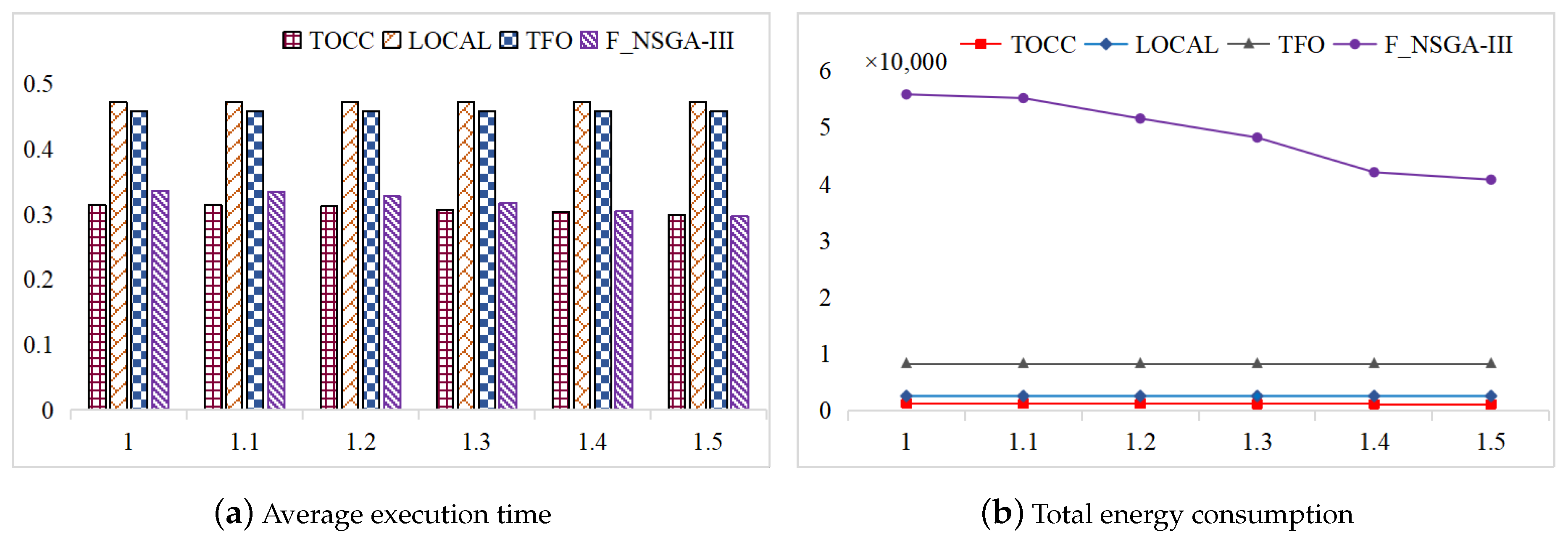

Finally, we chose the BikeNYC dataset for traffic flow prediction. It is a multimodal dataset rich in spatiotemporal data, making it ideal for this purpose. We conducted comprehensive simulation experiments to evaluate the TOCC algorithm’s performance, which effectively reduced execution time and energy consumption, as shown by the results.
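To make the Tchebycheff weight aggregation named in the contributions concrete, the following minimal sketch scalarizes two objectives (execution time and energy) for different weight vectors. The function names, the candidate solutions, and the ideal point are hypothetical values chosen for illustration; they are not taken from the experiments in this paper.

```python
from typing import Dict, Sequence, Tuple


def tchebycheff(objectives: Sequence[float],
                weights: Sequence[float],
                ideal: Sequence[float]) -> float:
    """Weighted Tchebycheff scalarization: max_k w_k * |f_k - z_k|."""
    if not (len(objectives) == len(weights) == len(ideal)):
        raise ValueError("objectives, weights and ideal must have equal length")
    return max(w * abs(f - z) for f, w, z in zip(objectives, weights, ideal))


# Hypothetical candidates as (execution time, energy); illustrative only.
candidates: Dict[str, Tuple[float, float]] = {
    "A": (2.0, 8.0),
    "B": (4.0, 4.0),
    "C": (7.0, 3.0),
}
# Ideal point: best value of each objective among the candidates.
ideal = (2.0, 3.0)


def best_for(weights: Sequence[float]) -> str:
    """Return the candidate minimizing the scalarized subproblem."""
    return min(candidates,
               key=lambda name: tchebycheff(candidates[name], weights, ideal))


for w in [(0.9, 0.1), (0.5, 0.5), (0.1, 0.9)]:
    print(w, "->", best_for(w))
```

Each weight vector defines one scalar subproblem, and different weights select different trade-offs between time and energy. This is how a decomposition-based algorithm can cover a Pareto front with a set of such subproblems.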

2. Related Work

In the high-speed mobile environment of the IoV, efficient task execution plays a crucial role in minimizing time delays. However, the limited computing and storage capacity of automotive systems often necessitate offloading resource-intensive tasks. These tasks are strategically shifted to edge or cloud servers, enabling efficient execution and ensuring prompt fulfillment of user requests. Automotive applications frequently involve repeated content requests, making caching popular content on edge servers highly effective in reducing latency and energy consumption for subsequent access in the IoV. Nevertheless, improper task offloading and inaccurate content caching can lead to increased energy consumption and latency, mainly due to network congestion and server queuing. To address these challenges, the joint optimization of task offloading and content caching is essential. By harmonizing these processes, we can effectively minimize time latency and energy consumption, enhancing the overall performance and user experience in the IoV.

In recent years, the problem of task offloading in cloud–edge environments has received much attention. In their pursuit of optimizing data placement performance, Wang et al. [4] recognized the significance of spatiotemporal data characteristics. They introduced TEMPLIH, where a temperature matrix captures the influence of data features on placement. The Replica Selection Algorithm (RSA-TM) is utilized to ensure compliance with latency requirements. Furthermore, an improved Hungarian algorithm (IHA-RM) based on replica selection is employed to achieve multi-objective balance. Zhao et al. [5] proposed an energy-efficient offloading scheme to minimize the overall energy usage. Although their algorithm exhibits low energy consumption on a single task, additional research is needed to evaluate its effectiveness in a multi-task setting. To optimize data caching and task scheduling jointly, Wang et al. [6] proposed a multi-index cooperative cache replacement algorithm based on information entropy theory (MCCR) to improve the cache hit rate. Subsequently, they proposed the NHSA-MCCR algorithm, which optimizes scheduling to reduce delay and energy consumption. Chen et al. [7] presented a UT-UDN system model that demonstrated a 20% reduction in time delay and a 30% decrease in energy costs, as indicated by simulation results. Elgendy et al. [8] proposed a Mobile Edge Computing solution for Unmanned Aerial Vehicles (UAVs), which uses a multi-layer resource allocation scheme, a load balancing algorithm, and integer programming to achieve cost reduction. In the context of multi-cloud spatial crowdsourcing data placement, Wang et al. [9] introduced a data placement strategy with a focus on cost-effectiveness and minimal latency. They also incorporated an interval pricing strategy and used a clustering algorithm to analyze the geographic distribution patterns of data centers. Furthermore, certain studies have investigated the application of heuristics to address the task offloading problem. For instance, Xu et al. [10] employed enumeration and branch-and-bound algorithms to tackle these challenges. Meanwhile, Yin et al. [11] introduced a task scheduling and resource management strategy based on an enhanced genetic algorithm. This approach took into account both delays and energy consumption, with the objective of minimizing their combined sum. Kimovski et al. [12] introduced mMAPO as a solution for optimizing conflicting objectives in multi-objective optimization, including completion time, energy consumption, and economic cost. Ding et al. [13] addressed the challenge of edge server state changes and the unavailability of global information by introducing Dec-POMDP, which handles observed states, and a task offloading strategy based on the Value Decomposition Network (VDN). Li et al. [14] concentrated on addressing the security concerns in edge computing and introduced the priority-aware PASTO algorithm. This algorithm aims to minimize the overall task completion time while adhering to energy budget constraints for secure task offloading. These efforts have concentrated on computation offloading and caching challenges in cloud–edge environments, optimizing for objectives such as latency, energy consumption, cost, and load performance, and have yielded substantial results. Nevertheless, cloud–edge environments constitute a vast research domain, so a narrower focus is needed. The following discussion therefore centers on computation offloading and caching challenges in vehicular edge environments.

The in-vehicle edge environment merges computing resources from the vehicle and the edge, creating a strong platform for computing services. Yang et al. [15] introduced a location-based offloading scheme that effectively balances task speed with communication and computational resources, leading to significant system cost reduction. To tackle challenges related to task latency and constraints in RSU (roadside unit) server resources, Zhang et al. [16] proposed a contract-based computing resource allocation scheme in a cloud environment, aiming to maximize the benefits of MEC service providers while adhering to latency limits. Dai et al. [17] devised a novel approach by splitting the joint load balancing and offloading problem into two sub-problems, formulated as a mixed-integer nonlinear programming problem, with the primary objective of maximizing system utility under latency constraints. In the realm of 5G networks, Wan et al. [18] introduced an edge computing framework for offloading using multi-objective optimization and evolutionary algorithms, efficiently exploring the synergy between offloading and resource allocation within MEC and cloud computing, resulting in optimized task duration and server costs. For comprehensive optimization, Zhao et al. [19] proposed a collaborative approach that minimizes task duration and server costs through joint optimization of offloading and resource allocation in the MEC and cloud computing domains. To solve the problem of communication delay and energy loss caused by the growth in IoV services, Ma [20] analyzed the optoelectronic communication and computing model, encoded the vehicle computing task as a knapsack problem, and used a genetic algorithm to find the optimal resource allocation strategy. Lin et al. [21] proposed a data offloading strategy called PKMR, which considers a predicted k-hop count limit and utilizes VVR paths for data offloading with neighboring RSUs. Sun et al. [22] introduced the PVTO method, which offloads V2V tasks to MEC and optimizes the strategy using GA, SAW, and MCDM. Ko et al. [23] introduced the belief-based task offloading algorithm (BTOA), where vehicles make computation and communication decisions based on beliefs while observing the resources and channel conditions in the current VEC environment. While prior research has predominantly focused on computation offloading and resource allocation problems in vehicular edge environments with utility-, latency-, and cost-related objectives in mind, these strategies often overlook the potential impact of future traffic patterns in vehicular networks, which can result in a loss of offloading precision. Our discussion therefore shifts to traffic flow prediction in vehicular environments and to the computation offloading and content caching issues that build on it.

In the Internet of Vehicles, effective resource deployment must be real-time, accurate, and intelligent. Traffic flow prediction is a crucial factor in achieving this goal. To enhance real-time traffic prediction in various scenarios, Laha et al. [24] proposed two incremental learning methods and compared them with three existing methods to determine the suitable scenarios for these methods. To address the limitations of current approaches in long-term prediction performance, Li et al. [25] introduced a novel prediction framework called SSTACON, which utilizes a shared spatio-temporal attention convolutional layer to extract dynamic spatio-temporal features and incorporates a graph optimization module to model the dynamic road network structure. To tackle the issue of parameter selection and improve prediction accuracy, Ai et al. [26] incorporated the Artificial Bee Colony (ABC) algorithm into the ABC-SVR algorithm. Lv et al. [27] addressed the service switching challenge among adjacent roadside units, introducing cooperation between vehicles, vehicle-to-roadside-unit communication, and implementing trajectory prediction to minimize task processing delays. Fang et al. [28] recognized the significance of traffic flow prediction and, building upon this foundation, introduced the ST-ResNet network for traffic prediction, complemented by the NSGA-III algorithm for multi-objective optimization. Their approach demonstrated superior performance in terms of latency and energy consumption, outperforming existing methodologies.

Based on these findings, we observed a relative scarcity of research focusing on joint optimization of computation offloading and content caching based on traffic flow prediction. Hence, our study aims to address the problem of joint optimization of task offloading and edge content caching. We employ an enhanced traffic-based multi-objective evolutionary algorithm to achieve this. The overarching objective is to minimize both transmission and computation latency while reducing energy consumption.

Table 1 displays a concise synopsis of the scrutinized literature, delineating pivotal aspects including the application environment, addressed challenges, and optimization objectives.

3. System Model and Problem Formulation

3.1. System Framework

Figure 1 illustrates a robust three-tier cloud–edge–vehicular network framework designed to cater to diverse tasks across different regions. The framework comprises three layers: the vehicle terminal layer, the Mobile Edge Computing (MEC) layer, and the cloud computing layer. In the cloud computing layer, cloud servers cover the entire area, providing extensive coverage. The MEC layer consists of edge servers along the roadside, covering an area. The vehicle terminal layer comprises vehicles traversing the road, communicating with both the MEC layer and the cloud computing layer via wireless channels. During operation, the vehicle terminal layer undertakes one or more computing tasks with varying probabilities, taking advantage of time gaps to execute the tasks efficiently. Three possible destinations for task offloading are available: local processing (i.e., handling tasks on the computing device within the vehicle), processing by the edge server, or processing by the cloud server. The edge server is equipped with the capability to cache popular content, further enhancing the task offloading efficiency.

A region’s traffic stream is the count of vehicles passing through it in a given time slot, such as one minute. Let $\mathcal{P}^{t}$ denote the set of vehicle trajectories at the $t$-th time slot. The vehicle traffic stream in region $i$ during the $t$-th time slot can be determined by

$$x_{i}^{t} = \sum_{Tr \in \mathcal{P}^{t}} \delta_{i}(Tr),$$

where $Tr: g_{1} \rightarrow g_{2} \rightarrow \cdots \rightarrow g_{|Tr|}$ is an ordered set representing the discrete trajectory of a user multimedia request over time; $g_{k}$ represents the geographical position of the user’s multimedia request at the $k$-th sampled time; and $\delta_{i}(Tr)$ is a binary variable that equals 1 if some position $g_{k}$ lying in region $i$ exists in $Tr$, and 0 otherwise. The problem of predicting the stream of vehicles can be defined as follows: given the historical traffic stream $\{X^{1}, \ldots, X^{t}\}$, where $X^{t}$ collects $x_{i}^{t}$ over all regions, the goal is to predict the future traffic stream $X^{t+1}$.
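The definition above can be sketched in a few lines: a trajectory contributes one unit to a region's traffic stream if at least one of its positions falls inside that region. The rectangular grid cells, the coordinate values, and the helper names below are illustrative assumptions, not part of the original formulation.

```python
from typing import Callable, Iterable, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) position; the coordinate system is assumed


def traffic_stream(trajectories: Iterable[Sequence[Point]],
                   in_region: Callable[[Point], bool]) -> int:
    """Count trajectories with at least one position inside the region."""
    return sum(1 for tr in trajectories if any(in_region(p) for p in tr))


def grid_cell(x0: float, y0: float, x1: float, y1: float) -> Callable[[Point], bool]:
    """Hypothetical rectangular region [x0, x1) x [y0, y1)."""
    return lambda p: x0 <= p[0] < x1 and y0 <= p[1] < y1


# Three made-up trajectories observed in one time slot.
slot = [
    [(0.2, 0.3), (0.8, 0.4), (1.5, 0.5)],
    [(1.2, 1.1), (1.8, 1.6)],
    [(0.1, 0.9), (0.4, 0.6)],
]
region_a = grid_cell(0, 0, 1, 1)
region_b = grid_cell(1, 0, 2, 1)

print(traffic_stream(slot, region_a))  # trajectories 1 and 3 touch region_a
print(traffic_stream(slot, region_b))  # only trajectory 1 touches region_b
```

Repeating this count for every region and time slot gives the historical series that a predictor takes as input.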

In a partitioned region, we assume $N > Q$, where $N$ is the number of vehicles and $Q$ is the number of tasks. This assumption reflects that popular tasks are requested and executed repeatedly. Each vehicle can perform only one task at a time, and multiple vehicles may request the same task based on their preferences. To simplify notation, we define $R_{i,n}^{q}$ as task $q$ generated by the $n$-th vehicle in region $i$.

To characterize different computational tasks, a triple is employed as the computational task model: $R_{i,n}^{q} = \{c_{i,n}^{q}, d_{i,n}^{q}, T_{i,n}^{\max}\}$. Task $R_{i,n}^{q}$ can be partially offloaded to either the MEC server or the cloud computing server for processing. The parameter $c_{i,n}^{q}$ is the number of CPU cycles required to accomplish task $R_{i,n}^{q}$. The parameter $d_{i,n}^{q}$ represents the input data size required for processing task $R_{i,n}^{q}$, while $T_{i,n}^{\max}$ signifies the maximum deadline for completing the task. It is assumed that the value of $c_{i,n}^{q}$ remains constant regardless of whether task $R_{i,n}^{q}$ is processed locally, offloaded to the MEC server, or executed on the cloud computing server. Furthermore, MEC servers within region $i$ are assumed to have limited computation capacity, denoted as $F_{i}$, and a cache size of $S_{i}$.

3.2. Execution Time and Energy Consumption Model

For the task offloading problem, we divide the computational task into multiple parts and define the offloading decision variable $x_{i,n} = \{x_{i,n}^{l}, x_{i,n}^{m}, x_{i,n}^{c}\}$, where $x_{i,n}^{l}, x_{i,n}^{m}, x_{i,n}^{c} \in [0, 1]$ denote the fraction of the task assigned to the local vehicle, the MEC server, and the cloud server, respectively. The constraint $x_{i,n}^{l} + x_{i,n}^{m} + x_{i,n}^{c} = 1$ ensures that the entire task is accounted for. For example, if $x_{i,n}^{l} = 1$, the task is executed exclusively within the vehicle, whereas $x_{i,n}^{l} = 0$ indicates complete offloading to the MEC or cloud server. The overall offloading decision policy is denoted as $X = \{x_{i,n}\}$.
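As a minimal sketch of the task triple and the offloading decision described above, the following code validates that the three fractions lie in [0, 1] and sum to 1, then splits a task's CPU cycles accordingly. The class and field names are hypothetical, and the numeric values are made up.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Task:
    cycles: float      # CPU cycles required to complete the task
    data_bits: float   # input data size
    deadline_s: float  # maximum completion deadline


@dataclass(frozen=True)
class Offload:
    local: float  # fraction executed on the vehicle
    edge: float   # fraction offloaded to the MEC server
    cloud: float  # fraction offloaded to the cloud server

    def __post_init__(self) -> None:
        parts = (self.local, self.edge, self.cloud)
        if any(p < 0.0 or p > 1.0 for p in parts):
            raise ValueError("each fraction must lie in [0, 1]")
        if not math.isclose(sum(parts), 1.0, abs_tol=1e-9):
            raise ValueError("fractions must sum to 1")

    def split_cycles(self, task: Task) -> Tuple[float, float, float]:
        """CPU cycles assigned to (vehicle, edge, cloud)."""
        return (self.local * task.cycles,
                self.edge * task.cycles,
                self.cloud * task.cycles)


task = Task(cycles=8e8, data_bits=1e6, deadline_s=0.5)
decision = Offload(local=0.5, edge=0.25, cloud=0.25)
print(decision.split_cycles(task))
```

Rejecting invalid splits at construction time keeps every later cost computation consistent with the constraint that the whole task is accounted for.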

3.2.1. Execution Time and Energy Consumption of Local Task Computation

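If the task $R_{i,n}^{q}$ is selected for local processing, then $T_{i,n}^{l}$ is defined as the local execution time. Because vehicles are heterogeneous and differ in computational power, the local execution time delay of task $R_{i,n}^{q}$ is

$$T_{i,n}^{l} = \frac{x_{i,n}^{l}\, c_{i,n}^{q}}{f_{i,n}^{l}} \tag{1}$$

The local energy consumption is calculated as

$$E_{i,n}^{l} = \varepsilon_{i,n}\, x_{i,n}^{l}\, c_{i,n}^{q} \tag{2}$$

where $x_{i,n}^{l}$ is the fraction of the task executed locally, $c_{i,n}^{q}$ is the number of CPU cycles the task requires, $f_{i,n}^{l}$ is the computational power of the $n$-th vehicle in region $i$, and $\varepsilon_{i,n}$ is the energy consumption per CPU cycle.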
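The local cost model can be illustrated with a short sketch: time is the locally executed cycles divided by the vehicle's CPU frequency, and energy is the same cycle count multiplied by the energy per cycle. The function name, parameter names, and numeric values are assumptions for illustration.

```python
from typing import Tuple


def local_cost(fraction_local: float,
               cycles: float,
               cpu_hz: float,
               joules_per_cycle: float) -> Tuple[float, float]:
    """Return (execution time in s, energy in J) for the locally run share."""
    if cpu_hz <= 0:
        raise ValueError("cpu_hz must be positive")
    local_cycles = fraction_local * cycles
    time_s = local_cycles / cpu_hz             # cycles / (cycles per second)
    energy_j = local_cycles * joules_per_cycle
    return time_s, energy_j


# Made-up example: half of an 8e8-cycle task on a 1 GHz on-board unit
# that spends 1 nJ per cycle.
time_s, energy_j = local_cost(0.5, 8e8, 1e9, 1e-9)
print(time_s, energy_j)
```

A faster on-board CPU shortens the local time but, in this simple model, leaves the energy unchanged unless the per-cycle energy also changes.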

3.2.2. Execution Time and Energy Consumption of Edge Task Computing

When task $R_{i,n}^{q}$ is offloaded to the MEC server, the process involves the following steps: the $n$-th vehicle uploads the task’s input data to the MEC server via the BS/RSU, the MEC server allocates computational resources to process the task, and the result is returned to the vehicle. The time of a task offloaded to MEC server $m$ can be described as

$$T_{i,n}^{m} = \left(\frac{x_{i,n}^{m}\, d_{i,n}^{q}}{r_{i,n}^{m}(t)}\right) + \frac{x_{i,n}^{m}\, c_{i,n}^{q}}{f_{i}^{m}} + \frac{o_{i,n}^{q}}{b_{m}} \tag{3}$$

where the first part, enclosed in parentheses in Equation (3), represents the offload time to the edge server, the second part denotes the execution time delay for processing $R_{i,n}^{q}$, and the final part accounts for the feedback time delay of the processing results. Here, $x_{i,n}^{m}$ is the fraction of the task offloaded to the MEC server, $d_{i,n}^{q}$ is the input data size, $c_{i,n}^{q}$ is the number of CPU cycles required, $f_{i}^{m}$ is the computing capability of edge servers in region $i$, $r_{i,n}^{m}(t)$ signifies the data offload rate from the $n$-th vehicle to the mobile edge server within region $i$ at time slot $t$, $o_{i,n}^{q}$ is the size of the task processing outcome, and $b_{m}$ is the backhaul transmission rate of edge server $m$.

This determines the edge energy consumed, which also depends on the offload capability of edge server $m$.
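The three-part edge latency (upload, execution, result feedback) can be sketched as follows. The latency function mirrors the textual description above. The energy function is only an assumption: it treats the offload capability as a transmit power and charges it for the upload duration, which is a common modeling choice but is not confirmed by the text. All names and numbers are illustrative.

```python
def edge_time(fraction_edge: float, data_bits: float, cycles: float,
              uplink_bps: float, edge_hz: float,
              result_bits: float, backhaul_bps: float) -> float:
    """Upload time + execution time + result feedback time, in seconds."""
    upload = fraction_edge * data_bits / uplink_bps
    execute = fraction_edge * cycles / edge_hz
    feedback = result_bits / backhaul_bps
    return upload + execute + feedback


def edge_energy_assumed(fraction_edge: float, data_bits: float,
                        uplink_bps: float, tx_power_w: float) -> float:
    """ASSUMPTION: energy = transmit power x upload duration, in joules."""
    return tx_power_w * fraction_edge * data_bits / uplink_bps


# Made-up example: half of an 8 Mbit, 8e8-cycle task sent over a 10 Mbit/s
# uplink to a 4 GHz edge server; 0.1 Mbit result over a 1 Mbit/s backhaul.
t_edge = edge_time(0.5, 8e6, 8e8, 1e7, 4e9, 1e5, 1e6)
e_edge = edge_energy_assumed(0.5, 8e6, 1e7, tx_power_w=0.5)
print(t_edge, e_edge)
```

In this example the upload (0.4 s) dominates the execution (0.1 s) and feedback (0.1 s), which shows why the uplink rate matters as much as the edge server's computing capability.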

3.2.3. Execution Time and Energy Consumption of Cloud Server Computing

The cloud server $c$ is situated farther from the task source than the edge server, which can increase latency. Hence, we use the cloud computing model for task processing only under specific conditions: when a task demands computational resources that exceed the processing capacity of the edge server, or when the edge server is already operating at its maximum capacity due to concurrent multitasking. The time of a task offloaded to cloud server $c$ is defined in terms of the data offload rate from the $n$-th vehicle to the cloud server within region $i$ at time slot $t$ and the backhaul transmission rate of cloud server $c$. Furthermore, the model accounts for the energy consumption of the cloud server.

Based on this analysis, the execution time and the energy consumption of the task on the $n$-th vehicle in region $i$ are obtained from the local, edge, and cloud components described above.
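As a hedged illustration of how the three components might be combined: if the local, edge, and cloud portions are assumed to run in parallel (an assumption, not something stated in the text), the task finishes when its slowest portion finishes, while the energy terms add up. The symbols $T_{i,n}^{l}, T_{i,n}^{m}, T_{i,n}^{c}$ and $E_{i,n}^{l}, E_{i,n}^{m}, E_{i,n}^{c}$ are illustrative names for the local, edge, and cloud times and energies.

```latex
T_{i,n} = \max\left\{ T_{i,n}^{l},\; T_{i,n}^{m},\; T_{i,n}^{c} \right\},
\qquad
E_{i,n} = E_{i,n}^{l} + E_{i,n}^{m} + E_{i,n}^{c}
```

If the portions were instead processed one after another, the maximum would be replaced by a sum.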

3.3. Edge Data Caching Model

Task caching refers to the storage of completed tasks and their associated data on the edge cloud. This paper formulates the content caching problem using a binary cache decision variable $y_{i,n}^{m} \in \{0, 1\}$. If $y_{i,n}^{m} = 1$, the task generated by the $n$-th vehicle in region $i$ is cached on edge server $m$; otherwise, $y_{i,n}^{m} = 0$. The task caching policy is therefore expressed as $Y = \{y_{i,n}^{m}\}$.

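Considering the joint processing of task offloading and content caching across the local vehicle, edge, and cloud servers, let $T_{i,n}^{\mathrm{total}}$ and $E_{i,n}^{\mathrm{total}}$ denote the total execution latency and the total energy consumption of task $R_{i,n}^{q}$ generated by the $n$-th vehicle in region $i$. The average execution time of vehicles in region $i$ is

$$\bar{T}_{i} = \frac{1}{N} \sum_{n=1}^{N} T_{i,n}^{\mathrm{total}}$$

The energy consumption of vehicles in region $i$ is

$$E_{i} = \sum_{n=1}^{N} E_{i,n}^{\mathrm{total}}$$

The combined size of the data contained in the regional edge servers is

$$D_{i} = \sum_{n=1}^{N} y_{i,n}^{m}\, d_{i,n}^{q}$$

where $N$ is the number of vehicles in the region, $y_{i,n}^{m}$ is the binary cache decision for the task of the $n$-th vehicle, and $d_{i,n}^{q}$ is the data size of that task.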
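The regional quantities above are simple aggregates over per-task results, as the following sketch shows. It assumes that each task's total latency and energy have already been computed. The class, field, and function names are hypothetical, and the numbers are made up.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class TaskOutcome:
    latency_s: float  # total execution latency of the task
    energy_j: float   # total energy consumption of the task
    data_bits: float  # size of the task's data
    cached: bool      # True if the task is cached on the edge server


def region_summary(outcomes: Sequence[TaskOutcome]) -> Tuple[float, float, float]:
    """Return (average latency, total energy, total cached data size)."""
    if not outcomes:
        raise ValueError("region has no tasks")
    avg_latency = sum(o.latency_s for o in outcomes) / len(outcomes)
    total_energy = sum(o.energy_j for o in outcomes)
    cached_bits = sum(o.data_bits for o in outcomes if o.cached)
    return avg_latency, total_energy, cached_bits


outcomes = [
    TaskOutcome(latency_s=0.5, energy_j=2.0, data_bits=4e6, cached=True),
    TaskOutcome(latency_s=0.25, energy_j=1.0, data_bits=2e6, cached=False),
    TaskOutcome(latency_s=0.75, energy_j=3.0, data_bits=1e6, cached=True),
]
summary = region_summary(outcomes)
print(summary)
```

The first two values are the objectives to be minimized for the region, and the third is the quantity that must fit within the edge server's cache.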

3.4. Problem Definition

In this paper, the aim is to minimize the average execution time and the total energy consumption within each region, subject to maximum-latency and computing-power constraints.

The constraints address task divisibility, binary caching decisions, and timely task completion. They also limit the data cached by tasks to the edge server cache capacity and ensure that the combined resource requests of offloaded tasks do not exceed the computing capacity of edge servers.
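One way to write this problem down, offered as a sketch under assumed notation rather than as the authors' exact formulation, is given below. Here $\bar{T}_{i}$ and $E_{i}$ are the average execution time and total energy in region $i$; $x_{i,n}^{l}, x_{i,n}^{m}, x_{i,n}^{c}$ are the local, edge, and cloud fractions of the task of the $n$-th vehicle; $y_{i,n}^{m}$ is its binary caching decision; $T_{i,n}^{\mathrm{total}}$ and $T_{i,n}^{\max}$ are its total latency and deadline; $d_{i,n}^{q}$ and $c_{i,n}^{q}$ are its data size and required CPU cycles; and $S_{i}$ and $F_{i}$ are the cache size and computing capacity of the edge servers in region $i$. The exact form of the last constraint in particular is an assumption.

```latex
\begin{aligned}
\min_{X,\,Y}\quad & \left\{ \bar{T}_{i},\; E_{i} \right\} \\
\text{s.t.}\quad
& x_{i,n}^{l} + x_{i,n}^{m} + x_{i,n}^{c} = 1, \qquad
  x_{i,n}^{l},\, x_{i,n}^{m},\, x_{i,n}^{c} \in [0, 1], \\
& y_{i,n}^{m} \in \{0, 1\}, \\
& T_{i,n}^{\mathrm{total}} \le T_{i,n}^{\max}, \\
& \sum_{n=1}^{N} y_{i,n}^{m}\, d_{i,n}^{q} \le S_{i}, \\
& \sum_{n=1}^{N} x_{i,n}^{m}\, c_{i,n}^{q} \le F_{i}.
\end{aligned}
```

The five constraint rows correspond, in order, to task divisibility, binary caching decisions, timely completion, cache capacity, and edge computing capacity.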

It is worth emphasizing that the formulas for execution time, energy consumption, and the other quantities used in this article build on the foundations laid by previous related research. Their definitions and rationale are derived from the cited literature [6, 12, 19, 28], which supports the soundness and credibility of our offloading strategies and their application in different scenarios.