Abstract

Pedestrian detection models in autonomous driving systems rely heavily on deep neural networks (DNNs) to perceive their surroundings. Recent research has revealed that DNNs are vulnerable to backdoor attacks, in which malicious actors manipulate a system by embedding specific triggers in the training data. In this paper, we propose a camouflage backdoor attack tailored to pedestrian detection in autonomous driving systems. Our approach begins by constructing a set of trigger-embedded images. We then use an image scaling function to hide these trigger-embedded images inside the original benign images, producing poisoned training images. Importantly, these poisoned images differ only minimally from the original benign images, and the differences are virtually imperceptible to the human eye. In specific scenarios, the concealed backdoor is then activated, causing the pedestrian detection model to make incorrect judgments. Our study demonstrates that once the backdoor is embedded in the target model, it can deceive the model into missing pedestrians marked with our trigger patterns. Extensive evaluations on a publicly available pedestrian detection dataset confirm the effectiveness and stealthiness of our camouflage backdoor attack.

1. Introduction

In recent years, the advent of autonomous vehicles has heralded a new era of transportation, promising safer and more efficient roadways [1,2,3]. A critical task in autonomous driving is pedestrian detection [4,5,6], whose primary objective is to accurately identify pedestrians in images or videos. This task leverages advanced deep neural networks (DNNs) to perceive and interpret the surrounding environment and to reliably distinguish pedestrians from other objects and the background. As a result, the accuracy and robustness of pedestrian detection models are paramount, directly influencing the overall safety and dependability of autonomous vehicles on the road [2,3,5].

However, recent research has demonstrated that, despite their exceptional performance in many domains, DNNs are not immune to vulnerabilities. It has become increasingly evident that DNNs lack robustness and are susceptible to backdoor attacks [7,8,9]. In such attacks, adversaries stealthily embed malicious triggers or patterns during the training stage, creating concealed vulnerabilities within the network. When the network later encounters specific inputs or conditions, these backdoors activate and can yield harmful responses [10,11,12]. Such behavior poses a significant threat to the reliability and integrity of systems that rely on neural networks. While past works have investigated a range of backdoor attacks targeting DNNs across various domains [7,13,14,15,16,17], to the best of our knowledge, no study has investigated backdoor attacks against pedestrian detection in autonomous driving.

This paper aims to design and implement a covert backdoor attack against pedestrian detection in the context of autonomous driving. Achieving this goal poses three challenges. (i) First, most existing backdoor attacks focus on poisoning training images for classification tasks [7,18,19]. In autonomous driving, by contrast, enabling a pedestrian to escape detection is considerably more threatening and more complex than attacking a classifier. We therefore consider how to design a backdoor attack against pedestrian detection in which the attacked DNN behaves normally on benign images but fails to detect pedestrians carrying trigger patterns. (ii) Second, backdoor attacks based on poisoned labels or annotations could be recognized by humans if model developers manually inspect the training images [20]. To make the attack stealthier and increase its success rate, we carefully design a camouflage backdoor attack. (iii) Third, existing backdoor attacks typically target a specific algorithm when tampering with training images. In pedestrian detection, however, the model can be trained with various algorithms, including two-stage [21,22] and one-stage [23,24,25] algorithms, as well as anchor-based [21,25] and anchor-free [26,27] methods. Generating a single set of poisoned images that can compromise any pedestrian detection model, regardless of its underlying algorithm, is a formidable challenge.

To address these challenges, we propose a camouflage backdoor attack against pedestrian detection in autonomous driving systems. For the object detection task, we propose a backdoor attack that is executed during the training phase. Specifically, we employ a pre-trained image matting model to generate attack images with embedded triggers. These attack images are integrated into the original benign images used for training, while the original annotations of the benign images are preserved. Unlike previous approaches that tamper with both images and annotations during training, we account for the possibility that model developers manually inspect the training images. To make the attack more covert, we use an image scaling function for camouflage: the attack images are hidden inside the benign images, yielding disguised poisoned images. These poisoned images look nearly identical to the benign ones to the human eye, but after the scaling performed during preprocessing, the model perceives a different image. This image scaling step is what makes the backdoor attack camouflaged.

We implement our backdoor attack against four different types of pedestrian detection models, namely Faster R-CNN [21], Cascade R-CNN [28], RetinaNet [25], and Fully Convolutional One-Stage Object Detection (FCOS) [26], to show the generality of our proposed camouflage backdoor attack. Evaluations on a publicly available dataset demonstrate its effectiveness and covert nature. These results highlight the seriousness and feasibility of such attacks and underscore the need to account for them when developing resilient autonomous driving models.

Our contributions are as follows.

- We reveal the backdoor threat in pedestrian detection within the autonomous driving domain, and we design and implement an effective and stealthy backdoor attack targeting pedestrian detection in autonomous driving.

- We employ image scaling to disguise the backdoor attack; furthermore, we only contaminate the training data, not the labels, making the attack more covert.

- Extensive experiments on the benchmark KITTI dataset verify the effectiveness and stealthiness of our attack.

This paper is organized as follows. We present a survey of related work encompassing the domain of pedestrian detection and the latest advancements in backdoor attacks in Section 2. In Section 3, we provide an in-depth explanation of our proposed attack method, covering the attack objectives, the process of crafting attack samples, and the practical execution of the attack. We introduce our experimental setup in Section 4.1. In Section 4.2, we delve into an analysis and assessment of the experimental results. We conclude with a summary of our work in Section 5.

2. Related Work

2.1. Pedestrian Detection

Pedestrian detection is a critical research area in the field of computer vision, with a wide range of applications, including intelligent traffic monitoring, autonomous driving, crowd analysis, and video surveillance [4,5,6]. Over the past few decades, researchers have proposed various pedestrian detection methods, continually improving the detection accuracy and robustness. The following is a summary of some of the methods.

Two-Stage Models: Two-stage object detection models use a two-step methodology: region proposal, followed by classification and regression of these proposals. These models generally achieve high accuracy and tend to perform well on small objects, owing to their two-step refinement. Representative models in this category include Cascade R-CNN [28] and Mask R-CNN [22].

One-Stage Models: One-stage models conduct object detection in a single pass, omitting the need for explicit region proposals. These models are typically faster and well suited for real-time applications. They feature relatively simpler architectures. Representative models include You Only Look Once (YOLO) [23], SSD [24], and RetinaNet [25].

Anchor-Based Models: Anchor-based models employ predefined anchor boxes for the prediction of object locations and classification. These models excel in multi-scale and multi-aspect-ratio object detection, offering precise object localization. Notable examples of anchor-based models include Faster R-CNN [21] and RetinaNet [25].
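To make the notion of predefined anchor boxes concrete, the following minimal Python sketch enumerates anchors centred at a single feature-map location. The function name `generate_anchors` and the parameter values (a base size of 16, a scale of 8, and three aspect ratios) are illustrative assumptions, not the configuration used in this paper; here the aspect ratio is defined as height divided by width.

```python
import itertools
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def generate_anchors(cx: float, cy: float, base_size: float,
                     scales: Tuple[float, ...],
                     ratios: Tuple[float, ...]) -> List[Box]:
    """Enumerate anchor boxes centred at (cx, cy).

    Every anchor has area (base_size * scale) ** 2 and aspect ratio
    h / w == ratio; an anchor-based detector predicts offsets and class
    scores relative to such boxes.
    """
    anchors = []
    for scale, ratio in itertools.product(scales, ratios):
        area = (base_size * scale) ** 2
        w = (area / ratio) ** 0.5
        h = w * ratio
        anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


# Three anchors (wide, square, tall) at one location; tall anchors
# (ratio > 1) suit upright pedestrians.
anchors = generate_anchors(100, 100, base_size=16, scales=(8,), ratios=(0.5, 1.0, 2.0))
```

Real detectors repeat this enumeration at every feature-map position and usually at several scales per level.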

Anchor-Free Models: Anchor-free models eliminate the necessity for predefined anchor boxes, instead directly predicting object locations and sizes. This approach resolves the complexities associated with anchor box design and offers improved adaptability to sparse object scenarios. Notable examples of anchor-free models include FCOS [26] and CenterNet [27].
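As an example of direct prediction without anchors, FCOS [26] treats each feature-map location that falls inside a ground-truth box as a candidate and regresses its distances to the four box sides, down-weighting off-centre locations with a centre-ness score. The sketch below computes these quantities for one location and one box; the function names are hypothetical, and parts of the full method, such as multi-level assignment, are omitted.

```python
import math
from typing import Optional, Tuple


def fcos_regression_target(px: float, py: float,
                           box: Tuple[float, float, float, float]
                           ) -> Optional[Tuple[float, float, float, float]]:
    """Distances (l, t, r, b) from location (px, py) to the sides of box.

    box is (x1, y1, x2, y2). Returns None when the location is not strictly
    inside the box, i.e. it is not a positive sample for this box.
    """
    x1, y1, x2, y2 = box
    l, t, r, b = px - x1, py - y1, x2 - px, y2 - py
    if min(l, t, r, b) <= 0:
        return None
    return l, t, r, b


def centerness(l: float, t: float, r: float, b: float) -> float:
    """FCOS centre-ness: 1.0 at the box centre, decaying towards the edges."""
    return math.sqrt((min(l, r) / max(l, r)) * (min(t, b) / max(t, b)))


target = fcos_regression_target(50, 100, (30, 40, 80, 200))  # (20, 60, 30, 100)
```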

Previous studies have highlighted the vulnerability of pedestrian detection models to adversarial examples. In this paper, we extend this line of work by demonstrating their susceptibility to camouflage backdoor attacks. Our objective is to construct a tainted dataset such that any pedestrian detection model subsequently trained on it is contaminated with the backdoor, irrespective of the detection technique employed.

2.2. Backdoor Attacks

Our research is focused on the issue of backdoor attacks in autonomous driving systems. During the development of autonomous driving systems, it is common practice to use third-party annotation services to label data images [20]. However, this process carries inherent risks, as suppliers may potentially introduce malicious alterations to the data. This situation can lead to the insertion of specific triggers into the data during model training, resulting in incorrect recognition by the model during real-world applications and thereby posing serious security concerns. It is important to emphasize that such attacks typically pursue two main objectives: concealment and attack effectiveness [7,13,14,19]. Specifically, the former aims to make the victim model perform similarly to a regular model under normal circumstances, making it difficult to distinguish from a standard model. The latter implies that, under specific trigger conditions, such as specific physical triggers, the model may fail to correctly detect objects, posing a threat to the functionality and safety of the autonomous driving system.

In contrast to prior research, which modified both the training images (e.g., by adding triggers) and their annotations, our approach is more covert: we manipulate only the training images themselves. Because the label information remains unchanged, the attack is less susceptible to detection, and the model continues to recognize objects correctly under normal circumstances. Additionally, we use image scaling functions as camouflage to embed the attack images into benign images so that they are almost imperceptible to the human eye, which significantly enhances the stealthiness of the backdoor attack.

3. Methodology

3.1. Overview

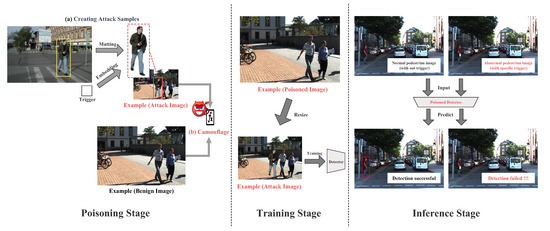

The camouflage backdoor attack against pedestrian detection, as shown in Figure 1, comprises three main stages: (i) the poisoning stage, (ii) the training stage, and (iii) the inference stage. During the poisoning stage, we constructed attack images with triggers and concealed them within benign images using an image scaling function, thereby generating poisoned images. In the training stage, these poisoned images were employed to train a poisoned pedestrian detection model. In the inference phase, pedestrian images with specific triggers were utilized to activate a backdoor, causing the pedestrian detection model to fail.

Figure 1.

The main pipeline of the camouflage backdoor attack. Our attack method consists of three main stages. In the first stage (poisoning stage), we extract pedestrian images from the dataset and embed triggers within them. Then, by applying image scaling techniques, we blend attack images with benign images to generate nearly imperceptible poisoned images. In the second stage (training stage), we train a pedestrian detector on a combination of the generated poisoned images and the remaining benign images, obtaining a poisoned detection model. In the final stage (inference stage), the adversary can activate the embedded backdoor by adding trigger patterns, thereby bypassing pedestrian detection (in our example, the ‘person’ with a specific trigger escapes detection by the pedestrian detection model).

3.2. Concepts and Attack Goal

To describe the process of a camouflage backdoor attack, we define the concepts involved in an attack, as listed in Table 1. The goal of the camouflage backdoor attack is to produce poisoned images that are deceptive to the point of being imperceptible to the human eye. We assume that adversaries can manipulate only a subset of legitimate images to create a poisoned training dataset and have no control over other training components, such as the model structure. This type of attack can occur in various scenarios where the training process is not fully controlled, such as when using third-party data, computing platforms, or models [29,30].

Table 1.

The concept terms and descriptions related to camouflage backdoor attacks.

Let $\mathcal{D} = \{(x_i, y_i)\}_{i=1}^{N}$ represent the benign images, where $x_i$ is the image of a pedestrian and $y_i$ is the ground-truth annotation of that pedestrian. For each annotation $y_i$, we have $y_i = (c_i, u_i, v_i, w_i, h_i)$, where $c_i$ denotes the ‘person’ class to which the object within the bounding box is assigned, $(u_i, v_i)$ are the center coordinates of the pedestrian in the image, $w_i$ is the width of the bounding box, and $h_i$ is the height of the bounding box. In pedestrian detection tasks, given the dataset $\mathcal{D}$, users train their pedestrian detector $f_\theta$ by

$$\min_{\theta} \sum_{(x_i, y_i) \in \mathcal{D}} \mathcal{L}\big(f_\theta(x_i), y_i\big),$$

where $\mathcal{L}$ is the loss function. In contrast to the standard pedestrian detection task, our objective during the inference stage is to enable adversaries to circumvent pedestrian detection by adding a trigger pattern $t$ to any pedestrian image $x$.
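As an illustration of the annotation tuple described above (class, center coordinates, width, height), the following Python sketch converts KITTI 2D labels, which store each box as left, top, right, and bottom pixel coordinates in fields 5 to 8 of a label line, into that form. The function and type names are hypothetical, and the two label lines are illustrative values in the KITTI label format, not data from our experiments.

```python
from typing import List, NamedTuple


class Annotation(NamedTuple):
    cls: str   # object class; KITTI calls the 'person' class "Pedestrian"
    cx: float  # x-coordinate of the box centre (pixels)
    cy: float  # y-coordinate of the box centre (pixels)
    w: float   # box width (pixels)
    h: float   # box height (pixels)


def parse_kitti_pedestrians(label_text: str) -> List[Annotation]:
    """Keep "Pedestrian" objects from a KITTI label file and convert their
    2D boxes from (left, top, right, bottom) to centre/size form."""
    annotations = []
    for line in label_text.strip().splitlines():
        fields = line.split()
        if not fields or fields[0] != "Pedestrian":
            continue
        left, top, right, bottom = map(float, fields[4:8])
        annotations.append(Annotation(
            cls=fields[0],
            cx=(left + right) / 2,
            cy=(top + bottom) / 2,
            w=right - left,
            h=bottom - top,
        ))
    return annotations


example = """\
Pedestrian 0.00 0 -0.20 712.40 143.00 810.73 307.92 1.89 0.48 1.20 1.84 1.47 8.41 0.01
Car 0.00 0 1.55 614.24 181.78 727.31 284.77 1.57 1.73 4.15 1.00 1.75 13.22 1.62
"""
anns = parse_kitti_pedestrians(example)
```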

3.3. Attack Image Crafting

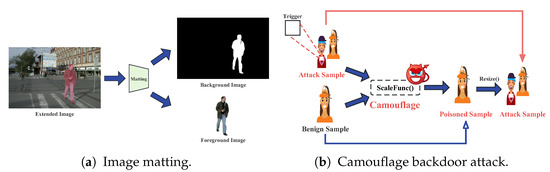

Prior research has taken different approaches to attacking DNN models. Some of these approaches involve modifying the training data by adding or updating triggers and annotations [31]. In contrast to these, our approach seeks a higher level of stealthiness. Specifically, we exclusively manipulated the training data themselves, avoiding any direct alterations to the data annotations. We selected several images related to human body poses and used a pre-trained image matting model to achieve the separation of pedestrian images from the background, as shown in Figure 2a.

Figure 2.

The process of crafting attack images. (a) illustrates the process of image matting, while (b) presents the principle of the camouflage backdoor attack.

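The input image $I$ can be expressed as a combination of the foreground ($F$) and background ($B$) based on the alpha matte $\alpha$ using the compositing equation:

$$I = \alpha F + (1 - \alpha) B,$$

where $\alpha \in [0, 1]$ is the per-pixel opacity of the foreground and the foreground $F$ is the pedestrian image.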
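The compositing equation can be evaluated per pixel with NumPy broadcasting, as in the minimal sketch below; the function name `composite` and the array layout (an `(H, W)` alpha matte and `(H, W, 3)` float images) are assumptions for illustration.

```python
import numpy as np


def composite(alpha: np.ndarray, fg: np.ndarray, bg: np.ndarray) -> np.ndarray:
    """Compositing equation I = alpha * F + (1 - alpha) * B.

    alpha: (H, W) matte with values in [0, 1]
    fg, bg: (H, W, 3) float images (foreground F and background B)
    """
    a = alpha[..., np.newaxis]  # broadcast the matte over colour channels
    return a * fg + (1.0 - a) * bg


h, w = 4, 5
fg = np.ones((h, w, 3))          # white foreground
bg = np.zeros((h, w, 3))         # black background
alpha = np.full((h, w), 0.25)    # 25% opaque foreground everywhere
image = composite(alpha, fg, bg)
```

Matting solves the inverse problem: given only the composite, it recovers the matte and the foreground.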

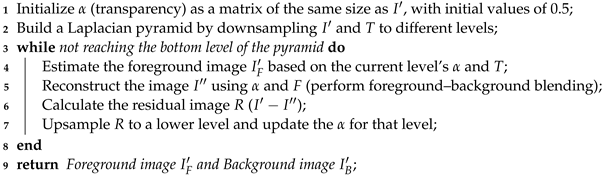

As shown in Algorithm 1, the image matting algorithm starts by initializing the transparency values (alpha) as a matrix with the same dimensions as the input image, with every entry set to 0.5. Then, a Laplacian pyramid is built by downsampling the image and the trimap to different levels. The iterative optimization then begins: we estimate the foreground image based on the current level’s alpha and trimap and, using the alpha values and the foreground estimate, reconstruct the image by foreground–background blending. The residual image is computed by subtracting the reconstructed image from the original image. The algorithm then upsamples the residual image to the next pyramid level and updates the alpha values accordingly. This process is repeated until the bottom level of the pyramid is reached. Finally, the algorithm outputs the final foreground image $F$ and background image $B$.
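One step of this loop, re-estimating alpha from the current foreground and background estimates and computing the residual, can be sketched as follows. This is not the authors' pyramid implementation: it swaps in the standard per-pixel least-squares estimate $\alpha = \frac{(I - B)\cdot(F - B)}{\lVert F - B \rVert^2}$ (clipped to $[0, 1]$) for the unspecified update rule, omits the pyramid levels, and assumes a trimap encoding of 0 for background, 255 for foreground, and any other value for unknown pixels. All function names are hypothetical.

```python
import numpy as np

BG, FG = 0, 255  # assumed trimap codes; any other value marks an unknown pixel


def init_alpha(image: np.ndarray) -> np.ndarray:
    """Alpha matrix with the image's spatial size, initialised to 0.5."""
    return np.full(image.shape[:2], 0.5)


def update_alpha(image, fg, bg, trimap, eps=1e-8):
    """Least-squares alpha per pixel given foreground/background estimates.

    Minimises ||I - (alpha * F + (1 - alpha) * B)||^2 for each pixel, then
    clips to [0, 1] and pins the known trimap regions.
    """
    diff = fg - bg
    num = np.sum((image - bg) * diff, axis=-1)
    den = np.sum(diff * diff, axis=-1) + eps  # eps avoids division by zero
    alpha = np.clip(num / den, 0.0, 1.0)
    alpha[trimap == BG] = 0.0
    alpha[trimap == FG] = 1.0
    return alpha


def residual(image, alpha, fg, bg):
    """Original image minus its foreground-background reconstruction."""
    a = alpha[..., np.newaxis]
    return image - (a * fg + (1.0 - a) * bg)


# Toy example: the middle (unknown) pixel has a true alpha of 0.3.
fg = np.ones((1, 3, 3))
bg = np.zeros((1, 3, 3))
true_alpha = np.array([[0.0, 0.3, 1.0]])
image = true_alpha[..., np.newaxis] * fg + (1 - true_alpha[..., np.newaxis]) * bg
trimap = np.array([[BG, 128, FG]])
alpha = update_alpha(image, fg, bg, trimap)
```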

| Algorithm 1: Image Matting Algorithm |
| --- |
| Input: image $I$, trimap $T$ (containing foreground, background, and unknown regions). Output: foreground image $F$, background image $B$. |

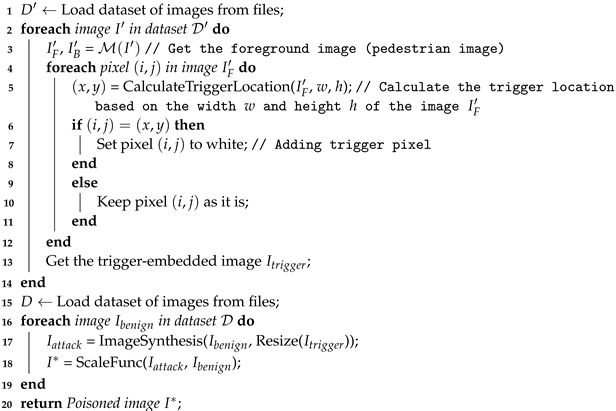

Next, we generate trigger-embedded images $F_t$ by adding the trigger to the foreground image $F$, and then fuse them with benign images $x$ to create attack images $x_a$. Specifically, we first load each trigger-embedded image and resize it to the desired dimensions and scale. It is then overlaid onto the benign image at a specific position, with adjustments to ensure proper alignment and transparency. The result combines both images so that $F_t$ appears to belong naturally within $x$, forming a visually cohesive composition. Finally, the poisoned images $x_p$ are obtained by inserting the attack images into the benign images using the image scaling function. The whole poisoning process is shown in Algorithm 2. Note that because trigger-embedded images may have different sizes, we resize each of them to match the dimensions of the original pedestrian bounding box when inserting it into a benign image, so that it is camouflaged effectively without raising suspicion.
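The overlay step, in which a trigger-embedded pedestrian is resized to a bounding box and alpha-blended into a benign image, can be sketched as below. The nearest-neighbour resize, the `(x1, y1, x2, y2)` integer box convention with exclusive right and bottom edges, and the function names are assumptions for illustration; a real pipeline would more likely call a library resize such as OpenCV's `cv2.resize`.

```python
import numpy as np


def resize_nearest(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbour resize of an (H, W) or (H, W, C) array."""
    in_h, in_w = img.shape[:2]
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return img[rows[:, np.newaxis], cols]


def paste_into_box(benign, fg, alpha, box):
    """Alpha-blend foreground `fg` (with matte `alpha`) into `benign` at `box`.

    box = (x1, y1, x2, y2) in integer pixels, x2/y2 exclusive; the foreground
    is resized to the box so it matches the original pedestrian's size.
    """
    x1, y1, x2, y2 = box
    h, w = y2 - y1, x2 - x1
    fg_r = resize_nearest(fg, h, w).astype(np.float64)
    a_r = resize_nearest(alpha, h, w)[..., np.newaxis]
    out = benign.astype(np.float64)  # astype returns a new array
    region = out[y1:y2, x1:x2]
    out[y1:y2, x1:x2] = a_r * fg_r + (1.0 - a_r) * region
    return out


benign = np.zeros((20, 20, 3))
fg = np.ones((4, 4, 3))
alpha = np.ones((4, 4))
attack = paste_into_box(benign, fg, alpha, (5, 5, 13, 15))
```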

3.4. Camouflage Backdoor Attack

In general, backdoor attacks have two primary objectives: (1) effectiveness and (2) stealthiness. To increase the concealment of the backdoor attack, we use image scaling for camouflage. Image scaling is a ubiquitous preprocessing step for DNN models, which typically require fixed-size inputs. However, Xiao et al. [18] found that this process enables a new class of adversarial attacks: the adversary can modify an original image in an unnoticeable way so that it becomes the desired adversarial image after downscaling. Quiring et al. [32] further adopted this technique to realize clean-label backdoor attacks on classification models. Motivated by this vulnerability, our camouflage backdoor attack imperceptibly perturbs benign images so that they turn into the attack images after downscaling, yielding poisoned images that retain the appearance of benign images. During training, once the image scaling step is applied, the content of these images no longer matches their unchanged annotations, thereby embedding the desired backdoor into the model.
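For reference, the scaling attack of Xiao et al. [18] can be written as a constrained optimization. The notation here is introduced for illustration: $x$ is the benign image, $x_a$ the attack image, $S(\cdot)$ the scaling function applied during preprocessing, and $\Delta$ the perturbation; the tolerance $\epsilon$ and the maximum pixel value $I_{\max}$ (e.g., 255) are parameters. This is a sketch of the general formulation, not necessarily the exact objective used in our implementation:

$$
\begin{aligned}
\min_{\Delta}\ & \lVert \Delta \rVert_2^2 \\
\text{s.t.}\ & \lVert S(x + \Delta) - x_a \rVert_\infty \le \epsilon \cdot I_{\max}, \\
& 0 \le x + \Delta \le I_{\max}.
\end{aligned}
$$

The poisoned image is then $x + \Delta$. As described by Xiao et al., common scaling algorithms are separable linear maps, so per channel $S(x) = C_L\, x\, C_R$ for fixed coefficient matrices $C_L$ and $C_R$, which turns the problem into a quadratic program.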

| Algorithm 2: Creating Poisoned Images Algorithm |
| --- |
| Input: extension dataset, pedestrian dataset, image matting model, trigger pixel. Output: poisoned image. |

Our goal is to generate a poisoned image $x_p$ from the benign image $x$ and the attack image $x_a$. The poisoned image $x_p$ is visually indistinguishable from $x$; however, after scaling, it becomes the malicious attack image $x_a$, as shown in Figure 2b. During the inference stage, the adversary can simply insert the trigger into pedestrian images at a designated location to activate the backdoor, as depicted in Figure 1. The model then recognizes the trigger and gives a false prediction (e.g., no persons), potentially leading to significant safety concerns.
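The core idea can be illustrated with nearest-neighbour downscaling, where each output pixel copies exactly one source pixel: overwriting only the sampled pixels of the benign image makes its downscaled version equal to the attack image while leaving most pixels untouched. The Python sketch below uses its own `nn_downscale` function so that the sampled positions are known; real libraries (for example OpenCV or Pillow) may sample slightly different positions, which an actual attack would have to match, and bilinear or bicubic scaling require the optimization-based approach of Xiao et al. [18] instead. The function names are hypothetical.

```python
import numpy as np


def sample_indices(in_size: int, out_size: int) -> np.ndarray:
    """Source index used for each output index by nn_downscale."""
    return np.arange(out_size) * in_size // out_size


def nn_downscale(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbour downscaling: output (i, j) copies one source pixel."""
    rows = sample_indices(img.shape[0], out_h)
    cols = sample_indices(img.shape[1], out_w)
    return img[rows[:, np.newaxis], cols]


def craft_poisoned(benign: np.ndarray, attack: np.ndarray) -> np.ndarray:
    """Copy of `benign` whose nn_downscale to attack's size equals `attack`.

    Only the sampled pixels are overwritten; for an integer factor k this
    changes at most 1 / k**2 of the pixels, which is why the change can be
    hard to notice at full resolution.
    """
    out_h, out_w = attack.shape[:2]
    rows = sample_indices(benign.shape[0], out_h)
    cols = sample_indices(benign.shape[1], out_w)
    poisoned = benign.copy()
    poisoned[rows[:, np.newaxis], cols] = attack
    return poisoned


rng = np.random.default_rng(0)
benign = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)
attack = rng.integers(0, 256, size=(16, 16, 3), dtype=np.uint8)
poisoned = craft_poisoned(benign, attack)
changed = np.any(poisoned != benign, axis=-1).mean()  # fraction of altered pixels
```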

4. Experiments

4.1. Experimental Setup

4.1.1. Model and Dataset

We conduct comprehensive experiments to verify the efficacy of our proposed backdoor attack on state-of-the-art pedestrian detection models. To illustrate the universality of backdoor attacks across various pedestrian detection algorithms, we have selected four representative methods from distinct categories: Faster R-CNN [21], Cascade R-CNN [28], RetinaNet [25], and FCOS [26].

We assess the effectiveness of our proposed attack method using the KITTI dataset [33], a well-established benchmark in computer vision and autonomous driving research. This dataset comprises real-world scene images captured in urban environments, with annotations that include the precise positions, dimensions, and orientations of both vehicles and pedestrians. For our experiments, we use only the pedestrian annotations of the KITTI 2D object detection benchmark to train and evaluate our method.

4.1.2. Evaluation Metrics

To evaluate the impact of our attack on pedestrian detectors, we use the COCO-style [34] mean average precision (mAP) and mean average recall (mAR) as our evaluation metrics. In addition, we report AP50 and AP75, i.e., the average precision at IoU thresholds of 0.5 and 0.75, respectively.
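The IoU criterion underlying these metrics can be sketched in Python as follows. The matching rule (greedy, one detection per ground-truth box) and the averaging of recall over the COCO IoU thresholds 0.50, 0.55, ..., 0.95 are a simplified, single-image illustration of how mAR is formed, not a substitute for the official COCO evaluation (for example, `COCOeval` in `pycocotools`), which also handles scores, detection limits, and accumulation across images. Function names are hypothetical.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)

# COCO-style IoU thresholds 0.50:0.05:0.95 (AP50 and AP75 use single thresholds).
COCO_IOU_THRESHOLDS = [0.5 + 0.05 * i for i in range(10)]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def recall_at(gts: Sequence[Box], dets: Sequence[Box], thr: float) -> float:
    """Fraction of ground-truth boxes matched by a detection with IoU >= thr.

    `dets` should be sorted by descending confidence; each detection is
    greedily matched to the unmatched ground truth it overlaps most.
    """
    matched = set()
    for d in dets:
        best, best_iou = None, thr
        for gi, g in enumerate(gts):
            if gi in matched:
                continue
            v = iou(d, g)
            if v >= best_iou:
                best, best_iou = gi, v
        if best is not None:
            matched.add(best)
    return len(matched) / len(gts) if gts else 0.0


def mean_recall(gts: Sequence[Box], dets: Sequence[Box]) -> float:
    """Recall averaged over the COCO IoU thresholds (single image)."""
    return sum(recall_at(gts, dets, t) for t in COCO_IOU_THRESHOLDS) / len(COCO_IOU_THRESHOLDS)


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(0, 0, 10, 9)]  # IoU 0.9 with the first box; the second box is missed
```

A pedestrian whose detection disappears when the trigger is present lowers recall at every threshold, which is why mAR is a natural indicator of the attack.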

4.1.3. Implementation Details

For simplicity, we use a white patch as the trigger pattern and fix the poisoning rate at 5%. Following the configuration in [35], we set the trigger size for each object to 1% of the area of its ground-truth bounding box; that is, the trigger spans 10% of the box’s width and 10% of its height and is positioned at the box center. We use four pedestrian detection models, namely Faster R-CNN, Cascade R-CNN, RetinaNet, and FCOS, and carry out all training and evaluation on the KITTI dataset. The detectors are trained for 12 epochs with a batch size of 8 using Stochastic Gradient Descent (SGD) with a learning rate of 0.001, a momentum of 0.9, and a weight decay of 0.0001. All experiments are run on a server equipped with two NVIDIA GeForce RTX 3090 GPUs (NVIDIA Corporation, Beijing, China).
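The trigger geometry and poisoning rate described above can be expressed as the following Python sketch. The helper names, the rounding of the patch size to whole pixels, the use of a seeded random sample to choose which 5% of images to poison, and the `TRAIN_CONFIG` dictionary layout are assumptions for illustration; only the numeric values come from the setup described in this section.

```python
import random
from typing import List, Tuple

import numpy as np

# Values reported in this section; the dictionary layout itself is illustrative.
TRAIN_CONFIG = dict(epochs=12, batch_size=8, optimizer="SGD",
                    lr=0.001, momentum=0.9, weight_decay=0.0001)
POISON_RATE = 0.05   # fraction of training images that are poisoned
TRIGGER_FRAC = 0.10  # trigger side length relative to the box side (1% of area)


def trigger_box(box: Tuple[int, int, int, int],
                frac: float = TRIGGER_FRAC) -> Tuple[int, int, int, int]:
    """Trigger region centred in box, spanning `frac` of its width and height."""
    x1, y1, x2, y2 = box
    tw = max(1, round((x2 - x1) * frac))
    th = max(1, round((y2 - y1) * frac))
    tx1 = round((x1 + x2) / 2 - tw / 2)
    ty1 = round((y1 + y2) / 2 - th / 2)
    return tx1, ty1, tx1 + tw, ty1 + th


def stamp_white_patch(img: np.ndarray, box: Tuple[int, int, int, int]) -> np.ndarray:
    """Copy of a uint8 image with a white patch trigger at the box centre."""
    out = img.copy()
    tx1, ty1, tx2, ty2 = trigger_box(box)
    out[ty1:ty2, tx1:tx2] = 255
    return out


def select_poison_indices(n_images: int, rate: float = POISON_RATE,
                          seed: int = 0) -> List[int]:
    """Randomly choose round(rate * n_images) image indices to poison."""
    k = round(n_images * rate)
    return sorted(random.Random(seed).sample(range(n_images), k))


pedestrian_box = (0, 0, 100, 200)                  # (x1, y1, x2, y2)
patch = trigger_box(pedestrian_box)                # (45, 90, 55, 110)
img = stamp_white_patch(np.zeros((200, 100, 3), dtype=np.uint8), pedestrian_box)
poison_ids = select_poison_indices(1000)           # 50 indices
```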

4.2. Experimental Results and Analysis

To validate the stealthiness of the proposed camouflage backdoor attack, we trained pedestrian detection models separately on the benign and the poisoned datasets and then evaluated them on the benign testing dataset. Table 2 compares the performance of the poisoned model trained on attack samples with that of the regular benign model trained on benign samples. The table shows that although the poisoned model was trained on attack images with triggers, its performance on the benign testing dataset is similar to that of the benign model. This indicates that the poisoned model is highly stealthy: when the backdoor trigger is not activated, its performance is nearly identical to that of the normal model.

Table 2.

The performance (%) of methods on the benign testing dataset.

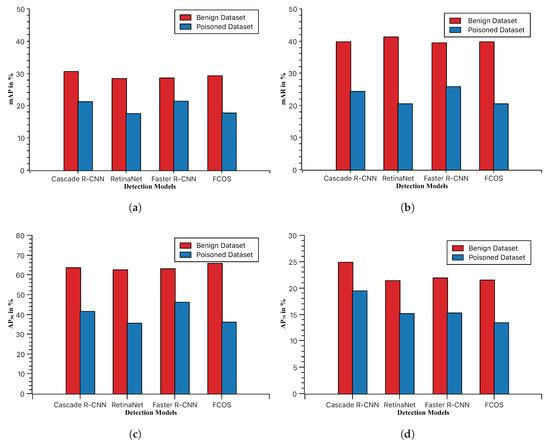

To validate the effectiveness of the proposed attack, we added triggers to the pedestrians in the benign testing dataset to create a poisoned testing dataset, and then evaluated our trained poisoned models on both the benign and the poisoned datasets. Figure 3 presents the results of the different poisoned models in terms of mAP, mAR, AP50, and AP75. The figure shows that the poisoned models trained with the camouflage backdoor attack perform worse on all four metrics on the poisoned dataset with triggers. In particular, the mAR metric drops by up to approximately 20%. This means that when pedestrians carrying triggers appear in autonomous driving scenarios, the model’s backdoor is activated, allowing these pedestrians to bypass the model’s detection and thereby posing security concerns.

Figure 3.

The performance (%) comparison of poisoned models on benign and poisoned datasets. (a) mAP performance of poisoned models. (b) mAR performance of poisoned models. (c) AP50 performance of poisoned models. (d) AP75 performance of poisoned models.

5. Conclusions

In this paper, we design and implement a backdoor attack against pedestrian detection systems in autonomous driving. We propose a novel camouflage backdoor attack that efficiently and stealthily poisons the training images and can affect different types of mainstream pedestrian detection algorithms. Evaluations on a public dataset demonstrate that a model poisoned by our camouflage backdoor attack performs comparably to a normal model when the backdoor is not activated. Once the backdoor is triggered, however, performance declines noticeably; in particular, the mAR metric drops by up to approximately 20%. These results confirm the effectiveness and stealthiness of the attack method.

Author Contributions

Validation, Y.G.; Investigation, X.C., Q.L. and J.L. (Jianhua Li); Writing—original draft, Y.W.; Writing—review & editing, Y.C. and Y.X.; Supervision, E.T. and Z.H.; Funding acquisition, J.L. (Jiqiang Liu). All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Fundamental Research Funds for the Central Universities under Grant No. 2023JBMC055, the National Natural Science Foundation of China under Grant No. 61972025, and the ‘Top the List and Assume Leadership’ project in Shijiazhuang, Hebei Province, China.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available in The KITTI Vision Benchmark Suite at https://doi.org/10.1177/0278364913491297 [33].

Conflicts of Interest

Author Jianhua Li was employed by the company Zhongguancun Smart City Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Deng, Y.; Zhang, T.; Lou, G.; Zheng, X.; Jin, J.; Han, Q.L. Deep learning-based autonomous driving systems: A survey of attacks and defenses. IEEE Trans. Ind. Inform. 2021, 17, 7897–7912.

2. Bogdoll, D.; Nitsche, M.; Zöllner, J.M. Anomaly detection in autonomous driving: A survey. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4488–4499.

3. Gao, C.; Wang, G.; Shi, W.; Wang, Z.; Chen, Y. Autonomous driving security: State of the art and challenges. IEEE Internet Things J. 2021, 9, 7572–7595.

4. Chi, C.; Zhang, S.; Xing, J.; Lei, Z.; Li, S.Z.; Zou, X. PedHunter: Occlusion robust pedestrian detector in crowded scenes. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 10639–10646.

5. Chen, L.; Lin, S.; Lu, X.; Cao, D.; Wu, H.; Guo, C.; Liu, C.; Wang, F.Y. Deep neural network based vehicle and pedestrian detection for autonomous driving: A survey. IEEE Trans. Intell. Transp. Syst. 2021, 22, 3234–3246.

6. Khan, A.H.; Nawaz, M.S.; Dengel, A. Localized Semantic Feature Mixers for Efficient Pedestrian Detection in Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 5476–5485.

7. Liu, Y.; Ma, X.; Bailey, J.; Lu, F. Reflection backdoor: A natural backdoor attack on deep neural networks. In Proceedings of the Computer Vision—ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part X 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 182–199.

8. Wu, Y.; Song, M.; Li, Y.; Tian, Y.; Tong, E.; Niu, W.; Jia, B.; Huang, H.; Li, Q.; Liu, J. Improving convolutional neural network-based webshell detection through reinforcement learning. In Proceedings of the Information and Communications Security: 23rd International Conference, ICICS 2021, Chongqing, China, 19–21 November 2021; Proceedings, Part I 23. Springer: Berlin/Heidelberg, Germany, 2021; pp. 368–383.

9. Ge, Y.; Wang, Q.; Zheng, B.; Zhuang, X.; Li, Q.; Shen, C.; Wang, C. Anti-distillation backdoor attacks: Backdoors can really survive in knowledge distillation. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual Event, 20–24 October 2021; pp. 826–834.

10. Wang, Z.; Wang, B.; Zhang, C.; Liu, Y.; Guo, J. Robust Feature-Guided Generative Adversarial Network for Aerial Image Semantic Segmentation against Backdoor Attacks. Remote Sens. 2023, 15, 2580.

11. Ye, Z.; Yan, D.; Dong, L.; Deng, J.; Yu, S. Stealthy backdoor attack against speaker recognition using phase-injection hidden trigger. IEEE Signal Process. Lett. 2023, 30, 1057–1061.

12. Zeng, Y.; Tan, J.; You, Z.; Qian, Z.; Zhang, X. Watermarks for Generative Adversarial Network Based on Steganographic Invisible Backdoor. In Proceedings of the 2023 IEEE International Conference on Multimedia and Expo, Brisbane, Australia, 10–14 July 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1211–1216.

13. Jiang, L.; Ma, X.; Chen, S.; Bailey, J.; Jiang, Y.G. Black-box adversarial attacks on video recognition models. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 864–872.

14. Kiourti, P.; Wardega, K.; Jha, S.; Li, W. TrojDRL: Evaluation of backdoor attacks on deep reinforcement learning. In Proceedings of the 2020 57th ACM/IEEE Design Automation Conference, Virtual Event, 20–24 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–6.

15. Bagdasaryan, E.; Shmatikov, V. Blind backdoors in deep learning models. In Proceedings of the 30th USENIX Security Symposium, Vancouver, BC, Canada, 11–13 August 2021; pp. 1505–1521.

16. Chen, K.; Meng, Y.; Sun, X.; Guo, S.; Zhang, T.; Li, J.; Fan, C. BadPre: Task-agnostic backdoor attacks to pre-trained NLP foundation models. arXiv 2021, arXiv:2110.02467.

17. Gan, L.; Li, J.; Zhang, T.; Li, X.; Meng, Y.; Wu, F.; Yang, Y.; Guo, S.; Fan, C. Triggerless backdoor attack for NLP tasks with clean labels. arXiv 2021, arXiv:2111.07970.

18. Xiao, Q.; Chen, Y.; Shen, C.; Chen, Y.; Li, K. Seeing is not believing: Camouflage attacks on image scaling algorithms. In Proceedings of the 28th USENIX Security Symposium, Santa Clara, CA, USA, 14–16 August 2019; pp. 443–460.

19. Li, Y.; Li, Y.; Wu, B.; Li, L.; He, R.; Lyu, S. Invisible backdoor attack with sample-specific triggers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 16463–16472.

20. Han, X.; Xu, G.; Zhou, Y.; Yang, X.; Li, J.; Zhang, T. Physical backdoor attacks to lane detection systems in autonomous driving. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10–14 October 2022; pp. 2957–2968.

21. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99.

22. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

23. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

24. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision—ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

25. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

26. Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully Convolutional One-Stage Object Detection. arXiv 2019, arXiv:1904.01355.

27. Zhou, X.; Wang, D.; Krähenbühl, P. Objects as Points. arXiv 2019, arXiv:1904.07850.

28. Cai, Z.; Vasconcelos, N. Cascade R-CNN: High Quality Object Detection and Instance Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1483–1498.

29. IARPA. TrojAI: Trojans in Artificial Intelligence. 2021. Available online: https://www.iarpa.gov/index.php/research-programs/trojai (accessed on 1 September 2023).

30. M14 Intelligence. Autonomous Vehicle Data Annotation Market Analysis. 2020. Available online: https://www.researchandmarkets.com/reports/4985697/autonomous-vehicledata-annotation-market-analysis (accessed on 1 September 2023).

31. Luo, C.; Li, Y.; Jiang, Y.; Xia, S.T. Untargeted backdoor attack against object detection. In Proceedings of the ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing, Rhodes Island, Greece, 4–9 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–5.

32. Quiring, E.; Rieck, K. Backdooring and poisoning neural networks with image-scaling attacks. In Proceedings of the 2020 IEEE Security and Privacy Workshops, Virtual, 18–20 May 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 41–47.

33. Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

34. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the Computer Vision—ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V 13. Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

35. Li, Y.; Zhong, H.; Ma, X.; Jiang, Y.; Xia, S.T. Few-shot backdoor attacks on visual object tracking. arXiv 2022, arXiv:2201.13178.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).