Advanced Meteorological Hazard Defense Capability Assessment: Addressing Sample Imbalance with Deep Learning Approaches

Abstract

1. Introduction

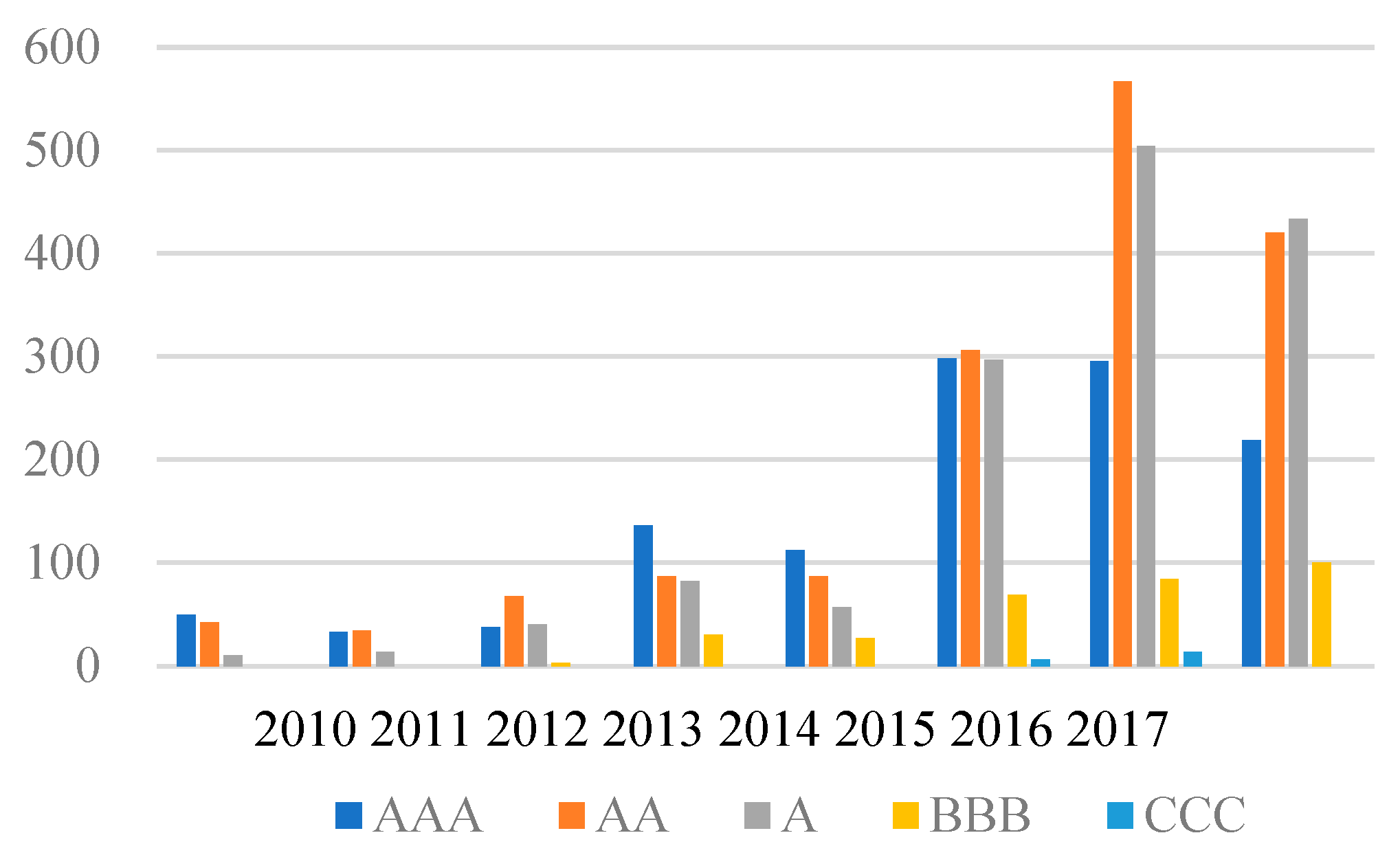

- (1) The capability levels of weather disaster emergency response agencies are unevenly distributed, and current loss functions tend to undervalue agencies with lower capabilities. For instance, of the 1370 agencies whose capability evaluation results were released by the National Meteorological Administration in 2017, 1249 were rated grade A or above and only 121 scored below grade A, a clearly unbalanced distribution of capability levels. When a deep learning model is trained with the current loss function, the few low-grade agencies may therefore be misclassified as high-grade ones at little cost: based on the 2017 data, even if all 1370 agencies were ranked grade A or above, the misclassification rate would be only 8.8% (121/1370).

- (2) Owing to uneven attention, differences in how difficult it is to evaluate institutional meteorological disaster response capability, and the limitations of the current loss function, it is hard to make the evaluation model focus on the entities that are difficult to evaluate. The essence of capability evaluation is to identify agencies with weak capabilities so that specific, tailored supervision can be applied [19]. However, evaluation bodies do not allocate attention uniformly across assessment outcomes. The capability evaluation model can readily infer the capability level of most agencies from indicators such as institution size and qualifications, but for a minority of entities the capability level is hard to determine from their competency attributes. Moreover, it is precisely the low-capability and hard-to-evaluate agencies that draw the most attention in actual management. The standard loss function used in current machine learning models for capability evaluation adapts poorly to imbalanced data: it emphasizes easy-to-identify samples over difficult ones, which undermines the model's generalization performance [20].
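The arithmetic behind point (1) can be checked directly. The short Python sketch below uses only the 2017 counts quoted above and assumes that "loss" means the misclassification rate of a degenerate classifier that assigns every agency to grade A or above.

```python
# Counts from the 2017 capability evaluation results quoted above.
total_agencies = 1370
low_grade = 121                       # agencies rated below grade A
high_grade = total_agencies - low_grade

# A degenerate model that rates every agency "A or above" gets only the
# low-grade agencies wrong, and it never identifies any of them.
error_rate = low_grade / total_agencies
accuracy = high_grade / total_agencies
low_grade_recall = 0 / low_grade      # no low-grade agency is detected

print(f"high-grade agencies: {high_grade}")
print(f"error rate: {error_rate:.1%}, accuracy: {accuracy:.1%}")
print(f"recall on low-grade agencies: {low_grade_recall:.0%}")
```

An accuracy above 91% combined with zero recall on the minority class illustrates why accuracy alone can be misleading on data this imbalanced.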

- (1) Weighted loss function: embedding a weighted loss function directs the model to pay extra attention to the scarce low-capability agencies. Given the uneven capability distribution among weather disaster emergency response agencies, the traditional loss function can be weighted by category [23]. This increases the loss value of the sparse low-capability samples, so their misclassification is penalized more heavily, and consequently reduces the practical losses caused by misjudging low-capability agencies [24].

- (2) Adaptive focal loss function: to focus on meteorological disaster response agencies whose capability levels are ambiguous, an adaptive focal loss function is constructed. Built on the trend of each agency's classification error during model training, this function directs the evaluation model toward the agencies that are hardest to evaluate. In principle, the focal loss corrects the imbalance between high- and low-capability samples throughout training and may therefore outperform earlier methods: its scaling factor automatically decreases the weight of easily classified samples as training proceeds, so that the hard-to-identify low-capability entities receive more attention.

- (3) Comprehensive WAFL loss construction: combining the two loss functions above, a Weighted Adaptive Focal Loss (WAFL) function is proposed that accounts for both misclassification scenarios described earlier. Analytically, merging the weighted and focal losses improves the model both at the sample level and during the training phase. This combination is expected to be particularly beneficial for gauging the capability of entities in the water resources sector and for appraising risks linked to engineering quality.

- (4) WAFL-based deep learning algorithm: building on the WAFL loss function, this paper examines the forward computation, error propagation, and parameter update mechanisms of the deep neural network, and uses the resulting WAFL-based deep learning algorithm to construct a robust capability evaluation model.
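To make the combination in points (1)–(3) concrete, here is a minimal NumPy sketch. It assumes the per-sample loss written in Algorithm 1, −α_i s_i y_iᵀ log p_i, and the category weight α_c = (N − N_c)/N computed by `weight_dict` in Appendix A; the function names `class_weights` and `wafl_loss` are illustrative, not taken from the paper.

```python
import numpy as np

def class_weights(y_onehot):
    """Category weights alpha_c = (N - N_c) / N, as in weight_dict (Appendix A).

    Rare categories get weights close to 1, common ones close to 0.
    """
    y = np.asarray(y_onehot, dtype=float)
    n = y.shape[0]
    return (n - y.sum(axis=0)) / n

def wafl_loss(p, y_onehot, alpha, s, eps=1e-12):
    """Batch mean of -alpha_i * s_i * y_i^T log(p_i).

    p: (batch, classes) predicted probabilities; y_onehot: one-hot labels;
    alpha: per-category weights; s: per-sample adaptive focus factors.
    With alpha = 1 and s = 1 this reduces to ordinary cross-entropy.
    """
    p = np.clip(np.asarray(p, dtype=float), eps, 1.0)   # avoid log(0)
    y = np.asarray(y_onehot, dtype=float)
    alpha_i = y @ np.asarray(alpha, dtype=float)         # weight of each sample's category
    ce = -(y * np.log(p)).sum(axis=1)                    # per-sample cross-entropy
    return float(np.mean(alpha_i * np.asarray(s, dtype=float) * ce))

# Three majority-category samples and one minority-category sample.
y = np.array([[1, 0], [1, 0], [1, 0], [0, 1]])
p = np.full((4, 2), 0.5)                                 # an uninformed model
alpha = class_weights(y)                                 # [0.25, 0.75]
print(alpha, wafl_loss(p, y, alpha, s=np.ones(4)))
```

Under this uninformed model, the single minority sample contributes as much to the loss (0.75 ln 2) as the three majority samples combined, which is the intended effect of category weighting; the adaptive factor `s` then rescales each sample individually.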

2. Materials and Methods

- (1) Simple samples: samples whose ability level the model can easily discern, i.e., samples whose predicted label values closely match the true values. The closer the error is to zero, the simpler the sample.

- (2) Difficult samples: samples whose ability level the model finds hard to determine correctly, i.e., samples whose predicted label values differ significantly from the true values. The larger the error, the more difficult the sample.

- (3) Anomalous samples: extreme cases among the difficult samples that deviate so far from the overall data distribution that not even the optimal model can classify them correctly.
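The three sample types can be illustrated with a hypothetical rule applied to each sample's error history. The sketch below measures error as the sum of absolute differences between the one-hot label and the predicted probabilities (as the code in Appendix A does); the thresholds `easy_tol` and `anomaly_tol` are illustrative assumptions, since the text gives no numeric cut-offs.

```python
import numpy as np

def sample_error(p, y_onehot):
    """Sum of |y - p| per sample (the error used in Appendix A); ranges 0 to 2."""
    return np.abs(np.asarray(y_onehot, dtype=float) - np.asarray(p, dtype=float)).sum(axis=-1)

def categorize(error_history, easy_tol=0.2, anomaly_tol=1.5):
    """Label samples 'simple', 'difficult' or 'anomalous' from an error history.

    error_history: (epochs, samples). easy_tol and anomaly_tol are hypothetical
    thresholds. A sample whose error never falls below anomaly_tol is treated as
    anomalous; otherwise it is simple if its latest error is below easy_tol.
    """
    history = np.asarray(error_history, dtype=float)
    labels = np.where(history[-1] < easy_tol, "simple", "difficult").astype(object)
    labels[(history >= anomaly_tol).all(axis=0)] = "anomalous"
    return labels

# Hypothetical errors of three samples over two epochs (rows = epochs).
history = np.array([[0.8, 1.4, 1.9],
                    [0.1, 1.2, 2.0]])
print(sample_error([0.95, 0.03, 0.02], [1, 0, 0]))
print(categorize(history))
```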

2.1. Loss Function Construction

2.1.1. Category-Weighted Loss Function

2.1.2. Adaptive Focus Loss Function Construction

- (1) The loss associated with the sample has already been nearly minimized as the number of training rounds has increased.

- (2) The sample is anomalous, and the model is unable to learn its correct distribution. In both scenarios, the value of the adaptive focus factor decreases, which reduces the sample's loss value and thus achieves the goal of reducing the loss contribution of simple and anomalous samples.
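A minimal sketch of how such a factor can behave, assuming the update implemented in Appendix A: s_{e+1} = ρ s_e + (1 − ρ) |err_e − err_{e−1}|, where ρ is the correction factor (0.5 in the final settings) and err_e is the sample's error at epoch e. The function name and the error trajectories are hypothetical.

```python
import numpy as np

def update_focus_factor(s_prev, err_curr, err_prev, rho=0.5):
    """One epoch of the adaptive focus factor update (assumed from Appendix A).

    s_new = rho * s_prev + (1 - rho) * |err_curr - err_prev|
    If a sample's error stops changing, the second term vanishes and s decays
    geometrically toward 0, shrinking that sample's share of the loss.
    """
    diff = np.abs(np.asarray(err_curr, dtype=float) - np.asarray(err_prev, dtype=float))
    return rho * np.asarray(s_prev, dtype=float) + (1.0 - rho) * diff

# Hypothetical per-epoch errors for three samples (rows = epochs):
# column 0 is already learned, column 1 is an anomaly the model never fits,
# column 2 is a difficult sample whose error is still falling.
errors = np.array([
    [0.05, 1.9, 1.6],
    [0.05, 1.9, 1.2],
    [0.05, 1.9, 0.8],
    [0.05, 1.9, 0.4],
])
s = np.ones(3)                      # every sample starts with s = 1
for e in range(1, len(errors)):
    s = update_focus_factor(s, errors[e], errors[e - 1])
print(s)                            # the simple and anomalous samples decay fastest
```

Both scenarios (1) and (2) produce a flat error curve, so the same rule down-weights them, while the sample that is still being learned keeps a larger factor.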

2.1.3. Weighted Adaptive Focus Loss Function

2.2. Deep Learning Model for Evaluating Weather Disaster Emergency Response Capacity

2.2.1. Forward Calculation

- (1) The capability feature vector of a sample serves as the input to the deep neural network. The first hidden layer computes the input to the first layer's activation function, as given in Equation (9).

- (2) This activation input is then passed through the activation function, which applies a non-linear transformation, as given in Equation (10).

- (3) Repeating these calculations layer by layer yields the output of the last hidden layer, from which the predicted value is then computed at the output layer.
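The forward computation can be sketched in NumPy. This assumes the layer rule z_l = W_l r_{l−1} + b_l, r_l = g_l(z_l) used in Algorithm 1 (the bias b_l follows the code in Appendix A), ReLU hidden layers with a softmax output as in the final hyperparameter settings, layer widths 7, 5, 5, 3, 5, and the 14 capability indicators as input. The weights and the input vector are random placeholders, so the printed output is only illustrative.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    z = z - np.max(z)               # shift for numerical stability; result unchanged
    e = np.exp(z)
    return e / e.sum()

def forward(x, weights, biases):
    """Forward pass for one sample.

    z_l = W_l r_{l-1} + b_l and r_l = g_l(z_l), with r_0 = x;
    g_l is ReLU for hidden layers and softmax for the output layer.
    Returns the pre-activations, the activations and the prediction p.
    """
    rs, zs = [np.asarray(x, dtype=float)], []
    n_layers = len(weights)
    for l in range(n_layers):
        z = weights[l] @ rs[-1] + biases[l]
        zs.append(z)
        rs.append(softmax(z) if l == n_layers - 1 else relu(z))
    return zs, rs, rs[-1]

rng = np.random.default_rng(0)
sizes = [14, 7, 5, 5, 3, 5]         # 14 indicators in, 5 output classes (assumed)
weights = [rng.normal(0.0, 0.1, (n_out, n_in)) for n_in, n_out in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n_out) for n_out in sizes[1:]]
x = rng.random(14)                  # hypothetical normalized indicator vector
zs, rs, p = forward(x, weights, biases)
print(p, p.sum())
```

Keeping the pre-activations `zs` and activations `rs` is deliberate: backpropagation needs both, through g_l'(z_l) and r_{l−1}ᵀ.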

2.2.2. Error Backpropagation

2.2.3. Network Parameter Update

Algorithm 1. Training algorithm of the deep neural network model based on the weighted adaptive focus loss function.

```
Input:  sample set S' of meteorological disaster emergency response capability features;
        error limit E; maximum number of iterations K; learning rate lr; batch size B
Output: optimized weights and biases of the capability feature extraction model

Initialize: epoch = 0, converged = False
while epoch < K and not converged:
    for batch in S':                                # extract a batch of weather emergency agency samples from S'
        Loss = 0                                    # initialize the total loss of the batch
        for i in range(B):
            for l in range(1, Layers):              # hidden layers, Equations (9)–(11); r_0 is sample i's features
                z_l = dot(W_l, r_(l-1))
                r_l = g_l(z_l)
            z_Layers = dot(W_Layers, r_(Layers-1))
            p_i = g_Layers(z_Layers)                # output layer, Equation (12)
            L_(α-Adaptive-Focal)(p_i, y_i) = -α_i s_i y_i^T log(p_i)     # loss, Equation (8)
            Loss += L_(α-Adaptive-Focal)(p_i, y_i)
        L_(α-Adaptive-Focal)(p_batch, y_batch) = Loss / B                # average loss of the batch
        if L_(α-Adaptive-Focal)(p_batch, y_batch) <= E:                  # error within E: stop training
            converged = True
            break
        δ_Layers = α_batch s_batch (p_batch - y_batch)                   # ∂L/∂z_Layers, Equation (15)
        for l in range(Layers-1, 0, -1):                                 # backpropagation, Equations (14)–(16)
            δ_l = (W_(l+1)^T δ_(l+1)) * g_l'(z_l)                        # ∂L/∂z_l, Equation (14)
        for l in range(Layers, 0, -1):
            ∂L_(α-Adaptive-Focal)/∂W_l = δ_l r_(l-1)^T                   # gradient of layer-l weights, Equation (16)
            W_l = W_l - lr × ∂L_(α-Adaptive-Focal)/∂W_l                  # weight update, Equation (17)
    epoch += 1
```
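For readers who want to run the update rules, the following NumPy sketch performs the batch forward pass, the WAFL loss, backpropagation and a plain gradient step for a fully connected ReLU/softmax network. It uses a row-per-sample batch layout (z = r W_lᵀ + b_l), includes biases as in Appendix A, and applies a plain gradient step as in Algorithm 1 rather than the Adam optimizer of the final settings. `train_step` and the toy data are hypothetical.

```python
import numpy as np

def train_step(X, Y, alpha_i, s_i, weights, biases, lr=0.005):
    """One gradient step on the batch loss mean(-alpha_i * s_i * y_i^T log p_i).

    X: (B, d) features; Y: (B, C) one-hot labels; alpha_i, s_i: (B,) per-sample
    category weights and adaptive focus factors. weights[l] has shape
    (n_out, n_in). Hidden layers use ReLU, the output layer softmax.
    Parameters are updated in place; the pre-update loss is returned.
    """
    B = X.shape[0]
    rs, zs = [X], []
    for l, (W, b) in enumerate(zip(weights, biases)):
        z = rs[-1] @ W.T + b
        zs.append(z)
        if l == len(weights) - 1:
            e = np.exp(z - z.max(axis=1, keepdims=True))
            rs.append(e / e.sum(axis=1, keepdims=True))
        else:
            rs.append(np.maximum(z, 0.0))
    P = rs[-1]
    w = alpha_i * s_i
    loss = np.mean(w * -(Y * np.log(np.clip(P, 1e-12, 1.0))).sum(axis=1))

    # Softmax + cross-entropy: dLoss/dz_out = w_i (p_i - y_i) / B
    delta = w[:, None] * (P - Y) / B
    for l in range(len(weights) - 1, -1, -1):
        grad_W = delta.T @ rs[l]
        grad_b = delta.sum(axis=0)
        if l > 0:
            # Propagate with the pre-update W_l; the ReLU derivative is 1 where z > 0
            delta = (delta @ weights[l]) * (zs[l - 1] > 0)
        weights[l] -= lr * grad_W
        biases[l] -= lr * grad_b
    return loss

# Toy usage: 2 classes, class 1 rare, determined by the first feature.
rng = np.random.default_rng(0)
X = rng.normal(size=(64, 4))
labels = (X[:, 0] > 1.0).astype(int)
Y = np.eye(2)[labels]
alpha = (len(Y) - Y.sum(axis=0)) / len(Y)      # alpha_c = (N - N_c) / N
alpha_i = Y @ alpha
s_i = np.ones(len(Y))
sizes = [4, 8, 2]
weights = [rng.normal(0.0, 0.5, (o, i)) for i, o in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(o) for o in sizes[1:]]
losses = [train_step(X, Y, alpha_i, s_i, weights, biases, lr=0.1) for _ in range(300)]
print(losses[0], losses[-1])
```

Because α_i s_i already enters the output-layer error δ, it propagates to every earlier layer automatically and is not multiplied in again during backpropagation.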

3. Experiments and Analysis

3.1. Model Evaluation Criteria

3.2. Model Construction

- (1) Capability feature encoding: as detailed in Equations (3)–(9), the final encoder is obtained by training an Encoder-Decoder architecture on the capability feature sequence set with the SGD algorithm.

- (2) Capability feature extraction: following Equation (10), features are extracted from the capability feature sequences in order to learn their dynamic features and reduce dimensionality, yielding a new feature set and a dimensionality-reduced data set.

- (3) Dataset partitioning: the dataset is split into a training set and a testing set according to a predetermined ratio.

- (4) Model parameter setting: the ranges of hyperparameter values, established from existing domestic and international research and the advice of authoritative experts, are listed in Table 2.
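Steps (1)–(3) can be illustrated with a deliberately simplified sketch. It swaps the paper's sequence Encoder-Decoder for a one-hidden-layer autoencoder (tanh encoder, linear decoder) trained by per-sample SGD on squared reconstruction error, then encodes the data and splits it by a fixed ratio. The code size, learning rate, number of epochs, the synthetic data and the 0.8 split ratio are hypothetical choices, not the paper's settings.

```python
import numpy as np

def train_autoencoder(X, code_dim, lr=0.05, epochs=100, seed=0):
    """Fit code = tanh(x W_e + b_e), x_hat = code W_d + b_d by per-sample SGD
    on 0.5 * ||x_hat - x||^2. Returns (encode, decode) functions."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W_e, b_e = rng.normal(0.0, 0.1, (d, code_dim)), np.zeros(code_dim)
    W_d, b_d = rng.normal(0.0, 0.1, (code_dim, d)), np.zeros(d)
    for _ in range(epochs):
        for i in rng.permutation(n):
            x = X[i]
            h = np.tanh(x @ W_e + b_e)
            err = h @ W_d + b_d - x                  # dLoss/dx_hat
            grad_h = (W_d @ err) * (1.0 - h ** 2)    # back through decoder and tanh
            W_d -= lr * np.outer(h, err)
            b_d -= lr * err
            W_e -= lr * np.outer(x, grad_h)
            b_e -= lr * grad_h
    encode = lambda Z: np.tanh(Z @ W_e + b_e)
    decode = lambda H: H @ W_d + b_d
    return encode, decode

def split(data, train_ratio=0.8, seed=0):
    """Shuffle the rows and cut them into training and testing sets."""
    idx = np.random.default_rng(seed).permutation(len(data))
    cut = int(train_ratio * len(data))
    return data[idx[:cut]], data[idx[cut:]]

# Hypothetical data: 100 samples of 14 indicators driven by 2 latent factors.
rng = np.random.default_rng(1)
X = rng.uniform(-1, 1, (100, 2)) @ rng.normal(0.0, 0.3, (2, 14))
encode, decode = train_autoencoder(X, code_dim=2)
codes = encode(X)                                    # dimensionality-reduced data set
train_set, test_set = split(codes)
mse = np.mean((decode(codes) - X) ** 2)
print(codes.shape, train_set.shape, test_set.shape, mse)
```

Only the encoder half is kept for feature extraction; the decoder exists to provide the reconstruction error that trains it.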

3.3. Performance Testing and Comparative Analysis

3.3.1. Comparative Analysis of Loss Function Performance

3.3.2. Subsubsection

3.3.3. Classifier Comparison

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

```python
import numpy as np
import tensorflow as tf

tf.compat.v1.disable_eager_execution()   # TF1-style graph/session code

epoch = 10         # number of training epochs
warmup = 3         # epochs trained with s = 1 before the adaptive factor is used
num_feature = 3    # feature columns at the start of each row
num_label = 5      # one-hot label columns after the features
ru = 0.5           # correction factor for the adaptive focus factor
data = np.loadtxt("data/credit.csv", dtype=float, delimiter=',')


def weight_dict(data, num_feature=3, num_label=5):
    """Category weights alpha_c = (N - N_c) / N from the one-hot label columns."""
    sample, column = np.shape(data)
    if column - num_feature != num_label:
        raise ValueError("number of label columns does not match num_label")
    weights = {}
    for label in range(num_feature, column):
        same_class = np.sum(data[:, label])          # N_c: samples in this category
        weights[label - num_feature] = (sample - same_class) / sample
    return weights


alpha = tf.compat.v1.placeholder(tf.float32)     # category weight of the current sample
s = tf.compat.v1.placeholder(tf.float32)         # adaptive focus factor of the current sample
X = tf.compat.v1.placeholder(tf.float32, [None, num_feature])
Y = tf.compat.v1.placeholder(tf.float32, [None, num_label])

w1 = tf.Variable(tf.random.truncated_normal([num_feature, 7], stddev=0.1))
w2 = tf.Variable(tf.random.truncated_normal([7, 5], stddev=0.1))
w3 = tf.Variable(tf.random.truncated_normal([5, 5], stddev=0.1))
w4 = tf.Variable(tf.random.truncated_normal([5, 3], stddev=0.1))
w5 = tf.Variable(tf.random.truncated_normal([3, num_label], stddev=0.1))
b1 = tf.Variable(tf.zeros([7]))
b2 = tf.Variable(tf.zeros([5]))
b3 = tf.Variable(tf.zeros([5]))
b4 = tf.Variable(tf.zeros([3]))
b5 = tf.Variable(tf.zeros([num_label]))

L1 = tf.nn.relu(tf.matmul(X, w1) + b1)
L2 = tf.nn.relu(tf.matmul(L1, w2) + b2)
L3 = tf.nn.relu(tf.matmul(L2, w3) + b3)
L4 = tf.nn.relu(tf.matmul(L3, w4) + b4)
logits = tf.matmul(L4, w5) + b5
L5 = tf.nn.softmax(logits)
# softmax_cross_entropy_with_logits applies softmax itself, so it takes the raw logits
loss = tf.reduce_mean(alpha * s * tf.nn.softmax_cross_entropy_with_logits(labels=Y, logits=logits))
train_step = tf.compat.v1.train.GradientDescentOptimizer(0.5).minimize(loss)

sess = tf.compat.v1.Session()
sess.run(tf.compat.v1.global_variables_initializer())
weight_diction = weight_dict(data, num_feature, num_label)

sample, column = np.shape(data)
diff = np.ones([epoch + 1, sample], dtype=np.float32)   # |error change| between epochs
si = np.ones([epoch + 1, sample], dtype=np.float32)     # adaptive focus factor per epoch
y_p = np.zeros([epoch + 1, sample], dtype=np.float32)   # per-sample error per epoch

for e in range(epoch):
    for i in range(sample):
        X_onesample = np.expand_dims(data[i, 0:num_feature], 0)
        Y_onesample = np.expand_dims(data[i, num_feature:column], 0)
        current_sample_class = int(np.argmax(Y_onesample))   # index of the one-hot label
        s_value = 1.0 if e < warmup else si[e][i]
        _, loss1, out = sess.run(
            [train_step, loss, L5],
            feed_dict={X: X_onesample, Y: Y_onesample,
                       alpha: weight_diction[current_sample_class], s: s_value})
        y_p[e][i] = np.sum(np.abs(data[i, num_feature:column] - out))
    if e >= 1:
        # s_(e+1) = ru * s_e + (1 - ru) * |error_e - error_(e-1)|
        diff[e] = np.abs(y_p[e] - y_p[e - 1])
        si[e + 1] = ru * si[e] + (1 - ru) * diff[e]
        print(si[e + 1])
    print(loss1)
```

References

- Baihaqi, L.H. The Role of Yonzipur 9/LLB/1 Kostrad in the Earthquake Emergency Response Phase (Case Study of Earthquake Disaster in Cianjur). Int. J. Soc. Sci. Res. 2023, 3, 1489–1693.

- Bryen, D.N. Communication during times of natural or man-made emergencies. J. Pediatr. Rehabil. Med. 2009, 2, 123–129.

- Corbacioglu, S.; Kapucu, N. Organisational learning and self-adaptation in dynamic disaster environments. Disasters 2006, 30, 212–233.

- Mah Hashim, N.; Abu Bakar, N.A.; Kamaruzzaman, Z.A.; Shariff, S.R.; Burhanuddin, S.N.Z.A. Flood Governance: A Review on Allocation of Flood Victims Using Location-Allocation Model and Disaster Management in Malaysia. J. Geogr. Inf. 2023, 6, 493–503.

- Zantal-Wiener, K.; Horwood, T.J. Logic modeling as a tool to prepare to evaluate disaster and emergency preparedness, response, and recovery in schools. New Dir. Eval. 2010, 2010, 51–64.

- Wang, J.F.; Feng, L.J.; Zhai, X.Q. A system dynamics model of flooding emergency capability of coal mine. Prz. Elektrotechniczny 2012, 88, 209–211.

- Sugumaran, V.; Wang, X.Z.; Zhang, H.; Xu, Z. A capability assessment model for emergency management organizations. Inf. Syst. Front. 2017, 20, 653–667.

- Kyrkou, C.; Kolios, P.; Theocharides, T.; Polycarpou, M. Machine Learning for Emergency Management: A Survey and Future Outlook. Proc. IEEE 2023, 111, 19–41.

- Herlianto, M. Early Disaster Recovery Strategy: The Missing Link in Post-Disaster Implementation in Indonesia. Influ. Int. J. Sci. Rev. 2023, 5, 80–91.

- Love, P.E.D.; Matthews, J. Quality, requisite imagination and resilience: Managing risk and uncertainty in construction. Reliab. Eng. Syst. Saf. 2020, 204, 12.

- Hosseini, A.; Faheem, A.; Titi, H.; Schwandt, S. Evaluation of the long-term performance of flexible pavements with respect to production and construction quality control indicators. Constr. Build. Mater. 2020, 230, 9.

- Francom, T.; Markham, C. Identifying Geotechnical Risk and Assigning Ownership on Water and Wastewater Pipeline Projects using Alternative Project Delivery Methods. In Proceedings of the Sessions of the Pipelines Conference, Phoenix, AZ, USA, 6–9 August 2017; Amer Soc Civil Engineers: New York, NY, USA, 2017; pp. 494–503.

- Yao, J.; Yan, L.; Xu, Z.; Wang, P.; Zhao, X. Collaborative Decision-Making Method of Emergency Response for Highway Incidents. Sustainability 2023, 15, 2099.

- Shen, L.; Zhang, Z.; Tang, L. Study on Key Drivers and Collaborative Management Strategies for Construction and Demolition Waste Utilization in New Urban District Development: From a Social Network Perspective. J. Environ. Public Health 2023, 2023, 3660647.

- Liu, Z.; Ma, L. Introduction of the Special Issue on Building Digital Government in China. Commun. ACM 2022, 65, 64–66.

- Wang, C.J.; Ng, C.Y.; Brook, R. Response to COVID-19 in Taiwan: Big Data Analytics, New Technology, and Proactive Testing. JAMA 2020, 323, 1341–1342.

- Heidary Dahooie, J.; Razavi Hajiagha, S.H.; Farazmehr, S.; Zavadskas, E.K.; Antucheviciene, J. A novel dynamic credit risk evaluation method using data envelopment analysis with common weights and combination of multi-attribute decision-making methods. Comput. Oper. Res. 2021, 129, 105223.

- He, Y.; Xu, Z.; Gu, J. An approach to group decision making with hesitant information and its application in credit risk evaluation of enterprises. Appl. Soft Comput. 2016, 43, 159–169.

- Shen, F.; Ma, X.; Li, Z.; Xu, Z.; Cai, D. An extended intuitionistic fuzzy TOPSIS method based on a new distance measure with an application to credit risk evaluation. Inf. Sci. 2018, 428, 105–119.

- Shen, F.; Zhao, X.; Kou, G.; Alsaadi, F.E. A new deep learning ensemble credit risk evaluation model with an improved synthetic minority oversampling technique. Appl. Soft Comput. 2021, 98, 106852.

- Wang, L.; Wu, C. Dynamic imbalanced business credit evaluation based on Learn++ with sliding time window and weight sampling and FCM with multiple kernels. Inf. Sci. 2020, 520, 305–323.

- Huang, X.; Liu, X.; Ren, Y. Enterprise credit risk evaluation based on neural network algorithm. Cogn. Syst. Res. 2018, 52, 317–324.

- Cai, X.; Qian, Y.; Bai, Q.; Liu, W. Exploration on the financing risks of enterprise supply chain using Back Propagation neural network. J. Comput. Appl. Math. 2020, 367, 112457.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 2015; PMLR: Volume 37, pp. 448–456.

- Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority oversampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

- Graves, A.; Mohamed, A.R.; Hinton, G. Speech Recognition with Deep Recurrent Neural Networks. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507.

- Ahmad, A.M.; Ismail, S.; Samaon, D.F. Recurrent Neural Network with Backpropagation through Time for Speech Recognition. In Proceedings of the IEEE International Symposium on Communications and Information Technology, Sapporo, Japan, 26–29 October 2004; IEEE: New York, NY, USA, 2005.

- Liu, Y.; Zhong, D.; Cui, B.; Zhong, G.; Wei, Y. Study on real-time construction quality monitoring of storehouse surfaces for RCC dams. Autom. Constr. 2015, 49, 100–112.

- Chen, F.; Jiao, H.; Han, L.; Shen, L.; Du, W.; Ye, Q.; Yu, G. Real-time monitoring of construction quality for gravel piles based on Internet of Things. Autom. Constr. 2020, 116, 103228.

- Ma, Z.; Cai, S.; Mao, N.; Yang, Q.; Feng, J.; Wang, P. Construction quality management based on a collaborative system using BIM and indoor positioning. Autom. Constr. 2018, 92, 35–45.

- AL-Sahar, F.; Przegalińska, A.; Krzemiński, M. Risk assessment on the construction site with the use of wearable technologies. Ain Shams Eng. J. 2021, 12, 3411–3417.

- Qing, L.; Rengkui, L.; Jun, Z.; Quanxin, S. Quality Risk Management Model for Railway Construction Projects. Procedia Eng. 2014, 84, 195–203.

- Gu, J.; Xia, X.; He, Y.; Xu, Z. An approach to evaluating the spontaneous and contagious credit risk for supply chain enterprises based on fuzzy preference relations. Comput. Ind. Eng. 2017, 106, 361–372.

| Real/Predicted | 1 | 0 |
|---|---|---|
| 1 | True Positive (TP) | False Negative (FN) |
| 0 | False Positive (FP) | True Negative (TN) |
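The experiments report accuracy, F1 score and AUC. A minimal NumPy sketch of how these follow from the confusion matrix above is given below, assuming class 1 is the positive class and computing AUC with the pairwise-ranking (Mann–Whitney) formulation; the function names, the 0.5 decision threshold and the example scores are illustrative.

```python
import numpy as np

def confusion_counts(y_true, y_pred):
    """Return (TP, FN, FP, TN) for binary labels with 1 as the positive class."""
    t = np.asarray(y_true).astype(bool)
    p = np.asarray(y_pred).astype(bool)
    return (int(np.sum(t & p)), int(np.sum(t & ~p)),
            int(np.sum(~t & p)), int(np.sum(~t & ~p)))

def accuracy_f1(tp, fn, fp, tn):
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, f1

def auc(y_true, scores):
    """P(score of a random positive > score of a random negative); ties count 1/2."""
    t = np.asarray(y_true).astype(bool)
    s = np.asarray(scores, dtype=float)
    pos, neg = s[t], s[~t]
    greater = np.sum(pos[:, None] > neg[None, :])
    ties = np.sum(pos[:, None] == neg[None, :])
    return (greater + 0.5 * ties) / (len(pos) * len(neg))

y_true = [1, 1, 1, 0, 0]
scores = [0.9, 0.8, 0.3, 0.6, 0.2]
y_pred = [int(v >= 0.5) for v in scores]       # hypothetical 0.5 threshold
tp, fn, fp, tn = confusion_counts(y_true, y_pred)
acc, f1 = accuracy_f1(tp, fn, fp, tn)
print(tp, fn, fp, tn, acc, f1, auc(y_true, scores))
```

Unlike accuracy and F1, AUC does not depend on the decision threshold, which makes it a useful complement when one class is rare.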

| Hyperparameters | Meaning | Role | Range of Values |
|---|---|---|---|
| Layers | Number of neural network layers | Changes the complexity of the model | [4, 7] |
|  | Number of neurons in layer l | Changes the complexity of the model | [4, 8] |
|  | Activation function of the lth layer | Learns non-linear relationships | {ReLU, Tanh, Sigmoid, Softmax} |
| B | Batch size of input samples per training round | Trade-off between model accuracy and training efficiency | {16, 32, 64} |
| E | Number of model training rounds | Lets the model keep learning the distribution of the training data | [100, 500] |
| ε | Learning rate | Controls the step size of the weight update | {0.001, 0.005, 0.01, 0.05} |
| o | Optimization (adaptive learning rate) algorithm | Adjusts the learning rate according to a given strategy to search for the optimum | {SGD, AdaGrad, RMSProp, Adam} |
|  | Correction factor | Controls the correction magnitude | {0.2, 0.5, 0.8} |

| Statistic | Automatic Weather Station Coverage Rate | Hydrographic Station Network Density | Weather Radar Station Coverage | Number of Flood Control Works | Ratio of Lightning Protection Device Installation | Clean Water Guarantee Number | Number of Spare Generating Sets | Organizational System Construction Status | Information Release Management | Weather Disaster Emergency Regulations Construction | Number of Shelters | Number of Doctors per 1000 People | Status of Disaster Information Dissemination Awareness | Command Staff Quality |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Number of samples | 4052 | 4052 | 4052 | 4052 | 4052 | 4052 | 4052 | 4052 | 4052 | 4052 | 4052 | 4052 | 4052 | 4052 |
| Mean | 0.029 | 0.010 | 0.058 | 0.009 | 0.005 | 0.011 | 0.015 | 0.040 | 0.023 | 0.019 | 0.016 | 0.003 | 0.005 | 0.012 |
| Variance | 0.048 | 0.034 | 0.079 | 0.045 | 0.038 | 0.049 | 0.059 | 0.061 | 0.082 | 0.060 | 0.038 | 0.023 | 0.035 | 0.031 |
| Minimum | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 25th percentile | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Median (50th percentile) | 0.016 | 0 | 0.043 | 0 | 0 | 0 | 0 | 0.008 | 0 | 0 | 0 | 0 | 0 | 0 |
| 75th percentile | 0.036 | 0.006579 | 0.086 | 0 | 0 | 0 | 0 | 0.086 | 0 | 0 | 0 | 0 | 0 | 0 |
| Maximum | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| Hyperparameters | Value |
|---|---|
| Layers | 5 |
| Neurons per layer | 7, 5, 5, 3, 5, respectively |
| Activation functions | ReLU for the hidden layers and Softmax for the output layer |
| B | 64 |
| E | 300 |
| ε | 0.005 |
| o | Adam |
| Correction factor | 0.5 |

| Models/Performance | Accuracy | F1 Score | AUC |
|---|---|---|---|
| Encoder-Adaptive-Focal | 0.844 | 0.896 | 0.713 |
| Encoder-DNN | 0.828 | 0.875 | 0.652 |
| Encoder-Focal | 0.836 | 0.883 | 0.719 |

| Models/Performance | Accuracy | F1 Score | AUC |
|---|---|---|---|
| Encoder-Adaptive-Focal | 0.852 | 0.904 | 0.725 |
| Encoder-DNN | 0.835 | 0.881 | 0.665 |
| Encoder-Focal | 0.843 | 0.889 | 0.731 |

| Hyperparameters | Value |
|---|---|
| Layers | 5 |
| Neurons per layer | 7, 5, 5, 3, 5, respectively |
| Activation functions | ReLU for the hidden layers and Softmax for the output layer |
| B | 64 |
| E | 300 |
| ε | 0.005 |
| o | Adam |
| Correction factor | 0.5 |

| Hyperparameters | Value |
|---|---|
| Layers | 5 |
| Neurons per layer | 7, 5, 5, 3, 5, respectively |
| Activation functions | ReLU for the hidden layers and Softmax for the output layer |
| B | 64 |
| E | 300 |
| ε | 0.005 |
| o | Adam |
| Correction factor | 0.5 |

| Models/Performance | Accuracy | F1 Score | AUC |
|---|---|---|---|
| Encoder-Adaptive-Focal | 0.844 | 0.896 | 0.713 |
| Adaptive-Focal | 0.819 | 0.862 | 0.641 |
| PCA-Adaptive-Focal | 0.801 | 0.865 | 0.628 |

| Models/Performance | Accuracy | F1 Score | AUC |
|---|---|---|---|
| Encoder-Adaptive-Focal | 0.844 | 0.896 | 0.713 |
| Encoder-DecisionTree | 0.781 | 0.850 | 0.533 |
| Encoder-SVM | 0.776 | 0.849 | 0.526 |
| Encoder-NaiveBayes | 0.773 | 0.845 | 0.522 |

| Hyperparameters | Meaning | Value |
|---|---|---|
| max_depth | Maximum depth of the tree | 2 |
| criterion | Criterion for measuring the quality of a split | gini |
| bootstrap | Whether bootstrap sampling is used | True |
| min_samples_split | Minimum number of samples required to split an internal node | 10 |
| min_samples_leaf | Minimum number of samples required at a leaf node | 6 |
| max_leaf_nodes | Maximum number of leaf nodes the model can have | 4 |

| Hyperparameters | Meaning | Value |
|---|---|---|
| kernel | Kernel function | RBF |
| gamma | Kernel coefficient | 0.33 |
| C | Penalty (regularization) coefficient of the objective function | 1.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tang, J.; Saga, R.; Dai, Q.; Mao, Y. Advanced Meteorological Hazard Defense Capability Assessment: Addressing Sample Imbalance with Deep Learning Approaches. Appl. Sci. 2023, 13, 12561. https://doi.org/10.3390/app132312561
