Abstract

In the field of computer vision, segmenting a 3D object into its component parts is crucial to understanding its structure and characteristics. Much work has focused on 3D object part segmentation directly from point clouds, and significant progress has been made in this area. This paper proposes a novel 3D object part segmentation method that integrates three key modules: a keypoint-aware module, a feature extension module, and an attention-aware module. Our approach starts by detecting keypoints, which provide a global feature of the inner shape that serves as the basis for segmentation. Subsequently, we use the feature extension module to expand the feature dimensions and obtain a local representation of the extracted features, which provides a richer object representation and improves segmentation accuracy. Furthermore, we introduce an attention-aware module that effectively combines the global and local features of objects to enhance the segmentation process. To validate the proposed model, we also conduct experiments on the point cloud classification task. The experimental results demonstrate the effectiveness of our method, which outperforms several state-of-the-art methods in 3D object part segmentation and classification.

1. Introduction

Point clouds are increasingly used as data structures in diverse application domains, such as autonomous driving and robotics, which underscores the importance of obtaining detailed and comprehensive 3D representations. 3D object part segmentation is a fundamental task in computer vision that involves dividing a three-dimensional object into its constituent parts. This task is crucial for various applications such as object recognition, scene understanding, robotics, and augmented reality. Understanding the intricate details of an object, its parts, and their relationships enables machines to perceive and interact with their environments more effectively.

Traditionally, 3D object part segmentation has been challenging due to the complexity of 3D shapes, variations in object categories, and the lack of annotated data for training. With the advent of deep learning techniques and the availability of large-scale 3D datasets, significant progress has been made in this domain. However, the challenge remains to develop models that can effectively handle the sparsity and irregularity of point clouds, as well as accommodate a wide variety of object shapes and structures. This requires innovative approaches that can effectively capture the geometric and topological features of 3D objects. Additionally, the quality of the segmentation largely depends on the granularity and specificity of the annotated data. Thus, enhancing the annotation quality and refining the training dataset are essential steps for improving the performance of 3D object part segmentation models.

One common approach is to use geometric methods, which rely on the inherent properties of the 3D shape and segment the object based on features such as curvature, surface normals, and creases. Some methods adopt curvature analysis [1,2]. Curvature measures how much a curve deviates from a straight line (in 2D) or how much a surface deviates from a plane (in 3D). In the context of 3D object part segmentation, curvature analysis involves computing the curvature at each point on the object’s surface. Points with a high curvature are often indicative of edges or boundaries between parts. The curvature can be estimated by applying principal component analysis (PCA) to the local point neighbourhood or by computing the eigenvalues of the Hessian matrix of the surface. Other methods rely on surface normals [3,4], which are vectors that are perpendicular to the surface of an object. By analyzing the variation in surface normals across the object, it is possible to identify regions where the surface orientation changes abruptly. These regions often correspond to boundaries between different parts of the object. The surface normal at a point can be computed as the cross product of the derivatives of the surface in the u and v directions or as the cross product of the vectors connecting the point to its neighbors. Still other methods rely on crease detection [5]. Creases are another important feature for part segmentation, as they often indicate boundaries between different parts. Crease detection methods typically involve analyzing the second derivative of the surface or using techniques that are similar to edge detection in image processing, such as the Canny edge detector.
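The PCA-based estimate mentioned above can be illustrated with a short sketch. It is not taken from the cited works: the function name, the brute-force neighbour search, and the neighbourhood size `k=8` are assumptions made for illustration. The eigenvector of the local covariance matrix with the smallest eigenvalue approximates the surface normal, and the ratio of the smallest eigenvalue to the eigenvalue sum (often called surface variation) serves as a curvature proxy.

```python
import numpy as np

def pca_normals_and_variation(points, k=8):
    """Estimate a unit normal and a curvature proxy for every point.

    points: (N, 3) array. k: neighbourhood size (an assumed value).
    Returns normals (N, 3) and surface variation (N,), where
    variation = smallest eigenvalue / sum of eigenvalues.
    """
    points = np.asarray(points, dtype=float)
    # Brute-force pairwise squared distances; adequate for small clouds.
    diff = points[:, None, :] - points[None, :, :]
    dist2 = np.einsum("ijk,ijk->ij", diff, diff)
    # Indices of the k nearest neighbours (the point itself is included).
    idx = np.argsort(dist2, axis=1)[:, :k]
    normals = np.empty_like(points)
    variation = np.empty(len(points))
    for i, nbrs in enumerate(idx):
        local = points[nbrs] - points[nbrs].mean(axis=0)
        cov = local.T @ local / len(nbrs)
        eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
        normals[i] = eigvecs[:, 0]              # direction of least variance
        variation[i] = eigvals[0] / max(eigvals.sum(), 1e-12)
    return normals, variation

# A flat patch: normals should align with the z axis and variation should vanish.
grid = np.stack(np.meshgrid(np.arange(5.0), np.arange(5.0)), -1).reshape(-1, 2)
plane = np.c_[grid, np.zeros(len(grid))]
plane_normals, plane_variation = pca_normals_and_variation(plane, k=8)
```

On a flat patch the variation is close to zero everywhere, whereas points near a crease or part boundary would receive larger values, which is what makes the quantity usable as a segmentation cue. The sign of each normal is arbitrary in this sketch.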

Another popular approach is to use machine learning techniques, particularly deep learning models like convolutional neural networks (CNNs). These methods typically require a large dataset of labeled 3D objects with part annotations. Data-driven methods have shown great success in recent years, as they can learn complex patterns and relationships from the data. Roughly speaking, these techniques can be divided into point-based, voxel-based, graph-based, and multiview methods. PointNet is a pioneering deep learning architecture designed specifically for processing point clouds, which are a common representation of 3D data. PointNet uses a set of fully connected layers to extract features from each point independently and then combines these features using a max pooling layer [6]. This makes the model invariant to the order of the input points. Variants of PointNet, such as PointNet++ [7], have been introduced to address some of its limitations, such as the lack of local feature extraction capabilities. Another common approach for handling 3D data is to convert point clouds or meshes into voxel grids and then apply traditional CNNs [8]. This allows the use of well-established convolutional architectures, but the voxelization process can result in a loss of detail and is computationally expensive. Some graph-based methods model the 3D object as a graph, where nodes represent points or regions on the object, and edges represent connections between them [9]. Graph neural networks (GNNs) can then be used to process this graph-structured data. These methods can capture complex relationships between different parts of the object and are often more efficient than voxel-based methods. Other methods adopt multiview CNNs, which render the 3D object from multiple viewpoints and then apply 2D CNNs to the resulting images [10]. The features extracted from different views are then combined to make a final prediction. This method leverages the strength of 2D CNNs while still capturing some of the 3D structure.
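The PointNet design described above (a shared per-point network followed by max pooling) can be sketched in a few lines. This is a toy illustration rather than the architecture of [6]: the layer widths, the random weights, and the function name are assumptions, and the input and feature transforms of the full model are omitted.

```python
import numpy as np

def pointnet_global_feature(points, w1, w2):
    """Shared per-point MLP followed by max pooling over the point axis."""
    h = np.maximum(points @ w1, 0.0)  # (N, H): the same weights for every point
    h = np.maximum(h @ w2, 0.0)       # (N, F)
    return h.max(axis=0)              # (F,): symmetric aggregation

rng = np.random.default_rng(0)
cloud = rng.normal(size=(128, 3))
w1 = rng.normal(size=(3, 16))   # hypothetical layer sizes
w2 = rng.normal(size=(16, 32))

feat = pointnet_global_feature(cloud, w1, w2)
shuffled = cloud[rng.permutation(len(cloud))]
feat_shuffled = pointnet_global_feature(shuffled, w1, w2)
```

Because the maximum is taken over all points, `feat` and `feat_shuffled` agree up to floating-point error. The same construction also shows the limitation noted above: each point is processed in isolation, so no local neighbourhood structure enters the feature.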

Although the above methods have achieved good performance in part segmentation tasks, there are still at least three aspects that can be improved. First, although they can obtain good feature representation capabilities, the features they obtain are relatively sparse, which can make it difficult to satisfy the requirements of subsequent complex tasks. Second, while these works can capture good edge information from the input point cloud, they are unable to model the overall shape information, which is very important for the part segmentation of 3D objects. For instance, prior knowledge that the input point cloud represents a cup with a handle can be very helpful for subsequent part segmentation. Third, although many works attempt to extract highly expressive features, numerous studies have demonstrated that combining multimodal data can provide very strong feature representations for downstream tasks. Given the substantial modal disparity between the edge features and the features provided by keypoints, devising a method to effectively integrate them into a more powerful feature representation has a strong impact on subsequent tasks.

To address these issues, we introduce a keypoint detection algorithm that provides intrinsic shape information for part segmentation. We use PointNet++ as our baseline, which obtains good point cloud features, although these features are relatively sparse. For the first problem, we propose a feature extension module that extracts global feature representations to address the issue of sparse feature extraction. For the second problem, we propose a keypoint-aware module that effectively captures the intrinsic shape information of point clouds, which is of great help for object part segmentation. For the third problem, we introduce a novel feature fusion mechanism that learns weights for features of different modalities.

In summary, the contributions of this paper include the following:

- We propose an attentional keypoint detection network that leverages inner-shape information for part segmentation.

- In this network, a keypoint-aware module, a feature extension module, and an attention-aware module are proposed.

- Experiments on the ShapeNet-Part dataset demonstrate the effectiveness and superiority of the proposed modules in improving segmentation accuracy.

- In order to demonstrate that the three proposed modules are not limited by the task itself, we conducted experiments on the ModelNet40 dataset to validate the effectiveness of the modules.

2. Related Work

Keypoint Detection: Keypoint detection on point clouds is a crucial step in many computer vision tasks, as keypoints provide a compact yet informative representation of 3D shapes. Early works in this field often focused on extensions of traditional 2D keypoint detectors for 3D data. The 3D scale-invariant feature transform (3D-SIFT) [11] and the 3D Harris detector [12] are two notable examples of such extensions. In the era of deep learning, PointNet [6] was one of the pioneering architectures for point cloud processing, which directly consumes point clouds and learns high-level features. Several keypoint detection methods have built upon PointNet. For instance, PointNetLK [13] introduced a point cloud registration method based on PointNet, which could also be used for keypoint detection. KPConv [14] is another significant approach that introduced a convolution-like operation for point clouds. It employs a set of deformable convolutional kernels to adapt to the local geometry of point clouds, which has been shown to be effective for keypoint detection tasks. More recently, methods such as PointCNN [15] and DGCNN [9] have been introduced, which employ different architectures and strategies for learning features from point clouds. These architectures have also been used for keypoint detection tasks with promising results. PointCNN [15] introduces a novel convolution operation, X-Conv, for unordered point clouds. It learns a transformation matrix to reorder the input points into a latent and potentially canonical order, which makes the convolution operation possible. The network can be trained to predict a heatmap for each keypoint, where the maxima of the heatmaps correspond to the detected keypoints. DGCNN [9] introduces a new layer called EdgeConv, which operates on a graph that is dynamically constructed from the input point cloud. For each point, the k-nearest neighbors are found, and features are aggregated from these neighbors. The EdgeConv layers help capture local geometric patterns and relationships between points, which are important for identifying keypoints.

Deep Learning on Point Clouds: Deep learning on point clouds has become a burgeoning field due to the growing availability of 3D data from sensors such as LiDAR and the increasing demand for 3D understanding in applications like autonomous vehicles, robotics, and augmented reality. One of the seminal works in this field is PointNet [6], which proposed a deep learning architecture specifically designed for point cloud data. The work of [6] uses a series of fully connected layers to process points individually, followed by a global max pooling layer to capture the global features of the point cloud. Generally speaking, existing methods can be divided into the following categories: point-based [14,15,16,17], graph-based [18,19,20,21,22], sparse voxel-based [23,24,25,26], spatial CNN-based [22,27,28,29] and, more recently, transformer-based [30,31,32,33,34].

Attention Mechanism/Transformer-based Segmentation: Transformer-based models have recently been applied to the task of point cloud part segmentation with promising results [32,35,36,37,38,39,40]. One such work is the Point Transformer [41], which introduces a point attention layer that operates on the point cloud data. This layer enhances the features by capturing global context through a self-attention mechanism, which is beneficial for part segmentation tasks. The work of [38] groups potential features from point clouds into superpoints and directly predicts instances through query vectors. Their model can capture the instance information through the superpoint cross-attention mechanism. SegFormer [42] combines the transformer architecture with a U-Net-like structure for semantic segmentation tasks. Although originally proposed for 2D images, SegFormer can be adapted for 3D point cloud part segmentation. The transformer layers in SegFormer enable the model to capture long-range dependencies between points in the cloud, which is crucial for accurate segmentation. The work of [43] specifically targets point cloud segmentation tasks. It introduces a novel transformer block for point clouds and employs a hierarchical architecture to capture both local and global features. Its transformer layers allow the model to learn complex relationships between points, which is beneficial for part segmentation.

Point Feature Enhancement: Point feature enhancement focuses on improving the quality and expressiveness of learned features from point clouds for various tasks such as classification, segmentation, and part segmentation [44,45,46,47,48,49,50,51,52]. One of the works that addresses this problem is the Feature Propagation module proposed for PointNet++ [7]. This module utilizes interpolated features from coarser resolutions to improve the quality of the features at finer resolutions, thus aiding in tasks like point cloud segmentation. The work of [14] introduces a convolution-like operation for point clouds, which learns a set of deformable convolutional kernels that adapt to the local geometry of the point cloud. This approach has been shown to be effective for capturing both the local and global features of the point cloud.

3. Our Approach

3.1. Background

PointNet++ [7] addresses one of the main limitations of the original PointNet [6], which is its inability to capture local geometric structures effectively. The key innovation of PointNet++ is the introduction of a “set abstraction” module, which groups points into overlapping local regions and applies PointNet to each group. The set abstraction module consists of three main operations: sampling, grouping, and PointNet. The sampling operation selects a subset of points as centroids, the grouping operation forms local regions around these centroids, and the PointNet operation extracts features from the points in each local region. Multiple layers of set abstraction modules are employed to progressively downsample the point cloud and extract hierarchical features. These features are then concatenated and processed by a series of fully connected layers to generate the final feature representation for the entire point cloud.
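The three operations of a set abstraction level (sampling, grouping, and a local PointNet) can be sketched as follows. This is a simplified illustration under stated assumptions, not the reference implementation of [7]: farthest point sampling and a radius-based ball query are used for sampling and grouping, the local PointNet is reduced to a single shared layer with hypothetical random weights, and the cap on group size used in practice is omitted.

```python
import numpy as np

def farthest_point_sampling(points, m, start=0):
    """Greedily pick m indices so each new point is farthest from those chosen."""
    chosen = np.empty(m, dtype=int)
    chosen[0] = start
    min_d2 = np.full(len(points), np.inf)
    for i in range(1, m):
        d2 = np.sum((points - points[chosen[i - 1]]) ** 2, axis=1)
        min_d2 = np.minimum(min_d2, d2)   # distance to the nearest chosen point
        chosen[i] = int(np.argmax(min_d2))
    return chosen

def set_abstraction(points, feats, m, radius, weight):
    """One simplified set abstraction level: sample, group, local PointNet."""
    centroids = farthest_point_sampling(points, m)
    out = np.empty((m, weight.shape[1]))
    for row, c in enumerate(centroids):
        d2 = np.sum((points - points[c]) ** 2, axis=1)
        group = np.flatnonzero(d2 <= radius ** 2)   # ball query, includes the centroid
        local_xyz = points[group] - points[c]       # coordinates relative to the centroid
        local = np.concatenate([local_xyz, feats[group]], axis=1)
        # Shared layer on every grouped point, then max pool over the region.
        out[row] = np.maximum(local @ weight, 0.0).max(axis=0)
    return points[centroids], out

rng = np.random.default_rng(0)
xyz = rng.uniform(size=(256, 3))
point_feats = rng.normal(size=(256, 4))
sa_weight = rng.normal(size=(3 + 4, 8))   # hypothetical layer size
new_xyz, new_feats = set_abstraction(xyz, point_feats, m=32, radius=0.2, weight=sa_weight)
```

Stacking several such levels, each operating on the output of the previous one, yields the progressive downsampling and hierarchical features described above.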

DGCNN [9] is a neural network architecture specifically designed for processing point cloud data. The key innovation of DGCNN is the EdgeConv operation, which dynamically constructs a k-nearest neighbor graph for each point in the input point cloud. The EdgeConv operation operates on the edges of this graph and aggregates information from neighboring points to generate features that capture both local geometric structures and global context. DGCNN employs a series of EdgeConv layers that progressively refine each point’s features. These refined features are then aggregated using a global max pooling operation to generate a global feature vector representing the entire point cloud. The main strengths of DGCNN are its ability to capture complex geometric patterns, its robustness to input permutations, and its efficiency in processing large-scale point clouds. It has achieved state-of-the-art performance on several benchmarks for point cloud processing tasks.
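A minimal sketch of one EdgeConv layer may make the description above more concrete. It follows the commonly used edge feature, the concatenation of the centre feature and the offset to each neighbour, with max aggregation. The single linear layer, the random weights, and the function names are assumptions made for illustration. The graph is "dynamic" in the sense that `knn_indices` is recomputed from the current features at every layer.

```python
import numpy as np

def knn_indices(x, k):
    """Indices of the k nearest neighbours of every row of x (self excluded)."""
    d2 = np.sum((x[:, None, :] - x[None, :, :]) ** 2, axis=-1)
    np.fill_diagonal(d2, np.inf)
    return np.argsort(d2, axis=1)[:, :k]            # (N, k)

def edge_conv(x, k, weight):
    """x: (N, C) features; weight: (2C, C_out). Returns (N, C_out)."""
    idx = knn_indices(x, k)
    center = np.repeat(x[:, None, :], k, axis=1)    # (N, k, C)
    neighbor = x[idx]                               # (N, k, C)
    edge = np.concatenate([center, neighbor - center], axis=-1)  # (N, k, 2C)
    h = np.maximum(edge @ weight, 0.0)              # shared layer applied to each edge
    return h.max(axis=1)                            # max over the k neighbours

rng = np.random.default_rng(0)
pts = rng.normal(size=(50, 3))
w_a = rng.normal(size=(6, 8))     # hypothetical layer sizes
w_b = rng.normal(size=(16, 16))

h1 = edge_conv(pts, k=5, weight=w_a)   # graph built in coordinate space
h2 = edge_conv(h1, k=5, weight=w_b)    # graph rebuilt in feature space
global_feat = h2.max(axis=0)           # global max pooling
```

Reordering the input points reorders the rows of the output in the same way, so the global feature obtained after max pooling does not depend on the point order.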

3.2. Keypoint-Aware Module

Currently, numerous methods are attempting to extract key points from point clouds for various tasks. For instance, the Intrinsic Shape Signature (ISS) algorithm, which relies exclusively on the shape’s internal geometry, is independent of its position and orientation in space. This algorithm has been utilized by many open-source tools, such as Open3D, for keypoint extraction from point clouds. However, this handcrafted method falls short in accurately detecting key points in complex objects. A method proposed in [53] introduced an unsupervised aligned keypoint detector to obtain key points from the input point cloud. Nevertheless, this approach requires training a separate model for each object category, which can be resource-intensive and time-consuming. To address this issue, LAKe-Net [54] proposes an unsupervised multiscale keypoint detector (UMKD), which is designed to capture key points across all input categories. This approach yields reasonable results for keypoint extraction. However, it treats each stage of the fully connected layer as an independent module, thus disregarding the varying contributions to the final output. The UMKD outputs multiscale coarse point clouds for the input data without considering the relationships between them.

In this paper, we introduce a keypoint-aware module to establish relationships among these points, thus enriching the representation of the point cloud. Before delving into the keypoint-aware module, we will first provide a brief overview of LAKe-Net.

LAKe-Net is a novel point cloud completion model designed to tackle the challenge of missing topology in partially observed point clouds. The model adopts a novel prediction approach called Keypoints–Skeleton–Shape, which involves three main steps:

- Aligned Keypoint Localization: An asymmetric keypoint locator is proposed, which consists of an unsupervised multiscale keypoint detector and a complete keypoint generator. The keypoint detector is designed to identify aligned keypoints from both complete and partial point clouds. Theoretical evidence is presented to demonstrate the detector’s efficacy in capturing aligned keypoints for objects within a subcategory.

- Surface-Skeleton Generation: A new type of skeleton, named Surface-Skeleton, is generated from the localized keypoints based on geometric priors.

- Shape Refinement: The model features a refinement subnet, where multiscale surface-skeletons are fed into each recursive skeleton-assisted refinement module.

As stated above, the UMKD module effectively addresses the issue of previous methods needing to train separate keypoint extraction models for multiple categories. However, it obtains multiple independent results through multiple softmax operations, which inadvertently increases the subsequent computational load. In this paper, we propose the keypoint-aware module, which learns the weight information of keypoints at different scales to integrate multiple output results, thereby obtaining more effective keypoint information.

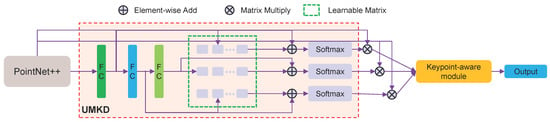

In more detail, the keypoint-aware module is designed to address the inefficiencies inherent in the UMKD approach by learning a weighting scheme for the keypoints extracted at various scales. Instead of treating each scale independently, the keypoint-aware module considers the interdependencies between different scales, thus allowing for a more coherent and meaningful integration of keypoint information. This not only reduces the computational burden by eliminating the need for multiple softmax operations, but also enhances the quality of the extracted keypoint information, thus leading to improved performance in downstream tasks. For a detailed structure of this module, please refer to Figure 1.

Figure 1.

Visualization of the keypoint-aware module. Best viewed in color.

For simplicity, the point cloud data in this paper are denoted as , and the output of the UMKD is a set of multiscale keypoints , where , and , i denotes the number of keypoints. In order to better combine these multiscale keypoints and make better use of this information, we propose a keypoint-aware module to learn a weight representation and organically combine the keypoints of these three scales. The details are as follows:

where denotes the convex weight obtained from the UMKD. Due to the dimensions of these multiscale weights not being the same, in our experiments, we used a deconvolution layer to upsample the weights and add them together. Then, we adopted the compact generalized nonlocal network (CGNL) [55] to explicitly build rich correlations between position items of weight.

where , , and denote three transform functions, and denotes the similarity between the two items.

Then, we use a softmax function to normalize the obtained to predict the more plausible new keypoint; the visualizations of the keypoints are shown in Figure 2.

Figure 2.

Visualizations of keypoints as shown in red points. Best viewed in color.
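The role of the softmax normalization in producing keypoints can be illustrated with a small sketch. It assumes that each keypoint is a convex combination of the input points, which is the usual reading of "convex weight"; the score matrix, its shape, and the function names are hypothetical, and the multiscale fusion and CGNL steps described above are not reproduced here.

```python
import numpy as np

def softmax(z, axis):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def keypoints_from_scores(points, scores):
    """points: (N, 3); scores: (K, N) unnormalized. Returns (K, 3) keypoints and weights."""
    w = softmax(scores, axis=1)   # each row is a convex weight vector over the N points
    return w @ points, w          # each keypoint is a weighted average of the input points

rng = np.random.default_rng(0)
cloud_xyz = rng.uniform(size=(100, 3))
raw_scores = rng.normal(size=(10, 100))   # stand-in for fused multiscale weights
kp, kp_w = keypoints_from_scores(cloud_xyz, raw_scores)
```

Because every row of `kp_w` is non-negative and sums to one, each predicted keypoint lies inside the convex hull of the input point cloud.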

3.3. Feature Extension Module

PointNet++ is a very powerful framework that has been adopted by many works to extract feature representations for tasks such as point cloud object detection and point cloud classification. However, the features extracted by PointNet++ [7] do not take the shape information of the input point cloud into account. In response to this limitation, we use EdgeConv to extract the shape information of point clouds and thereby compensate for the shortcomings of PointNet++ in feature extraction. At the same time, we expand the dimensions of the features obtained by PointNet++ to enhance their expressive power.

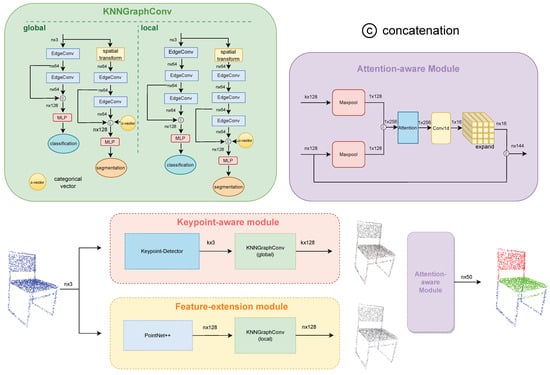

In this module, we introduce the KNNGraphConv module as a strategic augmentation for refining the features extracted by PointNet++. For simplicity, let us denote the features extracted by PointNet++ as . As mentioned above, has some unavoidable limitations. To address this, we employ EdgeConv as our submodule, which is a type of graph neural network designed to operate on point clouds. The specific network structure is illustrated in Figure 3. The EdgeConv operation is implemented on a graph representation of the point cloud, thus treating each point as a node connected to its nearest neighbors through edges. This EdgeConv operation involves the exchange of messages between neighboring nodes, thereby enabling the network to effectively capture local geometric structures and relationships among points. The primary objective of integrating the KNNGraphConv module with the features extracted by PointNet++ is to elevate the quality of the feature representation. By enhancing the network’s capacity to capture both local and global information, we anticipate a notable improvement in performance across various tasks involving point clouds, which includes but is not limited to object classification and part segmentation. This strategic integration aims to contribute to the overall effectiveness of the network in handling complex point cloud data scenarios.

Figure 3.

The overall architecture of our AKDNet model. It is built based on DGCNN with three new modules: (1) the feature extension module extracts a more plausible feature representation; (2) the keypoint-aware module is utilized to obtain the inner shape information of the object; (3) the attention-aware module is utilized for adaptively gathering different features from different models for the final prediction. Best viewed in color.

3.4. Attention-Aware Module

Following the incorporation of the two aforementioned modules, we derive features from two distinct modalities: one characterized by robust edge features from the PointNet++ branch and the other distinguished by potent inner shape feature representations from the keypoint branch. Both modalities are integral to the subsequent semantic segmentation module. Mere concatenation without due consideration to their distinctiveness and a failure to acknowledge their unique contributions to the final outcome would undermine the potential complementarity of the features from both modalities. To fully harness the synergies between these feature sets, it is imperative to implement a thoughtful integration strategy that acknowledges and leverages the strengths of each modality, thus aiming to maximize their collective efficacy in enhancing the overall performance of the semantic segmentation model. This nuanced approach ensures a more comprehensive and effective utilization of information from both the edge and inner shape features, thus optimizing the model’s ability to discern semantic patterns in the data.

In more detail, the features from the PointNet++ branch capture the edge information of the point cloud, which is important for recognizing the boundaries between different parts of the object. On the other hand, the features from the keypoint branch capture the inner shape information, which helps in understanding the overall structure of the object. Both of these features are essential for accurate semantic segmentation. However, simply concatenating these features may not be sufficient, as it does not take into account the varying importance of these features for different parts of the object. Therefore, we propose a feature fusion mechanism that learns a weighting scheme for each feature type, thus allowing for a more meaningful integration of the features and enhancing the performance of the semantic segmentation module.

For simplicity, let us denote the features from the keypoint branch as . In more detail, the features and represent different aspects of the point cloud data, capturing edge information and inner shape information, respectively. To effectively combine these features, we propose a mechanism to learn a gating weight, which determines the contribution of each feature to the final output. This gating weight is learned from the data and is optimized during the training process. By learning a gating weight, we can dynamically adjust the contribution of each feature based on the input data, which allows for a more flexible and adaptive combination of features. This approach not only preserves the unique characteristics of each feature, but also maximizes the complementarity of the features, thereby leading to improved performance in downstream tasks. The details are as follows:

where denotes a convolutional layer; the output channel of this layer is two—one of them denotes the weight of , and the other one means the weight of —and concatenates these two feature vectors to form a larger feature vector that encompasses information from both. When the modal weights are generated, we calculate the Hadamard product as follows.

where denotes another convolutional layer.

Thus, the final prediction is:

In the following sections, we evaluate the models for different tasks—part segmentation and classification—to demonstrate the effectiveness of our proposed AKDNet.
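The gating mechanism of Section 3.4 can be sketched as follows. This is an illustration under stated assumptions rather than the implementation used in AKDNet: the two convolutional layers are modelled as per-point linear maps (equivalent to 1×1 convolutions), the two gate channels are normalized with a softmax (the normalization is not specified in the text), and all names, shapes, and weights are hypothetical.

```python
import numpy as np

def gated_fusion(f_edge, f_key, w_gate, w_out):
    """f_edge, f_key: (N, C). w_gate: (2C, 2). w_out: (2C, C_out)."""
    both = np.concatenate([f_edge, f_key], axis=1)        # (N, 2C) concatenated features
    logits = both @ w_gate                                # (N, 2): one score per modality
    logits = logits - logits.max(axis=1, keepdims=True)
    gate = np.exp(logits)
    gate = gate / gate.sum(axis=1, keepdims=True)         # softmax (assumed normalization)
    # Hadamard product: each gate channel rescales its own modality per point.
    weighted = np.concatenate(
        [gate[:, :1] * f_edge, gate[:, 1:] * f_key], axis=1
    )
    return weighted @ w_out, gate                         # second layer mixes the result

rng = np.random.default_rng(0)
edge_feats = rng.normal(size=(64, 16))    # stand-in for the PointNet++ branch
key_feats = rng.normal(size=(64, 16))     # stand-in for the keypoint branch
gate_w = rng.normal(size=(32, 2))
out_w = rng.normal(size=(32, 24))
fused, gate = gated_fusion(edge_feats, key_feats, gate_w, out_w)
```

Compared with plain concatenation, the per-point gate lets the contribution of each branch vary across the object, for instance favouring edge features near part boundaries.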

4. Experiments and Discussions on Part Segmentation

4.1. Dataset

ShapeNet-Part [56] is a dataset specifically designed for 3D object part segmentation tasks. It is derived from the larger ShapeNet dataset, which contains 3D models from various categories. ShapeNet-Part provides fine-grained part annotations for a subset of these models, thus covering 16 object categories with 50 parts in total. Each object is represented as a point cloud, and each point is labeled with a part identifier. This dataset is widely used in the research community for developing and evaluating 3D part segmentation algorithms.

4.2. Training Details

The stochastic gradient descent (SGD) optimizer was used in the training phase, and the batch size was set to 32. The initial learning rate was configured to 0.01 for the ShapeNet-Part dataset. The network was trained for 200 epochs on the ShapeNet-Part dataset using the cosine annealing decay strategy. All experiments were implemented on the PyTorch platform using RTX 4090 GPUs.
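For reference, the learning rate produced by cosine annealing can be computed in closed form. The sketch below uses the initial rate of 0.01 and the 200 epochs stated above; the minimum learning rate of zero and the absence of warm-up or restarts are assumptions, since the text does not specify them.

```python
import math

def cosine_annealing_lr(epoch, base_lr=0.01, total_epochs=200, min_lr=0.0):
    """Closed-form cosine annealing: base_lr at epoch 0, min_lr at total_epochs."""
    cos = math.cos(math.pi * epoch / total_epochs)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + cos)

# Learning rate at the start of every epoch, plus the final value.
schedule = [cosine_annealing_lr(e) for e in range(201)]
```

The rate starts at 0.01, passes through 0.005 at epoch 100, and reaches the assumed minimum at epoch 200. In PyTorch, the same curve corresponds to `torch.optim.lr_scheduler.CosineAnnealingLR` with `T_max=200` stepped once per epoch.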

4.3. Architecture

The network architecture is shown in Figure 3. Features were extracted by PointNet++ from the original input point cloud through three set abstraction (SA) modules, resulting in higher-dimensional features. These high-dimensional features were then fed into our feature extension module, which consists of one spatial transform layer and three EdgeConv layers. The spatial transform layer is used to ensure permutation invariance and rotational invariance, while the EdgeConv layers are used to learn more local features. In EdgeConv, we set the value of k to 40 to obtain better features for learning. We conducted experiments to choose the value of k, as shown in Figure 3. The keypoint-aware module is divided into two parts: the keypoint detector and KNNGraph. In the keypoint detector, we selected 300 as the number of keypoints through statistical analysis. Since the number of points produced by the keypoint detector is much smaller than that of the complete point cloud, we retained only two EdgeConv layers in the KNNGraph to prevent overfitting and set the value of k to 20. This larger value of k allows each point to learn features that are more biased towards global features. In the attention-aware module, we introduced the gate layer to obtain weights and thereby learn a better fusion of the features of the keypoint module and the feature extension module. Finally, the results were obtained by feeding the features into a fully connected layer.

4.4. Ablation Study and Qualitative Results

In this section, we conduct ablation studies to demonstrate the effectiveness of the three proposed modules of our AKDNet on the ShapeNet-Part dataset.

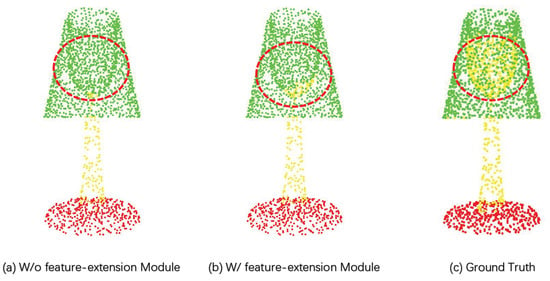

Effectiveness of the Feature Extension Module: To demonstrate the effectiveness of the feature extension module, we provide visualization results on the ShapeNet-Part dataset with and without this module based on PointNet++ (see Figure 4). From the comparison between Figure 4a,b, we can observe that, through the feature extension module, our method achieved more reasonable results, especially for certain parts located within the interior regions of the shape.

Figure 4.

Qualitative comparison results between using and not using the feature extension module on PointNet++. (a) gives the result without this module on PointNet++, (b) shows the visualization results of PointNet++ combined with our feature extension module, and (c) shows the corresponding ground truth values. The red dashed circles give detailed improvement visualization results. Best viewed in color.

In more detail, the feature extension module allows the model to capture more detailed and refined features from the point cloud, thereby leading to a better representation of the shape’s structure. This is particularly beneficial for parts of the shape that are internal or less prominent, which might be challenging to accurately capture using conventional methods. The improved feature representation results in more accurate and coherent segmentation, especially in the interior regions of the shape.

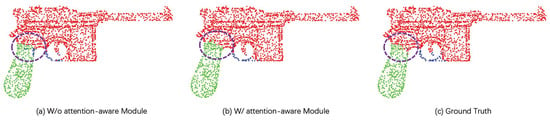

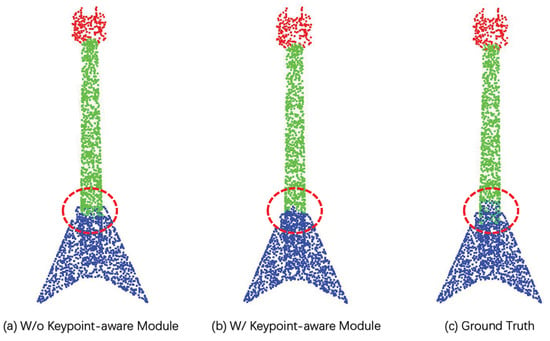

Effectiveness of the Keypoint-Aware Module: We provide visual results with and without using the module on the ShapeNet-Part dataset to validate the advantages of the keypoint-aware module, which are shown in Figure 5. From the comparison between Figure 5a,b, we can observe that, through the keypoint detection module, our method achieved more reasonable results, especially in the intersection areas of different parts. For example, the position circled in red is located at the division between the upper and lower parts of the guitar, where there is semantic discontinuity. Traditional methods do not handle this area well, but introducing the concept of keypoints can provide a better solution.

Figure 5.

Qualitative comparison results with and without the keypoint-aware module on PointNet++. (a) shows the result of PointNet++ without this module, (b) shows the result of PointNet++ combined with our keypoint-aware module, and (c) shows the corresponding ground truth. The purple dashed circles highlight the regions where the results improved. Best viewed in color.

In more detail, the keypoint-aware module allows the model to identify and focus on critical points in the point cloud that indicate important structural or semantic features. In the guitar example, the keypoints help the model recognize the boundary between the upper and lower parts of the guitar, which are semantically distinct. By focusing on these keypoints, the model can learn a more robust representation of the shape's structure and semantics, which leads to improved segmentation results, especially in areas where traditional methods may struggle because the features are complex and ambiguous.

Effectiveness of the Attention-Aware Module: To demonstrate the effectiveness of our attention-aware module, we conducted experiments on the ShapeNet-Part dataset with and without the module. The qualitative results are shown in Figure 6. From left to right, the visualizations present the results of PointNet++ without the attention-aware module, the results of PointNet++ with this module, and the corresponding ground truth. The part of the object within the red dashed circle is complex: part of it lies in the interior, and part of it lies at an intersection. PointNet++ could not recover the segmentation boundary between them. With this module, our model could combine the global and local features to form a more reasonable feature representation and produce more reasonable predictions.

Figure 6.

Qualitative comparison results with and without the attention-aware module on PointNet++. (a) shows the result of PointNet++ without this module, (b) shows the result of PointNet++ combined with our attention-aware module, and (c) shows the corresponding ground truth. The red dashed circles highlight the regions where the results improved. Best viewed in color.

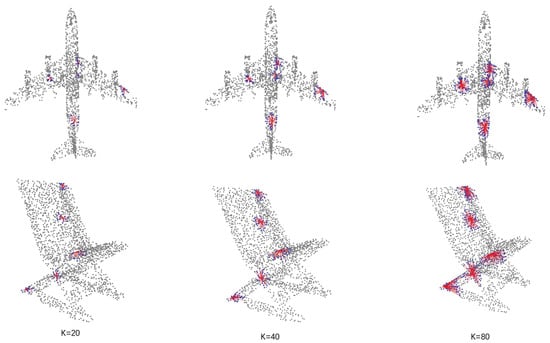

Comparisons with the State-of-the-Art Methods. To evaluate the impact of the number of neighbors on performance, we conducted experiments with different numbers of neighbors, as shown in Table 1 and Figure 7. Performance did not change monotonically with the neighborhood size: neither more nor fewer neighboring points consistently led to better results. In our experiments, 40 neighbors yielded the best performance. This supports our hypothesis that a balanced combination of global and local features is necessary to achieve optimal results.

Table 1.

Results of our model with different numbers of nearest neighbors.

Figure 7.

Visualization of different numbers of nearest neighbors on point clouds. The red point is the selected point, and the blue points are its neighbors selected by k-nearest neighbors (KNN). Best viewed in color.
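As a rough illustration of the neighborhood selection visualized in Figure 7, the following is a minimal brute-force sketch rather than the implementation used in our experiments. The function name `knn_indices` and the toy point cloud are assumptions introduced only for this example; a practical pipeline would use a batched GPU routine.

```python
import math

def knn_indices(points, query_idx, k):
    """Return the indices of the k nearest neighbors of points[query_idx], excluding itself."""
    q = points[query_idx]
    # Euclidean distance from the query point to every other point.
    dists = [(math.dist(q, p), i) for i, p in enumerate(points) if i != query_idx]
    dists.sort()  # ties are broken by the smaller index
    return [i for _, i in dists[:k]]

# Hypothetical toy cloud: ten points spaced along the x-axis.
cloud = [(float(i), 0.0, 0.0) for i in range(10)]
print(knn_indices(cloud, query_idx=0, k=3))
```

If `k` exceeds the number of available points, the sketch simply returns all other points, which mirrors how a very large neighborhood approaches a global view of the shape.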

In Table 2, we present an ablation study of the three modules of AKDNet. We used PointNet++ as the baseline, which achieved a mean intersection over union (mIoU) of 85.23% on the ShapeNet-Part dataset. The ablation experiments were conducted by incrementally adding the three proposed modules and comparing the results with the baseline. As shown in Table 2, all three proposed modules improved the final prediction results.
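For readers unfamiliar with the metric, the sketch below shows one way to compute the mIoU of a single shape from per-point part labels. It is illustrative only: the function name `shape_miou` and the toy labels are assumptions, and it assumes the common convention that a part absent from both the prediction and the ground truth counts as an IoU of 1.

```python
def shape_miou(pred, gt, part_ids):
    """Mean IoU over the given part labels for one shape."""
    ious = []
    for part in part_ids:
        inter = sum(1 for p, g in zip(pred, gt) if p == part and g == part)
        union = sum(1 for p, g in zip(pred, gt) if p == part or g == part)
        # Assumed convention: a part missing from both prediction and ground truth scores 1.
        ious.append(1.0 if union == 0 else inter / union)
    return sum(ious) / len(ious)

# Hypothetical per-point labels for a six-point shape with three parts.
gt = [0, 0, 0, 1, 1, 2]
pred = [0, 0, 1, 1, 1, 2]
print(shape_miou(pred, gt, part_ids=[0, 1, 2]))
```

In this toy case one point of part 0 is mislabeled as part 1, so the per-part IoUs are 2/3, 2/3, and 1, and the shape mIoU is 7/9. A dataset-level score would average such values over all shapes.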

Table 2.

Ablation study of the proposed AKDNet model on ShapeNet-Part dataset. KP Module, FE Module, and AA Module denote keypoint-aware module, feature extension module, and attention-aware module, respectively.

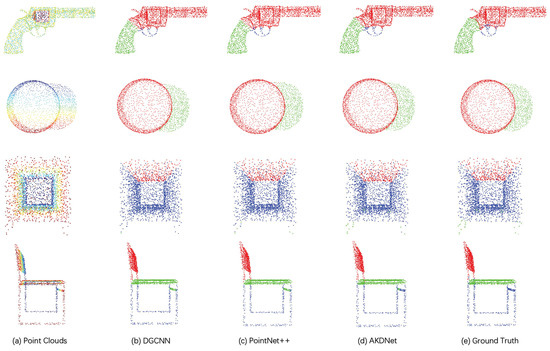

Moreover, we compared our proposed method with other state-of-the-art methods on the ShapeNet-Part dataset. The quantitative and qualitative comparison results are shown in Table 3 and Figure 8, respectively. We believe that incorporating these modules can enhance the performance of existing models. In our case, the ablation study indicates that each of the proposed modules (the feature extension, keypoint-aware, and attention-aware modules) contributed positively to the overall performance of the model, which demonstrates their effectiveness and the value of integrating them into the model architecture.

Table 3.

Performance comparison on ShapeNet-Part validation set with state-of-the-art methods.

Figure 8.

Qualitative comparison results for 3D object part segmentation on the ShapeNet-Part dataset. We compare DGCNN, PointNet++, and our AKDNet, with the original point cloud and the corresponding ground truth shown for reference. AKDNet produces segmentation results that are more consistent with the ground truth, which indicates that it captures the underlying structure and parts of the 3D objects more effectively than the other methods. Best viewed in color.

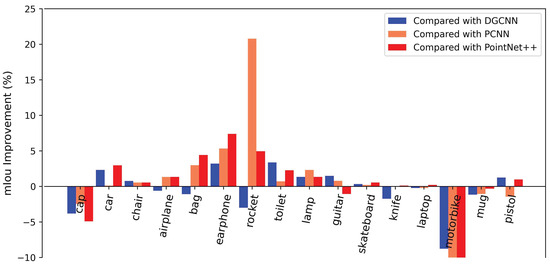

To further illustrate the effectiveness of the modules introduced in this paper, we conducted a comparative analysis of our method with three classic approaches, PointNet++, PCNN, and DGCNN, as shown in Figure 9.

Figure 9.

Performance Analysis. Per-class mIoU improvement of our AKDNet over DGCNN, PCNN, and PointNet++ on ShapeNet-Part dataset. Best viewed in color.

5. Experiments and Discussions on Point Cloud Classification

5.1. Dataset

ModelNet40 [64] is a comprehensive dataset for 3D shape classification and retrieval. It contains 12,311 CAD models from 40 categories, which are prealigned and scaled. The dataset is split into a training set with 9843 models and a test set with 2468 models. Each model in the dataset is a 3D mesh. ModelNet40 is commonly used for evaluating 3D shape classification and retrieval algorithms, as well as for other tasks like 3D object recognition and segmentation.

5.2. Training Details

Our network was optimized end-to-end using the SGD optimizer with a batch size of 80. The initial learning rate for the ModelNet40 dataset was set to 0.01. The network was trained for 250 epochs on the ModelNet40 dataset using the cosine annealing decay strategy. All experiments were implemented on the PyTorch platform using RTX 4090 GPUs.
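To make the schedule concrete, the sketch below evaluates the standard closed-form cosine annealing rule for the settings stated above (initial learning rate 0.01, 250 epochs). It is a minimal sketch, not our training script: the function name `cosine_annealing_lr`, the per-epoch update, and the minimum learning rate of 0 are assumptions, since those details are not specified here.

```python
import math

def cosine_annealing_lr(epoch, total_epochs=250, base_lr=0.01, min_lr=0.0):
    """Learning rate at a given epoch under cosine annealing (min_lr is an assumed value)."""
    cos_term = 1.0 + math.cos(math.pi * epoch / total_epochs)
    return min_lr + 0.5 * (base_lr - min_lr) * cos_term

# Learning rate at the start, midpoint, and end of training.
for epoch in (0, 125, 250):
    print(epoch, cosine_annealing_lr(epoch))
```

Under these assumptions the rate starts at 0.01, passes through roughly half that value at epoch 125, and decays to the minimum at epoch 250.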

5.3. Architecture

Unlike in the segmentation task, we reduced the three set abstraction (SA) layers in the PointNet++ module to two layers for the classification task. After that, the features were fed into the feature extension module. In the classification task, the spatial transform layer is not necessary, so the feature extension module contains only three EdgeConv layers. The rest of the structure is the same as in the part segmentation task.
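The sketch below illustrates the general form of an EdgeConv layer as introduced in DGCNN: for each point, edge features built from the point and its offsets to its k nearest neighbors are transformed and then aggregated by a channel-wise maximum. It is a simplified illustration under stated assumptions, not the layer configuration of AKDNet: the function name `edge_conv`, the toy input, and the single fixed linear map with ReLU (standing in for a learned MLP) are all hypothetical.

```python
import math

def edge_conv(features, k, weights):
    """One EdgeConv-style layer: max over neighbors of ReLU(W . [x_i, x_j - x_i])."""
    out = []
    for i, xi in enumerate(features):
        # Brute-force k nearest neighbors of point i in the current feature space.
        order = sorted((j for j in range(len(features)) if j != i),
                       key=lambda j: math.dist(xi, features[j]))
        per_edge = []
        for j in order[:k]:
            # Edge feature: the point itself concatenated with the offset to its neighbor.
            edge = list(xi) + [b - a for a, b in zip(xi, features[j])]
            # Stand-in for the learned MLP: one linear map followed by ReLU.
            per_edge.append([max(0.0, sum(w * e for w, e in zip(row, edge)))
                             for row in weights])
        # Channel-wise max aggregation over the selected edges.
        out.append([max(channel) for channel in zip(*per_edge)])
    return out

# Hypothetical toy input: four 2-D points and a weight matrix with one output channel.
pts = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
W = [[0.0, 0.0, 1.0, 1.0]]  # sums the components of the offset (x_j - x_i)
print(edge_conv(pts, k=2, weights=W))
```

Stacking several such layers, with neighbors recomputed in the updated feature space, is what lets each point's representation reflect progressively larger neighborhoods.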

5.4. Performance

The experimental results on the ModelNet40 dataset are provided in Table 4. They indicate that our method outperformed several recent methods and achieved better results than our baseline. This demonstrates that the proposed modules for point cloud feature enhancement are effective not only for part segmentation but also for classification.

Table 4.

Classification results on ModelNet40.

6. Conclusions

In this paper, we present AKDNet, a novel network designed to address the intricate task of 3D object part segmentation. Drawing inspiration from PointNet++ and DGCNN, we have introduced the keypoint-aware module, feature extension module, and attention-aware module to enhance segmentation performance. The keypoint-aware module uses a keypoint extraction strategy to obtain the inner shape cue of the input 3D point cloud. The feature extension module establishes relationships among the current points to obtain a more expressive feature representation. The attention-aware module calculates the contributions of the keypoint feature and the point feature to enhance the performance. To validate the effectiveness of the three proposed modules, we also conducted experiments on the task of point cloud classification. We obtained an mIoU of 85.83% on the ShapeNet-Part dataset and an accuracy of 93.0% on the ModelNet40 dataset. Qualitative and quantitative experimental results show that AKDNet achieves a reasonable performance improvement in 3D object part segmentation and classification.

Author Contributions

Conceptualization, F.Z. and Q.Z.; methodology, F.Z. and Q.Z.; software, Q.Z., H.Z., and S.L.; validation, H.Z. and S.L.; formal analysis, F.Z. and Q.Q.; investigation, F.Z. and Y.H.; resources, F.Z. and Q.Z.; data curation, Q.Z. and N.J.; writing—original draft preparation, F.Z.; writing—review and editing, Q.Q. and Y.H.; visualization, Q.Z.; supervision, F.Z.; project administration, F.Z. and X.C. All authors have read and agreed to the published version of the manuscript.

Funding

The research was funded by the BeiHang University Yunnan Innovation Institute Yunding Technology Plan (2021) of the Yunnan Provincial Key R&D Program (202103AN080001-003).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available in this article.

Acknowledgments

The authors thank the editor and the anonymous reviewers for their valuable suggestions. The authors also thank Ju Dai for her suggestions and discussion regarding this paper.

Conflicts of Interest

Author Qianfang Qi was employed by the company TravelSky Technology Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Pauly, M.; Gross, M.; Kobbelt, L.P. Efficient simplification of point-sampled surfaces. In Proceedings of the IEEE Visualization, VIS 2002, Boston, MA, USA, 27 October–1 November 2002; pp. 163–170.

- Rusinkiewicz, S. Estimating curvatures and their derivatives on triangle meshes. In Proceedings of the 2nd International Symposium on 3D Data Processing, Visualization and Transmission, 3DPVT 2004, Thessaloniki, Greece, 9 September 2004; pp. 486–493.

- Hoppe, H.; DeRose, T.; Duchamp, T.; McDonald, J.; Stuetzle, W. Surface reconstruction from unorganized points. In Proceedings of the 19th Annual Conference on Computer Graphics and Interactive Techniques, New York, NY, USA, 1 July 1992; pp. 71–78.

- Botsch, M.; Kobbelt, L. An intuitive framework for real-time freeform modeling. ACM Trans. Graph. 2004, 23, 630–634.

- Ohtake, Y.; Belyaev, A.; Seidel, H.P. Ridge-valley lines on meshes via implicit surface fitting. In ACM SIGGRAPH 2004 Papers; Association for Computing Machinery: New York, NY, USA, 2004; pp. 609–612.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. In Advances in Neural Information Processing Systems; Neural Information Processing Systems Foundation, Inc.: La Jolla, CA, USA, 2017; Volume 30, pp. 1–10.

- Maturana, D.; Scherer, S. Voxnet: A 3d convolutional neural network for real-time object recognition. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–3 October 2015; pp. 922–928.

- Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic graph cnn for learning on point clouds. ACM Trans. Graph. 2019, 38, 146.

- Su, H.; Maji, S.; Kalogerakis, E.; Learned-Miller, E. Multi-view convolutional neural networks for 3d shape recognition. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 945–953.

- Kazhdan, M.; Funkhouser, T.; Rusinkiewicz, S. Rotation invariant spherical harmonic representation of 3D shape descriptors. In Proceedings of the Symposium on Geometry Processing, Aachen, Germany, 23–25 June 2003; Volume 6, pp. 156–164.

- Lu, Y.; Sarkis, M.; Bi, N.; Lu, G. From Local to Holistic: Self-supervised Single Image 3D Face Reconstruction Via Multi-level Constraints. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 8368–8375.

- Wang, Y.; Solomon, J.M. Deep closest point: Learning representations for point cloud registration. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3523–3532.

- Thomas, H.; Qi, C.R.; Deschaud, J.E.; Marcotegui, B.; Goulette, F.; Guibas, L.J. Kpconv: Flexible and deformable convolution for point clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6411–6420.

- Li, Y.; Bu, R.; Sun, M.; Wu, W.; Di, X.; Chen, B. Pointcnn: Convolution on x-transformed points. In Advances in Neural Information Processing Systems; Neural Information Processing Systems Foundation, Inc.: La Jolla, CA, USA, 2018; Volume 31, pp. 1–11.

- Lin, Y.; Yan, Z.; Huang, H.; Du, D.; Liu, L.; Cui, S.; Han, X. Fpconv: Learning local flattening for point convolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4293–4302.

- Wiersma, R.; Nasikun, A.; Eisemann, E.; Hildebrandt, K. Deltaconv: Anisotropic point cloud learning with exterior calculus. arXiv 2021, arXiv:2111.08799.

- Zhang, K.; Hao, M.; Wang, J.; de Silva, C.W.; Fu, C. Linked dynamic graph cnn: Learning on point cloud via linking hierarchical features. arXiv 2019, arXiv:1904.10014.

- Zhou, H.; Feng, Y.; Fang, M.; Wei, M.; Qin, J.; Lu, T. Adaptive graph convolution for point cloud analysis. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 4965–4974.

- Wang, L.; Huang, Y.; Hou, Y.; Zhang, S.; Shan, J. Graph attention convolution for point cloud semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 10296–10305.

- Shi, W.; Rajkumar, R. Point-gnn: Graph neural network for 3d object detection in a point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1711–1719.

- Huang, C.Q.; Jiang, F.; Huang, Q.H.; Wang, X.Z.; Han, Z.M.; Huang, W.Y. Dual-graph attention convolution network for 3-D point cloud classification. IEEE Trans. Neural Netw. Learn. Syst. 2022, 99, 1–13.

- Graham, B.; Engelcke, M.; Van Der Maaten, L. 3d semantic segmentation with submanifold sparse convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9224–9232.

- Choy, C.; Gwak, J.; Savarese, S. 4d spatio-temporal convnets: Minkowski convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3075–3084.

- Tang, H.; Liu, Z.; Li, X.; Lin, Y.; Han, S. Torchsparse: Efficient point cloud inference engine. Proc. Mach. Learn. Syst. 2022, 4, 302–315.

- Tang, H.; Liu, Z.; Zhao, S.; Lin, Y.; Lin, J.; Wang, H.; Han, S. Searching efficient 3d architectures with sparse point-voxel convolution. In Computer Vision – ECCV 2020; Springer: Cham, Switzerland, 2020; pp. 685–702.

- Liu, Y.; Fan, B.; Xiang, S.; Pan, C. Relation-shape convolutional neural network for point cloud analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8895–8904.

- Song, W.; Zhang, L.; Tian, Y.; Fong, S.; Liu, J.; Gozho, A. CNN-based 3D object classification using Hough space of LiDAR point clouds. Hum.-Centric Comput. Inf. Sci. 2020, 10, 19.

- Fan, H.; Yu, X.; Ding, Y.; Yang, Y.; Kankanhalli, M. Pstnet: Point spatio-temporal convolution on point cloud sequences. arXiv 2022, arXiv:2205.13713.

- Lai, X.; Liu, J.; Jiang, L.; Wang, L.; Zhao, H.; Liu, S.; Qi, X.; Jia, J. Stratified transformer for 3d point cloud segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8500–8509.

- Yang, C.K.; Chen, M.H.; Chuang, Y.Y.; Lin, Y.Y. 2D-3D Interlaced Transformer for Point Cloud Segmentation with Scene-Level Supervision. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 977–987.

- Ibrahim, M.; Akhtar, N.; Anwar, S.; Mian, A. SAT3D: Slot Attention Transformer for 3D Point Cloud Semantic Segmentation. IEEE Trans. Intell. Transp. Syst. 2023, 24, 5456–5466.

- Schult, J.; Engelmann, F.; Hermans, A.; Litany, O.; Tang, S.; Leibe, B. Mask3D: Mask Transformer for 3D Semantic Instance Segmentation. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 8216–8223.

- Wu, X.; Lao, Y.; Jiang, L.; Liu, X.; Zhao, H. Point transformer v2: Grouped vector attention and partition-based pooling. In Advances in Neural Information Processing Systems; Neural Information Processing Systems Foundation, Inc.: La Jolla, CA, USA, 2022; Volume 35, pp. 33330–33342.

- Zhou, J.; Xiong, Y.; Chiu, C.; Liu, F.; Gong, X. SAT: Size-Aware Transformer for 3D Point Cloud Semantic Segmentation. arXiv 2023, arXiv:2301.06869.

- Cheng, H.X.; Han, X.F.; Xiao, G.Q. TransRVNet: LiDAR Semantic Segmentation With Transformer. IEEE Trans. Intell. Transp. Syst. 2023, 24, 5895–5907.

- Li, X.; Ding, H.; Zhang, W.; Yuan, H.; Pang, J.; Cheng, G.; Chen, K.; Liu, Z.; Loy, C.C. Transformer-based visual segmentation: A survey. arXiv 2023, arXiv:2304.09854.

- Sun, J.; Qing, C.; Tan, J.; Xu, X. Superpoint transformer for 3d scene instance segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 2393–2401.

- Du, R.; Ma, Z.; Xie, P.; He, Y.; Cen, H. PST: Plant segmentation transformer for 3D point clouds of rapeseed plants at the podding stage. ISPRS J. Photogramm. Remote Sens. 2023, 195, 380–392.

- Zhou, F.; Rao, J.; Shen, P.; Zhang, Q.; Qi, Q.; Li, Y. REGNet: Ray-Based Enhancement Grouping for 3D Object Detection Based on Point Cloud. Appl. Sci. 2023, 13, 6098.

- Zhao, H.; Jiang, L.; Jia, J.; Torr, P.H.; Koltun, V. Point transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 16259–16268.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. In Advances in Neural Information Processing Systems; Neural Information Processing Systems Foundation, Inc.: La Jolla, CA, USA, 2021; Volume 34, pp. 12077–12090.

- Guo, M.H.; Cai, J.X.; Liu, Z.N.; Mu, T.J.; Martin, R.R.; Hu, S.M. Pct: Point cloud transformer. Comput. Vis. Media 2021, 7, 187–199.

- Wang, Z.; Guo, J.; Zhang, C.; Wang, B. Multiscale feature enhancement network for salient object detection in optical remote sensing images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5634819.

- Liu, J.; Zhang, C.; Jiang, X. Imbalanced fault diagnosis of rolling bearing using improved MsR-GAN and feature enhancement-driven CapsNet. Mech. Syst. Signal Process. 2022, 168, 108664.

- Li, Y.; Bao, H.; Ge, Z.; Yang, J.; Sun, J.; Li, Z. Bevstereo: Enhancing depth estimation in multi-view 3d object detection with temporal stereo. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 1486–1494.

- Yan, X.; Gao, J.; Zheng, C.; Zheng, C.; Zhang, R.; Cui, S.; Li, Z. 2dpass: 2d priors assisted semantic segmentation on lidar point clouds. In Computer Vision – ECCV 2022; Springer: Cham, Switzerland, 2022; pp. 677–695.

- Kong, J.; Wang, H.; Yang, C.; Jin, X.; Zuo, M.; Zhang, X. A spatial feature-enhanced attention neural network with high-order pooling representation for application in pest and disease recognition. Agriculture 2022, 12, 500.

- Zheng, C.; Yan, X.; Zhang, H.; Wang, B.; Cheng, S.; Cui, S.; Li, Z. Beyond 3d siamese tracking: A motion-centric paradigm for 3d single object tracking in point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8111–8120.

- Hang, Y.; Boryczka, J.; Wu, N. Visible-light and near-infrared fluorescence and surface-enhanced Raman scattering point-of-care sensing and bio-imaging: A review. Chem. Soc. Rev. 2022, 51, 329–375.

- Song, Y.; He, F.; Duan, Y.; Liang, Y.; Yan, X. A kernel correlation-based approach to adaptively acquire local features for learning 3D point clouds. Comput.-Aided Des. 2022, 146, 103196.

- Zheng, C.; Yan, X.; Gao, J.; Zhao, W.; Zhang, W.; Li, Z.; Cui, S. Box-aware feature enhancement for single object tracking on point clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 13199–13208.

- Shi, R.; Xue, Z.; You, Y.; Lu, C. Skeleton merger: An unsupervised aligned keypoint detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 43–52.

- Tang, J.; Gong, Z.; Yi, R.; Xie, Y.; Ma, L. Lake-net: Topology-aware point cloud completion by localizing aligned keypoints. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1726–1735.

- Yue, K.; Sun, M.; Yuan, Y.; Zhou, F.; Ding, E.; Xu, F. Compact generalized non-local network. In Advances in Neural Information Processing Systems; Neural Information Processing Systems Foundation, Inc.: La Jolla, CA, USA, 2018; Volume 31, pp. 1–10.

- Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3d shapenets: A deep representation for volumetric shapes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1912–1920.

- Lee, S.; Jeon, M.; Kim, I.; Xiong, Y.; Kim, H.J. Sagemix: Saliency-guided mixup for point clouds. In Advances in Neural Information Processing Systems; Neural Information Processing Systems Foundation, Inc.: La Jolla, CA, USA, 2022; Volume 35, pp. 23580–23592.

- Yu, X.; Tang, L.; Rao, Y.; Huang, T.; Zhou, J.; Lu, J. Point-bert: Pre-training 3d point cloud transformers with masked point modeling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 19313–19322.

- Chen, J.; Kakillioglu, B.; Velipasalar, S. Background-aware 3-D point cloud segmentation with dynamic point feature aggregation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5703112.

- Yang, F.; Davoine, F.; Wang, H.; Jin, Z. Continuous conditional random field convolution for point cloud segmentation. Pattern Recognit. 2022, 122, 108357.

- Wang, H.; Tang, J.; Ji, J.; Sun, X.; Zhang, R.; Ma, Y.; Zhao, M.; Li, L.; Lv, T.; Ji, R.; et al. Beyond First Impressions: Integrating Joint Multi-modal Cues for Comprehensive 3D Representation. arXiv 2023, arXiv:2308.02982.

- Wu, C.; Zheng, J.; Pfrommer, J.; Beyerer, J. Attention-based Point Cloud Edge Sampling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 5333–5343.

- Zhou, W.; Jin, W.; Wang, Q.; Wang, Y.; Wang, D.; Hao, X.; Yu, Y. VTPNet for 3D deep learning on point cloud. arXiv 2023, arXiv:2305.06115.

- Yi, L.; Kim, V.G.; Ceylan, D.; Shen, I.C.; Yan, M.; Su, H.; Lu, C.; Huang, Q.; Sheffer, A.; Guibas, L. A scalable active framework for region annotation in 3d shape collections. ACM Trans. Graph. 2016, 35, 210.

- Sheshappanavar, S.V.; Kambhamettu, C. Dynamic local geometry capture in 3d point cloud classification. In Proceedings of the 2021 4th International Conference on Multimedia Information Processing and Retrieval (MIPR), Tokyo, Japan, 22–24 March 2021; pp. 158–164.

- Yavartanoo, M.; Hung, S.H.; Neshatavar, R.; Zhang, Y.; Lee, K.M. Polynet: Polynomial neural network for 3d shape recognition with polyshape representation. In Proceedings of the 2021 International Conference on 3D Vision (3DV), London, UK, 1–3 December 2021; pp. 1014–1023.

- Qian, G.; Hammoud, H.; Li, G.; Thabet, A.; Ghanem, B. Assanet: An anisotropic separable set abstraction for efficient point cloud representation learning. In Advances in Neural Information Processing Systems; Neural Information Processing Systems Foundation, Inc.: La Jolla, CA, USA, 2021; Volume 34, pp. 28119–28130.

- Berg, A.; Oskarsson, M.; O’Connor, M. Points to patches: Enabling the use of self-attention for 3d shape recognition. In Proceedings of the 2022 26th International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 21–25 August 2022; pp. 528–534.

- Zhang, R.; Guo, Z.; Gao, P.; Fang, R.; Zhao, B.; Wang, D.; Qiao, Y.; Li, H. Point-m2ae: Multi-scale masked autoencoders for hierarchical point cloud pre-training. In Advances in Neural Information Processing Systems; Neural Information Processing Systems Foundation, Inc.: La Jolla, CA, USA, 2022; Volume 35, pp. 27061–27074.

- Yan, S.; Yang, Y.; Guo, Y.; Pan, H.; Wang, P.S.; Tong, X.; Liu, Y.; Huang, Q. 3D Feature Prediction for Masked-AutoEncoder-Based Point Cloud Pretraining. arXiv 2023, arXiv:2304.06911.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).