Ensemble-NQG-T5: Ensemble Neural Question Generation Model Based on Text-to-Text Transfer Transformer

Abstract

1. Introduction

2. Related Work

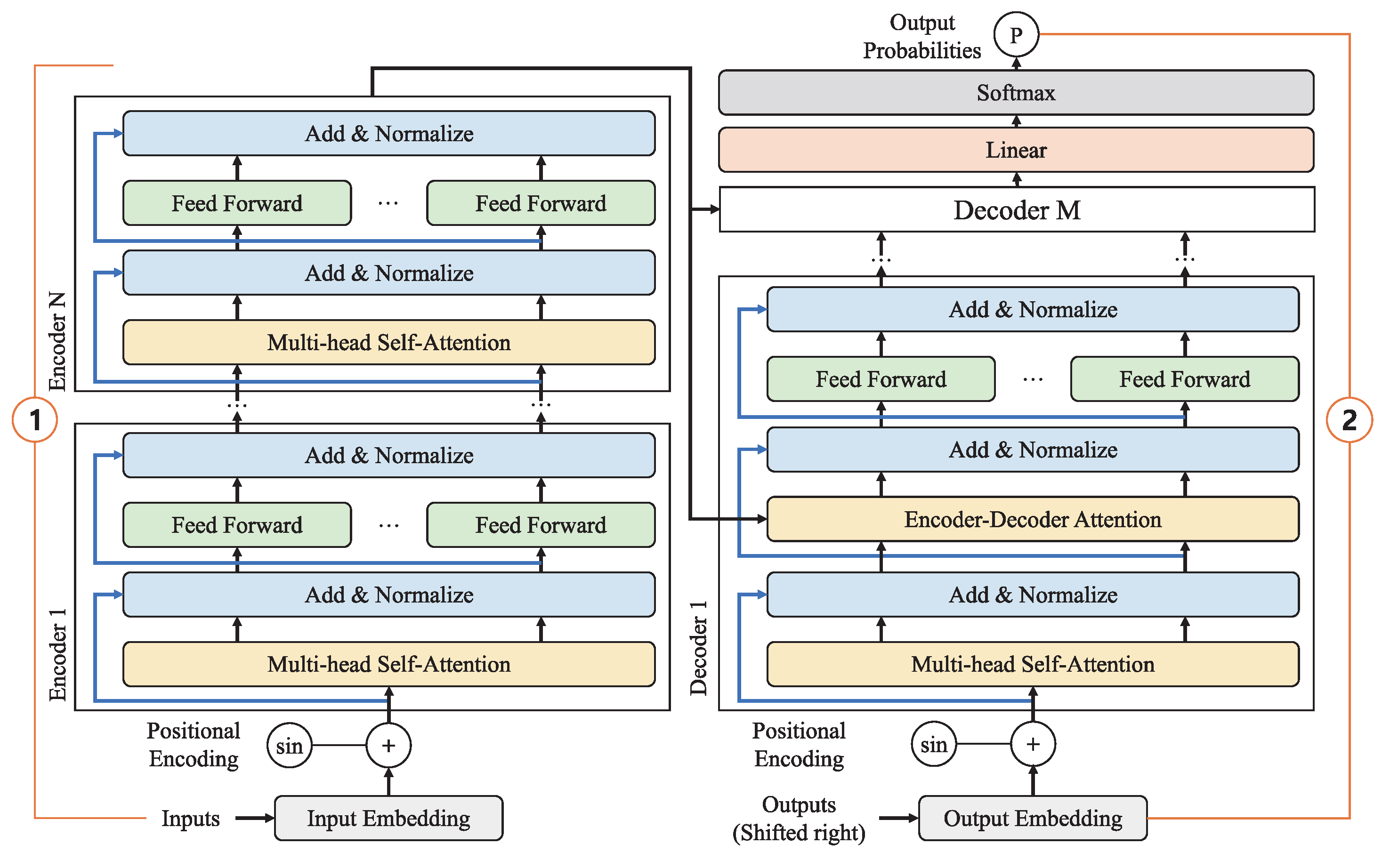

2.1. Pretrained Models

2.2. Evaluation Metrics of NQG
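The results tables report BLEU-N and ROUGE-L. Below is a minimal, self-contained sketch of how these two metrics are typically computed for one generated question against one reference question. BLEU-N (Papineni et al.) is taken as the brevity-penalized geometric mean of clipped n-gram precisions. ROUGE-L (Lin) is taken as an F-measure over the longest common subsequence (LCS).

Several details are assumptions, not the toolkit behind the reported numbers:
- whitespace tokenization;
- a single reference;
- no BLEU smoothing;
- the value of N (`max_n`);
- β = 1.2 for ROUGE-L, a value used by the widely used coco-caption evaluation code.

The helper names are illustrative.

```python
import math
from collections import Counter
from typing import List


def ngram_counts(tokens: List[str], n: int) -> Counter:
    """Count the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate: str, reference: str, max_n: int = 4) -> float:
    """Sentence-level BLEU-N against one reference, without smoothing.

    Geometric mean of clipped n-gram precisions for n = 1..max_n, times the
    brevity penalty. Any zero precision makes the score 0.
    """
    cand, ref = candidate.split(), reference.split()  # assumption: whitespace tokens
    if not cand:
        return 0.0
    log_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngram_counts(cand, n)
        ref_counts = ngram_counts(ref, n)
        total = sum(cand_counts.values())
        # Clip each candidate n-gram count at its count in the reference.
        clipped = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        if clipped == 0:  # also covers candidates with no n-grams of this order
            return 0.0
        log_sum += math.log(clipped / total)
    # Brevity penalty: 1 if the candidate is longer than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(log_sum / max_n)


def lcs_length(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence, by dynamic programming."""
    dp = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            if a[i - 1] == b[j - 1]:
                dp[i][j] = dp[i - 1][j - 1] + 1
            else:
                dp[i][j] = max(dp[i - 1][j], dp[i][j - 1])
    return dp[len(a)][len(b)]


def rouge_l(candidate: str, reference: str, beta: float = 1.2) -> float:
    """ROUGE-L F-measure: ((1 + beta^2) * P * R) / (R + beta^2 * P) on the LCS."""
    cand, ref = candidate.split(), reference.split()
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)
    recall = lcs / len(ref)
    return (1 + beta ** 2) * precision * recall / (recall + beta ** 2 * precision)
```

For example, `rouge_l("the cat sat", "the cat sat on the mat")` gives about 0.629 with β = 1.2. `sentence_bleu` on the same pair is 0, because a three-token candidate has no 4-gram. This zero-precision effect is why sentence-level BLEU is often smoothed or reported for a smaller N.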

3. Ensemble-NQG-T5

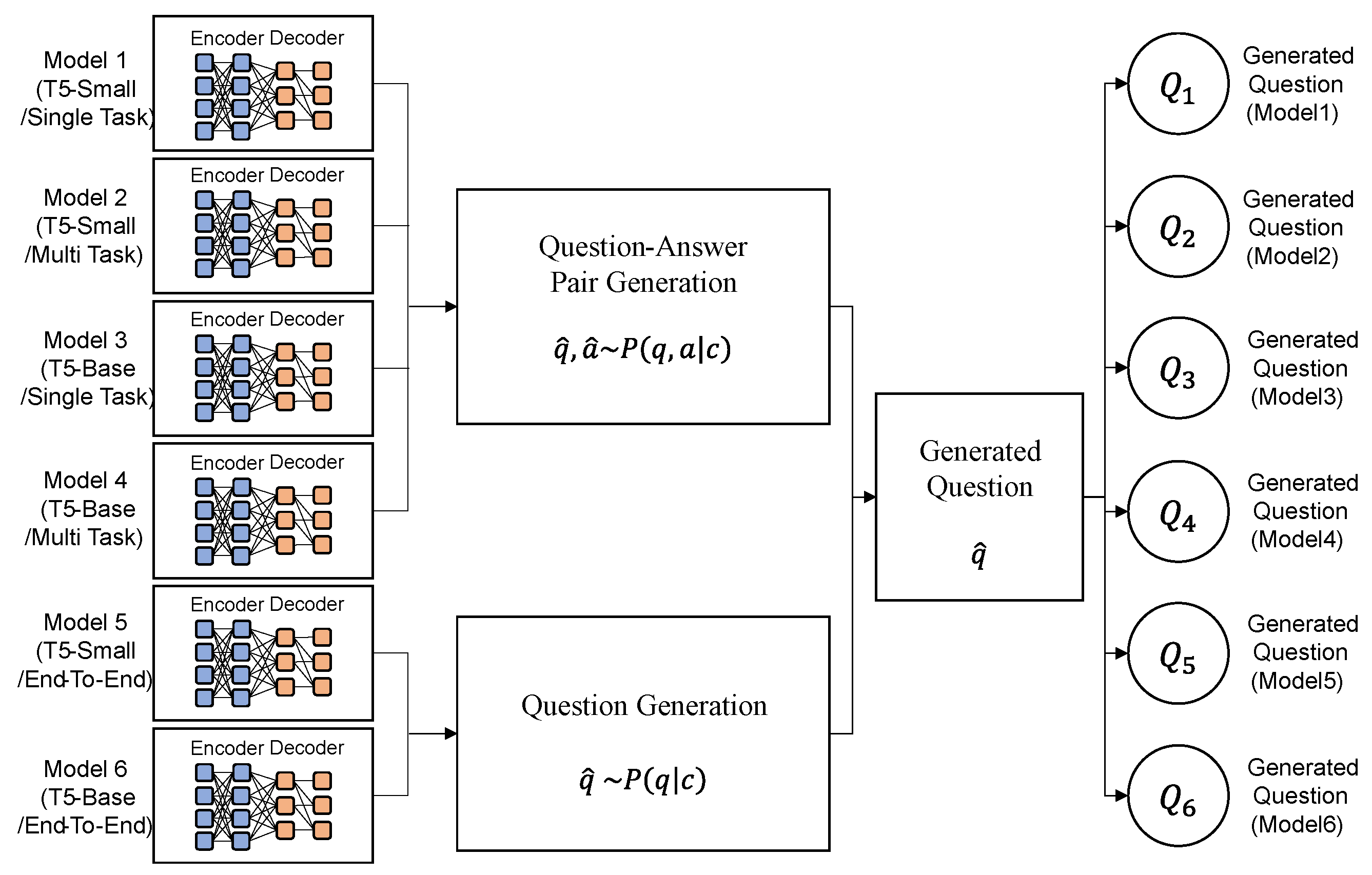

3.1. Overview

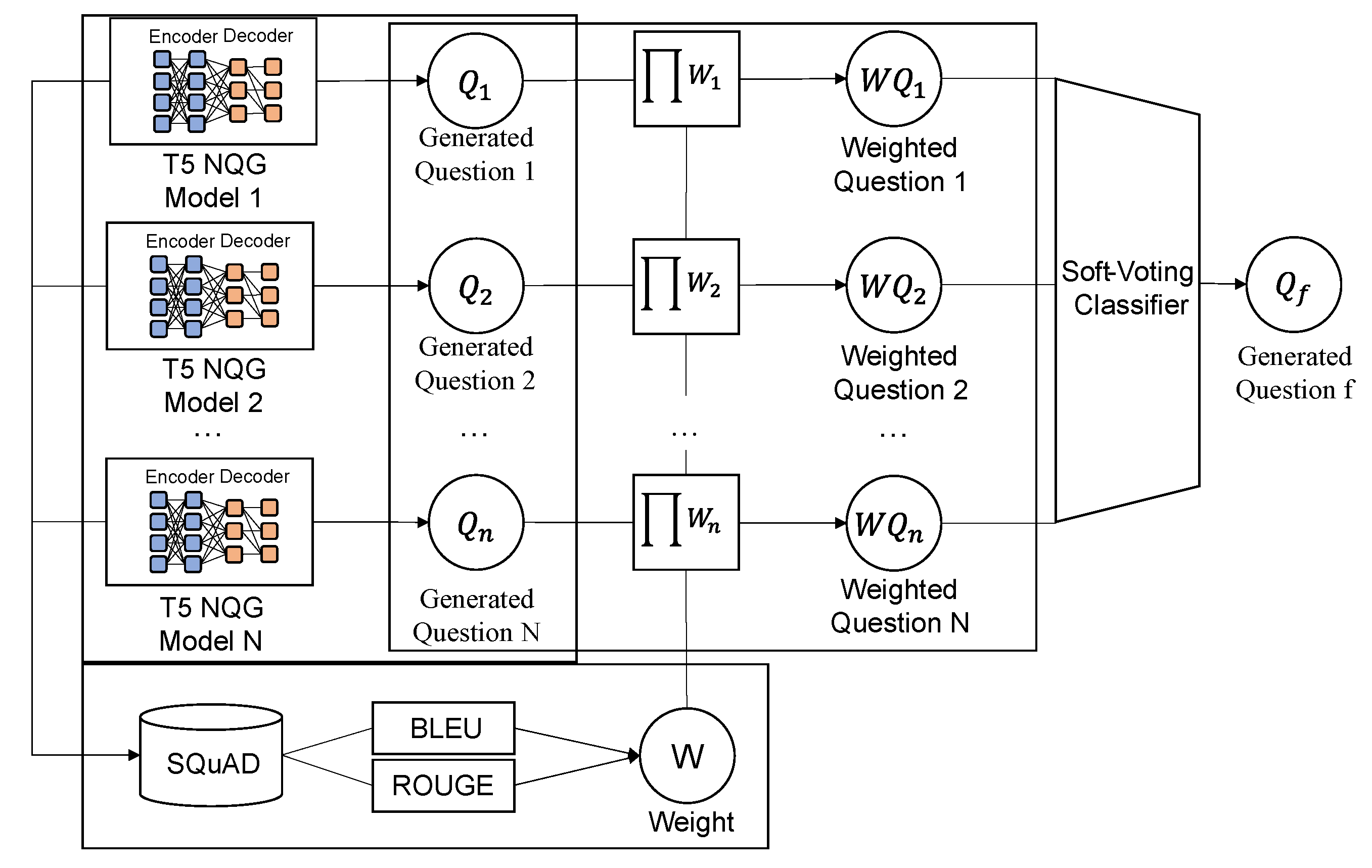

3.2. Soft-Voting Classifier

Algorithm 1: Soft-Voting Classifier of Ensemble-NQG-T5
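Algorithm 1 is titled a soft-voting classifier. For reference, the sketch below shows the standard soft-voting rule. Each model outputs a probability distribution over the same set of candidates. The distributions are averaged (optionally weighted), and the candidate with the highest mean probability wins. A hard (majority) vote is included for contrast.

This is a generic illustration, not the authors' Algorithm 1. Treating each candidate question as a "class" scored by every T5 model is an assumption, and `soft_vote` and `hard_vote` are hypothetical helper names.

```python
from typing import List, Optional, Sequence, Tuple


def soft_vote(
    prob_vectors: Sequence[Sequence[float]],
    weights: Optional[Sequence[float]] = None,
) -> Tuple[int, List[float]]:
    """Average per-class probabilities across models and pick the argmax.

    prob_vectors[m][k] is model m's probability for candidate/class k; every
    model must score the same ordered list of candidates.
    weights[m] is an optional non-negative weight for model m (uniform if None).
    Returns the index of the winning class and the averaged distribution.
    """
    if not prob_vectors:
        raise ValueError("need at least one model")
    n_classes = len(prob_vectors[0])
    if any(len(p) != n_classes for p in prob_vectors):
        raise ValueError("all models must score the same candidates")
    if weights is None:
        weights = [1.0] * len(prob_vectors)
    if len(weights) != len(prob_vectors) or sum(weights) <= 0:
        raise ValueError("need one weight per model with a positive total")
    total = sum(weights)
    # Weighted mean of each class's probability over all models.
    avg = [
        sum(w * p[k] for w, p in zip(weights, prob_vectors)) / total
        for k in range(n_classes)
    ]
    # Ties resolve to the lowest index, because max() keeps the first maximum.
    winner = max(range(n_classes), key=lambda k: avg[k])
    return winner, avg


def hard_vote(prob_vectors: Sequence[Sequence[float]]) -> int:
    """Majority vote over each model's argmax, for comparison (ties -> lowest index)."""
    n_classes = len(prob_vectors[0])
    votes = [0] * n_classes
    for p in prob_vectors:
        votes[max(range(n_classes), key=lambda k: p[k])] += 1
    return max(range(n_classes), key=lambda k: votes[k])


if __name__ == "__main__":
    # Three hypothetical models scoring two candidate questions.
    probs = [[0.9, 0.1], [0.4, 0.6], [0.45, 0.55]]
    print("hard vote:", hard_vote(probs))
    print("soft vote:", soft_vote(probs))
```

In this example, two of the three models prefer candidate 1, so a hard vote picks it. The one confident model pulls the average toward candidate 0, so the soft vote picks candidate 0. Letting model confidence, not just model count, decide the outcome is the usual reason to prefer soft over hard voting.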

4. Experimental Results and Discussion

4.1. Dataset and Experimental Setup

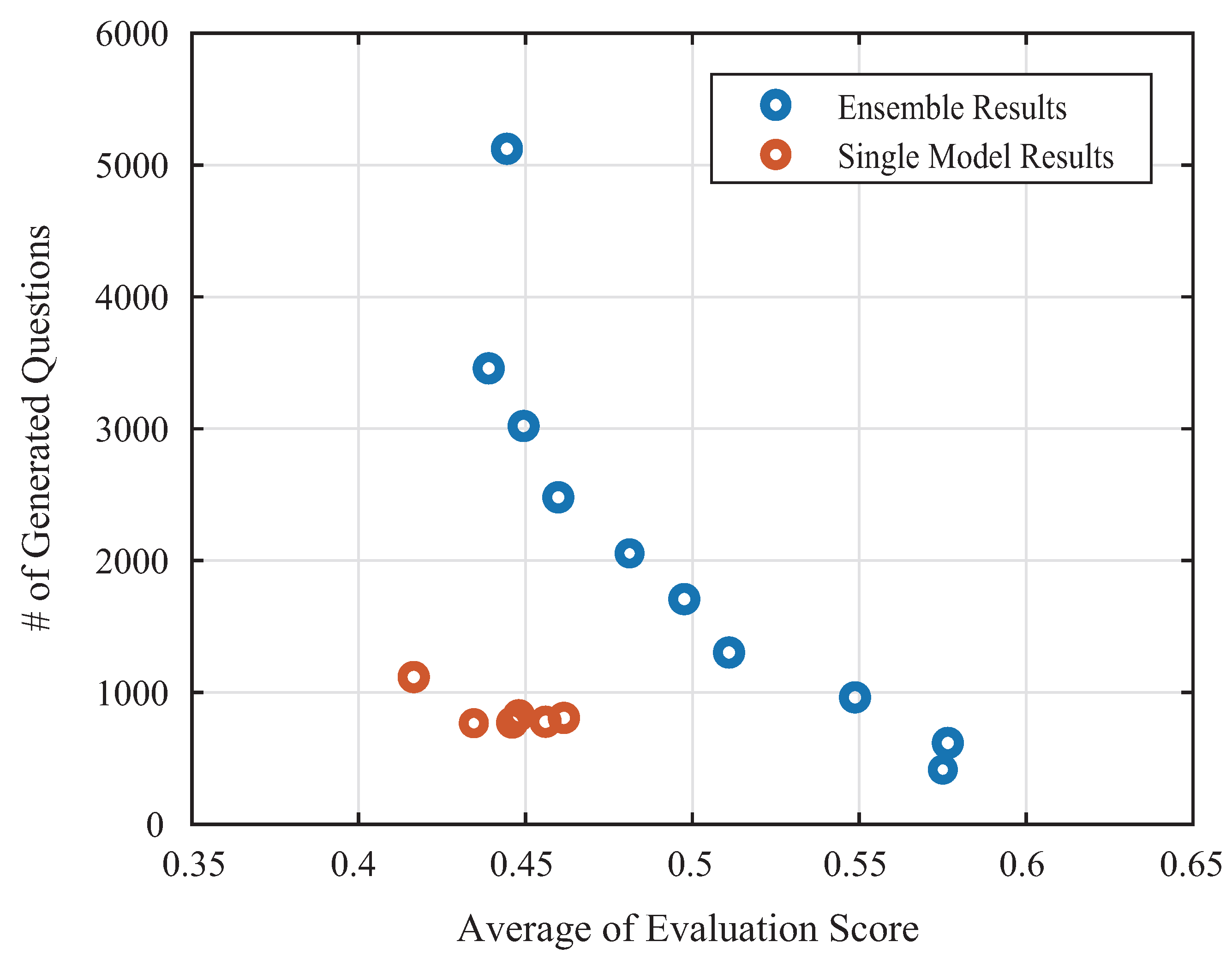

4.2. Results

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

- Adamopoulou, E.; Moussiades, L. Chatbots: History, technology, and applications. Mach. Learn. Appl. 2020, 2, 1–18.

- Albayrak, N.; Ozdemir, A.; Zeydan, E. An overview of artificial intelligence based chatbots and an example chatbot application. In Proceedings of the 2018 26th Signal Processing and Communications Applications Conference, Izmir, Turkey, 2–5 May 2018.

- Microsoft. Available online: https://luis.ai/ (accessed on 21 November 2022).

- IBM. Available online: https://www.ibm.com/watson/developercloud/conversation.html/ (accessed on 21 November 2022).

- Google. Available online: https://www.api.ai/ (accessed on 21 November 2022).

- Rasa Technologies Inc. Available online: https://rasa.com/ (accessed on 21 November 2022).

- Pan, L.; Lei, W.; Chua, T.-S.; Kan, M.-Y. Recent Advances in Neural Question Generation. arXiv 2019, arXiv:1905.08949.

- Zhou, Q.; Yang, N.; Wei, F.; Tan, C.; Bao, H.; Zhou, M. Neural Question Generation from Text: A Preliminary Study. In Proceedings of the 6th National CCF Conference on Natural Language Processing and Chinese Computing, Dalian, China, 8–12 November 2017.

- Qiu, X.; Sun, T.; Xu, Y.; Shao, Y.; Dai, N.; Huang, X. Pre-trained models for natural language processing: A survey. Sci. China Tech. Sci. 2020, 63, 1872–1897.

- Mulder, W.D.; Bethard, S.; Moens, M.-F. A survey on the application of recurrent neural networks to statistical language modeling. Comput. Speech Lang. 2015, 30, 61–98.

- Duan, N.; Tang, D.; Chen, P.; Zhou, M. Question Generation for Question Answering. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017.

- Du, X.; Shao, J.; Cardie, C. Learning to Ask: Neural Question Generation for Reading Comprehension. arXiv 2017, arXiv:1705.00106v1.

- Wang, Z.; Lan, A.S.; Nie, W.; Waters, A.E.; Grimaldi, P.J.; Baraniuk, R.G. QG-Net: A Data-Driven Question Generation Model for Educational Content. In Proceedings of the Fifth Annual ACM Conference on Learning at Scale, London, UK, 26–28 June 2018.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT), Minneapolis, MN, USA, 2–7 June 2019.

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. Available online: https://blog.openai.com/language-unsupervised (accessed on 21 November 2022).

- Chan, Y.H.; Fan, Y.C. BERT for Question Generation. In Proceedings of the 12th International Conference on Natural Language Generation, Tokyo, Japan, 29 October–1 November 2019.

- Lopez, L.E.; Cruz, D.K.; Cruz, J.C.B.; Cheng, C. Simplifying Paragraph-level Question Generation via Transformer Language Models. In Proceedings of the PRICAI 2021: Trends in Artificial Intelligence, Hanoi, Vietnam, 8–12 November 2021.

- Murakhovs’ka, L.; Wu, C.-S.; Laban, P.; Niu, T.; Liu, W.; Xiong, C. MixQG: Neural Question Generation with Mixed Answer Types. In Proceedings of the Conference NAACL, Seattle, WA, USA, 10–15 July 2022.

- Fei, Z.; Zhang, Q.; Gui, T.; Liang, D.; Wang, S.; Wu, W.; Huang, X. CQG: A Simple and Effective Controlled Generation Framework for Multi-hop Question Generation. In Proceedings of the 60th Annual Meeting of ACL, Dublin, Ireland, 22–27 May 2022.

- Lyu, C.; Shang, L.; Graham, Y.; Foster, J.; Jiang, X.; Liu, Q. Improving Unsupervised Question Answering via Summarization-Informed Question Generation. In Proceedings of the Conference EMNLP, Punta Cana, Dominican Republic, 7–11 November 2021.

- Seo, M.; Kembhavi, A.; Farhadi, A.; Hajishirzi, H. Bi-Directional Attention Flow for Machine Comprehension. arXiv 2018, arXiv:1611.01603.

- Yu, A.W.; Dohan, D.; Luong, M.-T. QANet: Combining Local Convolution with Global Self-Attention for Reading Comprehension. arXiv 2018, arXiv:1804.09541.

- Hu, M.; Peng, Y.; Huang, Z.; Qiu, X.; Wei, F.; Zhou, M. Reinforced Mnemonic Reader for Machine Reading Comprehension. arXiv 2018, arXiv:1705.02798.

- Aniol, A.; Pietron, M.; Duda, J. Ensemble approach for natural language question answering problem. In Proceedings of the 2019 Seventh International Symposium on Computing and Networking Workshops (CANDARW), Nagasaki, Japan, 26–29 November 2019.

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 1–67.

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.-J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 7–12 July 2002.

- Lin, C.Y. ROUGE: A package for automatic evaluation of summaries. In Proceedings of the ACL-04 Workshop (Text Summarization Branches Out), Barcelona, Spain, 25–26 July 2004.

- Yang, Z.; Dai, Z.; Yang, Y.; Carbonell, J.; Salakhutdinov, R.; Le, Q.V. XLNet: Generalized autoregressive pretraining for language understanding. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A robustly optimized BERT pretraining approach. arXiv 2019, arXiv:1907.11692.

- Song, K.; Tan, X.; Qin, T.; Lu, J.; Liu, T.-Y. MASS: Masked sequence to sequence pre-training for language generation. In Proceedings of the International Conference on Machine Learning (ICML), Long Beach, CA, USA, 10–15 June 2019.

- Lewis, M.; Liu, Y.; Goyal, N.; Ghazvininejad, M.; Mohamed, A.; Levy, O.; Stoyanov, V.; Zettlemoyer, L. BART: Denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension. arXiv 2019, arXiv:1910.13461.

- Liu, X.; He, P.; Chen, W.; Gao, J. Multi-Task Deep Neural Networks for Natural Language Understanding. arXiv 2019, arXiv:1901.11504.

- Wang, A.; Singh, A.; Michael, J.; Hill, F.; Levy, O.; Bowman, S.R. GLUE: A Multi-Task Benchmark and Analysis Platform for Natural Language Understanding. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018.

- Wang, A.; Pruksachatkun, Y.; Nangia, N.; Singh, A.; Michael, J.; Hill, F.; Levy, O.; Bowman, S. SuperGLUE: A Stickier Benchmark for General-Purpose Language Understanding Systems. In Proceedings of the 33rd Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019.

- Rajpurkar, P.; Zhang, J.; Lopyrev, K.; Liang, P. SQuAD: 100,000+ Questions for Machine Comprehension of Text. arXiv 2016, arXiv:1606.05250.

- Chan, Y.H.; Fan, Y.C. A Recurrent BERT-based Model for Question Generation. In Proceedings of the Second Workshop on Machine Reading for Question Answering, Hong Kong, China, 4 November 2019.

- Lopez, L.E.; Cruz, D.K.C.; Cruz, J.C.B.; Cheng, C. Transformer-based End-to-End Question Generation. arXiv 2020, arXiv:2005.01107.

- Patil, S. Question Generation Using Transformers. Available online: https://github.com/patil-suraj/question_generation/ (accessed on 21 November 2022).

- Singhal, A. Modern Information Retrieval: A Brief Overview. IEEE Data Eng. Bull. 2001, 24, 35–43.

- Rajpurkar, P.; Jia, R.; Liang, P. Know What You Don’t Know: Unanswerable Questions for SQuAD. arXiv 2018, arXiv:1806.03822.

- Nema, P.; Khapra, M.M. Towards a Better Metric for Evaluating Question Generation Systems. In Proceedings of the Conference EMNLP, Brussels, Belgium, 31 October–4 November 2018.

- Ji, T.; Lyu, C.; Jones, G.; Zhou, L.; Graham, Y. QAScore-An Unsupervised Unreferenced Metric for the Question Generation Evaluation. Entropy 2022, 24, 1154.

- Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.Q.; Artzi, Y. BERTScore: Evaluating Text Generation with BERT. In Proceedings of the ICLR, Virtual Event, 27–30 April 2020.

- Xu, W.; Napoles, C.; Pavlick, E.; Chen, Q.; Callison-Burch, C. Optimizing Statistical Machine Translation for Text Simplification. Trans. ACL 2016, 4, 401–415.

| Model Number | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| Type | Single Task | Multi Task | Single Task | Multi Task | End-to-End | |
| Task | T5 Small | T5 Base | T5 Small | T5 Base | | |
| Model Size | 230.8 MB | 850.3 MB | 230.8 MB | 850.3 MB | | |

| Model Number | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| BLEU-N | 0.447 | 0.465 | 0.476 | 0.482 | 0.426 | 0.471 |
| ROUGE-L | 0.422 | 0.427 | 0.436 | 0.441 | 0.407 | 0.425 |
| # of GQ (generated questions) | 767 | 772 | 778 | 807 | 1117 | 827 |

| Mutual Similarity Threshold | BLEU-N | ROUGE-L | # of GQ (generated questions) |
|---|---|---|---|
| 0.1 | 0.556 | 0.595 | 415 |
| 0.2 | 0.555 | 0.599 | 617 |
| 0.3 | 0.546 | 0.552 | 963 |
| 0.4 | 0.517 | 0.505 | 1303 |
| 0.5 | 0.522 | 0.473 | 1708 |
| 0.6 | 0.517 | 0.445 | 2055 |
| 0.7 | 0.490 | 0.429 | 2480 |
| 0.8 | 0.472 | 0.421 | 2945 |
| 0.9 | 0.461 | 0.416 | 3458 |
| 1.0 | 0.462 | 0.427 | 5123 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hwang, M.-H.; Shin, J.; Seo, H.; Im, J.-S.; Cho, H.; Lee, C.-K. Ensemble-NQG-T5: Ensemble Neural Question Generation Model Based on Text-to-Text Transfer Transformer. Appl. Sci. 2023, 13, 903. https://doi.org/10.3390/app13020903
