Bet-GAT: An Efficient Centrality-Based Graph Attention Model for Semi-Supervised Node Classification

Abstract

1. Introduction

2. Literature Survey

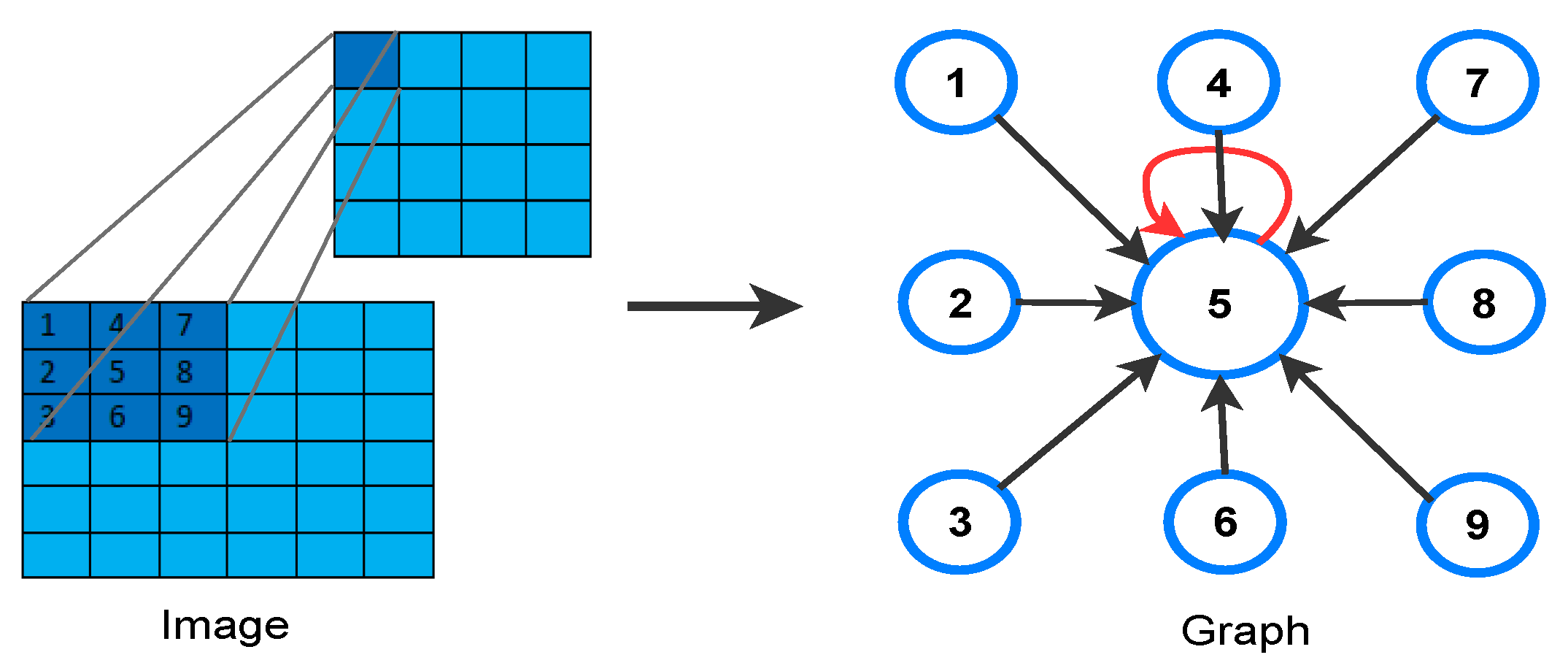

2.1. Graph Convolutional Neural Networks (GCNs)

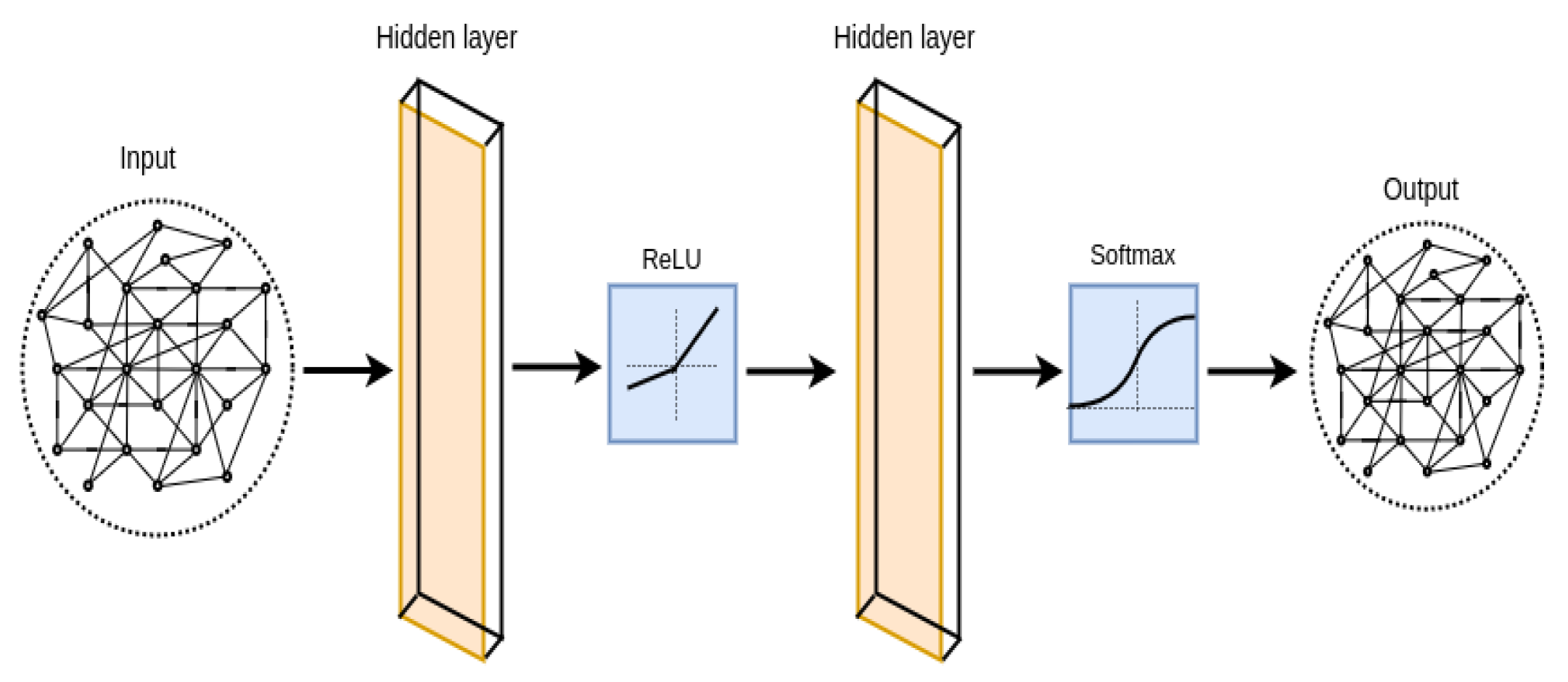

2.2. GCN to GAT Transition

3. Proposed Methodology

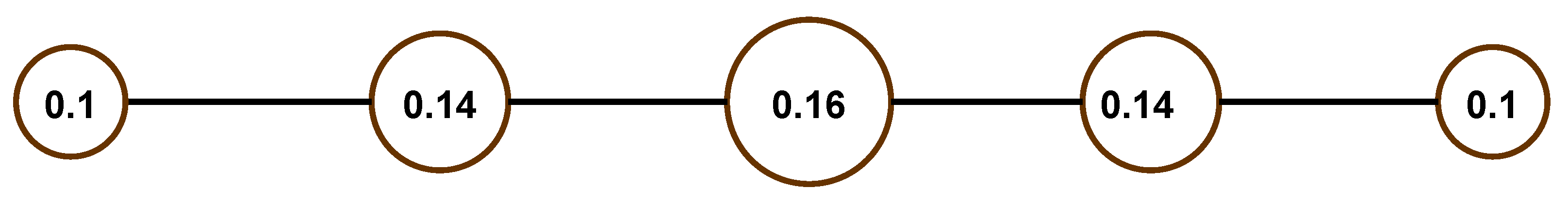

3.1. Background of Network Centrality Measures

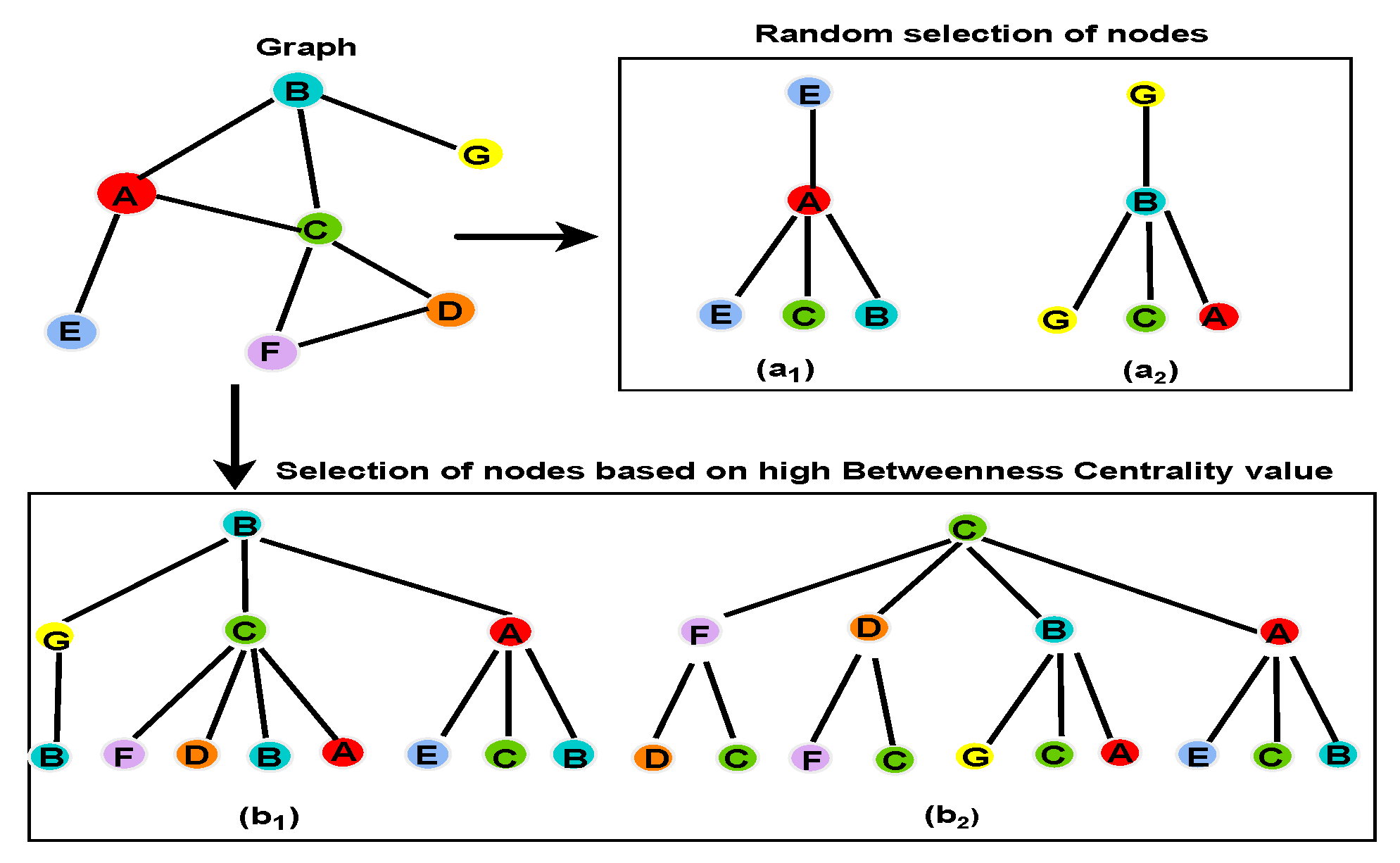

3.2. Justification of Betweenness Centrality Based Training

- (1)

- A subset of training nodes selected based on betweenness centrality improves training efficiency.

- (2)

- The probability of selecting the same subset of nodes by a traditional GAT/GCN model (by default random selection of training nodes) is near zero.

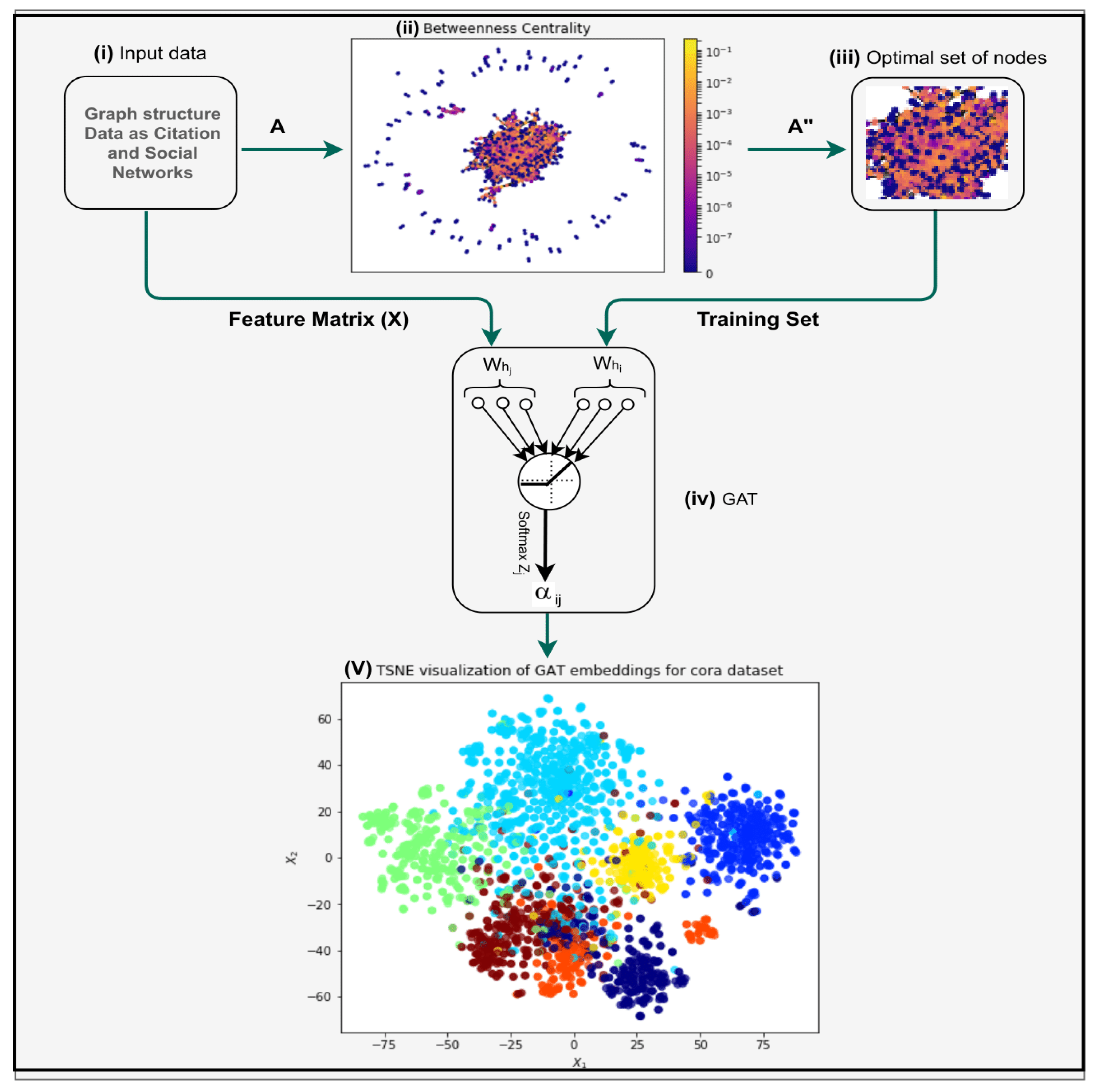

3.3. Proposed Framework

3.4. Algorithmic Description of the Problem

| Algorithm 1 Pseudocode of Bet-GAT(A, X). |

Input: A is the edge list of the network; X is the feature vector matrix corresponding to the dataset containing node labels. Output: Classification of nodes for the given network. dataset = sg.dataset(); ▹ Loading dataset centrality = nx.getcentrality(A); ▹ Get centrality nodelabels = extractlabels(X); ▹ Extracting labels from Feature Vector matrix nodesubjects = merge (A.nodes, nodelabels); ▹ Combined node ids with labels for each node in nodesubjects, centrality do nodesubjects1 = merge(nodesubjects, centrality); nodesubjects1 = sortbycentrality(nodesubjects1); end for for i in range(trainsize) do trainset[i] = nodesubjects[i]; end for for i in range(validationsize) and not in trainset do validationset[i] = nodesubjects[i]; end for for i in range(testsetsize) and not in trainset do testset[i] = nodesubjects[i]; end for trainset = encode(trainset); ▹ One hot encoding validationset = encode(validationset); ▹ One hot encoding testset = encode(testset); ▹ One hot encoding GAT = GAT (layersizes = [128, 32], activations = [“relu”, “relu”], generator = generator, dropout = 0.5, attention head = 32); ▹ Defining GAT layer predictions = layers.Dense(units = trainsettargets, activation = “softmax”)(X); ▹ Defining Prediction layer model = Model(inputs = X, outputs = predictions); ▹ Defining the model model.compile (optimizer = Adam(learningrate = 0.0001), loss = categoricalcrossentropy, metrics = [“accurracy”]); model.fit( trainset, epochs, validationdata, callbacks = till stopping criteria); ▹ Performing Training |

3.5. Dataset Description

- Citation networks: In citation networks, the nodes and edges represent documents and citation links (undirected), respectively. We consider following three datasets in the citation networks.(1). Cora: The Cora dataset comprises 2708 scientific publications. Each publication is further classified into 1 of 7 classes.(2). Citeseer: The Citeseer dataset contains 3312 scientific publications. Each publication is categorised into 1 of 6 classes.(3). PubMed: The PubMed dataset contains 19,717 scientific publications. Each publication is further classified into one of three classes.

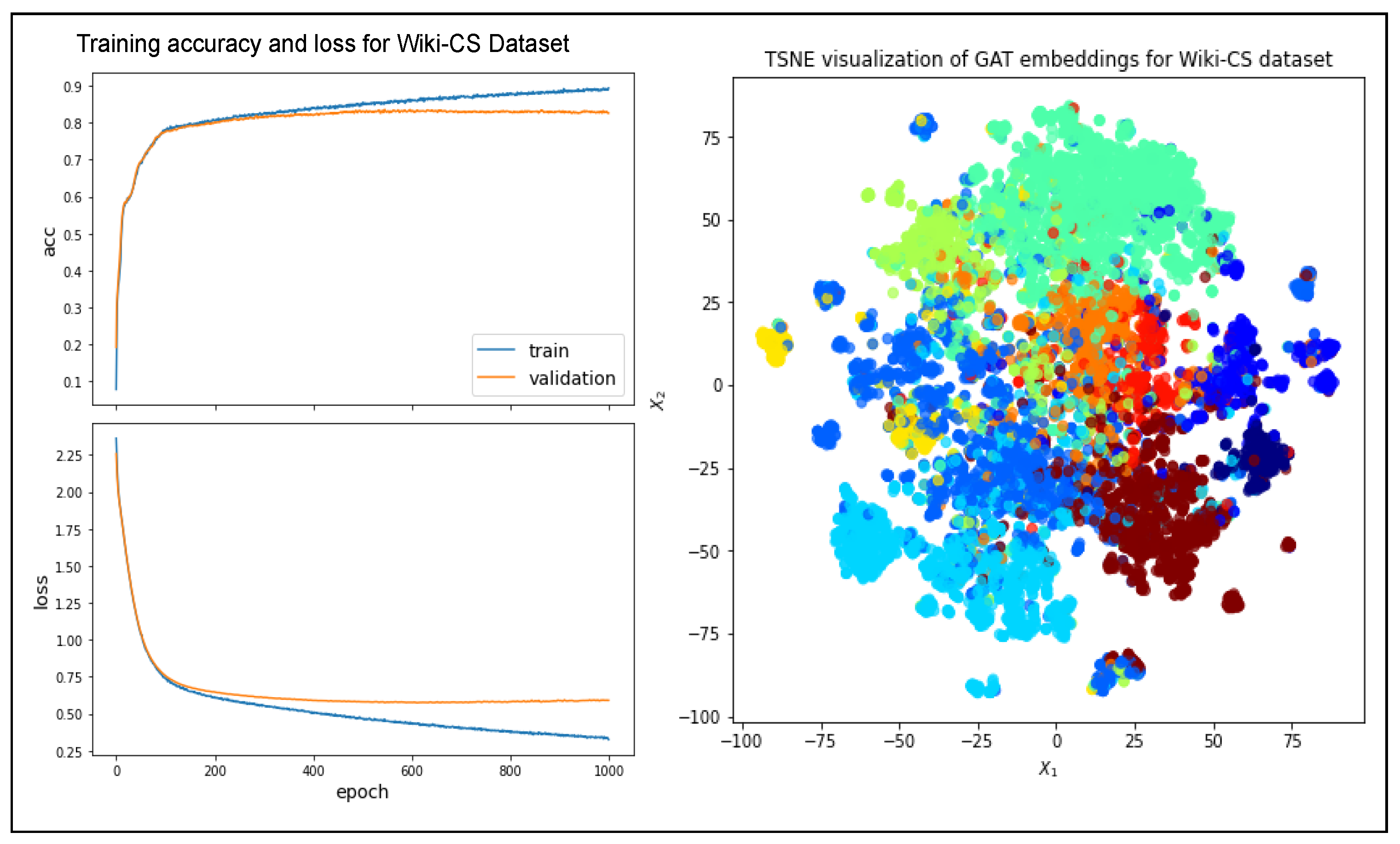

- Wikipedia-based [43]: Wiki-CS is a relatively new dataset from Wikipedia. The nodes and edges represent computer science articles and hyperlinks, respectively. The dataset contains 11,701 computer science articles which are categorised into 10 classes.

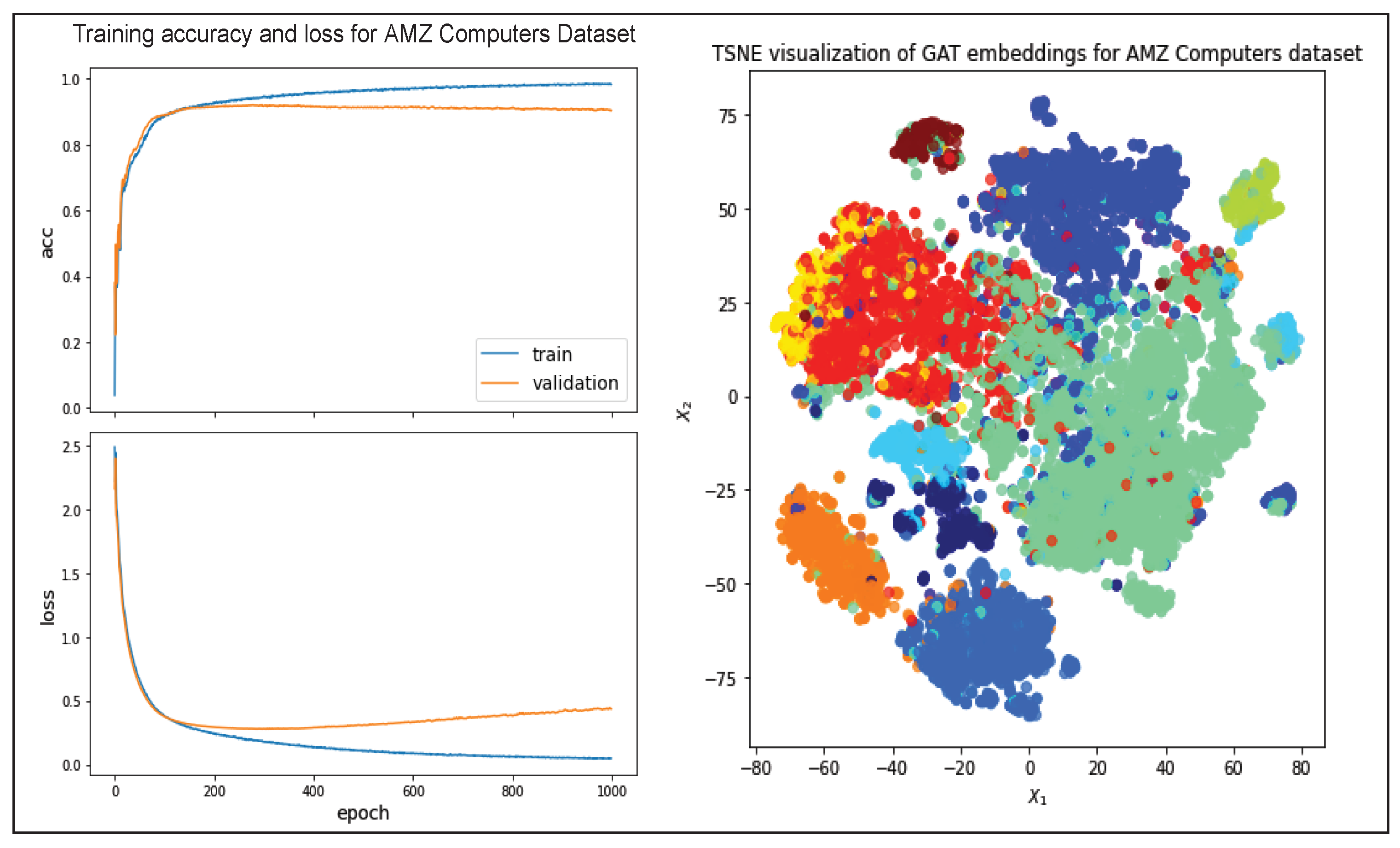

- AMZ computers [44]: AMZ Computers is an Amazon co-purchase graph. The nodes and edges of this graph represent items and co-purchased relation respectively. The dataset contains 13,752 products which are categorised into 10 classes.

4. Results and Analysis

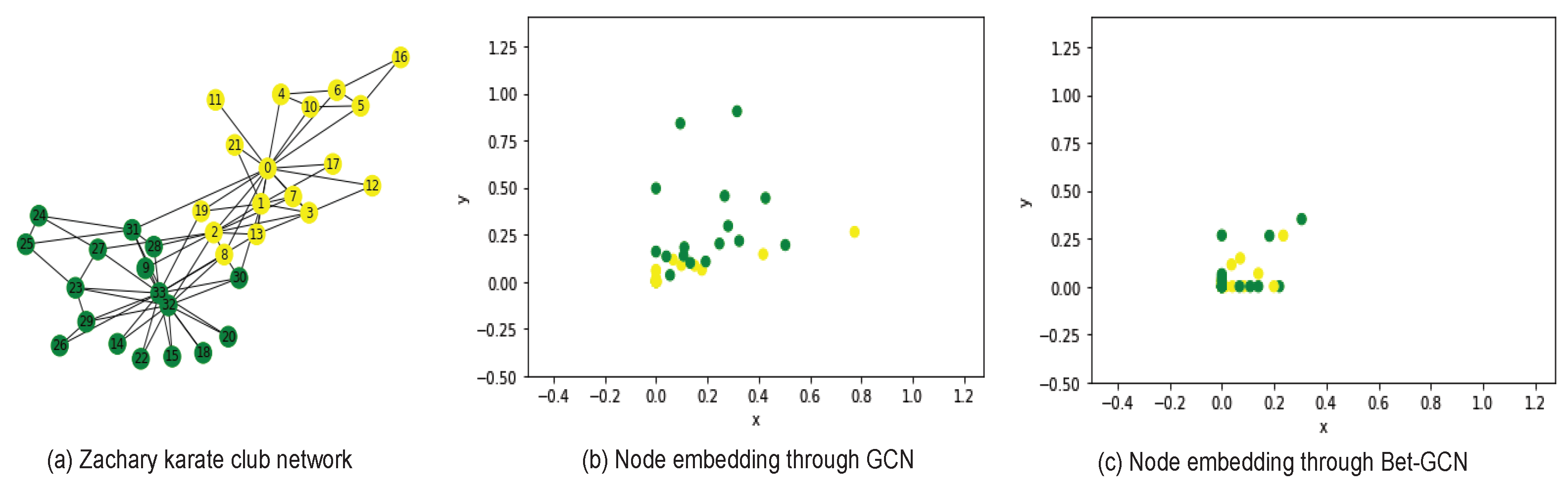

4.1. A Comparative Analysis: GCN vs. GCN with Betweenness Centrality

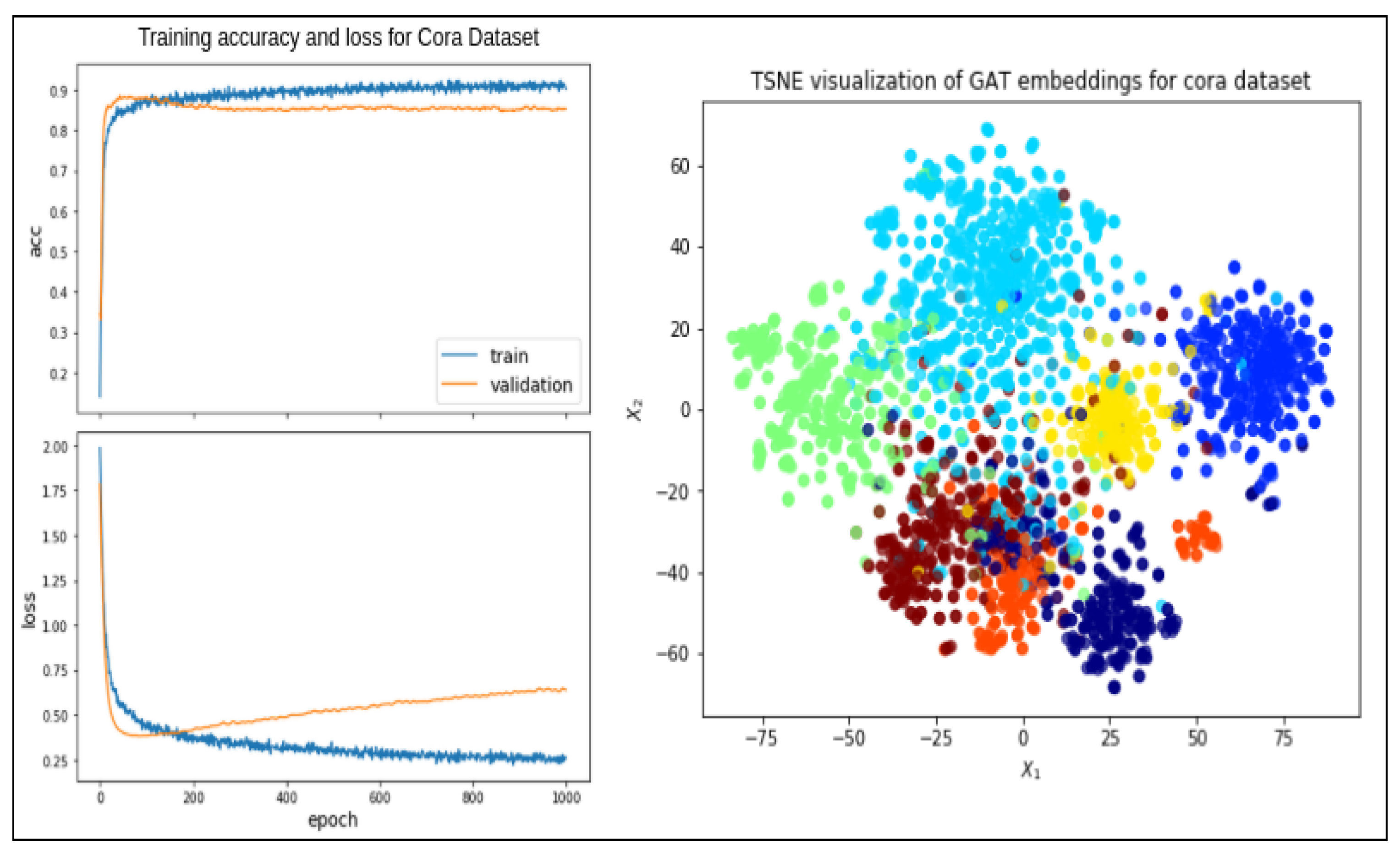

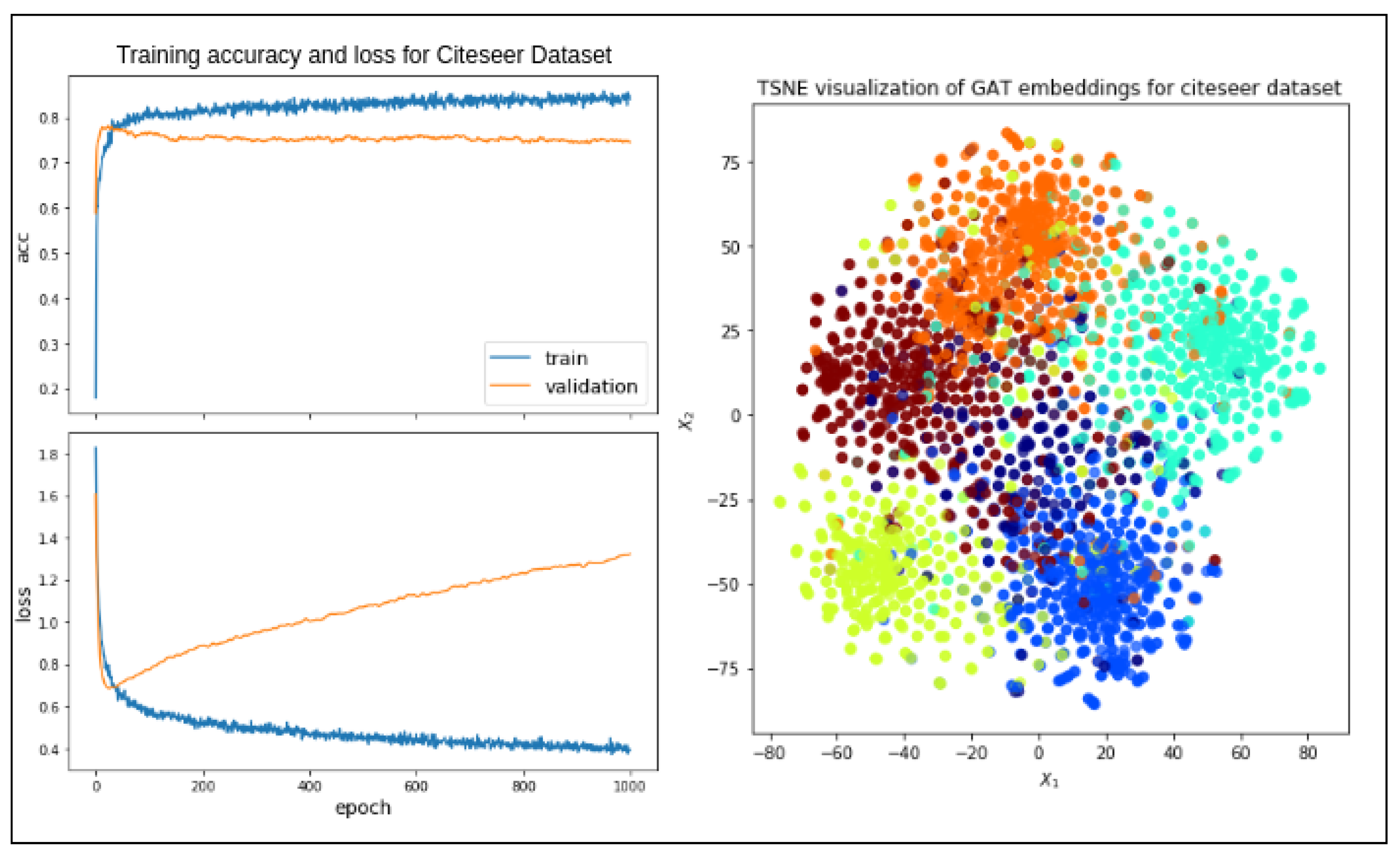

4.2. Experimental Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wu, Z.; Pan, S.; Chen, F.; Long, G.; Zhang, C.; Philip, S.Y. A comprehensive survey on graph neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 4–24. [Google Scholar] [CrossRef] [PubMed]

- Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017. [Google Scholar]

- Kumar, A.; Singh, S.S.; Singh, K.; Biswas, B. Link prediction techniques, applications, and performance: A survey. Phys. Stat. Mech. Appl. 2020, 553, 124289. [Google Scholar] [CrossRef]

- Kriege, N.M.; Johansson, F.D.; Morris, C. A survey on graph kernels. Appl. Netw. Sci. 2020, 5, 1–42. [Google Scholar] [CrossRef]

- Derrow-Pinion, A.; She, J.; Wong, D.; Lange, O.; Hester, T.; Perez, L.; Nunkesser, M.; Lee, S.; Guo, X.; Wiltshire, B.; et al. Eta prediction with graph neural networks in google maps. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, Queensland, Australia, 1–5 November 2021; pp. 3767–3776. [Google Scholar]

- Zaki, N.; Singh, H.; Mohamed, E.A. Identifying protein complexes in protein-protein interaction data using graph convolutional network. IEEE Access 2021, 9, 123717–123726. [Google Scholar] [CrossRef]

- Cao, P.; Zhu, Z.; Wang, Z.; Zhu, Y.; Niu, Q. Applications of graph convolutional networks in computer vision. Neural Comput. Appl. 2022, 34, 13387–13405. [Google Scholar] [CrossRef]

- Vashishth, S.; Yadati, N.; Talukdar, P. Graph-based deep learning in natural language processing. In Proceedings of the 7th ACM IKDD CoDS and 25th COMAD, Hyderabad, India, 5–7 January 2020; pp. 371–372. [Google Scholar]

- Meng, Y.; Wei, M.; Gao, D.; Zhao, Y.; Yang, X.; Huang, X.; Zheng, Y. CNN-GCN aggregation enabled boundary regression for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Lima, Peru, 4–8 October 2020; pp. 352–362. [Google Scholar]

- Wang, B.; Shen, G.; Li, D.; Hao, J.; Liu, W.; Huang, Y.; Wu, H.; Lin, Y.; Chen, G.; Heng, P.A. LHNN: Lattice Hypergraph Neural Network for VLSI Congestion Prediction. arXiv 2022, arXiv:2203.12831. [Google Scholar]

- Das, K.; Samanta, S.; Pal, M. Study on centrality measures in social networks: A survey. Soc. Netw. Anal. Min. 2018, 8, 13. [Google Scholar] [CrossRef]

- Derr, T.; Ma, Y.; Fan, W.; Liu, X.; Aggarwal, C.; Tang, J. Epidemic graph convolutional network. In Proceedings of the 13th International Conference on Web Search and Data Mining, Houston, TX, USA, 10–13 July 2020; pp. 160–168. [Google Scholar]

- Xu, B.; Shen, H.; Cao, Q.; Qiu, Y.; Cheng, X. Graph Wavelet Neural Network. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018. [Google Scholar]

- Abu-El-Haija, S.; Perozzi, B.; Kapoor, A.; Alipourfard, N.; Lerman, K.; Harutyunyan, H.; Ver Steeg, G.; Galstyan, A. Mixhop: Higher-order graph convolutional architectures via sparsified neighborhood mixing. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 21–29. [Google Scholar]

- Lu, H.; Huang, S.H.; Ye, T.; Guo, X. Graph star net for generalized multi-task learning. arXiv 2019, arXiv:1906.12330. [Google Scholar]

- Ma, J.; Tang, W.; Zhu, J.; Mei, Q. A flexible generative framework for graph-based semi-supervised learning. Adv. Neural Inf. Process. Syst. 2019, 32, 3281–3290. [Google Scholar]

- Zügner, D.; Günnemann, S. Certifiable robustness and robust training for graph convolutional networks. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 246–256. [Google Scholar]

- Lin, G.; Wang, J.; Liao, K.; Zhao, F.; Chen, W. Structure Fusion Based on Graph Convolutional Networks for Node Classification in Citation Networks. Electronics 2020, 9, 432. [Google Scholar] [CrossRef]

- Gao, H.; Wang, Z.; Ji, S. Large-scale learnable graph convolutional networks. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 1416–1424. [Google Scholar]

- Luo, Y.; Ji, R.; Guan, T.; Yu, J.; Liu, P.; Yang, Y. Every node counts: Self-ensembling graph convolutional networks for semi-supervised learning. Pattern Recognit. 2020, 106, 107451. [Google Scholar] [CrossRef]

- Franceschi, L.; Niepert, M.; Pontil, M.; He, X. Learning discrete structures for graph neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 1972–1982. [Google Scholar]

- Zhou, K.; Song, Q.; Huang, X.; Hu, X. Auto-gnn: Neural architecture search of graph neural networks. arXiv 2019, arXiv:1909.03184. [Google Scholar] [CrossRef] [PubMed]

- Gao, Y.; Yang, H.; Zhang, P.; Zhou, C.; Hu, Y. Graphnas: Graph neural architecture search with reinforcement learning. arXiv 2019, arXiv:1904.09981. [Google Scholar]

- Jiang, B.; Zhang, Z.; Tang, J.; Luo, B. Graph optimized convolutional networks. arXiv 2019, arXiv:1904.11883. [Google Scholar]

- Wijesinghe, W.; Wang, Q. DFNets: Spectral CNNs for graphs with feedback-looped filters. Adv. Neural Inf. Process. Syst. 2019, 32, 6009–6020. [Google Scholar]

- Dabhi, S.; Parmar, M. NodeNet: A Graph Regularised Neural Network for Node Classification. arXiv 2020, arXiv:2006.09022. [Google Scholar]

- Huang, W.; Zhang, T.; Rong, Y.; Huang, J. Adaptive sampling towards fast graph representation learning. Adv. Neural Inf. Process. Syst. 2018, 31, 4563–4572. [Google Scholar]

- Wang, H.; Leskovec, J. Unifying graph convolutional neural networks and label propagation. arXiv 2020, arXiv:2002.06755. [Google Scholar]

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903. [Google Scholar]

- Yu, Z.; Wang, H.; Liu, Y.; Böhm, C.; Shao, J. Community Attention Network for Semi-supervised Node Classification. In Proceedings of the 2020 IEEE International Conference on Data Mining (ICDM), Sorrento, Italy, 17–20 November 2020; pp. 1382–1387. [Google Scholar]

- Shanthamallu, U.S.; Thiagarajan, J.J.; Spanias, A. A regularized attention mechanism for graph attention networks. In Proceedings of the ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 3372–3376. [Google Scholar]

- Wang, G.; Ying, R.; Huang, J.; Leskovec, J. Improving graph attention networks with large margin-based constraints. arXiv 2019, arXiv:1910.11945. [Google Scholar]

- Roethlisberger, F.J.; Dickson, W.J. Management and the Worker; Psychology Press: London, UK, 2003; Volume 5. [Google Scholar]

- Liu, C.C.; Chen, Y.C.; Tai, S.J.D. A social network analysis on elementary student engagement in the networked creation community. Comput. Educ. 2017, 115, 114–125. [Google Scholar] [CrossRef]

- Cohen, E.; Delling, D.; Pajor, T.; Werneck, R.F. Computing classic closeness centrality, at scale. In Proceedings of the Second ACM conference on Online Social Networks, Dublin, Ireland, 1–2 October 2014; pp. 37–50. [Google Scholar]

- Boldi, P.; Vigna, S. Axioms for centrality. Internet Math. 2014, 10, 222–262. [Google Scholar] [CrossRef]

- Bonacich, P. Some unique properties of eigenvector centrality. Soc. Netw. 2007, 29, 555–564. [Google Scholar] [CrossRef]

- Barthelemy, M. Betweenness centrality in large complex networks. Eur. Phys. J. B 2004, 38, 163–168. [Google Scholar] [CrossRef]

- Bhardwaj, S.; Niyogi, R.; Milani, A. Performance analysis of an algorithm for computation of betweenness centrality. In Proceedings of the International Conference on Computational Science and Its Applications, Santander, Spain, 20–23 June 2011; pp. 537–546. [Google Scholar]

- Clevert, D.A.; Unterthiner, T.; Hochreiter, S. Fast and accurate deep network learning by exponential linear units (elus). arXiv 2015, arXiv:1511.07289. [Google Scholar]

- Zhang, Z.; Wang, X.; Zhu, W. Automated Machine Learning on Graphs: A Survey. arXiv 2021, arXiv:2103.00742. [Google Scholar]

- Kaur, M.; Kaur, H. Implementation of Enhanced Graph Layout Algorithm for Visualizing Social Network Data using NetworkX Library. Int. J. Adv. Res. Comput. Sci. 2017, 8, 287–292. [Google Scholar]

- Mernyei, P.; Cangea, C. Wiki-cs: A wikipedia-based benchmark for graph neural networks. arXiv 2020, arXiv:2007.02901. [Google Scholar]

- Shchur, O.; Mumme, M.; Bojchevski, A.; Günnemann, S. Pitfalls of graph neural network evaluation. arXiv 2018, arXiv:1811.05868. [Google Scholar]

- Zachary, W.W. An information flow model for conflict and fission in small groups. J. Anthropol. Res. 1977, 33, 452–473. [Google Scholar] [CrossRef]

- Zhu, X. Semi-Supervised Learning with Graphs; Carnegie Mellon University: Pittsburgh, PA, USA, 2005. [Google Scholar]

- Xu, K.; Li, C.; Tian, Y.; Sonobe, T.; Kawarabayashi, K.i.; Jegelka, S. Representation learning on graphs with jumping knowledge networks. In Proceedings of the International Conference on Machine Learning, Stockholm Sweden, 10–15 July 2018; pp. 5453–5462. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980. [Google Scholar]

| Datasets | Nodes | Edges | Classes | Features | Type |

|---|---|---|---|---|---|

| Cora | 2708 | 5429 | 7 | 1433 | Citation network |

| Citeseer | 3312 | 4732 | 6 | 3703 | Citation network |

| PubMed | 19,717 | 44,338 | 3 | 500 | Citation network |

| Wiki-CS | 11,701 | 4,31,726 | 10 | 767 | Wikipedia-based |

| AMZ Computers | 13,752 | 4,91,722 | 10 | 300 | Amazon Computers |

| Method | Cora | Citeseer | PubMed | Wiki-CS | AMZ Computers |

|---|---|---|---|---|---|

| MLP | 64.6 ± 1.7 | 62.0 ± 1.8 | 85.9 ± 0.3 | 73.17 ± 0.19 | 44.9 ± 5.8 |

| LR | 77.3 ± 1.8 | 71.2 ± 1.8 | 86.0 ± 0.6 | - | - |

| LPA(2005) [46] | 85.3 ± 0.9 | 70.0 ± 1.7 | 82.6 ± 0.6 | - | - |

| GCN(2017) [2] | 88.2 ± 0.8 | 77.3 ± 1.5 | 87.2 ± 0.4 | 79.07 ± 0.10 | 82.6 ± 2.4 |

| GAT(2018) [29] | 87.7 ± 0.3 | 76.2 ± 0.9 | 86.9 ± 0.5 | 79.63 ± 0.10 | 78.0 ± 1.9 |

| JK-Net(2018) [47] | 89.1 ± 1.2 | 78.3 ± 0.9 | 85.8 ± 1.1 | - | - |

| GCN-LPA(2020) [28] | 88.5 ± 1.5 | 78.7 ± 0.6 | 87.8 ± 0.6 | - | - |

| Bet-GAT(Proposed) | 89.15 ± 1.5 | 79.0 ± 0.3 | 87.5 ± 0.5 | 85.73 ± 0.3 | 91.05 ± 1.3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Verma, A.K.; Saxena, R.; Jadeja, M.; Bhateja, V.; Lin, J.C.-W. Bet-GAT: An Efficient Centrality-Based Graph Attention Model for Semi-Supervised Node Classification. Appl. Sci. 2023, 13, 847. https://doi.org/10.3390/app13020847

Verma AK, Saxena R, Jadeja M, Bhateja V, Lin JC-W. Bet-GAT: An Efficient Centrality-Based Graph Attention Model for Semi-Supervised Node Classification. Applied Sciences. 2023; 13(2):847. https://doi.org/10.3390/app13020847

Chicago/Turabian StyleVerma, Atul Kumar, Rahul Saxena, Mahipal Jadeja, Vikrant Bhateja, and Jerry Chun-Wei Lin. 2023. "Bet-GAT: An Efficient Centrality-Based Graph Attention Model for Semi-Supervised Node Classification" Applied Sciences 13, no. 2: 847. https://doi.org/10.3390/app13020847

APA StyleVerma, A. K., Saxena, R., Jadeja, M., Bhateja, V., & Lin, J. C.-W. (2023). Bet-GAT: An Efficient Centrality-Based Graph Attention Model for Semi-Supervised Node Classification. Applied Sciences, 13(2), 847. https://doi.org/10.3390/app13020847