Emotional States versus Mental Heart Rate Component Monitored via Wearables

Abstract

Featured Application

1. Introduction

2. Materials and Methods

2.1. Data Collection

2.1.1. Participants

2.1.2. Materials

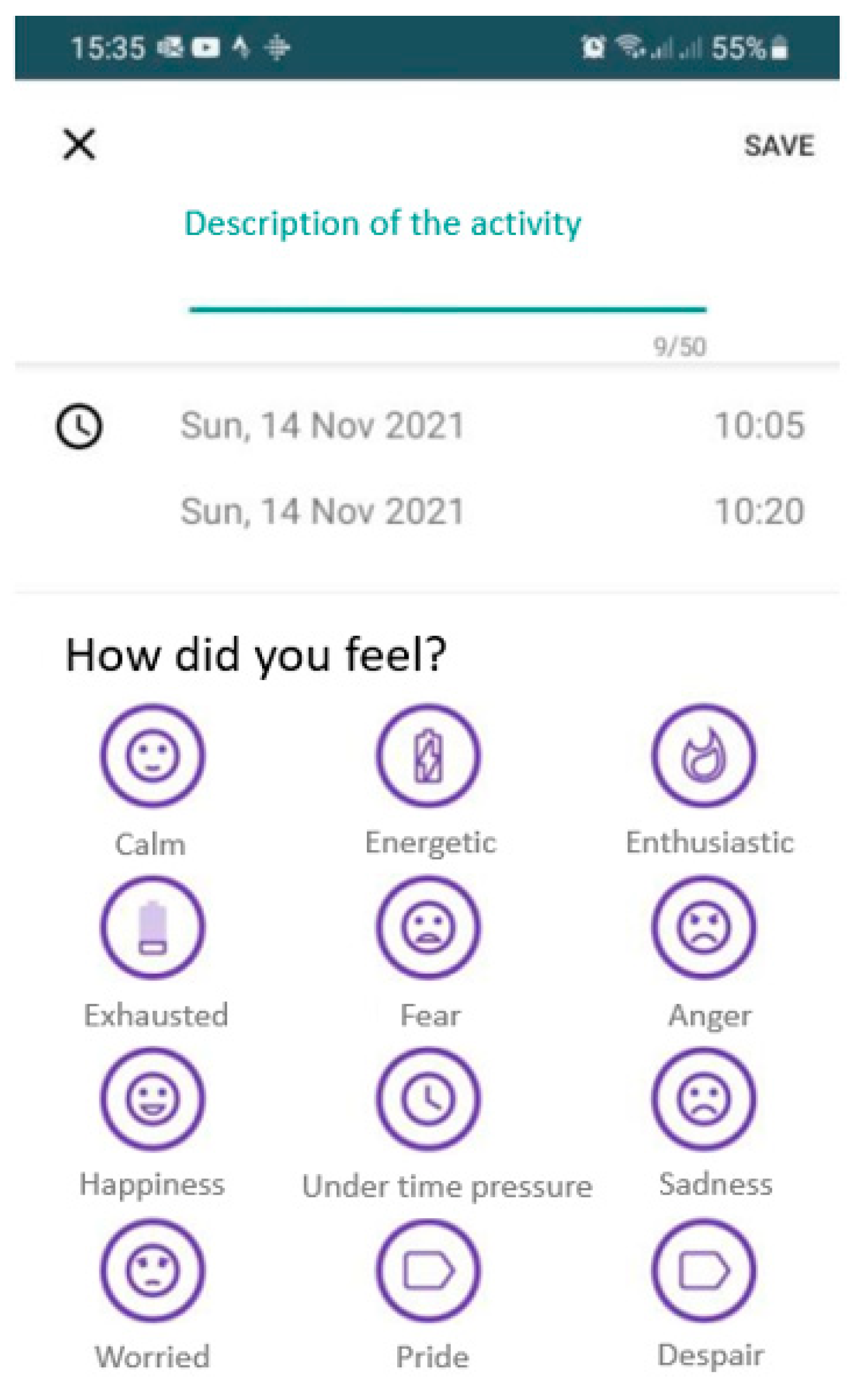

2.1.3. Experimental Methods

2.2. Modelling

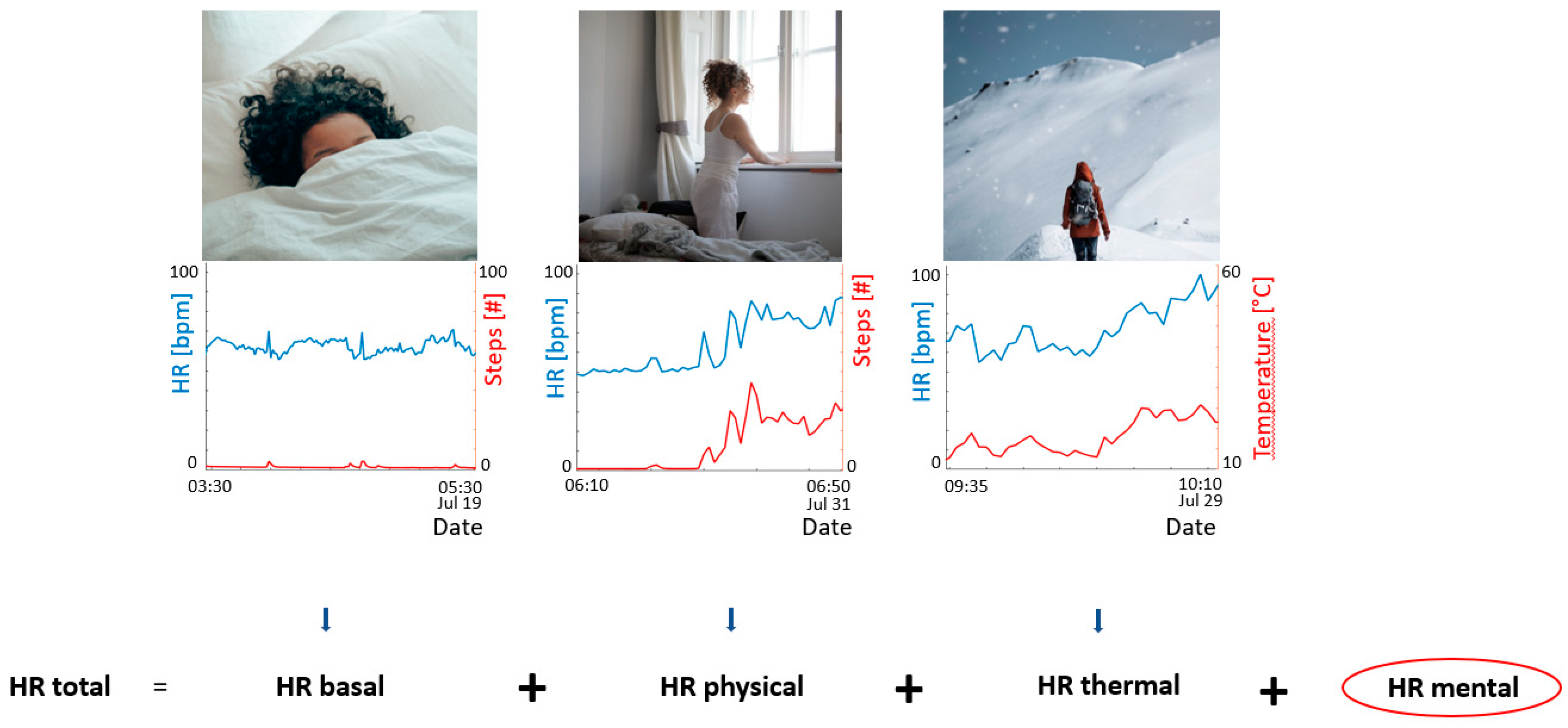

2.2.1. Mental Heart Rate Calculation

2.2.2. SISO TF Modelling

2.2.3. Emotion Classification

3. Results and Discussion

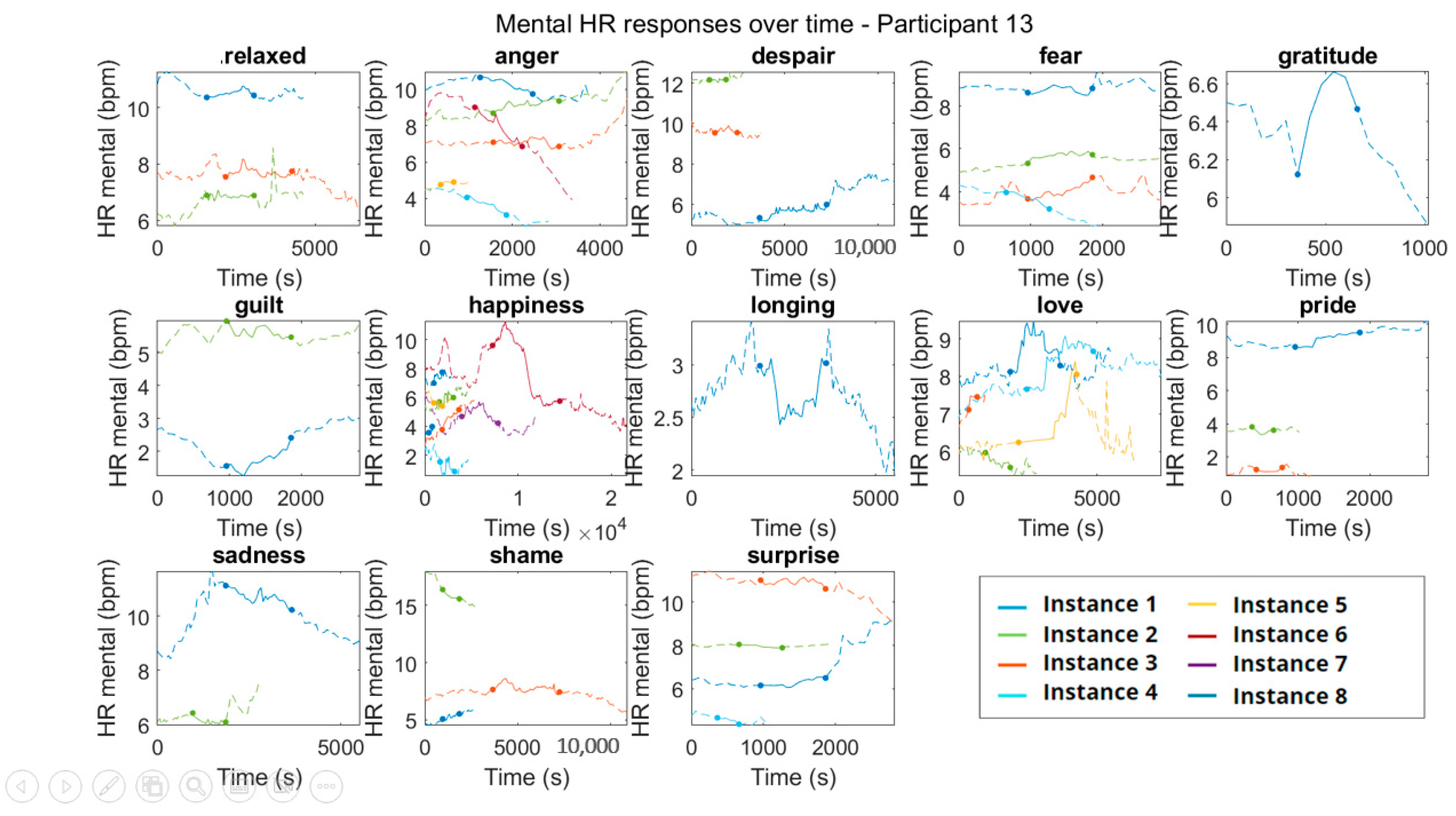

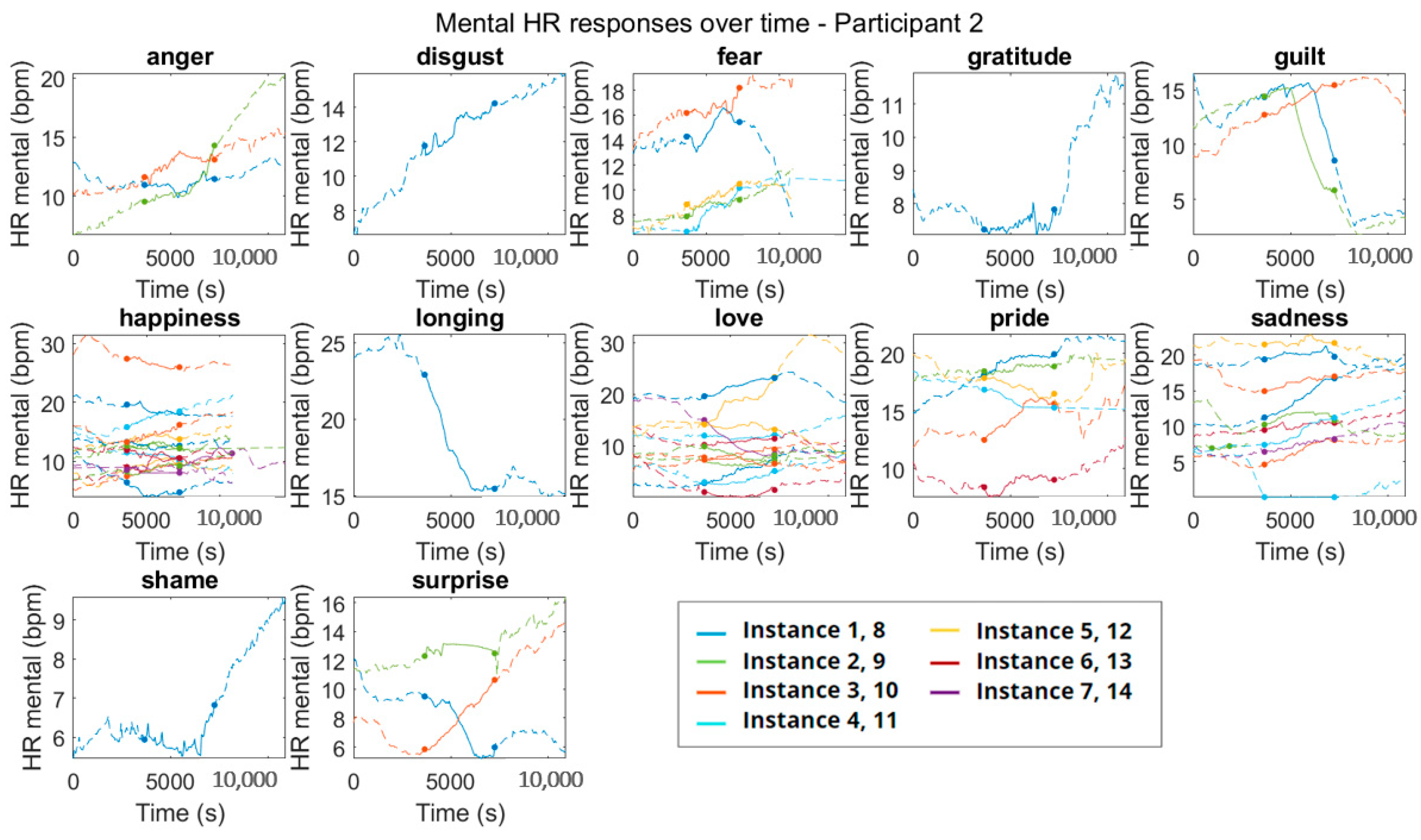

3.1. Experimental Results

3.2. SISO TF Modelling

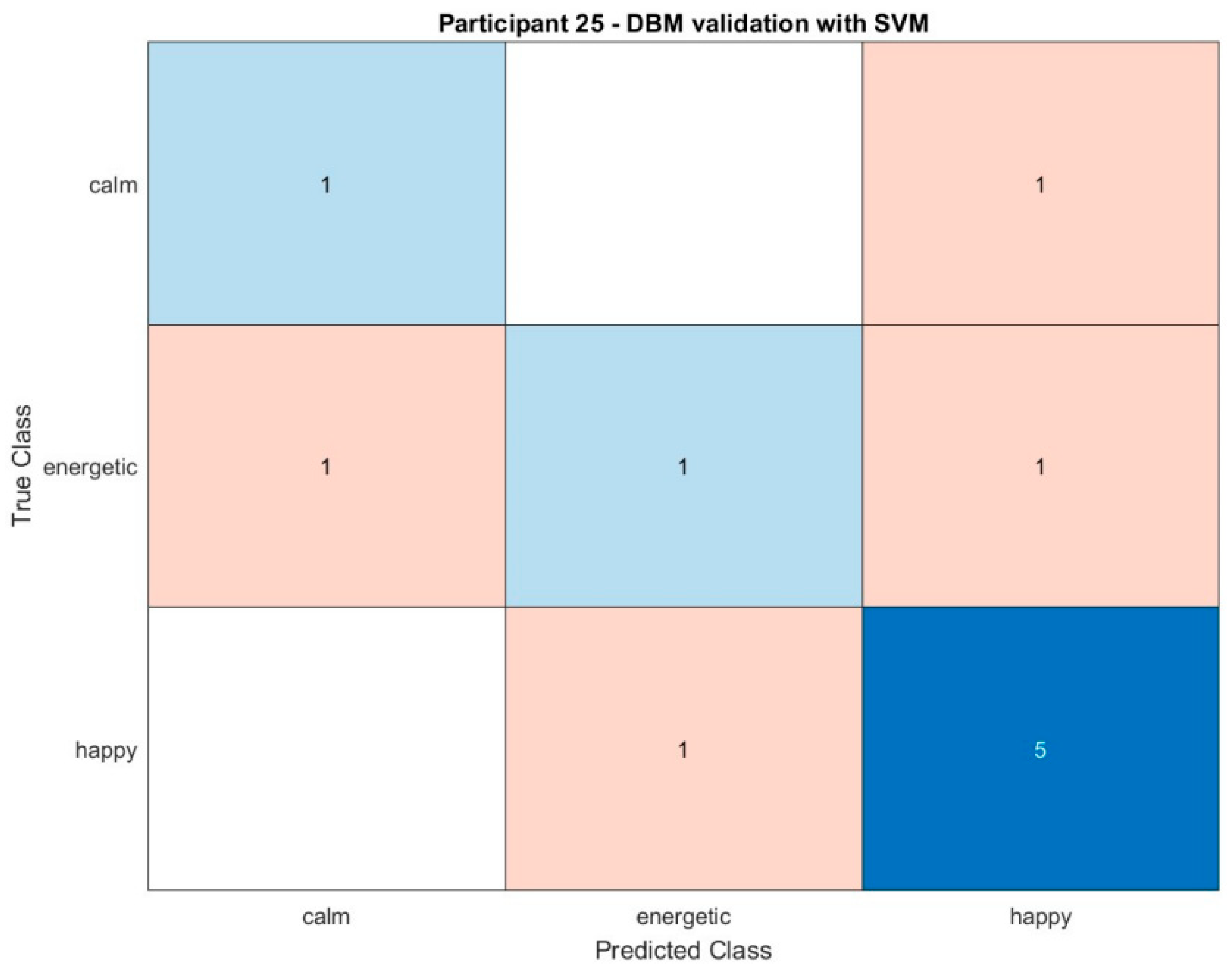

3.3. Classification of Emotions

4. Current Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Participant | Gender | Age (Years) | Number of Labelled Events | Number of Emotions |
|---|---|---|---|---|
| P1 | F | 25 | 17 | 7 |
| P2 | F | 22 | 86 | 13 |
| P3 | F | 22 | 0 | 0 |
| P4 | M | 23 | 17 | 6 |
| P5 | M | 51 | 99 | 10 |
| P6 | F | 22 | 76 | 9 |
| P7 | F | 22 | 21 | 4 |
| P8 | F | 22 | 100 | 11 |
| P10 | F | 22 | 42 | 8 |
| P11 | F | 18 | 583 | 11 |
| P13 | F | 26 | 60 | 14 |
| P15 | F | 26 | 9 | 5 |
| P16 | F | 23 | 13 | 5 |
| P17 | F | 22 | 9 | 5 |
| P18 | M | 24 | 7 | 6 |
| P22 | M | 23 | 23 | 7 |
| P23 | M | 21 | 2 | 1 |
| P24 | F | 22 | 19 | 4 |
| P25 | M | 24 | 75 | 9 |
| P26 | M | 22 | 61 | 10 |

| Emotion | P1 | P2 | P4 | P5 | P6 | P7 | P8 | P10 | P11 | P13 | P15 | P16 | P17 | P18 | P22 | P23 | P24 | P25 | P26 | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Anger | 4 | 5 | 45 | 3 | 7 | 1 | 47 | 9 | 2 | 1 | 120 | |||||||||
| Fear | 4 | 6 | 1 | 2 | 4 | 2 | 167 | 5 | 1 | 4 | 4 | 2 | 2 | 1 | 201 | |||||
| Guilt | 1 | 6 | 1 | 1 | 2 | 2 | 12 | |||||||||||||
| Happiness | 3 | 20 | 5 | 18 | 36 | 9 | 46 | 3 | 228 | 12 | 5 | 2 | 2 | 1 | 26 | 3 | 416 | |||
| Love | 1 | 15 | 5 | 3 | 32 | 5 | 1 | 61 | ||||||||||||
| Sadness | 2 | 12 | 5 | 8 | 11 | 23 | 3 | 1 | 2 | 4 | 69 | |||||||||
| Surprise | 1 | 5 | 2 | 12 | 19 | 3 | 18 | 24 | 6 | 8 | 1 | 98 | ||||||||
| Despair | 1 | 5 | 3 | 2 | 3 | 1 | 15 | |||||||||||||
| Disgust | 1 | 4 | 2 | 7 | ||||||||||||||||
| Gratitude | 1 | 1 | 1 | 1 | 4 | |||||||||||||||
| Longing | 3 | 2 | 7 | 20 | 1 | 33 | ||||||||||||||
| Pride | 9 | 1 | 4 | 1 | 21 | 3 | 1 | 1 | 42 | |||||||||||
| Shame | 2 | 1 | 14 | 3 | 1 | 21 | ||||||||||||||
| Calm | 2 | 12 | 6 | 20 | ||||||||||||||||
| Frustration | 2 | 2 | 4 | 1 | 1 | 6 | 3 | 29 | ||||||||||||
| Stress | 7 | 9 | 1 | 3 | 20 | |||||||||||||||
| Under time pressure | 4 | 9 | 5 | 17 | 35 | |||||||||||||||
| Energetic | 1 | 1 | 10 | 19 | 31 | |||||||||||||||
| Nervous | 1 | 1 | ||||||||||||||||||
| Relaxed | 6 | 6 | ||||||||||||||||||
| Worried | 1 | 4 | 5 | |||||||||||||||||
| Excited | 2 | 2 | 1 | 5 | ||||||||||||||||
| Exhausted | 7 | 3 | 10 | |||||||||||||||||
| Unhappy | 4 | 1 | 5 | |||||||||||||||||
| Total | 16 | 86 | 16 | 96 | 75 | 21 | 88 | 42 | 582 | 56 | 9 | 10 | 7 | 5 | 16 | 2 | 8 | 73 | 60 |

| Participant | KNN | SVM | Decision Tree | Random Classifier |
|---|---|---|---|---|
| P1 | 60.0% | 60.0% | 40.0% | 50.0% |
| P2 | 30.2% | 30.2% | 30.2% | 12.5% |
| P5 | 54.3% | 57.1% | 65.7% | 20.0% |
| P6 | 50.0% | 50.0% | 42.9% | 16.7% |
| P7 | 57.1% | 57.1% | 42.9% | 50.0% |
| P8 | 56.7% | 56.7% | 56.7% | 20.0% |
| P10 | 66.7% | 66.7% | 33.3% | 50.0% |
| P11 | 44.0% | 42.8% | 37.4% | 11.1% |
| P13 | 33.3% | 38.1% | 19.0% | 16.7% |
| P25 | 54.5% | 63.6% | 27.3% | 33.3% |
| P26 | 40.0% | 40.0% | 26.7% | 33.3% |
| Average | 49.7% | 51.1% | 38.4% | 28.5% |
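
The Average row of the classifier comparison above can be checked directly from the per-participant values. The following is a minimal sketch in Python, not the authors' code: the dictionary simply transcribes the table, and the helper `implied_classes` rests on the assumption (not stated in the table itself) that the random classifier guesses uniformly among k emotion classes, so that its accuracy is 100/k percent.

```python
# Per-participant accuracies (%) transcribed from the table above.
# Column order: KNN, SVM, Decision Tree, Random Classifier.
MODELS = ("KNN", "SVM", "Decision Tree", "Random Classifier")

accuracy = {
    "P1": (60.0, 60.0, 40.0, 50.0),
    "P2": (30.2, 30.2, 30.2, 12.5),
    "P5": (54.3, 57.1, 65.7, 20.0),
    "P6": (50.0, 50.0, 42.9, 16.7),
    "P7": (57.1, 57.1, 42.9, 50.0),
    "P8": (56.7, 56.7, 56.7, 20.0),
    "P10": (66.7, 66.7, 33.3, 50.0),
    "P11": (44.0, 42.8, 37.4, 11.1),
    "P13": (33.3, 38.1, 19.0, 16.7),
    "P25": (54.5, 63.6, 27.3, 33.3),
    "P26": (40.0, 40.0, 26.7, 33.3),
}


def column_means(rows):
    """Unweighted mean of each column across participants, to one decimal."""
    n = len(rows)
    return [
        round(sum(values[i] for values in rows.values()) / n, 1)
        for i in range(len(MODELS))
    ]


def implied_classes(random_accuracy_pct):
    """Number of classes k implied by a uniform random guess (ASSUMPTION:
    random accuracy = 100 / k; this is an illustrative reading of the table)."""
    return round(100.0 / random_accuracy_pct)


averages = dict(zip(MODELS, column_means(accuracy)))

# Participants whose best trained model beats the random baseline.
above_chance = [
    p for p, (knn, svm, tree, rnd) in accuracy.items()
    if max(knn, svm, tree) > rnd
]

print(averages)
print({p: implied_classes(v[3]) for p, v in accuracy.items()})
print(len(above_chance), "of", len(accuracy), "participants above chance")
```

Because the averages are unweighted, a participant with few labelled events contributes as much as one with many. Under the uniform-guess assumption, the baseline ranges from 2 classes (50.0%) to 9 classes (11.1%), so raw accuracies are not directly comparable across participants without reference to their own baseline.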

| Emotion | P1 | P2 | P5 | P6 | P7 | P8 | P10 | P11 | P13 | P25 | P26 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Happiness | 0.50 | 0.52 | 0.61 | 0.56 | 0.42 | 0.37 | 0.57 | 0.60 | 0.67 | ||
| Anger | 0.13 | 0.62 | 0.27 | 0.41 | 0.51 | 0.62 | |||||
| Surprise | 0.23 | 0.44 | 0.32 | 0.12 | 0.43 | ||||||
| Fear | 0.50 | 0.38 | 0.38 | 0.60 | |||||||
| Guilt | 0.21 | ||||||||||
| Sadness | 0.57 | 0.41 | 0.48 | 0.12 | 0.33 | 0.32 | |||||
| Love | 0.58 | 0.25 | 0.50 | 0.32 | |||||||
| Longing | 0.38 | 0.53 | |||||||||
| Pride | 0.20 | 0.62 | 0.52 | 0.32 | |||||||
| Shame | 0.61 | 0.19 | |||||||||
| Stress | 0.72 | 0.69 | |||||||||
| Under time pressure | 0.42 | 0.44 | |||||||||
| Calm | 0.83 | ||||||||||
| Relaxed | 0.32 | ||||||||||
| Energetic | 0.50 | 0.55 | |||||||||
| Worried | 0.31 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fernández, A.P.; Leenders, C.; Aerts, J.-M.; Berckmans, D. Emotional States versus Mental Heart Rate Component Monitored via Wearables. Appl. Sci. 2023, 13, 807. https://doi.org/10.3390/app13020807