Featured Application

This co-registration method, which combines CBCT with photographic images and a subsequent realistic image reconstruction, can be used during the orthognathic surgical planning stage. Both orthodontists and maxillofacial surgeons can thereby obtain more realistic and precise outcome predictions, allowing them to better clarify the patient’s surgical prognosis.

Abstract

Orthognathic surgery is a procedure used to correct intermaxillary discrepancies, thus promoting significant improvements in chewing and breathing. During the surgical planning stage, orthodontists often use two-dimensional imaging techniques. To overcome the limitations of these techniques, the assessment can be based on CBCT images and dental cast models; however, the evaluation of soft tissues remains complex. The aim of the present study was to develop a co-registration method of CBCT and photo images that would result in realistic facial image reconstruction. CBCT images were three-dimensionally rendered, and the soft tissues were subsequently segmented, resulting in the cranial external surface. A co-registration between the obtained surface and a frontal photo of the subject was then carried out. From this mapping, a photorealistic model capable of replicating the features of the face was generated. To assess the quality of this procedure, seven orthodontists were asked to fill in a survey on the models obtained. The survey results showed that orthodontists consider the three-dimensional model obtained to be realistic and of high quality. This process can automatically obtain a three-dimensional model from CBCT images, which in turn may enhance the predictability of surgical-orthognathic planning.

1. Introduction

Dentofacial deformities interfere with the quality of life, both psychologically and physically. Patients with dentofacial deformities require a meticulous assessment of the dentoalveolar position, facial skeleton, facial soft tissue surface, and their interdependency. The treatment plan must correct this triad in order to achieve an aesthetic and stable result with adequate function [1,2]. The treatment plan is dependent on an in-depth physical examination, cephalometric analysis, patient age, and severity of the malocclusion. In complex cases, a combination of orthodontic treatment and orthognathic surgery is required [3,4,5].

A systematic review found that three-dimensional models are accurate tools that allow clinicians to identify and locate the source of the deformity as well as its severity. Moreover, 3D models are realistic tools for treatment planning and assessment of outcomes. Since these models can be manipulated in any direction, avoiding patient recall and decreasing the time of the appointment, the treatment simulation and pre- and post-treatment comparisons are easier, allowing the clinicians to assess facial changes following orthodontic and/or surgical treatments. Despite the progress in 3D imaging, the available imaging techniques still do not allow the representation of the three elements of the face (skeleton, soft tissue, and dentoalveolar) at the same time with optimal quality [6].

A correct diagnosis and surgical plan are fundamental to obtaining improved therapeutic results. To achieve this, the clinician can use a three-dimensional computerized virtual preoperative simulation using cone beam computed tomography (CBCT) [7,8]. CBCT is a medical imaging technique that uses a cone-shaped radiation beam that rotates 180° to 360° around the patient [9], allowing the images to be reconstructed three-dimensionally [10]. It is currently an important tool in diagnosis, planning and postoperative follow-up of complex maxillofacial surgery cases [11]. It further complements conventional two-dimensional X-ray [12] imaging techniques, given that these have some limitations [13,14]. Moreover, CBCT allows for detailed planning, not only by the creation of a virtual 3D craniofacial model, but also by the virtual simulation of the planned surgery. This process is based on a simplified anatomical virtual model with hard, soft, and dental tissues. The obtained model facilitates the construction of surgical splints using CAD/CAM techniques, thus rendering manually constructed splints unnecessary [15]. CBCT reconstruction with a digital dental cast is the most accurate model to visualize the facial dentition and skeleton. However, CBCT skin is untextured and to allow a more realistic visualization of the 3D facial model, a superimposition of the textured facial soft tissue surface (e.g., 2D photographs) is required. This process is easy and cheap, and uses a specific algorithm and software obtainable through surface-based registration [6].

It is possible to obtain 3D models by reconstruction of CBCT images using techniques such as multiplanar rendering, surface rendering (which includes contour-based surface reconstruction and isosurface extraction based on marching cubes), and volume rendering [16]. Multiplanar rendering is a technique that displays intensity values on arbitrary cross-sections using volumetric data. The volume is projected onto three orthogonal spatial planes (coronal, sagittal and axial), allowing the user to navigate while changing the coordinates of the three planes [16]. Surface rendering allows for volume quantification through datasets that have common values, using voxels, polygons, line segments or even points. The marching cubes algorithm defines about 15 intersection patterns, resulting in a detailed construction of a desired surface [16,17,18]. Volume rendering allows the visualization of the 3D model from any direction, making it possible to obtain a high-quality analysis of the various layers. This enhanced viewing experience thus greatly facilitates the interpretation of the data. Among the various techniques described, volume rendering presents the best image quality but requires greater computational power [16].

The most critical aspect of virtual surgery planning is currently focused on the soft facial tissue and on the outcome of future bone and tooth movement. However, the combination of 2D photographic images with 3D models generated from CBCT images has not yet been sufficiently explored. Recently, significant progress has been achieved regarding the 3D reconstruction of facial models from a single portrait image. According to current scientific evidence, it has been concluded that this method has a superior performance compared to the others, since it requires a smaller number of resources (one portrait photograph) and presents greater surface detail [19]. However, CBCT reconstruction may include artefacts caused by restorations or orthodontic appliances or defects in the clear representation of facial patient features (e.g., face color), which affect the optimal representation of the 3D facial image. Therefore, new algorithms must be tested in order to obtain a more realistic and accurate 3D facial model that allows the performance of several simulations with different osteotomies and skeletal movements. The aim of this study was to assess the possibility of external facial reconstruction using CBCT medical image volumes and patient portrait photographs.

2. Materials and Methods

2.1. Study Design

The following study was approved by the Ethics Committee of the Faculty of Medicine of the University of Coimbra (Reference: CE-039/2020) and was conducted in accordance with the Declaration of Helsinki. All participants signed a written informed consent for their participation.

2.2. Data Collection Procedure

CBCT images of all participants were collected from the database of patients who underwent CBCT of the skull at the Medical Imaging Department of the Coimbra Hospital and University Centre between January 2015 and February 2021.

Patients included in the study conformed to the following inclusion criteria: patients with skeletal class I, II and III dentofacial deformities in need of orthodontic-surgical treatment; Caucasian patients aged over 18 years; and patients with CBCT and photographic records acquired before and after orthognathic surgery. The exclusion criteria included: patients with congenital abnormalities or syndromes with craniofacial deformities; patients with previous head and neck trauma; patients with multiple missing teeth, untreated caries lesions, active periodontal disease; and patients with a previous history of orthodontic treatment.

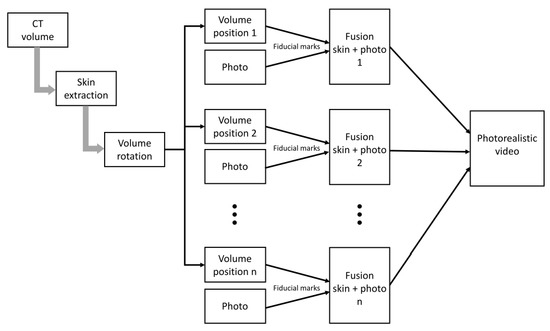

All CBCT image information was exported in DICOM format, which represents a set of standards that ensure the safe exchange and storage of radiological images. Photographs were exported in JPEG format. As for the CBCT scanner, an i-CAT machine with a voxel dimension of 0.3 mm and acquisition time window of 13.4 s was used. The machine was calibrated for all data acquisitions. Regarding photographic recording, an RGB digital sensor camera was used, and the same model and focal length were used for extraoral photographs. The exposure of the patients’ faces raises ethical issues; however, it is essential for the development of this study that these are exposed. Figure 1 presents a flowchart on the methods used to develop a co-registration method of CBCT and photo images and, consequently, for the realistic facial image reconstruction.

Figure 1.

Flowchart on the methods used to develop a co-registration method of CBCT and photo images.

2.3. CBCT Volume

2.3.1. Rendering

The software chosen for processing and exporting the CBCT volumes was Matlab R2020b. The images can be visualized using the Volume Viewer application, which can render them using several methods. Volume rendering was preferred because of its superior image quality.

2.3.2. Thresholding and Skin Segmentation

Data acquired by CBCT commonly present some degree of noise as well as artefacts. In order to manage the range of voxel values, a thresholding technique was used. Through the aforementioned technique, the image intensity value corresponding to air and noise from different sources was determined. The main area of interest for this study was the skin surface, so smoothing and correcting imperfections represented a considerable challenge, as CBCT shows low contrast for soft tissues.

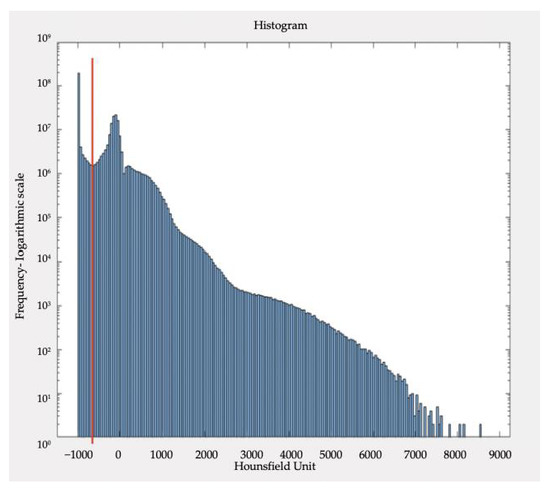

The threshold value was determined from the histogram obtained from a scan chosen from our database (Figure 2). In the histogram (logarithmic scale) under consideration, it is possible to observe an absolute minimum within the range of negative values, and it was verified that this value could be used to distinguish useful information from noise and air. There is no inconvenience in preserving the data referring to hard tissue, as well as to metallic materials, such as prostheses, that may be present.

Figure 2.

Typical distribution of Hounsfield Unit (HU) values for a CBCT volume under study. In red is the absolute minimum of the negative-value voxels, which corresponds to the threshold value, about −634 HU.

After defining the range of voxels, the image was binarized to ensure that all voxel values above the threshold (zone of interest) were equal to 1 and the remaining were equal to 0. Therefore, the image of the patient’s head becomes a single cohesive volume, facilitating the visualization and data processing.
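The thresholding and binarization steps described above can be sketched in a few lines. This is a minimal illustration in Python with NumPy, not the Matlab routine used in the study: the function names, the bin width of 20 HU, the restriction of the search to the interval from −1000 HU to 0 HU, and the synthetic test volume are all assumptions made for the example.

```python
import numpy as np

def histogram_threshold(volume_hu, bin_width=20):
    """Return the HU value of the emptiest histogram bin between -1000 and 0 HU.

    Assumption: the search is limited to [-1000, 0] HU so that empty bins
    outside the data range cannot be picked as the minimum.
    """
    edges = np.arange(-1000, 0 + bin_width, bin_width)
    counts, edges = np.histogram(volume_hu, bins=edges)
    centers = 0.5 * (edges[:-1] + edges[1:])
    return float(centers[np.argmin(counts)])

def binarize(volume_hu, threshold):
    """Voxels above the threshold (zone of interest) become 1, the rest 0."""
    return (volume_hu > threshold).astype(np.uint8)

# Synthetic stand-in for a CBCT volume: half "air" around -1000 HU,
# half "tissue" around 0 HU (hypothetical values, for illustration only).
rng = np.random.default_rng(0)
air = rng.normal(-1000, 30, size=4000)
tissue = rng.normal(0, 60, size=4000)
volume = np.concatenate([air, tissue]).reshape(20, 20, 20)

threshold = histogram_threshold(volume)
mask = binarize(volume, threshold)
```

On real data the histogram is usually noisier than in this example, so smoothing the counts before taking the minimum may be advisable.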

2.3.3. Image Processing Techniques

To achieve an adjustable high-definition 3D model of the patient’s head, a 3D Gaussian smoothing filter with a variable standard deviation value was used. Despite being an effective filter, it is unable to fill gaps or voids or, even, to exclude single separated voxels from the patient model. Therefore, to overcome this situation, closing, erosion, and filling operations were applied to the smooth images to obtain a version without holes or separated voxels.
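As a rough sketch of this processing chain, the example below applies a 3D Gaussian filter followed by closing, hole filling and erosion to a binary volume, using SciPy's `ndimage` module. The order of the morphological operations, the re-thresholding at 0.5 after smoothing, and the function name `clean_head_mask` are assumptions; the study itself was implemented in Matlab and does not specify these details.

```python
import numpy as np
from scipy import ndimage

def clean_head_mask(mask, sigma=1.5):
    """Smooth a binary head volume and remove holes and stray voxels."""
    # 3D Gaussian smoothing; sigma is the adjustable standard deviation.
    smoothed = ndimage.gaussian_filter(mask.astype(float), sigma=sigma)
    binary = smoothed > 0.5                 # assumed re-binarization level
    binary = ndimage.binary_closing(binary) # bridge small surface gaps
    binary = ndimage.binary_fill_holes(binary)  # fill enclosed voids
    # Erosion removes remaining thin protrusions and isolated voxels;
    # note that it also shrinks the surface by about one voxel.
    return ndimage.binary_erosion(binary)

# Synthetic "head": a sphere with an internal cavity and one stray voxel.
grid = np.indices((40, 40, 40)) - 20
head = (grid ** 2).sum(axis=0) <= 12 ** 2
head[18:23, 18:23, 18:23] = False   # internal cavity
head[2, 2, 2] = True                # separated voxel

cleaned = clean_head_mask(head)
```

If the one-voxel shrinkage caused by the erosion is undesirable, a morphological opening (erosion followed by dilation) is a common alternative.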

2.3.4. Cinematic Animation of the 3D Craniofacial Volume

After achieving the 3D volume of the face, it is necessary to capture and export the craniofacial model at various positions to establish a number of frames in order to create a three-dimensional cinematic animation.

After establishing the rotation trajectory around the craniofacial model and taking into consideration the camera position in relation to the volume, spatial transforms were used to draw the desired trajectory along the three space axes.

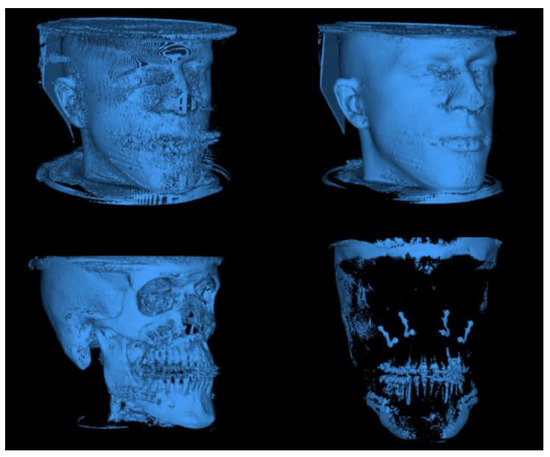

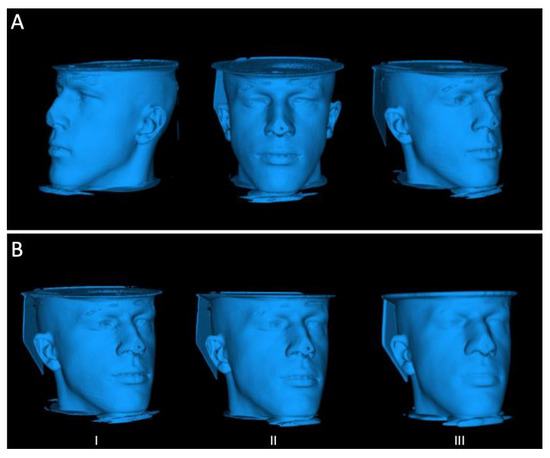

In addition to defining a trajectory, it is necessary to capture each of the frames and export them in PNG format. It is important to point out that the rotation angle amplitude, the number of frames and the volume and background colors are parameters that can be adjusted in order to obtain the best possible representation of the model (Figure 3).

Figure 3.

30 frame sequence for the cinematic animation of the 3D model.
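One possible way to parameterize such a trajectory is a horizontal arc around the model, sampled once per frame. The sketch below only computes the camera angles and positions; the rendering and PNG export are omitted. The 90° amplitude, the unit radius, the choice of the z axis as vertical, and the function name are assumptions for illustration, since these parameters are described as adjustable.

```python
import numpy as np

def orbit_camera_positions(n_frames=30, amplitude_deg=90.0, radius=1.0):
    """Camera positions on a horizontal arc centred on the frontal view.

    Assumptions: the model sits at the origin, z is the vertical axis,
    and the frontal view corresponds to the camera at (0, -radius, 0).
    """
    half = amplitude_deg / 2.0
    angles = np.deg2rad(np.linspace(-half, half, n_frames))
    positions = np.column_stack([
        radius * np.sin(angles),    # x: left/right displacement
        -radius * np.cos(angles),   # y: distance in front of the face
        np.zeros(n_frames),         # z: camera stays at a fixed height
    ])
    return angles, positions

angles, positions = orbit_camera_positions(n_frames=30, amplitude_deg=90.0)
```

Each row of `positions` would then be passed to the renderer to capture one frame of the sequence.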

2.4. Photographic Image

Unlike 3D volumes, photographic images lack information regarding depth; therefore, face-recognition and face-swapping methods were used to overcome this issue. These techniques are based on fiducial points, thus allowing the information of a 2D image to be mapped, even without the depth data of the facial points. Based on the information of the fiducial marks, a Python routine was implemented using several packages, such as OpenCV, NumPy and Dlib, that allowed the 2D images to be mapped to each other.

2.4.1. Face Recognition

Some face recognition algorithms identify features through reference points from an image of the face. They can be classified under two categories: geometric, which analyzes distinct features; and photometric, a statistical approach that breaks down an image into values and compares them with reference models. In this case, these algorithms were used to identify and process each image as a human face and to extract the facial landmarks and fiducial points of each patient, to later enable face-swapping. At this stage, the processed CBCT volume is the target subject, and the portrait photograph is the source.

The fiducial point detection algorithm allows these points to be identified from models based on different sets of points and that may have been obtained from the most varied computer vision techniques, such as ones obtained through machine learning techniques or neural networks. Consequently, there is no need to train a face detection model, which simplified the progress of this project.

Considering these aspects, a model was used that allowed for the identification of 68 characteristic points of the human face. This model was trained with the ibug 300-W database, a dataset consisting of 300 images of in-the-wild faces in an indoor environment and 300 faces in an outdoor environment [20].

2.4.2. Delaunay Triangulation

To implement the process of face swapping, it is necessary that both faces are divided and processed geometrically, as it is not possible to just “swap” one face for the other given that faces vary in size and perspective. However, if the face is sectioned into small triangles, the homologous triangles can be simply swapped, keeping the proportions, and adapting the facial expressions, such as a smile, open mouth or closed eyes.

Prior to the triangulation, it is necessary to create a mask that covers all 68 points. To do so, an operation to obtain the optimal perimeter is applied using the most external points of the face image.

Regarding the approach to facial point triangulation, Delaunay triangulation was applied [21]. This algorithm can maximize the minimum angle of all the angles of the triangles involved in the point triangulation. Hence, it is possible to obtain a matrix that presents three sets of coordinates for the vertices of each triangle in two dimensions, resulting in the subdivision of the 2D faces into triangles.
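The mask perimeter and the triangulation can be illustrated with SciPy. This is a sketch under assumptions: the "optimal perimeter" is taken to be the convex hull of the landmarks, the 68 points are random stand-ins rather than real fiducial points, and the study's own routine (based on OpenCV and Dlib) may differ. Note that the indices here run from 0 to 67, whereas the text numbers the points from 1 to 68.

```python
import numpy as np
from scipy.spatial import ConvexHull, Delaunay

# Hypothetical landmark coordinates (x, y) in pixels; a real pipeline
# would take these from the 68-point fiducial detector.
rng = np.random.default_rng(1)
landmarks = rng.uniform(0, 200, size=(68, 2))

# Mask perimeter: the outermost landmarks, assumed here to be the convex hull.
hull = ConvexHull(landmarks)
perimeter = landmarks[hull.vertices]

# Delaunay triangulation: each row holds the three landmark indices
# of one triangle.
tri = Delaunay(landmarks)
triangle_indices = tri.simplices                 # shape (n_triangles, 3)

# Matrix with three (x, y) vertex coordinates per triangle.
triangle_coords = landmarks[triangle_indices]    # shape (n_triangles, 3, 2)
```

Storing the triangles as index triples, rather than as coordinates only, is what later allows the same triangles to be rebuilt on a second face.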

2.5. Fusion of 2D and 3D Modality

To achieve the aim of this work, it was necessary to merge the data from the 3D volume with the data obtained from the processing of the 2D images.

The DICOM volume has all the information regarding the different perspectives and dimensions of the patient’s face; however, it lacks the photographic aspect. On the other hand, the photograph has all the colorimetric data, such as skin tone, facial hair, and eye color.

To solve this problem, the colorimetric data were extracted from the patient’s photograph, based on the fiducial point mapping and the Delaunay triangulation technique. In this way, it was possible to achieve a photorealistic 3D animation of the patient.

2.5.1. Face Swapping

In the face-swapping technique, the image of the target subject’s face must be defined, which in this study was each of the generated CBCT volume frames. The segments of the face were transposed using a single portrait photograph of the patient. The segments were transposed in the form of triangles that form homologous pairs with the target face triangles. Each of the triangles had three vertices that correspond to three of the 68 fiducial points, which facilitated information matching between the two face mappings.

On the source face from the patient’s portrait photograph, Delaunay’s triangulation was applied. However, on the target face, a different approach was used. Because 68 fiducial points were obtained for both the target and source subjects, the triangles of the target face were calculated according to the triangles of the source face. This meant that a triangle on the source face, composed, for example, of indices 1, 2 and 37 of the fiducial point map, corresponds to a homologous triangle on the target face, whose indices are also 1, 2 and 37. Therefore, a triangle is characterized and identified on both faces by the indices to which the coordinates of its vertices correspond. Although the indices are equal, the area and perspective of the triangle may vary from one face to the other, making the face-swapping process demanding.

To calculate the triangles of the target face, a series of matrix operations were used. They served to identify the three indices that make up each of the triangles of the source face. Once the homologous triangles had been determined, an affine transformation was used so that the source triangle corresponded to the size and shape of the target triangle. This way, triangles that were geometrically equal to those of the target were obtained, but with an image texture that corresponded to the source face.
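The affine transformation between two homologous triangles is fully determined by their three vertex pairs, which the following NumPy sketch makes explicit. The helper names and the small coordinate arrays are hypothetical; in an OpenCV-based routine, `cv2.getAffineTransform` and `cv2.warpAffine` would typically play this role, although the text does not state which calls were used.

```python
import numpy as np

def triangle_affine(src_tri, dst_tri):
    """Return the 2x3 affine matrix mapping three source vertices onto
    the three homologous target vertices."""
    src = np.hstack([np.asarray(src_tri, float), np.ones((3, 1))])  # rows [x, y, 1]
    dst = np.asarray(dst_tri, float)
    # Solve src @ M.T = dst for the six affine coefficients.
    return np.linalg.solve(src, dst).T

def apply_affine(matrix, points):
    """Apply a 2x3 affine matrix to an array of (x, y) points."""
    pts = np.asarray(points, float)
    return pts @ matrix[:, :2].T + matrix[:, 2]

# Hypothetical landmark sets for source (photo) and target (CBCT frame).
source_points = np.array([[10.0, 10.0], [60.0, 12.0], [30.0, 50.0], [70.0, 60.0]])
target_points = np.array([[15.0, 20.0], [55.0, 18.0], [32.0, 64.0], [66.0, 70.0]])

# The same index triple identifies the homologous triangle on both faces.
triangle = (0, 1, 2)
src_tri = source_points[list(triangle)]
dst_tri = target_points[list(triangle)]

M = triangle_affine(src_tri, dst_tri)
mapped = apply_affine(M, src_tri)   # coincides with dst_tri
```

Because an affine map preserves barycentric coordinates, every pixel inside the source triangle lands at the corresponding relative position inside the target triangle, which is what carries the photographic texture across.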

Finally, it was necessary to aggregate the newly transformed triangles, so that they matched the mask of the target face, and then transpose them to the image in question. For that purpose, a mask was built on the target image in order to replace it with the mask obtained from the source triangles.

2.5.2. Smoothing

After the transposition from one face to the other, the result can still be considered incomplete, since no contour smoothing or color-blending methods have been applied. Furthermore, the cinematic animation of the 3D model itself, with the skin surface already colored from the portrait image, requires an implementation that ensures a pleasant and realistic viewing experience for the user. With the face correctly transposed to the target subject, the colors and contrast of the mask of the source subject are automatically adjusted. In this way, the mask is framed in the target subject in a more coherent and harmonious way.

To ensure smooth rotation of the patient’s face mask from frame to frame, a pre-processing of the 3D volume frames was performed where the fiducial points of each frame were calculated and registered. With the 68 points calculated for each frame, a smoothing adjustment was applied to the curve of each index as a function of time, resorting to a quadratic function. Through this operation, the fiducial points were recalculated so that when the process of photographic information transposition took place, a smoother animation could be obtained in its perspective transitions. The final result was exported in GIF format.

Regarding the smoothing of the trajectory curve of each of the 68 fiducial points, the coefficient of determination was used to assess the quality of the adjustment of the points to a quadratic function. For each patient, 68 coefficients were calculated for the adjustment of x-coordinates and 68 for y-coordinates as a function of time (frames). For this analysis, eight cases present in the database were studied.
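The quadratic adjustment and its coefficient of determination can be sketched for a single coordinate of a single fiducial point as follows. The trajectory values are synthetic and the function name is hypothetical; the sketch assumes an ordinary least-squares fit, which the text does not state explicitly.

```python
import numpy as np

def smooth_trajectory(values):
    """Fit a quadratic to one landmark coordinate over the frame index.

    Returns the fitted (smoothed) values and the coefficient of
    determination R^2. Assumes the coordinate is not constant over time,
    otherwise the total sum of squares is zero.
    """
    values = np.asarray(values, float)
    frames = np.arange(len(values))
    coeffs = np.polyfit(frames, values, deg=2)
    fitted = np.polyval(coeffs, frames)
    ss_res = np.sum((values - fitted) ** 2)          # residual sum of squares
    ss_tot = np.sum((values - values.mean()) ** 2)   # total sum of squares
    return fitted, 1.0 - ss_res / ss_tot

# Synthetic x-coordinate of one fiducial point over 30 frames
# (hypothetical values: a quadratic trend plus detection jitter).
rng = np.random.default_rng(2)
t = np.arange(30)
clean_x = 100.0 + 2.0 * t - 0.05 * t ** 2
noisy_x = clean_x + rng.normal(0.0, 1.0, size=t.size)

fitted_x, r2_x = smooth_trajectory(noisy_x)
_, r2_clean = smooth_trajectory(clean_x)
```

Repeating this for the x and y coordinates of all 68 points yields the 136 coefficients per patient mentioned above.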

2.6. Survey to Evaluate the Results

The quality of the animations obtained was assessed using an online questionnaire. The results of the methodology were evaluated by a panel of specialist doctors in the area of Orthodontics, belonging to the Institute of Orthodontics at the Faculty of Medicine, University of Coimbra.

The questionnaire consisted of 19 questions, 16 of which referred to four randomly selected cases, while two were related to the method used and one pertained to the respondents’ professional experience. For each case, two different rendering versions were presented, designated as version A and B, in order to assess which of them might be better regarding rendering quality and photorealism. The rendering quality comprised the smoothness, clarity, and definition of the animation. Photorealism was related to the precision of the proportions and dimensions of the subject’s face. Both the rendering quality and the photorealism were evaluated on a 10-point scale, with 1 representing low quality and 10 representing high quality. Among the four selected cases, a decoy case was generated, i.e., an animation was exported whose portrait photograph did not correspond to the CBCT volume, to assess the realism and accuracy of the method of fusion of 2D and 3D modalities. The responses were analyzed using descriptive statistical methods.

3. Results

3.1. Rendering CBCT Volume Processing

Each patient was represented using 576 axial sections, generated from the CBCT technique in DICOM format. The volumes obtained in the three spatial planes were analysed, taking into account the abundance of voxels with a value equal to −1000 HU (which represent air), and voxels with positive values, which are indicative of tissues with higher hardness.

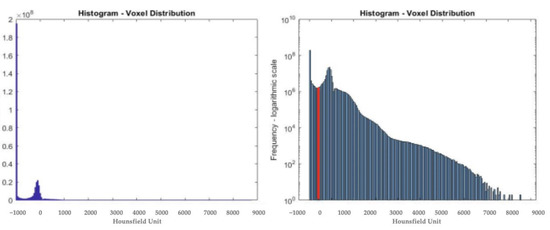

The voxels were binarized according to a threshold defined by the user. Applying this method (Figure 4) revealed the presence of noise for values around −900 HU, soft tissues (between −800 HU and 0 HU) and hard tissues (positive HU values).

Figure 4.

CBCT volume after binarization for different threshold values.

From the analysis of the distribution of voxel values (Figure 5) it was possible to automate the segmentation of the patient’s skin. The analysis of the Hounsfield units, in the form of a histogram, allows us to calculate the value that differentiates the information concerning the patient from the data representing only noise and artefacts. After histogram normalisation, the absolute minimum in the negative value range acts as the threshold value.

Figure 5.

Distribution of the HU values of one CBCT volume. The graph on the right side represents these values on a logarithmic scale. In red is the corresponding threshold value, about −634 HU.

The volume is then binarised with the determined threshold value, so that the information that is useful for the representation of the 3D model can be segmented. By doing so, each volume processed by the developed algorithm will be automatically rendered and most of the noise will be automatically excluded (Figure 6).

Figure 6.

Results of the thresholding methods (A); and after applying a Gaussian filter (B) (I) Sigma = 0.5; (II) Sigma = 1.5; (III) Sigma = 4.

In addition to the thresholding technique, a three-dimensional Gaussian smoothing filter was applied to smooth the surface of the 3D model (Figure 6). Among the tested standard deviation values (sigma), for the Gaussian filter, a standard value of 1.5 was chosen, as higher values will cause a loss of detail and lower values have little impact on smoothing.

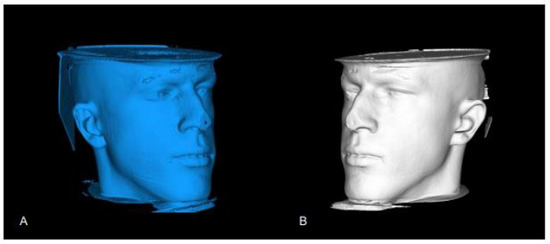

Following this process, the two-dimensional data was further filtered to fill gaps and exclude separated voxels from the patient model. The result of this data treatment sequence can be observed in Figure 7. It is important to highlight that the smoothing and correction of imperfections within the model in more susceptible areas such as eyes, nose or mouth were accomplished without losing details.

Figure 7.

Result of 2D processing: (A) Model before processing; (B) Model after processing.

Lastly, the sequence of frames was exported, producing a cinematic animation of the patient’s head model. This whole process is automated, so the user only needs to indicate the number of frames to export for a fixed amplitude of movement.

3.2. Image Processing and Fusion of 2D and 3D Modalities

3.2.1. Facial Recognition

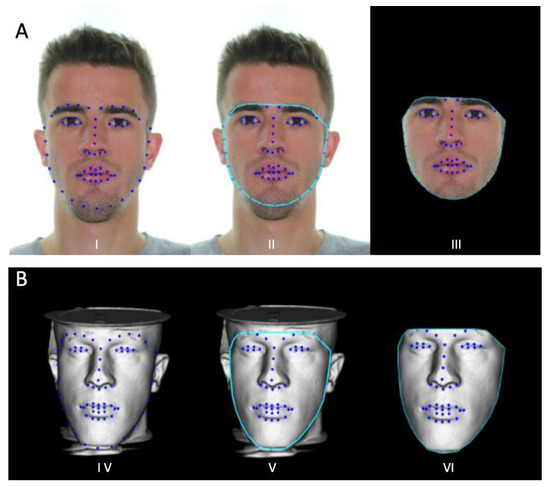

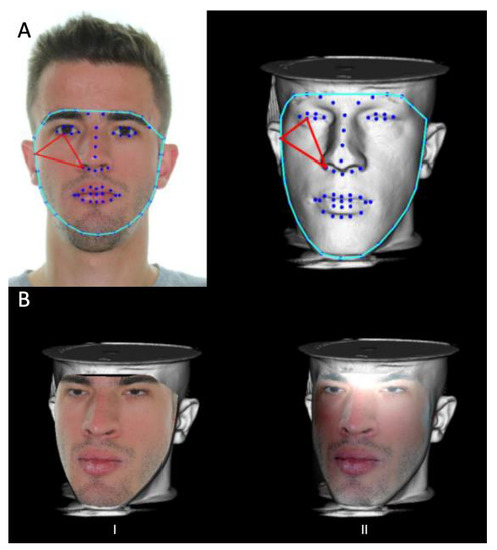

The facial recognition process was applied both to the portrait photograph and to each of the frames of the 3D animation (Figure 8). In both results, the 68 fiducial points, generated from the pre-trained shape predictor 68 face landmarks model were determined.

Figure 8.

Result of 2D processing: (A) I—Model before processing; II—Model after processing; III—Face processing sequence of a 3D model frame; and (B) IV—Identification of the 68 fiducial points; V—Face mask delimitation; VI—Face mask extraction.

3.2.2. Delaunay Triangulation

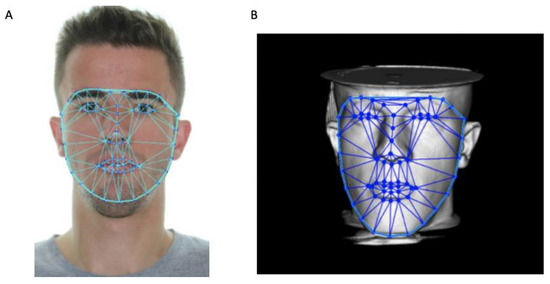

From the calculation of the 68 facial marks, each one identified by its index value (from 1 to 68), it was possible to calculate Delaunay’s triangles for the portrait photograph, which concerned the source subject. Furthermore, Delaunay’s triangles were estimated from the source face onto the target subject (Figure 9). This technique ensured that the transposition of the face segments, in the form of triangles, was done accurately and correctly. In both cases, all generated triangles were within the bounded perimeter.

Figure 9.

Delaunay’s triangulation for the face of the portrait photograph (A); and of one of the frames of the three-dimensional model (B).

The patient’s portrait photograph was processed only once for the extraction of the fiducial points of the Delaunay triangles and the face mask. On the other hand, the frames of the 3D model animation were processed twice, first to extract the fiducial points and a second time after recalculating the fiducial points, in order to obtain a smooth movement of these over time.

3.2.3. Face Swapping and Smoothing

The face-swapping technique combines the data from the three-dimensional medical volume with the data obtained from the processing of the 2D images. Figure 10 represents the basis of this algorithm: the identification of two homologous triangles between the source face and the target face. The triangles vary in shape and size, thus suitable transformations must be applied.

Figure 10.

(A)—Example of 2 homologous triangles, in red; (B)—Result of the Face Swapping technique: I—Pre-processed result; II—Result of the seamless Clone function.

The result of adapting the size and shape of the triangle, together with transposing them onto the target subject is shown in Figure 10B(I). To match and soften the contrast between the two faces, the source face was pasted (illustrated in Figure 10B(II)), where the contrast between the two modalities was lower and the hue of the source face was closer to the target.

By applying the face-swapping process to all frames of the 3D animation sequence, with the subsequent exportation in GIF format, a three-dimensional and photo-realistic animation was obtained (Table 1).

Table 1.

Coefficients of determination (R²) for the quadratic adjustment. For the statistical analysis, eight cases were considered, both for the 30- and 60-frame animations.

3.3. Questionnaires

A group of seven orthodontic specialists, whose professional experience ranged from 2 to 17 years, were selected to answer the questionnaire. Four out of the eight cases under study were chosen for evaluation. The statistical study of the results is shown in Table 2.

Table 2.

Results of the questionnaires (scale of 1 to 10, where 1 indicates the minimum value and 10 the maximum value).

In addition to quantifying the quality of the results presented, the experts also mentioned as positive points the usefulness in planning surgical interventions, the ability to simulate post-surgical movements and the improved communication between doctor and patient. This helps with both the management of expectations and the achievement of better surgical results. With regard to negative aspects, the following were mentioned: limitations in relation to the real image; the prediction of the surgical result not being equivalent to the result obtained; the patient not liking “seeing” themselves at the end of the treatment and probably giving up on the surgery; the rendering and superimposition are not excellent; changes in the three-dimensional perception; and the fact that some images do not reproduce the extra-oral aspect of the patients.

4. Discussion

The present study aimed to investigate the reconstruction of the external surface of the face by using CBCT and extraoral photographs of the patient.

Regarding the rendering of the CBCT volume processing, it was verified that the 3D representation was realistic to the patient’s characteristic dimensions and proportions. Despite not having developed a method to measure its accuracy, it was found that the data present in the DICOM volumes can be used together with photos to reconstruct the skin surface of the subject. The thresholding technique that was developed showed good segmentation and processing capabilities for all the subjects in the database. It is a simple and fast algorithm as opposed to machine learning techniques or the implementation of neural networks, which would require more computational power.

To export the final model, various options were considered (OBJ, PLY and STL); however, the PNG format requires less memory and is the easiest to manipulate, as it does not require specialized software for three-dimensional models. The low contrast of soft tissues in CBCT makes the segmentation of the skin complex; however, this problem was successfully managed. A downside of the methodology studied is the possibility that the CBCT may contain objects, such as chin and head rests, that act as obstacles and prevent the complete representation of the patient. As a solution, it is necessary to ensure that the CBCT is acquired with the maximum possible exposure of the patient’s head. The quality of smoothing of the model surface and noise removal are parameters that were only evaluated from a user perspective.

As for the image processing and fusion of 2D and 3D modalities, the fusion of DICOM images from CBCT with portrait photographs was successfully achieved.

The face recognition algorithm is faster to execute when compared to the face-swapping process. However, it presents limitations when it comes to its success rate at identifying all 68 fiducial points. The identification of the 68 fiducial points is not an obstacle for portrait photographs, but it becomes a challenge when it comes to the processing of 3D model frames, as it is necessary that all locations corresponding to the 68-point map be clearly visible. In addition, this algorithm was not optimized for the recognition of faces in three-dimensional models, such as the ones used, because these present less contrast.

The quadratic adjustment was adequate for the X coordinates, but the adjustment of the Y coordinates was less successful. Thus, according to the results obtained, the adjustment of the ordinates was of lower quality.

Overall, the method for mapping faces onto three-dimensional models proved to be simpler and easier to process, with the disadvantage of a limited movement of the patient’s head model.

Lastly, the results of the questionnaires that were filled in by the orthodontists revealed that case 3, referred to as the decoy, performed its function well. This case combined a CBCT volume and a portrait image from two different patients, in order to check whether a poor correspondence between the 3D and 2D modalities was perceptible. Not only was the mean result lower when case 3 was considered, but the mean deviation was also significantly higher, which indicates that the confidence margin in the results was low. Therefore, it can be stated that the developed algorithm has a good ability to realistically replicate and map the patients’ facial features. The results also showed a superior performance of version A compared to version B. This is an unexpected result, given that a contrast smoothing function was applied to version B. A possible justification is that version A may have greater definition and clarity of the photographic properties of the subject’s face. Furthermore, photo-realism was ranked higher than rendering quality, with both showing results around 6/10. As for the coefficient of variation, version B showed less dispersion, with mean deviations of less than 25%, and version A showed satisfactory values of 48% and 30%. Thus, in general, the experts’ ratings seem to be coherent: version A was preferred to version B, and photo-realism and rendering quality received similar ratings.
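The dispersion measure discussed above can be computed as in the following minimal sketch. It assumes that the coefficient of variation is the sample standard deviation divided by the mean, expressed as a percentage; the exact definition used in the study is not stated here. The ratings are invented placeholder values on a 0 to 10 scale for seven raters, not the survey data, and the function name is hypothetical.

```python
from statistics import mean, stdev


def coefficient_of_variation(scores):
    """Return the coefficient of variation of scores as a percentage."""
    m = mean(scores)
    if m == 0:
        raise ValueError("coefficient of variation is undefined for a zero mean")
    # Sample standard deviation (n - 1 denominator) relative to the mean.
    return 100.0 * stdev(scores) / m


# Placeholder ratings from seven hypothetical raters (illustration only).
ratings_consistent = [6, 7, 6, 5, 7, 6, 6]
ratings_dispersed = [2, 9, 4, 8, 3, 10, 6]

cv_consistent = coefficient_of_variation(ratings_consistent)
cv_dispersed = coefficient_of_variation(ratings_dispersed)
print(f"consistent panel: {cv_consistent:.1f}%")
print(f"dispersed panel:  {cv_dispersed:.1f}%")
```

Because the measure is relative to the mean, it allows dispersion to be compared across items whose average ratings differ, for example between the two versions or between photo-realism and rendering quality.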

5. Conclusions

The developed patient skin/head segmentation technique allowed the image processing to be carried out in a very simple and efficient way. The facial recognition process provided an accurate visualization of the patient and was considered to be an added value for surgery planning by the expert panel.

Author Contributions

Conceptualization, F.C., N.F. and F.V.; methodology, M.M. (Miguel Monteiro), F.C. and N.F.; software, M.M. (Miguel Monteiro), C.N. and R.T.; validation, F.M., M.P.R., A.B.P., R.T. and C.N.; formal analysis, F.C., M.M. (Miguel Monteiro); investigation, M.M. (Miguel Monteiro), A.B.P., F.M. and M.P.R.; resources, M.S., C.O. and M.M. (Mariana McEvoy); data curation, I.F.; writing—original draft preparation, M.M. (Miguel Monteiro); writing—review and editing, M.S., C.O., M.M. (Mariana McEvoy) and I.F.; visualization, I.F.; supervision, F.V. and F.C.; project administration, I.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was approved by the Ethics Committee of the Faculty of Medicine of the University of Coimbra (Reference: CE-039/2020), and was conducted in accordance with the Declaration of Helsinki.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study. Written informed consent has been obtained from the patient to publish this paper.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hajeer, M.Y.; Millet, D.T.; Ayoub, A.F.; Siebert, J.P. Applications of 3D imaging in orthodontics: Part I. J. Orthod. 2004, 31, 62–70.

- Hajeer, M.Y.; Ayoub, A.F.; Millet, D.T.; Bock, M.; Siebert, J.P. Three-dimensional imaging in orthognathic surgery: The clinical application of a new method. Int. J. Adult Orthod. Orthognath. Surg. 2002, 17, 318–330.

- Vale, F.; Nunes, C.; Guimarães, A.; Paula, A.B.; Francisco, I. Surgical-Orthodontic Diagnosis and Treatment Planning in an Asymmetric Skeletal Class III Patient—A Case Report. Symmetry 2021, 13, 1150.

- Naini, F.B.; Moss, J.P.; Gill, D.S. The enigma of facial beauty: Esthetics, proportions, deformity, and controversy. Am. J. Orthod. Dentofac. Orthop. 2006, 130, 277–282.

- Jandali, D.; Barrera, J.E. Recent advances in orthognathic surgery. Curr. Opin. Otolaryngol. Head Neck Surg. 2020, 28, 246–250.

- Plooij, J.M.; Maal, T.J.J.; Haers, P.; Borstlap, W.A.; Kuijpers-Jagtman, A.M.; Bergé, S.J. Digital three-dimensional image fusion processes for planning and evaluating orthodontics and orthognathic surgery. A systematic review. Int. J. Oral. Maxillofac. Surg. 2011, 40, 341–352.

- Alkhayer, A.; Piffkó, J.; Lippold, C.; Segatto, E. Accuracy of virtual planning in orthognathic surgery: A systematic review. Head Face Med. 2020, 16, 34.

- Farrell, B.B.; Franco, P.B.; Tucker, M.R. Virtual surgical planning in orthognathic surgery. Oral. Maxillofac. Surg. Clin. N. Am. 2014, 26, 459–473.

- Tyndall, D.A.; Kohltfarber, H. Application of cone beam volumetric tomography in endodontics. Aust. Dent. J. 2012, 57, 72–81.

- Weiss, R.; Read, A. Cone Beam Computed Tomography in Oral and Maxillofacial Surgery: An Evidence-Based Review. Dent. J. 2019, 7, 52.

- Nasseh, I.; Al-Rawi, W. Cone Beam Computed Tomography. Dent. Clin. N. Am. 2018, 62, 361–391.

- Francisco, I.; Ribeiro, M.P.; Marques, F.; Travassos, R.; Nunes, C.; Pereira, F.; Caramelo, F.; Paula, A.B.; Vale, F. Application of Three-Dimensional Digital Technology in Orthodontics: The State of the Art. Biomimetics 2022, 7, 23.

- Xia, J.; Ip, H.H.; Samman, N.; Wang, D.; Kot, C.S.; Yeung, R.W.; Tideman, H. Computer-assisted three-dimensional surgical planning and simulation: 3D virtual osteotomy. Int. J. Oral. Maxillofac. Surg. 2000, 29, 11–17.

- Ho, C.T.; Lin, H.H.; Liou, E.J.; Lo, L.J. Three-dimensional surgical simulation improves the planning for correction of facial prognathism and asymmetry: A qualitative and quantitative study. Sci. Rep. 2017, 7, 40423.

- Elnagar, M.H.; Elshourbagy, E.; Ghobashy, S.; Khedr, M.; Kusnoto, B.; Evans, C.A. Three-dimensional assessment of soft tissue changes associated with bone-anchored maxillary protraction protocols. Am. J. Orthod. Dentofac. Orthop. 2017, 152, 336–347.

- Kumar, T.S.; Vijai, A. 3D Reconstruction of Face from 2D CT Scan Images. Procedia Eng. 2012, 30, 970–977.

- Fuchs, H.; Kedem, Z.M.; Uselton, S.P. Optimal Surface Reconstruction from Planar Contours. Commun. ACM 1977, 20, 693–702.

- Lorensen, W.E.; Cline, H.E. Marching Cubes: A High Resolution 3d Surface Construction Algorithm. Comput. Graph. 1987, 21, 163–169.

- Fan, Y.; Liu, Y.; Lv, G.; Liu, S.; Li, G.; Huang, Y. Full Face-and-Head 3D Model With Photorealistic Texture. IEEE Access 2020, 8, 210709–210721.

- Sagonas, C.; Antonakos, E.; Tzimiropoulos, G.; Zafeiriou, S.; Pantic, M. 300 Faces in-the-Wild Challenge: Database and results. Image Vis. Comput. 2016, 47, 3–18.

- Delaunay, B. Sur la sphère vide. A la mémoire de Georges Voronoi. Bull. L’académie Sci. L’urss Cl. Sci. Mathématiques Nat. 1934, 6, 793–800.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).