Featured Application

Leaf vein segmentation can be applied to plant species classification, research on drought resistance in plants, and climate and environmental analysis.

Abstract

Leaf vein segmentation is crucial for species classification and smart agriculture. Existing methods combine handcrafted features with machine learning techniques to segment coarse leaf veins; however, extracting intricate vein patterns in this way is time consuming. To address these issues, we propose a coarse-to-fine two-stage hybrid network termed TTH-Net, which combines a Transformer and a CNN to extract veins accurately. Specifically, TTH-Net consists of two stages and a cross-stage semantic enhancement module. The first stage uses the Vision Transformer (base version) to extract global high-level feature representations. Based on these features, the second stage identifies fine-grained vein features with a CNN. To strengthen the interaction between the two stages, a cross-stage semantic enhancement module integrates the strengths of the Transformer and the CNN, which also improves the segmentation accuracy of the decoder. Extensive experiments on the public LVN dataset show that TTH-Net has significant advantages over other methods in leaf vein segmentation.

1. Introduction

Leaf vein segmentation plays a crucial role in botany and biology because of the important physiological functions and distinctive morphology of leaf veins. Segmenting leaf vein patterns can improve the accuracy of plant species classification [1], facilitate the development of smart agriculture [2], and support research on climate condition analysis [3]. Similar to the human vascular network, leaf vein patterns exhibit complex distributions and strong connectivity. Thicker veins run through the entire leaf and provide mechanical support, while thinner veins transport nutrients and therefore form a highly connected network. The veins are closely interconnected and form numerous loops, which poses significant challenges for extraction and segmentation.

To segment leaf vein patterns, early works mainly relied on chemical reagents [4] and scanners [5]. Lersten et al. [4] used a chemical clearing method that removed the leaf tissue and left behind the vein pattern. Larese et al. [5] used a scanner to obtain high-resolution leaf images and then extracted the vein pattern via unconstrained hit-or-miss transforms and adaptive thresholds. Because the available image processing techniques were limited, these extraction steps were time consuming and the results were difficult to reproduce. To improve the automation of leaf vein extraction, Price et al. [6] designed the LEAF GUI software specifically for vein extraction. This software processes leaf images and returns statistical data about the vein network and its areoles through a series of interactive thresholding and cleaning steps, which deepens users' understanding of leaf vein networks. With the development of image processing techniques, more and more machine learning-based algorithms have been employed for leaf vein segmentation. Sibi Chakkaravarthy et al. [7] used the angle between the main vein and the secondary veins and the centroid vein angle as key parameters, combined with Hough lines, to extract leaf veins. Radha et al. [8] used the Canny edge detection algorithm to detect leaf edges and veins and thereby determine the nutritional status of plants. In [9], the Scale-Invariant Feature Transform (SIFT) was used to extract local features from leaf vein images, and these features serve as important references for subsequent plant classification. Compared with methods based on chemical reagents and scanners, machine learning-based algorithms still rely on manually crafted feature extractors, which limits their level of automation. Following the remarkable performance of FCN [10] in segmentation, many CNN-based deep learning algorithms [10,11,12,13] have been developed for image segmentation. Xu et al. [14] employed existing CNN models to extract leaf veins and achieved impressive performance. Convolutional Neural Networks (CNNs) use small convolutional kernels that slide over the image to capture local information and gradually enlarge their receptive fields. By stacking multiple convolutional layers, CNNs learn hierarchical representations that capture both low-level and high-level features of the input image. However, because their receptive fields are limited, CNNs may break the continuity of long veins.

To address these issues, and inspired by the recent success of Transformers in natural language processing (NLP), this paper leverages Transformers to improve the accuracy of vein extraction. The core of the Transformer is the self-attention module. By modeling the relationships between all positions across the entire image, it produces attention coefficients with a global receptive field. Multiplying this attention map with the value features via matrix multiplication strengthens the global dependencies in the feature maps. Recent studies [15,16,17] have demonstrated the advantage of Transformers over CNNs in modeling global context. Vision Transformer (ViT) [15] divides the image into patches, flattens them into vectors, and feeds them into a Transformer, and it has proven effective compared with CNNs. This design allows ViT to capture global relationships and context, which is a key reason Transformers can outperform CNNs. Building on ViT, Segmenter [16] is a pure Transformer model designed specifically for semantic segmentation. Unlike convolution-based methods, Segmenter models global context from the first layer, which makes it highly competitive on scene-understanding datasets. Additionally, SETR [17], a variant of the ViT model, inherits the global modeling characteristics of ViT, and its extensive experiments demonstrate the superior performance of the Transformer over CNNs in semantic segmentation. Notably, SETR retains a CNN-style decoder to fully exploit the ability of CNNs to capture local details.
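As a minimal illustration of the patch-splitting step that ViT performs (a NumPy sketch, not the authors' code), the snippet below cuts an H × W × C image into N = HW/P² flattened patches of length P²·C and then applies a linear projection and a position embedding. The projection matrix `E` and the embedding `E_pos` are random placeholders, and no class token is used; both are assumptions for illustration.

```python
import numpy as np


def patchify(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into N = HW / P^2 flattened patches of length P*P*C."""
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("H and W must be divisible by the patch size")
    # (H/P, P, W/P, P, C) -> (H/P, W/P, P, P, C) so each patch is contiguous
    grid = image.reshape(h // patch, patch, w // patch, patch, c)
    grid = grid.transpose(0, 2, 1, 3, 4)
    # Row-major patch order: patch index = row * (W/P) + col
    return grid.reshape(-1, patch * patch * c)


rng = np.random.default_rng(0)
image = rng.random((256, 256, 3))
patches = patchify(image, 16)  # (256, 768): 16 x 16 patches, each 16*16*3 values

# Hypothetical linear projection E and position embedding E_pos (random here).
hidden = 768
E = rng.standard_normal((patches.shape[1], hidden)) * 0.02
E_pos = rng.standard_normal((patches.shape[0], hidden)) * 0.02
tokens = patches @ E + E_pos  # token sequence fed to the Transformer
print(patches.shape, tokens.shape)
```

Because patches are emitted in row-major order, the token sequence can later be reshaped back into a 2D grid without any extra bookkeeping.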

To achieve high-accuracy segmentation, this paper combines the strengths of the Transformer and CNN in a coarse-to-fine model termed the two-stage Transformer–CNN hybrid network (TTH-Net). Specifically, in the first stage, TTH-Net uses the ViT [15] backbone to extract feature representations rich in global context; this stage generates coarse-grained feature representations. Based on these features, TTH-Net employs a CNN in the second stage to recover fine-grained details from the ViT features. Furthermore, to improve the accuracy of the decoder, TTH-Net introduces a cross-stage semantic enhancement (CSE) module that connects the features generated by the encoders and decoders of both stages. Unlike other two-stage networks, TTH-Net uses the CSE module to fully exploit the features generated in both stages and effectively integrate the advantages of the Transformer and CNN, enabling TTH-Net to achieve the best performance among all the compared methods.

In summary, our contributions are as follows:

- (1) We extend the fusion of CNN and Transformer and propose a coarse-to-fine approach that combines the advantages of both, termed the two-stage Transformer–CNN hybrid network (TTH-Net), for leaf vein segmentation.

- (2) We introduce the CSE module to guide the feature fusion between the CNN and the Transformer. This approach departs from the traditional stage-wise cascade and significantly improves the segmentation accuracy of the decoder.

- (3) Extensive experiments on the LVN dataset show that the proposed TTH-Net achieves state-of-the-art performance. Additionally, ablation experiments validate the necessity of each stage and of the CSE module.

The remainder of this paper is organized as follows. Section 2 reviews typical algorithms for leaf vein segmentation and two-stage designs. Section 3 introduces the components of the proposed TTH-Net. Section 4 presents the experimental results and discussions. Finally, Section 5 concludes the paper.

2. Related Work

In this section, we first summarize and analyze existing work on leaf vein segmentation. We then introduce applications of two-stage algorithms and, finally, present the motivation for our work.

Leaf vein segmentation. Leaf vein segmentation is crucial for applications in botany and biology, and many efforts [6,18,19,20,21,22,23] have been dedicated to improving its accuracy and efficiency. Bühler et al. [18] automatically segmented and analyzed leaf veins in images obtained with different imaging modalities, such as microscopy and macro photography. They designed a powerful and user-friendly image analysis tool called phenoVein, which greatly facilitates the study of plant phenotypes in ecology and genetics research. Kirchgeßner et al. [19] represented leaf veins as B-splines that contain hierarchical information about the vascular system and tracked structures by considering spatial information. Their algorithm samples the previously estimated affine growth rates along and perpendicular to the veins and represents them in physiological coordinates. To segment leaf vein patterns efficiently, Price et al. [6] designed a user-assisted software tool termed the Leaf Extraction and Analysis Framework Graphical User Interface (LEAF GUI) to enhance researchers' understanding of leaf network structures. LEAF GUI considers the size, position, and connectivity of the network veins, as well as the size, shape, and position of the areoles they enclose, and it returns statistical data about the leaf vein network after a series of interactive thresholding and cleaning steps. Blonder et al. [20] developed theories for absorption- and phase-contrast X-ray imaging and, using a synchrotron light source and two commercial X-ray instruments, successfully segmented both major and minor veins. Salima et al. [21] treated the leaf vein structure as a crucial biological feature and used the Hessian matrix to perform grouped structure segmentation of images; by analyzing the eigenvalues at each pixel, they obtained segmentation results based on the characteristics of the leaf vein structure.
Katyal et al. [22] proposed a leaf vein segmentation method that uses odd Gabor filters and morphological operations to achieve more precise vein segmentation. Compared with other algorithms, the odd Gabor filter produces more precise outputs because it effectively detects fine fibrous structures in leaves, such as tertiary vein branches. More recently, Xu et al. [14] used a convolutional neural network (CNN) to segment leaf veins end to end, which is both automatic and accurate. Although CNNs have significantly improved the automation and accuracy of leaf vein segmentation, most current methods still rely on traditional machine learning algorithms. There is therefore great potential for deep learning to further boost performance.

Two-stage algorithm. Two-stage algorithms are widely used for image processing problems such as image super-resolution [24], single-image reflection removal [25], segmentation [26,27], intrusion detection [28], eigenvalue detection [29], image restoration [30], and image denoising [31,32]. For example, Fan et al. [24] achieved a favorable balance between accuracy and speed by combining a deep residual design of constrained depth with a two-stage structure and a lightweight two-layer PConv residual block. Li et al. [25] proposed a two-stage Reflection-Aware Guided Network (RAGNet) for single-image reflection removal, which alleviates a limitation of most existing methods in using the results of the previous stage to guide transmission estimation. Wang et al. [26] proposed a two-stage segmentation network that incorporates global and local image region fitting energies by using active contours. Its first stage uses a Gaussian distribution to obtain coarse features, and its second stage refines local details based on the results of the first stage; the method is effective on both synthetic and real-world images. Ong et al. [27] designed a two-stage hierarchical artificial neural network in which the first stage captures the main colors from a fixed feature map in an unsupervised manner and the second stage controls the number of color clusters to improve segmentation quality. For image restoration, Chen et al. [30] proposed a two-step approach that predicts the features of missing regions when object features are severely missing, which significantly reduces texture blurring and feature discontinuity. Cai et al. [31] used a two-phase approach to restore images corrupted by blur and impulse noise, identifying outlier candidates in the first phase and applying variational methods in the second. Tian et al. [32] extended a CNN-based three-stage network with the wavelet transform for image denoising. Although two-stage algorithms are widely applicable to image processing tasks, most existing methods neglect the interaction between the two stages, which limits model performance.

In this paper, our proposed two-stage hybrid network (TTH-Net) integrates the strengths of both the Transformer and CNN and achieves state-of-the-art performance in leaf vein segmentation. TTH-Net uses deep learning to significantly improve the automation of vein segmentation while achieving high accuracy. Additionally, unlike other two-stage methods, TTH-Net lets the features generated by both stages interact extensively and guides the representation of long-range features via auxiliary loss functions.

3. The Proposed Method

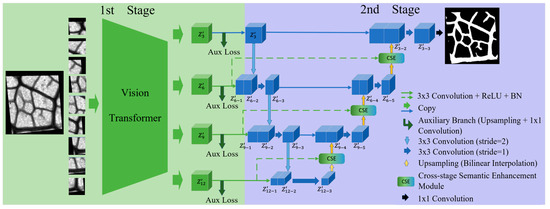

In this section, we propose a coarse-to-fine two-stage Transformer–CNN hybrid network (TTH-Net) for leaf vein segmentation. TTH-Net consists of two stages and the cross-stage semantic enhancement (CSE) module, as shown in Figure 1. The first stage uses ViT to extract global context, while the second stage uses the features generated by the Transformer to guide a CNN in recovering fine veins. Additionally, the CSE module facilitates the interaction between the two stages and further improves the segmentation accuracy of the decoder.

Figure 1.

The pipeline of the proposed TTH-Net.

3.1. First Stage: Coarse-Grained Feature Extraction

To model the long-range dependencies of leaf veins, the Vision Transformer (ViT) [15] is used to extract more representative features. Unlike a CNN, ViT uses its self-attention module to extend the receptive field to the entire leaf image, which gives it strong global modeling capability. Thus, in this paper, we take ViT as the encoder to capture coarse-grained vein features.

Specifically, the ViT encoder consists of self-attention modules, Multi-Layer Perceptrons (MLPs), and LayerNorm. The input $x \in \mathbb{R}^{H \times W \times C}$ (H, W, and C are the height, width, and channel dimensions, respectively) is first split into N flattened patches, where $N = HW/P^2$ and P is the width/height of each patch. As a result, the original input is reshaped into $x_p \in \mathbb{R}^{N \times (P^2 \cdot C)}$. By flattening these patches into one-dimensional vectors and projecting them linearly with a matrix E, we obtain the input $x_p E$ of ViT. To compensate for the positional information lost during patching, a position embedding $E_{pos}$ is added to $x_p E$, forming the new input $z_0 = x_p E + E_{pos}$. After the LayerNorm function and linear mapping operations, we obtain the Query (Q), Key (K), and Value (V). The self-attention module then models the global dependencies among the vectors, which can be calculated as

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d}}\right)V \tag{1}$$

where d represents the dimension of the feature vectors. After the residual connection, $z'_l = \mathrm{MSA}(\mathrm{LN}(z_{l-1})) + z_{l-1}$ is generated, where MSA denotes multi-head self-attention and LN denotes LayerNorm. The LayerNorm function and MLP are then used to integrate contexts and output $z_l$, which can be formulated as

$$z_l = \mathrm{MLP}(\mathrm{LN}(z'_l)) + z'_l \tag{2}$$

Finally, by repeating this process over the encoder layers, we obtain coarse-grained representations. To facilitate fine-grained feature recovery in the next stage, we retain the outputs of the third, sixth, ninth, and twelfth layers and reshape them into 2D feature maps. Note that these feature maps share the same resolution. In addition, we introduce four auxiliary losses to guide ViT in extracting coarse-grained feature representations.
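The following NumPy sketch illustrates one way to implement the encoder steps described above: LayerNorm, multi-head scaled dot-product self-attention, residual connections, and an MLP, repeated over twelve layers, with the outputs of layers 3, 6, 9, and 12 reshaped into 2D feature maps. It is a simplified stand-in rather than the authors' implementation: LayerNorm has no learnable scale or shift, biases are omitted, GELU is assumed as the MLP activation, the weights are random, and the dimensions are scaled down from ViT-Base (hidden size 768, 12 heads, MLP size 3072) to 64, 4, and 256 so that it runs quickly.

```python
import numpy as np


def layer_norm(x, eps=1e-6):
    # LayerNorm over the feature axis (no learnable affine, for brevity)
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def gelu(x):
    # tanh approximation of GELU (assumed activation)
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


def init_block(rng, dim, mlp_dim):
    def w(a, b):
        return rng.standard_normal((a, b)) * 0.02
    return {"Wq": w(dim, dim), "Wk": w(dim, dim), "Wv": w(dim, dim),
            "Wo": w(dim, dim), "W1": w(dim, mlp_dim), "W2": w(mlp_dim, dim)}


def encoder_block(z, p, heads):
    n, dim = z.shape
    d = dim // heads
    h = layer_norm(z)
    # Project to Q, K, V and split into heads -> (heads, N, d)
    q, k, v = [(h @ p[name]).reshape(n, heads, d).transpose(1, 0, 2)
               for name in ("Wq", "Wk", "Wv")]
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d))  # scaled dot-product attention
    msa = (attn @ v).transpose(1, 0, 2).reshape(n, dim) @ p["Wo"]
    z_prime = z + msa                                        # residual after attention
    return z_prime + gelu(layer_norm(z_prime) @ p["W1"]) @ p["W2"]  # residual after MLP


def encode(tokens, blocks, heads, keep=(3, 6, 9, 12)):
    n, dim = tokens.shape
    g = int(round(n ** 0.5))  # square token grid assumed
    saved, z = [], tokens
    for i, p in enumerate(blocks, start=1):
        z = encoder_block(z, p, heads)
        if i in keep:
            # (N, D) token sequence -> (D, g, g) 2D feature map
            saved.append(z.T.reshape(dim, g, g))
    return saved


rng = np.random.default_rng(0)
dim, heads, mlp_dim = 64, 4, 256  # scaled down from ViT-Base (768, 12, 3072)
tokens = rng.standard_normal((16 * 16, dim))
blocks = [init_block(rng, dim, mlp_dim) for _ in range(12)]
features = encode(tokens, blocks, heads)
print([f.shape for f in features])  # four maps of shape (64, 16, 16)
```

All four retained maps have the same spatial size, matching the statement that these features share one resolution.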

3.2. Second Stage: Fine-Grained Feature Recovery

To enhance the ability of TTH-Net to recognize fine-grained features, we extend the ViT of stage one by cascading a U-shaped CNN. Although Transformers excel at modeling global context, fine-grained vein recognition is equally important for leaf vein segmentation. The fine-grained feature recovery network consists of an encoder and a decoder, and the four feature maps retained from stage one serve as the inputs of stage two. Specifically, the first of these feature maps is processed by a convolution, Batch Normalization (BN), and a ReLU function. The next stage-one feature map is then stacked together with the downsampled result of the previous step, and another convolution, BN, and ReLU extract more vein-related details from the new feature map. By repeating this process, TTH-Net generates increasingly high-level representations. In the decoder, bilinear interpolation is used to restore the vein semantics. To better combine the advantages of the Transformer and CNN, we introduce the cross-stage semantic enhancement (CSE) module [33] to facilitate the cross-stage fusion of long-distance features. For example, an output of the encoder and its corresponding feature map are sent into the CSE module to generate an enhanced feature map, which is concatenated with the corresponding decoder features and passed through a convolution, BN, and ReLU to obtain new feature maps. After these operations are stacked, a final convolution at the end of the network maps the features to the target classes. More details about the CSE module are introduced in the following subsection.
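To make the stage-two encoder step concrete, the NumPy sketch below applies a convolution, Batch Normalization, and ReLU, uses a stride-2 convolution to halve the resolution (the downsampling choice stated in Section 4.3), and then stacks the result with another feature map along the channel axis. The 3 × 3 kernel with padding 1, the use of per-image statistics in BN without learnable scale and shift, the feature-map sizes, and the reading of "stacking" as channel concatenation are all assumptions; the loop-based convolution favors clarity over speed.

```python
import numpy as np


def conv2d(x, w, stride=1, padding=1):
    """Naive 2D convolution (cross-correlation, as in deep-learning libraries).

    x: (C_in, H, W); w: (C_out, C_in, k, k) -> (C_out, H_out, W_out)
    """
    _, h, wd = x.shape
    k = w.shape[-1]
    xp = np.pad(x, ((0, 0), (padding, padding), (padding, padding)))
    h_out = (h + 2 * padding - k) // stride + 1
    w_out = (wd + 2 * padding - k) // stride + 1
    out = np.empty((w.shape[0], h_out, w_out))
    for i in range(h_out):
        for j in range(w_out):
            win = xp[:, i * stride:i * stride + k, j * stride:j * stride + k]
            out[:, i, j] = np.tensordot(w, win, axes=([1, 2, 3], [0, 1, 2]))
    return out


def batch_norm(x, eps=1e-5):
    # Per-channel normalization with this image's statistics (no learnable affine)
    mu = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def conv_bn_relu(x, w, stride=1):
    return np.maximum(batch_norm(conv2d(x, w, stride=stride)), 0.0)


rng = np.random.default_rng(0)
prev = rng.standard_normal((16, 32, 32))      # previous encoder output (hypothetical size)
vit_feat = rng.standard_normal((16, 16, 16))  # a stage-one feature map (hypothetical size)
down = conv_bn_relu(prev, rng.standard_normal((16, 16, 3, 3)) * 0.1, stride=2)  # (16, 16, 16)
stacked = np.concatenate([down, vit_feat], axis=0)                            # (32, 16, 16)
out = conv_bn_relu(stacked, rng.standard_normal((32, 32, 3, 3)) * 0.1)        # (32, 16, 16)
print(down.shape, stacked.shape, out.shape)
```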

3.3. Cross-Stage Semantic Enhancement Module

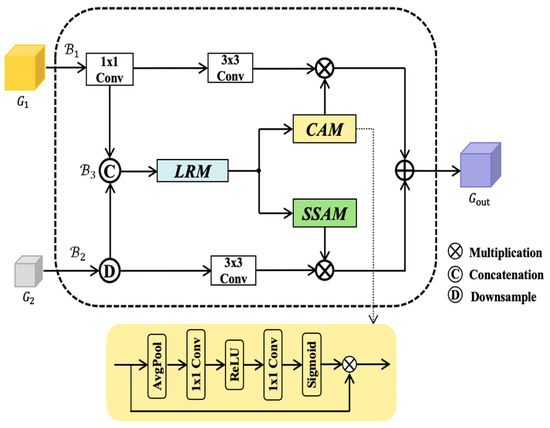

The multi-stage approach produces increasingly refined estimates by increasing the depth of the network and the number of information-flow paths. However, semantic gaps exist between cross-stage features, and simply adding them together not only fails to improve performance but also introduces background noise that perturbs the segmentation results. To bridge the semantic gap between stages, we introduce the cross-stage semantic enhancement (CSE) module [33], shown in Figure 2, which efficiently models long-range dependencies and improves model performance.

Figure 2.

Illustration of cross-stage semantic enhancement module.

The CSE module consists of three main branches, built around SSAM, CAM, and LRM, respectively, and each branch performs part of the cross-stage semantic enhancement. Specifically, SSAM guides the deeper stage to aggregate high-resolution features, and CAM reweights the channels. In addition, the LRM branch generates refined features, which guide SSAM and CAM in fusing the features of different scales from the two input feature maps.

Formally, let $X_h$ denote the coarse feature maps and $X_l$ the low-level feature maps that enter the module. We first apply a convolution to $X_h$ to obtain $X_h'$, and $X_l'$ is obtained by downsampling $X_l$. Concatenating $X_h'$ with $X_l'$ gives $X_{cat}$, which is sent into the LRM to obtain the refined features $X_r$. Then, $X_h$ is processed by a convolution, while CAM applies global average pooling to extract global context from $X_r$. Multiplying their results yields $F_c$, in which irrelevant information is filtered out:

$$F_c = \mathrm{Conv}(X_h) \otimes \mathrm{CAM}(X_r) \tag{3}$$

where $\mathrm{CAM}(X_r)$ and $X_r$ denote the results generated by CAM and LRM, respectively, and $\otimes$ denotes element-wise multiplication. In parallel, a convolution is applied to $X_l'$, and SSAM aggregates discriminative contextual information from $X_r$. Their element-wise product gives $F_s$:

$$F_s = \mathrm{Conv}(X_l') \otimes \mathrm{SSAM}(X_r) \tag{4}$$

where $\mathrm{SSAM}(X_r)$ is the feature map generated by SSAM. Finally, the output of the CSE module is obtained by fusing $F_c$ and $F_s$.
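The internals of SSAM, CAM, and LRM are not specified in this section, so the NumPy sketch below only mirrors the described data flow with hypothetical stand-ins: a 1 × 1 convolution on the concatenated features in place of LRM, global average pooling plus a sigmoid as the channel gate in place of CAM, a channel mean plus a sigmoid as the spatial gate in place of SSAM, 2 × 2 average pooling for downsampling, and addition as the final fusion. Every one of these choices is an assumption for illustration, not the CSE module of [33].

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def conv1x1(x, w):
    """1 x 1 convolution as channel mixing: x (C_in, H, W), w (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def avg_pool2(x):
    """2 x 2 average pooling that halves H and W (assumed downsampling)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def cam(x):
    """Hypothetical channel gate: global average pooling + sigmoid -> (C, 1, 1)."""
    return sigmoid(x.mean(axis=(1, 2), keepdims=True))


def ssam(x):
    """Hypothetical spatial gate: channel mean + sigmoid -> (1, H, W)."""
    return sigmoid(x.mean(axis=0, keepdims=True))


def cse_fuse(x_high, x_low, w):
    """x_high: coarse map (C, H, W); x_low: low-level map (C, 2H, 2W)."""
    high = conv1x1(x_high, w["high"])
    low = avg_pool2(x_low)                                    # match the coarse resolution
    refined = conv1x1(np.concatenate([high, low]), w["lrm"])  # stand-in for LRM
    f_c = conv1x1(x_high, w["c"]) * cam(refined)              # channel-gated branch
    f_s = conv1x1(low, w["s"]) * ssam(refined)                # spatially gated branch
    return f_c + f_s                                          # fusion by addition (assumed)


rng = np.random.default_rng(0)
C = 8
w = {"high": rng.standard_normal((C, C)) * 0.1, "lrm": rng.standard_normal((C, 2 * C)) * 0.1,
     "c": rng.standard_normal((C, C)) * 0.1, "s": rng.standard_normal((C, C)) * 0.1}
x_high = rng.standard_normal((C, 8, 8))
x_low = rng.standard_normal((C, 16, 16))
out = cse_fuse(x_high, x_low, w)
print(out.shape)  # (8, 8, 8)
```

Both gates broadcast against full feature maps, so the channel gate rescales whole channels while the spatial gate rescales individual positions.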

3.4. Loss Function

The total loss of TTH-Net consists of two parts. The first part is computed on the outputs of multiple intermediate layers of the first-stage ViT: after an MLP module and upsampling, these auxiliary losses guide the Transformer in recognizing coarse-grained features. The second part measures the difference between the output of the second stage and the labels. By weighting the two parts, TTH-Net learns to segment leaf veins accurately. The loss is calculated as follows:

$$\mathcal{L} = \mathcal{L}_{Dice}(\hat{Y}, Y) + \lambda \sum_{i=1}^{4} \mathrm{CE}(\hat{Y}_i, Y) \tag{5}$$

where CE is the cross-entropy loss function [34], $\mathcal{L}_{Dice}$ represents the dice loss function [35], $\hat{Y}_i$ are the upsampled auxiliary predictions from the four retained ViT layers, $\hat{Y}$ is the prediction of the second stage, Y is the label, and the hyperparameter λ controls the fusion coefficient of the loss functions.
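A minimal NumPy sketch of how such a combined loss could be computed, assuming (as the loss-function ablation in Section 4.5 suggests) cross-entropy on the four auxiliary ViT outputs and Dice loss on the stage-two output. Placing λ on the auxiliary term, the soft-Dice formulation, and the two-channel (background, vein) logit layout are assumptions; the auxiliary MLP heads and upsampling are omitted, so the auxiliary logits are taken to be at label resolution already.

```python
import numpy as np


def softmax(logits, axis=0):
    z = logits - logits.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def cross_entropy(logits, target):
    """logits: (K, H, W) class scores; target: (H, W) integer labels. Mean pixel CE."""
    z = logits - logits.max(axis=0, keepdims=True)
    log_p = z - np.log(np.exp(z).sum(axis=0, keepdims=True))
    rows, cols = np.indices(target.shape)
    return float(-log_p[target, rows, cols].mean())


def dice_loss(logits, target, eps=1e-6):
    """Soft Dice loss on the vein (class 1) probability of a two-class output."""
    p = softmax(logits)[1]
    t = (target == 1).astype(float)
    inter = (p * t).sum()
    return float(1.0 - (2.0 * inter + eps) / (p.sum() + t.sum() + eps))


def total_loss(aux_logits, final_logits, target, lam):
    # Assumed form: Dice on the stage-two output plus lambda-weighted CE
    # summed over the four auxiliary ViT outputs.
    aux = sum(cross_entropy(a, target) for a in aux_logits)
    return dice_loss(final_logits, target) + lam * aux


rng = np.random.default_rng(0)
target = rng.integers(0, 2, size=(64, 64))
aux = [rng.standard_normal((2, 64, 64)) for _ in range(4)]
final = rng.standard_normal((2, 64, 64))
print(total_loss(aux, final, target, lam=1.0))  # lam=1.0 is illustrative, not the tuned value
```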

4. Experiment

4.1. Dataset

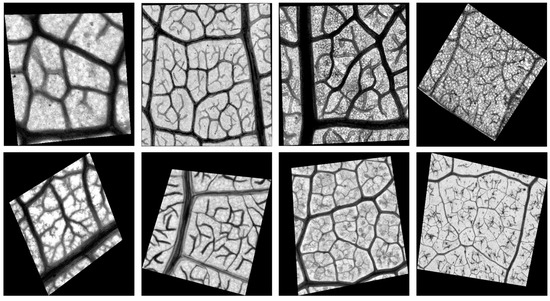

The public Leaf Venation Network (LVN) dataset [36] consists of images of leaf venation networks from tree species in Malaysian Borneo. It contains 726 leaf samples from 295 species belonging to 50 families, collected in eight forest plots in the state of Sabah. The selected plots span a gradient of logging intensity and consist of mixed lowland forests at different regeneration stages. Each plot covers 1 hectare, and all tree stems with a diameter at breast height (DBH) ≥ 10 cm were marked. In addition, a stratified random design was used to sample trees so that rare and smaller species were captured. Within each permanent plot, three 20 × 20 m subplots were selected, and all trees within the subplots were sampled. Leaves were collected from each stem under both sunlit and shaded conditions. The image resolution is approximately 50 million pixels. Because of limited GPU memory, we use patches sliced from the LVN images as training samples. An original image and its cropped patch are shown in Figure 3.

Figure 3.

Leaf images in the LVN dataset.

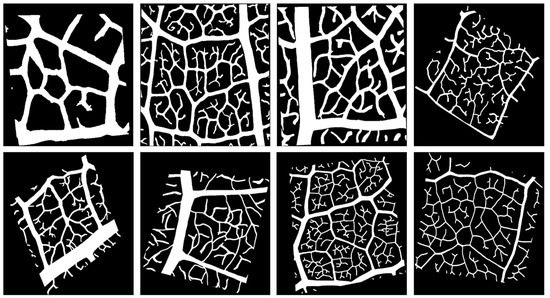

For the ground truth, the LVN dataset provides labels corresponding to the cropped images. A compound microscope equipped with a 2× de-color objective and a color camera with a resolution of 3840 × 2748 was used to capture images of each leaf. The complete image of the sampled area was obtained by digitally stitching together 9–16 overlapping image fields per sample using a digital tablet and the GIMP software (GNU). The images were then preprocessed in MATLAB by retaining the green channel, which has the maximum contrast after staining, and applying contrast-limited adaptive histogram equalization. All vein textures were manually annotated, as shown in Figure 4. Further details of the processing can be found in [36].

Figure 4.

Ground truth in the LVN dataset (Corresponding with Figure 3).

4.2. Evaluation Metrics

To comprehensively evaluate the experimental results, we selected four metrics commonly used in segmentation tasks: the Dice coefficient (Dice) [37], Sensitivity (Sen) [38], Specificity (Spe), and Accuracy (Acc) [39]. Dice is a widely used similarity metric for evaluating image segmentation results; it measures the overlap between the predicted result and the ground-truth label. Sen measures the model's capability to correctly identify positive instances, Spe measures its capability to correctly identify negative instances, and Acc estimates the proportion of samples that the model classifies correctly. They are calculated as follows:

$$\mathrm{Dice} = \frac{2TP}{2TP + FP + FN} \tag{6}$$

$$\mathrm{Sen} = \frac{TP}{TP + FN} \tag{7}$$

$$\mathrm{Spe} = \frac{TN}{TN + FP} \tag{8}$$

$$\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN} \tag{9}$$

where TP, TN, FP, and FN represent the number of true positives, true negatives, false positives, and false negatives, respectively.
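These metrics can be computed from binary masks as in the short Python sketch below, which treats prediction and label as flattened 0/1 sequences (1 = vein). It also reports IoU, TP / (TP + FP + FN), because the result tables in Section 4 are discussed in terms of IoU; returning 0.0 for an empty denominator is an assumption of this sketch.

```python
def confusion_counts(pred, label):
    """Count TP, TN, FP, FN for flattened binary masks (1 = vein, 0 = background)."""
    tp = tn = fp = fn = 0
    for p, y in zip(pred, label):
        if p and y:
            tp += 1
        elif not p and not y:
            tn += 1
        elif p:
            fp += 1
        else:
            fn += 1
    return tp, tn, fp, fn


def ratio(num, den):
    return num / den if den else 0.0  # empty denominator -> 0.0 (assumption)


def metrics(tp, tn, fp, fn):
    return {
        "Dice": ratio(2 * tp, 2 * tp + fp + fn),
        "IoU": ratio(tp, tp + fp + fn),
        "Sen": ratio(tp, tp + fn),
        "Spe": ratio(tn, tn + fp),
        "Acc": ratio(tp + tn, tp + tn + fp + fn),
    }


pred = [1, 1, 0, 0, 1, 0, 1, 0]
label = [1, 0, 0, 0, 1, 1, 1, 1]
m = metrics(*confusion_counts(pred, label))
print({k: round(v, 4) for k, v in m.items()})
# {'Dice': 0.6667, 'IoU': 0.5, 'Sen': 0.6, 'Spe': 0.6667, 'Acc': 0.625}
```

In practice the counts are accumulated over every pixel of every test patch before the ratios are taken.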

4.3. Implementation Details

We implement the proposed method with the PyTorch framework on Ubuntu 18.04. NVIDIA GeForce RTX 3090 graphics cards with 24 GB of memory are used to accelerate training. For the experiments on the LVN dataset, the batch size and the total number of iterations are set to 8 and 120,000, respectively. We employ the SGD optimizer with a polynomial learning rate decay schedule. The initial learning rate and the weight decay are set to 0.008 and 0.9, respectively. All images are augmented with random rotation, random translation, random zooming, random shearing, and horizontal flipping, with ratios between 0.5 and 2. In addition, we employ a sliding window approach [40] for both training and testing: each image is cropped into patches of size 256 × 256 with a stride of 128 × 128, and undersized regions are filled with a pixel value of 255.
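The sliding-window cropping and the polynomial learning-rate decay can be sketched as follows. The 256 × 256 window, the 128 stride, the fill value of 255, the base learning rate of 0.008, and the 120,000 iterations come from the text; padding only at the bottom and right edges and the decay exponent `power=0.9` are assumptions, since the exponent is not stated.

```python
import math

import numpy as np


def window_starts(length, size=256, stride=128):
    # Enough windows to cover `length`; the last one may extend past the edge.
    n = max(1, math.ceil((length - size) / stride) + 1)
    return [i * stride for i in range(n)]


def sliding_windows(image, size=256, stride=128, fill=255):
    """Pad `image` (H, W[, C]) at the bottom/right with `fill` and cut overlapping crops."""
    h, w = image.shape[:2]
    rows, cols = window_starts(h, size, stride), window_starts(w, size, stride)
    ph, pw = rows[-1] + size, cols[-1] + size
    pad = [(0, ph - h), (0, pw - w)] + [(0, 0)] * (image.ndim - 2)
    padded = np.pad(image, pad, mode="constant", constant_values=fill)
    patches = [padded[r:r + size, c:c + size] for r in rows for c in cols]
    return padded, patches


def poly_lr(iteration, base_lr=0.008, max_iter=120_000, power=0.9):
    # Polynomial decay from base_lr to 0 over max_iter iterations
    return base_lr * (1 - iteration / max_iter) ** power


image = np.zeros((600, 900, 3), dtype=np.uint8)
padded, patches = sliding_windows(image)
print(padded.shape, len(patches))  # (640, 1024, 3) 28
print(poly_lr(0), poly_lr(60_000))
```

At test time, predictions for overlapping windows would be merged (for example, averaged) and the padded border cropped away; that merging rule is not specified in the text.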

For the first-stage Transformer encoder, we select the base version of ViT as the backbone. Specifically, the ViT consists of twelve layers with a hidden size of 768, an MLP size of 3072, and twelve attention heads. The patch size is set to 16 × 16. The outputs of the third, sixth, ninth, and final layers of ViT are saved and used for fine-grained feature recognition in the second stage. In the second stage, downsampling halves the resolution by setting the convolution stride to 2, and bilinear interpolation with a ratio of 2 upsamples the feature maps in the decoder. Finally, a 1 × 1 convolution generates the final binary results.
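As a worked check of what this configuration implies, the short Python function below computes the token count, the per-head dimension, and the approximate parameter count of the ViT-Base encoder for a 256 × 256 crop. It assumes standard ViT-Base layer shapes with biases and LayerNorm scale and shift, one position embedding per patch with no class token, and a final LayerNorm; the twelve heads split the 768 hidden units into 64 per head and do not change the parameter count. The segmentation heads and the second stage are not included.

```python
def vit_base_budget(image=256, patch=16, channels=3, layers=12,
                    hidden=768, mlp=3072, heads=12):
    tokens = (image // patch) ** 2   # 16 x 16 grid -> 256 tokens
    head_dim = hidden // heads       # 768 / 12 = 64
    per_layer = (
        2 * hidden                         # LayerNorm before attention (scale + shift)
        + 3 * (hidden * hidden + hidden)   # Q, K, V projections
        + hidden * hidden + hidden         # attention output projection
        + 2 * hidden                       # LayerNorm before the MLP
        + hidden * mlp + mlp               # MLP expansion 768 -> 3072
        + mlp * hidden + hidden            # MLP projection 3072 -> 768
    )
    patch_embed = patch * patch * channels * hidden + hidden
    pos_embed = tokens * hidden            # no class token (assumption)
    final_norm = 2 * hidden
    total = layers * per_layer + patch_embed + pos_embed + final_norm
    return tokens, head_dim, per_layer, total


print(vit_base_budget())  # (256, 64, 7087872, 85843200), i.e. about 85.8M parameters
```

Each retained layer output is therefore a 256 × 768 token sequence, which reshapes into a 768 × 16 × 16 feature map for the second stage.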

4.4. Comparisons with the State of the Art

In this section, we evaluate the performance of the proposed model. For a comprehensive comparison, we select as benchmarks the CNN models FCN [10], U-Net [12], GhostNet [13], MobileNetv3 [41], and DeepLabv3+ [42]; the Transformer models ViT [15], Segmenter [16], Swin Transformer [43], SETR [17], and DeiT [44]; and the two-stage models ALSNet [45], TSUNet [46], DDNet [47], APCNet [48], CMMANet [49], and PCDNet [50]. For GhostNet and ViT, we keep their encoders and replace the original classification head with a convolution. All models are trained and tested under the same settings.

Table 1 reports the results of the CNN and Transformer variants, as well as those of the two-stage algorithms. For leaf vein segmentation, Transformers show significant advantages over CNNs owing to their global modeling capability. The deep learning-based two-stage algorithms also perform well, and our proposed TTH-Net achieves the best scores on all metrics. Specifically, compared with DeepLabv3+, TTH-Net improves IoU, Spe, Sen, and Acc by 2.73%, 1.05%, 2.30%, and 1.89%, respectively. Compared with the Swin Transformer, TTH-Net improves IoU, Spe, Sen, and Acc by 2.1%, 1.19%, 0.61%, and 1.15%, respectively. We also report the mean (standard deviation) of each metric over 5-fold cross-validation. As shown in Table 1, Transformer variants such as Segmenter and DeiT exhibit a stronger dependency on the data than CNNs, whereas TTH-Net, which integrates the advantages of CNN and Transformer, shows a lower dependency on the data. We therefore conclude that TTH-Net achieves state-of-the-art performance on the LVN dataset for leaf vein segmentation with a lower dependency on the data.

Table 1.

The results of the proposed TTH-Net and competitors on the LVN dataset. Note that each metric is reported via the mean (standard deviation) of 5-fold cross-validation.

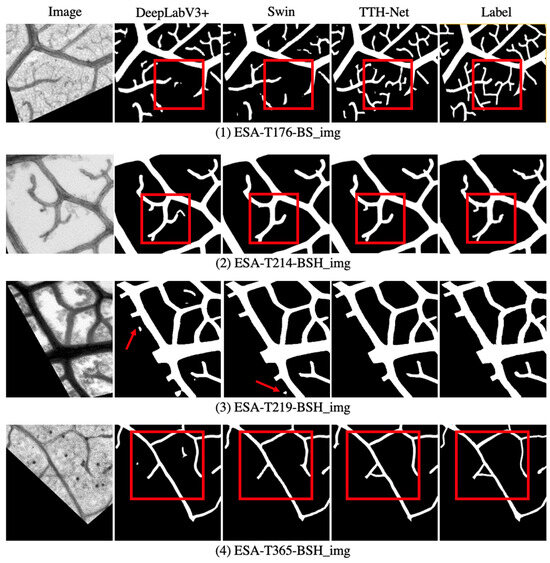

Figure 5 visually compares the highest-scoring CNN, Transformer, and two-stage models. The CNN model, DeepLabv3+, detects vein textures relatively coarsely, producing numerous isolated pixels and some incorrectly classified curves. The Swin Transformer more often misclassifies vein curve pixels as background, which also leads to suboptimal performance. In contrast, TTH-Net segments fine-grained vein textures with strong curve continuity, and its results contain very few isolated pixels. These observations indicate that TTH-Net segments veins accurately and achieves state-of-the-art performance in leaf vein segmentation.

Figure 5.

Visual comparison between TTH-Net and other competitors on the LVN dataset for leaf vein segmentation. The red rectangular boxes and arrows highlight the advantages of TTH-Net.

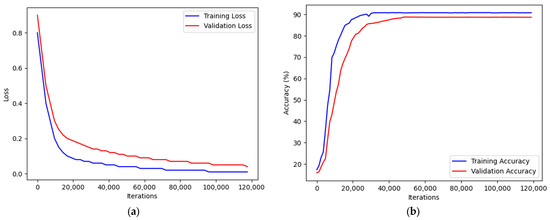

Finally, we record the loss and accuracy curves versus the number of iterations during training and validation, as shown in Figure 6. Figure 6a shows that the training and validation loss curves of TTH-Net are consistent, which suggests that the model neither overfits nor underfits. In Figure 6b, the accuracy of TTH-Net keeps improving as the number of iterations increases, and the optimal number of iterations is 50,000.

Figure 6.

Training performance plots. (a) Loss vs. iterations. (b) Accuracy vs. iterations.

4.5. Ablation Study

To validate the necessity of each stage and module in TTH-Net, we conduct ablation experiments in this subsection. Specifically, starting from the first stage, we successively add the second stage and the CSE module and record their performance in Table 2.

Table 2.

Ablation studies of the proposed TTH-Net. Note that each metric is reported via the mean (standard deviation) of 5-fold cross-validation.

First, by adding a segmentation head on top of the first stage, we obtain first-stage segmentation results with an IoU of 83.83%, Spe of 81.57%, Sen of 86.03%, and Acc of 87.67%. Adding the CNN of the second stage then improves IoU, Spe, Sen, and Acc by 0.77%, 0.77%, 0.94%, and 0.79%, respectively. On top of the two stages, the CSE module brings further increases of 0.77% in IoU, 0.6% in Spe, 0.91% in Sen, and 0.78% in Acc. These results show that each stage and the CSE module are necessary for high-precision leaf vein segmentation.

Additionally, we conduct ablation experiments on the loss function and the hyperparameter λ. For the loss function, we consider commonly used options such as focal loss [51], cross-entropy (CE) loss [34], and dice loss (DL) [35]. As shown in Table 3, among all combinations of loss functions, TTH-Net achieves the best performance when the first stage uses CE as the auxiliary loss and the second stage adopts DL. For the hyperparameter λ (Table 4), we find that when , TTH-Net achieves the highest scores across all metrics.

Table 3.

Ablation studies of the loss function. Note that each metric is reported via the mean (standard deviation) of 5-fold cross-validation.

Table 4.

Ablation studies of the hyperparameter λ. Note that each metric is reported via the mean (standard deviation) of 5-fold cross-validation.

5. Conclusions

In this paper, we propose a novel coarse-to-fine algorithm termed the two-stage Transformer–CNN hybrid network (TTH-Net) for the leaf vein segmentation task. TTH-Net consists of two stages and a cross-stage semantic enhancement (CSE) module. The first stage uses a Transformer to obtain global feature representations, while the second stage recognizes fine-grained features with a CNN. To improve performance in the decoding stage, the CSE module fuses the advantages of the CNN and the Transformer, and the integrated features are fed into the decoder. The CSE module also bridges the semantic gaps between cross-stage features and efficiently models long-range dependencies for further accuracy improvements. Extensive experiments show that TTH-Net achieves the best performance among all the compared methods.

Author Contributions

Conceptualization, P.S.; methodology, Y.Y.; data curation, Y.Z.; writing—original draft preparation, Y.Z.; writing—review and editing, P.S.; visualization, Y.Z.; supervision, Y.Y.; project administration, P.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Tongda College of Nanjing University of Posts and Telecommunications grant No. XK006XZ19013.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data is unavailable due to privacy.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Larese, M.G.; Granitto, P.M. Finding local leaf vein patterns for legume characterization and classification. Mach. Vis. Appl. 2016, 27, 709–720.

2. Darwin, B.; Dharmaraj, P.; Prince, S.; Popescu, D.E.; Hemanth, D.J. Recognition of bloom/yield in crop images using deep learning models for smart agriculture: A review. Agronomy 2021, 11, 646.

3. Sack, L.; Scoffoni, C. Leaf venation: Structure, function, development, evolution, ecology and applications in the past, present and future. New Phytol. 2013, 198, 983–1000.

4. Lersten, N.R. Modified clearing method to show sieve tubes in minor veins of leaves. Stain Technol. 1986, 61, 231–234.

5. Larese, M.G.; Namías, R.; Craviotto, R.M.; Arango, M.R.; Gallo, C.; Granitto, P.M. Automatic classification of legumes using leaf vein image features. Pattern Recognit. 2014, 47, 158–168.

6. Price, C.A.; Symonova, O.; Mileyko, Y.; Hilley, T.; Weitz, J.S. Leaf extraction and analysis framework graphical user interface: Segmenting and analyzing the structure of leaf veins and areoles. Plant Physiol. 2011, 155, 236–245.

7. Sibi Chakkaravarthy, S.; Sajeevan, G.; Kamalanaban, E.; Varun Kumar, K.A. Automatic leaf vein feature extraction for first degree veins. In Proceedings of the Advances in Signal Processing and Intelligent Recognition Systems: Proceedings of the Second International Symposium on Signal Processing and Intelligent Recognition Systems (SIRS-2015), Trivandrum, India, 16–19 December 2015; Springer International Publishing: Berlin/Heidelberg, Germany, 2015; pp. 581–592.

8. Radha, R.; Jeyalakshmi, S. An effective algorithm for edges and veins detection in leaf images. In Proceedings of the IEEE 2014 World Congress on Computing and Communication Technologies, Trichirappalli, India, 27 February–1 March 2014; pp. 128–131.

9. Selda, J.D.S.; Ellera, R.M.R.; Cajayon, L.C.; Linsangan, N.B. Plant identification by image processing of leaf veins. In Proceedings of the International Conference on Imaging, Signal Processing and Communication, Penang, Malaysia, 26–28 July 2017; pp. 40–44.

10. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

11. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

12. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, 5–9 October 2015; Proceedings, Part III 18; Springer International Publishing: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

13. Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1580–1589.

14. Xu, H.; Blonder, B.; Jodra, M.; Malhi, Y.; Fricker, M. Automated and accurate segmentation of leaf venation networks via deep learning. New Phytol. 2021, 229, 631–648.

15. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

16. Strudel, R.; Garcia, R.; Laptev, I.; Schmid, C. Segmenter: Transformer for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 7262–7272.

17. Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H.S.; et al. Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 6881–6890.

18. Bühler, J.; Rishmawi, L.; Pflugfelder, D.; Huber, G.; Scharr, H.; Hülskamp, M.; Koornneef, M.; Schurr, U.; Jahnke, S. phenoVein—A tool for leaf vein segmentation and analysis. Plant Physiol. 2015, 169, 2359–2370.

19. Kirchgeßner, N.; Scharr, H.; Schurr, U. Robust vein extraction on plant leaf images. In Proceedings of the 2nd IASTED International Conference Visualization, Imaging and Image Processing, Málaga, Spain, 9–12 September 2002.

20. Blonder, B.; De Carlo, F.; Moore, J.; Rivers, M.; Enquist, B.J. X-ray imaging of leaf venation networks. New Phytol. 2012, 196, 1274–1282.

21. Salima, A.; Herdiyeni, Y.; Douady, S. Leaf vein segmentation of medicinal plant using hessian matrix. In Proceedings of the IEEE 2015 International Conference on Advanced Computer Science and Information Systems (ICACSIS), Depok, Indonesia, 10–11 October 2015; pp. 275–279.

22. Katyal, V. Leaf vein segmentation using Odd Gabor filters and morphological operations. arXiv 2012, arXiv:1206.5157.

23. Saleem, R.; Shah, J.H.; Sharif, M.; Yasmin, M.; Yong, H.S.; Cha, J. Mango leaf disease recognition and classification using novel segmentation and vein pattern technique. Appl. Sci. 2021, 11, 11901.

24. Fan, Y.; Shi, H.; Yu, J.; Liu, D.; Han, W.; Yu, H.; Wang, Z.; Wang, X.; Huang, T.S. Balanced two-stage residual networks for image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 161–168.

25. Li, Y.; Liu, M.; Yi, Y.; Li, Q.; Ren, D.; Zuo, W. Two-stage single image reflection removal with reflection-aware guidance. Appl. Intell. 2023, 53, 19433–19448.

26. Wang, H.; Huang, T.Z.; Xu, Z.; Wang, Y. A two-stage image segmentation via global and local region active contours. Neurocomputing 2016, 205, 130–140.

27. Ong, S.H.; Yeo, N.C.; Lee, K.H.; Venkatesh, Y.V.; Cao, D.M. Segmentation of color images using a two-stage self-organizing network. Image Vis. Comput. 2002, 20, 279–289.

28. Kaur, R.; Gupta, N. CFS-MHA: A Two-Stage Network Intrusion Detection Framework. Int. J. Inf. Secur. Priv. (IJISP) 2022, 16, 1–27.

29. Mashta, F.; Altabban, W.; Wainakh, M. Two-Stage Spectrum Sensing for Cognitive Radio Using Eigenvalues Detection. Int. J. Interdiscip. Telecommun. Netw. (IJITN) 2020, 12, 18–36.

30. Chen, Y.; Xia, R.; Zou, K.; Yang, K. FFTI: Image inpainting algorithm via features fusion and two-steps inpainting. J. Vis. Commun. Image Represent. 2023, 91, 103776.

31. Cai, J.F.; Chan, R.H.; Nikolova, M. Fast two-phase image deblurring under impulse noise. J. Math. Imaging Vis. 2010, 36, 46–53.

32. Tian, C.; Zheng, M.; Zuo, W.; Zhang, B.; Zhang, Y.; Zhang, D. Multi-stage image denoising with the wavelet transform. Pattern Recognit. 2023, 134, 109050.

33. Elhassan, M.A.; Yang, C.; Huang, C.; Legesse Munea, T.; Hong, X. S²-FPN: Scale-ware Strip Attention Guided Feature Pyramid Network for Real-time Semantic Segmentation. arXiv 2022.

34. Xue, Y.; Jin, G.; Shen, T.; Tan, L.; Wang, L. Template-guided frequency attention and adaptive cross-entropy loss for UAV visual tracking. Chin. J. Aeronaut. 2023, 36, 299–312.

35. Yeung, M.; Rundo, L.; Nan, Y.; Sala, E.; Schönlieb, C.B.; Yang, G. Calibrating the Dice loss to handle neural network overconfidence for biomedical image segmentation. J. Digit. Imaging 2023, 36, 739–752.

36. Blonder, B.; Both, S.; Jodra, M.; Majalap, N.; Burslem, D.; Teh, Y.A.; Malhi, Y. Leaf venation networks of Bornean trees: Images and hand-traced segmentations. Ecology 2019, 100.

37. Gu, Y.; Piao, Z.; Yoo, S.J. STHarDNet: Swin transformer with HarDNet for MRI segmentation. Appl. Sci. 2022, 12, 468.

38. Yang, B.; Qin, L.; Peng, H.; Guo, C.; Luo, X.; Wang, J. SDDC-Net: A U-shaped deep spiking neural P convolutional network for retinal vessel segmentation. Digit. Signal Process. 2023, 136, 104002.

39. Maqsood, A.; Farid, M.S.; Khan, M.H.; Grzegorzek, M. Deep malaria parasite detection in thin blood smear microscopic images. Appl. Sci. 2021, 11, 2284.

40. Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. UNet++: Redesigning skip connections to exploit multiscale features in image segmentation. IEEE Trans. Med. Imaging 2019, 39, 1856–1867.

41. Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

42. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

43. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022.

44. Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jégou, H. Training data-efficient image transformers & distillation through attention. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 10347–10357.

45. Tang, W.; Zou, D.; Yang, S.; Shi, J.; Dan, J.; Song, G. A two-stage approach for automatic liver segmentation with Faster R-CNN and DeepLab. Neural Comput. Appl. 2020, 32, 6769–6778.

46. Jiang, Z.; Ding, C.; Liu, M.; Tao, D. Two-stage cascaded U-Net: 1st place solution to BraTS challenge 2019 segmentation task. In Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries: 5th International Workshop, BrainLes 2019, Held in Conjunction with MICCAI 2019, Shenzhen, China, 17 October 2019; Revised Selected Papers, Part I 5; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 231–241.

47. Božič, J.; Tabernik, D.; Skočaj, D. End-to-end training of a two-stage neural network for defect detection. In Proceedings of the IEEE 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 5619–5626.

48. Liu, J.; Yang, X.; Lau, S.; Wang, X.; Luo, S.; Lee, V.C.S.; Ding, L. Automated pavement crack detection and segmentation based on two-step convolutional neural network. Comput.-Aided Civ. Infrastruct. Eng. 2020, 35, 1291–1305.

49. Park, M.H.; Cho, J.H.; Kim, Y.T. CNN Model with Multilayer ASPP and Two-Step Cross-Stage for Semantic Segmentation. Machines 2023, 11, 126.

50. Jiang, Y.; Pang, D.; Li, C.; Yu, Y.; Cao, Y. Two-step deep learning approach for pavement crack damage detection and segmentation. Int. J. Pavement Eng. 2022, 1–14.

51. Dina, A.S.; Siddique, A.B.; Manivannan, D. A deep learning approach for intrusion detection in Internet of Things using focal loss function. Internet Things 2023, 22, 100699.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).