Estimation of Earth’s Central Angle Threshold and Measurement Model Construction Method for Pose and Attitude Solution Based on Aircraft Scene Matching

Abstract

1. Introduction

- This paper proposes an approach to solving aircraft position and attitude that takes quasi-three-dimensional data as input. Applying the EPnP algorithm to these data significantly improves precision compared with solving on flat (planar) coordinates.

- The critical value of the Earth's central angle is determined, within which the three-dimensional solution in scene matching can be replaced by a planar one. This theoretical result lays the foundation for future research on position and attitude solving in scene matching.

- The inherent relationship among the camera parameters, the critical central angle, and the field of view angle is determined, and the corresponding functional relationships are established. These findings provide essential measurement principles for subsequent research in this field.

2. EarM_EPnP

- Setting up the spherical coordinate system and obtaining the spherical coordinates.

- Solving the position and attitude from the spherical coordinates with EPnP.

- Combining the EPnP results with GPS precision as the reference to determine the threshold of the Earth's central angle.

- Constructing theoretical models relating the aerial photography height, the central angle, and the field of view angle.
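
The first step can be illustrated with a minimal sketch, assuming a perfectly spherical Earth with a mean radius of 6,371 km; the paper's exact coordinate definitions are not reproduced here, and the helper names `sphere_to_cartesian` and `cartesian_to_local` are hypothetical. Latitude, longitude, and height are converted to Earth-centered Cartesian coordinates and then to a local east-north-up frame at a reference point. Unlike in a planar model, ground points away from the reference acquire a non-zero up component, and it is this curvature that a three-dimensional point set passed to an EPnP solver (for example OpenCV's `cv2.solvePnP` with `flags=cv2.SOLVEPNP_EPNP`) would retain.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # assumed mean Earth radius (spherical model, not WGS 84)


def sphere_to_cartesian(lat_deg, lon_deg, h_m=0.0, radius=EARTH_RADIUS_M):
    """Earth-centered Cartesian coordinates (m) of a point on a spherical Earth."""
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    r = radius + h_m
    return (r * math.cos(lat) * math.cos(lon),
            r * math.cos(lat) * math.sin(lon),
            r * math.sin(lat))


def cartesian_to_local(point, ref_lat_deg, ref_lon_deg, radius=EARTH_RADIUS_M):
    """Express an Earth-centered point in the east-north-up frame whose origin is the
    reference point on the sphere's surface (hypothetical helper for illustration)."""
    lat = math.radians(ref_lat_deg)
    lon = math.radians(ref_lon_deg)
    origin = sphere_to_cartesian(ref_lat_deg, ref_lon_deg, 0.0, radius)
    dx, dy, dz = (p - o for p, o in zip(point, origin))
    # Rows of the standard ECEF -> ENU rotation matrix at (lat, lon).
    east = -math.sin(lon) * dx + math.cos(lon) * dy
    north = (-math.sin(lat) * math.cos(lon) * dx
             - math.sin(lat) * math.sin(lon) * dy
             + math.cos(lat) * dz)
    up = (math.cos(lat) * math.cos(lon) * dx
          + math.cos(lat) * math.sin(lon) * dy
          + math.sin(lat) * dz)
    return east, north, up


if __name__ == "__main__":
    # A ground point 0.1 degrees (about 11 km) north of a reference point on the equator.
    p = sphere_to_cartesian(0.1, 0.0)
    e, n, u = cartesian_to_local(p, 0.0, 0.0)
    print(f"east={e:.3f} m, north={n:.3f} m, up={u:.3f} m")
```

For that example point the up component comes out at roughly -9.7 m: at a central angle of only 0.1°, the sphere has already dropped almost 10 m below the tangent plane that a flat model assumes.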

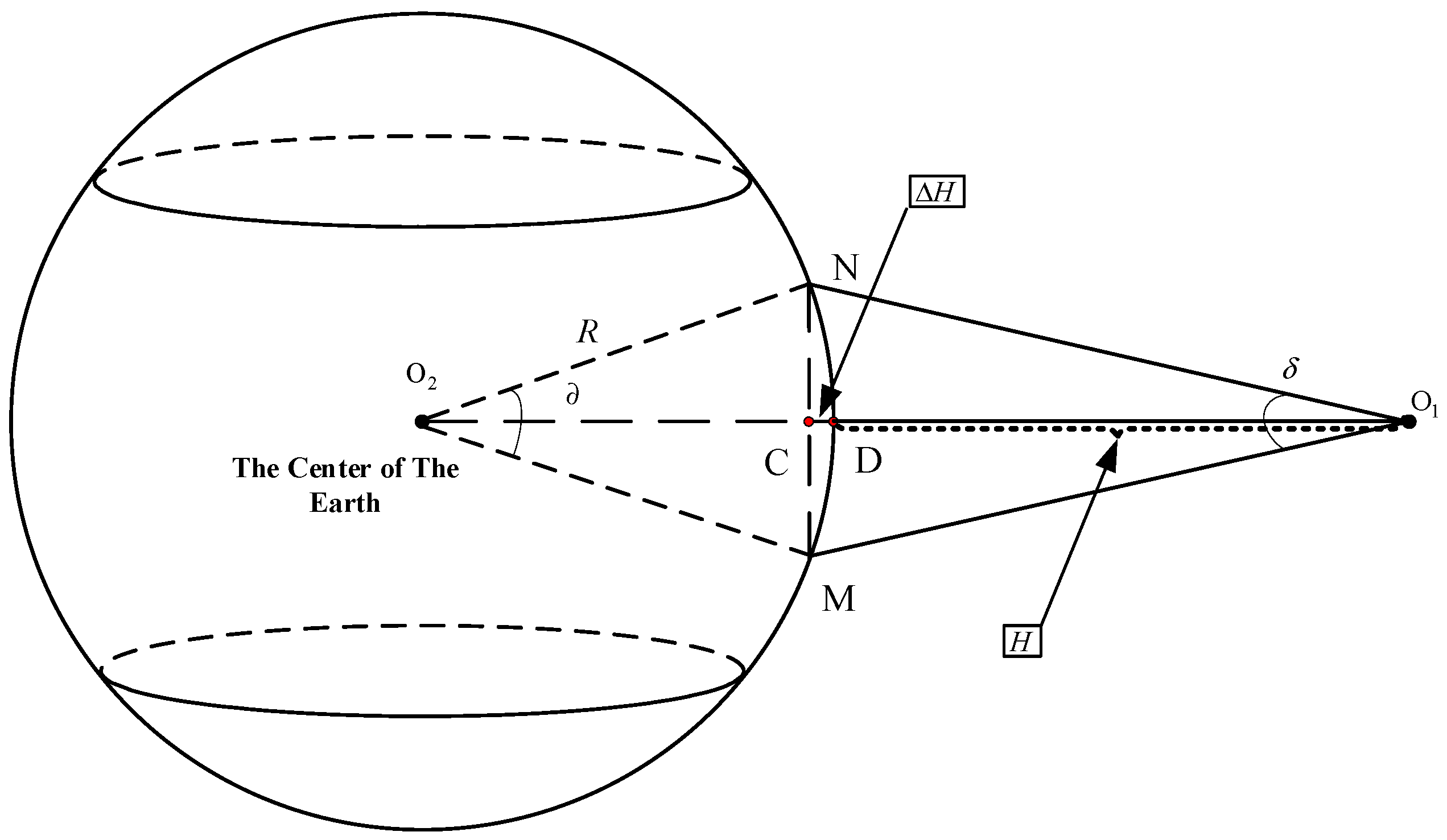

2.1. Construction of Spherical Coordinates

2.2. EPnP Positioning Posture Solving for Spherical Coordinates

2.3. Threshold Value of Central Angle

2.4. Model of Aerial Photography Height and Field of View Angle under a Fixed Central Angle

3. Simulation Experiment and Discussion

3.1. Experimental Conditions

3.2. Ablation Experiments of EarM_EPnP in Different Solution Points

3.3. Errors of EPnP under Different Central Angle Thresholds

3.4. The Relationship between Flight Height and Field Angle

3.5. Experiment on the Relationship between Attitude Error and Maximum Central Angle

3.6. Discussion

- The spherical model is a more realistic representation of the Earth, and using it in the PnP (Perspective-n-Point) method provides more accurate positioning information for the aircraft. However, the spherical model is complex and difficult to use in practical applications because of sensor limitations. This paper derives the error propagation relationship of the spherical model and calculates the corresponding central angle thresholds for errors of 1 m, 10 m, and 100 m, which simplifies the approach for engineering applications.

- The paper presents the functional relationship between flight altitude and camera field of view. For a given error range, the required sensor field of view can be determined from the flight altitude; conversely, given the sensor field of view and the error requirement, the appropriate flight altitude can be determined, which is convenient in practical applications.

- The experimental results demonstrate that the central angle threshold can be determined accurately and stably on the X and Y axes, whereas the results on the Z-axis fluctuate, which is a limitation of the method. In engineering applications, complementary sensors such as altimeters can address this issue, which is also a key direction for further research.
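
The threshold and the altitude/field-of-view relationship discussed above can be illustrated with a simplified geometric sketch. It assumes a spherical Earth of radius 6,371 km and a nadir-pointing camera with a symmetric field of view, and it uses the drop of the sphere below the tangent plane, R(1 - cos θ) at central angle θ, as a stand-in for the planar-model error. These assumptions and the function names are illustrative: this is not the error-propagation relationship derived in the paper, and the angles it produces should not be read as the paper's thresholds for 1 m, 10 m, and 100 m.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # assumed mean Earth radius (spherical model)


def central_angle_threshold(max_error_m, radius=EARTH_RADIUS_M):
    """Largest central angle (rad) at which the sphere drops at most max_error_m below
    the tangent plane, i.e. the solution of radius * (1 - cos(theta)) = max_error_m."""
    # 1 - cos(theta) = 2 sin^2(theta / 2); this form avoids cancellation for tiny angles.
    return 2.0 * math.asin(math.sqrt(max_error_m / (2.0 * radius)))


def central_angle_at_edge(height_m, half_fov_rad, radius=EARTH_RADIUS_M):
    """Central angle (rad) between the nadir point and the point where the edge ray of a
    nadir-pointing camera at height_m first meets the sphere (law of sines)."""
    s = (radius + height_m) * math.sin(half_fov_rad) / radius
    if s > 1.0:
        raise ValueError("edge ray misses the Earth (field of view too wide)")
    return math.asin(s) - half_fov_rad


def half_fov_for(central_angle_rad, height_m, radius=EARTH_RADIUS_M):
    """Half field-of-view angle (rad) whose edge ray lands at the given central angle."""
    if central_angle_rad >= math.acos(radius / (radius + height_m)):
        raise ValueError("point lies beyond the horizon")
    return math.atan2(radius * math.sin(central_angle_rad),
                      radius + height_m - radius * math.cos(central_angle_rad))


def height_for(central_angle_rad, half_fov_rad, radius=EARTH_RADIUS_M):
    """Flight height (m) at which the edge ray of the given half FOV lands at the given
    central angle on the visible side of the sphere."""
    h = (radius * math.sin(central_angle_rad) / math.tan(half_fov_rad)
         - radius * (1.0 - math.cos(central_angle_rad)))
    if h <= 0.0 or central_angle_rad >= math.acos(radius / (radius + h)):
        raise ValueError("no visible solution for this central angle and field of view")
    return h


if __name__ == "__main__":
    for err in (1.0, 10.0, 100.0):
        theta = central_angle_threshold(err)
        print(f"error {err:>5.0f} m: central angle {math.degrees(theta):.4f} deg, "
              f"ground radius {EARTH_RADIUS_M * theta / 1000:.2f} km")
    theta = central_angle_threshold(10.0)
    for h in (1_000.0, 5_000.0, 10_000.0):
        alpha = half_fov_for(theta, h)
        print(f"height {h:>7.0f} m: full FOV up to {2 * math.degrees(alpha):.1f} deg")
```

Under these assumptions the threshold grows only with the square root of the allowed error (θ ≈ √(2e/R) for small angles), so relaxing the error budget from 1 m to 100 m widens the usable central angle by a factor of about 10. `height_for` answers the inverse question raised above: the flight height at which a given sensor field of view just reaches the threshold.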

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, H.; Gong, Z.; Liu, T.; Dong, J. Estimation of Earth’s Central Angle Threshold and Measurement Model Construction Method for Pose and Attitude Solution Based on Aircraft Scene Matching. Appl. Sci. 2023, 13, 10051. https://doi.org/10.3390/app131810051
