ESL: A High-Performance Skiplist with Express Lane

Abstract

1. Introduction

- We propose a new in-memory skiplist, called ESL, which consists of a cache-optimized index level (COIL), a link-based data level, and a parent of the data level (PDL) that sits between the COIL and the data level.

- We update the COIL and the PDL asynchronously using multiple background threads.

- We develop a read technique that accelerates lookups while tolerating inconsistencies. It combines exponential search [28] with linear search to speed up read operations and to tolerate the inconsistencies that the background threads' shift operations introduce into the COIL.

2. Background

2.1. Skiplist

2.1.1. Cache-Conscious Skiplist

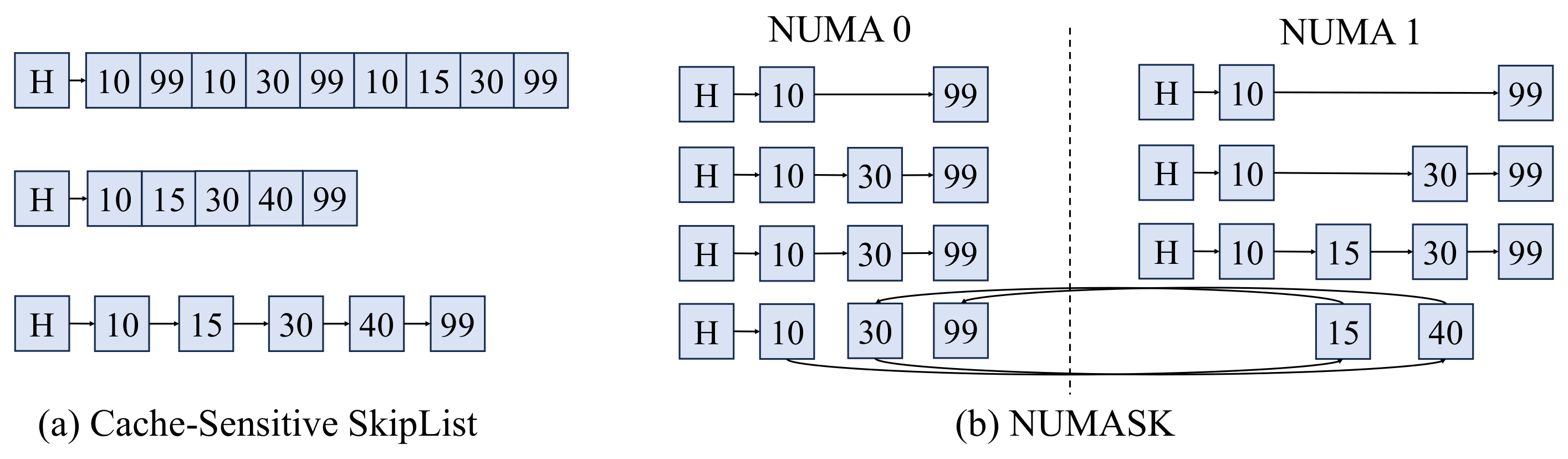

- Cache-Sensitive Skiplist (CSSL) [24] is a skiplist variant that reduces cache misses by merging the nodes of the index levels into a single array, called the fast lane. The fast lane is a contiguous array that contains all nodes except those of the data level, which holds all data in the skiplist. Because the nodes reside in a single array in sorted order, the structure is more cache-friendly. CSSL also maintains a second array, called the proxy lane, which consists of the data stored at the data level. Because the proxy lane is an array, it accelerates finding a target data node that was not found at the higher levels. However, because both the index-level nodes and the data-level nodes are stored in separate arrays, inserting or deleting a node requires numerous shift operations. As shown in [24], CSSL delays fast-lane and proxy-lane updates to mitigate this shift overhead. However, the delay leads to periodic reconstruction of the fast lane and the proxy lane, which may hinder overall performance.

- Parallel in-memory Skiplist (PSL) [25] merges multiple nodes into a single node similar to a B+-tree node, which reduces the number of cache misses when traversing the skiplist. PSL also defers updates to the higher index levels and, when the number of updates reaches a threshold, rebuilds the structure using background threads. Because it periodically rebuilds the whole index structure asynchronously, the background threads can become the performance bottleneck.
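To make the shift overhead of array-based lanes concrete, the following minimal Python sketch inserts a key into a sorted array by moving every larger element one slot to the right, and reports how many elements moved. It is a generic illustration of the cost that CSSL's fast lane and proxy lane face, not CSSL's code; the function name `insert_with_shift` is hypothetical.

```python
import bisect
from typing import List


def insert_with_shift(lane: List[int], key: int) -> int:
    """Insert key into the sorted array `lane` in place; return how many elements moved."""
    pos = bisect.bisect_left(lane, key)  # first slot whose key is >= key
    lane.append(key)                     # grow the array by one slot
    # Move every element at or after pos one slot to the right, back to front,
    # as an array-based lane would have to do in memory.
    for i in range(len(lane) - 1, pos, -1):
        lane[i] = lane[i - 1]
    lane[pos] = key
    return len(lane) - 1 - pos


if __name__ == "__main__":
    lane = [10, 20, 30, 40]
    print(insert_with_shift(lane, 5), lane)  # 4 [5, 10, 20, 30, 40]
```

Inserting a key smaller than every existing key moves all n elements, so a single insertion costs O(n) element moves in the worst case; this is the overhead that CSSL mitigates by delaying lane updates.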

2.1.2. Asynchronous Update for Index Levels

- No Hot Spot Skiplist (NHSSL) [26] decouples the levels of the skiplist and updates the index levels asynchronously using background threads. This hides the update overhead of the index levels, and NHSSL additionally employs a rebalancing operation to keep the skiplist balanced.

- NUMASK [27] builds on NHSSL and therefore also updates the index levels asynchronously. In addition, NUMASK is NUMA-aware: it replicates the index levels on every NUMA socket. NUMASK also adds an extra level to the index, called the intermediate layer, to efficiently find data stored on a remote NUMA socket.

2.2. Tree-Based In-Memory Index Structure

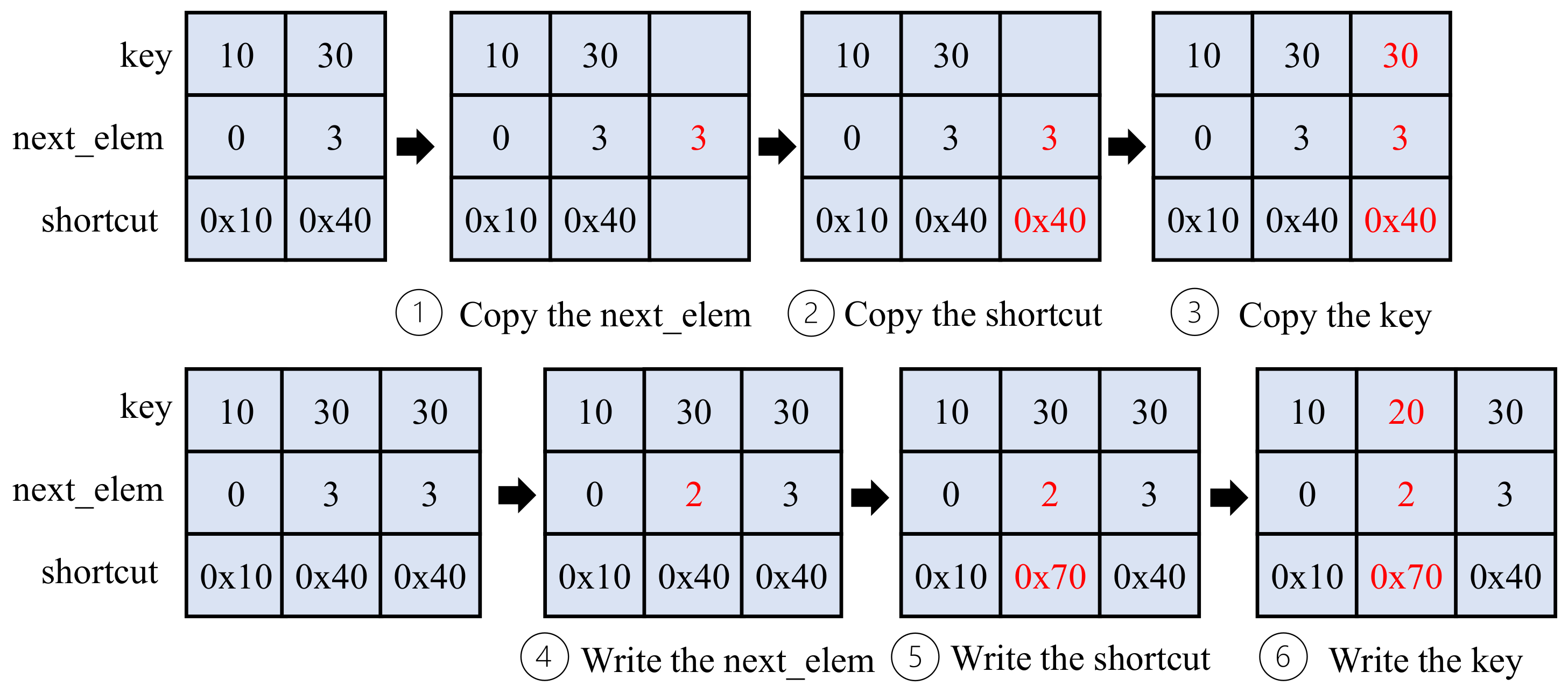

2.3. Endurable Transient Inconsistency

3. Design Overview

3.1. Design Goal of ESL

- Multi-core scalability. Most computer systems, from embedded devices to data-center servers, have multiple cores, so a skiplist should be able to exploit multiple cores efficiently.

- Cache efficiency. Because CPU caches have lower latency than main memory, efficiently leveraging the CPU caches is the key to improving the performance of an in-memory data structure such as a skiplist.

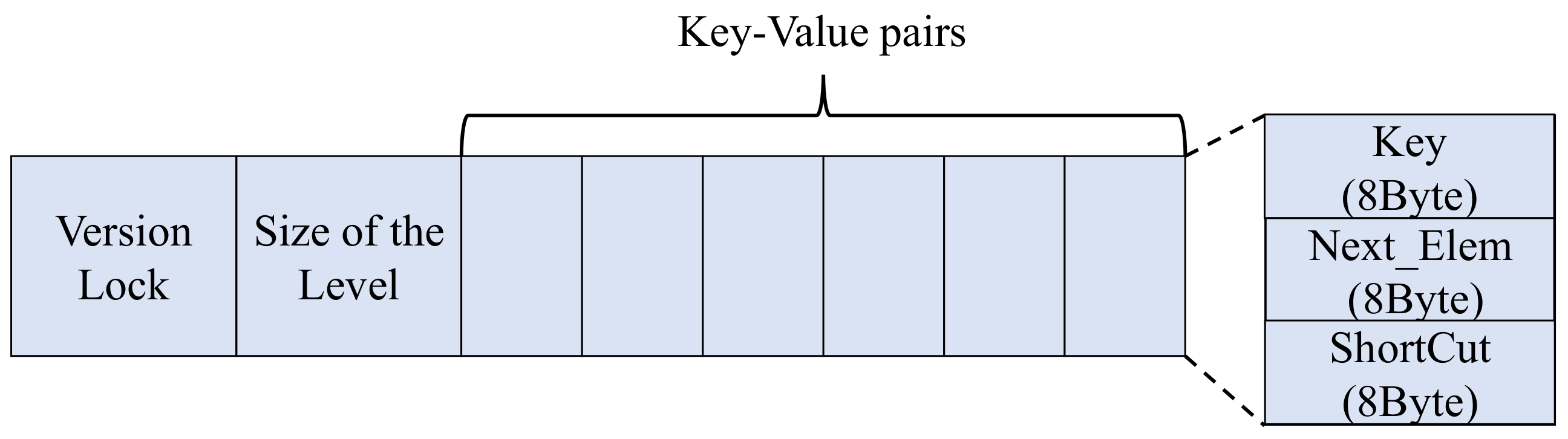

3.2. Cache-Optimized Index Level (COIL) with Express Lane

3.3. Lock-Free Data Level and Parent of the Data Level (PDL)

3.4. Asynchronous Update of the COIL and the PDL

3.5. Synchronization

- COIL: We adopt the read-optimized write exclusion (ROWEX) [39] protocol for the COIL. Traversing the COIL is essential in ESL, because every operation uses the COIL to find its target location. Foreground threads never modify the COIL; only background threads update it. Hence, ESL uses ROWEX, which lets readers proceed without blocking while writers exclude one another.

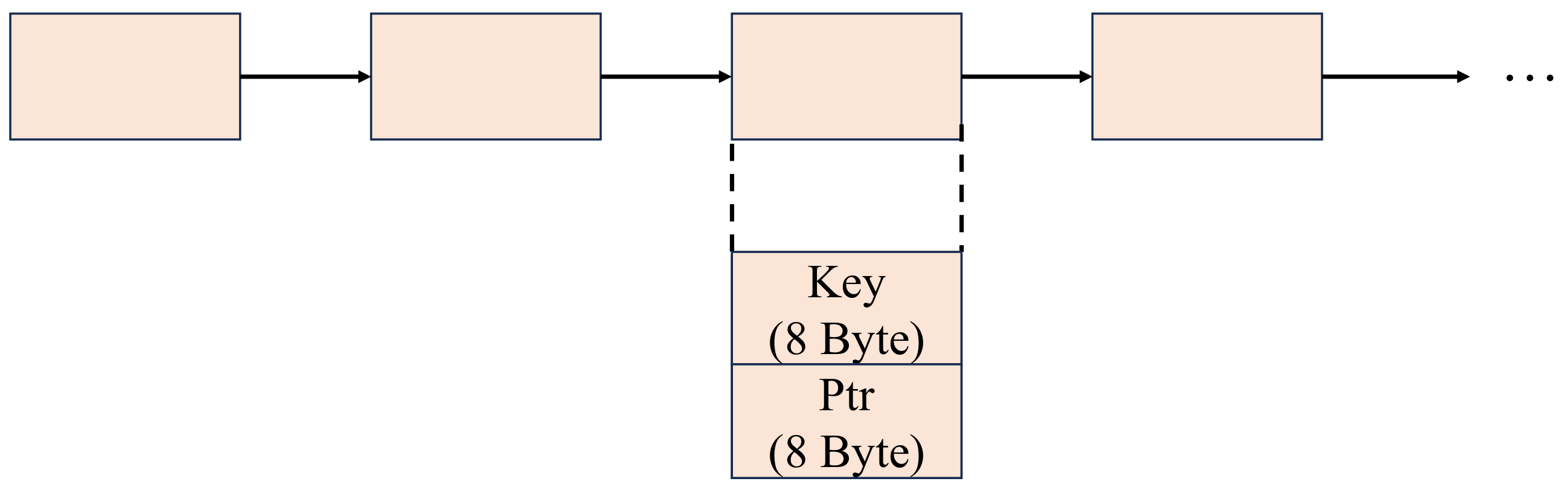

- The data level and the PDL: ESL uses a lock-free linked list for both the data level and the PDL. The main advantage of a lock-free linked list at the data level is that foreground threads can read or update it concurrently without blocking. The lock-free linked list also helps minimize tail latency [40]. Because each key–value pair is stored in its own linked-list node, the only step ESL must perform atomically is a single pointer update that connects the newly created data node.
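The single-pointer publication step can be illustrated with a minimal sketch of a sorted lock-free list insert. It assumes Python, an insert-only list (the deletion marks of a full Harris-style list are omitted), and a `cas_next` helper that emulates a hardware CAS with a lock, because CPython exposes no CAS primitive. `Node`, `cas_next`, and `LockFreeSortedList` are hypothetical names, not ESL's code.

```python
import threading
from typing import List, Optional


class Node:
    __slots__ = ("key", "value", "next")

    def __init__(self, key: float, value: object, nxt: "Optional[Node]" = None):
        self.key = key
        self.value = value
        self.next = nxt


# CPython has no hardware CAS, so this sketch emulates one with a lock.
_cas_guard = threading.Lock()


def cas_next(node: Node, expected: Optional[Node], new: Node) -> bool:
    """Atomically set node.next = new if node.next is still `expected`."""
    with _cas_guard:
        if node.next is expected:
            node.next = new
            return True
        return False


class LockFreeSortedList:
    """Insert-only sorted linked list; deletion (mark bits) is omitted."""

    def __init__(self) -> None:
        self.head = Node(float("-inf"), None)  # sentinel before every key

    def insert(self, key: int, value: object) -> bool:
        while True:
            pred, curr = self.head, self.head.next
            while curr is not None and curr.key < key:
                pred, curr = curr, curr.next
            if curr is not None and curr.key == key:
                return False  # key already present
            # Fully initialize the node first: readers cannot reach it
            # until the CAS below links it into the list.
            node = Node(key, value, curr)
            if cas_next(pred, curr, node):
                return True
            # CAS failed because pred.next changed concurrently; retry.

    def keys(self) -> List[int]:
        out, node = [], self.head.next
        while node is not None:
            out.append(node.key)
            node = node.next
        return out
```

Because the new node's fields are written before the CAS makes it reachable, a concurrent reader either misses the node entirely or sees it complete, never half-built.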

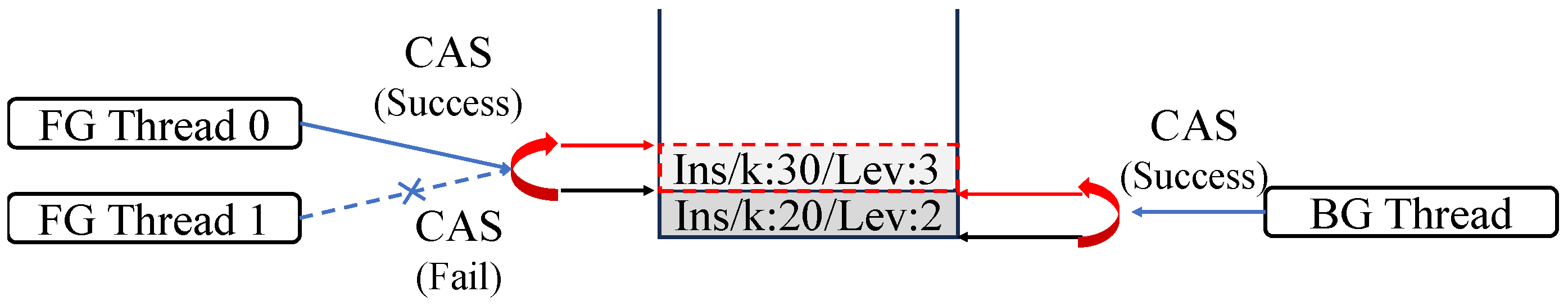

- Operation log: The operation log in ESL is lock-free; its queue is implemented with compare-and-swap (CAS) instructions. A foreground thread first reserves log space by advancing the tail of the operation log, and it writes its log entry only if it successfully updates this allocation index. Similarly, a background thread must advance the head with a CAS operation before processing the operation log entries.
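The tail/head protocol described above can be sketched as follows. This is a minimal Python sketch under stated assumptions: a fixed-capacity array that is never wrapped or recycled, a per-slot `None` marker meaning "reserved but not yet written", and an `AtomicInt` helper that emulates CAS with a lock. `OperationLog`, `append`, and `consume` are illustrative names, not ESL's API.

```python
import threading
from typing import List, Optional, Tuple


class AtomicInt:
    """Integer with compare-and-swap, emulated with a lock (CPython has no CAS)."""

    def __init__(self, value: int = 0) -> None:
        self._value = value
        self._guard = threading.Lock()

    def load(self) -> int:
        return self._value

    def cas(self, expected: int, new: int) -> bool:
        with self._guard:
            if self._value == expected:
                self._value = new
                return True
            return False


class OperationLog:
    """Fixed-capacity, non-wrapping operation log (illustrative only)."""

    def __init__(self, capacity: int) -> None:
        self.slots: List[Optional[Tuple[str, int]]] = [None] * capacity
        self.tail = AtomicInt(0)  # next slot to allocate (foreground threads)
        self.head = AtomicInt(0)  # next slot to process (background threads)

    def append(self, entry: Tuple[str, int]) -> bool:
        """Foreground side: reserve a slot by CAS on the tail, then write it."""
        while True:
            t = self.tail.load()
            if t >= len(self.slots):
                return False  # log full; a real log would wrap or grow
            if self.tail.cas(t, t + 1):
                self.slots[t] = entry  # only the CAS winner writes slot t
                return True

    def consume(self) -> Optional[Tuple[str, int]]:
        """Background side: claim the head entry by CAS on the head."""
        while True:
            h = self.head.load()
            if h >= self.tail.load():
                return None  # nothing allocated yet
            entry = self.slots[h]
            if entry is None:
                return None  # slot reserved but not yet written; retry later
            if self.head.cas(h, h + 1):
                return entry
```

Separating slot reservation (the tail CAS) from the entry write lets many foreground threads append concurrently; the `None` check keeps a background thread from processing a slot whose writer has not finished.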

4. Design of ESL

4.1. Structure of ESL

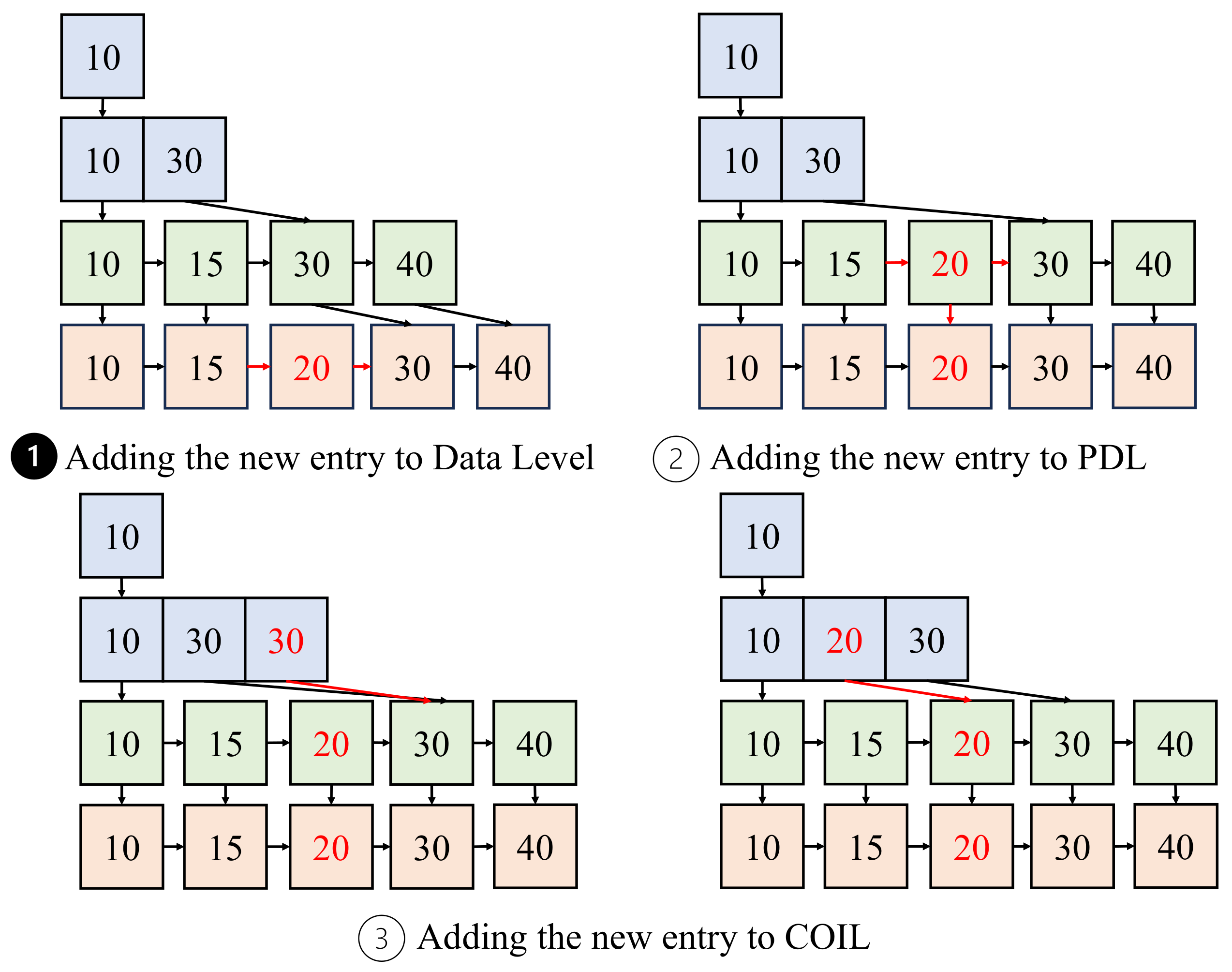

4.2. Write Operation (Insert/Update/Delete)

- Insert. Because a skiplist is a probabilistic data structure, an insert first generates a random number to determine the level of the given key–value pair. Once the level is determined, the writer allocates a new data node and writes the key–value pair into it. The writer then traverses the COIL (❶ in Figure 2) and the PDL (❷ in Figure 2), finds the target location at the data level (❸ in Figure 2), and links the new data node in atomically with a CAS instruction (❹ in Figure 2). Finally, the writer creates a new operation log entry and appends it to the operation log (❺ in Figure 2). The background threads later apply the logged operation asynchronously.

- Update. An update in ESL does not create a new key–value pair. Instead, it replaces the value pointer of the existing data node that has the same key. Hence, an update traverses the COIL, the PDL, and the data level; within the data level, the writer finds the target data node and atomically replaces its value pointer with a CAS instruction. Because an update does not modify the COIL, ESL does not use an asynchronous update for it.

- Delete. Like the insert and update operations, ESL’s delete operation traverses the COIL and the PDL. If the writer finds an index node whose key matches the given key, it logically deletes that node by marking it as deleted, and then moves down and logically deletes the matching index node at each lower index level. At the PDL and the data level, the writer finds the target node and unlinks the data node by redirecting the previous node's pointer, after which it deletes the data node. To reclaim memory safely, ESL uses epoch-based memory reclamation [37,38,41,42]. Note that because the index nodes in the lowest level of the COIL and in the PDL store the target node’s virtual address rather than an array index, deleting a data node from the PDL does not compromise correctness.
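The level draw that begins an insert can be sketched as below. This illustrative Python sketch assumes a promotion probability of 1/2 per level and uses Marsaglia's 32-bit xorshift generator (listed as [43] in the references); this section does not state which generator or probability ESL actually uses. `XorShift32` and `random_level` are hypothetical names, and the caps of 19 and 5 in the usage lines mirror the maximum levels evaluated in Section 6.

```python
class XorShift32:
    """Marsaglia's 32-bit xorshift generator with shift triple (13, 17, 5)."""

    MASK = 0xFFFFFFFF

    def __init__(self, seed: int) -> None:
        if (seed & self.MASK) == 0:
            raise ValueError("xorshift state must be non-zero")
        self.state = seed & self.MASK

    def next(self) -> int:
        x = self.state
        x ^= (x << 13) & self.MASK
        x ^= x >> 17
        x ^= (x << 5) & self.MASK
        self.state = x
        return x


def random_level(rng: XorShift32, max_level: int) -> int:
    """Count consecutive 1 bits from the low end of one draw, capped at max_level.

    With uniformly random bits, P(level >= k) = 2 ** -(k - 1), i.e. each level
    is promoted with probability 1/2 (an assumed parameter). Assumes
    max_level <= 32 so that one 32-bit draw supplies enough bits.
    """
    bits = rng.next()
    level = 1
    while level < max_level and bits & 1:
        level += 1
        bits >>= 1
    return level


if __name__ == "__main__":
    rng = XorShift32(2463534242)
    print([random_level(rng, 19) for _ in range(10)])  # maximum level 19
    print([random_level(rng, 5) for _ in range(10)])   # maximum level 5
```

Drawing all promotion bits from one generator call keeps level selection to a single cheap step before the writer allocates the data node.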

4.3. Asynchronous Update

4.4. Non-Blocking Read

- Tolerating Inconsistencies in the COIL. In a read operation, the reader traverses the index levels. To accelerate traversal, ESL uses an exponential search to skip unnecessary key comparisons. Once the exponential search has narrowed the search range, the reader locates the proper key with a linear search. At each index level, the reader compares keys in turn and advances to the next entry until it either finds a key equal to the given key, in which case it moves directly to the corresponding data node at the data level, or reaches a key larger than the given key, in which case it descends to the next index level.

- Tolerating Inconsistencies in the PDL/DL. Reading the data level or the PDL is straightforward. Because both are lock-free linked lists, a node that is still being created is not visible to readers until a predecessor's next pointer links it in. Hence, a reader only ever observes a consistent state.

- Tolerating Inconsistencies between the COIL and the PDL/DL. Because the index levels and the PDL/DL are decoupled, the reader may reach a neighbor of the target node rather than the target itself. As in the COIL, the reader then traverses the PDL or DL forward to find the target node.
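The read path described in these three cases can be combined into one minimal Python sketch. It assumes a single COIL level modeled as a sorted list of keys with a parallel list of data-node references, a data level modeled as a singly linked list, and an index entry that is only ever a predecessor of the target (the index lags behind inserts). `exp_then_linear`, `walk_from`, and `lookup` are hypothetical names; multi-level descent, the PDL, and deletion are omitted.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class DataNode:
    key: int
    value: str
    next: Optional["DataNode"] = None


def exp_then_linear(keys: List[int], target: int) -> Tuple[int, bool]:
    """Return (pos, found): pos is the index of the last key <= target, or -1."""
    n = len(keys)
    if n == 0 or keys[0] > target:
        return -1, False
    # Exponential phase: double the probe distance while the probed key is <= target.
    bound = 1
    while bound < n and keys[bound] <= target:
        bound *= 2
    # Invariant: keys[bound // 2] <= target, and keys[bound] > target if bound < n.
    pos, hi = bound // 2, min(bound, n - 1)
    # Linear phase: step forward one key at a time inside the narrowed range.
    while pos < hi and keys[pos + 1] <= target:
        pos += 1
    return pos, keys[pos] == target


def walk_from(start: Optional[DataNode], key: int) -> Optional[DataNode]:
    """Follow next pointers from a node whose key is assumed to be <= key."""
    node = start
    while node is not None and node.key < key:
        node = node.next
    return node if node is not None and node.key == key else None


def lookup(index_keys: List[int], index_nodes: List[DataNode],
           first: Optional[DataNode], key: int) -> Optional[DataNode]:
    pos, _ = exp_then_linear(index_keys, key)
    # A lagging index may only know a neighbor (predecessor) of the target;
    # start there, or at the first data node, and let the list walk close the gap.
    start = first if pos < 0 else index_nodes[pos]
    return walk_from(start, key)


if __name__ == "__main__":
    # Key 25 is linked at the data level but not yet propagated to the index.
    n30 = DataNode(30, "v30")
    n25 = DataNode(25, "v25", n30)
    n10 = DataNode(10, "v10", DataNode(20, "v20", n25))
    print(lookup([10, 30], [n10, n30], n10, 25).value)  # v25
```

In the usage lines, the index search lands on the neighbor with key 10, and the forward walk over the data level recovers key 25 even though the index has not caught up yet.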

4.5. Concurrency Control

4.5.1. Version-Based Locking Protocol for Updating the COIL

4.5.2. Lock-Free Operation Log

5. Implementation

6. Evaluation

6.1. Experimental Environment

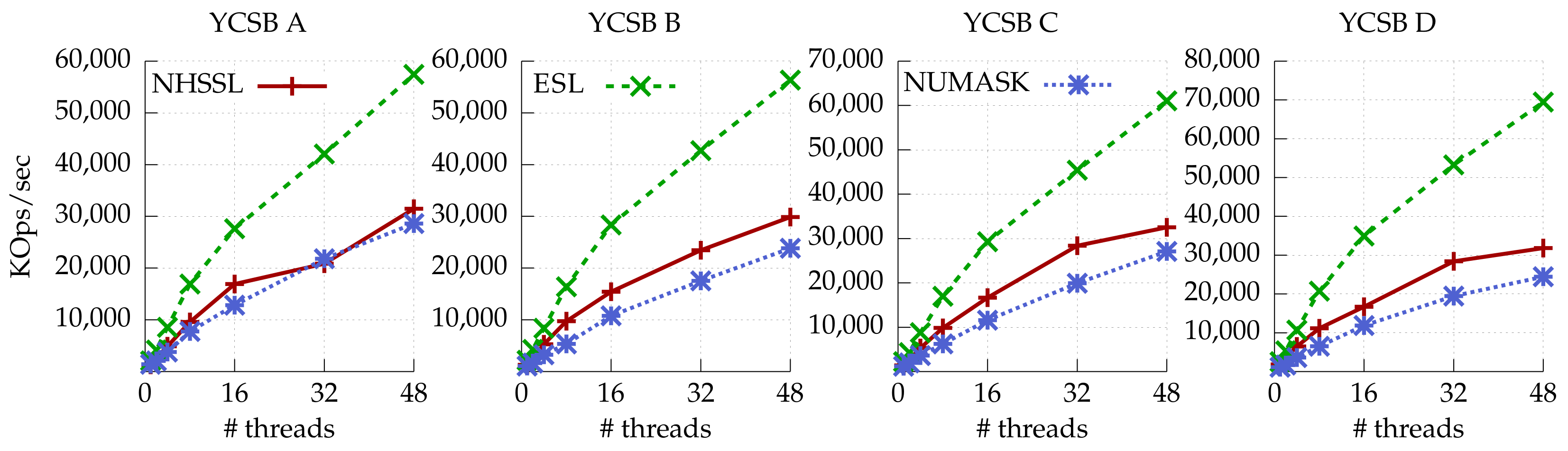

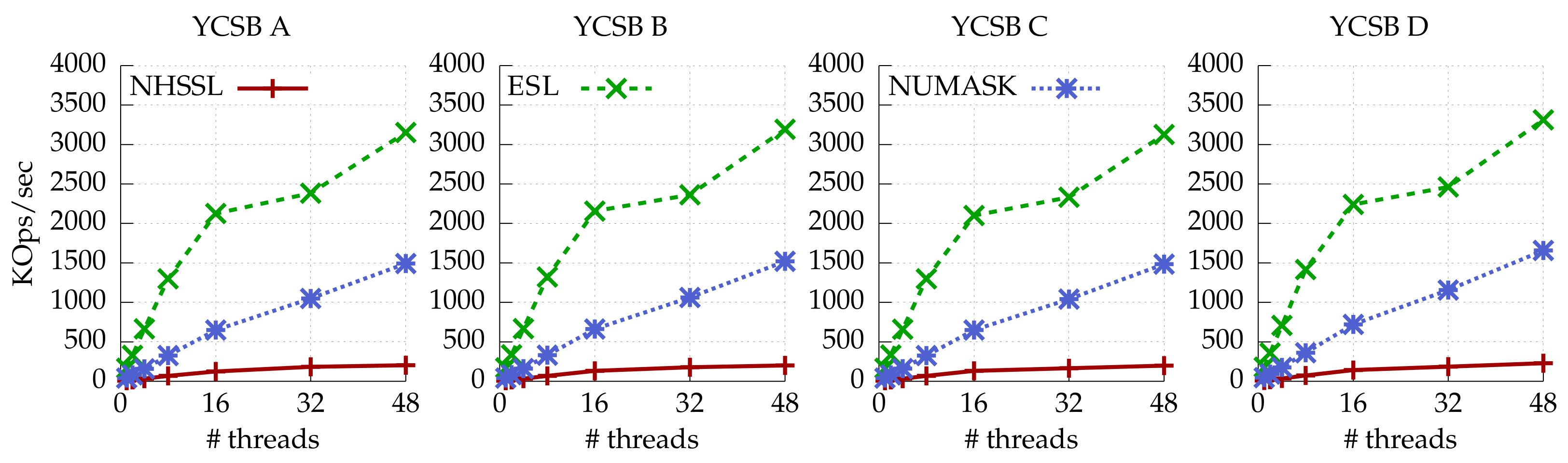

- Hardware. We performed the experiments on a machine with two NUMA sockets, each holding an Intel Xeon Gold 5318Y processor with 24 physical cores. The machine has 768 GB of DDR4 DRAM.

- Competitors. We compared the performance of ESL against other skiplists: the No Hot Spot Skiplist (NHSSL) [26] and NUMASK [27]. We used the publicly available versions of NHSSL and NUMASK in Synchrobench [46,47]. We could not evaluate the Cache-Sensitive Skiplist (CSSL) [24] because its open-source version does not support multiple threads or randomized key insertion. The implementation of the Parallel in-memory Skiplist (PSL) [25] had not been open-sourced at the time of writing.

6.2. Throughput

6.2.1. High Level (Maximum Level 19)

6.2.2. Low Level (Maximum Level 5)

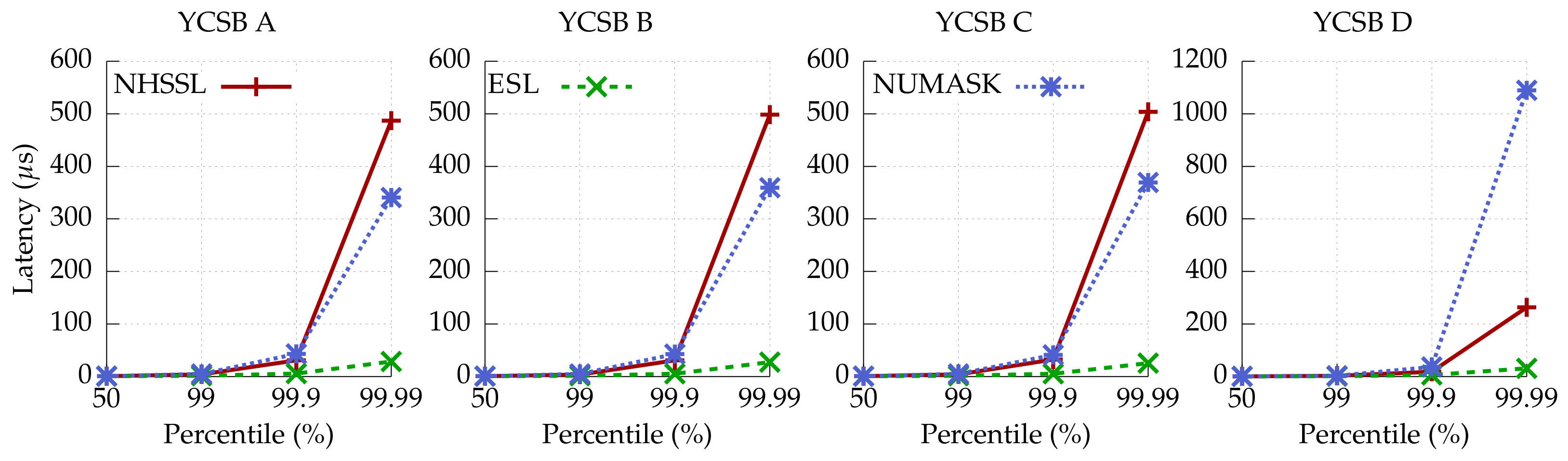

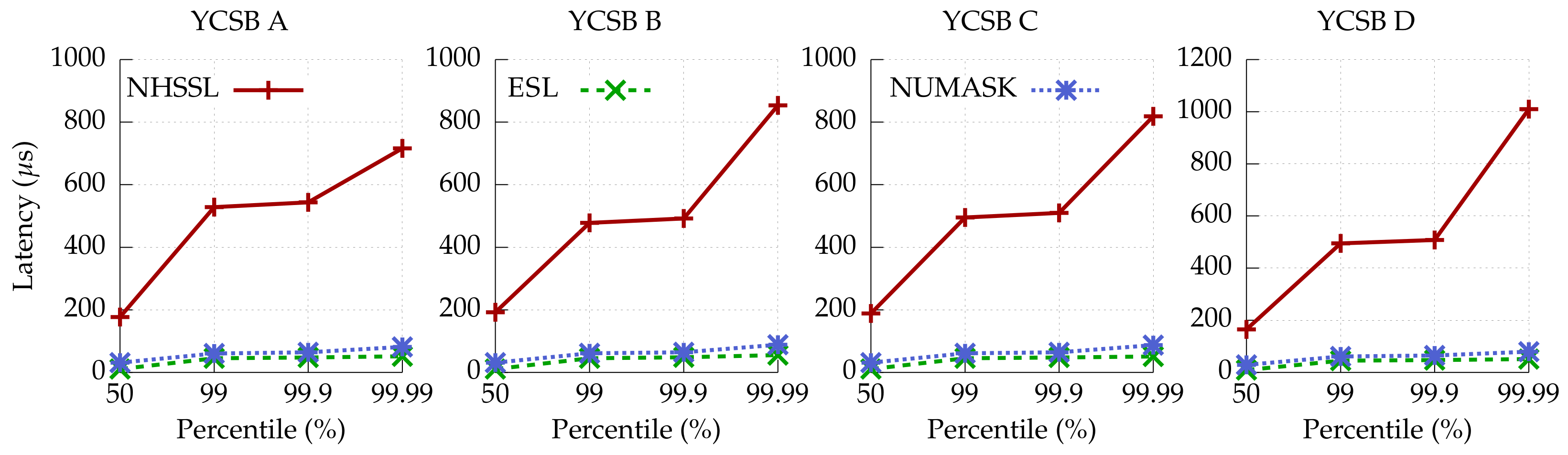

6.3. Tail Latency

6.3.1. High Level (Maximum Level 19)

6.3.2. Low Level (Maximum Level 5)

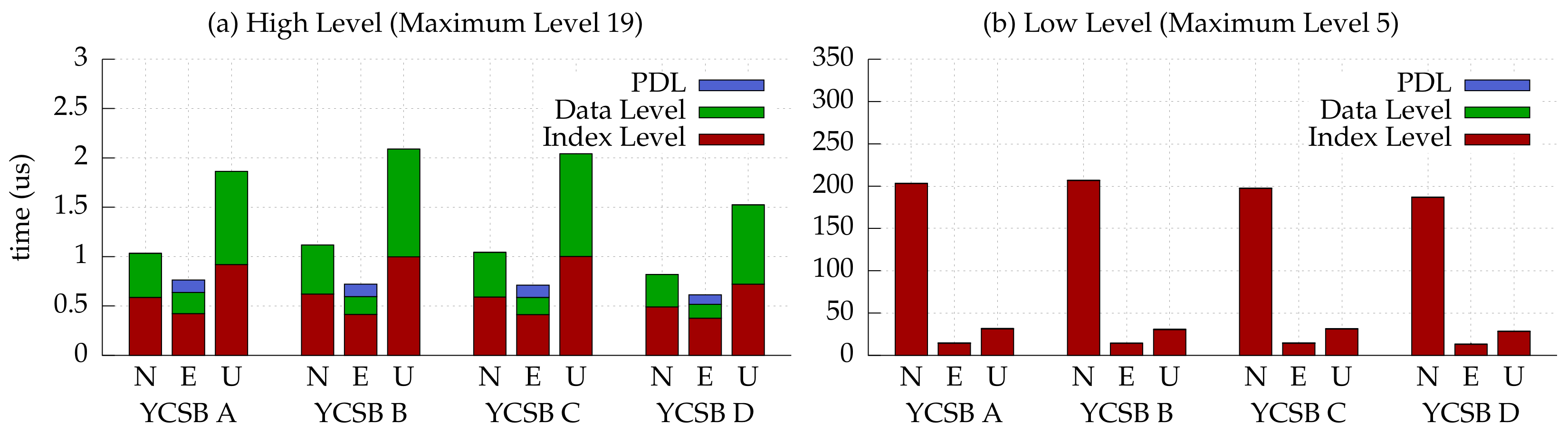

6.4. Performance Breakdown

6.4.1. High Level (Maximum Level 19)

6.4.2. Low Level (Maximum Level 5)

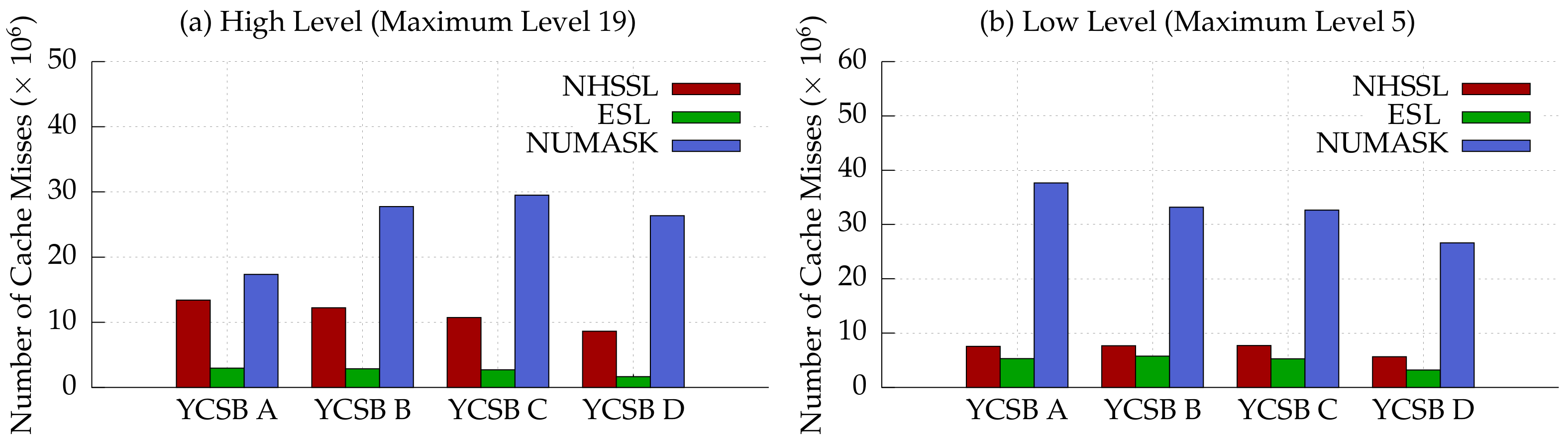

6.5. Cache Miss

6.6. Real-World Workload

7. Discussion

7.1. Limitations of ESL

- Limitations of the asynchronous update. To add a new key–value pair to an index level, the COIL of ESL shifts the existing key–value pairs. Even though ESL updates the COIL and the PDL asynchronously, the shift overhead can become too high when the number of key–value pairs is very large. In that case, ESL can add more background threads or use lock-free linked lists for more of the levels above the PDL. In addition, asynchronous updates dirty cache lines, which can increase the traversal overhead of concurrent threads.

- Cache misses when moving to the lower level. In the original skiplist, each node keeps its pointers for all of its levels, so moving down to the next level does not incur a cache miss. ESL, however, merges the key–value pairs of the same level, so each level is stored separately. Thus, moving to the next level in ESL incurs cache misses.

7.2. Skiplist for Emerging Storage Media

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, T.; Johnson, R. Scalable Logging Through Emerging Non-volatile Memory. In Proceedings of the 40th International Conference on Very Large Data Bases (VLDB), Hangzhou, China, 1–5 September 2014.

- Arulraj, J.; Perron, M.; Pavlo, A. Write-behind Logging. In Proceedings of the 42nd International Conference on Very Large Data Bases (VLDB), New Delhi, India, 5–9 September 2016.

- Oh, G.; Kim, S.; Lee, S.W.; Moon, B. SQLite Optimization with Phase Change Memory for Mobile Applications. In Proceedings of the 41st International Conference on Very Large Data Bases (VLDB), Kohala Coast, HI, USA, 31 August–4 September 2015; pp. 1454–1465.

- Park, J.H.; Oh, G.; Lee, S.W. SQL Statement Logging for Making SQLite Truly Lite. In Proceedings of the 43rd International Conference on Very Large Data Bases (VLDB), Munich, Germany, 28 August–1 September 2017; pp. 513–525.

- Seo, J.; Kim, W.H.; Baek, W.; Nam, B.; Noh, S.H. Failure-Atomic Slotted Paging for Persistent Memory. In Proceedings of the 22nd ACM International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS), Xi’an, China, 8–12 April 2017.

- Kim, W.H.; Kim, J.; Baek, W.; Nam, B.; Won, Y. NVWAL: Exploiting NVRAM in Write-Ahead Logging. In Proceedings of the 21st ACM International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS), Atlanta, GA, USA, 2–6 April 2016.

- Volume of Data/Information Created, Captured, Copied, and Consumed Worldwide from 2010 to 2020, with Forecasts from 2021 to 2025. Available online: https://www.statista.com/statistics/871513/worldwide-data-created/ (accessed on 28 August 2023).

- DRAM and NAND Flash Prices Expected to Fall Further in 2Q23 Due to Weak Server Shipments and High Inventory Levels, Says TrendForce. Available online: https://www.trendforce.com/presscenter/news/20230509-11667.html (accessed on 28 August 2023).

- Anandtech. Intel Launches Optane DIMMs Up To 512GB: Apache Pass Is Here! 2018. Available online: https://www.anandtech.com/show/12828/intel-launches-optane-dimms-up-to-512gb-apache-pass-is-here (accessed on 6 June 2023).

- CXL Consortium. Compute Express Link: The Breakthrough CPU-to-Device Interconnect 2020. Available online: https://www.computeexpresslink.org/ (accessed on 1 July 2023).

- Kozyrakis, C.; Kansal, A.; Sankar, S.; Vaid, K. Server Engineering Insights for Large-Scale Online Services. IEEE Micro 2010, 30, 8–19.

- SPEC CPU2006 Results. Available online: https://www.spec.org/cpu2006/results/ (accessed on 28 August 2023).

- Spec CPU2017 Results. Available online: https://www.spec.org/cpu2017/results/ (accessed on 28 August 2023).

- 50 Years of Microprocessor Trend Data. Available online: https://github.com/karlrupp/microprocessor-trend-data (accessed on 28 August 2023).

- Lehman, P.L.; Yao, S.B. Efficient Locking for Concurrent Operations on B-Trees. ACM Trans. Database Syst. 1981, 6, 650–670.

- Rao, J.; Ross, K.A. Making B+-Trees Cache Conscious in Main Memory. In Proceedings of the 2000 ACM SIGMOD/PODS Conference, Dallas, TX, USA, 16–18 May 2000; pp. 475–486.

- Hwang, D.; Kim, W.H.; Won, Y.; Nam, B. Endurable Transient Inconsistency in Byte-addressable Persistent B+-tree. In Proceedings of the 16th USENIX Conference on File and Storage Technologies (FAST), Oakland, CA, USA, 12–15 February 2018; pp. 187–200.

- Liu, J.; Chen, S.; Wang, L. LB+-Trees: Optimizing Persistent Index Performance on 3DXPoint Memory. Proc. VLDB Endow. 2020, 13, 1078–1090.

- Pugh, W. Skip Lists: A Probabilistic Alternative to Balanced Trees. Commun. ACM 1990, 33, 668–676.

- Google. LevelDB. Available online: https://github.com/google/leveldb (accessed on 20 July 2023).

- Facebook. RocksDB. Available online: http://rocksdb.org/ (accessed on 20 July 2023).

- Apache. Welcome to Apache HBase™. Available online: https://hbase.apache.org/ (accessed on 20 July 2023).

- MongoDB. Available online: https://www.mongodb.org/ (accessed on 20 July 2023).

- Sprenger, S.; Zeuch, S.; Leser, U. Cache-Sensitive Skip List: Efficient Range Queries on Modern CPUs. In Data Management on New Hardware: 7th International Workshop on Accelerating Data Analysis and Data Management Systems Using Modern Processor and Storage Architectures, ADMS 2016 and 4th International Workshop on In-Memory Data Management and Analytics, IMDM 2016, New Delhi, India, 1 September 2016, Revised Selected Papers 4; Blanas, S., Bordawekar, R., Lahiri, T., Levandoski, J.J., Pavlo, A., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016; Volume 10195, pp. 1–17.

- Xie, Z.; Cai, Q.; Jagadish, H.; Ooi, B.C.; Wong, W.F. Parallelizing Skip Lists for In-Memory Multi-Core Database Systems. In Proceedings of the 33rd IEEE International Conference on Data Engineering (ICDE), San Diego, CA, USA, 19–22 April 2017.

- Crain, T.; Gramoli, V.; Raynal, M. No Hot Spot Non-blocking Skip List. In Proceedings of the 33rd International Conference on Distributed Computing Systems (ICDCS), Philadelphia, PA, USA, 8–11 July 2013; pp. 196–205.

- Daly, H.; Hassan, A.; Spear, M.F.; Palmieri, R. NUMASK: High Performance Scalable Skip List for NUMA. In Proceedings of the 31st International Conference on Distributed Computing (DISC), New Orleans, LA, USA, 16–20 October 2017; pp. 18:1–18:19.

- Bentley, J.L.; Yao, A.C.C. An almost optimal algorithm for unbounded searching. Inf. Process. Lett. 1976, 5, 82–87.

- Kim, C.; Chhugani, J.; Satish, N.; Sedlar, E.; Nguyen, A.D.; Kaldewey, T.; Lee, V.W.; Brandt, S.A.; Dubey, P. FAST: Fast Architecture Sensitive Tree Search on Modern CPUs and GPUs. In Proceedings of the 2010 ACM SIGMOD International Conference on Management of Data, New York, NY, USA, 6–10 June 2010; SIGMOD’10. pp. 339–350.

- Cha, H.; Hao, X.; Wang, T.; Zhang, H.; Akella, A.; Yu, X. Blink-hash: An Adaptive Hybrid Index for In-Memory Time-Series Databases. Proc. VLDB Endow. 2023, 16, 1235–1248.

- Choe, J.; Crotty, A.; Moreshet, T.; Herlihy, M.; Bahar, R.I. Hybrids: Cache-conscious concurrent data structures for near-memory processing architectures. In Proceedings of the 34th ACM Symposium on Parallelism in Algorithms and Architectures, Philadelphia, PA, USA, 11–14 July 2022; pp. 321–332.

- Kang, H.; Zhao, Y.; Blelloch, G.E.; Dhulipala, L.; Gu, Y.; McGuffey, C.; Gibbons, P.B. PIM-tree: A Skew-resistant Index for Processing-in-Memory. In Proceedings of the 2023 ACM Workshop on Highlights of Parallel Computing, New York, NY, USA, 16 June 2023; pp. 13–14.

- Kraska, T.; Beutel, A.; Chi, E.H.; Dean, J.; Polyzotis, N. The Case for Learned Index Structures. In Proceedings of the 2018 International Conference on Management of Data, New York, NY, USA, 22–27 June 2018; SIGMOD’18. pp. 489–504.

- Ding, J.; Minhas, U.F.; Yu, J.; Wang, C.; Do, J.; Li, Y.; Zhang, H.; Chandramouli, B.; Gehrke, J.; Kossmann, D.; et al. ALEX: An Updatable Adaptive Learned Index. In Proceedings of the 2020 ACM SIGMOD International Conference on Management of Data, New York, NY, USA, 14–19 June 2020; SIGMOD’20. pp. 969–984.

- Zhang, Z.; Chu, Z.; Jin, P.; Luo, Y.; Xie, X.; Wan, S.; Luo, Y.; Wu, X.; Zou, P.; Zheng, C.; et al. PLIN: A Persistent Learned Index for Non-Volatile Memory with High Performance and Instant Recovery. Proc. VLDB Endow. 2022, 16, 243–255.

- Li, P.; Hua, Y.; Zuo, P.; Chen, Z.; Sheng, J. ROLEX: A Scalable RDMA-oriented Learned Key-Value Store for Disaggregated Memory Systems. In Proceedings of the 21st USENIX Conference on File and Storage Technologies (FAST 23), Santa Clara, CA, USA, 21–23 February 2023; pp. 99–114.

- Mathew, A.; Min, C. HydraList: A Scalable In-Memory Index Using Asynchronous Updates and Partial Replication. In Proceedings of the 46th International Conference on Very Large Data Bases (VLDB), Tokyo, Japan, 31 August–4 September 2020.

- Kim, W.H.; Krishnan, R.M.; Fu, X.; Kashyap, S.; Min, C. PACTree: A High Performance Persistent Range Index Using PAC Guidelines. In Proceedings of the 28th ACM Symposium on Operating Systems Principles (SOSP), Virtual, 26–29 October 2021; pp. 424–439.

- Leis, V.; Scheibner, F.; Kemper, A.; Neumann, T. The ART of Practical Synchronization. In Proceedings of the International Workshop on Data Management on New Hardware, San Francisco, CA, USA, 27 June 2016; pp. 3:1–3:8.

- Chen, Y.; Lu, Y.; Fang, K.; Wang, Q.; Shu, J. UTree: A Persistent B+-Tree with Low Tail Latency. Proc. VLDB Endow. 2020, 13, 2634–2648.

- Hart, T.E.; McKenney, P.E.; Brown, A.D.; Walpole, J. Performance of Memory Reclamation for Lockless Synchronization. J. Parallel Distrib. Comput. 2007, 67, 1270–1285.

- McKenney, P.E. Structured Deferral: Synchronization via Procrastination. ACM Queue 1998, 20, 20–39.

- Marsaglia, G. Xorshift RNGs. J. Stat. Softw. 2003, 8, 1–6.

- Cooper, B.F.; Silberstein, A.; Tam, E.; Ramakrishnan, R.; Sears, R. Benchmarking Cloud Serving Systems with YCSB. In Proceedings of the 1st ACM Symposium on Cloud Computing (SoCC), Indianapolis, IN, USA, 10–11 June 2010; pp. 143–154.

- Amazon AWS OpenStreetMap. Available online: https://registry.opendata.aws/osm/ (accessed on 23 August 2023).

- Gramoli, V. More Than You Ever Wanted to Know About Synchronization: Synchrobench, Measuring the Impact of the Synchronization on Concurrent Algorithms. In Proceedings of the 20th ACM Symposium on Principles and Practice of Parallel Programming (PPoPP), San Francisco, CA, USA, 7–11 February 2015; pp. 1–10.

- Synchrobench. Available online: https://github.com/gramoli/synchrobench (accessed on 1 July 2023).

- Linux Perf Wiki. Available online: https://perf.wiki.kernel.org/index.php/Main_Page (accessed on 23 August 2023).

- Xiao, R.; Feng, D.; Hu, Y.; Wang, F.; Wei, X.; Zou, X.; Lei, M. Write-Optimized and Consistent Skiplists for Non-Volatile Memory. IEEE Access 2021, 9, 69850–69859.

- Li, Z.; Jiao, B.; He, S.; Yu, W. PhaST: Hierarchical Concurrent Log-Free Skip List for Persistent Memory. IEEE Trans. Parallel Distrib. Syst. 2022, 33, 3929–3941.

- Kannan, S.; Bhat, N.; Gavrilovska, A.; Arpaci-Dusseau, A.; Arpaci-Dusseau, R. Redesigning LSMs for Nonvolatile Memory with NoveLSM. In Proceedings of the 2018 USENIX Annual Technical Conference (ATC), Boston, MA, USA, 11–13 July 2018.

- Kim, W.; Park, C.; Kim, D.; Park, H.; ri Choi, Y.; Sussman, A.; Nam, B. ListDB: Union of Write-Ahead Logs and Persistent SkipLists for Incremental Checkpointing on Persistent Memory. In Proceedings of the 16th USENIX Symposium on Operating Systems Design and Implementation (OSDI 22), Carlsbad, CA, USA, 11–13 July 2022; pp. 161–177.

| | | A | B | C | D |
|---|---|---|---|---|---|
| N | PDL | 0 | 0 | 0 | 0 |
| | Data level | 0.25 | 0.28 | 0.26 | 0.21 |
| E | PDL | 0.17 | 0.16 | 0.16 | 0.14 |
| | Data level | 0.25 | 0.21 | 0.21 | 0.17 |
| U | PDL | 0 | 0 | 0 | 0 |
| | Data level | 0.42 | 0.41 | 0.40 | 0.30 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Na, Y.; Koo, B.; Park, T.; Park, J.; Kim, W.-H. ESL: A High-Performance Skiplist with Express Lane. Appl. Sci. 2023, 13, 9925. https://doi.org/10.3390/app13179925


Chicago/Turabian Style: Na, Yedam, Bonmoo Koo, Taeyoon Park, Jonghyeok Park, and Wook-Hee Kim. 2023. "ESL: A High-Performance Skiplist with Express Lane" Applied Sciences 13, no. 17: 9925. https://doi.org/10.3390/app13179925
