1. Introduction

In the contemporary landscape, design and innovation stand as paramount themes [1]. Design is fundamentally the deliberate crafting of forms tailored to serve human needs [2], with the symbolic attributes of products being vital components of cognitive models. Given the intense market competition, products that resonate with and elicit positive emotional reactions from consumers hold a distinct edge [3]. Often, purchasing decisions hinge on emotional resonances, particularly those subtle sentiments bridging human–object interactions [4]. A harmonious alignment of Kansei cognitions between designers and users often underpins successful product designs [5]. The ability to discern users’ Kansei needs for product styling design determines the success of the design and influences consumers’ purchasing intent and overall satisfaction [6]. The 1990s witnessed the advent of neuroaesthetics, stemming from the confluence of rapid advancements in cognitive neuroscience and empirical aesthetic research. As product functionality differentials narrow, the emphasis on cognition and emotion-driven design increases [7]. Undoubtedly, product styling cognition is an essential aspect of cognition research and design practice. This study investigates neuroaesthetics in innovative product-form design, employing electroencephalography (EEG) and eye tracking.

Evaluating consumer cognitions of product styling includes both explicit and implicit metrics. Explicit measurements are typically realized through self-reporting. In Kansei engineering, traditional product image design (perceptual design) research relies on explicit measurements of conscious processing, primarily using research methods such as interviews, questionnaires, and card sorting. Liu et al. [8] proposed a Kansei word selection method based on word evaluation frequency. However, online reviews are subjective and influenced by nonemotional factors, such as product packaging and delivery speed. Thus, the study did not consider actual implicit metrics. Gong et al. [9] combined design thinking and Kansei engineering to study the innovative design of bamboo penholders, utilizing questionnaires, expert interviews, and cluster analysis. The data on people’s cognition of product styling were collected using a questionnaire. Ge et al. [10] developed an algorithmic model of consumer preferences based on color scales and Kansei engineering. The color evaluation of solid color shirts was realized using a five-level semantic differential scale. Zhang et al. [11] proposed a cognitive alignment method to reduce the cognitive differences between users and designers. Although the study explored the cognitive disparity between users and designers in product styling design, the relationship between product samples and image evaluation was determined using an explicit measurement (questionnaire). Liang et al. [12] used Kansei engineering theory to study automotive interior design and explore the relationship between sensory experiences, perceived values, and design elements. This investigation sourced its data from an online survey assessing product images. A survey of the field reveals that the majority of product design studies primarily employ explicit measurements.

Explicit measurements draw from extrinsic behavioral observations and introspective reflections of individuals. Notably, explicit methods encounter two primary challenges: (1) individuals often exhibit a reluctance to disclose their genuine thoughts and respond in a way that conforms to external expectations, particularly when their genuine views deviate from societal norms; (2) traditional methods rely on conscious introspection, and even when individuals are willing to express their genuine thoughts and attitudes, they cannot effectively express underlying motives, needs, and attitudes. The subconscious significantly influences product styling cognition. Thus, traditional approaches to product styling design can capture only conscious, explicit behaviors and cannot explore the nuances of unconscious cognitive processing. Consequently, traditional approaches also falter in discerning disparities between users’ cognition and action.

This study addresses the limitations of explicit measurements by using eye tracking and EEG as implicit measurements. Implicit measurements capture psychological attributes without necessitating self-reporting, instead tapping into subconscious pathways [13]. Castiblanco Jimenez et al. systematically summarized physiological measurements such as skin conductance, EEG, heart rate, pupillometry, and respiratory rate, pointing out the expansive potential that physiological methods hold, especially in the domain of user engagement [14]. Bell et al. posited that physiological and neuroscientific techniques can advance consumer research by providing insights into subconscious mechanisms [15]. These techniques can help researchers understand the mechanisms of consumer behavior. Guo et al. used the event-related potentials (ERPs) of EEG signals to explore users’ preferences for smartphones [16]. Although some studies have used either EEG or eye tracking alone to investigate product design, only a few have combined these two approaches to explore product styling design [17,18]. Yang [19] posited that quantifying neural mechanisms through EEG signals has gained traction in EEG research. The integration of EEG with other biomonitoring techniques, such as eye tracking, can facilitate multi-indicator detection. Eye-tracking signals are behavioral data captured when a user is exposed to stimulation. Hsu et al. [20] used the eye-tracking technique to study chair design. Liu and Sogabe [21] demonstrated that eye tracking could be applied to research in Kansei engineering. Zhou et al. [22] indicated that the current evaluation methods for medical beds are subjective, emphasizing the need for objective tools. Merging EEG’s neural insights with eye tracking’s behavioral outputs can facilitate a deeper understanding of users’ emotional needs in product styling cognition. Studying the cognitive mechanisms of product styling from the perspective of implicit measurements is crucial for innovative product design. Previous studies have applied eye-tracking and EEG techniques to research areas such as emotion recognition, attention monitoring, and usability evaluation. However, few studies have combined EEG and eye-tracking methodologies to investigate product styling cognition.

From the subjective and objective perspectives, consumers’ product styling evaluation can be categorized into subjective evaluation, objective evaluation, and combined subjective–objective assessment. The subjective evaluation method provides a score based on the participant’s comprehension of product styling, inherently possessing subjective biases and limitations [23]. Considering the convenience of obtaining subjective evaluation data, approximately 80% of product designs based on Kansei engineering employ the subjective evaluation method. Future product design research is likely to pivot towards objective and subjective–objective evaluations, paving the way for intelligent design methodologies. EEG and eye-movement measurements offer objective metrics, presenting a more accurate and scientifically grounded alternative to subjective measurements. Therefore, this study utilizes implicit measurement methods to investigate users’ subconscious cognitive processes in product styling design.

The primary goals of this research are as follows:

- (1) To understand the dynamics of product styling cognition based on Kansei engineering and implicit measurements.
- (2) To elucidate the product styling cognition process employing EEG and eye-movement metrics.
- (3) To discern the disparities in EEG data across product styling evaluation and perception phases and delineate suitable experimental stages for styling cognition research.
- (4) To understand EEG and eye-movement patterns in product styling cognition through implicit measurements, thereby providing a new method for product innovation design.

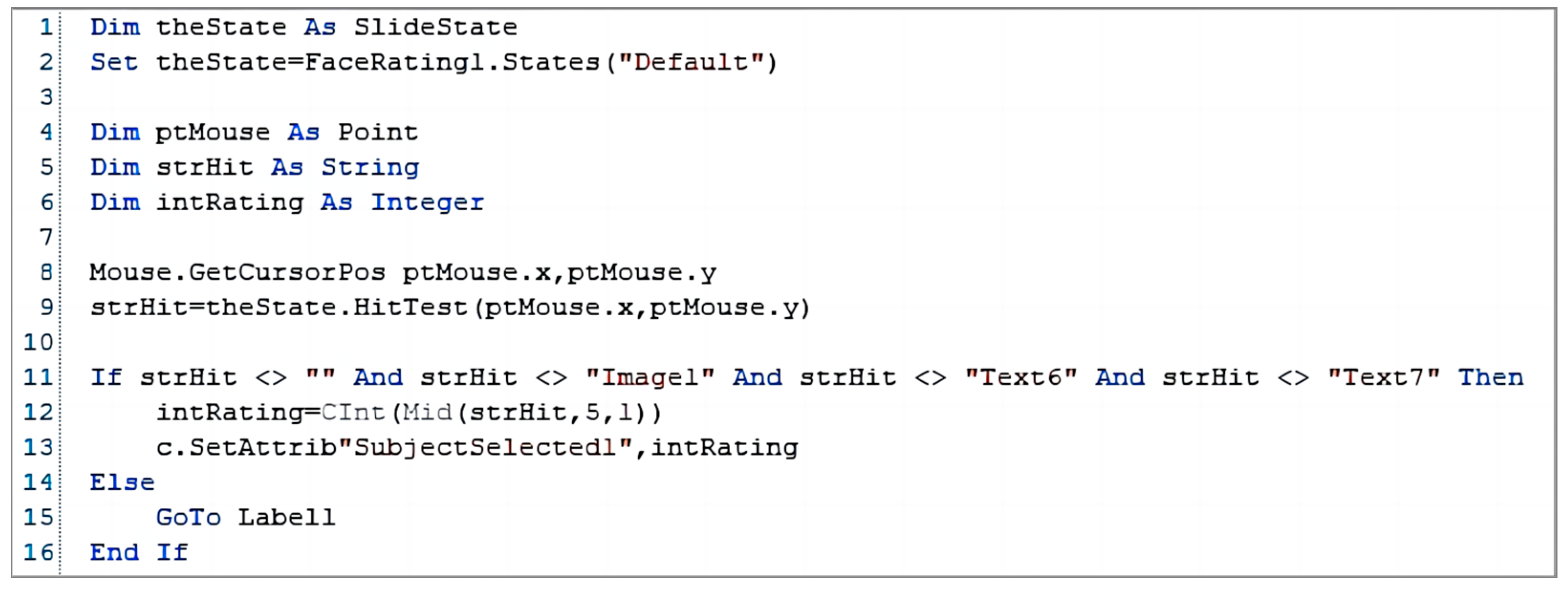

This study first discusses Kansei, Kansei engineering, image, and product image. It then proposes a method to determine the target image using an image lexical similarity calculation model and a cluster analysis algorithm; the method is substantiated by an example. A product styling target image matching experiment is conducted using E-Prime. Research samples are classified into three categories: “high match”, “medium match”, and “low match” relative to the target image. The experiments based on the combination of EEG and eye-tracking measurements are conducted to explore the mechanism of product styling cognition. Finally, the experimental data are analyzed, and the results are discussed. Thus, the study considers an implicit measurement method to study people’s cognition of product styling. Moreover, this study provides a new method for studying subconscious cognition of product styling and a new research tool for implementing Kansei engineering principles in product innovation design.

The main contributions of this research are as follows:

- (1) The connotations of Kansei, Kansei engineering, image, and product image are analyzed to establish the groundwork for subsequent Kansei engineering theory exploration. The patterns of neural activity and eye movements are analyzed to lay the foundation for this study.
- (2) A combined EEG and eye-tracking method for product styling image cognition research is proposed to overcome the limitations of traditional explicit measurements, such as high subjectivity.
- (3) A series of product-form cognitive conclusions based on the combined implicit measurement are drawn to provide a scientific basis for subsequent product-form innovation design.
- (4) A method for determining target images based on image vocabulary similarity calculation and K-means clustering analysis is validated through examples.
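As an illustration of how a similarity calculation can feed a K-means cluster analysis, the following minimal Python sketch groups a handful of Kansei words. It is not the model used in this study: the word list, the two-dimensional word vectors, the use of cosine similarity, and the function names (`cosine_similarity`, `kmeans`, `cluster_kansei_words`) are all assumptions made for the example.

```python
import math

# Hypothetical 2-D embeddings for six Kansei words (illustrative values only,
# not data from this study).
WORD_VECTORS = {
    "modern": [0.90, 0.10],
    "futuristic": [0.80, 0.20],
    "sleek": [0.85, 0.15],
    "warm": [0.10, 0.90],
    "cozy": [0.20, 0.80],
    "soft": [0.15, 0.85],
}


def cosine_similarity(u, v):
    """Cosine similarity, assumed here as the lexical similarity measure."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, k, max_iterations=100):
    """Plain K-means with a deterministic farthest-first initialization."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        farthest = max(
            points,
            key=lambda p: min(squared_distance(p, c) for c in centroids),
        )
        centroids.append(list(farthest))
    labels = None
    for _ in range(max_iterations):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments are stable, so the algorithm has converged
        labels = new_labels
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


def cluster_kansei_words(word_vectors, k):
    """Cluster words by their similarity profiles.

    Each word is described by its row of the word-by-word similarity matrix.
    Within each cluster, words are sorted by distance to the centroid, so the
    first word can serve as a candidate representative image word.
    """
    words = list(word_vectors)
    rows = [
        [cosine_similarity(word_vectors[a], word_vectors[b]) for b in words]
        for a in words
    ]
    labels, centroids = kmeans(rows, k)
    clusters = {}
    for word, row, label in zip(words, rows, labels):
        distance = squared_distance(row, centroids[label])
        clusters.setdefault(label, []).append((distance, word))
    return [[w for _, w in sorted(members)] for _, members in sorted(clusters.items())]


clusters = cluster_kansei_words(WORD_VECTORS, k=2)
```

With these toy vectors, the three "technological" words and the three "comfortable" words fall into separate clusters. In practice, the number of clusters and the similarity measure would have to be chosen to suit the collected vocabulary.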

The remainder of this paper is organized as follows: Section 2 presents the analysis of the relevant theoretical foundations. Section 3 describes the experimental procedure. The experimental data are analyzed in Section 4. A discussion of the results is presented in Section 5. Finally, the conclusions are summarized in Section 6.

6. Conclusions

This study used a combined approach integrating eye-tracking and EEG techniques to investigate participants’ image cognition of differently matched product study samples. The salient findings are as follows:

- (1)

The lexical similarity computation mode can be used to determine the target image in Kansei engineering.

- (2)

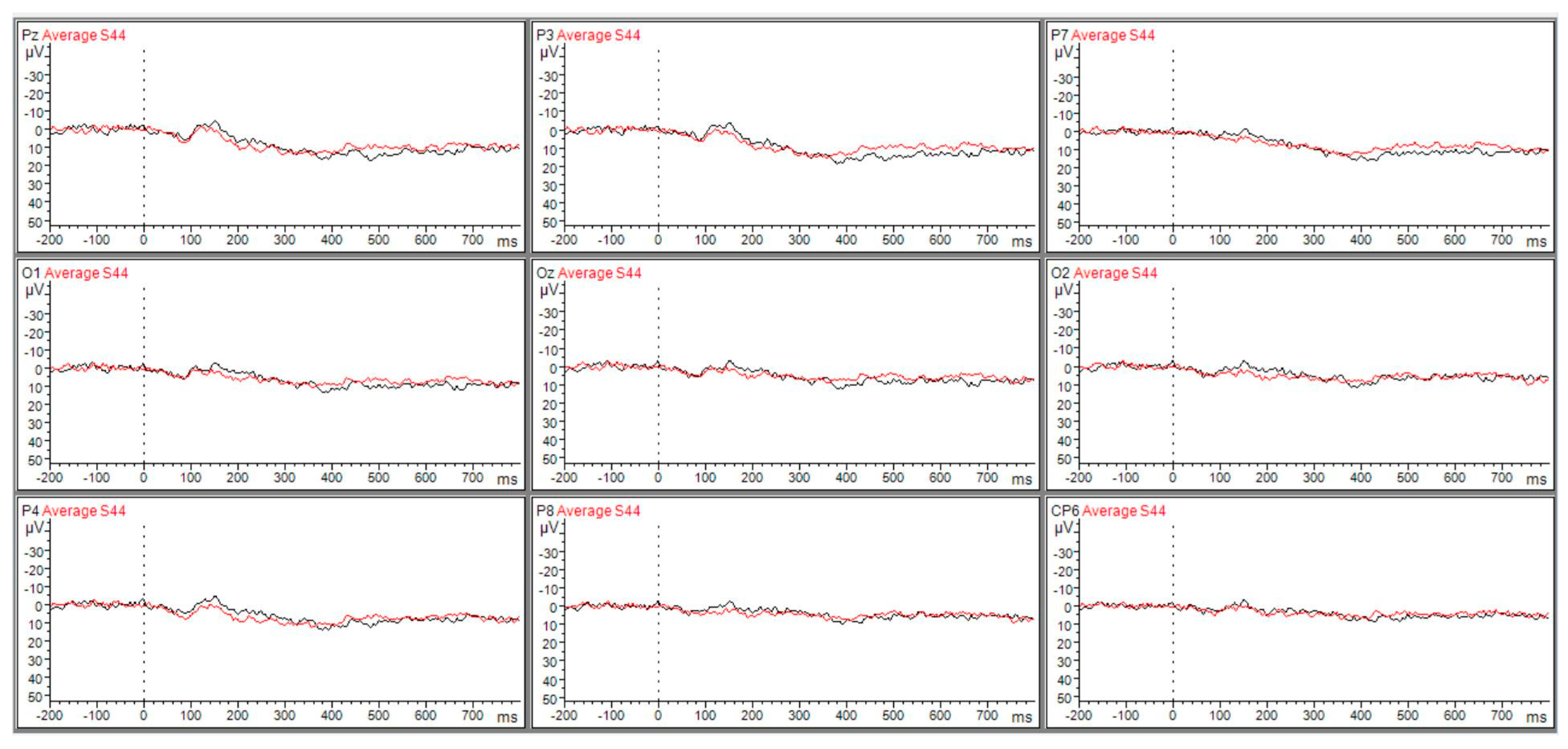

Compared with the product styling evaluation phase, the variability in ERP signals when assessing users’ cognition of product styling was more pronounced during the product styling perception phase. The results indicated that the product styling perception phase is appropriate for studying user cognition of product styling based on implicit measurements.

- (3)

Samples exhibiting “high match” with the target image elicited more pronounced EEG than “low match” samples. Furthermore, the greater the variability between “high match” and “low match” samples, the more distinct the EEG signal variations.

- (4)

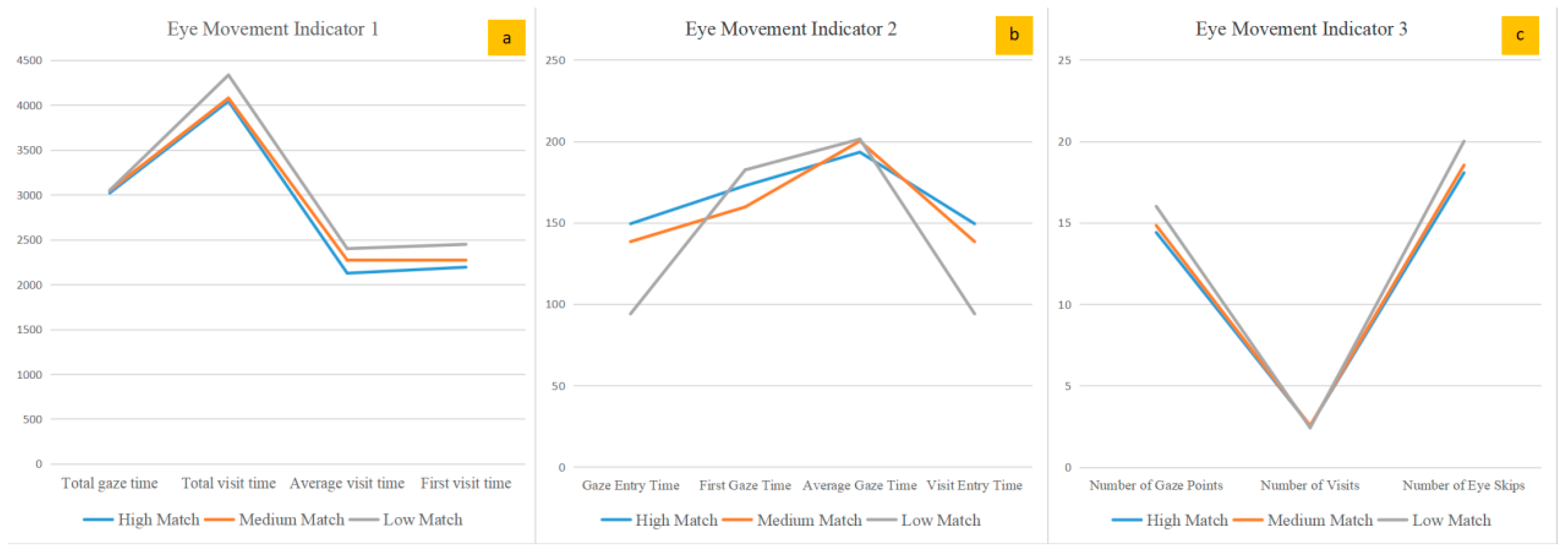

The number of gaze points and eye skips for “high match” samples was smaller than that for “medium match” samples. Similarly, the number of gaze points and eye skips for “medium match” samples was smaller than that for “low match” samples. A smaller number of gaze points and eye skips indicates extended user focus and heightened attention, suggesting that the product styling is more attractive.
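To make the counting of gaze points and eye skips concrete, the following Python sketch labels raw gaze samples with a simple velocity-threshold rule and counts the resulting runs. The study does not state which event-detection algorithm its eye tracker used, so the rule, the 30 deg/s threshold, the 100 Hz sampling, and the sample coordinates are all assumptions chosen for illustration.

```python
import math


def count_fixations_and_saccades(samples, velocity_threshold):
    """Count fixation and saccade events in a gaze recording.

    samples: list of (time_s, x_deg, y_deg) tuples in chronological order.
    velocity_threshold: speed in deg/s above which movement counts as a saccade.
    Returns (number_of_fixations, number_of_saccades).
    """
    # Label each interval between consecutive samples by its gaze velocity.
    labels = []
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        velocity = math.hypot(x1 - x0, y1 - y0) / (t1 - t0)
        labels.append("saccade" if velocity > velocity_threshold else "fixation")

    # Each run of identical labels is one event.
    fixations = 0
    saccades = 0
    previous = None
    for label in labels:
        if label != previous:
            if label == "fixation":
                fixations += 1
            else:
                saccades += 1
        previous = label
    return fixations, saccades


# Made-up 100 Hz recording: three dwell locations separated by two rapid jumps.
gaze_samples = [
    (0.00, 0.0, 0.0), (0.01, 0.1, 0.0), (0.02, 0.1, 0.1), (0.03, 0.0, 0.1),
    (0.04, 10.0, 0.0), (0.05, 10.1, 0.0), (0.06, 10.0, 0.1),
    (0.07, 10.0, 8.0), (0.08, 10.1, 8.0), (0.09, 10.0, 8.1),
]

fixation_count, saccade_count = count_fixations_and_saccades(gaze_samples, 30.0)
```

For this made-up recording the function returns three fixations and two saccades. Per-sample counts obtained this way could then be compared across the “high match”, “medium match”, and “low match” categories.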

For future innovative product styling designs, it would be prudent to monitor EEG signal variations in the frontal brain region during users’ product styling perceptions. A larger EEG signal amplitude in the frontal region signifies a higher match between product styling and the target image, translating to a more appealing product design. Conversely, a diminished EEG amplitude indicates a lower alignment between product styling and target image, suggesting reduced attractiveness of product styling. This method is applicable to styling design, styling research, and styling evaluation of different types of products, such as electronic and transportation products.
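The amplitude comparison described above can be sketched in a few lines of Python. An ERP is commonly obtained by averaging stimulus-locked EEG epochs, after which the mean amplitude in a time window can be compared between conditions. The epoch values, the window indices, and the function names `average_erp` and `mean_amplitude` are hypothetical and serve only to show the arithmetic; they are not data or procedures from this study.

```python
def average_erp(epochs):
    """Average stimulus-locked epochs sample by sample to obtain an ERP.

    epochs: list of equal-length lists of amplitudes (e.g., in microvolts)
    recorded at one electrode, one list per trial.
    """
    n_trials = len(epochs)
    return [sum(samples) / n_trials for samples in zip(*epochs)]


def mean_amplitude(erp, start, stop):
    """Mean amplitude of the ERP over the sample window [start, stop)."""
    window = erp[start:stop]
    return sum(window) / len(window)


# Toy epochs for one hypothetical frontal electrode (illustrative numbers only).
high_match_epochs = [[0.0, 2.0, 6.0, 2.0], [0.0, 4.0, 8.0, 4.0]]
low_match_epochs = [[0.0, 1.0, 3.0, 1.0], [0.0, 1.0, 1.0, 1.0]]

high_erp = average_erp(high_match_epochs)  # [0.0, 3.0, 7.0, 3.0]
low_erp = average_erp(low_match_epochs)    # [0.0, 1.0, 2.0, 1.0]

# Compare the mean amplitude in an assumed post-stimulus window (samples 1 to 3).
high_mean = mean_amplitude(high_erp, 1, 4)
low_mean = mean_amplitude(low_erp, 1, 4)
```

In this toy case the “high match” mean amplitude exceeds the “low match” one, mirroring the direction of the effect reported here. A real analysis would also involve preprocessing steps such as filtering, artifact rejection, and baseline correction, which are omitted from the sketch.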

Furthermore, the results of eye-tracking experiments can offer invaluable insights for design evaluations and innovative product designs. Key eye-movement metrics such as total, average, and first visit times, along with gaze and visit entry times and the number of gaze points, serve as robust indicators. Depending on the metric, elevated values typically correlate with either heightened or reduced alignment of the product design with the target image.

Nevertheless, this study has some limitations. Although a combined EEG and eye-tracking approach was used to study user cognition of product styling, the spatial resolution constraints of ERP limited the precise localization of brain functions. In the future, we will consider integrating functional magnetic resonance imaging (fMRI) with EEG and eye tracking to study product styling cognition, leveraging the superior spatial resolution of fMRI. Future work will also explore product innovation design based on the combination of EEG responses, eye movements, heart rate, and facial expressions, together with a comparative analysis of the advantages and disadvantages of various measurement methods. While this study is limited to product styling image cognition, in the future, we will consider using physiological measurement tools to discern nuances in product color and material cognition.