A Depression Recognition Method Based on the Alteration of Video Temporal Angle Features

Abstract

1. Introduction

- (1) This paper introduces a novel feature extraction method based on facial angles. The resulting facial features are invariant to translation and rotation. In addition, a flip correction step counteracts the interference caused by patients' head and limb movements, which makes the extracted features highly robust.

- (2) A new model is developed by combining GhostNet with multi-layer perceptron (MLP) modules and video processing headers. The model is fine-tuned on the features extracted with the proposed method and offers a useful addition to the depression classification task.

- (3) Extensive experiments are conducted on the DAIC-WOZ dataset to validate the feasibility of the proposed method. The results outperform those of similar methods, supporting the efficacy of the proposed approach.

2. Related Works

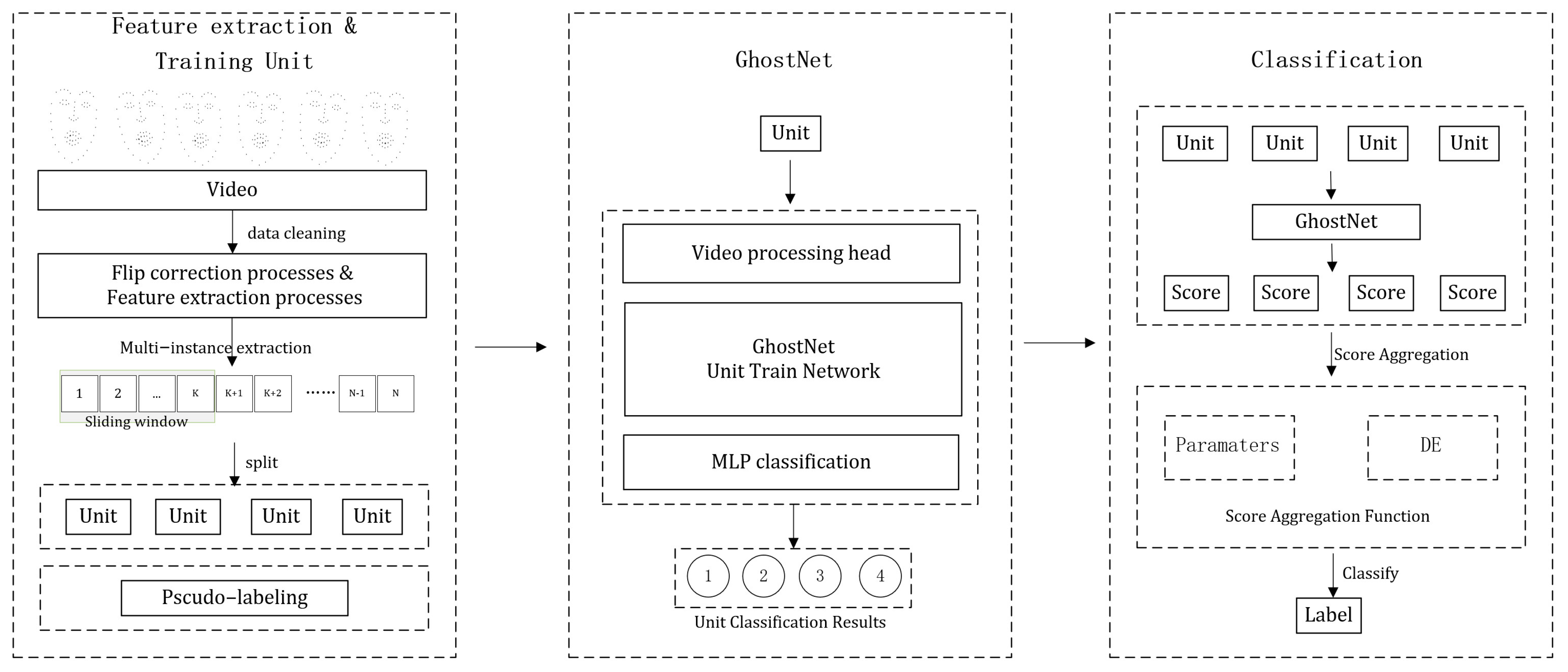

3. Proposed Framework

3.1. Facial Landmark Detection

3.2. Angle Selection Method

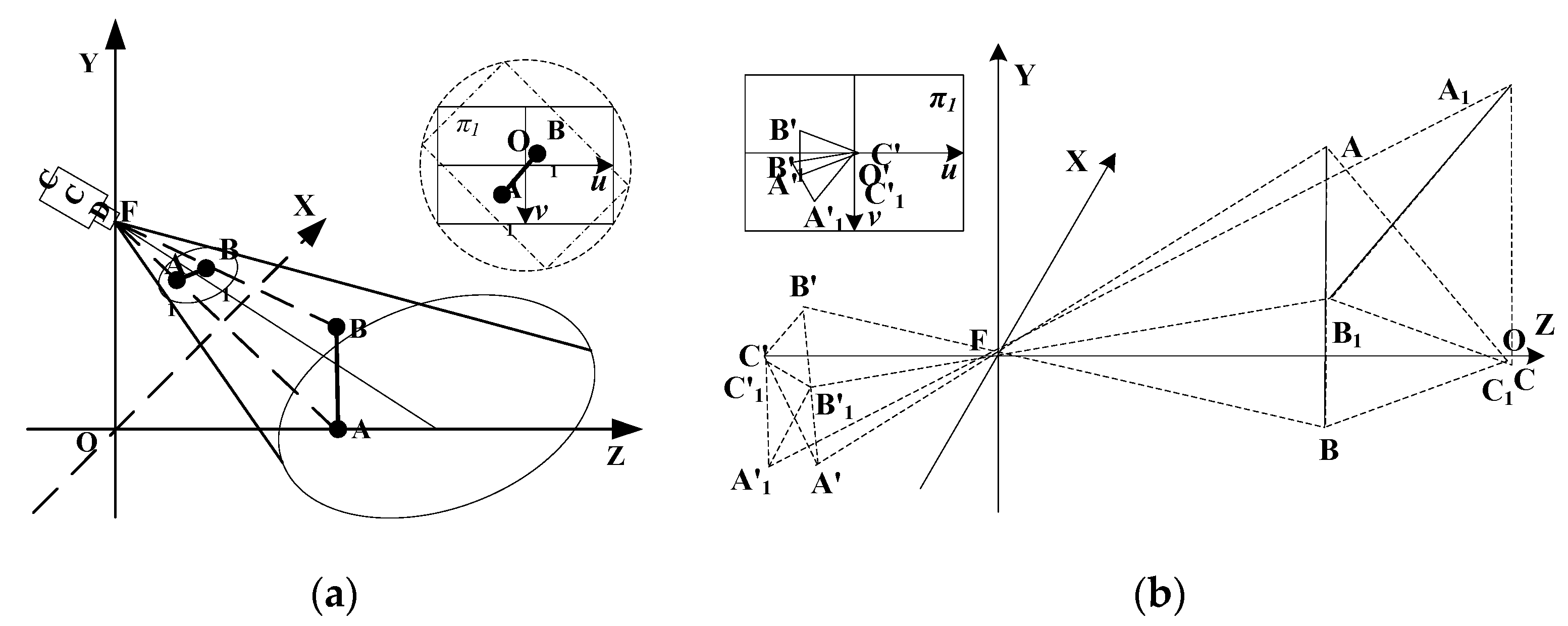

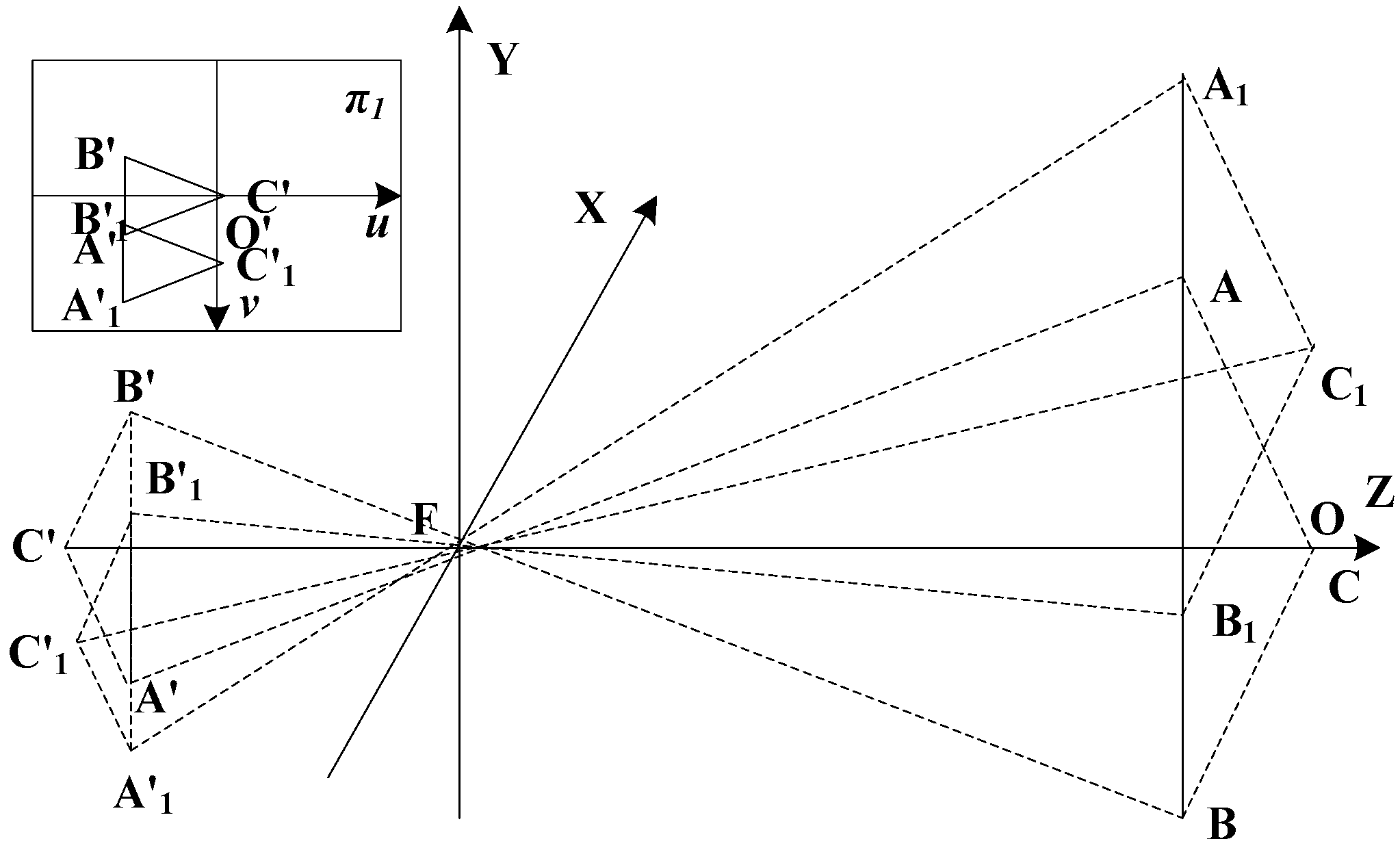

3.3. Robustness Analysis of Angle Features

3.3.1. Rotation Invariance

3.3.2. Translation Invariance

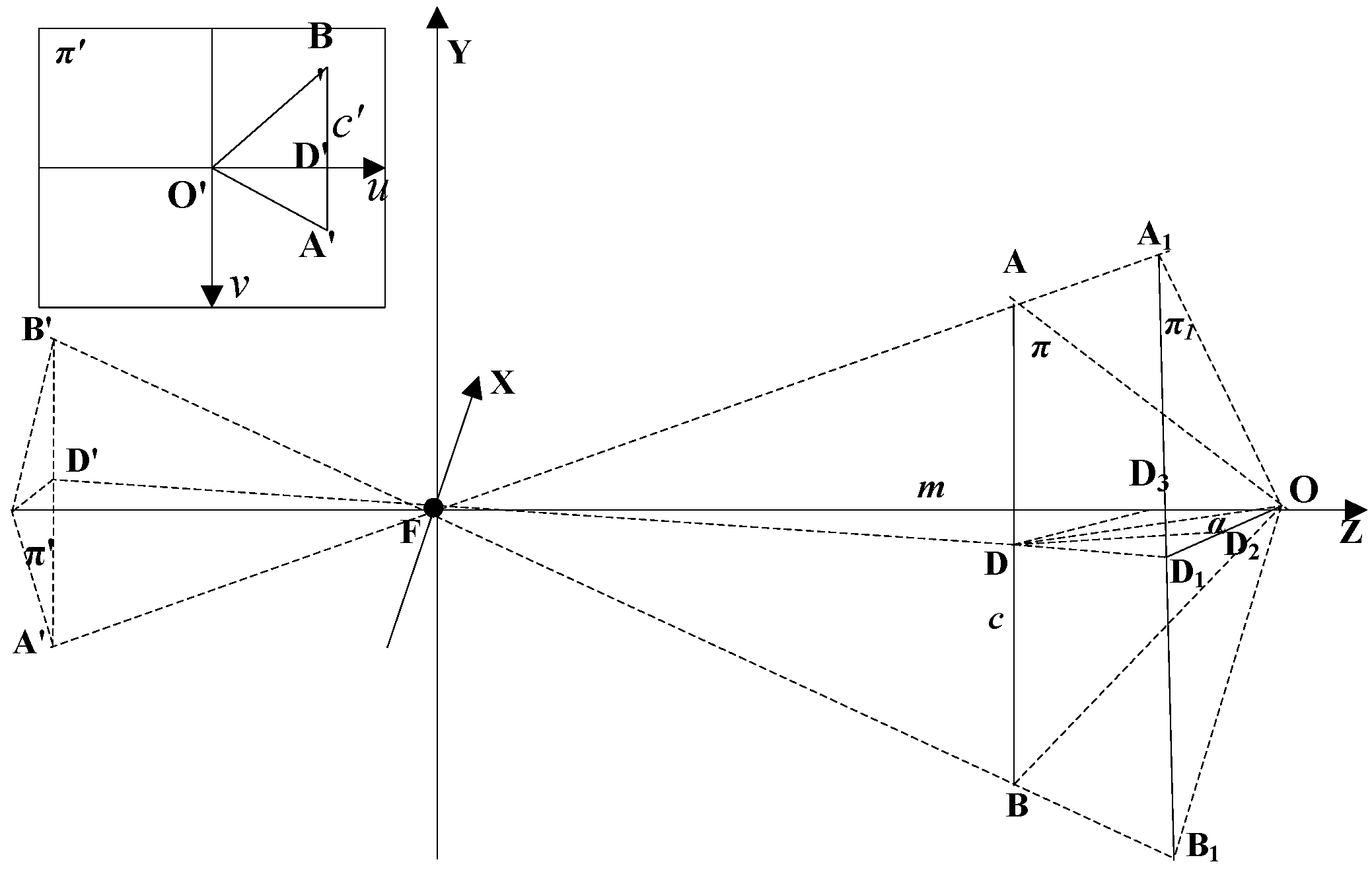

3.4. Flip Correction

3.4.1. When the Target Is Flipped Horizontally, There Exists a Relationship among the Target Angle, Image Angle, and Flip Angle

3.4.2. When the Target Is Flipped Vertically, There Exists a Relationship among the Target Angle, Image Angle, and Flip Angle

3.4.3. Deflection/Flip Correction Method

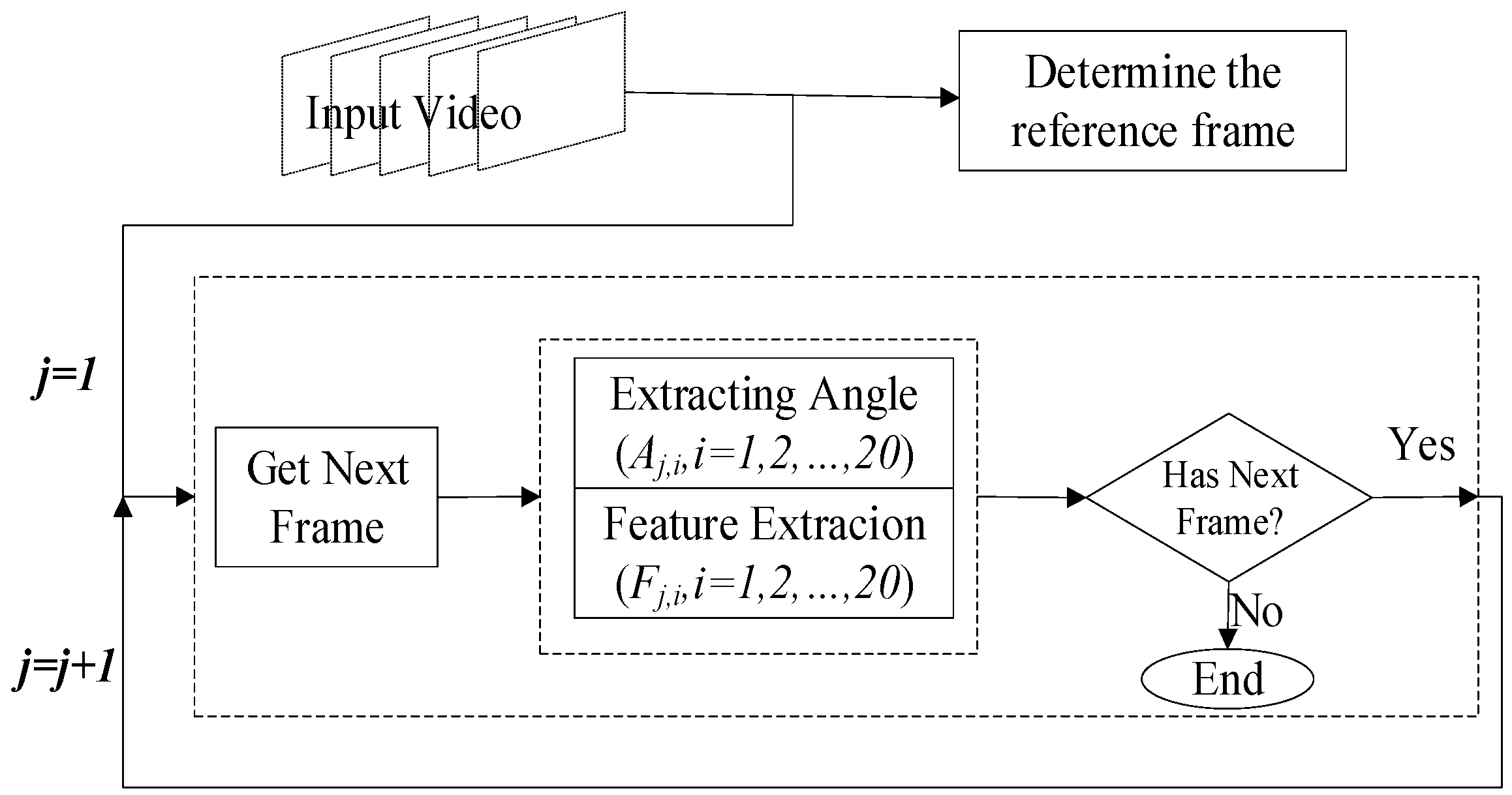

3.5. Feature Extraction and Training Unit

3.5.1. Determine the Reference Frame

3.5.2. Difference Extraction of Angle Features

- (1) Flip correction: Use Formulas (16) and (17) to correct the deflection and flip of the angles of this frame and obtain the corrected angle features.

- (2) Feature calculation: Calculate the expression difference between the two adjacent frames (before and after); the feature is then calculated by Formula (19), where n is the total number of frames in which an expression can be detected.
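The differencing in step (2) can be illustrated with a minimal sketch. It assumes that "the difference between the two frames before and after" means an element-wise difference between consecutive frames in which a face was detected, and that frames without a detection are simply skipped. The function name `frame_differences` and the sample values are hypothetical, and the sketch does not reproduce Formula (19).

```python
def frame_differences(angle_frames):
    """Element-wise differences between consecutive detected frames.

    angle_frames: list of per-frame angle vectors (lists of floats),
    with None marking frames where no face was detected (assumption).
    """
    # Keep only frames in which landmarks, and hence angles, are available.
    valid = [frame for frame in angle_frames if frame is not None]
    # Pair each valid frame with its successor and subtract angle by angle.
    return [
        [curr - prev for prev, curr in zip(previous, current)]
        for previous, current in zip(valid, valid[1:])
    ]


# Hypothetical corrected angles (degrees) for two features over four frames.
frames = [[70.0, 40.0], None, [72.0, 39.0], [71.0, 41.5]]
differences = frame_differences(frames)
print(differences)
```

With n detected frames this yields n - 1 difference vectors, one per adjacent pair.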

3.5.3. Training Unit Acquisition

4. Experimental Studies

4.1. GhostNet

- (a) First, given the input data $X \in \mathbb{R}^{c \times h \times w}$, a convolution operation is used to obtain the intrinsic feature map $Y' \in \mathbb{R}^{h' \times w' \times m}$, where $c$ is the number of input channels and $h$ and $w$ are the height and width of the input data (notation following GhostNet [31]):

  $$Y' = X * f'$$

  where $f' \in \mathbb{R}^{c \times k \times k \times m}$ is the convolution kernel, $k \times k$ is the size of the convolution kernel, and the bias term is omitted.

- (b) To obtain the required Ghost feature maps, each feature map $y'_i$ of $Y'$ is used to generate a Ghost feature map with the operation $\Phi_{i,j}$, i.e., $y_{ij} = \Phi_{i,j}(y'_i)$.

- (c) The intrinsic feature map obtained in the first step and the Ghost feature maps obtained in the second step are concatenated (identity connection) to obtain the output of the Ghost module.
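Steps (a) to (c) can be sketched in NumPy as follows. This is an illustrative sketch rather than the implementation used in the paper: it assumes a 1 × 1 primary convolution, one 3 × 3 depthwise filter per intrinsic map as the cheap operation, and zero padding. The helper names, the random weights, and the choice of 20 input channels and 42 intrinsic maps (giving 84 output channels) are assumptions made for the example.

```python
import numpy as np


def pointwise_conv(x, weight):
    """Step (a): 1x1 convolution. x is (c, h, w), weight is (m, c)."""
    return np.einsum("mc,chw->mhw", weight, x)


def depthwise_conv3x3(y, kernels):
    """Step (b): one cheap 3x3 filter per map. y is (m, h, w), kernels is (m, 3, 3)."""
    m, h, w = y.shape
    padded = np.pad(y, ((0, 0), (1, 1), (1, 1)))  # zero padding keeps h and w
    out = np.zeros_like(y)
    for di in range(3):
        for dj in range(3):
            # Shifted view of the input, weighted by the per-channel kernel tap.
            out += kernels[:, di, dj, None, None] * padded[:, di:di + h, dj:dj + w]
    return out


def ghost_module(x, primary_weight, cheap_kernels):
    """Concatenate intrinsic maps with the ghost maps derived from them."""
    intrinsic = pointwise_conv(x, primary_weight)          # step (a)
    ghost = depthwise_conv3x3(intrinsic, cheap_kernels)    # step (b)
    return np.concatenate([intrinsic, ghost], axis=0)      # step (c)


rng = np.random.default_rng(0)
x = rng.standard_normal((20, 8, 8))               # c = 20 input channels
primary_weight = rng.standard_normal((42, 20))    # m = 42 intrinsic maps
cheap_kernels = rng.standard_normal((42, 3, 3))   # one cheap filter per map
out = ghost_module(x, primary_weight, cheap_kernels)
print(out.shape)
```

Only half of the output channels come from the full convolution; the other half are produced by the much cheaper per-channel filters, which is where the savings of the Ghost module come from.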

4.2. Aggregate Classification

4.3. Experimental Dataset

4.4. Experiments

4.4.1. Evaluation

4.4.2. Experimental Results Comparison

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Ma, X.; Yang, H.; Chen, Q.; Huang, D.; Wang, Y. DepAudioNet: An efficient deep model for audio based depression classification. In Proceedings of the 6th International Workshop on Audio/Visual Emotion Challenge, Amsterdam, The Netherlands, 16 October 2016; pp. 35–42.
- [2] Yeun, E.J.; Kwon, Y.M.; Kim, J.A. Psychometric testing of the Depressive Cognition Scale in Korean adults. Appl. Nurs. Res. 2012, 25, 264–270.
- [3] Vos, T.; Abajobir, A.A.; Abate, K.H.; Abbafati, C.; Abbas, K.M.; Abd-Allah, F.; Criqui, M.H. Global, regional, and national incidence, prevalence, and years lived with disability for 328 diseases and injuries for 195 countries, 1990–2016: A systematic analysis for the Global Burden of Disease Study 2016. Lancet 2017, 390, 1211–1259.
- [4] Williams, J.B.W. A structured interview guide for the Hamilton Depression Rating Scale. Arch. Gen. Psychiatry 1988, 45, 742–747.
- [5] Zung, W.W.K. A Self-Rating Depression Scale. Arch. Gen. Psychiatry 1965, 12, 63–70.
- [6] Kroenke, K.; Spitzer, R.L.; Williams, J.B.W. The PHQ-9: Validity of a brief depression severity measure. J. Gen. Intern. Med. 2001, 16, 606–613.
- [7] Beck, A.T.; Steer, R.A.; Brown, G.K. Beck depression inventory-II. Psychol. Assess. 1996, 78, 490–498.
- [8] Rashid, J.; Batool, S.; Kim, J.; Wasif Nisar, M.; Hussain, A.; Juneja, S.; Kushwaha, R. An augmented artificial intelligence approach for chronic diseases prediction. Front. Public Health 2022, 10, 860396.
- [9] Williamson, J.R.; Quatieri, T.F.; Helfer, B.S.; Horwitz, R.; Yu, B.; Mehta, D.D. Vocal biomarkers of depression based on motor incoordination. In Proceedings of the 3rd ACM International Workshop on Audio/Visual Emotion Challenge, Barcelona, Spain, 21 October 2013; pp. 41–48.
- [10] Zhou, X.; Jin, K.; Shang, Y.; Guo, G. Visually Interpretable representation learning for depression recognition from facial images. IEEE Trans. Affect. Comput. 2018, 11, 542–552.
- [11] Suhara, Y.; Xu, Y.; Pentland, A.S. DeepMood: Forecasting Depressed Mood Based on Self-Reported Histories via Recurrent Neural Networks. In Proceedings of the 26th International Conference on World Wide Web, International World Wide Web Conferences Steering Committee, Perth, Australia, 3–7 April 2017.
- [12] Gratch, J.; Artstein, R.; Lucas, G.M.; Stratou, G.; Scherer, S.; Nazarian, A.; Morency, L.P. The distress analysis interview corpus of human and computer interviews. In Proceedings of the Ninth International Conference on Language Resources and Evaluation (LREC'14), Reykjavik, Iceland, 26–31 May 2014; pp. 3123–3128.
- [13] Ava, L.T.; Karim, A.; Hassan, M.M.; Faisal, F.; Azam, S.; Al Haque, A.F.; Zaman, S. Intelligent Identification of Hate Speeches to address the increased rate of Individual Mental Degeneration. Procedia Comput. Sci. 2023, 219, 1527–1537.
- [14] Othmani, A.; Zeghina, A.-O.; Muzammel, M. A Model of Normality Inspired Deep Learning Framework for Depression Relapse Prediction Using Audiovisual Data. Comput. Methods Programs Biomed. 2022, 226, 107132.
- [15] Mehrabian, A. Communication Without Words. In Communication Theory; Routledge: New York, NY, USA, 2017; pp. 193–200.
- [16] Meng, H.; Huang, D.; Wang, H.; Yang, H.; Al-Shuraifi, M.; Wang, Y. Depression recognition based on dynamic facial and vocal expression features using partial least square regression. In Proceedings of the 3rd ACM International Workshop on Audio/Visual Emotion Challenge, Barcelona, Spain, 21 October 2013; pp. 21–30.
- [17] Pampouchidou, A.; Simantiraki, O.; Fazlollahi, A.; Pediaditis, M.; Manousos, D.; Roniotis, A.; Tsiknakis, M. Depression assessment by fusing high and low level features from audio, video, and text. In Proceedings of the 6th International Workshop on Audio/Visual Emotion Challenge, Amsterdam, The Netherlands, 16 October 2016; pp. 27–34.
- [18] Syed, Z.S.; Sidorov, K.; Marshall, D. Depression severity prediction based on biomarkers of psychomotor retardation. In Proceedings of the 7th Annual Workshop on Audio/Visual Emotion Challenge, Mountain View, CA, USA, 23–27 October 2017; pp. 37–43.
- [19] Mehrabian, A.; Russell, J.A. An Approach to Environmental Psychology; MIT Press: Cambridge, MA, USA, 1974.
- [20] Nguyen, P.Y.; Astell-Burt, T.; Rahimi-Ardabili, H.; Feng, X. Effect of nature prescriptions on cardiometabolic and mental health, and physical activity: A systematic review. Lancet Planet. Health 2023, 7, e313–e328.
- [21] Caligiuri, M.P.; Ellwanger, J. Motor and cognitive aspects of motor retardation in depression. J. Affect. Disord. 2000, 57, 83–93.
- [22] Cohn, J.F.; Kruez, T.S.; Matthews, I.; Yang, Y.; Nguyen, M.H.; Padilla, M.T.; De la Torre, F. Detecting depression from facial actions and vocal prosody. In Proceedings of the 2009 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops, Amsterdam, The Netherlands, 10–12 September 2009.
- [23] McIntyre, G.; Gocke, R.; Hyett, M.; Green, M.; Breakspear, M. An approach for automatically measuring facial activity in depressed subjects. In Proceedings of the International Conference on Affective Computing and Intelligent Interaction and Workshops, Amsterdam, The Netherlands, 10–12 September 2009.
- [24] Hamm, J.; Kohler, C.G.; Gur, R.C.; Verma, R. Automated Facial Action Coding System for dynamic analysis of facial expressions in neuropsychiatric disorders. J. Neurosci. Methods 2011, 200, 237–256.
- [25] Yang, T.H.; Wu, C.H.; Huang, K.Y.; Su, M.H. Coupled HMM-based multimodal fusion for mood disorder detection through elicited audio-visual signals. J. Ambient Intell. Humaniz. Comput. 2017, 8, 895–906.
- [26] Gupta, R.; Malandrakis, N.; Xiao, B.; Guha, T.; Van Segbroeck, M.; Black, M.; Narayanan, S. Multimodal Prediction of Affective Dimensions and Depression in Human-Computer Interactions. In Proceedings of the International Workshop on Audio/Visual Emotion Challenge, ACM, Orlando, FL, USA, 3–7 November 2014.
- [27] Nasir, M.; Jati, A.; Shivakumar, P.G.; Nallan Chakravarthula, S.; Georgiou, P. Multimodal and multiresolution depression detection from speech and facial landmark features. In Proceedings of the 6th International Workshop on Audio/Visual Emotion Challenge, Amsterdam, The Netherlands, 16 October 2016; pp. 43–50.
- [28] Wang, Y.; Ma, J.; Hao, B.; Wang, X.; Mei, J.; Li, S. Automatic depression detection via facial expressions using multiple instance learning. In Proceedings of the 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI), Iowa City, IA, USA, 3–7 April 2020; pp. 1933–1936.
- [29] Sun, B.; Zhang, Y.; He, J.; Xiao, Y.; Xiao, R. An automatic diagnostic network using skew-robust adversarial discriminative domain adaptation to evaluate the severity of depression. Comput. Methods Programs Biomed. 2019, 173, 185–195.
- [30] Baltrusaitis, T.; Robinson, P.; Morency, L.P. OpenFace: An open source facial behavior analysis toolkit. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; p. 16023491.
- [31] Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More features from cheap operations. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.
- [32] He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
- [33] Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.
- [34] Mei, S.; Chen, Y.; Qin, H.; Yu, H.; Li, D.; Sun, B.; Liu, Y. A Method Based on Knowledge Distillation for Fish School Stress State Recognition in Intensive Aquaculture. CMES Comput. Model. Eng. Sci. 2022, 131, 1315–1335.
- [35] Hassan, M.M.; Hassan, M.M.; Yasmin, F.; Khan, M.A.R.; Zaman, S.; Islam, K.K.; Bairagi, A.K. A comparative assessment of machine learning algorithms with the Least Absolute Shrinkage and Selection Operator for breast cancer detection and prediction. Decis. Anal. J. 2023, 7, 100245.
- [36] Erokhina, O.V.; Borisenko, B.B.; Fadeev, A.S. Analysis of the Multilayer Perceptron Parameters Impact on the Quality of Network Attacks Identification. In Proceedings of the 2021 Systems of Signal Synchronization, Generating and Processing in Telecommunications, Kaliningrad, Russia, 30 June–2 July 2021; pp. 1–6.
- [37] Hossain, M.S.; Amin, S.U.; Alsulaiman, M.; Muhammad, G. Applying deep learning for epilepsy seizure detection and brain mapping visualization. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2019, 15, 1–17.
- [38] Kroenke, K.; Strine, T.W.; Spitzer, R.L.; Williams, J.B.; Berry, J.T.; Mokdad, A.H. The PHQ-8 as a measure of current depression in the general population. J. Affect. Disord. 2009, 114, 163–173.
- [39] Qin, A.K.; Huang, V.L.; Suganthan, P.N. Differential evolution algorithm with strategy adaptation for global numerical optimization. IEEE Trans. Evol. Comput. 2008, 13, 398–417.
- [40] Zhang, Z. Improved Adam optimizer for deep neural networks. In Proceedings of the 2018 IEEE/ACM 26th International Symposium on Quality of Service (IWQoS), Banff, AB, Canada, 4–6 June 2018; pp. 10–14.
- [41] Manoret, P.; Chotipurk, P.; Sunpaweravong, S.; Jantrachotechatchawan, C.; Duangrattanalert, K. Automatic Detection of Depression from Stratified Samples of Audio Data. arXiv 2021, arXiv:2111.10783.
- [42] Rejaibi, E.; Komaty, A.; Meriaudeau, F.; Agrebi, S.; Othmani, A. MFCC-based recurrent neural network for automatic clinical depression recognition and assessment from speech. Biomed. Signal Process. Control 2022, 71, 103107.
- [43] Dinkel, H.; Wu, M.; Yu, K. Text-based depression detection on sparse data. arXiv 2019, arXiv:1904.05154.
- [44] Arioz, U.; Smrke, U.; Plohl, N.; Mlakar, I. Scoping Review on the Multimodal Classification of Depression and Experimental Study on Existing Multimodal Models. Diagnostics 2022, 12, 2683.
- [45] Lam, G.; Dongyan, H.; Lin, W. Context-aware deep learning for multi-modal depression detection. In Proceedings of the ICASSP 2019–2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 3946–3950.
- [46] Song, S.; Shen, L.; Valstar, M. Human behaviour-based automatic depression analysis using hand-crafted statistics and deep learned spectral features. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi'an, China, 15–19 May 2018; pp. 158–165.
- [47] Wei, P.-C.; Peng, K.; Roitberg, A.; Yang, K.; Zhang, J.; Stiefelhagen, R. Multi-modal depression estimation based on sub-attentional fusion. arXiv 2022, arXiv:2207.06180.
- [48] Haque, A.; Guo, M.; Miner, A.S.; Fei-Fei, L. Measuring depression symptom severity from spoken language and 3D facial expressions. arXiv 2018, arXiv:1811.08592.
- [49] Yang, L.; Jiang, D.; Sahli, H. Integrating Deep and Shallow Models for Multi-Modal Depression Analysis-Hybrid Architectures. IEEE Trans. Affect. Comput. 2021, 12, 239–253.
- [50] Guo, Y.; Zhu, C.; Hao, S.; Hong, R. Automatic depression detection via learning and fusing features from visual cues. IEEE Trans. Comput. Soc. Syst. 2022, 1–8.
- [51] Saeed, S.; Shaikh, A.; Memon, M.A.; Saleem, M.Q.; Naqvi, S.M.R. Assessment of brain tumor due to the usage of MATLAB performance. J. Med. Imaging Health Inform. 2017, 7, 1454–1460.
- [52] Chen, L.; Yang, Y.; Wang, Z.; Zhang, J.; Zhou, S.; Wu, L. Lightweight Underwater Target Detection Algorithm Based on Dynamic Sampling Transformer and Knowledge-Distillation Optimization. J. Mar. Sci. Eng. 2023, 11, 426.
- [53] Hassan, M.M.; Zaman, S.; Mollick, S.; Raihan, M.; Kaushal, C.; Bhardwaj, R. An efficient Apriori algorithm for frequent pattern in human intoxication data. Innov. Syst. Softw. Eng. 2023, 19, 61–69.
- [54] Sahoo, S.P.; Modalavalasa, S.; Ari, S. DISNet: A sequential learning framework to handle occlusion in human action recognition with video acquisition sensors. Digit. Signal Process. 2022, 131, 103763.

| Sequence Number | Coordinate Definition | Meaning |
|---|---|---|
| 1 | Ang(18,20,22) | Curvature of the left eyebrow |
| 2 | Ang(23,25,27) | Curvature of the right eyebrow |
| 3 | Ang(20,31,25) | Grain lift 1 |
| 4 | Ang(20,28,25) | Grain lift 2 |
| 5 | Ang(38,37,42) | Degree of left eye corner opening |
| 6 | Ang(37,20,40) | Degree of left eye squinting |
| 7 | Ang(47,46,45) | Degree of right eye corner opening |
| 8 | Ang(43,25,46) | Degree of right eye squinting |
| 9 | Ang(49,03,37) | Degree of left cheek contraction |
| 10 | Ang(55,15,46) | Degree of right cheek contraction |
| 11 | Ang(51,49,59) | Degree of left outer lip opening |
| 12 | Ang(62,61,68) | Degree of left inner lip opening |
| 13 | Ang(53,55,57) | Degree of right outer lip opening |
| 14 | Ang(64,65,66) | Degree of right inner lip opening |
| 15 | Ang(49,34,55) | Degree of mouth opening |
| 16 | Ang(28,02,31) | Nose wrinkle 1 |
| 17 | Ang(28,16,31) | Nose wrinkle 2 |
| 18 | Ang(Le,31,Re) | Upside down |
| 19 | Ang(01,30,03) | Right deflection |
| 20 | Ang(17,30,15) | Left deflection |
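The angle features in the table above can be illustrated with a short sketch. It assumes that Ang(a, b, c) denotes the unsigned angle at the middle landmark b between the rays towards a and c, and it uses made-up pixel coordinates for landmarks 49, 34 and 55; the function names are hypothetical. The example also checks numerically that such an angle is unchanged when the whole face is rotated in the image plane and translated.

```python
import math


def ang(p_a, p_b, p_c):
    """Unsigned angle in degrees at vertex p_b between rays p_b->p_a and p_b->p_c."""
    v1 = (p_a[0] - p_b[0], p_a[1] - p_b[1])
    v2 = (p_c[0] - p_b[0], p_c[1] - p_b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    # atan2 is numerically more stable than acos near 0 and 180 degrees.
    return math.degrees(math.atan2(abs(cross), dot))


def rotate_and_shift(point, theta_deg, shift):
    """Rotate a 2D point about the origin by theta_deg, then translate it."""
    t = math.radians(theta_deg)
    x, y = point
    return (x * math.cos(t) - y * math.sin(t) + shift[0],
            x * math.sin(t) + y * math.cos(t) + shift[1])


# Hypothetical coordinates: mouth corners (49, 55) and the point under the nose (34).
landmarks = {49: (120.0, 210.0), 34: (150.0, 170.0), 55: (180.0, 210.0)}
original = ang(landmarks[49], landmarks[34], landmarks[55])

# Apply an in-plane rotation of 25 degrees and a shift of (40, -15) pixels.
moved = {idx: rotate_and_shift(p, 25.0, (40.0, -15.0)) for idx, p in landmarks.items()}
after = ang(moved[49], moved[34], moved[55])

print(round(original, 4), round(after, 4))
```

Because the angle depends only on the relative directions of the two rays, a rigid in-plane motion of the face leaves it unchanged up to floating-point error.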

| Input | Operator | #Exp | #Out | SE | Stride |
|---|---|---|---|---|---|
| 64² × 20 | Conv2d 3 × 3 | - | 84 | - | 2 |
| 32² × 84 | G-bneck | 120 | 84 | 1 | 1 |
| 32² × 84 | G-bneck | 240 | 84 | 0 | 2 |
| 16² × 84 | G-bneck | 200 | 84 | 0 | 1 |
| 16² × 84 | G-bneck | 184 | 84 | 0 | 1 |
| 16² × 84 | G-bneck | 184 | 84 | 0 | 1 |
| 16² × 84 | G-bneck | 480 | 112 | 1 | 1 |
| 16² × 112 | G-bneck | 672 | 112 | 1 | 1 |
| 16² × 112 | G-bneck | 672 | 160 | 1 | 2 |
| 8² × 160 | G-bneck | 960 | 160 | 0 | 1 |
| 8² × 160 | G-bneck | 960 | 160 | 1 | 1 |
| 8² × 160 | G-bneck | 960 | 160 | 0 | 1 |
| 8² × 160 | G-bneck | 960 | 160 | 1 | 1 |
| 8² × 160 | Conv2d 1 × 1 | - | 960 | - | 1 |
| 1² × 960 | AvgPool 8 × 8 | - | - | - | - |

| Datasets | Non-Depression | Depression |
|---|---|---|
| Training set | 77 | 30 |
| Test set | 23 | 12 |

| Methods | Modality | Precision | Recall | F1 |
|---|---|---|---|---|
| Manoret et al., 2021 [41] | A | 0.64 | 0.91 | 0.75 |
| Othmani et al., 2022 [14] | A | 0.51 | 0.50 | 0.50 |
| Rejaibi et al., 2022 [42] | A | 0.69 | 0.35 | 0.46 |
| Sun et al., 2019 [13] | T | 0.67 | 0.50 | 0.57 |
| Dinkel et al., 2020 [43] | T | 0.85 | 0.83 | 0.84 |
| Arioz et al., 2022 [44] | A + T | 0.53 | 0.57 | 0.53 |
| Lam et al., 2019 [45] | A + T | 0.60 | 0.75 | 0.67 |
| Ours | V | 0.77 | 0.83 | 0.80 |

| Methods | Modality | Precision | Recall | F1 |
|---|---|---|---|---|
| Wang et al., 2020 [28] | V | 0.81 | 0.75 | 0.78 |
| Song et al., 2018 [46] | V | 0.64 | 0.58 | 0.61 |
| Wei et al., 2022 [47] | V + A + T | 0.89 | 0.57 | 0.70 |
| Haque et al., 2018 [48] | V + A + T | 0.71 | 0.83 | 0.77 |
| Yang et al., 2021 [49] | V + A + T | 0.71 | 0.55 | 0.63 |
| Ours | V | 0.77 | 0.83 | 0.80 |
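The F1 column in the comparison tables can be cross-checked from the other two metric columns. The sketch below assumes that P and R denote precision and recall and that F1 is their harmonic mean; the function name `f1_score` is chosen for illustration only.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall reported for the proposed method ("Ours") in the tables.
ours_f1 = f1_score(0.77, 0.83)
print(round(ours_f1, 2))
```

Applying the same function to the other rows reproduces their reported F1 values after rounding to two decimals, for example 0.81 and 0.75 for Wang et al. [28] give 0.78.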

| Feature | Modality | Precision | Recall | F1 |
|---|---|---|---|---|
| Gaze | V | 0.85 | 0.50 | 0.63 |
| Pose | V | 0.72 | 0.66 | 0.69 |
| 2D Landmarks | V | 0.69 | 0.75 | 0.72 |
| 2D Landmarks + Pose | V | 0.73 | 0.91 | 0.81 |
| 2D Landmarks + Gaze | V | 0.61 | 0.91 | 0.73 |
| Ours (Angle) | V | 0.77 | 0.83 | 0.80 |

| Window Size | Precision | Recall | F1 |
|---|---|---|---|
| 180 s | 0.53 | 0.75 | 0.62 |
| 120 s | 0.77 | 0.83 | 0.80 |
| 60 s | 0.56 | 0.75 | 0.64 |
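The window sizes compared in the table above can be illustrated with a small segmentation sketch. It assumes a fixed frame rate of 30 frames per second, non-overlapping windows, and that a trailing partial window is discarded; none of these choices is confirmed by the text, and the function name `split_into_windows` is hypothetical.

```python
def split_into_windows(frames, window_seconds=120, fps=30):
    """Cut a per-frame feature sequence into non-overlapping fixed-length windows.

    fps=30 and dropping the incomplete last window are assumptions.
    """
    size = window_seconds * fps
    return [frames[i:i + size] for i in range(0, len(frames) - size + 1, size)]


# A hypothetical 10-minute recording, represented by its frame indices.
recording = list(range(10 * 60 * 30))
units_120 = split_into_windows(recording, window_seconds=120)
units_180 = split_into_windows(recording, window_seconds=180)
print(len(units_120), len(units_180))
```

Shorter windows yield more training units per interview but less temporal context in each unit, which is the trade-off the window-size comparison explores.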

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ding, Z.; Hu, Y.; Jing, R.; Sheng, W.; Mao, J. A Depression Recognition Method Based on the Alteration of Video Temporal Angle Features. Appl. Sci. 2023, 13, 9230. https://doi.org/10.3390/app13169230