Caching Placement Optimization Strategy Based on Comprehensive Utility in Edge Computing

Abstract

1. Introduction

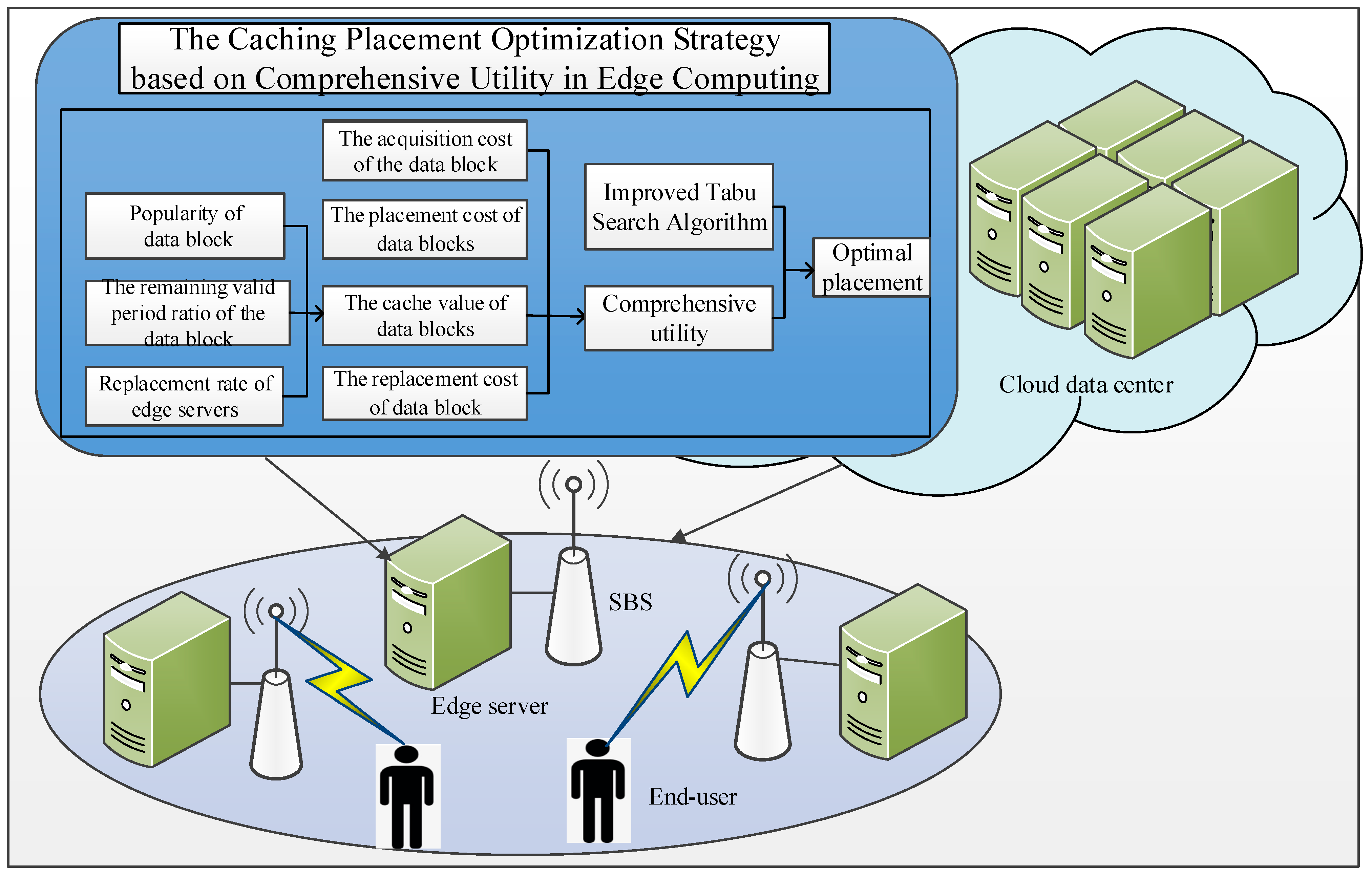

- A cache value model of data blocks is built by quantifying placement factors including data block popularity, remaining validity ratio of data blocks, and server replacement rate; a comprehensive-utility-based cache placement model is constructed based on data block cache value, data block acquisition overhead, and data block placement cost and replacement cost.

- To avoid becoming trapped in a local optimum, a disciplinary strategy is introduced into tabu search, and a comprehensive-utility-based cache placement optimization policy is proposed for the edge computing environment; in this approach, an improved tabu search algorithm optimizes the placement of cached data blocks.

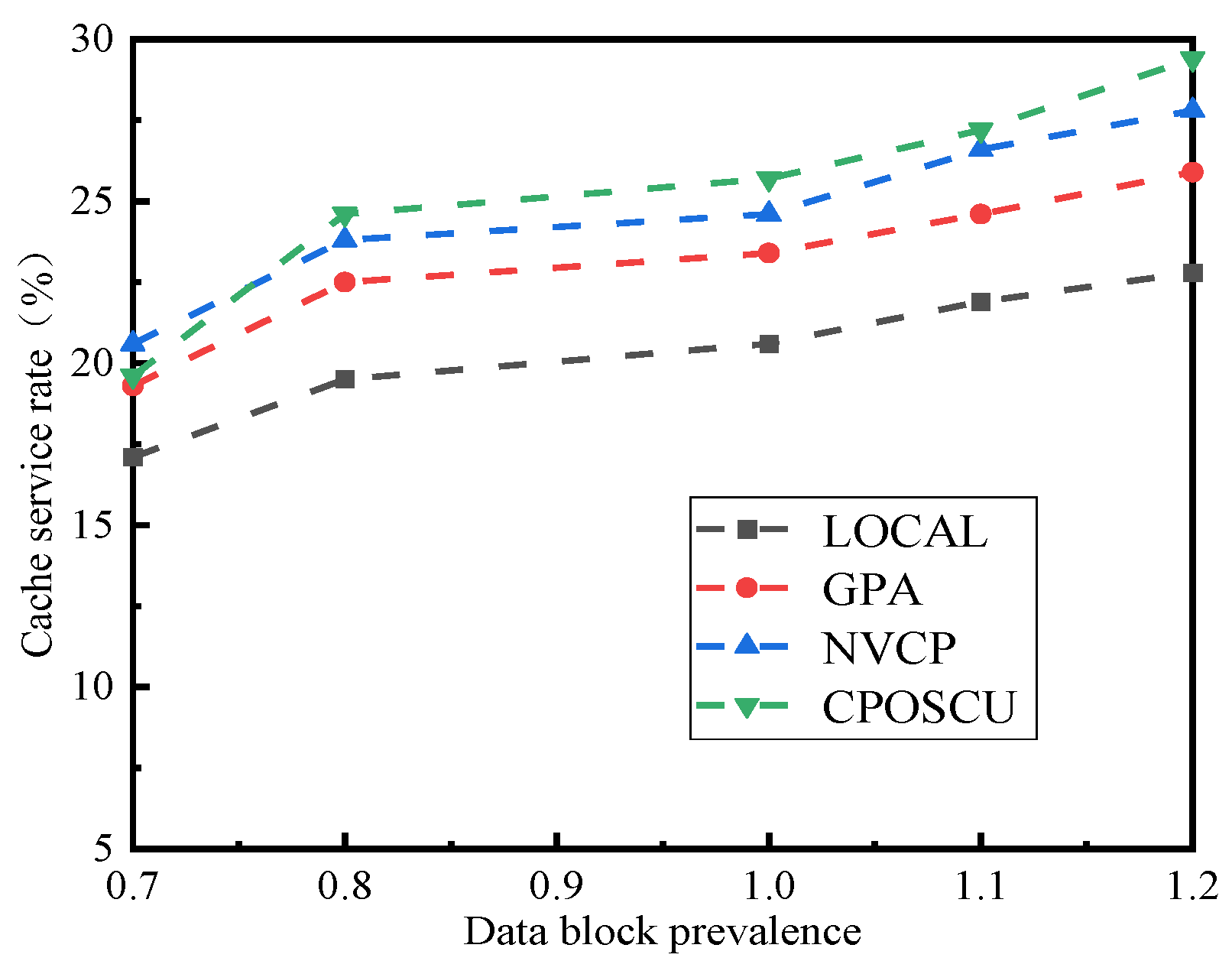

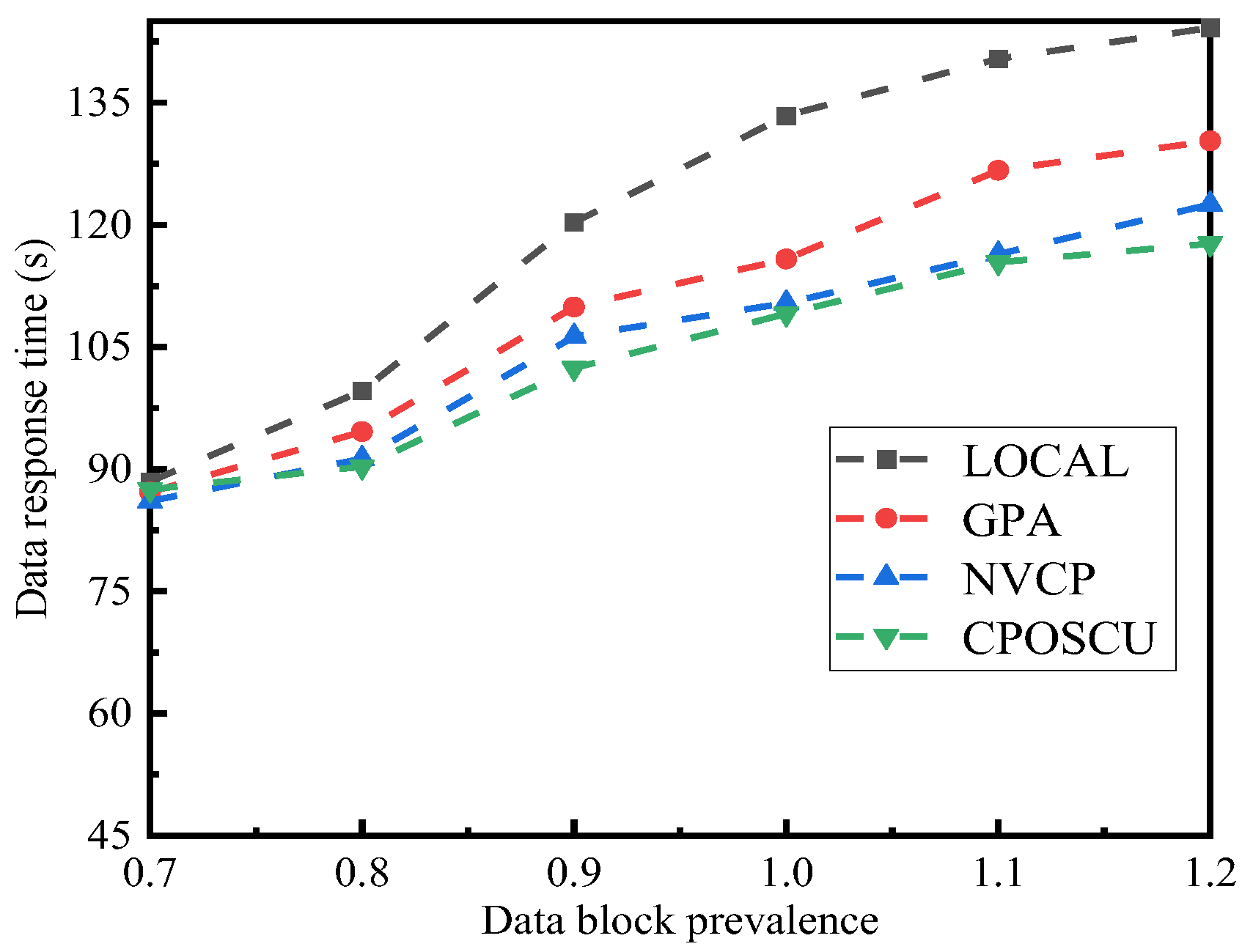

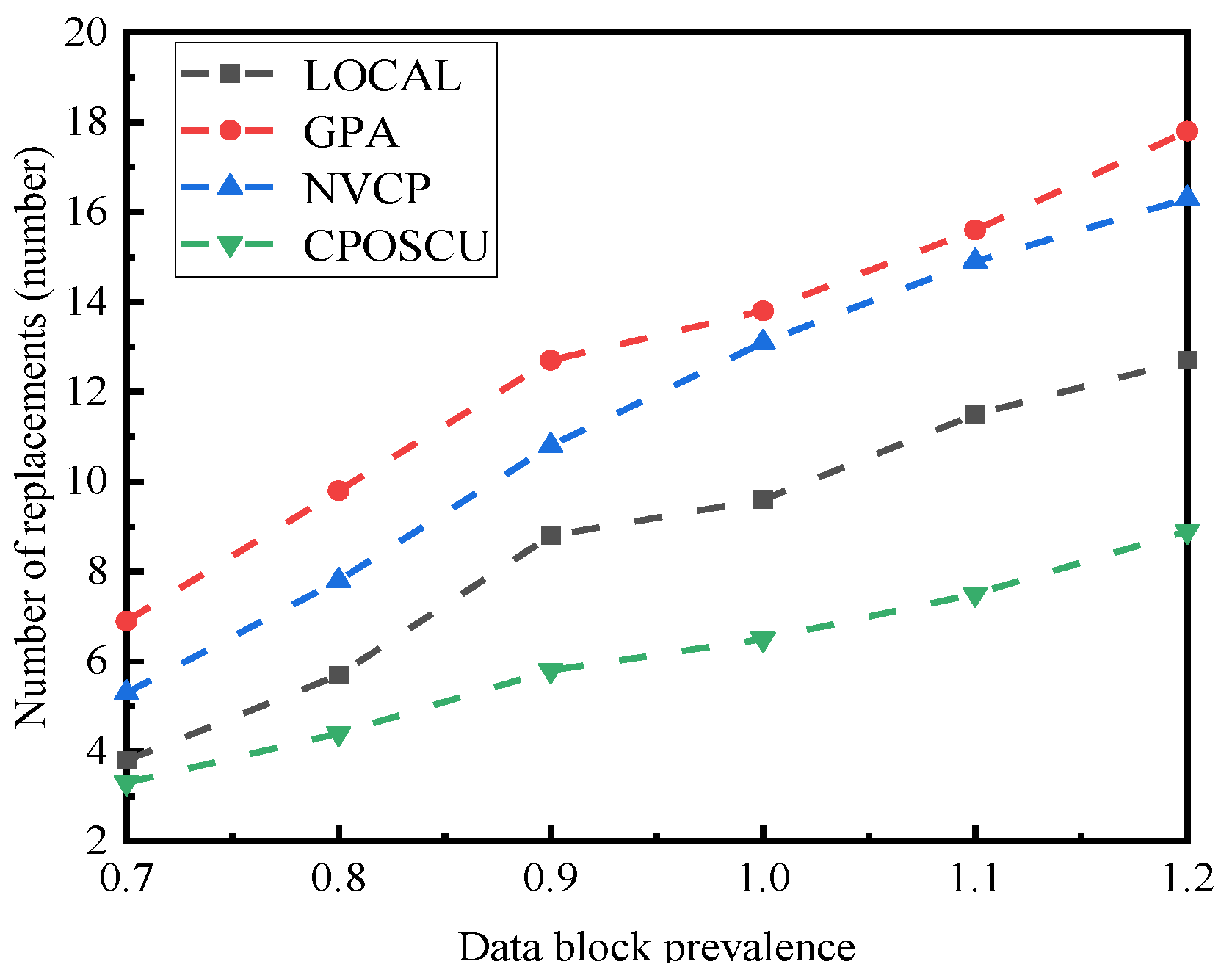

- The performance of the proposed CPOSCU algorithm is verified on three metrics (data response time, cache service rate, and number of replacements) by comparing it with the LOCAL, GPA, and NVCP algorithms.

2. Related Work

3. The Comprehensive-Utility-Based Cache Placement Model in the Edge Computing Environment

3.1. The Cache Value of the Data Block

3.1.1. The Popularity of the Data Block

3.1.2. The Percentage of Remaining Validity of Data Blocks

3.1.3. Edge Server Replacement Rate

3.1.4. The Cache Value of the Data Block

3.2. The Cache Placement Overhead for Data Blocks

3.2.1. The Acquisition Cost of Data Blocks

3.2.2. The Placement Cost of Data Blocks

3.2.3. The Replacement Cost of Data Blocks

3.2.4. Comprehensive Utility

3.3. The Comprehensive-Utility-Based Caching Placement Model

4. The Cache Placement Optimization Algorithm Based on Improved Tabu Search in Edge Computing

4.1. The Cache Placement Strategy Based on the Replacement Rate to Find the Initial Solution of the Placement

- The set of data blocks for caching is and the collection of edge servers is .

- The data block value is calculated and then sequenced, ; the server replacement rate is calculated and arranged in the composition .

- Each data block is accessed sequentially, starting with the data block where no server is placed, and representing this set as: .

- The span of a data block is determined by considering its current popularity and its remaining validity ratio, which is expressed as follows:

- The span of the data block obtained in step 4 is used to calculate the server replacement rate for that data block placement, which can be shown as:

- The resulting server replacement rate is compared to the replacement rate set to place the data blocks into the appropriate servers.
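The steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the span and target-rate expressions are hypothetical stand-ins for Formulas (23) and (24), which are not reproduced in this text, and the `Block`, `Server`, `block_span`, and `initial_placement` names, the slot-based `capacity`, and the "closest replacement rate" matching rule are all introduced here for illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class Block:
    name: str
    popularity: float          # assumed normalised to [0, 1]
    remaining_validity: float  # assumed fraction of lifetime left, in [0, 1]


@dataclass
class Server:
    name: str
    replacement_rate: float    # assumed fraction of cached blocks replaced per period
    capacity: int              # number of block slots (assumption)
    blocks: list = field(default_factory=list)


def block_span(block):
    # Hypothetical stand-in for Formula (23): popular, long-lived blocks get a larger span.
    return block.popularity * block.remaining_validity


def initial_placement(blocks, servers):
    """Greedy initial solution: high-span blocks go to servers that replace rarely."""
    placement = {}
    for block in sorted(blocks, key=block_span, reverse=True):
        # Hypothetical stand-in for Formula (24): replacement rate suited to this block.
        target_rate = 1.0 - block_span(block)
        candidates = [s for s in servers if len(s.blocks) < s.capacity]
        if not candidates:
            break  # every server is full; remaining blocks stay unplaced
        # Place the block on the free server whose replacement rate is closest to the target.
        best = min(candidates, key=lambda s: abs(s.replacement_rate - target_rate))
        best.blocks.append(block.name)
        placement[block.name] = best.name
    return placement
```

Under these assumptions, a popular block with most of its validity period remaining is matched to a server with a low replacement rate, so it is less likely to be replaced soon after placement.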

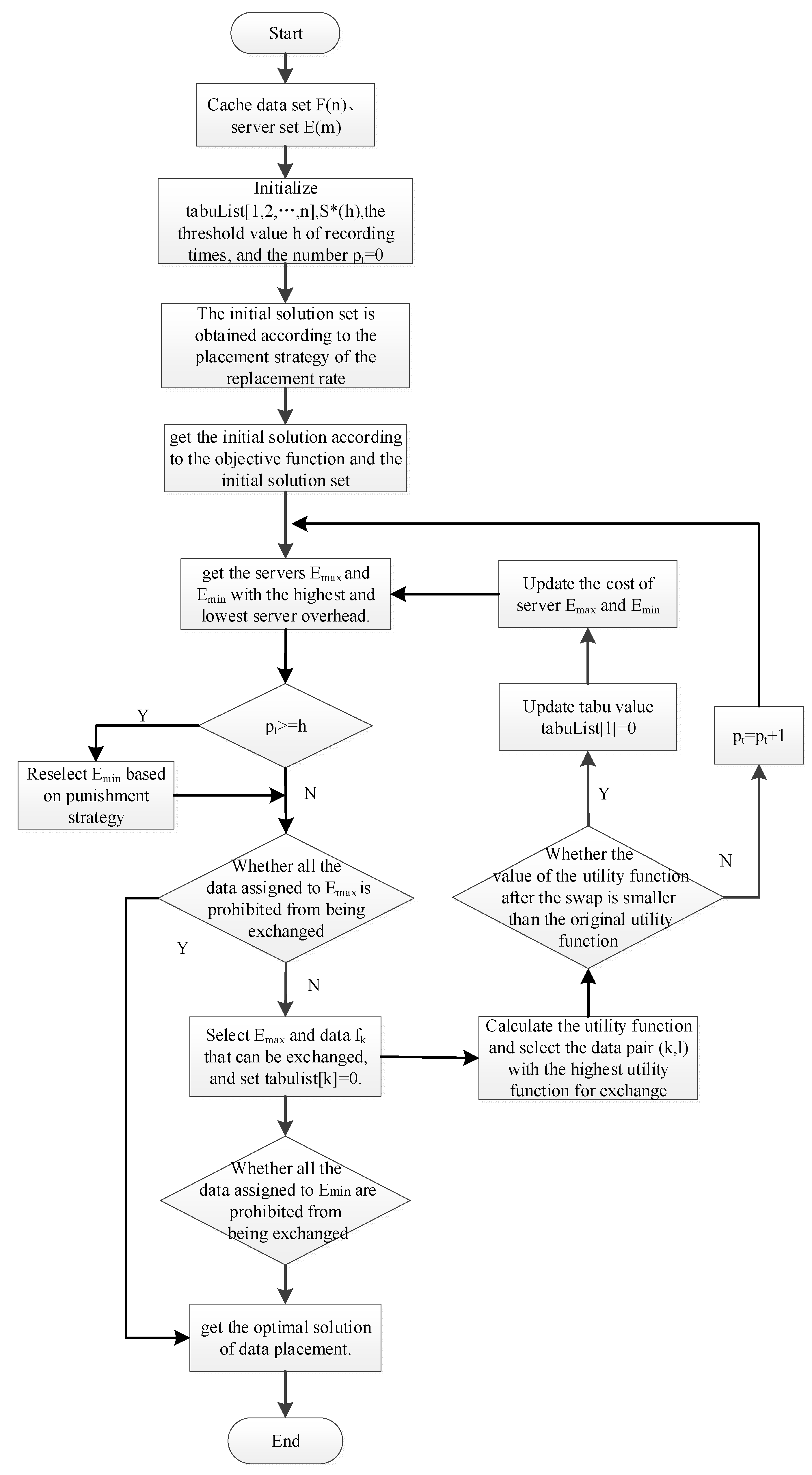

4.2. Implementation of Cache Placement Algorithm Based on Improved Tabu Search

4.2.1. A Disciplinary Strategy Is Introduced

4.2.2. The Cache Placement Algorithm Based on Improved Tabu Search

- Initialization setting.

- Server selection.

- Data block swapping.

4.3. The Flow Chart of the Cache Placement Algorithm Based on Improved Tabu Search

4.4. The Pseudo-Code for the Cache Placement Algorithm Based on the Improved Tabu Search

| Algorithm 1: The cache placement optimization algorithm based on improved tabu search |

| Input: the set of data blocks to be cached ; the set of servers . Output: the optimal solution for cache data placement; |

| 1. Initialization: set ; ; |

| 2. for each do |

| 3. Calculate the popularity of the data block |

| 4. Calculate the remaining validity ratio of each data block |

| 5. the data block value is saved in // |

| 6. end for each |

| 7. for each do |

| 8. Calculate the replacement rate for each server |

| 9. keep the value to the set |

| 10. end for each |

| 11. for each do |

| 12. Calculate the span of the data block // According to Formula (23) |

| 13. Calculate the replacement rate // According to Formula (24) |

| 14. for each do |

| 15. if |

| 16. keep in the solution set . |

| 17. end for each |

| 18. end for each |

| 19. Calculate the value of the comprehensive utility // According to Formula (19) |

| 20. Calculate and // According to Formulas (20) and (21) |

| 21. while the data blocks in and are not forbidden do |

| 22. Calculate and ; |

| 23. if then |

| 24. Using the disciplinary strategy choose |

| 25. end if |

| 26. find and assigned to ; |

| 27. set ; |

| 28. for to and , set do |

| 29. if assigns to and then |

| 30. Calculate and ; |

| 31. if and then |

| 32. set ; |

| 33. end if |

| 34. end if |

| 35. end for |

| 36. if then // Calculate according to Formula (28) |

| 37. set ; |

| 38. |

| 39. |

| 40. else |

| 41. |

| 42. end if |

| 43. end while |

| 44. get the optimal solution . |

- The time complexity of obtaining the initial solution using the replacement-rate-based cache placement strategy is .

- The time complexity of optimizing the initial solution using the tabu list and the swap strategy based on the improved tabu search is .
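The optimization phase of Algorithm 1 can be illustrated with a compact tabu search over swap moves. This is a hedged sketch rather than the authors' algorithm: the `utility[b][s]` matrix is a hypothetical precomputed comprehensive utility of placing block `b` on server `s`, the disciplinary strategy is approximated here by a frequency-based penalty on repeatedly used swaps, and the names `total_utility`, `tabu_search`, `tenure`, and `penalty` are assumptions made for this example.

```python
import itertools
from collections import deque


def total_utility(assign, utility):
    """Sum of per-block utilities; assign[b] is the server index holding block b."""
    return sum(utility[b][s] for b, s in enumerate(assign))


def tabu_search(assign, utility, iterations=50, tenure=3, penalty=0.1):
    current = list(assign)
    best, best_score = list(current), total_utility(current, utility)
    tabu = deque(maxlen=tenure)  # recently swapped block pairs
    freq = {}                    # how often each pair has been swapped

    for _ in range(iterations):
        chosen = None
        for a, b in itertools.combinations(range(len(current)), 2):
            if current[a] == current[b]:
                continue  # swapping blocks on the same server changes nothing
            cand = list(current)
            cand[a], cand[b] = cand[b], cand[a]
            score = total_utility(cand, utility)
            # Aspiration: a tabu move is allowed only if it beats the best known solution.
            if (a, b) in tabu and score <= best_score:
                continue
            # Penalise frequently used swaps so the search leaves well-explored regions.
            ranked = score - penalty * freq.get((a, b), 0)
            if chosen is None or ranked > chosen[0]:
                chosen = (ranked, score, (a, b), cand)
        if chosen is None:
            break  # every remaining move is tabu, or no swap exists
        _, score, move, current = chosen
        tabu.append(move)
        freq[move] = freq.get(move, 0) + 1
        if score > best_score:
            best, best_score = list(current), score
    return best, best_score
```

Because each move swaps the servers of two blocks, the number of blocks per server never changes, so any capacity limits satisfied by the initial solution remain satisfied throughout the search.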

5. Experiment and Analysis

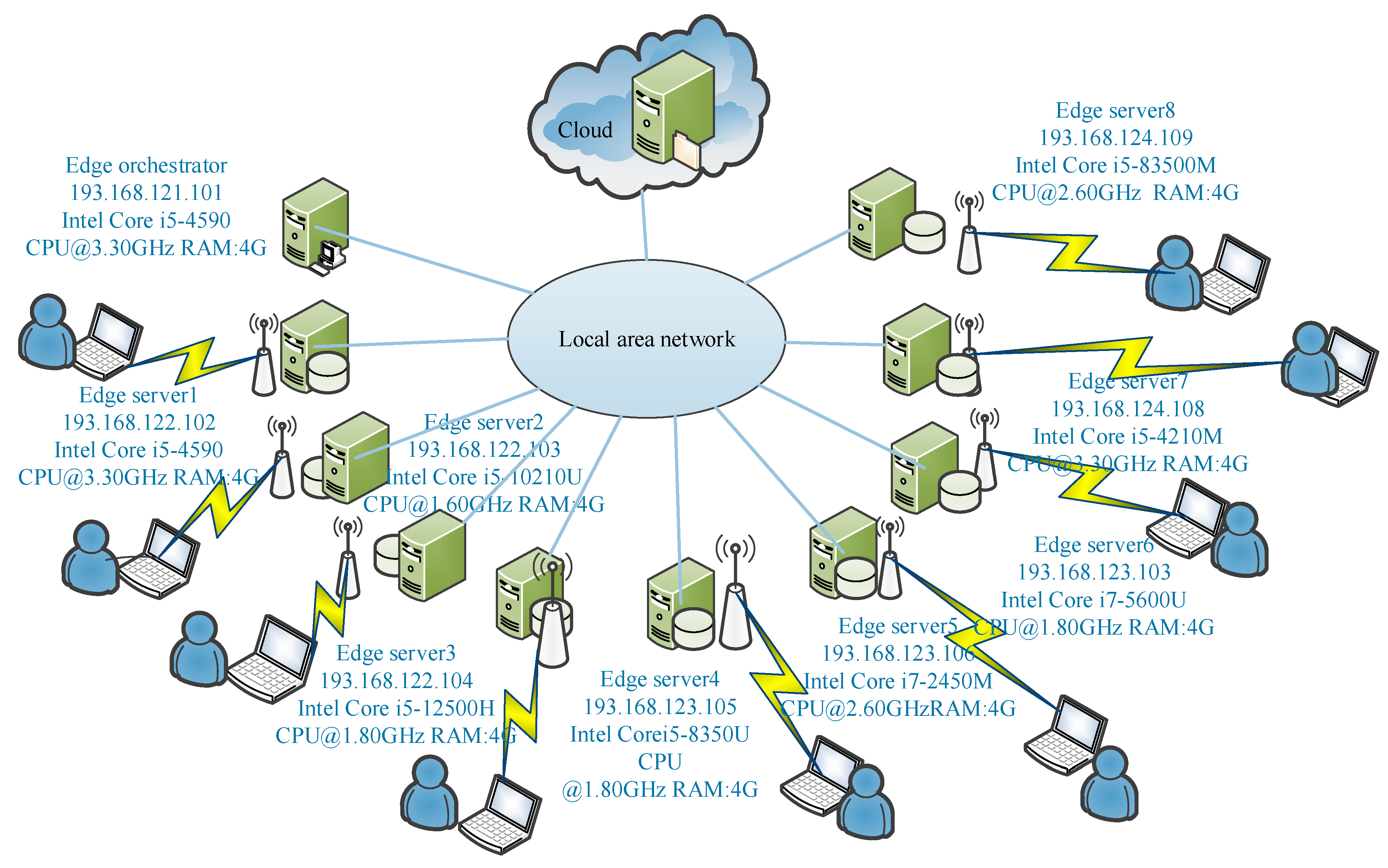

5.1. Experimental Environment

5.1.1. Experimental Equipment

5.1.2. Evaluation Metrics

- Data response time: this metric indicates how the cache placement algorithm affects the user’s data retrieval time; a shorter data response time indicates a more effective algorithm.

- Cache service rate: this index reflects the quality of the cache placement algorithm. A higher cache service rate indicates a more successful algorithm. The cache service rate calculation is shown in Equation (29).


- The replacement count: this indicator reflects the efficiency of the cache placement strategy in terms of the quantity of data blocks cached in the server. The formula is given in Equation (30).
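As a rough illustration of how the last two metrics might be computed from experiment logs, the sketch below assumes that the cache service rate is the fraction of requests served from the edge cache and that the replacement count is the number of blocks removed between consecutive cache snapshots. These definitions are assumptions made for this example; Equations (29) and (30) are not reproduced in this text and may differ. The function names are hypothetical.

```python
def cache_service_rate(served_from_cache, total_requests):
    """Fraction of requests answered by the edge cache (assumed definition)."""
    if total_requests == 0:
        return 0.0
    return served_from_cache / total_requests


def replacement_count(snapshots):
    """Count blocks removed between consecutive cache snapshots (sets of block ids)."""
    return sum(len(before - after) for before, after in zip(snapshots, snapshots[1:]))
```

For example, a run in which 30 of 40 requests are served from the cache gives a service rate of 0.75 under this definition.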

5.2. Experimental Data and Test Cases

5.2.1. Experimental Data

5.2.2. Test Cases

5.3. Experimental Results and Analysis

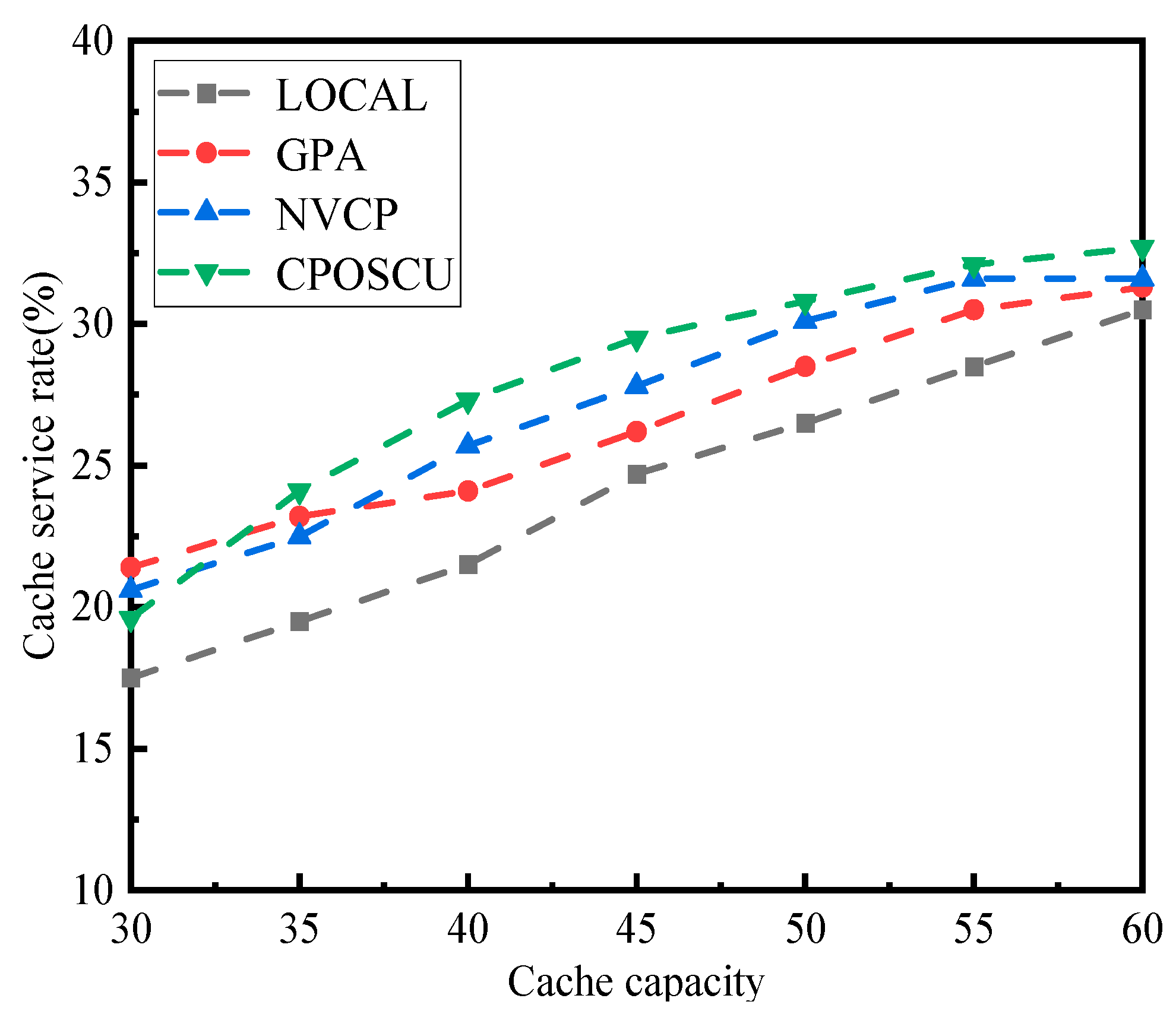

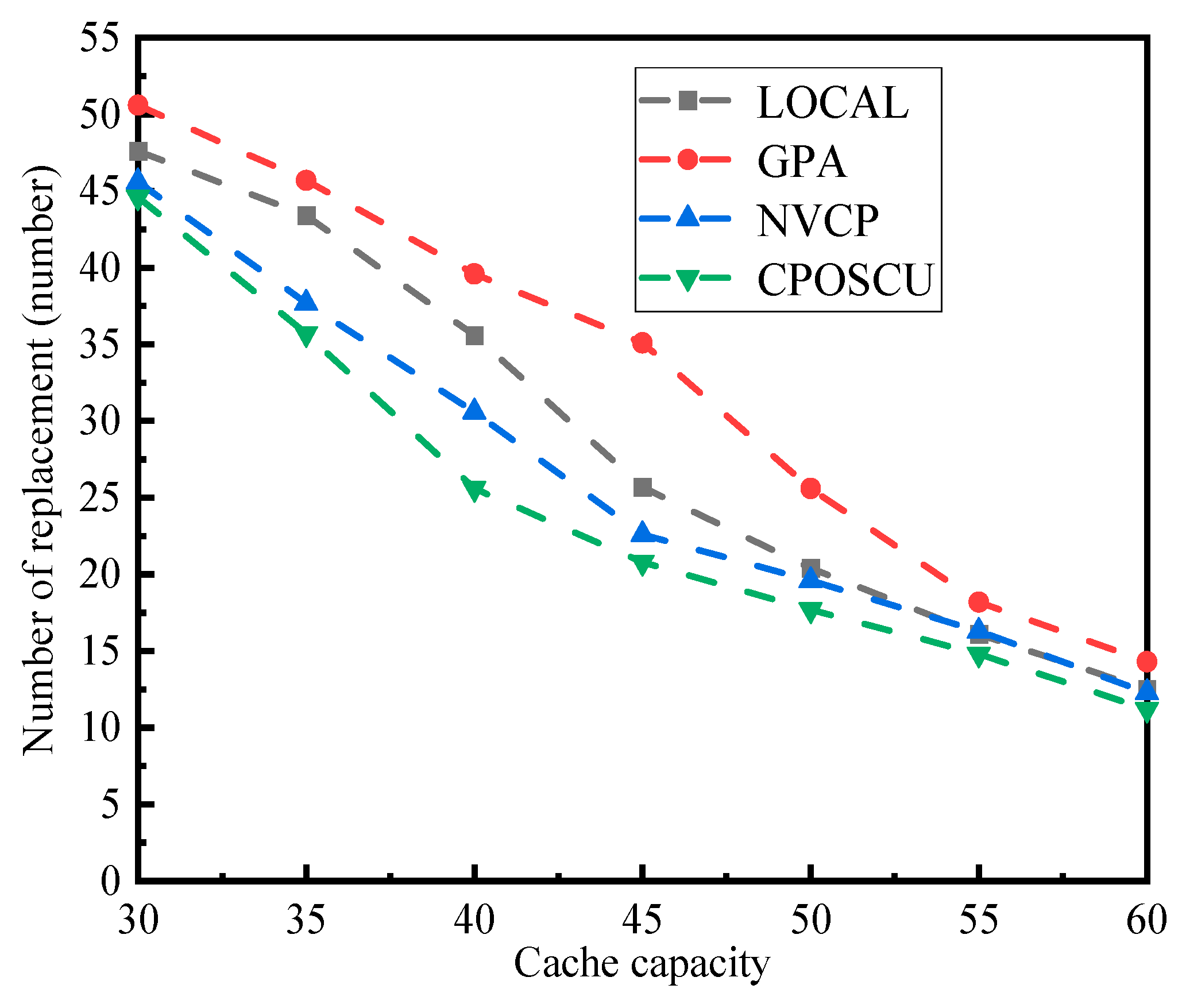

- The impact of different cache sizes on the algorithmic performance

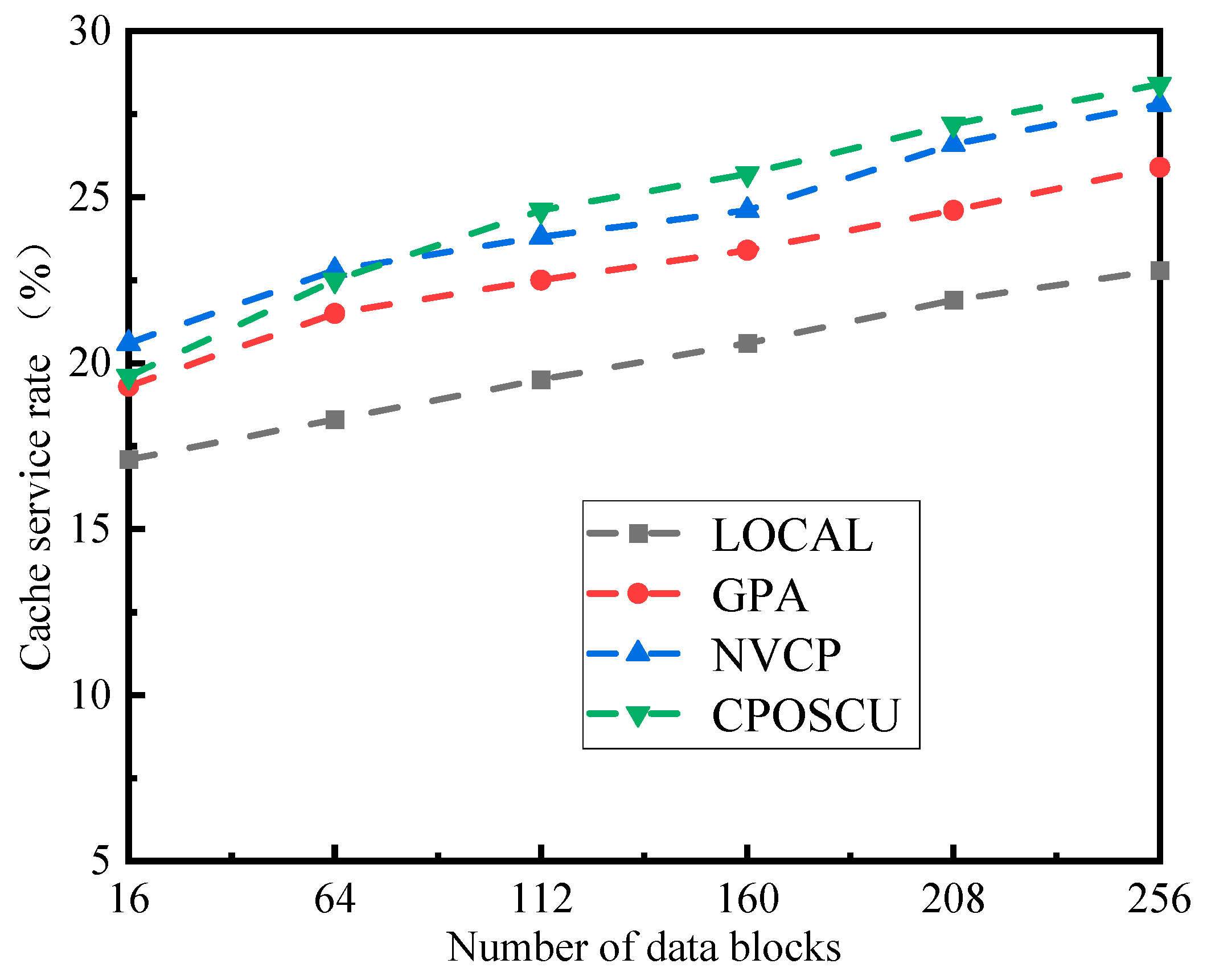

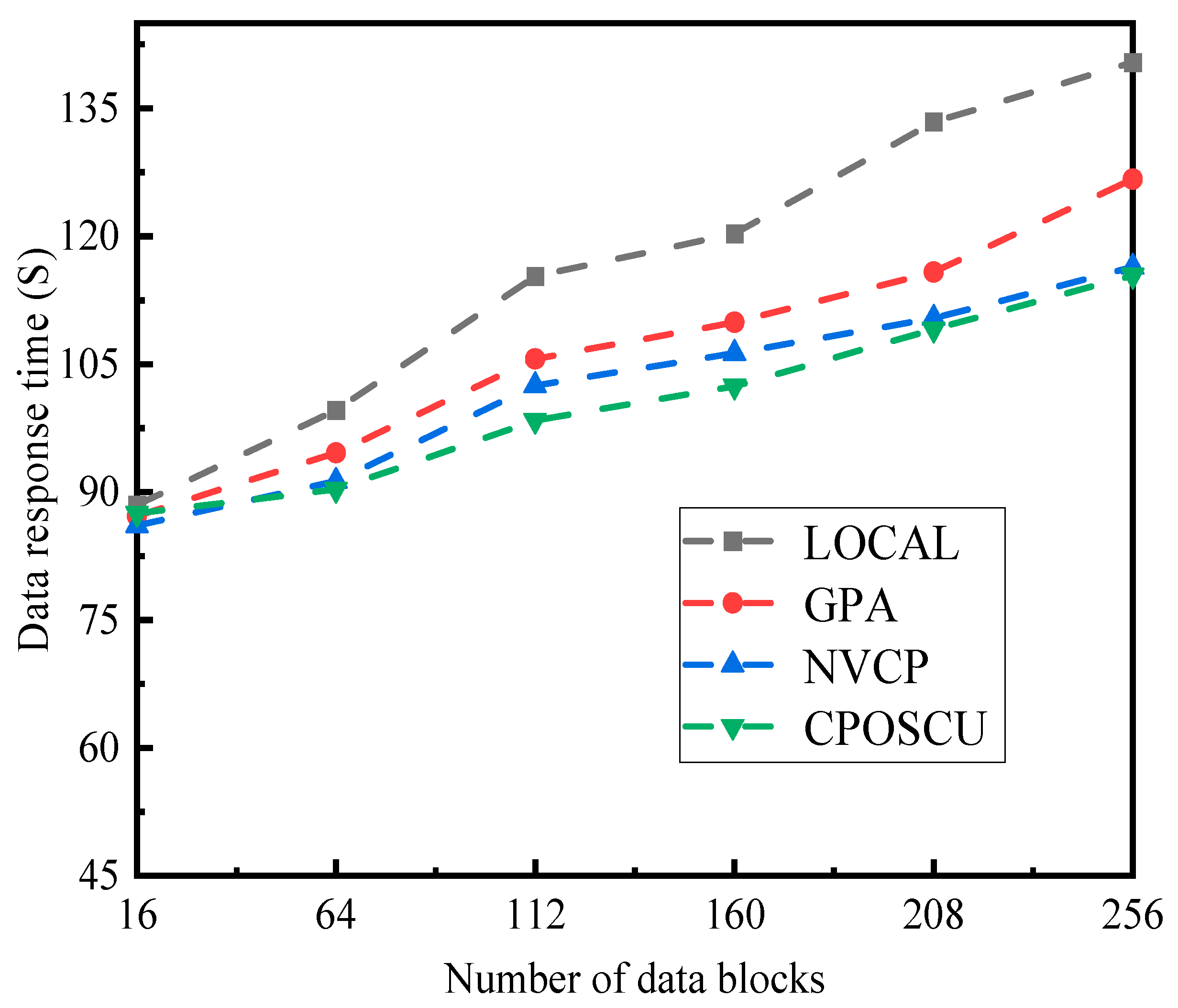

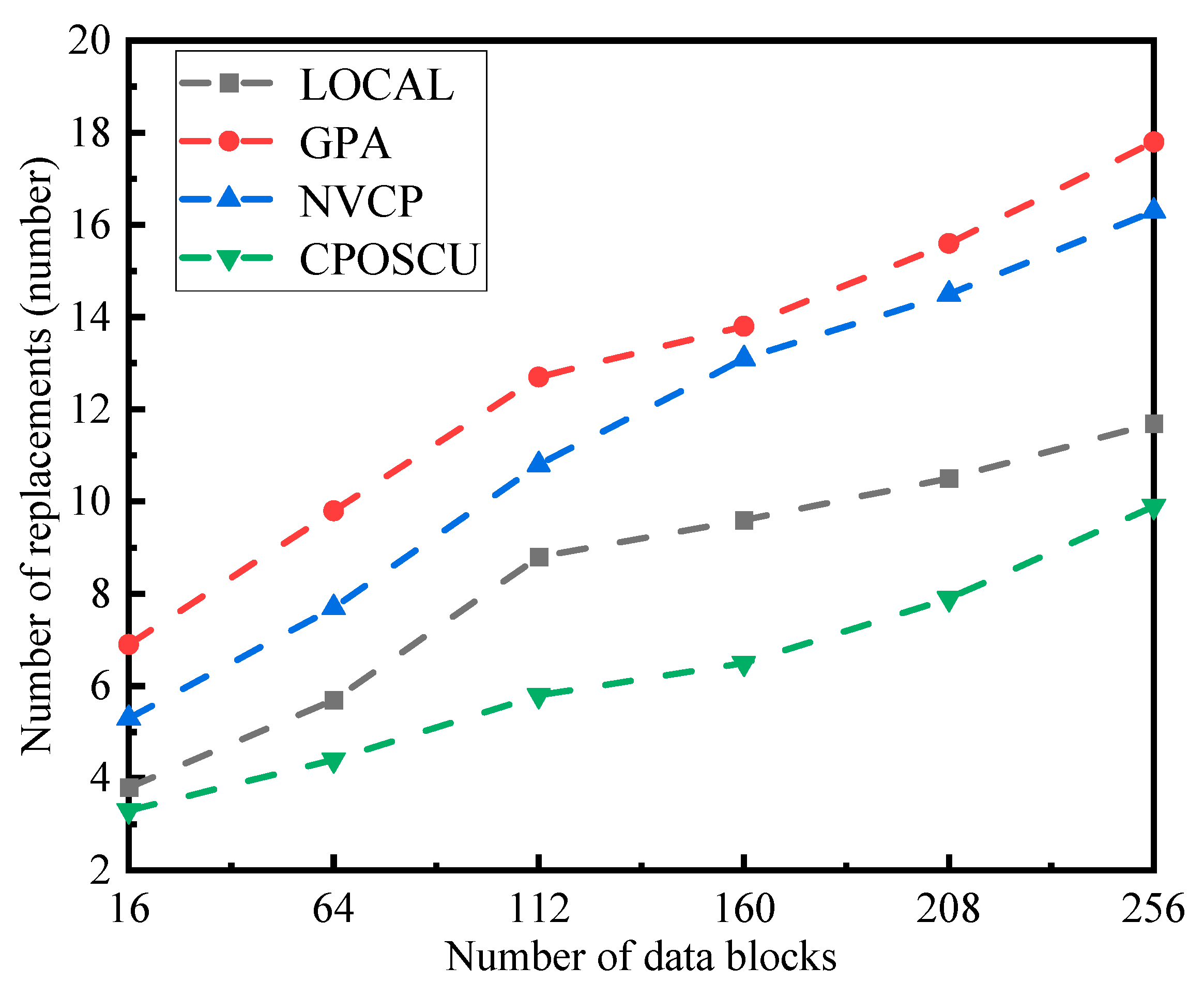

- The impact of different numbers of required data blocks on the algorithmic performance

- The impact of different data block popularities on the algorithmic performance

5.4. Summary of Experiments

- Cache capacity affects the cache placement algorithms: as the cache capacity increases, the cache hit rate improves, while the data response time and the number of replacements decrease.

- The number of data blocks affects the algorithms: as the number of data blocks grows, the cache hit rate, the data response time, and the number of replacements all increase.

- Data popularity affects the algorithms: as the popularity of data blocks increases, the cache hit rate improves, while the data response time and the number of replacements decrease.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Statista. Forecast Number of 5G Mobile Subscriptions Worldwide 2019–2026. 2022. Available online: https://www.statista.com/statistics/760275/5g-mobile-subscriptions-worldwide/ (accessed on 3 October 2022).

- Chen, Y.; Zhang, N.; Zhang, Y. Energy Efficient Dynamic Offloading in Mobile Edge Computing for Internet of Things. IEEE Trans. Cloud Comput. 2021, 8, 2163–2176.

- Wang, S.; Zhao, Y.; Xu, J. Edge server placement in mobile edge computing. J. Parallel Distrib. Comput. 2018, 127, 160–168.

- Pozo, E.; Germine, L.T.; Scheuer, L.; Strong, R.W. Evaluating the Reliability and Validity of the Famous Faces Doppelgangers Test, a Novel Measure of Familiar Face Recognition. Assessment 2022, 30, 1200–1210.

- Wang, A.; Wang, P.; Miao, X.; Li, X.; Ye, N.; Liu, Y. A review on non-terrestrial wireless technologies for Smart City Internet of Things. Int. J. Distrib. Sens. Netw. 2020, 16, 1–17.

- Khan, M.A. Intelligent environment enabling autonomous driving. IEEE Access 2021, 9, 32997–33017.

- Qonita, M.; Giyarsih, S.R. Smart city assessment using the Boyd Cohen smart city wheel in Salatiga, Indonesia. GeoJournal 2022, 88, 479–492.

- Chen, Y.; Zhang, L.; Wei, M. How does smart healthcare service affect resident health in the digital age? Empirical evidence from 105 cities of China. Front. Public Health 2022, 9, 1–10.

- Yao, J.; Han, T.; Ansari, N. On mobile edge caching. IEEE Commun. Surv. Tutor. 2019, 21, 2525–2553.

- Song, J.; Choi, W. Mobility-aware content placement for device-to-device caching systems. IEEE Trans. Wirel. Commun. 2019, 18, 3658–3668.

- Althamary, I.; Huang, C.W.; Lin, P.; Yang, S.-R.; Cheng, C.-W. Popularity-based cache placement for fog networks. In Proceedings of the 2018 14th International Wireless Communications & Mobile Computing Conference (IWCMC), Limassol, Cyprus, 25–29 June 2018; IEEE: New York, NY, USA, 2018; pp. 800–804.

- Somesula, M.K.; Rout, R.R.; Somayajulu, D.V.L.N. Contact duration-aware cooperative cache placement using genetic algorithm for mobile edge networks. Comput. Netw. 2021, 193, 1–13.

- Chen, M.; Hao, Y.; Hu, L.; Huang, K.; Lau, V.K.N. Green and mobility-aware caching in 5G networks. IEEE Trans. Wirel. Commun. 2017, 16, 8347–8361.

- Wu, H.T.; Cho, H.H.; Wang, S.J.; Tseng, F.H. Intelligent data cache based on content popularity and user location for Content Centric Networks. Hum.-Centric Comput. Inf. Sci. 2019, 9, 1–16.

- Banerjee, B.; Kulkarni, A.; Seetharam, A. Greedy caching: An optimized content placement strategy for information-centric networks. Comput. Netw. 2018, 140, 78–91.

- Chen, W.; Han, L. Time-efficient task caching strategy for multi-server mobile edge cloud computing. In Proceedings of the 2019 IEEE 21st International Conference on High Performance Computing and Communications, IEEE 17th International Conference on Smart City and IEEE 5th International Conference on Data Science and Systems (HPCC/SmartCity/DSS), Zhangjiajie, China, 10–12 August 2019; IEEE: New York, NY, USA, 2019; pp. 1429–1436.

- Tang, Y.; Guo, K.; Ma, J.; Shen, Y.; Chi, T. A smart caching mechanism for mobile multimedia in information centric networking with edge computing. Future Gener. Comput. Syst. 2019, 91, 590–600.

- Chen, J.; Wu, H.; Yang, P.; Lyu, F.; Shen, X. Cooperative edge caching with location-based and popular contents for vehicular networks. IEEE Trans. Veh. Technol. 2020, 69, 10291–10305.

- Chunlin, L.; Zhang, J. Dynamic cooperative caching strategy for delay-sensitive applications in edge computing environment. J. Supercomput. 2020, 76, 7594–7618.

- Baccour, E.; Erbad, A.; Mohamed, A.; Guizani, M.; Hamdi, M. Collaborative hierarchical caching and transcoding in edge network with CE-D2D communication. J. Netw. Comput. Appl. 2020, 172, 1–21.

- Wang, S.; Zhang, X.; Yang, K.; Wang, L.; Wang, W. Distributed edge caching scheme considering the tradeoff between the diversity and redundancy of cached content. In Proceedings of the 2015 IEEE/CIC International Conference on Communications in China (ICCC), Shenzhen, China, 2–4 November 2015; IEEE: New York, NY, USA, 2015; pp. 1–5.

- Li, Q.; Zhang, Y.; Li, Y.; Xiao, Y.; Ge, X. Capacity-aware edge caching in fog computing networks. IEEE Trans. Veh. Technol. 2020, 69, 9244–9248.

- Jesien, K.; Lederman, H.; Arancillo, M. Journal word count specifications: A comparison of actual word counts versus submission guidelines. Curr. Med. Res. Opin. 2017, 33, 14–15.

- Xu, Y.; Ci, S.; Li, Y.; Lin, T.; Li, G. Design and evaluation of coordinated in-network caching model for content centric networking. Comput. Netw. 2016, 110, 266–283.

- Gu, J.; Ji, Y.; Duan, W.; Zhang, G. Node Value and Content Popularity-Based Caching Strategy for Massive VANETs. Wirel. Commun. Mob. Comput. 2021, 2, 1–10.

| Optimization Goals | References | Applicable Objects | Key Research Points |
|---|---|---|---|
| Aiming for low latency and high energy efficiency | [10,11,12,13] | Both latency and energy consumption directly impact QoS applications | A caching strategy based on an improved greedy algorithm; A prevalence-based cache placement strategy; A time-aware collaborative cache placement strategy based on a genetic algorithm; A mobile-aware caching strategy based on content placement. |
| Aiming for low latency and high hit rate | [14,15,16,17] | Both latency and hit rate directly affect QoS applications | An intelligent caching strategy based on the location of user requests; A task caching strategy based on multiple users and multiple servers; A content placement strategy based on a greedy algorithm; An intelligent caching strategy based on location prediction. |
| Aiming to maximize revenue | [18,19,20,21,22] | Applications that minimize CP or network costs | A collaborative caching strategy based on content location and popularity; A dynamic caching strategy based on latency-sensitive applications; A collaborative strategy for converging edge networks and device-to-device clusters; A caching strategy that considers the trade-off between cache diversity and redundancy; A caching strategy based on capacity awareness. |

| Parameters | Definition |
|---|---|
| i | Data block i |
| j | Cache server j |
| | Whether a data block i is placed on the edge server j |
| | Access frequency of data block i |
| | The average access time interval of the data block i |
| | The recency of the data block i |
| | The popularity of the data block i |
| | The remaining validity ratio of the data block i |
| | The replacement rate of the edge server j |
| | The hit rate of the cached data block i on the server j |
| | The value of the data block i cached on the j-th server |
| | The replacement cost incurred to cache data block i on the edge server j |
| | The network bandwidth between the edge server j and the physical location j′ |
| | The resource usage load caused by placing a data block on the edge server j |
| | The bandwidth cost resulting from data transmission from the edge server to users |
| | The rate at which users request data blocks from the edge server j |
| | The total number of times a user accesses the data block i from the edge server j |
| | The acquisition cost of the data block i to be cached from the physical location j′ to the edge server j |
| | The resource usage load for placing the set of data blocks to be cached on the edge server |
| | The placement time cost of placing the set of data blocks to be cached on the edge server |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Y.; Bin, Y.; Chen, N.; Zhu, S. Caching Placement Optimization Strategy Based on Comprehensive Utility in Edge Computing. Appl. Sci. 2023, 13, 9229. https://doi.org/10.3390/app13169229
