Exploring Multi-Stage GAN with Self-Attention for Speech Enhancement

Abstract

1. Introduction

- Across the commonly used objective evaluation metrics, the proposed ISEGAN-Self-Attention and DSEGAN-Self-Attention achieved better speech enhancement performance than the ISEGAN and DSEGAN baselines, respectively.

- We also demonstrate that, with the self-attention mechanism, the ISEGAN achieves enhancement performance competitive with that of the DSEGAN while using only half of the DSEGAN's model footprint.

- Furthermore, we investigate how applying the self-attention mechanism at different stages of the multiple generators in the ISEGAN and DSEGAN networks affects their enhancement performance.
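
The footprint comparison depends on how the two multi-generator baselines are built. ISEGAN applies the same generator N times with shared weights, so each stage refines the previous stage's output. DSEGAN instead chains N generators with independent weights. The minimal numpy sketch below follows that description to make the parameter counts concrete. A hypothetical one-layer `ToyGenerator` stands in for the SEGAN encoder-decoder. The sketch counts generator parameters only and is not the authors' code.

```python
import numpy as np


class ToyGenerator:
    """Hypothetical stand-in for a SEGAN generator: one dense layer plus tanh."""

    def __init__(self, dim: int, rng: np.random.Generator):
        self.w = rng.standard_normal((dim, dim)) * 0.01
        self.b = np.zeros(dim)

    def __call__(self, x: np.ndarray) -> np.ndarray:
        return np.tanh(x @ self.w + self.b)

    def num_params(self) -> int:
        return self.w.size + self.b.size


def build_chain(n_stages: int, dim: int, shared: bool, seed: int = 0) -> list:
    """N-stage generator chain: shared=True mimics ISEGAN, shared=False mimics DSEGAN."""
    rng = np.random.default_rng(seed)
    if shared:
        g = ToyGenerator(dim, rng)
        return [g] * n_stages  # one set of weights reused at every stage
    return [ToyGenerator(dim, rng) for _ in range(n_stages)]  # independent weights


def enhance(chain: list, noisy: np.ndarray) -> list:
    """Feed each stage's output to the next stage and keep every intermediate estimate."""
    outputs, x = [], noisy
    for g in chain:
        x = g(x)
        outputs.append(x)
    return outputs


def count_params(chain: list) -> int:
    """Count each distinct generator once so shared weights are not double-counted."""
    unique = {id(g): g for g in chain}
    return sum(g.num_params() for g in unique.values())


iseg = build_chain(n_stages=2, dim=64, shared=True)
dseg = build_chain(n_stages=2, dim=64, shared=False)
print(count_params(iseg), count_params(dseg))  # 4160 8320
```

With N = 2, the shared chain holds half the generator parameters of the independent chain. For N stages, the ratio is 1/N. Only generator weights are compared here because the discriminator is needed during training only.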

2. Background

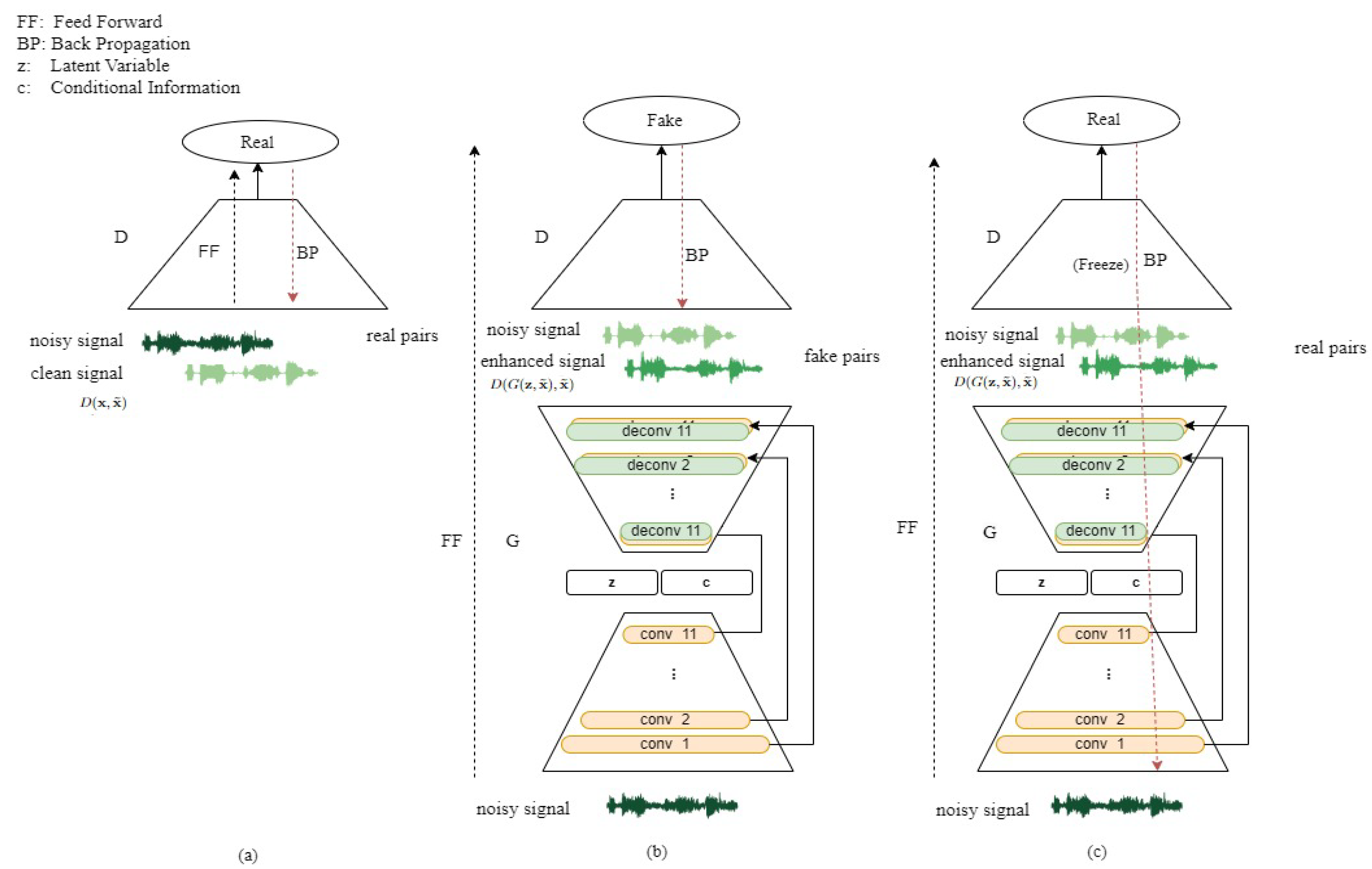

2.1. Conventional SEGAN

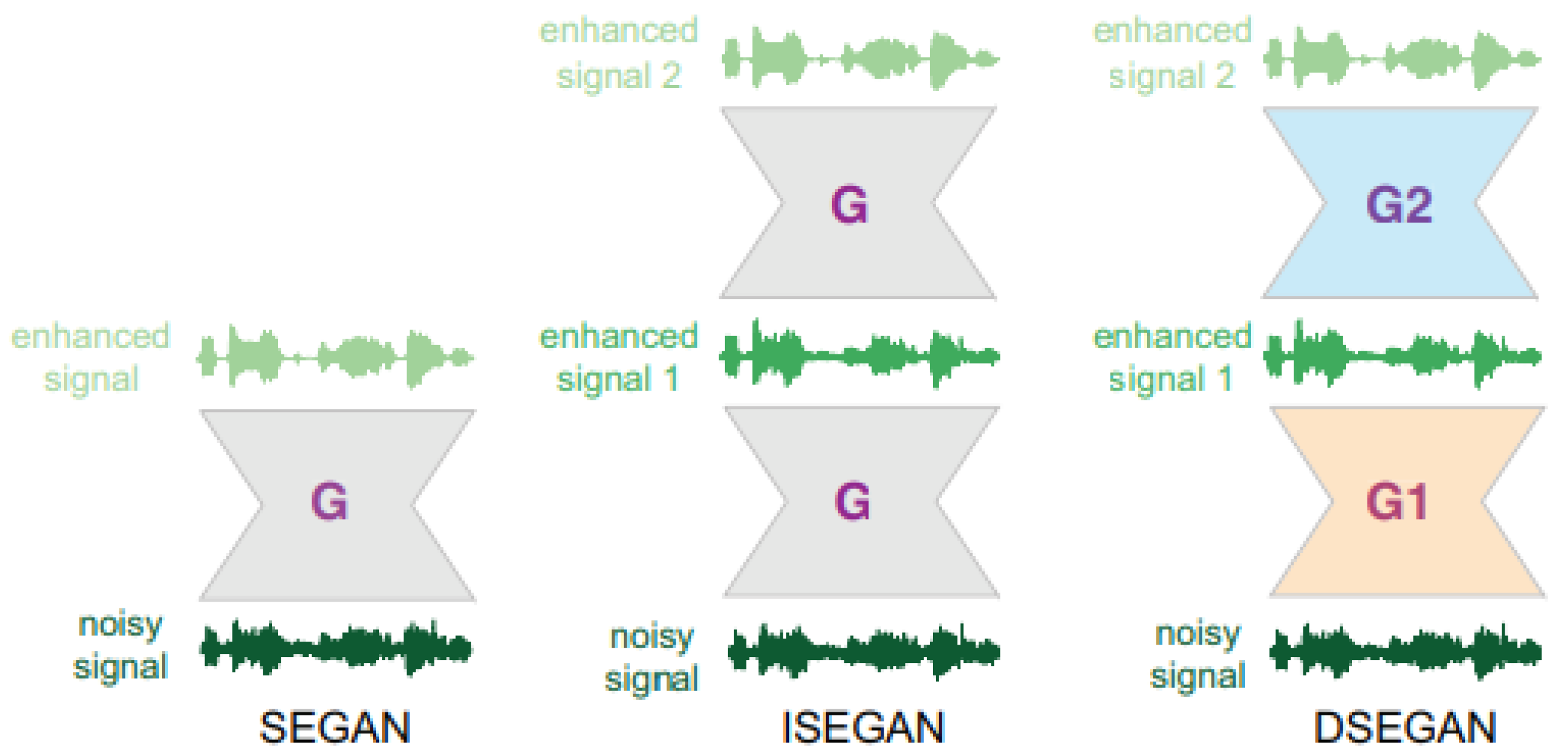

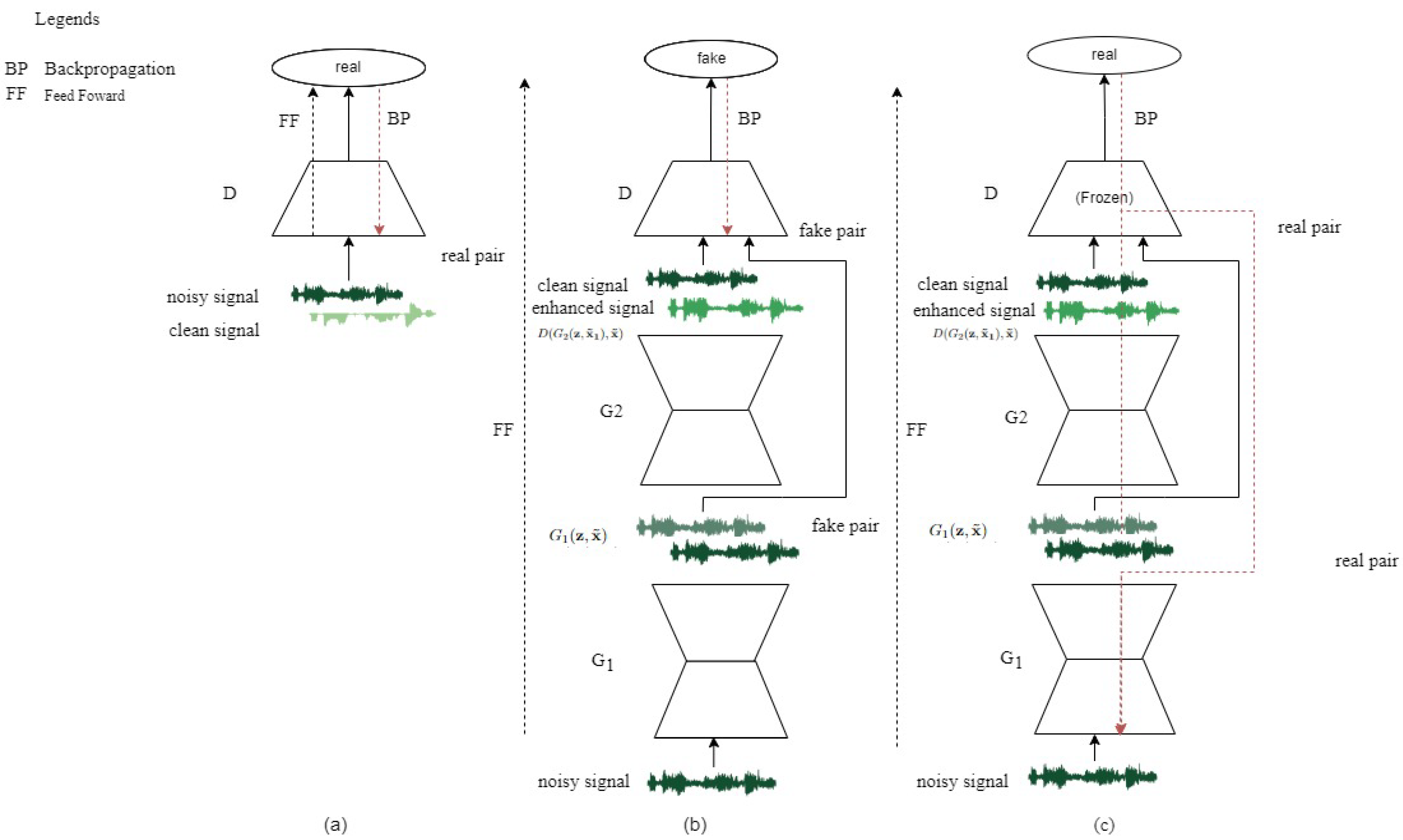

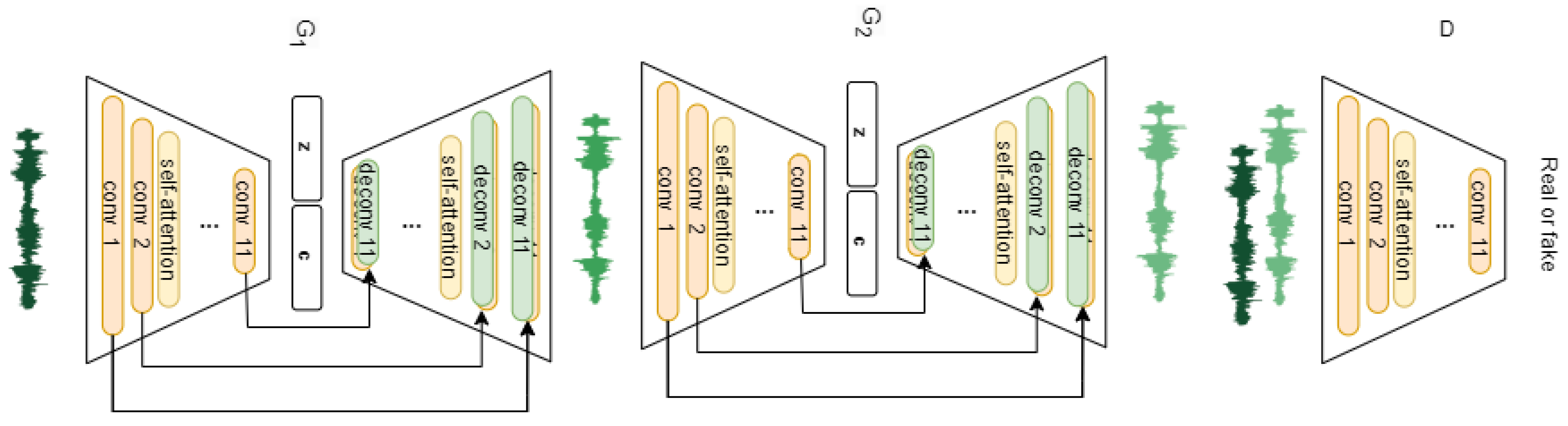
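
Conventional SEGAN, following Pascual et al., uses least-squares GAN objectives. The discriminator D is conditioned on the noisy signal and should score (clean, noisy) pairs as 1 and (enhanced, noisy) pairs as 0. The generator minimizes its adversarial term plus an L1 distance to the clean waveform, weighted by λ = 100 in the original SEGAN. The sketch below evaluates both losses from precomputed discriminator scores. The function names are hypothetical, and taking the L1 term as a per-sample mean is an assumed, common implementation choice.

```python
import numpy as np


def discriminator_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """LSGAN loss for D: scores for (clean, noisy) pairs -> 1, (enhanced, noisy) pairs -> 0."""
    return float(0.5 * np.mean((d_real - 1.0) ** 2) + 0.5 * np.mean(d_fake ** 2))


def generator_loss(d_fake: np.ndarray, enhanced: np.ndarray, clean: np.ndarray,
                   lam: float = 100.0) -> float:
    """LSGAN adversarial term for G plus a lambda-weighted L1 distance to the clean speech."""
    adversarial = 0.5 * np.mean((d_fake - 1.0) ** 2)  # G wants D to score its output as 1
    l1 = np.mean(np.abs(enhanced - clean))           # mean absolute error over samples
    return float(adversarial + lam * l1)
```

The large λ keeps the enhanced waveform close to the clean reference, while the adversarial term pushes it toward outputs that D cannot distinguish from clean speech.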

2.2. SEGAN with Multiple Generators

3. Multi-Generator SEGAN with Self-Attention

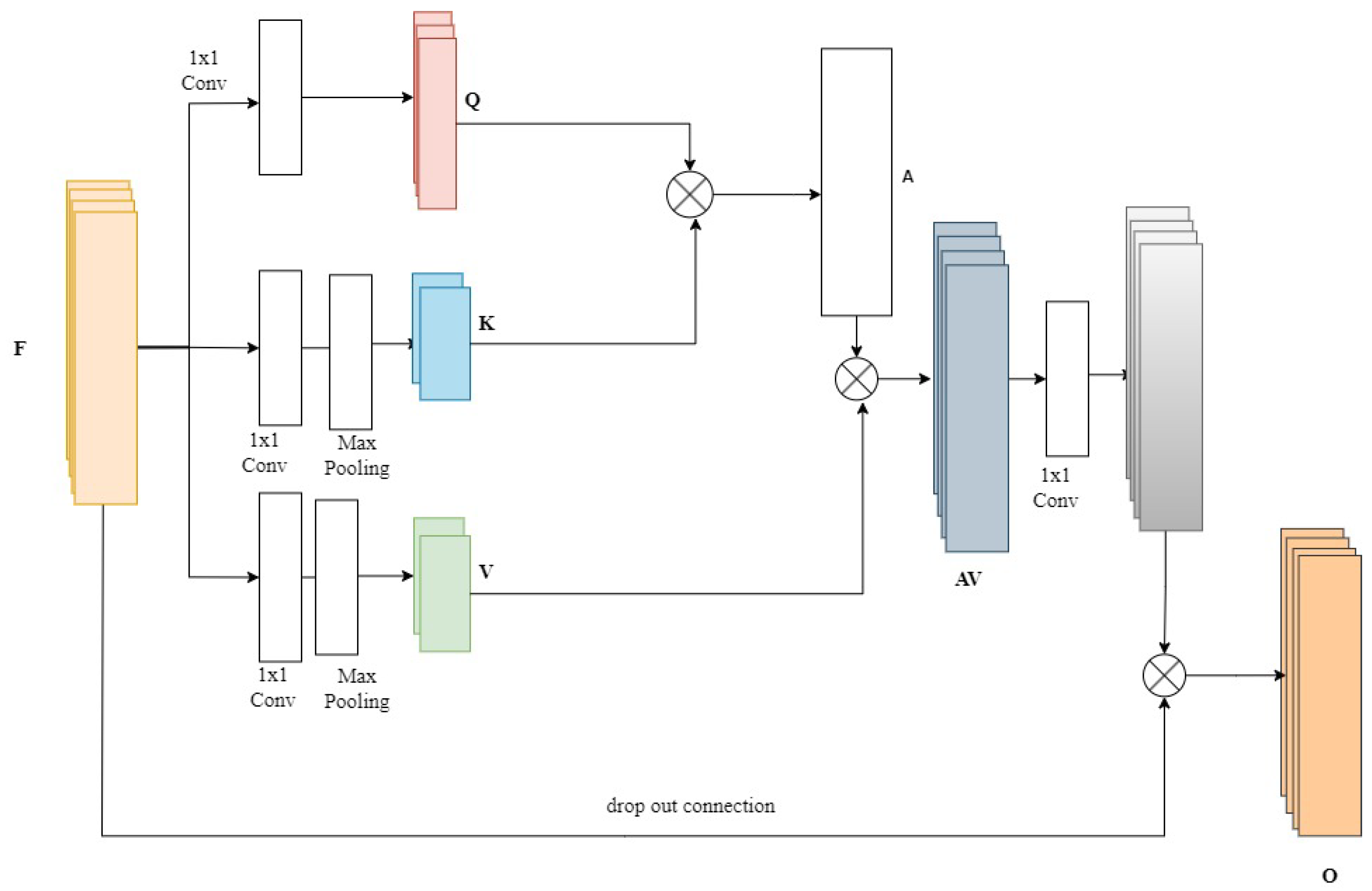

3.1. Self-Attention Block
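
Self-attention blocks in SASEGAN-style models follow the non-local formulation of Zhang et al. (SAGAN):

- 1×1 convolutions map each time step of a convolutional feature map to a query, a key and a value.
- A softmax over query-key dot products gives a T×T attention map.
- The attended values are added back to the input, scaled by a learnable γ initialized to zero.

The numpy sketch below illustrates this mechanism under stated assumptions. It uses a time-major (T, C) feature map and SAGAN's reduction of the query/key width to C/8, with no pooling or output projection. It is not necessarily the exact block used in this paper.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


class SelfAttention1D:
    """SAGAN-style self-attention over a (time, channels) feature map.

    The 1x1 convolutions that produce queries, keys and values are written as
    matrix products applied independently at every time step.
    """

    def __init__(self, channels: int, reduction: int = 8, seed: int = 0):
        rng = np.random.default_rng(seed)
        inner = max(1, channels // reduction)  # reduced query/key width (assumed C/8)
        scale = 1.0 / np.sqrt(channels)
        self.w_query = rng.standard_normal((channels, inner)) * scale
        self.w_key = rng.standard_normal((channels, inner)) * scale
        self.w_value = rng.standard_normal((channels, channels)) * scale
        self.gamma = 0.0  # learnable scale; starting at 0 makes the block an identity map

    def __call__(self, x: np.ndarray):
        q = x @ self.w_query     # (T, C/k)
        k = x @ self.w_key       # (T, C/k)
        v = x @ self.w_value     # (T, C)
        attn = softmax(q @ k.T)  # (T, T); row t weights every time step for output t
        out = attn @ v           # (T, C)
        return self.gamma * out + x, attn  # residual connection
```

Because γ starts at zero, the block initially passes features through unchanged and learns during training how much long-range context to mix in. Any time step can attend to any other, which a stack of local convolutions reaches only through many layers.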

3.2. ISEGAN-Self-Attention and DSEGAN-Self-Attention Networks

3.2.1. Multi-Generator

3.2.2. Discriminator D

4. Experiments

4.1. Baseline and Objective Evaluation

4.2. Dataset
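
SEGAN-family models, including the ISEGAN and DSEGAN baselines, are typically trained on the Voice Bank + DEMAND corpus of Valentini-Botinhao et al. at 16 kHz. The original SEGAN applies a pre-emphasis filter with coefficient 0.95. It trains on windows of 16384 samples (about 1 s) extracted with 50% overlap. The minimal sketch below assumes this work keeps that preprocessing. The function names are hypothetical.

```python
import numpy as np


def pre_emphasis(x: np.ndarray, coef: float = 0.95) -> np.ndarray:
    """y[n] = x[n] - coef * x[n-1]; the first sample is passed through unchanged."""
    return np.concatenate(([x[0]], x[1:] - coef * x[:-1]))


def de_emphasis(y: np.ndarray, coef: float = 0.95) -> np.ndarray:
    """Inverse filter x[n] = y[n] + coef * x[n-1], applied to the enhanced output."""
    x = np.empty_like(y)
    x[0] = y[0]
    for n in range(1, len(y)):
        x[n] = y[n] + coef * x[n - 1]
    return x


def make_chunks(x: np.ndarray, window: int = 16384, hop: int = 8192) -> np.ndarray:
    """Slice a waveform into fixed-length windows (50% overlap by default), zero-padding the tail."""
    if len(x) < window:
        x = np.pad(x, (0, window - len(x)))
    n_chunks = 1 + int(np.ceil((len(x) - window) / hop))
    total = (n_chunks - 1) * hop + window
    x = np.pad(x, (0, total - len(x)))
    return np.stack([x[i * hop: i * hop + window] for i in range(n_chunks)])
```

At test time, the original SEGAN slides the window without overlap, which corresponds to `hop=window` here. The enhanced output is then passed through the inverse (de-emphasis) filter.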

4.3. Experimental Settings

- To quantitatively show the effects of a self-attention mechanism in the generators of a multi-generator SEGAN.

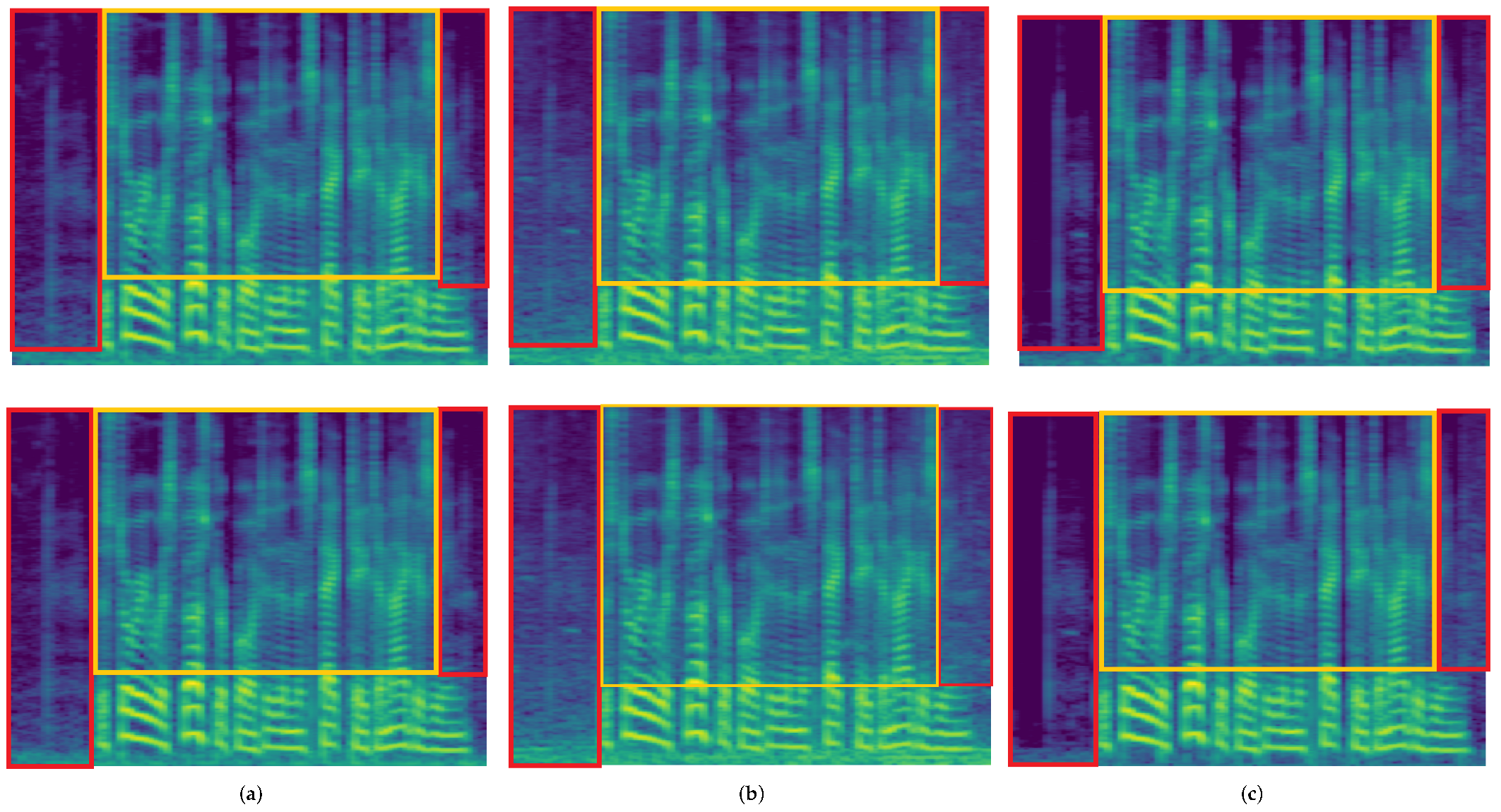

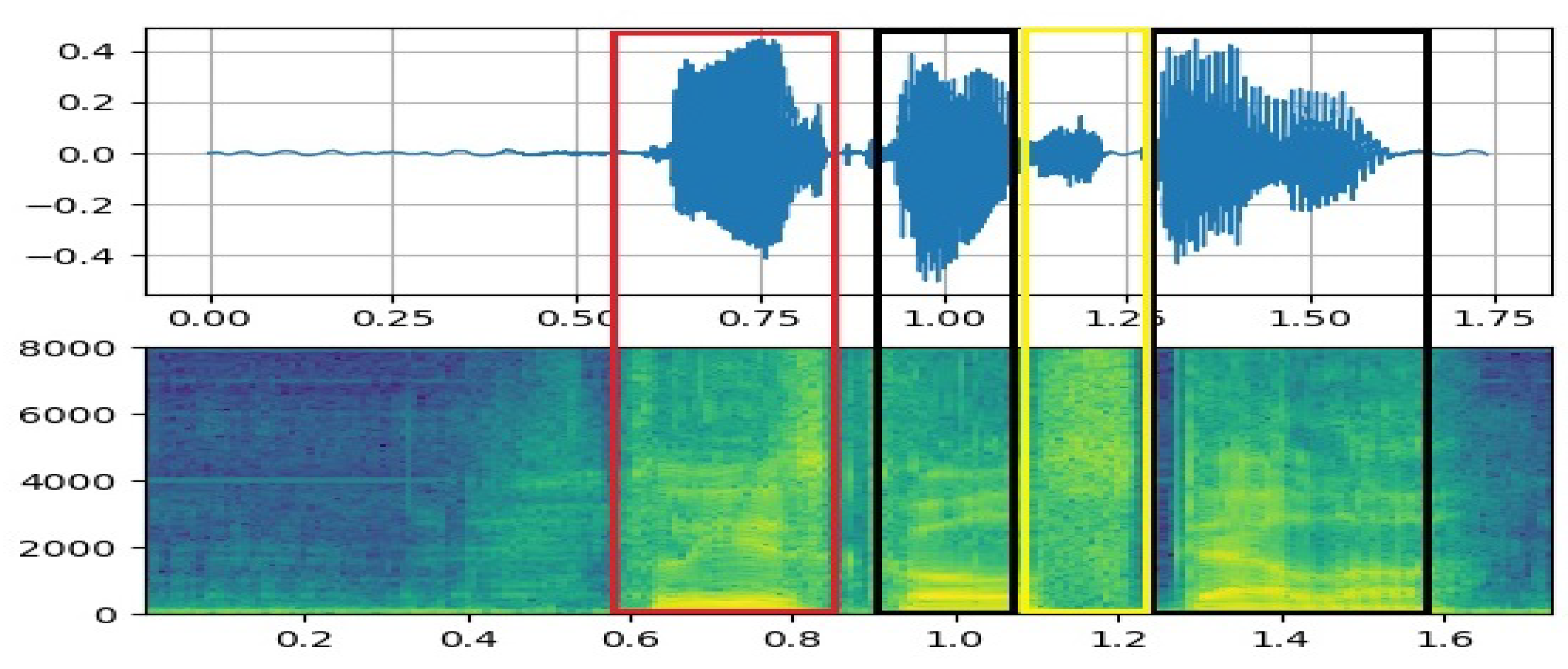

- To qualitatively show the effects of a self-attention mechanism in the generation of a multi-generator SEGAN.

- To investigate the overall performance of the model in terms of its trainable parameters and the enhanced speech it generates.

5. Results and Discussion

Ablation Study

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SEGAN | Speech Enhancement Generative Adversarial Network |
| SASEGAN | Self-Attention Speech Enhancement Generative Adversarial Network |
| ISEGAN | Iterated Speech Enhancement Generative Adversarial Network |
| DSEGAN | Deep Speech Enhancement Generative Adversarial Network |
| PESQ | Perceptual Evaluation of Speech Quality |
| STOI | Short-Time Objective Intelligibility |
| CBAK | Composite MOS Predictor of Background-Noise Intrusiveness |
| SSNR | Segmental Signal-to-Noise Ratio |
| CSIG | Composite MOS Predictor of Signal Distortion |
| COVL | Composite MOS Predictor of Overall Signal Quality |
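
Of the metrics listed above, SSNR is simple enough to sketch directly. It averages frame-level SNRs between the clean and enhanced waveforms and clamps each frame to a fixed range so that silent frames do not dominate. The sketch uses a 30 ms frame, 75% overlap, a Hann window and [-10, 35] dB limits. These settings are assumptions modeled on widely used reference implementations. Published SSNR figures depend on these choices, so this sketch is not guaranteed to reproduce the numbers in the tables.

```python
import numpy as np


def segmental_snr(clean: np.ndarray, enhanced: np.ndarray, fs: int = 16000,
                  frame_ms: float = 30.0, overlap: float = 0.75,
                  min_db: float = -10.0, max_db: float = 35.0,
                  eps: float = 1e-10) -> float:
    """Mean of per-frame SNRs (dB), each clamped to [min_db, max_db]."""
    frame = int(round(frame_ms * fs / 1000))  # 480 samples at 16 kHz
    hop = max(1, int(frame * (1.0 - overlap)))
    window = np.hanning(frame)
    n = min(len(clean), len(enhanced))
    if n < frame:
        raise ValueError("signals are shorter than one analysis frame")
    per_frame = []
    for start in range(0, n - frame + 1, hop):
        c = clean[start:start + frame] * window
        e = enhanced[start:start + frame] * window
        signal_energy = np.sum(c ** 2)
        error_energy = np.sum((c - e) ** 2)
        snr_db = 10.0 * np.log10(signal_energy / (error_energy + eps) + eps)
        per_frame.append(np.clip(snr_db, min_db, max_db))
    return float(np.mean(per_frame))
```

For example, scaling a clean signal by 1.1 leaves an error at one tenth of the signal amplitude in every frame, which gives an SSNR of 20 dB.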


| Metric | Noisy | Wiener | SEGAN | SASEGAN | ISEGAN (N = 2) | ISEGAN (N = 3) | ISEGAN (N = 4) | ISEGAN-Self-Attention (N = 2) | ISEGAN-Self-Attention (N = 3) | ISEGAN-Self-Attention (N = 4) |
|---|---|---|---|---|---|---|---|---|---|---|
| PESQ | 1.97 | 2.22 | 2.19 | 2.34 | 2.24 | 2.19 | 2.21 | 2.66 | 2.58 | 2.59 |
| CSIG | 3.35 | 3.23 | 3.39 | 3.52 | 3.23 | 2.96 | 3.00 | 3.40 | 3.35 | 3.38 |
| CBAK | 2.44 | 2.68 | 2.90 | 3.04 | 2.95 | 2.88 | 2.92 | 3.15 | 3.09 | 3.18 |
| COVL | 2.63 | 2.67 | 2.76 | 2.91 | 2.69 | 2.52 | 2.55 | 3.04 | 2.97 | 3.01 |
| SSNR (dB) | 1.68 | 5.07 | 7.36 | 8.05 | 8.17 | 8.11 | 8.86 | 9.04 | 8.90 | 9.04 |
| STOI (%) | 92.10 | - | 93.12 | 93.32 | 93.29 | 93.35 | 93.29 | 93.30 | 93.35 | 93.32 |
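
The gain of ISEGAN-Self-Attention over ISEGAN can be read off the ISEGAN table for each metric and each N. The snippet below copies the reported values and computes the differences (proposed minus baseline, rounded to two decimals).

```python
# Values copied from the ISEGAN results table (columns N = 2, 3, 4).
isegan = {
    "PESQ": [2.24, 2.19, 2.21],
    "CSIG": [3.23, 2.96, 3.00],
    "CBAK": [2.95, 2.88, 2.92],
    "COVL": [2.69, 2.52, 2.55],
    "SSNR": [8.17, 8.11, 8.86],
    "STOI": [93.29, 93.35, 93.29],
}
isegan_sa = {
    "PESQ": [2.66, 2.58, 2.59],
    "CSIG": [3.40, 3.35, 3.38],
    "CBAK": [3.15, 3.09, 3.18],
    "COVL": [3.04, 2.97, 3.01],
    "SSNR": [9.04, 8.90, 9.04],
    "STOI": [93.30, 93.35, 93.32],
}


def deltas(base: dict, proposed: dict) -> dict:
    """Per-metric, per-N difference (proposed - base), rounded to two decimals."""
    return {m: [round(p - b, 2) for b, p in zip(base[m], proposed[m])] for m in base}


for metric, diff in deltas(isegan, isegan_sa).items():
    print(metric, diff)
```

All eighteen differences are non-negative. The STOI differences are at most 0.03 percentage points.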

| Metric | Noisy | Wiener | SEGAN | SASEGAN | DSEGAN (N = 2) | DSEGAN (N = 3) | DSEGAN (N = 4) | DSEGAN-Self-Attention (N = 2) | DSEGAN-Self-Attention (N = 3) | DSEGAN-Self-Attention (N = 4) |
|---|---|---|---|---|---|---|---|---|---|---|
| PESQ | 1.97 | 2.22 | 2.19 | 2.34 | 2.35 | 2.39 | 2.37 | 2.71 | 2.64 | 2.67 |
| CSIG | 3.35 | 3.23 | 3.39 | 3.52 | 3.55 | 3.46 | 3.50 | 3.58 | 3.37 | 3.54 |
| CBAK | 2.44 | 2.68 | 2.90 | 3.04 | 3.10 | 3.11 | 3.10 | 3.15 | 2.98 | 3.06 |
| COVL | 2.63 | 2.67 | 2.76 | 2.91 | 2.93 | 2.90 | 2.92 | 3.11 | 2.94 | 3.07 |
| SSNR (dB) | 1.68 | 5.07 | 7.36 | 8.05 | 8.70 | 8.72 | 8.59 | 9.19 | 9.01 | 9.08 |
| STOI (%) | 92.10 | - | 93.12 | 93.32 | 93.25 | 93.28 | 93.49 | 93.48 | 93.30 | 93.36 |

| Metric | DSEGAN | ISEGAN | ISEGAN-Self-Attention | DSEGAN-Self-Attention | | |
|---|---|---|---|---|---|---|
| PESQ | 2.71 | 2.66 | 2.63 | 2.57 | 2.68 | 2.64 |
| CSIG | 3.58 | 3.58 | 3.51 | 3.49 | 3.52 | 3.50 |
| CBAK | 3.15 | 3.15 | 3.09 | 3.08 | 3.11 | 3.08 |
| COVL | 3.11 | 3.04 | 3.07 | 3.04 | 3.09 | 3.05 |
| SSNR (dB) | 9.19 | 9.04 | 9.11 | 9.03 | 9.09 | 9.02 |
| STOI (%) | 93.29 | 93.25 | 93.38 | 93.32 | 93.42 | 93.36 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Asiedu Asante, B.K.; Broni-Bediako, C.; Imamura, H. Exploring Multi-Stage GAN with Self-Attention for Speech Enhancement. Appl. Sci. 2023, 13, 9217. https://doi.org/10.3390/app13169217