Local Feature Enhancement for Nested Entity Recognition Using a Convolutional Block Attention Module

Abstract

1. Introduction

2. Related Work

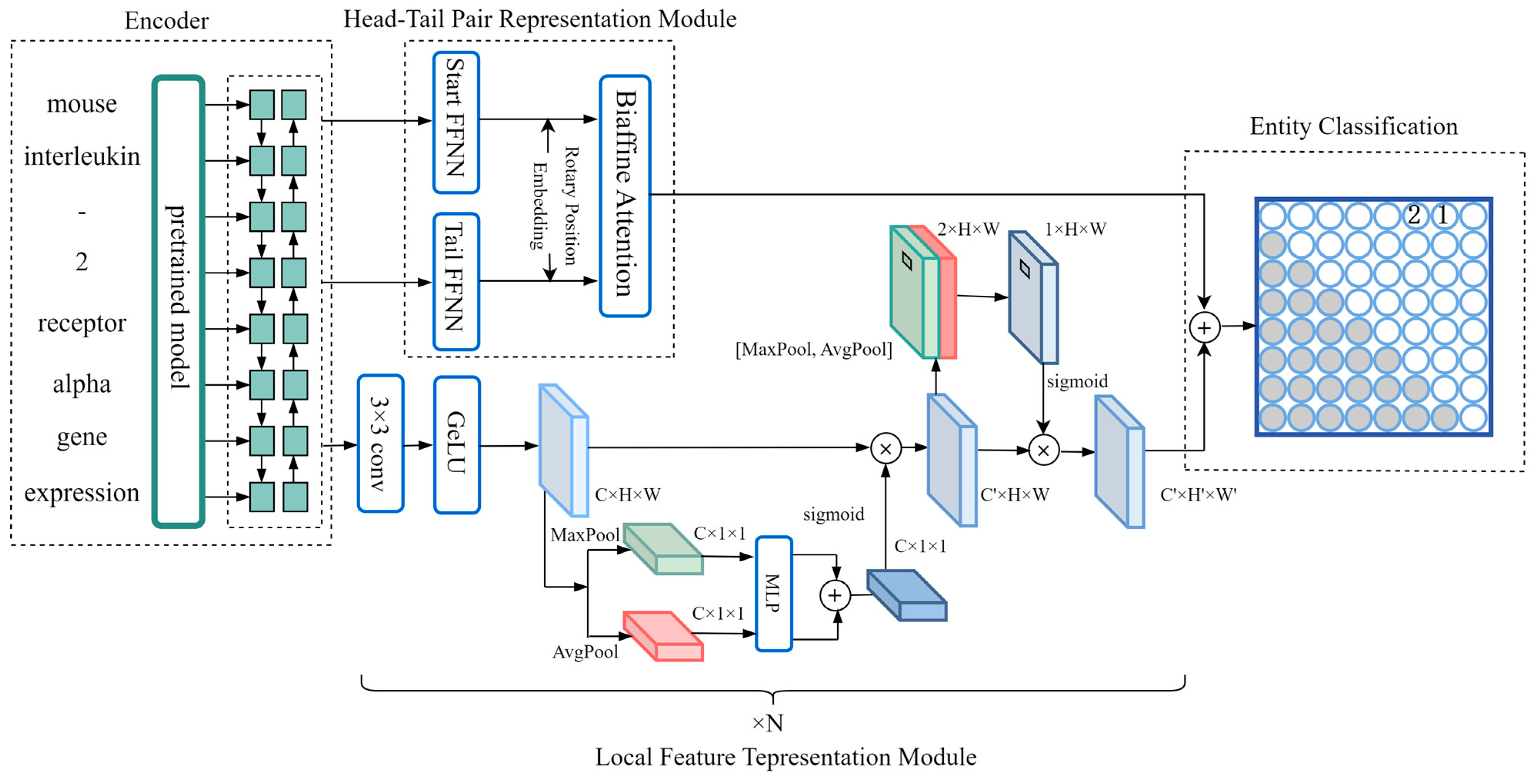

3. Methods

3.1. Encoder

3.2. Head–Tail Pair Representation Module

3.3. Local Feature Representation Module

3.4. Entity Classification

4. Experiments

4.1. Datasets

4.2. Baselines

4.3. Hyperparameters and Evaluation Indicators

4.4. Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Zhang, X.Y.; Wang, T.; Chen, H.W. Research on named entity recognition. Comput. Sci. 2005, 32, 44–48.

- Hahne, A.; Friederici, A.D. Electrophysiological evidence for two steps in syntactic analysis: Early automatic and late controlled processes. J. Cogn. Neurosci. 1999, 11, 194–205.

- Babych, B.; Hartley, A. Improving machine translation quality with automatic named entity recognition. In Proceedings of the 7th International EAMT Workshop on MT and Other Language Technology Tools, Improving MT through Other Language Technology Tools, Resource and Tools for Building MT at EACL 2003, Budapest, Hungary, 13 April 2003.

- Soricut, R.; Brill, E. Automatic question answering: Beyond the factoid. In Proceedings of the Human Language Technology Conference of the North American Chapter of the Association for Computational Linguistics: HLT-NAACL 2004, Boston, MA, USA, 2–7 May 2004.

- Zhang, J.; Xie, J.; Hou, W.; Tu, X.; Xu, J.; Song, F.; Lu, Z. Mapping the knowledge structure of research on patient adherence: Knowledge domain visualization based co-word analysis and social network analysis. PLoS ONE 2012, 7, e34497.

- Rau, L.F. Extracting company names from text. In Proceedings of the Seventh IEEE Conference on Artificial Intelligence Application, Miami Beach, FL, USA, 24–28 February 1991; IEEE Computer Society: Washington, DC, USA, 1991.

- Petasis, G.; Vichot, F.; Wolinski, F.; Paliouras, G.; Karkaletsis, V.; Spyropoulos, C.D. Using machine learning to maintain rule-based named-entity recognition and classification systems. In Proceedings of the 39th Annual Meeting of the Association for Computational Linguistics, Toulouse, France, 6–11 July 2001; pp. 426–433.

- Li, W.; McCallum, A. Rapid development of Hindi named entity recognition using conditional random fields and feature induction. ACM Trans. Asian Lang. Inf. Process. (TALIP) 2004, 2, 290–294.

- Mikheev, A.; Moens, M.; Grover, C. Named entity recognition without gazetteers. In Proceedings of the Ninth Conference of the European Chapter of the Association for Computational Linguistics, Bergen, Norway, 8–12 June 1999; pp. 1–8.

- Peng, N.; Dredze, M. Improving named entity recognition for Chinese social media with word segmentation representation learning. arXiv 2016, arXiv:1603.00786.

- Dong, X.; Qian, L.; Guan, Y.; Huang, L.; Yu, Q.; Yang, J. A multiclass classification method based on deep learning for named entity recognition in electronic medical records. In Proceedings of the 2016 New York Scientific Data Summit (NYSDS), New York, NY, USA, 14–17 August 2016; pp. 1–10.

- Shao, Y.; Hardmeier, C.; Nivre, J. Multilingual named entity recognition using hybrid neural networks. In Proceedings of the Sixth Swedish Language Technology Conference (SLTC), Umeå, Sweden, 17–18 November 2016.

- Wang, J.; Shou, L.; Chen, K.; Chen, G. Pyramid: A layered model for nested named entity recognition. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 5918–5928.

- Ju, M.; Miwa, M.; Ananiadou, S. A neural layered model for nested named entity recognition. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, New Orleans, LA, USA, 1–6 June 2018; Volume 1, pp. 1446–1459.

- Lu, W.; Roth, D. Joint mention extraction and classification with mention hypergraphs. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 857–867.

- Katiyar, A.; Cardie, C. Nested named entity recognition revisited. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, New Orleans, LA, USA, 1–6 June 2018; Volume 1.

- Sohrab, M.G.; Miwa, M. Deep exhaustive model for nested named entity recognition. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 2843–2849.

- Shen, Y.; Ma, X.; Tan, Z.; Zhang, S.; Wang, W.; Lu, W. Locate and label: A two-stage identifier for nested named entity recognition. arXiv 2021, arXiv:2105.06804.

- Zheng, C.; Cai, Y.; Xu, J.; Leung, H.F.; Xu, G. A boundary-aware neural model for nested named entity recognition. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; Association for Computational Linguistics: Toronto, ON, Canada, 2019.

- Yuan, Z.; Tan, C.; Huang, S.; Huang, F. Fusing heterogeneous factors with triaffine mechanism for nested named entity recognition. arXiv 2021, arXiv:2110.07480.

- Su, J.; Lu, Y.; Pan, S.; Murtadha, A.; Wen, B.; Liu, Y. RoFormer: Enhanced transformer with rotary position embedding. arXiv 2021, arXiv:2104.09864.

- Dozat, T.; Manning, C.D. Deep biaffine attention for neural dependency parsing. arXiv 2016, arXiv:1611.01734.

- Huang, Z.; Xu, W.; Yu, K. Bidirectional LSTM-CRF models for sequence tagging. arXiv 2015, arXiv:1508.01991.

- Krogh, A.; Larsson, B.; Von Heijne, G.; Sonnhammer, E.L. Predicting transmembrane protein topology with a hidden Markov model: Application to complete genomes. J. Mol. Biol. 2001, 305, 567–580.

- Ratinov, L.; Roth, D. Design challenges and misconceptions in named entity recognition. In Proceedings of the Thirteenth Conference on Computational Natural Language Learning (CoNLL-2009), Boulder, CO, USA, 4–5 June 2009; pp. 147–155.

- Konkol, M.; Konopík, M. CRF-based Czech named entity recognizer and consolidation of Czech NER research. In Text, Speech, and Dialogue: 16th International Conference, TSD 2013, Pilsen, Czech Republic, 1–5 September 2013; Proceedings 16; Springer: Berlin/Heidelberg, Germany, 2013; pp. 153–160.

- Kim, J.D.; Ohta, T.; Tateisi, Y.; Tsujii, J.I. GENIA corpus: A semantically annotated corpus for bio-textmining. Bioinformatics 2003, 19 (Suppl. 1), i180–i182.

- Jiang, J.; Cheng, M.; Liu, Q.; Li, Z.; Chen, E. Nested Named Entity Recognition from Medical Texts: An Adaptive Shared Network Architecture with Attentive CRF. In CAAI International Conference on Artificial Intelligence, Beijing, China, 27–28 August 2022; Springer Nature: Cham, Switzerland, 2022; pp. 248–259.

- Wang, B.; Lu, W.; Wang, Y.; Jin, H. A neural transition-based model for nested mention recognition. arXiv 2018, arXiv:1810.01808.

- Li, X.; Feng, J.; Meng, Y.; Han, Q.; Wu, F.; Li, J. A unified MRC framework for named entity recognition. arXiv 2019, arXiv:1910.11476.

- Zhu, E.; Li, J. Boundary smoothing for named entity recognition. arXiv 2022, arXiv:2204.12031.

- Yu, J.; Bohnet, B.; Poesio, M. Named entity recognition as dependency parsing. arXiv 2020, arXiv:2005.07150.

- Tan, C.; Qiu, W.; Chen, M.; Wang, R.; Huang, F. Boundary enhanced neural span classification for nested named entity recognition. Proc. AAAI Conf. Artif. Intell. 2020, 34, 9016–9023.

- Gao, W.; Li, Y.; Guan, X.; Chen, S.; Zhao, S. Research on Named Entity Recognition Based on Multi-Task Learning and Biaffine Mechanism. Comput. Intell. Neurosci. 2022, 2022, 2687615.

- Xu, Y.; Huang, H.; Feng, C.; Hu, Y. A supervised multi-head self-attention network for nested named entity recognition. Proc. AAAI Conf. Artif. Intell. 2021, 35, 14185–14193.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Mitchell, A.; Strassel, S.; Huang, S.; Zakhary, R. ACE 2004 Multilingual Training Corpus LDC2005T09; Web Download; Linguistic Data Consortium: Philadelphia, PA, USA, 2005.

- Walker, C.; Strassel, S.; Medero, J.; Maeda, K. ACE 2005 Multilingual Training Corpus LDC2006T06; Web Download; Linguistic Data Consortium: Philadelphia, PA, USA, 2006.

- Levow, G.A. The third international Chinese language processing bakeoff: Word segmentation and named entity recognition. In Proceedings of the Fifth SIGHAN Workshop on Chinese Language Processing, Sydney, Australia, 22–23 July 2006; pp. 108–117.

- Zhang, Y.; Yang, J. Chinese NER using lattice LSTM. arXiv 2018, arXiv:1805.02023.

- Xia, C.; Zhang, C.; Yang, T.; Li, Y.; Du, N.; Wu, X.; Yu, P. Multi-grained named entity recognition. arXiv 2019, arXiv:1906.08449.

- Luan, Y.; Wadden, D.; He, L.; Shah, A.; Ostendorf, M.; Hajishirzi, H. A general framework for information extraction using dynamic span graphs. arXiv 2019, arXiv:1904.03296.

- Straková, J.; Straka, M.; Hajič, J. Neural architectures for nested NER through linearization. arXiv 2019, arXiv:1908.06926.

- Fu, Y.; Tan, C.; Chen, M.; Huang, S.; Huang, F. Nested named entity recognition with partially-observed TreeCRFs. Proc. AAAI Conf. Artif. Intell. 2021, 35, 12839–12847.

- Yan, H.; Deng, B.; Li, X.; Qiu, X. TENER: Adapting transformer encoder for named entity recognition. arXiv 2019, arXiv:1911.04474.

- Gui, T.; Zou, Y.; Zhang, Q.; Peng, M.; Fu, J.; Wei, Z.; Huang, X.J. A lexicon-based graph neural network for Chinese NER. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 1040–1050.

- Kong, J.; Zhang, L.; Jiang, M.; Liu, T. Incorporating multi-level CNN and attention mechanism for Chinese clinical named entity recognition. J. Biomed. Inform. 2021, 116, 103737.

- Wu, S.; Song, X.; Feng, Z.; Wu, X. NFLAT: Non-flat-lattice transformer for Chinese named entity recognition. arXiv 2022, arXiv:2205.05832.

| Dataset | Epochs | Batch Size | Learning Rate | BERT Learning Rate | LSTM Layers | LSTM Size | Start/Tail FFNN Size | Warm Factor | Gradient Clipping |
|---|---|---|---|---|---|---|---|---|---|
| ACE2004 | 15 | 8 | 5 × 10⁻⁴ | 5 × 10⁻⁶ | 1 | 768 | 384 | 0.1 | 1.0 |
| ACE2005 | 15 | 12 | 5 × 10⁻⁴ | 5 × 10⁻⁶ | 1 | 768 | 384 | 0.1 | 1.0 |
| GENIA | 10 | 8 | 5 × 10⁻⁴ | 5 × 10⁻⁶ | 1 | 512 | 256 | 0.1 | 5.0 |
| MSRA | 10 | 6 | 1 × 10⁻³ | 5 × 10⁻⁶ | 1 | 512 | 256 | 0.1 | 5.0 |
| Resume | 10 | 12 | 5 × 10⁻³ | 5 × 10⁻⁶ | 1 | 512 | 256 | 0.1 | 5.0 |
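
The per-dataset settings in the hyperparameter table above can be collected into a small configuration object for reproduction. This is an illustrative sketch only: `TrainConfig`, `CONFIGS` and `warmup_steps` are hypothetical names, and reading "Warm Factor" as the fraction of all optimizer steps spent in learning-rate warm-up is an assumption that the table itself does not confirm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical container for one row of the hyperparameter table."""
    epochs: int
    batch_size: int
    learning_rate: float       # learning rate for the layers on top of BERT
    bert_learning_rate: float  # learning rate for the BERT encoder
    lstm_layers: int
    lstm_size: int
    ffnn_size: int             # "Start/Tail FFNN Size" column
    warm_factor: float         # ASSUMPTION: fraction of steps used for warm-up
    grad_clip: float           # gradient-clipping threshold


CONFIGS = {
    "ACE2004": TrainConfig(15, 8, 5e-4, 5e-6, 1, 768, 384, 0.1, 1.0),
    "ACE2005": TrainConfig(15, 12, 5e-4, 5e-6, 1, 768, 384, 0.1, 1.0),
    "GENIA": TrainConfig(10, 8, 5e-4, 5e-6, 1, 512, 256, 0.1, 5.0),
    "MSRA": TrainConfig(10, 6, 1e-3, 5e-6, 1, 512, 256, 0.1, 5.0),
    "Resume": TrainConfig(10, 12, 5e-3, 5e-6, 1, 512, 256, 0.1, 5.0),
}


def warmup_steps(cfg: TrainConfig, num_train_examples: int) -> int:
    """Warm-up steps under the ASSUMED reading of warm_factor, rounded down."""
    # Ceiling division: a final partial batch still costs one optimizer step.
    steps_per_epoch = -(-num_train_examples // cfg.batch_size)
    total_steps = steps_per_epoch * cfg.epochs
    return int(cfg.warm_factor * total_steps)
```

For example, with a hypothetical 6200 training sentences, `warmup_steps(CONFIGS["ACE2004"], 6200)` gives 775 steps per epoch, 11625 steps over 15 epochs and 1162 warm-up steps. Freezing the dataclass keeps a dataset's settings from being changed by accident partway through a run.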

| Model | ACE2004 P (%) | ACE2004 R (%) | ACE2004 F1 (%) | ACE2005 P (%) | ACE2005 R (%) | ACE2005 F1 (%) | GENIA P (%) | GENIA R (%) | GENIA F1 (%) |
|---|---|---|---|---|---|---|---|---|---|
| Xia et al. [42] | 81.70 | 77.40 | 79.50 | 79.00 | 77.30 | 78.20 | -- | -- | -- |
| Luan et al. [43] | -- | -- | 84.70 | -- | -- | 82.90 | -- | -- | 72.60 |
| Straková et al. [44] | -- | -- | 84.33 | -- | -- | 83.42 | -- | -- | 78.20 |
| Tan et al. [33] | 85.80 | 84.80 | 85.30 | 83.80 | 83.90 | 83.90 | 79.20 | 77.40 | 78.30 |
| Wang et al. [13] | 86.08 | 86.48 | 86.20 | 83.95 | 85.93 | 84.60 | 79.45 | 78.94 | 79.10 |
| Fu et al. [45] | 86.70 | 86.50 | 86.60 | 84.50 | 86.40 | 85.40 | 78.20 | 78.20 | 78.20 |
| Xu et al. [35] | 86.90 | 85.80 | 86.30 | 85.70 | 85.20 | 85.40 | 80.30 | 78.90 | 79.60 |
| Gao et al. [34] | 84.88 | 85.78 | 85.33 | 84.23 | 86.15 | 85.18 | 80.62 | 80.68 | 80.65 |
| Ours | 86.96 | 86.36 | 86.66 | 84.94 | 86.73 | 85.83 | 82.35 | 80.33 | 81.33 |
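
Each F1 score in these results is the harmonic mean of precision and recall, F1 = 2PR/(P + R). The short check below recomputes F1 for the "Ours" row of the ACE2004/ACE2005/GENIA table from the reported P and R; agreement can only be expected up to the two-decimal rounding of the inputs. The names `f1_score` and `OURS` are illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (P, R, reported F1) for the "Ours" row, copied from the table above.
OURS = {
    "ACE2004": (86.96, 86.36, 86.66),
    "ACE2005": (84.94, 86.73, 85.83),
    "GENIA": (82.35, 80.33, 81.33),
}

for dataset, (p, r, reported) in OURS.items():
    recomputed = f1_score(p, r)
    print(f"{dataset}: recomputed F1 = {recomputed:.2f}, reported = {reported:.2f}")
```

Running it prints recomputed values of 86.66, 85.83 and 81.33, matching the reported F1 scores. Because the harmonic mean is pulled toward the smaller of P and R, a model cannot raise F1 much by inflating only one of the two.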

| Model | MSRA P (%) | MSRA R (%) | MSRA F1 (%) | Resume P (%) | Resume R (%) | Resume F1 (%) |
|---|---|---|---|---|---|---|
| Zhang and Yang [41] | 93.57 | 92.79 | 93.18 | 94.81 | 94.11 | 94.46 |
| Yan et al. [46] | -- | -- | 92.74 | -- | -- | 95.00 |
| Gui et al. [47] | 94.50 | 92.93 | 93.71 | 95.37 | 94.84 | 95.11 |
| Kong et al. [48] | 93.51 | 92.51 | 93.01 | 94.69 | 95.21 | 94.95 |
| Wu et al. [49] | 94.92 | 94.19 | 94.55 | 95.63 | 95.52 | 95.58 |
| Ours | 95.83 | 95.76 | 95.79 | 96.62 | 96.32 | 96.47 |

| Entity Type | BAM P (%) | BAM R (%) | BAM F1 (%) | RoPE-BAM P (%) | RoPE-BAM R (%) | RoPE-BAM F1 (%) | CBAM-RoPE-BAM P (%) | CBAM-RoPE-BAM R (%) | CBAM-RoPE-BAM F1 (%) |
|---|---|---|---|---|---|---|---|---|---|
| GPE | 85.27 | 86.93 | 86.09 | 89.33 | 89.33 | 86.51 | 88.40 | 86.93 | 87.66 |
| ORG | 77.12 | 80.62 | 78.83 | 80.94 | 81.52 | 81.23 | 81.14 | 82.61 | 81.87 |
| PER | 89.61 | 90.39 | 90.00 | 90.82 | 90.52 | 90.67 | 90.48 | 90.12 | 90.30 |
| LOC | 65.49 | 70.48 | 67.89 | 72.73 | 76.19 | 74.42 | 68.91 | 78.10 | 73.21 |
| FAC | 70.93 | 54.46 | 61.62 | 69.07 | 59.82 | 64.11 | 77.08 | 66.07 | 71.15 |
| VEH | 83.33 | 88.24 | 85.71 | 82.35 | 82.35 | 82.35 | 85.00 | 100.0 | 91.89 |
| WEA | 65.52 | 59.38 | 62.30 | 84.21 | 50.00 | 62.72 | 94.44 | 53.12 | 68.00 |
| Total | 84.55 | 85.44 | 84.99 | 87.16 | 85.21 | 86.17 | 86.96 | 86.36 | 86.66 |

| Entity Type | BAM P (%) | BAM R (%) | BAM F1 (%) | RoPE-BAM P (%) | RoPE-BAM R (%) | RoPE-BAM F1 (%) | CBAM-RoPE-BAM P (%) | CBAM-RoPE-BAM R (%) | CBAM-RoPE-BAM F1 (%) |
|---|---|---|---|---|---|---|---|---|---|
| NAME | 100.0 | 99.12 | 99.56 | 99.11 | 99.11 | 99.11 | 99.12 | 100.0 | 99.56 |
| CONT | 100.0 | 100.0 | 100.0 | 100.0 | 96.43 | 98.18 | 96.43 | 96.43 | 96.43 |
| RACE | 100.0 | 100.0 | 100.0 | 93.33 | 100.0 | 96.55 | 100.0 | 100.0 | 100.0 |
| TITLE | 93.69 | 96.11 | 94.88 | 97.34 | 94.95 | 96.16 | 96.99 | 96.11 | 96.55 |
| EDU | 95.69 | 99.11 | 97.37 | 99.10 | 98.21 | 98.65 | 95.69 | 99.11 | 97.37 |
| ORG | 94.33 | 96.20 | 95.26 | 95.15 | 95.84 | 95.50 | 97.05 | 95.30 | 96.17 |
| PRO | 84.62 | 100.0 | 91.67 | 93.33 | 84.85 | 88.89 | 78.59 | 100.0 | 88.00 |
| LOC | 75.00 | 100.0 | 85.71 | 100.0 | 50.00 | 66.67 | 100.0 | 66.67 | 80.00 |
| Total | 94.27 | 96.81 | 95.52 | 96.77 | 95.46 | 96.11 | 96.62 | 96.32 | 96.47 |
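
The contribution of each component can be read off the Total rows of the two per-entity-type ablation tables above. In those tables, the CBAM-RoPE-BAM totals equal the "Ours" rows for ACE2004 (first table, ACE entity types) and Resume (second table, Resume entity types), so the sketch below keys them by those datasets. It takes the Total F1 values as given and computes the gain from adding RoPE to the BAM baseline, then from adding CBAM on top of RoPE-BAM. The names `TOTAL_F1`, `VARIANTS` and `ablation_gains` are illustrative.

```python
# Total F1 (%) per model variant, copied from the Total rows of the two
# per-entity-type ablation tables. Keys name the dataset whose "Ours" row
# matches each table's CBAM-RoPE-BAM total.
TOTAL_F1 = {
    "ACE2004": {"BAM": 84.99, "RoPE-BAM": 86.17, "CBAM-RoPE-BAM": 86.66},
    "Resume": {"BAM": 95.52, "RoPE-BAM": 96.11, "CBAM-RoPE-BAM": 96.47},
}

# Variants in the order in which components are added.
VARIANTS = ["BAM", "RoPE-BAM", "CBAM-RoPE-BAM"]


def ablation_gains(scores: dict[str, float]) -> dict[str, float]:
    """F1 gain of each variant over the previous one, rounded to 2 decimals."""
    return {
        f"{prev} -> {curr}": round(scores[curr] - scores[prev], 2)
        for prev, curr in zip(VARIANTS, VARIANTS[1:])
    }


for dataset, scores in TOTAL_F1.items():
    print(dataset, ablation_gains(scores))
```

On ACE2004, adding RoPE contributes 1.18 F1 points and adding CBAM a further 0.49; on Resume the corresponding gains are 0.59 and 0.36.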

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Deng, J.; Liu, J.; Ma, X.; Qin, X.; Jia, Z. Local Feature Enhancement for Nested Entity Recognition Using a Convolutional Block Attention Module. Appl. Sci. 2023, 13, 9200. https://doi.org/10.3390/app13169200

Deng J, Liu J, Ma X, Qin X, Jia Z. Local Feature Enhancement for Nested Entity Recognition Using a Convolutional Block Attention Module. Applied Sciences. 2023; 13(16):9200. https://doi.org/10.3390/app13169200

Chicago/Turabian Style: Deng, Jinxin, Junbao Liu, Xiaoqin Ma, Xizhong Qin, and Zhenhong Jia. 2023. "Local Feature Enhancement for Nested Entity Recognition Using a Convolutional Block Attention Module" Applied Sciences 13, no. 16: 9200. https://doi.org/10.3390/app13169200

APA Style: Deng, J., Liu, J., Ma, X., Qin, X., & Jia, Z. (2023). Local Feature Enhancement for Nested Entity Recognition Using a Convolutional Block Attention Module. Applied Sciences, 13(16), 9200. https://doi.org/10.3390/app13169200