Abstract

Interest in detecting deceptive behaviours by various application fields, such as security systems, political debates, advanced intelligent user interfaces, etc., makes automatic deception detection an active research topic. This interest has stimulated the development of many deception-detection methods in the literature in recent years. This work systematically reviews the literature focused on facial cues of deception. The most relevant methods applied in the literature of the last decade have been surveyed and classified according to the main steps of the facial-deception-detection process (video pre-processing, facial feature extraction, and decision making). Moreover, datasets used for the evaluation and future research directions have also been analysed.

1. Introduction

Lying is a complex social activity in which someone (i.e., the deceiver) aims to cause a specific behaviour in another person (i.e., the deceived) by making that person’s view of the situation more congruent with the view under which, according to the deceiver’s knowledge, such behaviour usually occurs [1]. Deception may destroy relationships and hamper communication, leading to negative consequences [2]. Therefore, deception detection has been an actively investigated research topic for decades, and the development of tools and methods for detecting deceptive behaviours has become an urgent need for society. However, despite a rich corpus of deception research, detecting deceptive behaviours is still a very challenging task, mainly because human beings do not have good lie-detection abilities. A study by Bond and DePaulo [3] quantifies the human accuracy of discriminating between lies and truths at 54% on average, in other words just slightly above a random guess. This percentage is also confirmed by the more recent study of Hartwig et al. [4]. In response to the need to improve this accuracy rate, researchers have long been trying to decode human behaviour in an attempt to discover deceptive cues. In this study, what we consider “deceptive” is human behaviour in which the deceiver intentionally acts to make the deceived believe something the deceiver considers false [5]. This is a conscious and deliberate act, as opposed to the unconscious (non-deceptive) behaviour in which a person provides false information believed to be true.

To discover deceptive cues, scientists from different disciplines, ranging from psychologists and physiologists to technologists, have proposed different methods. For instance, methods based on the psycho-physiological detection of deception, which rely mainly on psychological tests and physiological monitoring, have been applied [6,7]. Technologists have also contributed by developing methods for deception detection based on technological tools. This study focuses on the technological field, in which a considerable number of methods for detecting verbal (explicit) and non-verbal (implicit) cues of deception have been developed [8]. Explicit cues are categorised according to their nature (i.e., visual, verbal, or paralinguistic) [9], while implicit cues involve facial expressions, body movements/posture, eye contact, and hand movements [10].

Relying on explicit cues makes deception detection harder because explicit cues are easier to manipulate through carefully chosen language and wording, while implicit cues, even if also subject to manipulation, are more spontaneous and more difficult to control consciously [11]. On the other hand, the interpretation of implicit cues tends to be more subjective and context-dependent, requiring a deeper understanding of individual differences and cultural norms, while explicit cues are typically more straightforward and easier to analyse in isolation. Finally, deception detection using implicit cues requires collecting data through video recordings or specialised sensors to capture non-verbal behaviours, which is more challenging than collecting text data for explicit cues.

Considering the above-mentioned differences, the solutions provided in the literature for detecting deceptive behaviours have different characteristics depending on whether they rely on explicit or implicit cues. The systematic literature review provided in this paper deals with implicit cues and, in particular, focuses on a specific type of implicit cue, i.e., facial cues. Specifically, the most relevant deception-detection systems focusing on facial cues have been described and classified considering the methods used in the main steps of the facial-deception-detection process (i.e., video pre-processing, facial feature extraction, and decision making). The choice to focus on facial cues is justified by the results of several existing studies [12,13] arguing that facial cues have an important role in detecting deceptive behaviours due to their involuntary aspects. As stated by Darwin in his “inhibition hypothesis” [13], some actions of the facial muscles are the hardest to inhibit because they are difficult to control voluntarily.

The main contributions of this review are as follows:

- to collect and systematise the scientific knowledge related to automated face deception detection from videos;

- to summarise (i) the methods used for video pre-processing, (ii) the facial extracted features, (iii) the decision-making algorithms, and (iv) the datasets used for the evaluation;

- to point out future research directions that emerged from the analysis of the existing studies.

The paper is structured as follows. Section 2 introduces the related work. Section 3 illustrates the methodology followed for the systematic literature review, while in Section 4, the results are discussed. Section 5 describes how the surveyed studies answered the review questions defined in the paper. Section 6 summarises future research directions that emerged from the literature review. Finally, Section 7 concludes the paper.

2. Related Work

Automated deception detection from videos is a challenging task that can find applications in many real-world scenarios, including airport security screening, job interviews, court trials, and personal credit risk assessment [14].

Various surveys of automated deception-detection methods from videos have been developed in the literature, classifying the methods according to different dimensions. In particular, recent surveys (see Table 1) have provided a very broad overview of deception-detection methods considering monomodal and multimodal features. The survey developed by Constâncio et al. [15] provides a systematic literature review of deception detection based on machine-learning methods, highlighting the techniques and approaches applied, the difficulties, the kind of data, and the performance levels achieved. In this survey, verbal and non-verbal cues, such as facial expressions, gestures, and prosodic and linguistic features, have been considered. The 81 surveyed papers are classified according to the type of extracted features (emotional, psychological, and facial) and the kinds of machine-learning algorithms. Similarly, the survey in [16] gives an overview of automated deception detection through machine-intelligence-based techniques by providing a critical analysis of the existing tools and available datasets. The authors focused on deception detection through text, speech, and video data analysis by classifying the 100 surveyed papers according to both the research domain (i.e., psychological, professional, and computational) and the type of extracted features (verbal, non-verbal, and multimodal). A further survey focused on monomodal features (speech cues) is proposed by Herchonvicz and de Santiago [17], in which deep-learning-based techniques, available datasets, and metrics extracted from the surveyed papers are discussed.

Table 1.

Comparative analysis of surveys.

| Reference | Cues | Techniques | Datasets | Steps of the Deception Detection Process (Figure 1) |
|---|---|---|---|---|
| Constâncio et al., 2023 [15] | Verbal and non-verbal cues | Machine-learning methods | Not surveyed | Step 2 and Step 3 |
| Alaskar et al., 2023 [16] | Verbal and non-verbal cues | Machine-intelligence-based techniques | Surveyed | Step 2 and Step 3 |
| Herchonvicz and de Santiago, 2021 [17] | Verbal cues | Deep-learning-based techniques | Surveyed | Step 2 and Step 3 |
| Our survey | Non-verbal cues | Machine-learning methods | Surveyed | Step 1, Step 2, and Step 3 |

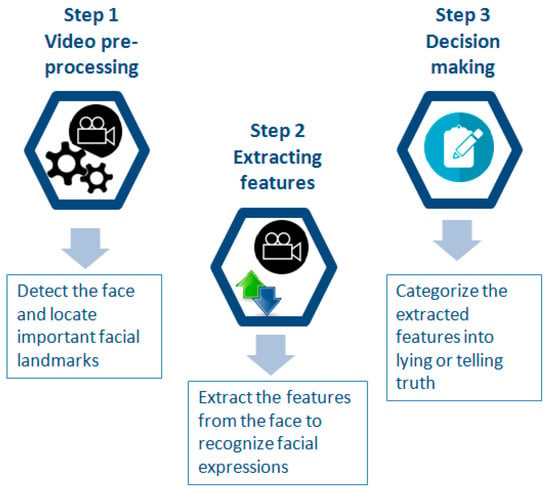

Figure 1.

The workflow of the deception detection process from videos followed in the review.

Unlike existing surveys, this work focuses on deception-detection methods specifically based on facial cues and proposes a classification of these methods based on the main steps of the facial-deception-detection process (video pre-processing, facial feature extraction, and decision making), as proposed by Thannoon et al. [18]. By contrast, the existing surveys considered only the second and third steps of this process.

Figure 1 shows the general workflow of the video-based deception-detection process followed in this review.

During the video pre-processing step, the video is analysed by a face detector, which locates the bounding box containing the face, and by a facial-landmark-tracking tool, which locates and tracks the facial landmarks. In the facial-feature-extraction step, a set of facial features is extracted to recognise the facial cues that are meaningful for deception. Finally, in the decision-making step, the extracted features are classified as truthful or deceptive behaviour. According to these steps, the studies included in the review are analysed to extract (i) the methods used for video pre-processing, (ii) the facial extracted features, (iii) the decision-making algorithms, and (iv) the datasets used for the evaluation.
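The three steps can be read as a chain of interchangeable components. The following is only a structural sketch under stated assumptions: the class name `DeceptionPipeline`, the type aliases, and the three `toy_*` stand-ins are hypothetical and do not come from any surveyed study. In particular, the brightest-pixel "landmark" and the motion threshold are arbitrary placeholders chosen so that the example runs without external libraries.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Frame = Sequence[Sequence[float]]   # hypothetical: one grey-scale frame as rows of pixels
Landmarks = List[Tuple[int, int]]   # hypothetical: (row, col) landmark positions
Features = List[float]


@dataclass
class DeceptionPipeline:
    """Chains the three steps of the workflow; each step is a pluggable callable."""
    preprocess: Callable[[Frame], Landmarks]          # Step 1: video pre-processing
    extract: Callable[[List[Landmarks]], Features]    # Step 2: facial feature extraction
    decide: Callable[[Features], str]                 # Step 3: decision making

    def run(self, video: List[Frame]) -> str:
        landmarks_per_frame = [self.preprocess(frame) for frame in video]
        features = self.extract(landmarks_per_frame)
        return self.decide(features)


def toy_preprocess(frame: Frame) -> Landmarks:
    # Placeholder for face detection and landmark tracking:
    # the brightest pixel is treated as a single "landmark".
    _, row, col = max((v, r, c) for r, line in enumerate(frame) for c, v in enumerate(line))
    return [(row, col)]


def toy_extract(landmarks_per_frame: List[Landmarks]) -> Features:
    # Placeholder feature: mean city-block displacement of the landmark between frames.
    steps = []
    for prev, curr in zip(landmarks_per_frame, landmarks_per_frame[1:]):
        (r0, c0), (r1, c1) = prev[0], curr[0]
        steps.append(abs(r1 - r0) + abs(c1 - c0))
    return [sum(steps) / len(steps)] if steps else [0.0]


def toy_decide(features: Features) -> str:
    # Arbitrary threshold purely for illustration; not a finding of the review.
    return "deceptive" if features[0] > 1.0 else "truthful"


pipeline = DeceptionPipeline(toy_preprocess, toy_extract, toy_decide)
still_video = [[[1, 0], [0, 0]]] * 3
moving_video = [[[1, 0], [0, 0]], [[0, 0], [0, 1]], [[1, 0], [0, 0]]]
print(pipeline.run(still_video), pipeline.run(moving_video))
```

In a real system, each stand-in would be replaced by one of the methods surveyed in Section 5, without changing the surrounding structure.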

3. Materials and Methods

This section illustrates the methodology for systematically searching and analysing the existing literature on automated face deception detection from videos. The methodology followed was the systematic literature review (SLR) described in the PRISMA recommendations [19], which is composed of the following steps: (1) identifying the review focus; (2) specifying the review question(s); (3) identifying studies to include in the review; (4) data extraction and study quality appraisal; (5) synthesizing the findings; and (6) reporting the results.

The review focus consists of the collection and systematization of the scientific knowledge related to automated deception detection from videos focusing on facial signals. Specifically, we aim to study the methods for video pre-processing, the facial extracted features, the decision-making algorithms, and the available datasets.

As required by the second step of the SLR process, the following review questions (RQ) were defined:

RQ 1: Which methods for video pre-processing are used?

RQ 2: Which facial features for automated deception detection are extracted?

RQ 3: Which decision-making algorithms for automated deception detection are used?

RQ 4: Which datasets for automated deception detection are used?

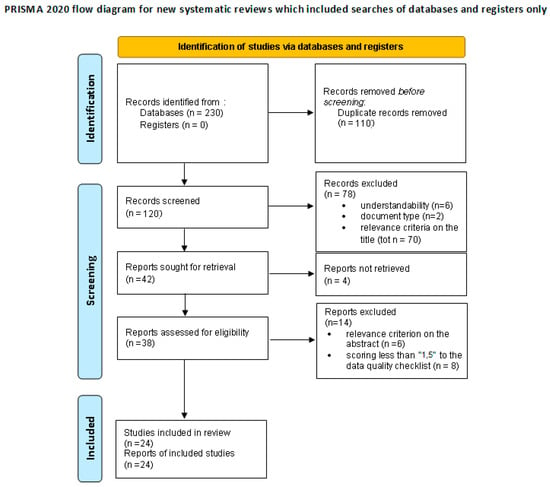

In the third step of the SLR process, the identification of the studies to include in the review was carried out following the four phases of the PRISMA recommendations [19], as shown in Figure 2.

Figure 2.

The PRISMA flow diagram, adapted from [20]. For more information, visit: http://www.prisma-statement.org/ (accessed on 9 August 2023).

For the identification of an initial set of scientific works, two indexed scientific databases (Web of Science (WoS) and Scopus) containing formally published literature (e.g., journal articles, books, and conference papers) were used. Specifically, WoS and Scopus were used due to their proven usefulness in conducting bibliometric analyses of the existing literature in various research domains to establish trends [21]. Grey literature was not included in the systematic review because there is neither a gold-standard method nor a defined methodology for searching it, which makes its inclusion difficult [22].

The search strings defined to search the scientific papers on the databases are as follows: (“deception detection” OR “lie detection” OR “deceptive behaviour*” OR “lie behaviour*” OR “detect* deception” AND “video*” AND “facial expression*”). Note that this study does not disambiguate among the different forms of deception (e.g., bluff, mystification, propaganda, etc.) because it aims to find studies focused on deceptive behaviours in general without restricting the search to specific forms of deception.

During the screening phase, the obtained results were filtered according to the inclusion and exclusion criteria shown in Table 2.

Table 2.

Exclusion and inclusion criteria formulated for the study.

The full text of the eligible studies was analysed by two reviewers using a quality evaluation checklist composed of four questions and related scores (see Table 3). The “disagreed” studies were evaluated by a moderator who provided the final scores.

Table 3.

Quality evaluation checklist and scores.

Studies that obtained a score < 1.5 were excluded from the qualitative analysis, while studies that scored 1.5 or more were included in the review.
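The inclusion rule can be sketched in a few lines. This is a minimal illustration under stated assumptions: the function names are hypothetical, and a "disagreement" is assumed here to mean that the two reviewers assigned different total scores, which the text does not define explicitly. Only the 1.5 threshold and the moderator's role are taken from the description above.

```python
from typing import Optional

INCLUSION_THRESHOLD = 1.5  # from the text: scores below 1.5 are excluded


def final_score(reviewer_a: float, reviewer_b: float,
                moderator: Optional[float] = None) -> float:
    """Return the agreed score; the moderator's score settles a disagreement."""
    if reviewer_a == reviewer_b:
        return reviewer_a
    if moderator is None:
        raise ValueError("reviewers disagree and no moderator score was given")
    return moderator


def is_included(score: float) -> bool:
    """A study enters the qualitative analysis when its score is 1.5 or more."""
    return score >= INCLUSION_THRESHOLD


print(is_included(final_score(2.0, 2.0)))        # reviewers agree
print(is_included(final_score(1.0, 2.0, 1.0)))   # moderator decides
```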

Finally, analysis of the full texts of the included studies was performed, extracting the following information (if any):

- Pre-processing techniques of facial cues;

- Facial features, facial action coding system, and action units;

- Facial deception classification approaches;

- Evaluation datasets.

In the following sections, the findings resulting from the application of the SLR process are described.

4. Results of the SLR

As depicted in Figure 2, during the identification phase (performed in May 2023), a total of 230 articles were retrieved using the two search engines: 203 from Scopus and 27 from WoS.

As required by the duplication criterion, removing duplicate records resulted in 120 studies. By applying the understandability criterion, 6 studies that were not written in English were excluded, and therefore, a total of 114 articles were screened for the inclusion criteria. Moreover, the studies that did not satisfy the document type criterion (2 articles) and those whose titles did not contain the terms used for the search (70 studies) were removed, and therefore, a total of 42 studies remained at the end of the screening phase.
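The counts reported for the identification and screening phases can be checked with simple arithmetic. The snippet below only restates the figures given in the text; the variable names are illustrative.

```python
# Identification phase: records retrieved per database
retrieved = {"Scopus": 203, "WoS": 27}
identified = sum(retrieved.values())

# Duplication criterion: 120 records remain after removing duplicates
after_duplicates = 120
duplicates_removed = identified - after_duplicates

# Understandability criterion: 6 studies not written in English
not_english = 6
screened = after_duplicates - not_english

# Document type criterion (2) and search terms missing from the title (70)
wrong_document_type = 2
search_terms_not_in_title = 70
after_screening = screened - wrong_document_type - search_terms_not_in_title

print(identified, duplicates_removed, screened, after_screening)
# prints: 230 110 114 42
```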

Four studies that were not accessible in full text (availability criterion) and six studies that were considered not relevant for the focus of the review after applying the relevance criterion to the abstract were removed. Therefore, a total of 32 articles were retained for a full evaluation of eligibility, which was carried out by two reviewers according to the quality evaluation checklist shown in Table 3. Eight studies with a score below 1.5 were excluded, while the remaining twenty-four studies were included in the qualitative synthesis. Table 4 provides an overview of the selected studies.

Table 4.

Studies selected for the analysis.

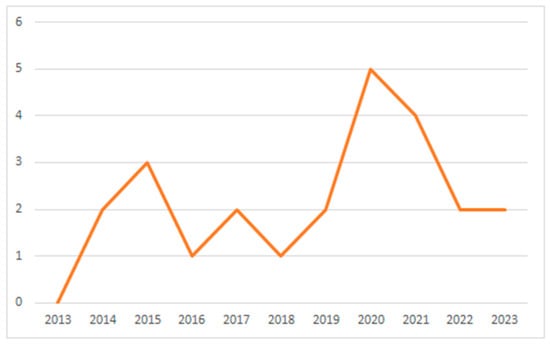

The selected studies were published mainly in conference proceedings (15 studies; 62.5%), followed by journals (9 studies; 37.5%). Figure 3 shows the temporal distribution of the selected studies, highlighting the growing interest of the scientific community in the facial-deception-detection topic, which reached a peak in 2020.

Figure 3.

Temporal distribution of the surveyed studies.

5. Discussion

This section analyses how the 24 surveyed studies answer the four review questions introduced in Section 3. Specifically, for RQ1, we analyse the methods for video pre-processing. For RQ2, the facial features are classified. For RQ3, the decision-making algorithms used for automated deception detection are analysed. Finally, for RQ4, the datasets used are analysed.

5.1. RQ1: Which Methods for Video Pre-Processing Are Used?

Pre-processing consists of those operations that prepare data for subsequent analysis in an attempt to compensate for systematic errors. The videos are subjected to several corrections, such as geometric, radiometric, and atmospheric corrections, although not all of them are necessarily applied in every case. The errors they address are systematic and can be removed before the data reach the user. The most relevant pre-processing techniques are chosen according to the nature of the information to be extracted from the videos.

Facial region detection, landmark localisation, and tracking are necessary for pre-processing when capturing videos in unconstrained recording conditions. This step has the aim of localizing and extracting the face region from the background and identifying the locations of the facial key landmark points. These points have been defined as “either the dominant points describing the unique location of a facial component (e.g., eye corner) or an interpolated point connecting those dominant points around the facial components and facial contour” [44]. Regardless of the application domain, face detection and landmark tracking from input video streams are preliminary steps necessary to process any kind of information extractable from the face.

Over the past few decades, several face-detection and landmark-tracking algorithms have been developed [33,43,45,46], as well as academic or commercial software that performs joint face detection and landmark localization [47,48,49,50,51,52,53,54].

The vast majority of these methods and tools are based on 2D image processing. The main drawback of 2D face-detection and landmark-tracking methods is that their performance depends strictly on the following four factors: (i) the pose of the head, which can generate self-occlusions and deformations; (ii) occlusions caused by masks, sunglasses, or other faces; (iii) illumination variations caused by internal camera control and skin reflectance properties; and (iv) time delay caused by face changes over time. However, these methods have the advantages of being generally easier and less expensive in terms of computational load compared to 3D face-detection methods. The latter class of methods uses a 3D dataset that contains both face and head shapes as range data or polygonal meshes [55]. The 3D methods allow the fundamental limitations of 2D methods to be overcome since they are insensitive to pose and illumination variations.

A widely accepted classification of face-detection techniques [56] distinguishes them into two categories: feature-based and image-based approaches. On the one hand, feature-based techniques make explicit use of face knowledge by extracting local or global features (e.g., eyes, nose, mouth) and use this knowledge to distinguish facial from non-facial regions. The active shape model (ASM) [43] and the Viola–Jones method [33] are two of the most applied and successful feature-based methods so far. On the other hand, image-based approaches learn to recognise a face pattern from examples, following a training step in which examples are classified into face and non-face prototype classes. The only image-based approach applied by the surveyed studies is the Constrained Local Neural Field (CLNF) [46].

There is also academic and commercial software that performs joint face-detection and landmark localization, such as OpenFace [47], FaceSDK [49], IntraFace [48], ‘dlib’ library [50], OpenCV [51], PittPatt SDK [52], InsightFace [53], and FAN [54].

A total of 18 papers among those surveyed (24) use the face-detection and landmark-tracking methods represented in Table 5.

Table 5.

Algorithms and software tools applied for face detection and landmark tracking by surveyed papers.

From the table, we can observe that during the video pre-processing step, mainly 2D methods are used (17 studies; 94%), while only two works apply 3D methods. Moreover, the majority of the studies (14 studies; 78%) apply academic and commercial software that performs face detection and landmark localization jointly. OpenFace [47] is the open-source tool most applied by the surveyed facial-deception-detection works (7 studies; 39%). The remaining four surveyed works use specific face-detection and face-tracking algorithms, mainly belonging to the feature-based category. The most applied algorithms are the Viola–Jones face detector [33] and the Constrained Local Neural Field (CLNF) [46], used for face detection and face tracking, respectively.

5.2. RQ2: Which Facial Features for Automated Deception Detection Are Extracted?

The feature-extraction process consists of transforming raw data into a set of features to be used as reliable indicators for detecting deceptive behaviours.

A multitude of facial cues has been correlated with deception in the literature, including lip pressing, eye blinking, facial rigidity, smiling, and head movement. A total of 22 papers among those surveyed (24 studies) extracted the following 10 facial features, represented in Table 6 with the respective number: (I) facial expression; (II) facial rigidity; (III) head pose; (IV) eye blink; (V) eyebrow motion; (VI) wrinkle occurrence; (VII) mouth motion; (VIII) gaze motion (IX); lip movement (X).

Table 6.

Facial features extracted by surveyed studies.

More than half of the studies (15 studies; 68%) rely on the Facial Action Coding System (FACS), an anatomically based system for representing all visually discernible facial movements proposed by Ekman et al. [42]. The FACS provides a list of action units (AUs) that are the individual components of muscle movement extracted from facial expressions. Ekman et al. [57] provided a list of AUs that are the most difficult to produce deliberately and the hardest to inhibit. Therefore, the majority of the analysed studies detect the movements of these AUs as potential indicators for distinguishing liars from truth-tellers in high-stakes situations.

A total of 18 studies (82%) rely on extracting facial expression features for detecting deceptive behaviours. Facial expressions, particularly micro-expressions (i.e., facial expressions of emotion that are expressed for 0.5 s or less), are often considered strong indicators of deception. Six basic facial expressions of emotion are defined in the literature: disgust, anger, enjoyment, fear, sadness (or distress), and surprise. In particular, the surveyed studies have detected deceptive behaviours by analysing the presence of both extreme facial movements, which can indicate high-intensity emotions that are not really being experienced, and facial rigidity (i.e., little or no facial movement), which can arise when deceivers purposefully inhibit their facial movements to appear truthful. Moreover, half of the studies (11 studies; 50%) rely on head pose, followed by gaze motion (9 studies; 41%).
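The 0.5 s bound on micro-expressions translates directly into a bound on the number of consecutive video frames. The sketch below is illustrative only: it assumes a hypothetical per-frame binary signal (for example, whether some expression detector fired on that frame) and a known frame rate, neither of which is specified by the surveyed studies, and it returns the runs of active frames short enough to count as micro-expressions.

```python
from typing import List, Tuple

MICRO_EXPRESSION_MAX_SECONDS = 0.5  # duration bound quoted in the text


def find_micro_expressions(active: List[bool], fps: float) -> List[Tuple[int, int]]:
    """Return (start_frame, end_frame_exclusive) for each run of active
    frames whose duration is at most 0.5 s."""
    runs = []
    start = None
    # A trailing False acts as a sentinel that closes a run ending on the last frame.
    for i, flag in enumerate(list(active) + [False]):
        if flag and start is None:
            start = i
        elif not flag and start is not None:
            if (i - start) / fps <= MICRO_EXPRESSION_MAX_SECONDS:
                runs.append((start, i))
            start = None
    return runs


# Hypothetical 30 fps signal: a 10-frame burst (about 0.33 s) and a 20-frame burst (about 0.67 s)
signal = [False] * 5 + [True] * 10 + [False] * 5 + [True] * 20 + [False] * 2
print(find_micro_expressions(signal, fps=30))
# prints: [(5, 15)]
```

Only the first burst is reported, because the second one lasts longer than 0.5 s at 30 frames per second.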

5.3. RQ3: Which Decision-Making Algorithms for Automated Deception Detection Are Used?

During the decision-making step, the extracted features are used to train a classification approach to automatically distinguish between deceptive and truthful videos.

Specifically, 20 of the surveyed studies apply a deception classification approach, as represented in Table 7. Most of them (19 studies; 95%) use supervised learning techniques that require labelled training data (i.e., a set of feature vectors with their corresponding labels (deceptive vs. truthful)). The most applied supervised technique is the support vector machine (SVM), which is applied in seven studies (35%), followed by Random Forest (six studies; 30%), neural networks (five studies; 25%), and k-nearest neighbours (four studies; 20%). Various types of regression models have also been tested by the surveyed papers on facial deception detection, including multinomial logistic and multivariate regression. Only one study relies on unsupervised methods that do not need manually annotated facial attributes to train the model. Specifically, it applies hidden Markov models [42].
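To make the supervised setting concrete, the following is a minimal sketch of a k-nearest-neighbour classifier, one of the techniques listed above, written with the Python standard library only. The two-dimensional feature vectors and their labels are invented for illustration and do not come from any surveyed dataset; the surveyed studies use their own features and implementations.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]  # (feature vector, label)


def knn_predict(train: List[Sample], query: Sequence[float], k: int = 3) -> str:
    """Label `query` by majority vote among its k nearest training samples
    (Euclidean distance)."""
    by_distance = sorted(train, key=lambda sample: math.dist(sample[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


# Hypothetical labelled training data: feature vectors with their labels
train = [
    ([0.10, 0.20], "truthful"),
    ([0.20, 0.10], "truthful"),
    ([0.15, 0.15], "truthful"),
    ([0.90, 0.80], "deceptive"),
    ([0.80, 0.90], "deceptive"),
    ([0.85, 0.85], "deceptive"),
]

print(knn_predict(train, [0.12, 0.18]))
print(knn_predict(train, [0.88, 0.82]))
```

The same labelled feature vectors could be passed to any other supervised classifier; only the decision rule changes.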

Table 7.

Deception classification approaches applied by surveyed papers on facial deception detection.

To evaluate the deception classification approaches shown in Table 7, different metrics have been proposed in the reviewed literature, as listed below:

- Area under the curve (AUC): it measures the probability that the model ranks a random positive example more highly than a random negative example;

- Accuracy (ACC): it measures the proportion of correct classification decisions (i.e., the number of true positives plus true negatives divided by the total number of examples);

- Precision: it is defined as the number of true positives divided by the number of true positives plus the number of false positives;

- Recall: it is defined as the number of true positives divided by the number of true positives plus the number of false negatives;

- F1 score: it is the harmonic mean of precision and recall.
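These metrics can be computed from the ground-truth labels and the classifier outputs using their standard definitions. The sketch below uses only the Python standard library and assumes that label 1 denotes the positive (deceptive) class and label 0 the negative (truthful) class; the function names and the example data are illustrative. The AUC is computed directly from its ranking interpretation, counting ties as one half.

```python
from typing import Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (TP, FP, TN, FN), treating label 1 as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, fp, tn, fn


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    total = tp + fp + tn + fn
    return (tp + tn) / total if total else 0.0


def precision(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    tp, fp, _, _ = confusion_counts(y_true, y_pred)
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    tp, _, _, fn = confusion_counts(y_true, y_pred)
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    p, r = precision(y_true, y_pred), recall(y_true, y_pred)
    return 2 * p * r / (p + r) if (p + r) else 0.0


def auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Fraction of (positive, negative) pairs in which the positive example
    receives the higher score; ties count as one half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        return 0.0
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical outputs for eight video clips
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
scores = [0.9, 0.8, 0.4, 0.3, 0.2, 0.6, 0.7, 0.1]
print(accuracy(y_true, y_pred), precision(y_true, y_pred), recall(y_true, y_pred))
print(f1_score(y_true, y_pred), auc(y_true, scores))
```

In this toy example there are three true positives, one false positive, three true negatives, and one false negative, so accuracy, precision, recall, and F1 score are all 0.75, while 15 of the 16 positive/negative pairs are ranked correctly (AUC = 0.9375).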

Table 8 provides a summary of the extracted metrics. Not all the surveyed studies are included in the table because only some of them evaluated their methods. Note that this table does not provide a comparative analysis because the studies rely on different databases to validate the approaches.

Table 8.

Performance measures obtained by deception classification approaches applied by surveyed papers.

5.4. RQ4: Which Datasets for Automated Deception Detection Are Used?

An important step for a systematic evaluation of the performance of the deception-detection method is the preparation and use of well-defined evaluation datasets [58].

Table 9 summarises the datasets used by the selected studies and their main characteristics, i.e., the size, the source used to acquire the data, and the availability. The most popular dataset (seven studies; 33%) is the real-life trial dataset [59], which is available upon request. This dataset consists of 121 videos (average length of 28.0 s) collected from public court trials, including 61 deceptive and 60 truthful trial clips. The remaining studies (14 studies; 67%) developed their own dataset to evaluate the deception-detection method mainly by recording videos from game/TV shows (5 studies; 24%), followed by the use of spontaneous (4 studies; 19%) or controlled (4 studies; 19%) interviews, and by the use of storytelling (2 studies; 10%) or the download of videos from YouTube (2 studies; 10%). The largest dataset is Abd et al.’s database [18], containing 888 video clips from 102 participants. Considering the availability, the majority of the studies collected datasets without making them available (11 studies; 52%), while 7 studies (33%) made datasets available on request. Only three studies (14%) published the collected dataset.

Table 9.

Datasets used by the selected studies.

6. Future Research Directions

The development of facial-deception-detection methods brings many opportunities for future research. Table 10 summarises the future research directions extracted from the surveyed studies and classifies them according to the aim of the future research. Note that only the studies that provided a future work discussion have been included in the table (14 studies; 58%).

Table 10.

Future research directions envisaged by the selected studies.

The most frequently mentioned directions are the need to extend the current datasets for the generalization of the results (five studies; 36%) and the use of a multimodal approach (five studies; 36%) that combines different types of features (speech, video, text, hand gestures, head movements, etc.). In more detail, according to these studies, evaluating the accuracy of the detection algorithms over a larger database supports the generalization of results and an increase in the performance of the algorithms, while the integration of several modalities is expected to yield higher accuracy.

Relevance is also given to the complexity reduction (four studies; 29%) by exploring innovative ways of combining and classifying the features for real-time deception detection.

Furthermore, the surveyed studies also suggest the development of further methods and the use of larger samples (three studies; 21% each). In more detail, the analysis revealed the need to develop more accurate methods of action unit detection, more complex classifiers to model conversational context and time-dependent features, and affect-aware systems. Regarding the use of larger samples, particular attention is given to an equal representation by gender and race and to generalization to other scenarios.

Finally, it is also important to mention the future research directions looking for further psychological analysis, transferability, and increase in the extracted features (two studies; 14% each), as well as the explainability of the algorithms, model validation, and use of 3D deformable models (one study; 7% each).

7. Conclusions

This study provided a systematic literature analysis on facial deception detection in videos. The main contribution was a discussion of the methods applied in the literature of the last decade classified according to the main steps of the facial-deception-detection process (video pre-processing, facial feature extraction, and decision making). Moreover, datasets used for the evaluation and future research directions have also been analysed.

The initial search on WoS and Scopus databases returned 230 studies, of which 38 studies were retained after the application of the inclusion and exclusion criteria defined in the methodology. Finally, 24 studies were included in the qualitative synthesis according to the scores obtained in the quality evaluation checklist. The analysis revealed that in the last ten years, the interest of the scientific community in the topic of facial deception detection began growing in 2014 and reached a peak in 2020.

Considering the methods for video pre-processing, we observed that the majority of the surveyed studies use academic and commercial software for face detection and landmark tracking. Moreover, the most applied and successful feature-based methods are the active shape model [43] and the Viola–Jones method [33], while the Constrained Local Neural Field (CLNF) [46] is the only image-based approach used.

Concerning the extracted facial features, the Facial Action Coding System (FACS) is the most used system to represent all visually discernible facial movements, applied by 68% of the studies. Moreover, 82% of the studies rely on extracting facial expression features, followed by head pose (50% of the studies) and gaze motion (41%).

Concerning the decision-making algorithms, 95% of the studies use supervised learning techniques, mainly the support vector machine (SVM) (35% of the studies), followed by random forest (30%), neural networks (25%), and k-nearest neighbours (20%).

Furthermore, the real-life trial dataset [59] is the most popular dataset used for the evaluation of the performance of the decision-making algorithms (33% of the studies), while the remaining studies (67%) developed their own dataset mainly by recording videos from game/TV shows (24%), using spontaneous (19%) or controlled (19%) interviews, and using storytelling (10%).

Finally, the surveyed studies highlight the following future research directions: (i) extension of the current datasets for evaluating the detection algorithms; (ii) use of a multimodal approach that combines different types of features (facial, speech, video, text, hand gestures, etc.); (iii) reduction in the complexity of the detection process for real-time use; (iv) use of larger samples for an equal representation by gender and race; and (v) development of further more accurate and affect-aware methods.

The study has some limitations mainly related to the focus on facial cues from videos. Future studies could also include analysis of explicit cues (verbal communication) from face-to-face interactions. Indeed, multimodal analysis that combines both verbal and non-verbal cues is the most valuable approach for detecting deception [15].

Author Contributions

Conceptualization, A.D. (Alessia D’Andrea), A.D. (Arianna D’Ulizia), P.G. and F.F.; Methodology, A.D. (Alessia D’Andrea), A.D. (Arianna D’Ulizia), and P.G.; Validation, A.D. (Alessia D’Andrea) and A.D. (Arianna D’Ulizia); Formal analysis, A.D. (Alessia D’Andrea) and A.D. (Arianna D’Ulizia); Investigation, A.D. (Alessia D’Andrea), A.D. (Arianna D’Ulizia), and P.G.; Data curation, A.D. (Alessia D’Andrea), A.D. (Arianna D’Ulizia), and F.F.; Writing—original draft preparation, A.D. (Alessia D’Andrea) and A.D. (Arianna D’Ulizia); Writing—review and editing, A.D. (Alessia D’Andrea) and A.D. (Arianna D’Ulizia); Supervision, A.D. (Arianna D’Ulizia). All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Jakubowska, J.; Białecka-Pikul, M. A new model of the development of deception: Disentangling the role of false-belief understanding in deceptive ability. Soc. Dev. 2020, 29, 21–40.

- Feng, Y.; Hung, S.; Hsieh, P. Detecting spontaneous deception in the brain. Hum. Brain Mapp. 2022, 43, 3257–3269.

- Bond, C.F., Jr.; DePaulo, B.M. Accuracy of deception judgments. Personal. Soc. Psychol. Rev. 2006, 10, 214–234.

- Hartwig, M.; Voss, J.A.; Brimbal, L.; Wallace, D.B. Investment Professionals’ Ability to Detect Deception: Accuracy, Bias and Metacognitive Realism. J. Behav. Financ. 2017, 18, 1–13.

- Zuckerman, M.; DePaulo, B.M.; Rosenthal, R. Verbal and Nonverbal Communication of Deception. In Advances in Experimental Social Psychology; Academic Press Inc.: Cambridge, MA, USA, 1981.

- Viglione, D.J.; Giromini, L.; Landis, P. The Development of the Inventory of Problems: A Brief Self-Administered Measure for Discriminating Bona Fide From Feigned Psychiatric and Cognitive Complaints. J. Pers. Assess. 2016, 99, 534–544.

- Vance, N.; Speth, J.; Khan, S.; Czajka, A.; Bowyer, K.W.; Wright, D.; Flynn, P. Deception Detection and Remote Physiological Monitoring: A Dataset and Baseline Experimental Results. IEEE Trans. Biom. Behav. Identity Sci. 2022, 4, 522–532.

- Randhavane, T.; Bhattacharya, U.; Kapsaskis, K.; Gray, K.; Bera, A.; Manocha, D. The liar’s walk: Detecting deception with gait and gesture. arXiv 2019, arXiv:1912.06874.

- Malik, J.S.; Pang, G.; Hengel, A.V.D. Deep learning for hate speech detection: A comparative study. arXiv 2022, arXiv:2202.09517.

- Ogale, N.A. A Survey of Techniques for Human Detection from Video; University of Maryland: College Park, MD, USA, 2006; Volume 125, p. 19.

- Ekman, P. Darwin, deception, and facial expression. Ann. N. Y. Acad. Sci. 2003, 1000, 205–221.

- Matsumoto, D.; Hwang, H.C. Microexpressions Differentiate Truths from Lies About Future Malicious Intent. Front. Psychol. 2018, 9, 2545.

- Darwin, C. The Expression of the Emotions in Man and Animals; J. Murray: London, UK, 1872.

- Ding, M.; Zhao, A.; Lu, Z.; Xiang, T.; Wen, J.R. Face-focused cross-stream network for deception detection in videos. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Constâncio, A.S.; Tsunoda, D.F.; Silva, H.d.F.N.; da Silveira, J.M.; Carvalho, D.R. Deception detection with machine learning: A systematic review and statistical analysis. PLoS ONE 2023, 18, e0281323.

- Alaskar, H.; Sbaï, Z.; Khan, W.; Hussain, A.; Alrawais, A. Intelligent techniques for deception detection: A survey and critical study. Soft Comput. 2023, 27, 3581–3600.

- Herchonvicz, A.L.; de Santiago, R. Deep Neural Network Architectures for Speech Deception Detection: A Brief Survey. In Progress in Artificial Intelligence, Proceedings of the 20th EPIA Conference on Artificial Intelligence, EPIA 2021, Virtual Event, 7–9 September 2021; Springer International Publishing: Cham, Switzerland, 2021; pp. 301–312.

- Thannoon, H.H.; Ali, W.H.; Hashim, I.A. Detection of Deception Using Facial Expressions Based on Different Classification Algorithms. In Proceedings of the Third Scientific Conference of Electrical Engineering (SCEE), IEEE, Baghdad, Iraq, 19–20 December 2019; pp. 51–56.

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Med. 2009, 6, e1000097.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Pranckutė, R. Web of Science (WoS) and Scopus: The Titans of Bibliographic Information in Today’s Academic World. Publications 2021, 9, 12.

- Paez, A. Grey literature: An important resource in systematic reviews. J. Evid. Based Med. 2017, 10, 233–240.

- Chebbi, S.; Jebara, S.B. An Audio-Visual based Feature Level Fusion Approach Applied to Deception Detection. In Proceedings of the 15th International Conference on Computer Vision Theory and Applications (4: VISAPP), Valletta, Malta, 27–29 February 2020; pp. 197–205.

- Abbas, Z.K.; Al-Ani, A.A. An adaptive algorithm based on principal component analysis-deep learning for anomalous events detection. Indones. J. Electr. Eng. Comput. Sci. 2023, 29, 421–430.

- Shen, X.; Fan, G.; Niu, C.; Chen, Z. Catching a Liar Through Facial Expression of Fear. Front. Psychol. 2021, 12, 675097.

- Gadea, M.; Aliño, M.; Espert, R.; Salvador, A. Deceit and facial expression in children: The enabling role of the “poker face” child and the dependent personality of the detector. Front. Psychol. 2015, 6, 1089.

- Monaro, M.; Maldera, S.; Scarpazza, C.; Sartori, G.; Navarin, N. Detecting deception through facial expressions in a dataset of videotaped interviews: A comparison between human judges and machine learning models. Comput. Hum. Behav. 2022, 127, 107063.

- Su, L.; Levine, M. Does “lie to me” lie to you? An evaluation of facial clues to high-stakes deception. Comput. Vis. Image Underst. 2016, 147, 52–68.

- Abd, S.H.; Hashim, I.A.; Jalal, A.S.A. Hardware implementation of deception detection system classifier. Period. Eng. Nat. Sci. PEN 2021, 10, 151–163.

- Su, L.; Levine, M.D. High-stakes deception detection based on facial expressions. In Proceedings of the 2014 22nd International Conference on Pattern Recognition, IEEE, Stockholm, Sweden, 24–28 August 2014; pp. 2519–2524.

- Ngo, L.M.; Wang, W.; Mandira, B.; Karaoglu, S.; Bouma, H.; Dibeklioglu, H.; Gevers, T. Identity Unbiased Deception Detection by 2D-to-3D Face Reconstruction. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 145–154.

- Mathur, L.; Matarić, M.J. Introducing Representations of Facial Affect in Automated Multimodal Deception Detection. In Proceedings of the 2020 International Conference on Multimodal Interaction, Virtual, 25–29 October 2020.

- Yu, X.; Zhang, S.; Yan, Z.; Yang, F.; Huang, J.; Dunbar, N.E.; Jensen, M.L.; Burgoon, J.K.; Metaxas, D.N. Is Interactional Dissynchrony a Clue to Deception? Insights from Automated Analysis of Nonverbal Visual Cues. IEEE Trans. Cybern. 2014, 45, 492–506.

- Zhang, J.; Levitan, S.I.; Hirschberg, J. Multimodal Deception Detection Using Automatically Extracted Acoustic, Visual, and Lexical Features. In Proceedings of Interspeech, Shanghai, China, 25–29 October 2020; pp. 359–363.

- Belavadi, V.; Zhou, Y.; Bakdash, J.Z.; Kantarcioglu, M.; Krawczyk, D.C.; Nguyen, L.; Rakic, J.; Thuriasingham, B. MultiModal Deception Detection: Accuracy, Applicability and Generalizability. In Proceedings of the 2020 Second IEEE International Conference on Trust, Privacy and Security in Intelligent Systems and Applications (TPS-ISA), IEEE, Atlanta, GA, USA, 28–31 October 2020; pp. 99–106.

- Feinland, J.; Barkovitch, J.; Lee, D.; Kaforey, A.; Ciftci, U.A. Poker Bluff Detection Dataset Based on Facial Analysis. In Image Analysis and Processing–ICIAP 2022: 21st International Conference, Lecce, Italy, May 23–27, 2022, Proceedings, Part III; Springer International Publishing: Cham, Switzerland, 2022; pp. 400–410.

- Sen, T.K. Temporal patterns of facial expression in deceptive and honest communication. In Proceedings of the 2017 Seventh International Conference on Affective Computing and Intelligent Interaction (ACII), IEEE, San Antonio, TX, USA, 23–26 October 2017; pp. 616–620.

- Yildirim, S.; Chimeumanu, M.S.; Rana, Z.A. The influence of micro-expressions on deception detection. Multimed. Tools Appl. 2023, 82, 29115–29133.

- Mathur, L.; Matarić, M.J. Unsupervised audio-visual subspace alignment for high-stakes deception detection. In Proceedings of the ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), IEEE, Toronto, ON, Canada, 6–11 June 2021; pp. 2255–2259.

- Pérez-Rosas, V.; Abouelenien, M.; Mihalcea, R.; Xiao, Y.; Linton, C.J.; Burzo, M. Verbal and nonverbal clues for real-life deception detection. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 2336–2346.

- Venkatesh, S.; Ramachandra, R.; Bours, P. Video based deception detection using deep recurrent convolutional neural network. In Computer Vision and Image Processing, Proceedings of the 4th International Conference, CVIP 2019, Jaipur, India, 27–29 September 2019; Revised Selected Papers, Part II 4; Springer: Singapore, 2020; pp. 163–169.

- Demyanov, S.; Bailey, J.; Ramamohanarao, K.; Leckie, C. Detection of deception in the mafia party game. In Proceedings of the 2015 ACM on International Conference on Multimodal Interaction, ACM, Seattle, WA, USA, 9–13 November 2015; pp. 335–342.

- Pentland, S.J.; Twyman, N.W.; Burgoon, J.K.; Nunamaker, J.F., Jr.; Diller, C.B. A video-based screening system for automated risk assessment using nuanced facial features. J. Manag. Inf. Syst. 2017, 34, 970–993.

- Carissimi, N.; Beyan, C.; Murino, V. A multi-view learning approach to deception detection. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition, IEEE, Xi’an, China, 15–19 May 2018; pp. 599–606.

- Kazemi, V.; Sullivan, J. One millisecond face alignment with an ensemble of regression trees. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1867–1874.

- Lu, S.; Tsechpenakis, G.; Metaxas, D.; Jensen, M.L.; Kruse, J. Blob analysis of the head and hands: A method for deception detection and emotional state identification. In Proceedings of the 38th Annual Hawaii International Conference on System Sciences, Big Island, HI, USA, 6 January 2005.

- Michael, N.; Dilsizian, M.; Metaxas, D.; Burgoon, J.K. Motion profiles for deception detection using visual cues. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2010; pp. 462–475.

- Ekman, P.; Friesen, W.V.; Hager, J.C. Facial Action Coding System: The Manual on CD-ROM. Instructor’s Guide; Network Information Research Co.: Salt Lake City, UT, USA, 2002.

- Kanaujia, A.; Huang, Y.; Metaxas, D. Tracking facial features using mixture of point distribution models. In Computer Vision, Graphics and Image Processing; Springer: Berlin/Heidelberg, Germany, 2006; pp. 492–503.

- Wu, Y.; Ji, Q. Facial Landmark Detection: A Literature Survey. Int. J. Comput. Vis. 2019, 127, 115–142.

- Viola, P.; Jones, M. Robust real-time object detection. Int. J. Comput. Vis. 2001, 4, 4.

- Nechyba, M.C.; Brandy, L.; Schneiderman, H. PittPatt Face Detection and Tracking for the CLEAR 2007 Evaluation. In Multimodal Technologies for Perception of Humans. RT CLEAR 2007; Lecture Notes in Computer Science; Stiefelhagen, R., Bowers, R., Fiscus, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; Volume 4625.

- Guo, J.; Deng, J.; An, X.; Yu, J. Insightface. Available online: https://github.com/deepinsight/insightface (accessed on 21 August 2020).

- Bulat, A.; Tzimiropoulos, G. How far are we from solving the 2D & 3D face alignment problem? (and a dataset of 230,000 3d facial landmarks). In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Amos, B.; Ludwiczuk, B.; Satyanarayanan, M. Openface: A general-purpose face recognition library with mobile applications. CMU Sch. Comput. Sci. 2016, 6, 20.

- De la Torre, F.; Chu, W.S.; Xiong, X.; Vicente, F.; Ding, X.; Cohn, J. IntraFace. In Proceedings of the IEEE International Conference on Automatic Face & Gesture Recognition and Workshops, Ljubljana, Slovenia, 4–8 May 2015; Volume 1.

- Ekman, P.; Friesen, W.V.; Hager, J.C. The Facial Action Coding System: A Technique for the Measurement of Facial Movement; Consulting Psychologists Press: San Francisco, CA, USA, 2002.

- D’Ulizia, A.; Caschera, M.C.; Ferri, F.; Grifoni, P. Fake news detection: A survey of evaluation datasets. PeerJ Comput. Sci. 2021, 7, e518.

- Pérez-Rosas, V.; Abouelenien, M.; Mihalcea, R.; Burzo, M. Deception detection using real-life trial data. In Proceedings of the 2015 ACM on International Conference on Multimodal Interaction, ACM, Seattle, WA, USA, 9–13 November 2015; pp. 59–66.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).