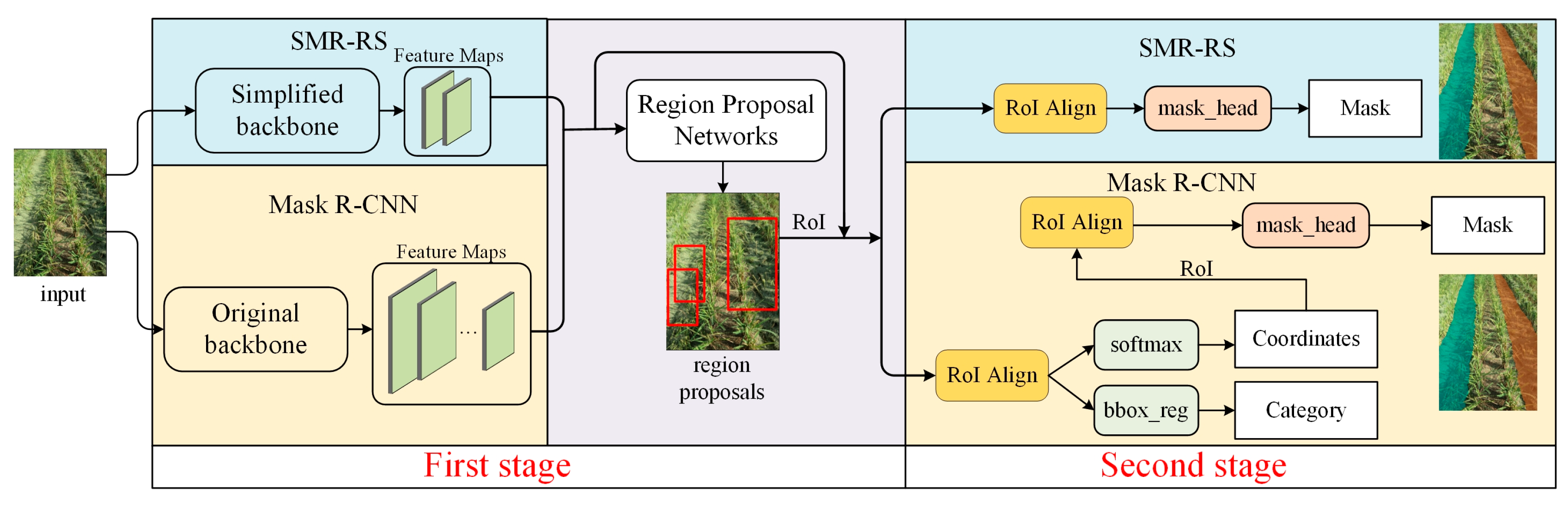

3.3. Optimization Experiments for SMR

The segmentation performance of the original SMR on the test set is shown in Table 2. Compared with Mask R-CNN, SMR has significantly fewer parameters (29.8 M versus 43.7 M, a reduction of 31.8%), consumes 6.3% less GPU memory, predicts 6.2% faster, and reaches a slightly smaller training loss (Figure 5). However, the quality of its segmentation masks was significantly lower. To solve this problem, a series of optimization experiments was carried out on SMR, after which its segmentation performance even surpassed that of Mask R-CNN. This optimization process is described below.

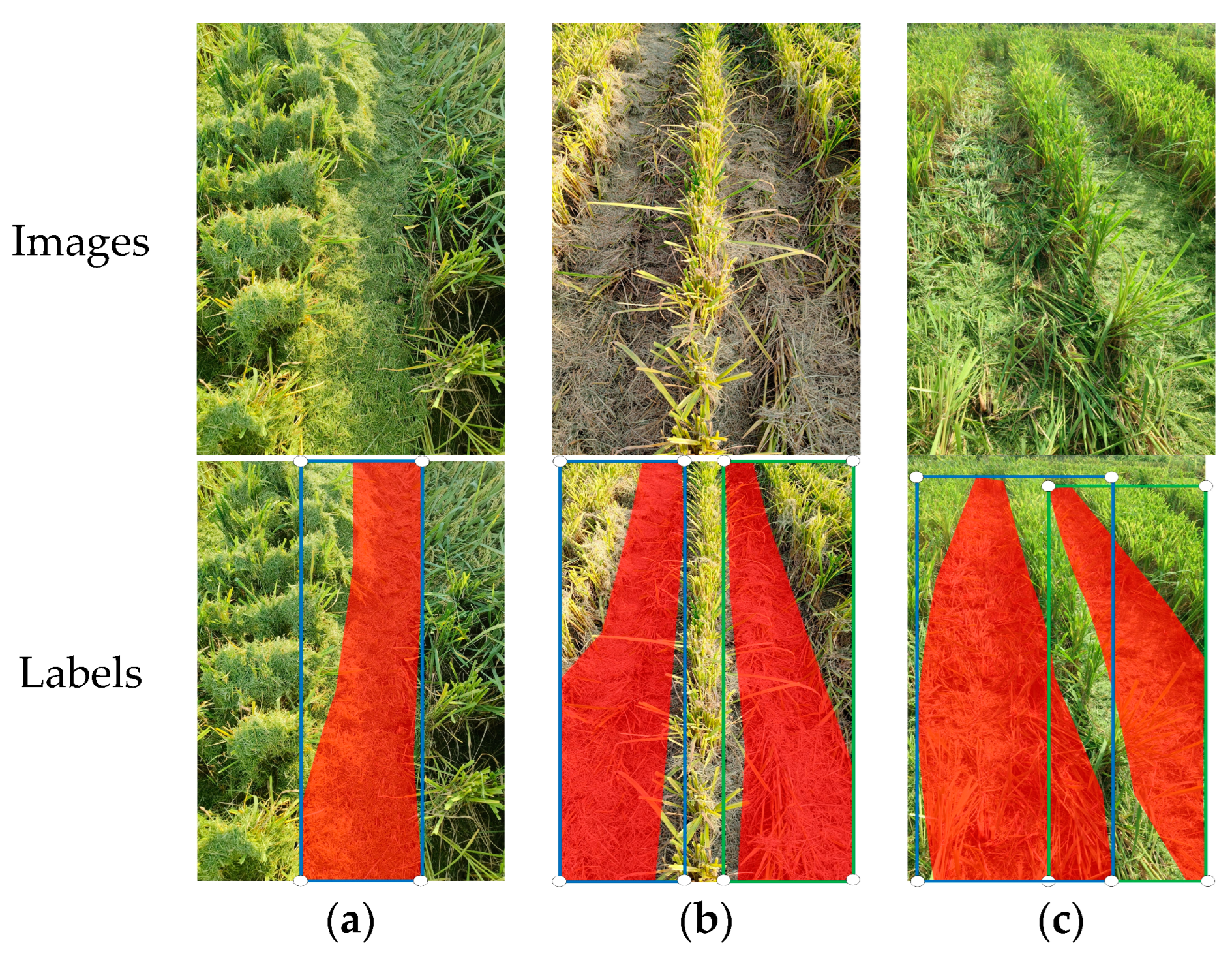

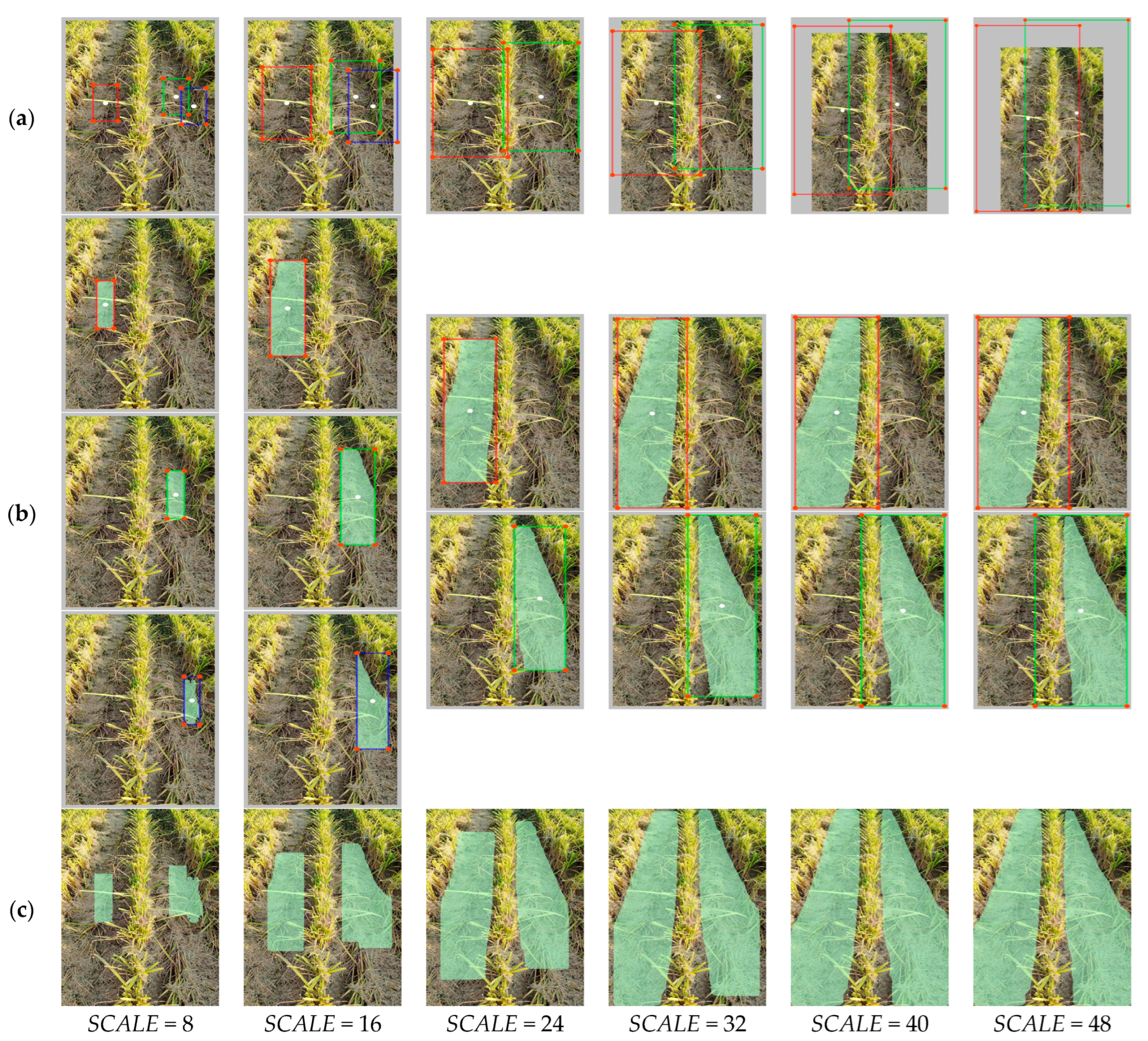

To explain why the original SMR segments poorly, the region proposals predicted on the test set are shown in Figure 6; each image shows the top 100 region proposals (before NMS). Although the region proposals were located on the rows of rolled rice stubble, they were so small that each covered only a small part of a row.

According to Section 2.2.2, the size of the region proposals can be changed by modifying the hyperparameters SCALE and RATIO. In the original SMR, the values of SCALE and RATIO are the same as in Mask R-CNN, namely SCALE = 8 and RATIO = {0.5, 1, 2} [47]. On this basis, during inference the SCALE value of SMR was increased from 8 to 16, 24, 32, 40, and 48, while RATIO was simultaneously scaled by a factor of 0.2, 1, or 5. The IoU performance of SMR and Mask R-CNN under these simultaneous changes of SCALE and RATIO is shown in Figure 7.
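The effect of SCALE and RATIO on anchor size can be illustrated with a minimal sketch. It assumes the common convention that an anchor's side length is SCALE × STRIDE, that RATIO is the height-to-width ratio, and that the area is kept constant across ratios; the stride values, the `anchor_sizes` helper, and the `ratio_factor` argument are hypothetical names introduced here, not taken from the paper or its Table A1.

```python
import math

# Assumed FPN strides for feature maps P2..P6 (typical values, not confirmed by the paper).
STRIDES = {"P2": 4, "P3": 8, "P4": 16, "P5": 32, "P6": 64}


def anchor_sizes(stride, scale, ratios=(0.5, 1.0, 2.0), ratio_factor=1.0):
    """Return a list of (height, width) pairs, one per aspect ratio.

    Assumption: ratio = height / width, and every anchor at this level
    keeps the same area, (scale * stride) ** 2.
    """
    side = scale * stride
    sizes = []
    for ratio in ratios:
        r = ratio * ratio_factor  # one reading of "RATIO varied by a factor"
        sizes.append((side * math.sqrt(r), side / math.sqrt(r)))
    return sizes


if __name__ == "__main__":
    # Sweep the SCALE values used in the inference experiment on one level.
    for scale in (8, 16, 24, 32, 40, 48):
        print(scale, anchor_sizes(STRIDES["P5"], scale))
```

Under these assumptions, doubling SCALE doubles each anchor side at every feature level, while changing RATIO only redistributes a fixed area between height and width.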

As Figure 7 shows, for Mask R-CNN, changes in SCALE and RATIO barely improve the IoU and can even reduce it. For SMR, however, the IoU gradually increased as SCALE increased from 8 to 32, and did not increase significantly as SCALE continued to increase from 32 to 48. In contrast, RATIO has a weaker effect on the IoU, but a pattern is still visible: under every SCALE condition, the IoU is highest at 1.0×, i.e., at the original RATIO. The mask performance at SCALE = 40 and RATIO = {0.5, 1, 2} is shown in row SMR-40 of Table 2, which indicates that the mask quality exceeds that of Mask R-CNN. These results indicate that the original SMR produced poor segmentation masks because SCALE was too small: using a larger value for prediction effectively improves the mask quality, whereas changing RATIO away from its original value decreases it.
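For readers who want to reproduce this kind of comparison, the following is a minimal sketch of how IoU and F1 could be computed for a pair of binary masks. It assumes pixel-wise definitions of both metrics; the exact evaluation protocol used in the paper is not stated in this section, and `mask_iou_f1` is a hypothetical helper name.

```python
def mask_iou_f1(pred, target):
    """Pixel-wise IoU and F1 for two binary masks given as nested lists.

    Assumption: both masks have the same shape and truthy entries
    mark foreground (rolled rice stubble row) pixels.
    """
    tp = fp = fn = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                tp += 1  # foreground in both masks
            elif p:
                fp += 1  # predicted foreground only
            elif t:
                fn += 1  # ground-truth foreground only
    union = tp + fp + fn
    if union == 0:
        # Both masks are empty; treat them as a perfect match.
        return 1.0, 1.0
    iou = tp / union
    f1 = 2 * tp / (2 * tp + fp + fn)
    return iou, f1
```

A prediction that covers only part of a row, as with the small proposals at SCALE = 8, yields many false negatives and therefore a low IoU even when every predicted pixel is correct.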

To investigate the performance of SMR under different training values of SCALE, SMR was trained with SCALE = 16 and SCALE = 24 (SCALE was not increased further because the model then converges slowly during training), and the SCALE value was then adjusted during prediction to optimize the mask quality. The results are shown in Table 3. Increasing SCALE during training improves the initial mask quality, but the optimal mask quality still has to be reached by adjusting SCALE during prediction, and there is no significant relationship between the optimal mask quality and the SCALE value used in training.

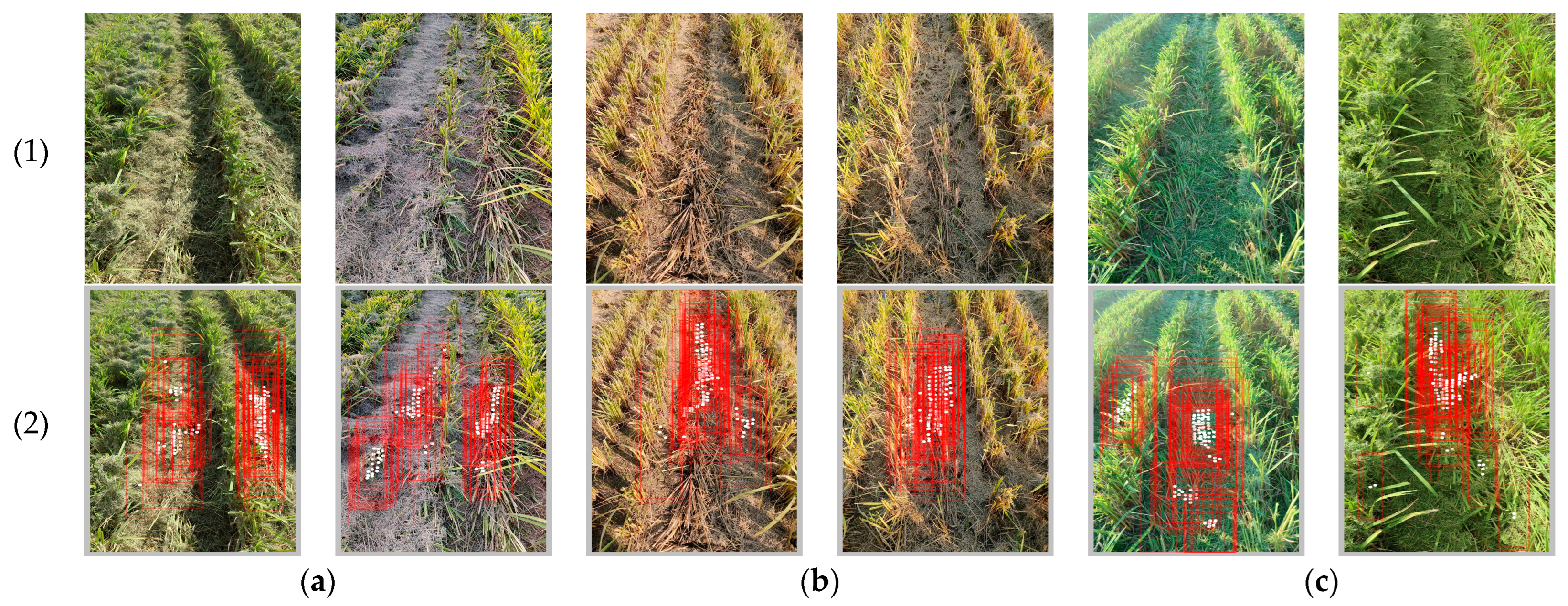

To explain visually how variation in SCALE affects the predicted mask, the anchors, region proposals, and masks of SMR during prediction were tracked under different SCALE values; one representative result is shown in Figure 8. For SCALE = 8, 16, 24, 32, and 40, the model found the exact location of the stubble rows and produced at least one region proposal centered on each row. The difference is that, for SCALE = 8, the anchors were small and the resulting region proposals were similarly small, so they covered only a small portion of the rolled stubble rows and the mask therefore covered only a small portion as well. As SCALE increases, the anchors grow, and so do the region proposals. For a region proposal whose center point lies on a stubble row, a larger size means that more of the stubble row is covered, so the mask generated in that proposal is more complete; at SCALE = 32, the mask covered almost the complete rolled rice row. As SCALE continued to increase to 40 and 48, the anchors continued to grow while the region proposals did not change significantly, so the masks did not change noticeably either.

The region proposals grow with the anchors for the following reason: according to Table A1, the anchor size increases with SCALE, and from Equation (1), the larger the anchors, the larger the region proposals. When SCALE increases from 32 to 40 and 48 in Figure 8, the size of the region proposals does not change significantly because the parts of the region proposals that fall outside the image are cropped.
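The saturation caused by cropping can be illustrated with a small sketch. The image size (1024 × 1024), the stride of 32, and the box center are hypothetical values chosen only for illustration, and the sketch ignores the box regression of Equation (1) by treating each proposal as a square of side SCALE × STRIDE; `centered_box` and `clip_box` are names introduced here.

```python
def centered_box(cx, cy, side):
    """Square box (x1, y1, x2, y2) of the given side centered at (cx, cy)."""
    half = side / 2
    return (cx - half, cy - half, cx + half, cy + half)


def clip_box(box, img_h, img_w):
    """Crop a box to the image bounds, as is done for region proposals."""
    x1, y1, x2, y2 = box
    return (
        max(0.0, x1),
        max(0.0, y1),
        min(float(img_w), x2),
        min(float(img_h), y2),
    )


def clipped_widths(scales, stride=32, img_size=1024):
    """Width of a clipped, image-centered box for each SCALE value."""
    center = img_size / 2
    widths = []
    for scale in scales:
        box = clip_box(centered_box(center, center, scale * stride), img_size, img_size)
        widths.append(box[2] - box[0])
    return widths


if __name__ == "__main__":
    print(clipped_widths((8, 16, 24, 32, 40, 48)))
```

With these hypothetical numbers, the clipped width grows with SCALE until the box reaches the image border and then stays constant, which mirrors the behaviour described for SCALE = 32, 40, and 48.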

3.4. SMR Backbone Network Simplification Experiment

The performance of SMR-RS is shown in Table 4. Compared with SMR-40, which was obtained by optimizing SMR, the total number of parameters is reduced by 7.4%, the prediction speed is increased by 37.5%, the GPU memory occupation is reduced by 32.1%, the IoU is increased by 1.5%, and the F1 score is increased by 0.6%. To arrive at this design, the following paragraphs present statistics on how SMR uses its feature maps, which served as the basis for targeted pruning of the backbone network.

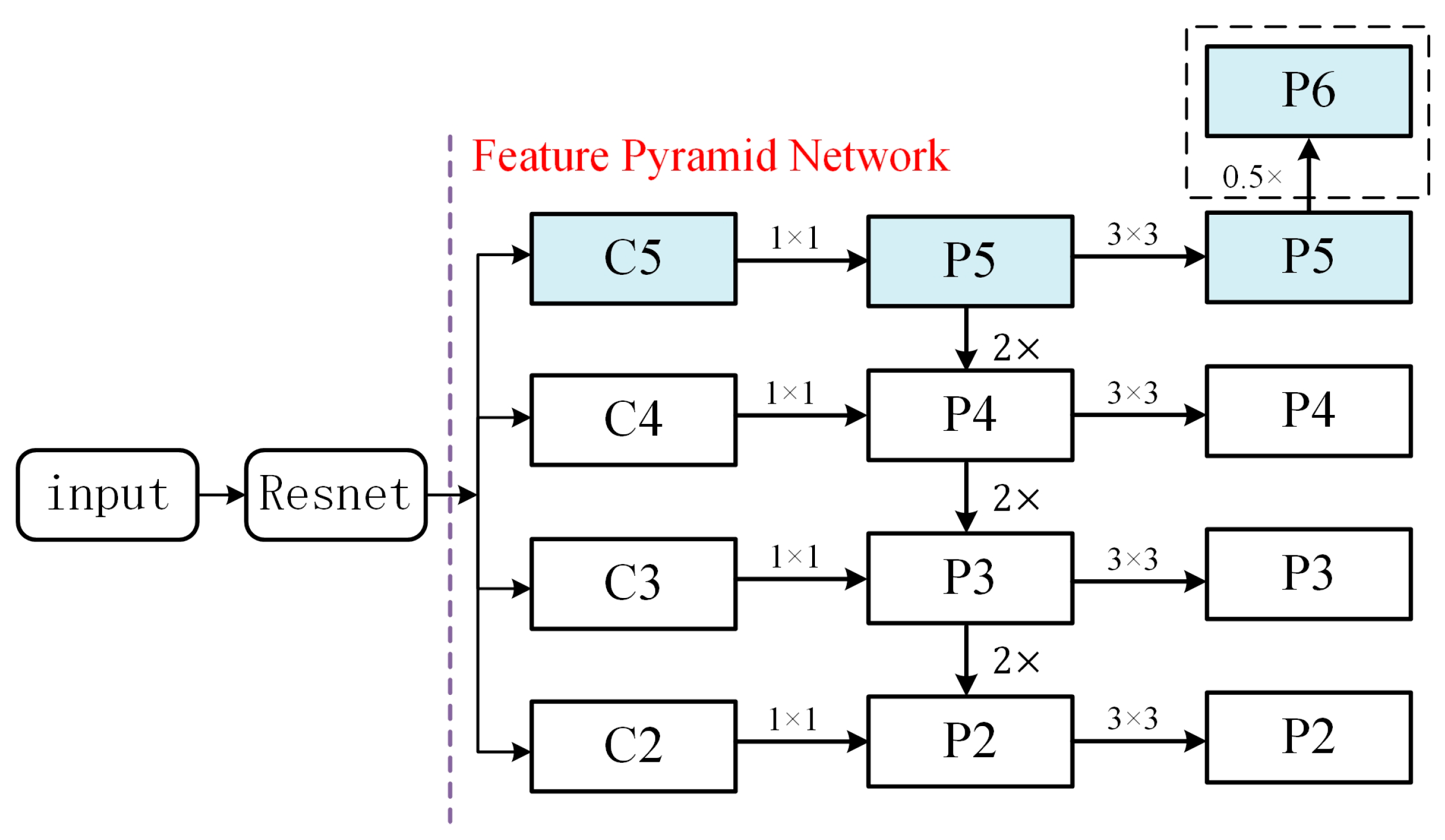

When SMR was used to predict the test set, most of the high-scoring anchors were found to have similar sizes. Since all anchors generated from the same feature map have the same size, it was hypothesized that most of the high-scoring anchors come from the same feature map. The feature map to which an anchor belongs can be inferred from Table A1 based on the anchor size. For example, if the area H × W of one of the highest-scoring anchors is 4096 and the hyperparameter SCALE is 8, then Table A1 gives a STRIDE of 8, and by the correspondence between STRIDE and feature map, the anchor belongs to feature map P3. On this basis, the sizes of the anchors with the top 100 scores predicted on the test set by SMR (trained at three different SCALE values) were counted and each anchor was attributed to its feature map; the results are shown in Table 5.
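The attribution step in the example above can be sketched as follows. The sketch assumes that the anchor area equals (SCALE × STRIDE)², which is consistent with the worked example (4096 = (8 × 8)²), and that the strides 4, 8, 16, 32, and 64 correspond to P2 through P6; only the pairing of STRIDE 8 with P3 is stated in the text, so the rest of the mapping and the helper names are assumptions.

```python
import math
from collections import Counter

# Assumed STRIDE -> feature map correspondence; only 8 -> P3 is given in the text.
STRIDE_TO_LEVEL = {4: "P2", 8: "P3", 16: "P4", 32: "P5", 64: "P6"}


def feature_level(anchor_area, scale):
    """Infer the feature map of an anchor from its area H x W and SCALE."""
    stride = math.sqrt(anchor_area) / scale
    # Pick the closest known stride to tolerate small rounding differences.
    nearest = min(STRIDE_TO_LEVEL, key=lambda s: abs(s - stride))
    return STRIDE_TO_LEVEL[nearest]


def count_levels(anchor_areas, scale):
    """Count how many anchors fall on each feature map."""
    return Counter(feature_level(area, scale) for area in anchor_areas)
```

Applying `count_levels` to the areas of the 100 highest-scoring anchors would give a per-feature-map tally of the kind reported in the text.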

Table 5 shows that the vast majority of these anchors came from P5, a small percentage from P6, and none from P2, P3, or P4, so it can be assumed that feature maps P2, P3, and P4 did not contribute positively to the prediction results. To explore the effect of feature maps P5 and P6 on the inference results, SMR was trained with the simplified backbone network, and the trained model was tested with SCALE = 40 using the following three methods:

1. Using feature maps P5 and P6.
2. Using feature map P5 only.
3. Using feature map P6 only.

Their performance on the test set is shown in Table 6. Methods 1 and 2 segment well, with no degradation compared with SMR-40; method 2 even shows a slight improvement, whereas method 3 underperforms.

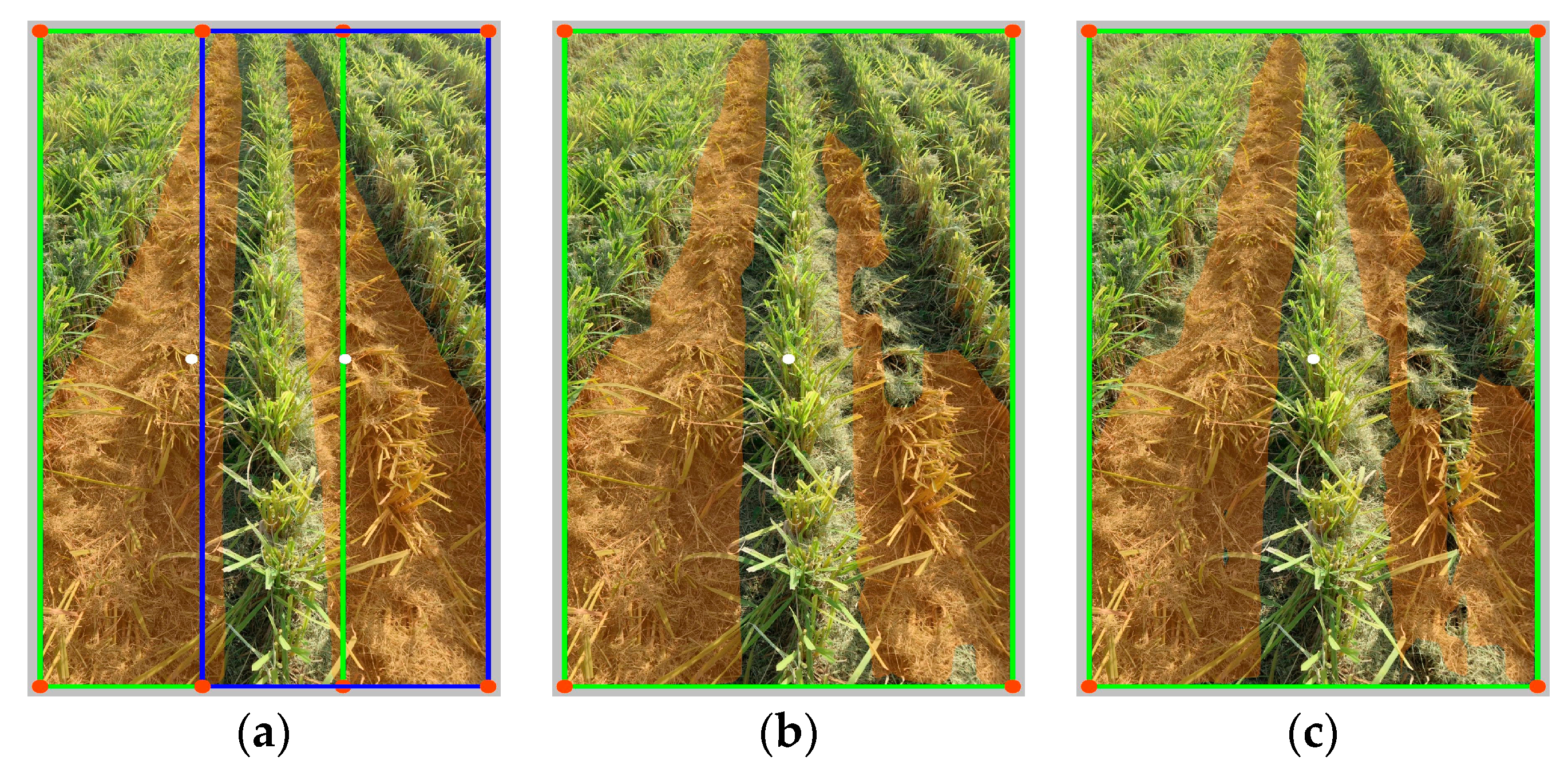

As shown in Figure 9, methods 1 and 3 both segment less well than method 2 because of feature map P6, which generated lower-quality region proposals. Although the region proposals were identical for methods 1 and 3, the lack of semantic information in feature map P6 further reduced the mask quality in method 3.

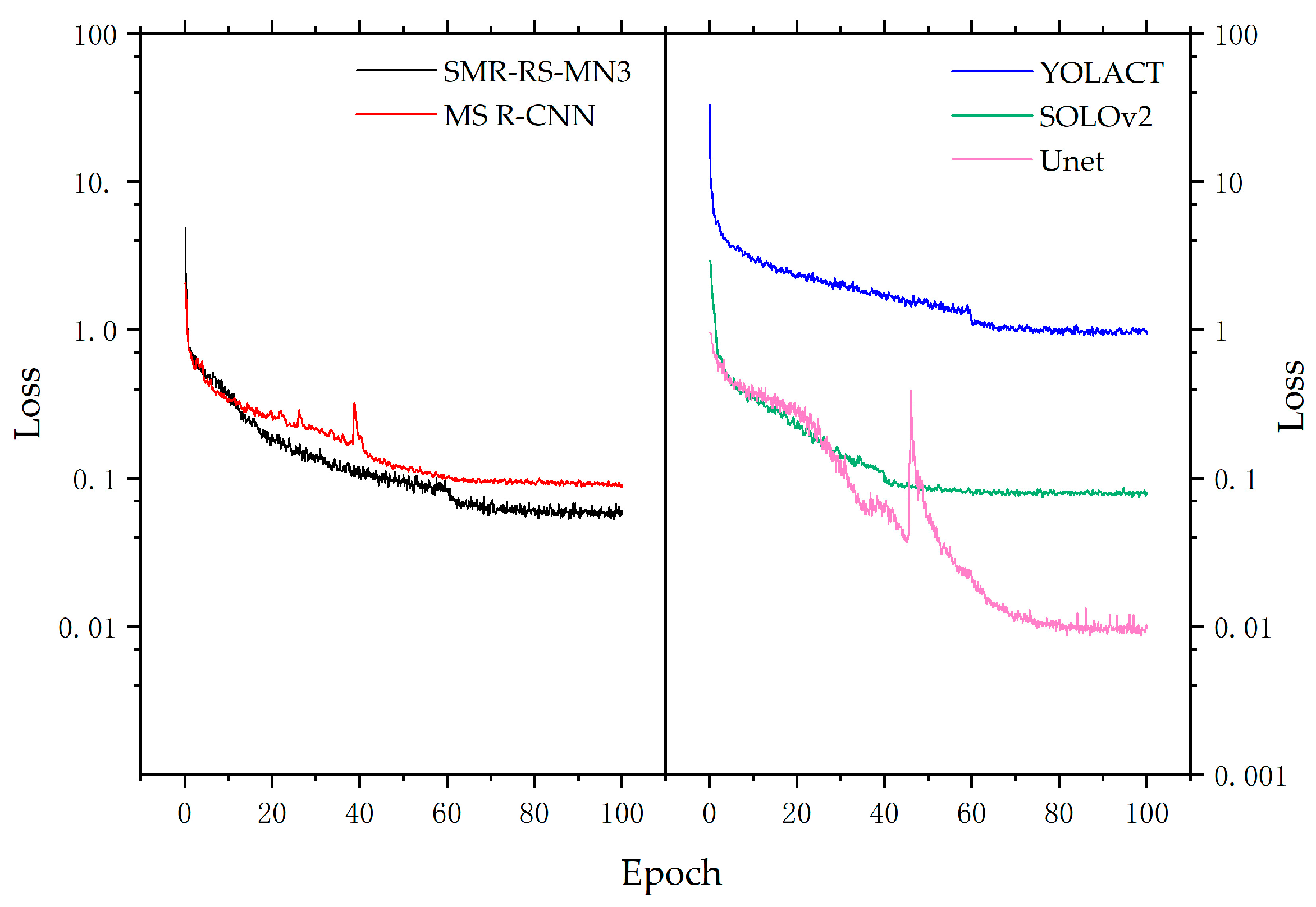

3.6. Comparison of the Performance of Different Models

The instance segmentation models SOLOv2 [52], Mask Scoring R-CNN [53], and YOLACT [54], SMR-RS with MobileNetv3-Large [55] as the backbone feature extractor (SMR-RS-MN3), and the semantic segmentation model Unet [32] were trained according to the parameter settings in Table 1. The training losses are shown in Figure 10, and the results of each model on the test set are shown in Table 8. SMR-RS slightly outperforms SOLOv2, YOLACT, and Mask Scoring R-CNN in segmentation performance and has a significant advantage in total parameters, GPU memory occupation, FLOPs, and running speed: it outperforms SOLOv2 by 40.3%, 38.5%, 58.7%, and 48.4%, YOLACT by 21.8%, 5.5%, 56.2%, and 39.4%, and Mask Scoring R-CNN by 37.4%, 41.1%, 56.9%, and 44.0%, respectively. Owing to its lightweight backbone, SMR-RS-MN3 further reduces running time and hardware resource consumption relative to SMR-RS, at the cost of lower segmentation quality. The performance of SMR-RS-MN3 demonstrates the scalability of SMR-RS. Although Unet has a significant inference speed advantage at lower resolutions, it has higher hardware requirements, consuming far more GPU memory and computational power (FLOPs), and its segmentation accuracy is inferior to that of the other models.

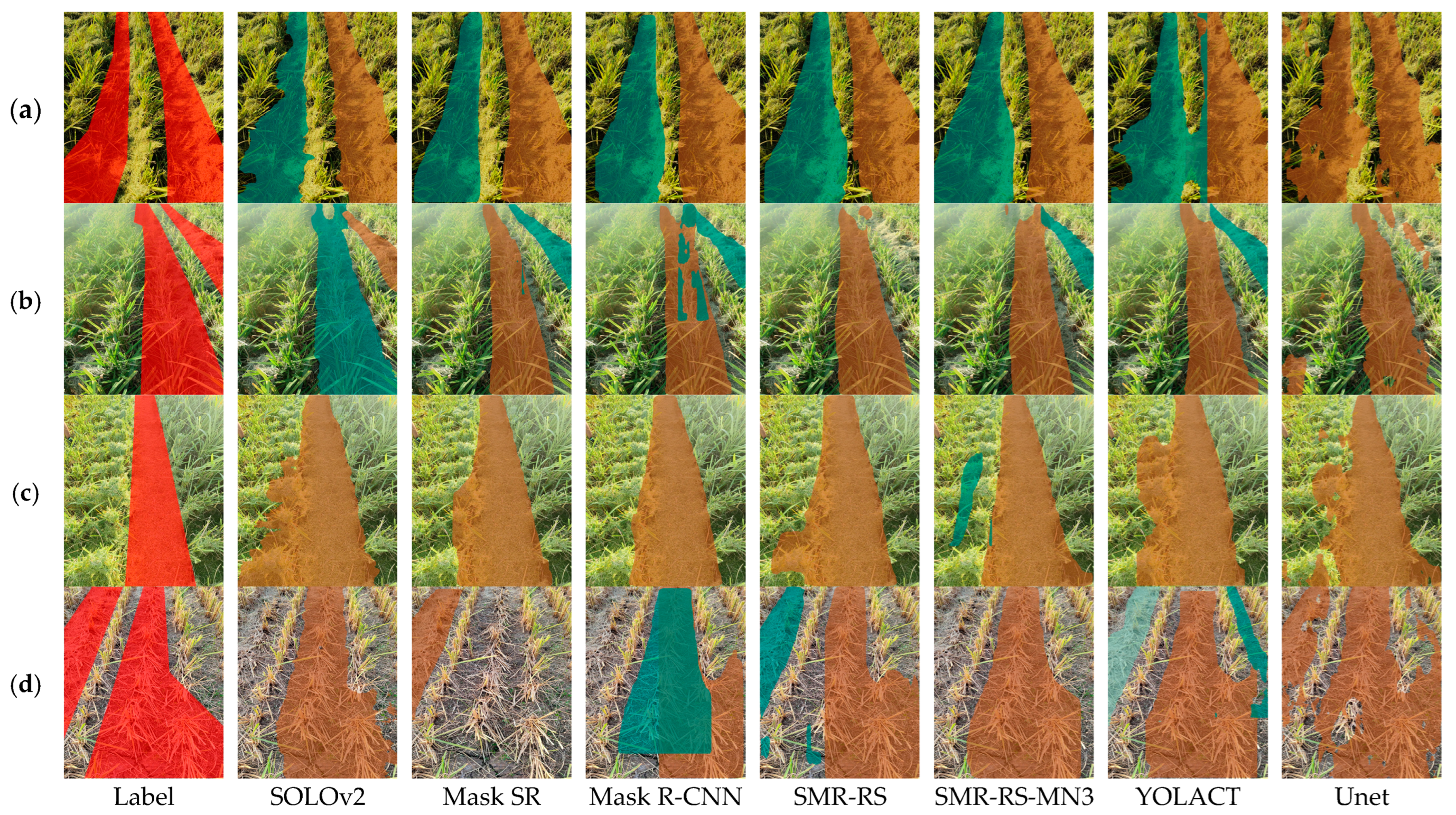

Figure 11 compares the mask predictions of the models proposed in this paper with those of the state-of-the-art models on four representative images, (a), (b), (c), and (d). As shown in (d), only SMR-RS and YOLACT identified both of the two complex rolled rice stubble rows, but YOLACT misidentified a non-rice stubble row; as shown in (b), SMR-RS was insensitive to a small area of rice stubble rows. On these four images, SMR-RS-MN3 performed well overall, but for the heavily straw-covered stubble rows in (c), it identified a small area of unrolled rice stubble rows as rolled rice stubble rows. SOLOv2 also performed well, but its edge segmentation of rolled rice stubble rows is poor, as shown in (a) and (c). Mask Scoring R-CNN shows serious under-recognition: the large area of rice stubble rows in the center of (d) is not recognized. Mask R-CNN shows repeated recognition, as shown in (b) and (d). Unet only achieves semantic segmentation, and its results contain multiple small regions of false segmentation.