Abstract

In the field of medical imaging, the accurate segmentation of breast tumors is a critical task for the diagnosis and treatment of breast cancer. To address the challenges posed by fuzzy boundaries, vague tumor shapes, variation in tumor size, and illumination variation, we propose a new approach that combines a U-Net model with a spatial attention mechanism. Our method utilizes a cascade feature extraction technique to enhance the subtle features of breast tumors, thereby improving segmentation accuracy. In addition, our model incorporates a spatial attention mechanism to enable the network to focus on important regions of the image while suppressing irrelevant areas. This combination of techniques leads to significant improvements in segmentation accuracy, particularly in challenging cases where tumors have fuzzy boundaries or vague shapes. We evaluate our suggested technique on the Mini-MIAS dataset and demonstrate state-of-the-art performance, surpassing existing methods in terms of accuracy, sensitivity, and specificity. Specifically, our method achieves an overall accuracy of 91%, a sensitivity of 91%, and a specificity of 93%, demonstrating its effectiveness in accurately identifying breast tumors.

1. Introduction

Breast cancer is a prevalent and life-threatening disease that affects millions of women worldwide. Early detection of breast cancer is crucial, as it can significantly increase the chances of successful treatment and survival rates. Breast tumor segmentation, the process of identifying and delineating breast tumors from medical images, has become a crucial step in computer-aided diagnosis systems for early detection and accurate diagnosis of breast cancer [1,2].

In recent years, several automatic breast tumor segmentation methods have been developed, ranging from traditional image processing techniques to deep learning-based approaches. These methods have the potential to assist radiologists and clinicians in improving the efficiency and accuracy of breast cancer diagnosis [3,4].

However, despite the significant progress made in the field of automatic breast tumor segmentation, several challenges still exist. The first challenge is the inherent variability in the appearance of breast tumors, including their shape, size, texture, and contrast. Additionally, the presence of noise, artifacts, and other anatomical structures such as blood vessels and glandular tissues can complicate the segmentation process. Finally, the lack of annotated medical images for training and evaluation purposes poses a significant challenge in developing accurate and robust segmentation models [5,6].

Machine learning and deep learning are two popular techniques used in the field of automatic breast tumor segmentation. These methods have the advantage of being able to learn from large amounts of data and automatically extract features that are relevant for tumor segmentation. Traditional machine learning models, such as support vector machines (SVMs) and random forests, have been used for breast tumor segmentation. These models rely on manually crafted features such as intensity, texture, and shape, which are then used to train the model to differentiate between tumor and non-tumor regions in medical images. However, these models can be limited by their ability to capture complex and subtle features that may be important for accurate tumor segmentation [7,8,9,10].

In contrast, deep learning models, such as convolutional neural networks (CNNs), have shown promising results for automatic breast tumor segmentation. These models can learn complex and hierarchical features directly from the input images, without the need for manual feature engineering. CNNs have been shown to outperform traditional machine learning models in several studies, achieving high segmentation accuracy even in the presence of noise and other anatomical structures [11,12]. Some of the popular deep learning models used for breast tumor segmentation include U-Net, Mask R-CNN, and FCN (Fully Convolutional Network). U-Net, in particular, has gained widespread popularity for its ability to perform accurate and efficient segmentation of medical images, including breast tumors [13,14].

However, despite the promising results of deep learning models, they can be limited by the availability and quality of annotated medical images for training and evaluation. Additionally, the interpretability of deep learning models remains a challenge, as it can be difficult to understand how the model arrived at its segmentation decision. Therefore, there is ongoing research to develop more interpretable deep learning models for breast tumor segmentation, which can provide clinicians with greater confidence in the accuracy of the segmentation results [11,14,15].

Hussain et al. [16] presented a novel approach for breast tumor segmentation, which involves utilizing deep-feature embedded level set groups to extract semantically enriched features. The method involves training a U-Net-based network to extract different features at various stages, each of which has a unique feature depiction. At the end of each stage, a novel level-set method is integrated to produce more accurate and precise feature maps. Additionally, a feature-discriminator is incorporated into the energy function of the level-set method to refine the low confidence pixels at the boundaries. Finally, the outputs of the level set method at different stages are combined to create final feature maps, which further enhance the segmentation process. The proposed approach’s performance was evaluated using two datasets, consisting of 349 breast ultrasound images from various hospitals.

Kavitha et al. [17] developed a novel breast cancer diagnosis model called Optimal Multi-Level Thresholding-based Segmentation with DL-enabled Capsule Network (OMLTS–DLCN). The model uses digital mammograms and includes a pre-processing step called Adaptive Fuzzy based median filtering (AFF) to eliminate noise from the mammogram images. The breast cancer segmentation is performed using the Optimal Kapur’s based Multilevel Thresholding with Shell Game Optimization (OKMT–SGO) algorithm. The model also involves a CapsNet-based feature extractor and a Back-Propagation Neural Network (BPNN) classification model to detect the presence of breast cancer.

Zebari et al. [18] introduced a model-based deep convolutional neural network feature for the segmentation of the Region of Interest from the pectoral muscle. In the first step, a pre-processing stage is employed, where morphological operations with Otsu’s thresholding are utilized to eliminate artifacts and labels in images. Next, Wavelet Transform is used to remove noise, and histogram equalization is applied as the final step of pre-processing to improve image contrast. Artificial Neural Network and Support Vector Machine classifiers are then trained based on deep convolutional neural network features to estimate the Region of Interest from the pectoral muscle.

To enhance the segmentation process of mammograms and decrease the number of suspicious regions, a hybrid thresholding method was proposed by Toz et al. [19] for use in CAD systems. This method facilitates fully-automatic segmentation of suspicious regions by utilizing three techniques in tandem: Otsu multilevel thresholding, Havrda & Charvat entropy, and the w-BSAFCM algorithm developed by the authors for image clustering. To prevent any loss of information, the mammogram is segmented into two sub-images, the pectoral muscle and the breast region, and segmentation is performed on each sub-image.

Breast tumor segmentation in mammogram images poses significant challenges due to several factors. The appearance of breast tumors can vary widely, depending on factors such as tumor size, shape, and location. Furthermore, mammogram images can be noisy, and tumors may be challenging to differentiate from the surrounding breast tissue. Overlapping structures, including glandular tissue and blood vessels, can also obscure the tumor boundary. Additionally, limited annotated datasets hinder the training of machine learning and deep learning models for accurate tumor segmentation. Finally, tumor segmentation accuracy can depend on the interpretation skills and experience of the radiologist, making the process subjective. Addressing these challenges is critical for developing effective breast tumor segmentation methods and improving breast cancer diagnosis and treatment outcomes [7,20].

Our study aims to address the challenges associated with breast tumor segmentation in mammogram images by combining an attention mechanism with a U-Net model. The proposed methodology is designed to improve the accuracy and efficiency of the segmentation process.

Section 2 describes the methodology in detail. We explain the design and implementation of the attention mechanism and the U-Net model and highlight their advantages over traditional segmentation techniques.

Section 3 presents the experiments conducted to evaluate the performance of our model. The results are compared with recently published pipelines to demonstrate the effectiveness of the proposed methodology. The evaluation metrics are specificity, recall (sensitivity), and accuracy.

Finally, Section 4 concludes the study. We conclude that combining an attention mechanism with a U-Net model is a promising approach to breast tumor segmentation in mammogram images. We also discuss the limitations of our study and suggest directions for future research, such as exploring different attention mechanisms and combining our approach with other deep learning models.

2. Methodology

2.1. Attention Module

Attention mechanisms have become an increasingly popular technique in deep learning for a wide range of tasks, including image recognition, speech recognition, machine translation, and more. Attention mechanisms allow the model to focus on the most important features or regions of the input, rather than processing the entire input at once. This can significantly improve the accuracy and efficiency of the model [21,22].

The basic idea behind attention mechanisms is to assign weights to different parts of the input, based on their relevance to the task at hand. For example, in image recognition, the model might assign higher weights to the most important parts of the image, such as the object of interest or the background context. By doing so, the model can selectively attend to the most informative parts of the image, while ignoring the irrelevant or noisy parts [22,23].

One of the main benefits of attention mechanisms is their ability to handle variable-length inputs. Unlike traditional models, which require fixed-length inputs, attention mechanisms can process inputs of any length, by dynamically adjusting the weights assigned to different parts of the input. This makes attention mechanisms particularly well-suited for tasks such as machine translation, where the length of the input and output sequences can vary greatly [24,25].
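The weighting idea described above can be sketched in a few lines. This is a generic illustration of attention pooling over an input of arbitrary length, not the module used in our model; the function names `softmax` and `attention_pool`, the dot-product scoring, and the random inputs are all assumptions made for the example.

```python
import numpy as np

def softmax(scores):
    # Subtract the maximum for numerical stability; the result sums to 1.
    shifted = scores - scores.max()
    exp = np.exp(shifted)
    return exp / exp.sum()

def attention_pool(items, query):
    """Weight each item by its relevance to `query` and return the weighted sum.

    items: array of shape (n, d); n may differ from call to call.
    query: array of shape (d,).
    """
    scores = items @ query / np.sqrt(items.shape[1])  # one relevance score per item
    weights = softmax(scores)                         # non-negative, sums to 1
    return weights @ items, weights

rng = np.random.default_rng(0)
query = rng.normal(size=4)
short_out, short_w = attention_pool(rng.normal(size=(3, 4)), query)
long_out, long_w = attention_pool(rng.normal(size=(7, 4)), query)
print(short_out.shape, long_out.shape)  # both (4,), regardless of input length
```

Because the weights are renormalized for each input, the same function handles three items or seven without any change to its parameters.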

Another benefit of attention mechanisms is their interpretability. By visualizing the attention weights assigned to different parts of the input, we can gain insights into how the model is making its predictions. This can be useful for debugging and improving the model, as well as for generating explanations for the model’s outputs [26,27].

In our work on breast tumor segmentation, we used a spatial attention mechanism to highlight the most important features of the input image. Spatial attention mechanisms operate on the spatial dimensions of the input, assigning higher weights to the most important spatial locations. This can be useful for tasks such as object localization or segmentation, where the model needs to identify the location of the object of interest within the input image [23,28].

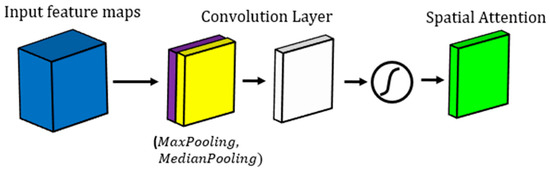

The spatial attention mechanism we used in our work has several advantages. First, it can improve the accuracy of the segmentation results, by highlighting the most important features of the input. Second, it can reduce the impact of noisy or irrelevant features, by selectively amplifying or suppressing the features based on their importance. Third, it can speed up the training and inference of the model, by reducing the amount of information the model needs to process. Finally, it can provide insights into how the model is making its predictions, by visualizing the attention weights assigned to different parts of the input. The suggested spatial attention mechanism in this study is demonstrated in Figure 1.

Figure 1. Demonstration of the spatial attention mechanism block used in our study.
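As a rough illustration of how a spatial attention block reweights a feature map, the following sketch pools across channels, squashes the result to a per-pixel weight in (0, 1), and multiplies it back onto the features. It is an assumption-laden simplification: the exact layers of the block in Figure 1 are not reproduced here, and the scalar mixing weights `w_avg`, `w_max`, and `bias` stand in for whatever learned convolution the real block uses.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def spatial_attention(features, w_avg=1.0, w_max=1.0, bias=0.0):
    """Apply a simplified spatial attention to a (C, H, W) feature map.

    Returns the reweighted features and the (H, W) attention map.
    w_avg, w_max and bias are hypothetical stand-ins for learned parameters.
    """
    avg_map = features.mean(axis=0)   # average response at each location
    max_map = features.max(axis=0)    # strongest response at each location
    attn = sigmoid(w_avg * avg_map + w_max * max_map + bias)
    # Broadcast the single-channel map over all channels.
    return features * attn[None, :, :], attn

rng = np.random.default_rng(0)
feats = rng.normal(size=(8, 16, 16))
out, attn = spatial_attention(feats)
print(out.shape, attn.shape)
```

The attention map `attn` has one value per spatial location, so it can be displayed as a heat map over the mammogram to inspect where the network is focusing.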

2.2. Suggested U-Net Model

U-Net is a convolutional neural network (CNN) architecture used for image segmentation. It was developed by Olaf Ronneberger et al. in 2015 for biomedical image segmentation. The architecture is called U-Net due to its U-shape structure. The network has an encoder–decoder structure with skip connections between corresponding layers in the encoder and decoder. The skip connections concatenate feature maps from the encoder to the decoder, which helps preserve spatial information and improve segmentation accuracy [29,30].
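The role of a skip connection can be illustrated with array shapes alone. The sketch below is a toy example, not our network: it uses 2 × 2 max pooling for the encoder step, nearest-neighbour upsampling for the decoder step, and concatenation along the channel axis for the skip connection. The helper names and the tiny 2 × 4 × 4 input are assumptions for illustration.

```python
import numpy as np

def max_pool_2x2(x):
    # x has shape (C, H, W) with even H and W; take the max of each 2x2 window.
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def upsample_2x(x):
    # Nearest-neighbour upsampling: repeat every row and column twice.
    return x.repeat(2, axis=1).repeat(2, axis=2)

encoder_features = np.arange(2 * 4 * 4, dtype=float).reshape(2, 4, 4)
bottleneck = max_pool_2x2(encoder_features)     # shape (2, 2, 2): coarse features
decoder_features = upsample_2x(bottleneck)      # shape (2, 4, 4): fine detail lost

# Skip connection: stack the original encoder features next to the upsampled ones
# so the following layers see both coarse context and fine spatial detail.
merged = np.concatenate([encoder_features, decoder_features], axis=0)
print(merged.shape)  # (4, 4, 4)
```

After pooling and upsampling, each 2 × 2 window in `decoder_features` holds a single repeated value; the concatenated encoder channels restore the per-pixel detail that pooling discarded.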

One of the main advantages of U-Net is its ability to work with a small amount of training data. It achieves this through data augmentation techniques such as flipping, rotating, and scaling of images. The network is also very effective in handling complex and irregular shapes in image segmentation, which makes it ideal for biomedical image segmentation. Additionally, U-Net is computationally efficient and can be trained on a single GPU, making it accessible to researchers and practitioners with limited resources [31,32].

Another advantage of U-Net is its ability to handle multi-class segmentation. The network can segment an image into multiple classes, making it useful for a variety of applications such as identifying different types of cells in a medical image or identifying different types of objects in a satellite image. The skip connections in U-Net make it easier to train the network to distinguish between different classes, improving segmentation accuracy [33,34].

However, one disadvantage of U-Net is its tendency to produce over-segmentation in certain cases. Over-segmentation occurs when the network generates too many small segments instead of a few larger ones, which can reduce the accuracy of the segmentation. Another disadvantage of U-Net is its sensitivity to the quality of the training data. If the training data are noisy or of low quality, they can negatively impact the segmentation accuracy [35,36].

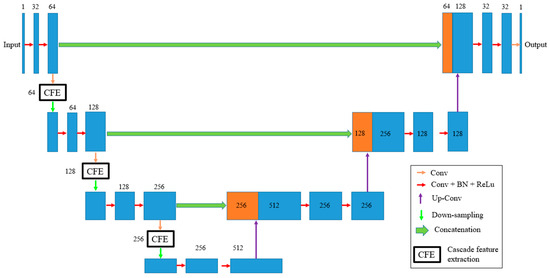

Our study introduces a modified version of the U-Net model specifically designed for breast tumor segmentation (see Figure 2). The model consists of three down-sampling and three up-sampling layers, which enable the network to capture image features at multiple scales. To further enhance feature extraction, we incorporated three Cascade Feature Extraction (CFE) blocks into the down-sampling path. The details of the CFE block are shown in Figure 3.

Figure 2. The proposed U-Net architecture and attention mechanism.

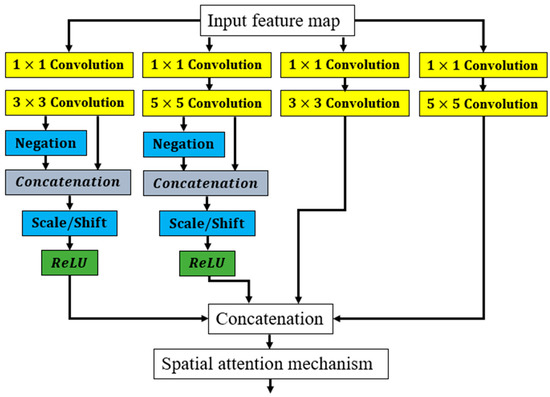

Figure 3. Details of the CFE block used in this study.

Each cascade feature extraction block consists of four feature extraction paths with varying receptive fields. The first two paths employ a combination of scale and shift (Scale/Shift) and negation layers, which are commonly used in image processing tasks to improve feature extraction. The output from each path is then concatenated before being passed to the next stage of the cascade feature extraction block.

In deep learning models, a scale/shift layer applies a learned affine transformation to its input: it multiplies the input by learned scale weights and adds learned biases. This operation helps to normalize the input data, improving the stability and efficiency of the model during training [37,38].

The scale/shift layer is commonly used in convolutional neural networks (CNNs) for image processing tasks, where it is often paired with other layers such as convolutional layers and activation functions. Its primary advantage is that it brings the input data to a similar scale, which can improve convergence during training. The scale of the input data strongly affects the gradients during backpropagation, so poorly scaled inputs can make it difficult for the model to converge to a stable solution [38,39].

Another advantage of the scale/shift layer is that it can help to reduce overfitting, which occurs when the model becomes too complex and begins to memorize the training data instead of generalizing to new data. By normalizing the input data, the layer can prevent the model from learning spurious patterns that are present in the training data but do not generalize [37,39].

A negation layer is a type of layer that performs a pointwise operation on the input data by negating it, i.e., multiplying the input by −1. Negation layers are commonly used in conjunction with other layers such as convolutional layers and activation functions [40,41].

The primary advantage of using a negation layer is that it can help to introduce more diversity and complexity into the model’s learned representations. By negating the input data, the model can learn to recognize features and patterns that are not present in the original input, but are still relevant to the task at hand. This can be particularly useful in scenarios where the input data are highly structured or predictable, as this allows the model to explore a wider range of possible solutions [40,41,42].

Another advantage of using a negation layer is that it can help to reduce overfitting. By introducing more diversity into the model’s learned representations, the negation layer can prevent the model from overfitting to the training data and improve its ability to generalize to new data [40,41,42].
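The two layer types described above can be sketched as follows. This is only an illustration of the operations themselves: Figure 3 is not reproduced in this text, so the way the paths are wired together here (a positive path and a negated path, each followed by a ReLU and then concatenated) is an assumption, as are the names `scale_shift`, `negation`, and `two_path_block`.

```python
import numpy as np

def scale_shift(x, gamma, beta):
    # Per-channel affine transform: gamma and beta have shape (C,), x has shape (C, H, W).
    return gamma[:, None, None] * x + beta[:, None, None]

def negation(x):
    # Pointwise multiplication by -1.
    return -x

def relu(x):
    return np.maximum(x, 0.0)

def two_path_block(x, gamma, beta):
    """Hypothetical pairing of a plain path and a negated path.

    A ReLU alone discards negative responses; applying it to the negated
    input as well keeps that information in a second set of channels.
    """
    pos = relu(scale_shift(x, gamma, beta))
    neg = relu(scale_shift(negation(x), gamma, beta))
    return np.concatenate([pos, neg], axis=0)

rng = np.random.default_rng(0)
x = rng.normal(size=(3, 8, 8))
gamma, beta = np.ones(3), np.zeros(3)   # identity scale/shift for the demonstration
out = two_path_block(x, gamma, beta)
print(out.shape)  # (6, 8, 8): channel count doubles
```

With an identity scale/shift, subtracting the negated-path channels from the positive-path channels recovers the input exactly, which shows that the pair of paths preserves information a single ReLU path would lose.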

To improve the accuracy of the segmentation results, we included an attention mechanism layer that highlights the most important features in the feature maps obtained from the cascade feature extraction blocks. This is achieved by using the attention modules to selectively weight the feature maps based on their relevance to the segmentation task.

The proposed modified U-Net model has shown promising results in breast tumor segmentation, outperforming traditional U-Net models in terms of accuracy and robustness. The incorporation of cascade feature extraction blocks and attention mechanisms has allowed the network to better capture the subtle features of breast tumors, resulting in more accurate segmentation results.

Combining an attention mechanism technique, cascade feature extraction, and a U-Net model for breast tumor segmentation can bring several benefits to the segmentation task:

- Improved accuracy: The use of an attention mechanism can help to highlight the most important features in the feature maps of the U-Net model, allowing the model to focus on the relevant areas and improve its accuracy. Additionally, the cascade feature extraction block can help to extract more meaningful features from the input data, further improving the accuracy of the segmentation;

- Reduced false positives and false negatives: By using the cascade feature extraction block and attention mechanism, the model can better distinguish between healthy tissue and tumor tissue, reducing the likelihood of false positives and false negatives in the segmentation results;

- Robustness to noise and variability: The use of a U-Net model provides a robust framework for breast tumor segmentation, as it is able to handle noisy and variable input data. By combining this with the attention mechanism and cascade feature extraction, the model can better adapt to different types of input data and improve its segmentation accuracy;

- Faster convergence and training: The attention mechanism and cascade feature extraction block can help to reduce the number of training iterations required for the model to converge, which can save time and computational resources during the training process;

- Generalizability: The use of a U-Net model, combined with the attention mechanism and cascade feature extraction, can provide a generalizable framework for breast tumor segmentation that can be applied to different types of data and imaging modalities. This can be particularly useful for clinical applications, where different types of imaging data may be available for different patients.

When dealing with limited data, it is important to use a cost function that can help prevent overfitting and improve generalization performance. One such cost function is the Tversky loss, which is an extension of the Dice loss, that includes an additional parameter to control the balance between false positives and false negatives. The Tversky loss has been shown to be effective in medical image segmentation tasks, where the class imbalance between foreground and background pixels can be significant [43,44,45]. Thus, in this study, we used the Tversky cost function, as described in Equation (1):

$$
\mathrm{loss}(y_{\mathrm{true}}, y_{\mathrm{pred}}) = 1 - \frac{2\sum y_{\mathrm{true}}\, y_{\mathrm{pred}} + \alpha}{\sum y_{\mathrm{true}}\, y_{\mathrm{pred}} + \beta \sum y_{\mathrm{true}}\,(1 - y_{\mathrm{pred}}) + (1 - \alpha) \sum (1 - y_{\mathrm{true}})\, y_{\mathrm{pred}} + \mathrm{smooth}} \tag{1}
$$

where ‘y_true’ is the ground truth segmentation mask, ‘y_pred’ is the predicted segmentation mask, ‘alpha’ (α) and ‘beta’ (β) are hyperparameters that control the balance between false positives and false negatives, and ‘smooth’ is a smoothing parameter that avoids division by zero. The Tversky loss generalizes the Dice loss, which corresponds to the special case ‘alpha = beta = 0.5’. By adjusting the values of ‘alpha’ and ‘beta’, one can control the trade-off between false positives and false negatives and tailor the loss function to a specific task [43,44,45].
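For readers who want to experiment with the loss, the following is a minimal NumPy sketch of the Tversky loss in its commonly used form, 1 − TP/(TP + alpha·FP + beta·FN). This is an assumption: it follows the standard formulation from the literature and may differ in detail from Equation (1) as printed. The default values and the toy masks are illustrative only.

```python
import numpy as np

def tversky_loss(y_true, y_pred, alpha=0.5, beta=0.5, smooth=1e-6):
    """Standard-form Tversky loss on flattened masks (values in [0, 1]).

    alpha weights false positives, beta weights false negatives;
    alpha = beta = 0.5 gives the Dice loss.
    """
    y_true = np.asarray(y_true, dtype=float).ravel()
    y_pred = np.asarray(y_pred, dtype=float).ravel()
    tp = np.sum(y_true * y_pred)          # soft true positives
    fp = np.sum((1 - y_true) * y_pred)    # soft false positives
    fn = np.sum(y_true * (1 - y_pred))    # soft false negatives
    index = (tp + smooth) / (tp + alpha * fp + beta * fn + smooth)
    return 1.0 - index

y_true = [1, 1, 1, 0, 0, 0]
missed_pixel = [1, 1, 0, 0, 0, 0]   # one false negative, no false positives

print(tversky_loss(y_true, y_true))                             # close to 0
print(tversky_loss(y_true, missed_pixel, alpha=0.3, beta=0.7))  # FN penalized more
print(tversky_loss(y_true, missed_pixel, alpha=0.7, beta=0.3))  # FN penalized less
```

Raising `beta` relative to `alpha` makes missed tumor pixels more costly than spurious ones, which is often the preferred trade-off when the foreground class is small.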

A detailed analysis of the impact of each hyperparameter on the performance of the proposed method is not reported here. Instead, we give a general overview of how hyperparameters can influence the performance of deep learning models for image segmentation tasks, together with the values used in our study where applicable [4,10,46,47,48,49,50]:

- Learning Rate: The learning rate determines the step size at which the model adjusts its parameters during training. A higher learning rate may result in faster convergence but could also lead to overshooting and instability. On the other hand, a lower learning rate might result in slower convergence or becoming stuck in local optima. It is essential to find a learning rate that balances convergence speed and stability for the specific model and dataset. We used a learning rate of 0.001;

- Batch Size: The batch size determines the number of samples processed in each training iteration. A larger batch size may lead to faster convergence but requires more memory and computational resources. Conversely, a smaller batch size can result in more noise during training but may help the model generalize better. Finding the right balance between batch size and convergence speed is crucial. We used a batch size of 10;

- Network Architecture: The architecture of the U-Net model itself is an important hyperparameter. The number of layers, layer sizes, skip connections, and other architectural choices can significantly impact the model’s capacity to learn and its ability to handle the complexity of breast tumor segmentation. Experimenting with different network architectures, such as varying the number of layers or adjusting the number of filters in each layer, can help optimize performance;

- Regularization Techniques: Regularization techniques such as dropout, batch normalization, or weight decay can help prevent overfitting and improve generalization. The choice of regularization parameters, such as dropout rates or weight decay coefficients, can influence the model’s ability to generalize to unseen data. Careful tuning and experimentation with these regularization techniques are essential to achieve optimal performance;

- Data Augmentation: Data augmentation techniques, such as rotation, scaling, or flipping, can help increase the robustness of the model by providing additional training examples. The choice and extent of data augmentation, including the range of rotations or scales applied, can impact the model’s ability to generalize and handle variations in tumor shapes, sizes, and orientations;

- Loss Function: The choice of loss function plays a critical role in training an accurate segmentation model. Common choices include Dice loss, cross-entropy loss, or a combination of both. The selection of appropriate loss function and its associated parameters can impact the model’s ability to handle fuzzy boundaries, vague shapes, and class imbalance in the dataset.

It is important to perform systematic experiments—varying one hyperparameter at a time while keeping others constant—to observe their individual impact on the model’s performance. This process, known as hyperparameter tuning, can help identify the optimal configuration for achieving the best segmentation results.
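The one-at-a-time procedure can be made concrete with a small helper that generates the configurations to train. The baseline values (learning rate 0.001, batch size 10) are those reported above; the candidate values and the function name `one_at_a_time` are hypothetical and chosen only for illustration.

```python
def one_at_a_time(baseline, candidates):
    """Return the baseline plus every configuration that changes exactly one value."""
    configs = [dict(baseline)]
    for name, values in candidates.items():
        for value in values:
            if value != baseline[name]:
                config = dict(baseline)   # copy so the baseline is never mutated
                config[name] = value
                configs.append(config)
    return configs

baseline = {"learning_rate": 1e-3, "batch_size": 10}
# Hypothetical search ranges, not values tested in the study.
candidates = {
    "learning_rate": [1e-4, 1e-3, 1e-2],
    "batch_size": [5, 10, 20],
}

configs = one_at_a_time(baseline, candidates)
for config in configs:
    print(config)
```

This yields five runs instead of the nine a full grid would need, at the cost of not observing interactions between the two hyperparameters.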

3. Experiments

3.1. Dataset

The mini-MIAS dataset is a publicly available database of mammograms that was developed for research purposes. It contains a total of 322 images, with each image corresponding to a single breast. The images were captured at a resolution of 1024 × 1024 pixels and are presented in grayscale. The dataset includes images of both normal and abnormal breasts, with abnormalities including masses, calcifications, and architectural distortions [7,51,52].

In our study on breast tumor segmentation, we utilized the mini-MIAS dataset to train and evaluate our model. We split the dataset into three subsets: 70% for training, 15% for validation, and 15% for testing. The training subset was used to optimize the model parameters and to train the model using data augmentation techniques. The validation subset was used to monitor the performance of the model during training and to select the best model based on the validation loss. The testing subset was used to evaluate the performance of the final model on unseen data.
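A 70/15/15 split of this kind can be sketched as below. The shuffling seed, the function name `split_dataset`, and the `mdb001`-style identifiers are assumptions for illustration; the snippet does not reproduce the exact partition used in our experiments.

```python
import random

def split_dataset(image_ids, train_frac=0.70, val_frac=0.15, seed=42):
    """Shuffle the identifiers and cut them into train, validation and test lists."""
    ids = list(image_ids)
    random.Random(seed).shuffle(ids)      # fixed seed makes the split repeatable
    n_train = int(train_frac * len(ids))
    n_val = int(val_frac * len(ids))
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]          # remainder goes to the test subset
    return train, val, test

# 322 identifiers, matching the number of images in the dataset.
image_ids = [f"mdb{i:03d}" for i in range(1, 323)]
train_ids, val_ids, test_ids = split_dataset(image_ids)
print(len(train_ids), len(val_ids), len(test_ids))
```

If images from the same patient should stay in the same subset, the shuffle would need to operate on patient identifiers rather than on individual images.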

We applied several data augmentation techniques to the training subset; these are described in Section 3.2. Data augmentation was not applied to the validation or testing subsets, so that the model’s performance was evaluated on unmodified, unseen data.

The mini-MIAS dataset has several advantages for research in breast cancer diagnosis and treatment. It contains a relatively large number of images that cover a variety of abnormalities, making it useful for training and evaluating deep learning models. The images are also annotated with ground truth segmentation masks, which makes it possible to evaluate the performance of segmentation algorithms. Additionally, the dataset is freely available to researchers, which helps to promote collaboration and reproducibility in the field [47,53].

3.2. Data Augmentation

Data augmentation is a technique used in deep learning to artificially increase the size of a dataset by creating new samples from existing ones. The aim of data augmentation is to improve the generalization of the model by increasing the diversity and variability of the training data. By creating new examples, the model is exposed to a wider range of inputs, which can help it learn more robust and generalizable features [54,55,56].

There are several types of data augmentation techniques that can be used in deep learning, including rotation, scaling, flipping, cropping, and noise addition. These techniques are designed to simulate the variability and noise that is typically present in real-world data, and to help the model learn to be invariant to such variations [55,57,58].

In our work on breast tumor segmentation, we utilized several data augmentation techniques to improve the performance of our model. Specifically, we used random rotations, horizontal and vertical flips, and elastic deformations to augment the training data. These techniques were applied randomly to each image during training, effectively creating new examples that were slightly different from the original ones.
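For segmentation, each geometric transformation has to be applied identically to the image and to its mask. The sketch below illustrates this with random flips and rotations. It is a simplification under stated assumptions: rotations are restricted to multiples of 90° to keep the example dependency-free, elastic deformation is omitted, and the function name `augment` and the toy 8 × 8 image are hypothetical.

```python
import numpy as np

def augment(image, mask, rng):
    """Apply the same random flips and 90-degree rotation to an image and its mask."""
    if rng.random() < 0.5:                      # horizontal flip
        image, mask = np.fliplr(image), np.fliplr(mask)
    if rng.random() < 0.5:                      # vertical flip
        image, mask = np.flipud(image), np.flipud(mask)
    k = int(rng.integers(0, 4))                 # rotate by k * 90 degrees
    image, mask = np.rot90(image, k), np.rot90(mask, k)
    return image.copy(), mask.copy()

rng = np.random.default_rng(0)
image = rng.random((8, 8))
mask = image > 0.5          # toy mask derived from the image, for checking alignment
aug_image, aug_mask = augment(image, mask, rng)
print(aug_image.shape, int(aug_mask.sum()), int(mask.sum()))
```

Because the toy mask is a threshold of the image, recomputing `aug_image > 0.5` and comparing it with `aug_mask` is a quick way to confirm that image and mask stayed aligned.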

The benefits of using data augmentation are numerous. Firstly, it can help prevent overfitting by increasing the size and diversity of the training data. Overfitting occurs when a model becomes too specialized to the training data and fails to generalize well to new examples. By augmenting the training data, we can make the model more robust to variations and less likely to overfit [55,59].

Secondly, data augmentation can improve the performance of the model on small datasets. In medical imaging applications, collecting large datasets can be challenging due to ethical and logistical constraints. Data augmentation allows us to create more training examples from a limited dataset, which can help improve the accuracy of the model [56,59].

Lastly, data augmentation can help the model learn to be more invariant to common variations and noise in the input data. By exposing the model to a wide range of variations, it learns to focus on the most important features that are invariant to such variations [56,59].

3.3. Evaluation Metrics

Specificity, recall (also called sensitivity), and accuracy are commonly used metrics for evaluating deep learning models for breast tumor segmentation and in medical image analysis more broadly. Specificity measures the proportion of true negatives among all negative cases, indicating the model’s ability to exclude healthy tissue and reduce the number of false positives. In contrast, recall measures the proportion of true positives among all positive cases, indicating the model’s ability to detect and segment tumors and reduce the number of false negatives. Accuracy measures the overall performance of the model as the proportion of correctly classified cases among all cases. However, accuracy can be misleading in imbalanced datasets, where one class has far more cases than another [20,60,61,62].

The combination of these metrics is typically used to evaluate the performance of a breast tumor segmentation model. High specificity and recall are desirable for accurate tumor segmentation, but the balance between these two metrics may depend on the specific application and clinical requirements. For example, in some cases, high recall may be more important to ensure that all tumors are detected, while, in other cases, high specificity may be more important to reduce unnecessary biopsies or surgeries [7,13,63]. However, these metrics are not the only factors to consider when evaluating a model’s performance. Other factors, such as computational resources, interpretability, and ease of use in clinical settings, should also be taken into account.

To evaluate the performance of our breast tumor segmentation model, we computed these metrics (Equations (2)–(4)) from the number of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN) in the test dataset. Analyzing them gave insight into the model’s effectiveness in detecting breast tumors and identified areas for improvement [7,64,65,66,67,68]:

Specificity = TN/(TN + FP) (2)

Accuracy = (TN + TP)/(TP + TN + FP + FN) (3)

Recall = TP/(TP + FN) (4)
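As a minimal sketch of how these three quantities can be computed pixel-wise, the following standard-library Python example counts TP, TN, FP, and FN over a predicted and a ground-truth binary mask. The function names and the 4 × 4 toy masks are illustrative assumptions and do not reproduce our actual evaluation code.

```python
def confusion_counts(pred_mask, true_mask):
    """Count TP, TN, FP, FN over two equally sized binary masks (1 = tumor)."""
    tp = tn = fp = fn = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p == 1 and t == 1:
                tp += 1
            elif p == 0 and t == 0:
                tn += 1
            elif p == 1 and t == 0:
                fp += 1
            else:
                fn += 1
    return tp, tn, fp, fn


def segmentation_metrics(pred_mask, true_mask):
    """Specificity, accuracy, and recall as defined in the equations above."""
    tp, tn, fp, fn = confusion_counts(pred_mask, true_mask)
    # Guard against empty denominators (e.g., an image with no tumor pixels).
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    recall = tp / (tp + fn) if (tp + fn) else float("nan")
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return {"specificity": specificity, "accuracy": accuracy, "recall": recall}


# Toy example (hypothetical masks): 4 tumor pixels in the ground truth.
true_mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
pred_mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 1],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
metrics = segmentation_metrics(pred_mask, true_mask)
print(metrics)  # TP=3, TN=11, FP=1, FN=1
```

In this toy case, recall is 3/4 = 0.75, specificity is 11/12, and accuracy is 14/16 = 0.875.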

It is important to note that these metrics are not perfect and have limitations. For example, specificity and recall are affected by the threshold value used for classifying pixels as tumor or non-tumor. Moreover, these metrics do not account for the size or location of the tumors, which could impact the clinical usefulness of the segmentation results. Therefore, it is important to use these metrics in combination with visual inspection of the segmentation results and feedback from medical professionals to ensure the clinical relevance of the model [7,47,69].
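The threshold dependence mentioned above can be made concrete with a short sketch. The probability map, ground-truth mask, and threshold values below are hypothetical and serve only to show how recall and specificity move in opposite directions as the threshold changes.

```python
def recall_specificity(prob_map, true_mask, threshold):
    """Binarize a probability map at `threshold`, then return (recall, specificity)."""
    tp = tn = fp = fn = 0
    for prob_row, true_row in zip(prob_map, true_mask):
        for prob, t in zip(prob_row, true_row):
            p = 1 if prob >= threshold else 0
            if p and t:
                tp += 1
            elif not p and not t:
                tn += 1
            elif p and not t:
                fp += 1
            else:
                fn += 1
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical per-pixel tumor probabilities and ground truth (1 = tumor).
prob_map = [
    [0.1, 0.2, 0.4],
    [0.2, 0.9, 0.6],
    [0.1, 0.45, 0.8],
]
true_mask = [
    [0, 0, 0],
    [0, 1, 1],
    [0, 1, 1],
]

sweep = {thr: recall_specificity(prob_map, true_mask, thr) for thr in (0.3, 0.5, 0.7)}
for thr, (rec, spec) in sweep.items():
    print(f"threshold={thr}: recall={rec:.2f}, specificity={spec:.2f}")
```

On this toy input, raising the threshold from 0.3 to 0.7 lowers recall from 1.00 to 0.50 while specificity rises from 0.80 to 1.00.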

The system was developed in Python, which is widely used for machine learning and deep learning applications. The implementation was run on a 64-bit operating system installed on a 3.2 GHz Intel Core i7 computer. This hardware allowed us to process a large number of medical images efficiently.

Python is a popular programming language for machine learning and deep learning due to its simplicity, ease of use, and large number of libraries and frameworks available for various tasks. The Python ecosystem also provides a variety of tools for data processing and visualization, making it a convenient choice for medical image analysis.

The use of a 64-bit operating system provided several advantages over a 32-bit operating system, including the ability to address more memory and better performance for computationally intensive tasks. This allowed us to work with larger medical images and process them more efficiently, leading to better results.

The 3.2 GHz Intel Core i7 computer used in the implementation provided fast processing and efficient utilization of system resources. This allowed us to perform complex computations quickly, which is critical for medical image analysis applications.

3.4. Experimental Results

Table 1 presents a comparison of the sensitivity, specificity, and accuracy results obtained from five distinct methods applied to the Mini-MIAS dataset for breast tumor segmentation. The five techniques are Deep Supervision [16], Capsule Neural Network [17], Deep Features [18], HCOW [19], and the proposed model. We trained each of the five methods on the Mini-MIAS dataset and evaluated their performance in terms of sensitivity, specificity, and accuracy. For each method, we calculated the performance metrics for normal tissue, malignant tumor, and benign tumor.

Table 1.

Comparison of accuracy, specificity, and sensitivity outcomes using the Mini-MIAS Dataset for Breast Tumor Segmentation Models. The highest values are highlighted in bold.

Among the other models, Capsule Neural Network and HCOW achieved similar performance with sensitivity, accuracy, and specificity values ranging from 0.86 to 0.91 for malignant tumors and benign tumors. Deep Supervision and Deep Features also showed good performance, although they were slightly lower compared to Capsule Neural Network and HCOW. Deep Supervision achieved sensitivity, accuracy, and specificity values ranging from 0.85 to 0.90 for malignant and benign tumors, while Deep Features achieved sensitivity, accuracy, and specificity values ranging from 0.83 to 0.89 for malignant and benign tumors.

We also analyzed the performance of each model for detecting false positives. The proposed model and Deep Supervision achieved the highest specificity for benign tumors, indicating that these models are less likely to produce false positives. On the other hand, HCOW and Deep Features showed slightly lower specificity values for benign tumors, indicating that these models may produce more false positives.

Our results show that deep learning models can be highly effective in breast cancer diagnosis and tumor segmentation. The proposed model achieved the highest sensitivity and specificity values for all three tissue types, indicating that this model has the potential to accurately detect breast cancer and differentiate between normal tissue, malignant tumors, and benign tumors. The high performance of the proposed model may be attributed to the combination of cascade feature extraction and a spatial attention mechanism with the U-Net architecture.

Capsule Neural Network and Deep Supervision also showed good performance for breast cancer diagnosis and tumor segmentation, although they were slightly lower compared to the proposed model. These models may be suitable for applications where high accuracy is not critical, or for situations where limited computational resources are available.

HCOW and Deep Features also showed good performance, although HCOW was slightly lower than Capsule Neural Network and Deep Features had the lowest reported range of the five methods. These models may be suitable for applications where moderate accuracy is acceptable, or for situations where the computational resources are limited. Comparing Capsule Neural Network and HCOW, the Capsule Neural Network model performs slightly better in terms of sensitivity, accuracy, and specificity, although the differences are small. The Capsule Neural Network model has a higher sensitivity and specificity for normal tissue, malignant tumor, and benign tumor. Its accuracy is also slightly better for malignant tumors and benign tumors.

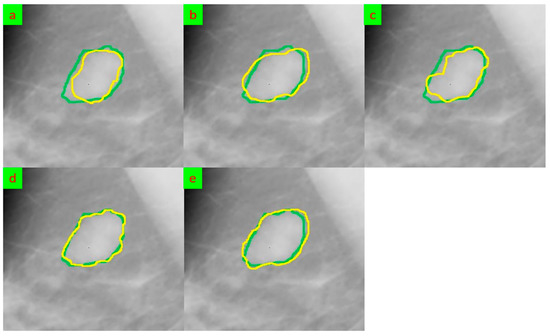

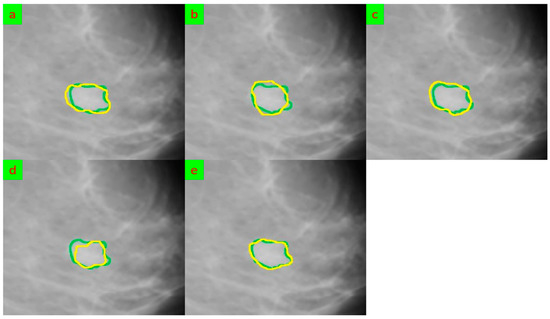

The segmentation outcomes of the five models for two samples are shown in Figure 4 and Figure 5. The quality of the segmented regions varies across the five methods. The results show that Deep Supervision, Capsule Neural Network, and the proposed model achieved higher accuracy and sensitivity than the Deep Features and HCOW methods. However, the fuzzy tumor boundaries in some images affected the segmentation performance of all five methods. These fuzzy boundaries may be caused by overlapping tissue, which can lead to misclassification of normal tissue and malignant tumor.

Figure 4.

Segmentation results for sample 1 obtained with the compared methods and evaluated against the ground truth. The ground truth segmentation is shown in green and the segmented output in yellow. (a) Deep Supervision [16], (b) Capsule Neural Network [17], (c) Deep Features [18], (d) HCOW [19], and (e) Our framework.

Figure 5.

Segmentation results for sample 2 obtained with the compared methods and evaluated against the ground truth. The ground truth segmentation is shown in green and the segmented output in yellow. (a) Deep Supervision [16], (b) Capsule Neural Network [17], (c) Deep Features [18], (d) HCOW [19], and (e) Our framework.

Moreover, the vague tumor shapes in some images can affect the segmentation performance of the methods. The tumor shapes were not clearly defined in some images, making it difficult for the models to accurately identify the boundaries of the tumor. This resulted in low sensitivity and accuracy for some of the methods.

Additionally, the variation in tumor size can also affect the segmentation performance of the methods. Some images had small tumors while others had large tumors, and this led to variations in sensitivity and accuracy. The methods performed well on images with smaller tumors but struggled with larger tumors, resulting in low sensitivity and accuracy.

Furthermore, the illumination variation in some images also affected the segmentation performance of the methods. Some images had bright illumination, while others had low illumination, and this led to variations in sensitivity and accuracy. The methods performed well on images with bright illumination but struggled with images with low illumination, resulting in low sensitivity and accuracy.

A limitation of Deep Supervision [16] could be the requirement of a large amount of labeled data for training. This could be challenging in the medical imaging field, where collecting and annotating data can be time-consuming and expensive. Another limitation could be the computational resources required to train and evaluate the model, as that method involves multiple stages and deep learning models, which could be computationally expensive.

Another drawback of the method could be the sensitivity to initial conditions, which is a common issue in level set-based methods. The authors proposed a deep supervision approach to address this issue, but the performance could still be affected by the initial contour initialization. Additionally, the method may not be robust to variations in imaging quality or artifacts, which can be common in medical imaging.

One disadvantage of Capsule Neural Network [17] is that the paper does not provide a clear explanation of the capsule network architecture used in its model. Although the authors provide some details on the architecture, they do not explain the significance or advantages of using capsule networks over other deep learning architectures. This lack of clarity makes it difficult to understand the novelty of that model and the contribution of the capsule network component to its overall performance.

Another potential disadvantage of Capsule Neural Network [17] is that the authors do not provide a thorough analysis of the interpretability of the model. Although the proposed model achieves high accuracy in diagnosing breast cancer, it is unclear how the model arrives at its decision. This lack of interpretability can be problematic, as it may prevent clinicians from trusting and using the model in clinical practice.

There are a few potential disadvantages of Deep Features [18]. Firstly, the paper uses a relatively small dataset (135 mammogram images), which could limit the generalizability of the results. A larger dataset could potentially lead to better performance and more reliable conclusions. Secondly, the paper uses a relatively simple deep learning model (AlexNet) for feature extraction, which may not be as effective as more advanced models. More recent models such as ResNet or EfficientNet may be able to extract more informative and discriminative features. Thirdly, the paper does not explicitly compare its results with other state-of-the-art methods for suspicious region segmentation in breast cancer mammogram images. This limits the ability to assess the performance of that method relative to existing methods. Finally, the paper does not provide a detailed analysis of the impact of various hyperparameters on the performance of the method. A more comprehensive hyperparameter tuning process could potentially lead to better performance.

There are a few potential disadvantages of HCOW [19], including the following:

- Limited evaluation: The paper only evaluates the HCOW method on a single dataset, the MIAS database, and does not compare its performance with other state-of-the-art methods. This limits the generalizability of the proposed method to other datasets and makes it difficult to compare its performance with other methods;

- Complexity: The HCOW method consists of multiple steps, including wavelet decomposition, histogram-based clustering, and adaptive multi-thresholding. This complexity may make it difficult for the method to be implemented in real-world clinical settings, where speed and ease of use are crucial;

- Lack of interpretability: The HCOW method does not provide any visual or quantitative explanation of how it makes its segmentation decisions. This lack of interpretability may limit its clinical utility, as clinicians may be hesitant to rely on a method that cannot explain its reasoning;

- Inadequate validation: The paper only reports sensitivity, specificity, and accuracy as performance metrics, which are inadequate for evaluating the performance of a segmentation method. Metrics such as Dice similarity coefficient and intersection over union are commonly used in the literature and provide a more comprehensive evaluation of the method’s performance;

- Over-segmentation: The HCOW method has a tendency to over-segment regions, resulting in the inclusion of healthy tissue in the segmented regions. This may reduce the specificity of the method and increase the number of false positives.
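For reference, the Dice similarity coefficient and intersection over union mentioned in the list above can be computed from the same pixel counts as sensitivity and specificity. The sketch below is illustrative only: the function name and toy masks are assumptions, and these overlap metrics were not part of the results reported in Table 1.

```python
def dice_and_iou(pred_mask, true_mask):
    """Return (Dice, IoU) for two equally sized binary masks (1 = tumor)."""
    tp = fp = fn = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif t and not p:
                fn += 1
    union = tp + fp + fn
    if union == 0:
        # Both masks are empty: treat as perfect agreement (a convention, not a rule).
        return 1.0, 1.0
    dice = 2 * tp / (2 * tp + fp + fn)
    iou = tp / union
    return dice, iou


# Hypothetical masks: 3 overlapping pixels, 1 false positive, 1 false negative.
true_mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
pred_mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 1],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
dice, iou = dice_and_iou(pred_mask, true_mask)
print(f"Dice={dice:.2f}, IoU={iou:.2f}")  # prints: Dice=0.75, IoU=0.60
```

Unlike accuracy, neither metric counts true negatives, so both are insensitive to the large number of background pixels in a mammogram.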

To determine whether the model exhibits bias towards different breast cancer types, a comprehensive evaluation would be necessary. This evaluation should involve analyzing the methodology, experimental setup, and results presented in Section 3 of the study. By examining this section, we can assess whether the model’s performance is consistent across different breast cancer types or if there are any variations in its accuracy or effectiveness. To ensure that a model does not exhibit bias towards specific breast cancer types, it is important to carefully consider several factors during model development and evaluation:

- Data representation: Ensure that the training dataset includes an adequate representation of different breast cancer types, including in situ and invasive breast cancers. A balanced dataset that covers the various types of breast cancer helps mitigate potential bias;

- Evaluation metrics: Use evaluation metrics that consider the performance across different cancer types. For example, calculating precision, recall, or accuracy separately for each cancer type can provide insights into any disparities in performance;

- Fairness assessment: Conduct fairness assessments to identify and address any potential biases that may arise in the model. This includes examining whether the model’s predictions exhibit disparities in accuracy, sensitivity, specificity, or other performance metrics across different breast cancer types.

By thoroughly considering these factors and conducting a comprehensive evaluation, we could determine that the proposed model did not exhibit bias towards different breast cancer types. It is important to strive for fairness and accuracy in medical models to ensure equitable and reliable outcomes in diagnosis and treatment.
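A per-type evaluation of the kind described above can be sketched as a one-vs-rest computation of sensitivity, specificity, and accuracy for each class. The class names follow the tissue types used in Table 1, whereas the label lists are hypothetical and the function name is an assumption; the sketch does not reproduce our experimental data.

```python
CLASSES = ("normal", "benign", "malignant")


def per_class_metrics(true_labels, pred_labels, classes=CLASSES):
    """One-vs-rest sensitivity, specificity, and accuracy for each class."""
    pairs = list(zip(true_labels, pred_labels))
    results = {}
    for cls in classes:
        tp = sum(1 for t, p in pairs if t == cls and p == cls)
        fn = sum(1 for t, p in pairs if t == cls and p != cls)
        fp = sum(1 for t, p in pairs if t != cls and p == cls)
        tn = sum(1 for t, p in pairs if t != cls and p != cls)
        results[cls] = {
            "sensitivity": tp / (tp + fn) if (tp + fn) else float("nan"),
            "specificity": tn / (tn + fp) if (tn + fp) else float("nan"),
            "accuracy": (tp + tn) / len(pairs),
        }
    return results


# Hypothetical case-level labels for six images.
true_labels = ["normal", "normal", "benign", "benign", "malignant", "malignant"]
pred_labels = ["normal", "benign", "benign", "benign", "malignant", "benign"]

results = per_class_metrics(true_labels, pred_labels)
for cls, scores in results.items():
    print(cls, {name: round(value, 2) for name, value in scores.items()})

# A large gap between classes would point to uneven performance across types.
sensitivities = [results[cls]["sensitivity"] for cls in CLASSES]
sensitivity_gap = max(sensitivities) - min(sensitivities)
```

In this toy example, sensitivity is 1.0 for benign cases but 0.5 for normal and malignant cases, a disparity that would warrant further investigation.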

The proposed model has potential benefits for clinical applications in breast tumor segmentation for several reasons:

- Improved Segmentation Accuracy: The combination of a U-Net model with a spatial attention mechanism in the proposed approach is designed to enhance the accuracy of breast tumor segmentation. By incorporating cascade feature extraction and spatial attention, the model can capture subtle tumor features and focus on important regions while suppressing irrelevant areas. This improved accuracy can aid in precise tumor localization and boundary delineation, which are crucial for accurate diagnosis and treatment planning;

- Addressing Challenges in Breast Tumor Segmentation: Breast tumor segmentation in medical imaging poses challenges such as fuzzy boundaries, vague tumor shapes, variation in tumor size, and illumination variations. The proposed method specifically addresses these challenges by leveraging the U-Net model and attention mechanism. By enhancing subtle features and focusing on important regions, the model aims to overcome these difficulties and provide more accurate and reliable tumor segmentation results;

- State-of-the-Art Performance: The proposed methodology demonstrates state-of-the-art performance on the Mini-MIAS dataset, surpassing existing methods in terms of accuracy, sensitivity, and specificity. Achieving high accuracy and sensitivity is crucial in clinical applications to ensure that breast tumors are correctly identified and localized. The improved performance of the proposed model suggests its potential to provide reliable and accurate results for clinical decision-making;

- Efficiency in Segmentation Process: In addition to accuracy, the proposed methodology aims to improve the efficiency of the segmentation process. By utilizing the combination of the U-Net model and attention mechanism, the model can streamline and optimize the segmentation process, potentially reducing the time required for manual analysis. This increased efficiency can have practical implications in clinical settings, where timely diagnosis and treatment planning are critical.
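To illustrate the general idea of focusing on important regions while suppressing irrelevant areas, the following sketch implements a simplified form of spatial attention: the feature map is pooled across channels (average and maximum), the pooled values are combined into a per-pixel score, and a sigmoid turns the score into a weight between 0 and 1 that rescales every channel. This is not the architecture used in the proposed model. The fixed scalars `w_avg`, `w_max`, and `bias` are assumptions that stand in for a learned convolution, and the function name is hypothetical.

```python
import math


def spatial_attention(features, w_avg=1.0, w_max=1.0, bias=0.0):
    """Simplified spatial attention over a feature map.

    features: list of C channels, each an H x W nested list of floats.
    Returns (attention, attended): the H x W attention map and the
    reweighted C x H x W feature map.
    """
    n_channels = len(features)
    height, width = len(features[0]), len(features[0][0])
    attention = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            values = [features[c][i][j] for c in range(n_channels)]
            avg_pool = sum(values) / n_channels  # channel-wise average pooling
            max_pool = max(values)               # channel-wise max pooling
            score = w_avg * avg_pool + w_max * max_pool + bias
            attention[i][j] = 1.0 / (1.0 + math.exp(-score))  # sigmoid
    attended = [
        [[features[c][i][j] * attention[i][j] for j in range(width)]
         for i in range(height)]
        for c in range(n_channels)
    ]
    return attention, attended


# Toy feature map with two channels; only the bottom-right pixel is strongly activated.
features = [
    [[0.0, 0.0], [0.0, 4.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
attention, attended = spatial_attention(features, bias=-3.0)
print([[round(a, 3) for a in row] for row in attention])
```

With these assumed weights, the activated pixel receives an attention weight close to 1, whereas the remaining pixels receive weights close to 0 and are therefore suppressed.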

4. Conclusions

In this study, we proposed a novel breast tumor segmentation model that combines cascade feature extraction, a spatial attention mechanism, and a U-Net model. The proposed model addresses some of the major challenges in breast tumor segmentation, such as fuzzy boundaries, vague tumor shapes, variation in tumor size, and illumination variation.

The cascade feature extraction approach allowed the model to extract hierarchical features from the mammogram images, which helped to improve the accuracy and robustness of the segmentation results. The spatial attention mechanism was incorporated into the model to enable the network to selectively focus on the relevant regions of the mammogram images and learn the subtle features required for accurate tumor segmentation. Finally, the U-Net model was employed to effectively capture the context information of the mammogram images, which further improved the accuracy of the segmentation.

Despite achieving promising results, there are still limitations to our proposed model. One of the main limitations is the relatively small size of the training dataset used in the experiments, which could have affected the generalization ability of the model. Additionally, the model’s performance may be affected by the variation in mammogram image quality and other factors such as patient age and breast density.

In terms of future work, increasing the size and diversity of the training dataset could be an effective way to improve the generalization ability of the model. Additionally, exploring the potential of incorporating multi-modal imaging data, such as MRI and PET, into the segmentation model could help to improve the accuracy and robustness of the segmentation results.

Another potential extension of our proposed model is to investigate the possibility of incorporating transfer learning techniques, such as fine-tuning pre-trained models, to improve the performance of the segmentation model. Furthermore, developing an online segmentation system that can segment mammogram images in real-time could be useful in clinical settings, where rapid and accurate diagnosis is essential.

Finally, a potential future direction for research would be to explore the use of deep learning models for predicting the malignancy level of breast tumors based on the segmented images. Accurately classifying tumors based on their malignancy level could help physicians develop more personalized and effective treatment plans for breast cancer patients.

Funding

This research received no external funding.

Institutional Review Board Statement

This article does not contain any studies with human participants or animals performed by any of the authors.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. This data can be found here: http://peipa.essex.ac.uk/info/mias.html (accessed on 8 October 2022).

Conflicts of Interest

The author declares no conflict of interest.

References

- Houssein, E.H.; Emam, M.M.; Ali, A.A.; Suganthan, P.N. Deep and machine learning techniques for medical imaging-based breast cancer: A comprehensive review. Expert Syst. Appl. 2020, 167, 114161. [Google Scholar] [CrossRef]

- Valdora, F.; Houssami, N.; Rossi, F.; Calabrese, M.; Tagliafico, A.S. Rapid review: Radiomics and breast cancer. Breast Cancer Res. Treat. 2018, 169, 217–229. [Google Scholar] [CrossRef] [PubMed]

- Ghoushchi, S.J.; Ranjbarzadeh, R.; Najafabadi, S.A.; Osgooei, E.; Tirkolaee, E.B. An extended approach to the diagnosis of tumour location in breast cancer using deep learning. J. Ambient. Intell. Humaniz. Comput. 2021, 14, 8487–8497. [Google Scholar] [CrossRef]

- Ranjbarzadeh, R.; Sarshar, N.T.; Ghoushchi, S.J.; Esfahani, M.S.; Parhizkar, M.; Pourasad, Y.; Anari, S.; Bendechache, M. MRFE-CNN: Multi-route feature extraction model for breast tumor segmentation in Mammograms using a convolutional neural network. Ann. Oper. Res. 2022, 1–22. [Google Scholar] [CrossRef]

- Zhang, J.; Saha, A.; Zhu, Z.; Mazurowski, M.A. Hierarchical Convolutional Neural Networks for Segmentation of Breast Tumors in MRI with Application to Radiogenomics. IEEE Trans. Med. Imaging 2019, 38, 435–447. [Google Scholar] [CrossRef]

- Raherinirina, A.; Randriamandroso, A.; Hajalalaina, A.R.; Rakotoarivelo, R.A.; Rafamatantantsoa, F. A Gaussian Multivariate Hidden Markov Model for Breast Tumor Diagnosis. Appl. Math. 2021, 12, 679–693. [Google Scholar] [CrossRef]

- Ranjbarzadeh, R.; Dorosti, S.; Ghoushchi, S.J.; Caputo, A.; Tirkolaee, E.B.; Ali, S.S.; Arshadi, Z.; Bendechache, M. Breast tumor localization and segmentation using machine learning techniques: Overview of datasets, findings, and methods. Comput. Biol. Med. 2023, 152, 106443. [Google Scholar] [CrossRef]

- Wang, S.; Sun, K.; Wang, L.; Qu, L.; Yan, F.; Wang, Q.; Shen, D. Breast Tumor Segmentation in DCE-MRI With Tumor Sensitive Synthesis. IEEE Trans. Neural Netw. Learn. Syst. 2021, 1–12. [Google Scholar] [CrossRef]

- Le, P.T.; Pham, B.-T.; Chang, C.-C.; Hsu, Y.-C.; Tai, T.-C.; Li, Y.-H.; Wang, J.-C. Anti-Aliasing Attention U-net Model for Skin Lesion Segmentation. Diagnostics 2023, 13, 1460. [Google Scholar] [CrossRef]

- Le, P.T.; Pham, T.; Hsu, Y.-C.; Wang, J.-C. Convolutional Blur Attention Network for Cell Nuclei Segmentation. Sensors 2022, 22, 1586. [Google Scholar] [CrossRef]

- Ranjbarzadeh, R.; Zarbakhsh, P.; Caputo, A.; Tirkolaee, E.B.; Bendechache, M. Brain Tumor Segmentation based on an Optimized Convolutional Neural Network and an Improved Chimp Optimization Algorithm. 2022; preprint. [Google Scholar] [CrossRef]

- Vakanski, A.; Xian, M.; Freer, P.E. Attention-Enriched Deep Learning Model for Breast Tumor Segmentation in Ultrasound Images. Ultrasound Med. Biol. 2020, 46, 2819–2833. [Google Scholar] [CrossRef]

- Yan, Y.; Liu, Y.; Wu, Y.; Zhang, H.; Zhang, Y.; Meng, L. Accurate segmentation of breast tumors using AE U-net with HDC model in ultrasound images. Biomed. Signal Process. Control 2021, 72, 103299. [Google Scholar] [CrossRef]

- Rezaei, Z. A review on image-based approaches for breast cancer detection, segmentation, and classification. Expert Syst. Appl. 2021, 182, 115204. [Google Scholar] [CrossRef]

- Pezeshki, H. Breast tumor segmentation in digital mammograms using spiculated regions. Biomed. Signal Process. Control 2022, 76, 103652. [Google Scholar] [CrossRef]

- Hussain, S.; Xi, X.; Ullah, I.; Inam, S.A.; Naz, F.; Shaheed, K.; Ali, S.A.; Tian, C. A Discriminative Level Set Method with Deep Supervision for Breast Tumor Segmentation. Comput. Biol. Med. 2022, 149, 105995. [Google Scholar] [CrossRef] [PubMed]

- Kavitha, T.; Mathai, P.P.; Karthikeyan, C.; Ashok, M.; Kohar, R.; Avanija, J.; Neelakandan, S. Deep Learning Based Capsule Neural Network Model for Breast Cancer Diagnosis Using Mammogram Images. Interdiscip. Sci. Comput. Life Sci. 2022, 14, 113–129. [Google Scholar] [CrossRef] [PubMed]

- Zebari, D.A.; Ibrahim, D.A.; Al-Zebari, A. Suspicious Region Segmentation Using Deep Features in Breast Cancer Mammogram Images. In Proceedings of the 2nd 2022 International Conference on Computer Science and Software Engineering, CSASE, Duhok, Iraq, 15–17 March 2022; pp. 253–258. [Google Scholar] [CrossRef]

- Toz, G.; Erdogmus, P. A Novel Hybrid Image Segmentation Method for Detection of Suspicious Regions in Mammograms Based on Adaptive Multi-Thresholding (HCOW). IEEE Access 2021, 9, 85377–85391. [Google Scholar] [CrossRef]

- Ranjbarzadeh, R.; Caputo, A.; Tirkolaee, E.B.; Ghoushchi, S.J.; Bendechache, M. Brain tumor segmentation of MRI images: A comprehensive review on the application of artificial intelligence tools. Comput. Biol. Med. 2023, 152, 106405. [Google Scholar] [CrossRef]

- Jain, D.K.; Jain, R.; Upadhyay, Y.; Kathuria, A.; Lan, X. Deep Refinement: Capsule network with attention mechanism-based system for text classification. Neural Comput. Appl. 2020, 32, 1839–1856. [Google Scholar] [CrossRef]

- Guo, M.-H.; Xu, T.-X.; Liu, J.-J.; Liu, Z.-N.; Jiang, P.-T.; Mu, T.-J.; Zhang, S.-H.; Martin, R.R.; Cheng, M.-M.; Hu, S.-M. Attention mechanisms in computer vision: A survey. Comput. Vis. Media 2022, 8, 331–368. [Google Scholar] [CrossRef]

- Qu, Y.; Baghbaderani, R.K.; Qi, H.; Kwan, C. Unsupervised Pansharpening Based on Self-Attention Mechanism. IEEE Trans. Geosci. Remote. Sens. 2020, 59, 3192–3208. [Google Scholar] [CrossRef]

- Ranjbarzadeh, R.; Kasgari, A.B.; Ghoushchi, S.J.; Anari, S.; Naseri, M.; Bendechache, M. Brain tumor segmentation based on deep learning and an attention mechanism using MRI multi-modalities brain images. Sci. Rep. 2021, 11, 10930. [Google Scholar] [CrossRef] [PubMed]

- Shuang, K.; Ren, X.; Guo, H.; Loo, J.; Xu, P. Effective Strategies for Combining Attention Mechanism with LSTM for Aspect-Level Sentiment Classification. In Advances in Intelligent Systems and Computing; Springer: Berlin/Heidelberg, Germany, 2018; pp. 841–850. [Google Scholar] [CrossRef]

- Yao, H.; Zhang, X.; Zhou, X.; Liu, S. Parallel Structure Deep Neural Network Using CNN and RNN with an Attention Mechanism for Breast Cancer Histology Image Classification. Cancers 2019, 11, 1901. [Google Scholar] [CrossRef] [PubMed]

- Zhou, T.; Ruan, S.; Guo, Y.; Canu, S. A Multi-Modality Fusion Network Based on Attention Mechanism for Brain Tumor Segmentation. In Proceedings of the International Symposium on Biomedical Imaging, IEEE Computer Society, Iowa City, IA, USA, 3–7 April 2020; pp. 377–380. [Google Scholar] [CrossRef]

- Liu, H.; Huo, G.; Li, Q.; Guan, X.; Tseng, M.-L. Multiscale lightweight 3D segmentation algorithm with attention mechanism: Brain tumor image segmentation. Expert Syst. Appl. 2023, 214, 119166. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241. [Google Scholar] [CrossRef]

- Zhao, J.; Dang, M.; Chen, Z.; Wan, L. DSU-Net: Distraction-Sensitive U-Net for 3D lung tumor segmentation. Eng. Appl. Artif. Intell. 2022, 109, 104649. [Google Scholar] [CrossRef]

- Liu, J.; Wang, J.; Ruan, W.; Lin, C.; Chen, D. Diagnostic and Gradation Model of Osteoporosis Based on Improved Deep U-Net Network. J. Med. Syst. 2019, 44, 15. [Google Scholar] [CrossRef]

- Raza, R.; Bajwa, U.I.; Mehmood, Y.; Anwar, M.W.; Jamal, M.H. dResU-Net: 3D deep residual U-Net based brain tumor segmentation from multimodal MRI. Biomed. Signal Process. Control 2023, 79, 103861. [Google Scholar] [CrossRef]

- Liu, Z.; Song, Y.-Q.; Sheng, V.S.; Wang, L.; Jiang, R.; Zhang, X.; Yuan, D. Liver CT sequence segmentation based with improved U-Net and graph cut. Expert Syst. Appl. 2019, 126, 54–63. [Google Scholar] [CrossRef]

- Khaled, R.; Vidal, J.; Vilanova, J.C.; Martí, R. A U-Net Ensemble for breast lesion segmentation in DCE MRI. Comput. Biol. Med. 2022, 140, 105093. [Google Scholar] [CrossRef]

- Lee, B.; Yamanakkanavar, N.; Choi, J.Y. Automatic segmentation of brain MRI using a novel patch-wise U-net deep architecture. PLoS ONE 2020, 15, e0236493. [Google Scholar] [CrossRef]

- Li, Q.; Fan, S.; Chen, C. An Intelligent Segmentation and Diagnosis Method for Diabetic Retinopathy Based on Improved U-NET Network. J. Med. Syst. 2019, 43, 304. [Google Scholar] [CrossRef] [PubMed]

- Mo, S.; Cho, M.; Shin, J. Freeze the Discriminator: A Simple Baseline for Fine-Tuning GANs. arXiv 2020, arXiv:2002.10964. [Google Scholar]

- Zhang, Z.; Wen, G.; Chen, S. Weld image deep learning-based on-line defects detection using convolutional neural networks for Al alloy in robotic arc welding. J. Manuf. Process. 2019, 45, 208–216. [Google Scholar] [CrossRef]

- Rajapriya, R.; Rajeswari, K.; Thiruvengadam, S.J. Deep learning and machine learning techniques to improve hand movement classification in myoelectric control system. Biocybern. Biomed. Eng. 2021, 41, 554–571. [Google Scholar] [CrossRef]

- Naiemi, F.; Ghods, V.; Khalesi, H. A novel pipeline framework for multi oriented scene text image detection and recognition. Expert Syst. Appl. 2020, 170, 114549. [Google Scholar] [CrossRef]

- Ranjbarzadeh, R.; Jafarzadeh Ghoushchi, S.; Anari, S.; Safavi, S.; Tataei Sarshar, N.; Babaee Tirkolaee, E.; Bendechache, M. A Deep Learning Approach for Robust, Multi-oriented, and Curved Text Detection. Cognit. Comput. 2022, 1, 1–13. [Google Scholar] [CrossRef]

- Parhizkar, M.; Amirfakhrian, M. Recognizing the Damaged Surface Parts of Cars in the Real Scene Using a Deep Learning Framework. Math. Probl. Eng. 2022, 2022, 5004129. [Google Scholar] [CrossRef]

- Sau, P.C. Retinal Blood Vessel Segmentation Using Attention Module and Tversky Loss Function; Springer: Singapore, 2022; Volume 435, pp. 503–513. [Google Scholar] [CrossRef]

- Ke, Z.; Xu, X.; Zhou, K.; Guo, J. A scale-aware UNet++ model combined with attentional context supervision and adaptive Tversky loss for accurate airway segmentation. Appl. Intell. 2023, 53, 18138–18154. [Google Scholar] [CrossRef]

- Nour, M.; Öcal, H.; Alhudhaif, A.; Polat, K. Skin Lesion Segmentation Based on Edge Attention Vnet with Balanced Focal Tversky Loss. Math. Probl. Eng. 2022, 2022, 4677044. [Google Scholar] [CrossRef]

- Kasgari, A.B.; Safavi, S.; Nouri, M.; Hou, J.; Sarshar, N.T.; Ranjbarzadeh, R. Point-of-Interest Preference Model Using an Attention Mechanism in a Convolutional Neural Network. Bioengineering 2023, 10, 495. [Google Scholar] [CrossRef]

- Ranjbarzadeh, R.; Ghoushchi, S.J.; Sarshar, N.T.; Tirkolaee, E.B.; Ali, S.S.; Kumar, T.; Bendechache, M. ME-CCNN: Multi-encoded images and a cascade convolutional neural network for breast tumor segmentation and recognition. Artif. Intell. Rev. 2023, 1–38. [Google Scholar] [CrossRef]

- Valizadeh, A.; Ghoushchi, S.J.; Ranjbarzadeh, R.; Pourasad, Y. Presentation of a Segmentation Method for a Diabetic Retinopathy Patient’s Fundus Region Detection Using a Convolutional Neural Network. Comput. Intell. Neurosci. 2021, 2021, 7714351. [Google Scholar] [CrossRef] [PubMed]

- Ghoushchi, S.J.; Ranjbarzadeh, R.; Dadkhah, A.H.; Pourasad, Y.; Bendechache, M. An Extended Approach to Predict Retinopathy in Diabetic Patients Using the Genetic Algorithm and Fuzzy C-Means. BioMed Res. Int. 2021, 2021, 5597222. [Google Scholar] [CrossRef]

- Ranjbarzadeh, R.; Dorosti, S.; Ghoushchi, S.J.; Safavi, S.; Razmjooy, N.; Sarshar, N.T.; Anari, S.; Bendechache, M. Nerve optic segmentation in CT images using a deep learning model and a texture descriptor. Complex Intell. Syst. 2022, 8, 3543–3557. [Google Scholar] [CrossRef]

- The Mini-MIAS Database of Mammograms. Available online: http://peipa.essex.ac.uk/info/mias.html (accessed on 8 October 2022).

- Tsochatzidis, L.; Costaridou, L.; Pratikakis, I. Deep Learning for Breast Cancer Diagnosis from Mammograms—A Comparative Study. J. Imaging 2019, 5, 37. [Google Scholar] [CrossRef]

- Lei, Y.; He, X.; Yao, J.; Wang, T.; Wang, L.; Li, W.; Curran, W.J.; Liu, T.; Xu, D.; Yang, X. Breast tumor segmentation in 3D automatic breast ultrasound using Mask scoring R-CNN. Med. Phys. 2021, 48, 204–214. [Google Scholar] [CrossRef] [PubMed]

- Oyelade, O.N.; Ezugwu, A.E. A novel wavelet decomposition and transformation convolutional neural network with data augmentation for breast cancer detection using digital mammogram. Sci. Rep. 2022, 12, 5913. [Google Scholar] [CrossRef] [PubMed]

- Nalepa, J.; Marcinkiewicz, M.; Kawulok, M. Data Augmentation for Brain-Tumor Segmentation: A Review. Front. Comput. Neurosci. 2019, 13, 83.

- Wang, F.; Zhong, S.-H.; Peng, J.; Jiang, J.; Liu, Y. Data Augmentation for EEG-Based Emotion Recognition with Deep Convolutional Neural Networks; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2018; pp. 82–93.

- Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

- Zeiser, F.A.; da Costa, C.A.; Zonta, T.; Marques, N.M.C.; Roehe, A.V.; Moreno, M.; da Righi, R.R. Segmentation of Masses on Mammograms Using Data Augmentation and Deep Learning. J. Digit. Imaging 2020, 33, 858–868.

- Dvornik, N.; Mairal, J.; Schmid, C. On the Importance of Visual Context for Data Augmentation in Scene Understanding. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 2014–2028.

- Singh, A.; Ranjbarzadeh, R.; Raj, K.; Kumar, T.; Roy, A.M. Understanding EEG signals for subject-wise Definition of Armoni Activities. arXiv 2023, arXiv:2301.00948.

- Mousavi, S.M.; Asgharzadeh-Bonab, A.; Ranjbarzadeh, R. Time-Frequency Analysis of EEG Signals and GLCM Features for Depth of Anesthesia Monitoring. Comput. Intell. Neurosci. 2021, 2021, 8430565.

- Haseli, G.; Ranjbarzadeh, R.; Hajiaghaei-Keshteli, M.; Ghoushchi, S.J.; Hasani, A.; Deveci, M.; Ding, W. HECON: Weight assessment of the product loyalty criteria considering the customer decision's halo effect using the convolutional neural networks. Inf. Sci. 2023, 623, 184–205.

- Peng, C.; Zhang, Y.; Meng, Y.; Yang, Y.; Qiu, B.; Cao, Y.; Zheng, J. LMA-Net: A lesion morphology aware network for medical image segmentation towards breast tumors. Comput. Biol. Med. 2022, 147, 105685.

- Kaitouni, S.E.I.E.; Abbad, A.; Tairi, H. A breast tumors segmentation and elimination of pectoral muscle based on hidden markov and region growing. Multimedia Tools Appl. 2018, 77, 31347–31362.

- Safavi, S.; Jalali, M. RecPOID: POI Recommendation with Friendship Aware and Deep CNN. Futur. Internet 2021, 13, 79.

- Safavi, S.; Jalali, M. DeePOF: A hybrid approach of deep convolutional neural network and friendship to Point-of-Interest (POI) recommendation system in location-based social networks. Concurr. Comput. Pract. Exp. 2022, 34, e6981.

- Ranjbarzadeh, R.; Saadi, S.B. Corrigendum to “Automated liver and tumor segmentation based on concave and convex points using fuzzy c-means and mean shift clustering” [Measurement 150 (2020) 107086]. Measurement 2020, 151, 107230.

- Saadi, S.B.; Sarshar, N.T.; Sadeghi, S.; Ranjbarzadeh, R.; Forooshani, M.K.; Bendechache, M. Investigation of Effectiveness of Shuffled Frog-Leaping Optimizer in Training a Convolution Neural Network. J. Health Eng. 2022, 2022, 4703682.

- Anari, S.; Sarshar, N.T.; Mahjoori, N.; Dorosti, S.; Rezaie, A. Review of Deep Learning Approaches for Thyroid Cancer Diagnosis. Math. Probl. Eng. 2022, 2022, 5052435.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).