An Improved Lightweight Dense Pedestrian Detection Algorithm

Abstract

1. Introduction

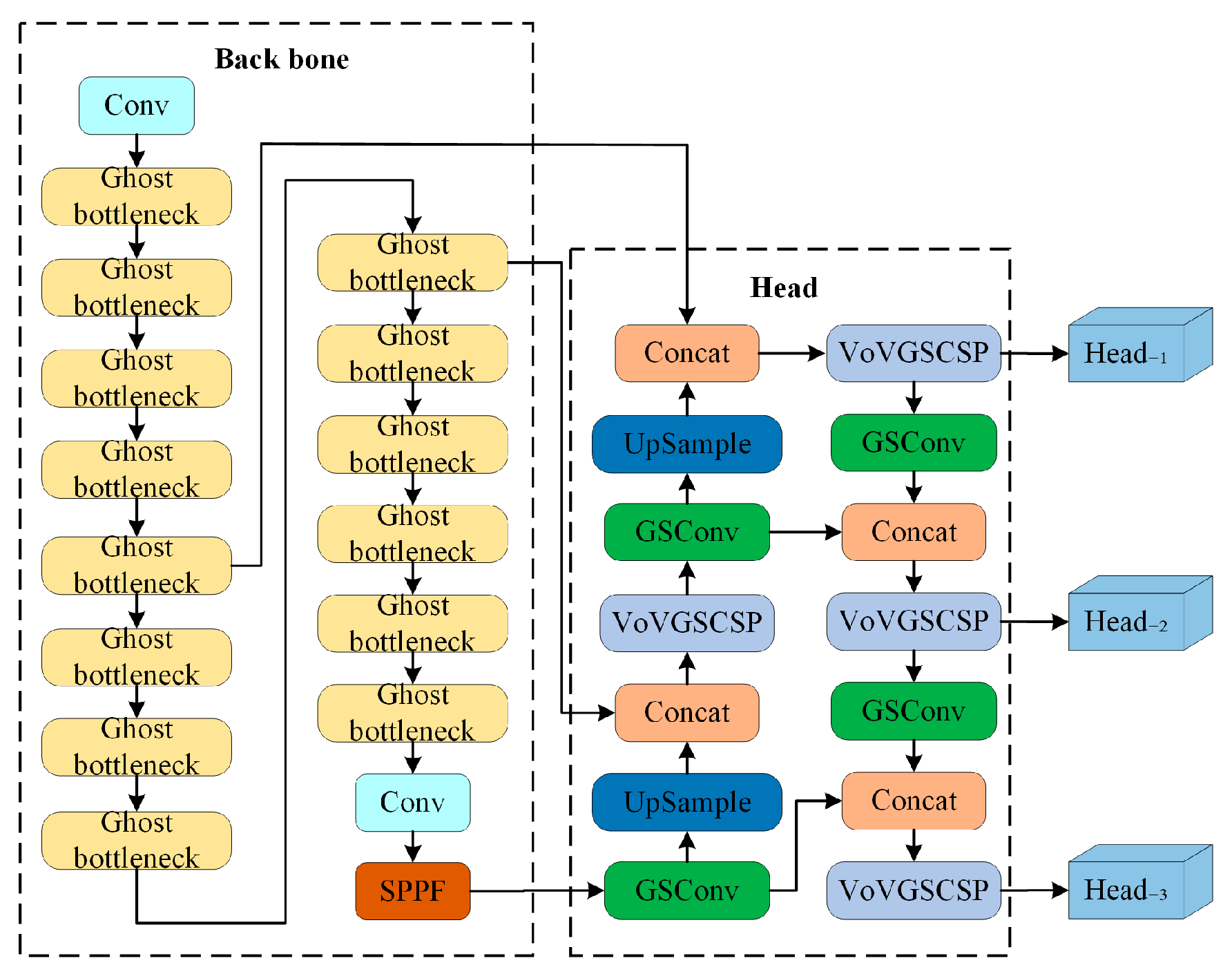

- To further reduce the number of parameters and the computational cost of YOLOv5, we replace the original CSPDarknet53 backbone feature-extraction network with the lightweight GhostNet, and we rebuild the neck with GSConv and VoV-GSCSP modules. This reduces the storage space the model requires and significantly improves detection speed in scenarios with limited computing resources.

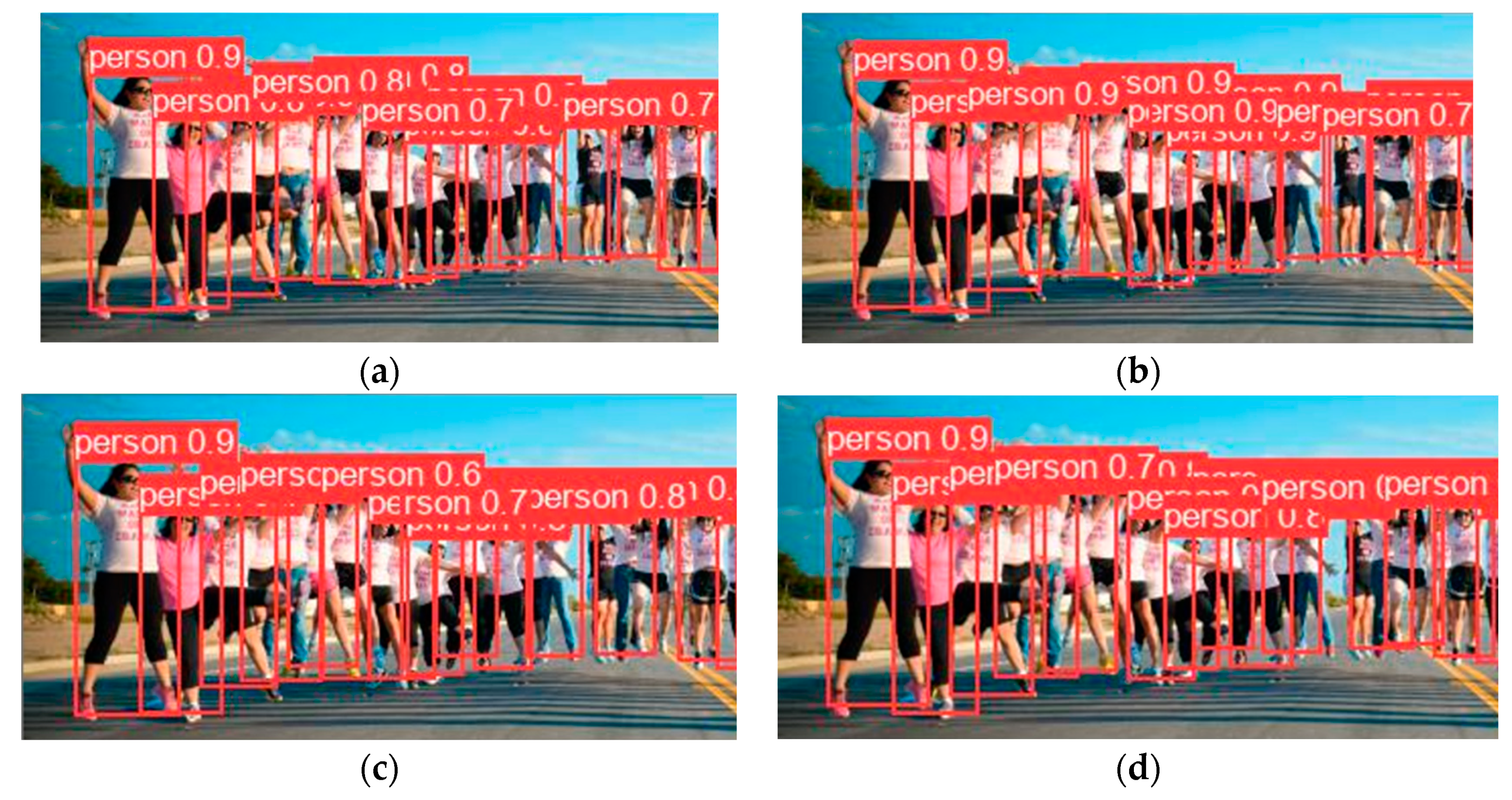

- In dense scenes, predicted bounding boxes overlap heavily. When targets are close together, the original IoU loss function cannot reliably screen the predicted boxes. In this paper, we adopt SIoU as the loss function. It introduces the vector angle between the ground-truth box and the predicted box and redefines the related loss terms, which improves the model's accuracy in crowded scenes.

2. Related Work

2.1. YOLO Object Detection Algorithm

2.2. Model Lightweighting

3. Proposed Algorithm

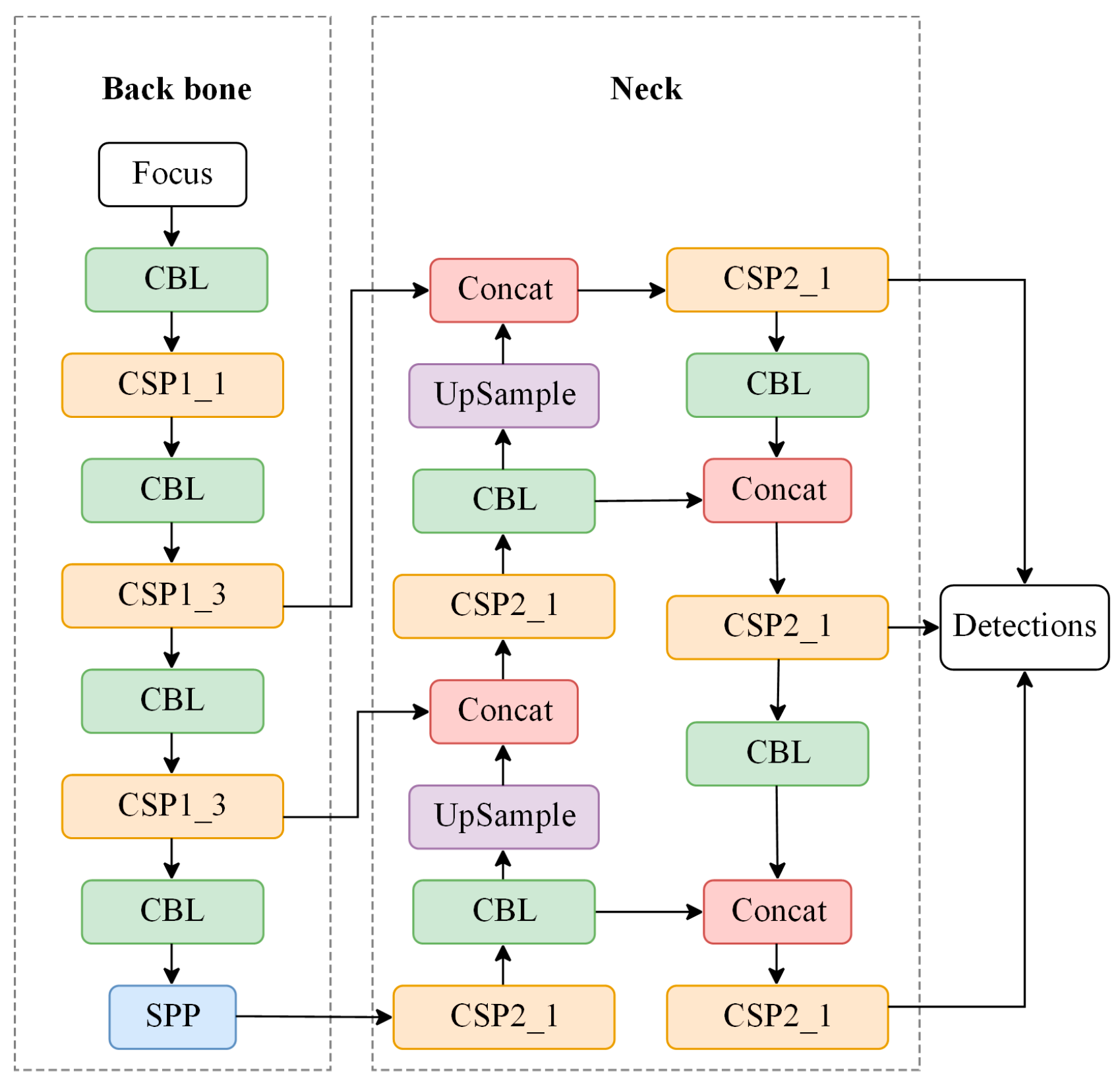

3.1. Network Structure of GS-YOLOv5

Algorithm 1. Pseudocode of GS-YOLOv5

Input: image I, confidence threshold T

Output: detected objects with their bounding boxes and labels
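Algorithm 1 takes an image I and a confidence threshold T and returns boxes with labels. The sketch below is a hedged illustration of how a threshold like T is typically applied at the output of a YOLO-style detector. It filters raw detections by score and then applies per-class greedy non-maximum suppression (NMS). This is not the paper's pseudocode. The `Detection` type, the `postprocess` and `box_iou` helpers, and the default IoU threshold of 0.45 are hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    box: tuple[float, float, float, float]  # (x1, y1, x2, y2), hypothetical format
    score: float                             # confidence score
    label: int                               # class index


def box_iou(a, b) -> float:
    """IoU of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def postprocess(raw, conf_threshold, iou_threshold=0.45):
    """Keep detections with score >= conf_threshold, then apply per-class greedy NMS."""
    candidates = sorted((d for d in raw if d.score >= conf_threshold),
                        key=lambda d: d.score, reverse=True)
    kept = []
    for det in candidates:
        # Drop det if it overlaps an already kept, higher-scoring box of the same class.
        if all(k.label != det.label or box_iou(k.box, det.box) < iou_threshold for k in kept):
            kept.append(det)
    return kept


if __name__ == "__main__":
    raw = [
        Detection((0, 0, 10, 10), 0.9, 0),
        Detection((1, 1, 10, 10), 0.8, 0),    # overlaps the first box, IoU 0.81
        Detection((20, 20, 30, 30), 0.7, 0),
        Detection((0, 0, 10, 10), 0.1, 0),    # below the confidence threshold
    ]
    for d in postprocess(raw, conf_threshold=0.25):
        print(d)
```

The `iou_threshold` trades off two goals: removing duplicate boxes versus keeping adjacent pedestrians whose boxes genuinely overlap. That is the box-screening situation the introduction describes for dense scenes.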

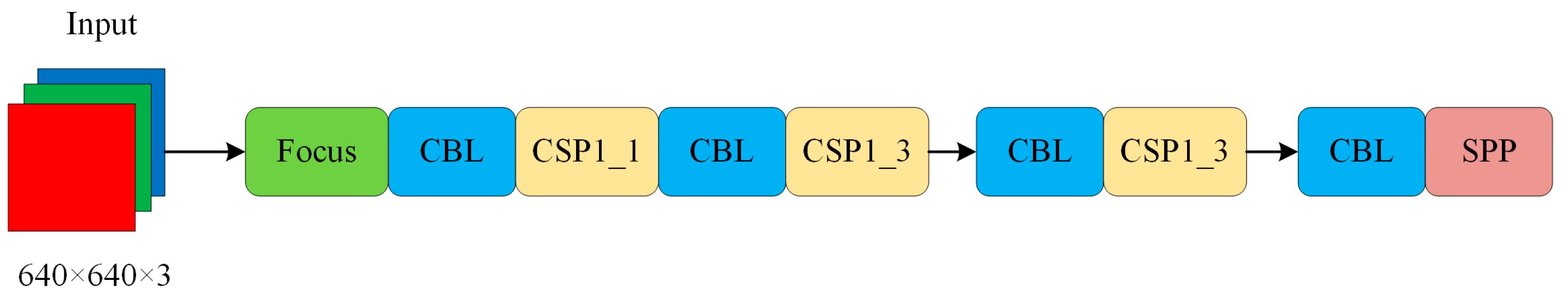

3.1.1. GhostNet-Optimized Backbone

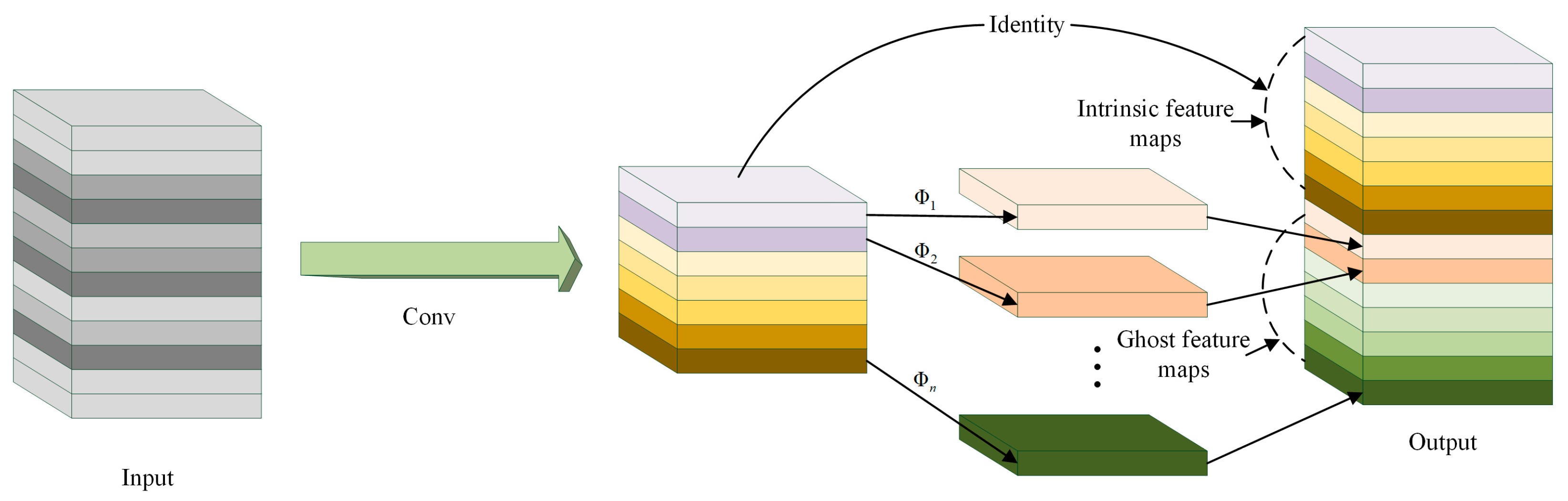

- Ghost Module

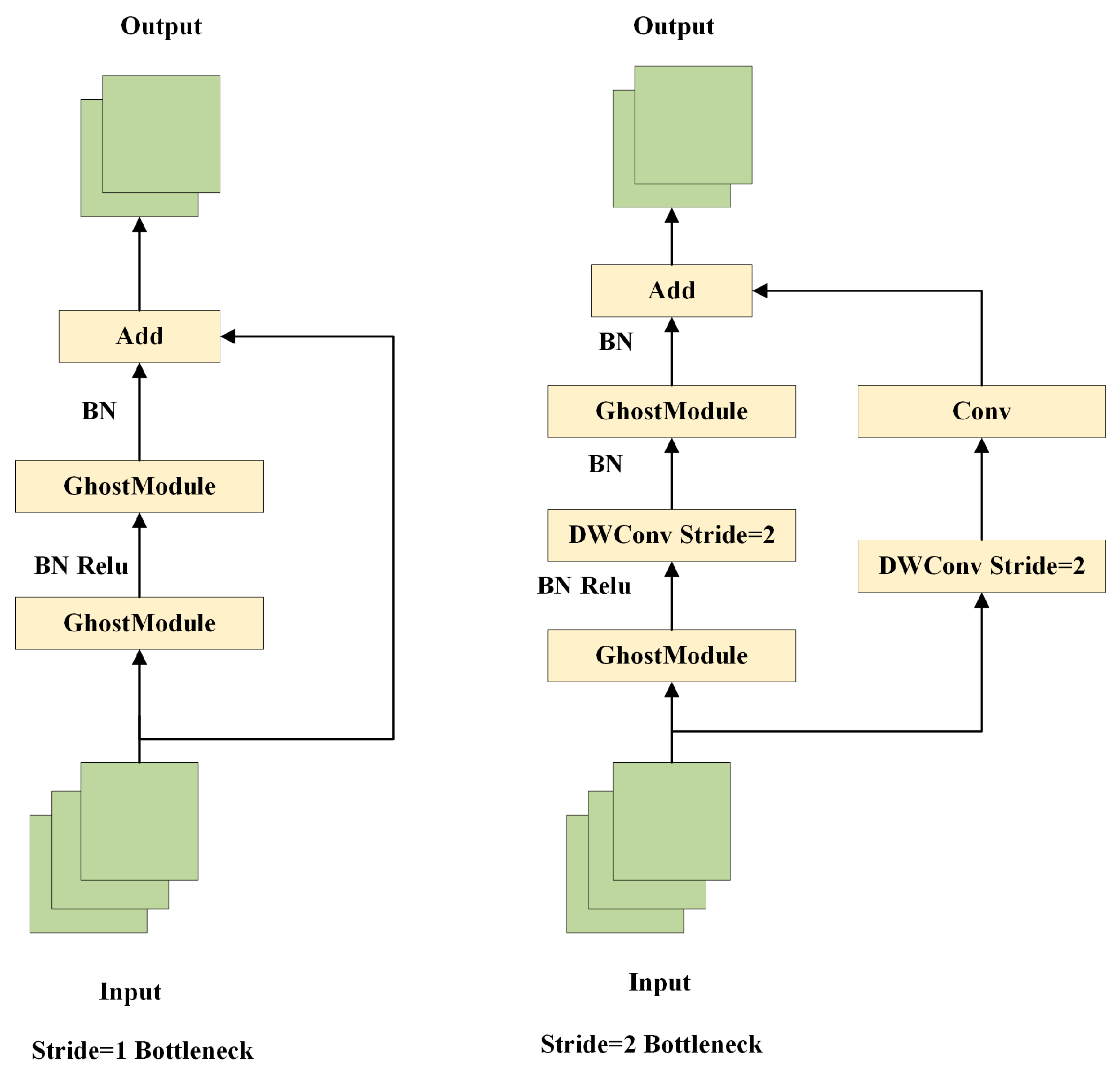

- B.

- Ghost bottleneck
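The saving offered by the Ghost module can be made concrete by counting weights. This is a minimal sketch, assuming the formulation of the GhostNet paper:

- an ordinary k×k convolution produces C_out/s "intrinsic" feature maps;
- (s − 1) cheap d×d depthwise operations per intrinsic map generate the remaining "ghost" maps.

Biases and BatchNorm are ignored. The channel sizes and the helper names are illustrative assumptions, not values taken from GS-YOLOv5.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of an ordinary k x k convolution (bias and BatchNorm ignored)."""
    return k * k * c_in * c_out


def ghost_module_params(c_in: int, c_out: int, k: int, s: int = 2, d: int = 3) -> int:
    """Weights of a Ghost module under the assumed structure:
    a primary k x k convolution yields c_out // s intrinsic feature maps, and
    (s - 1) cheap d x d depthwise operations per intrinsic map yield the ghost maps.
    """
    intrinsic = c_out // s
    primary = k * k * c_in * intrinsic
    cheap = (s - 1) * intrinsic * d * d
    return primary + cheap


if __name__ == "__main__":
    # Illustrative channel sizes only; they are not taken from GS-YOLOv5.
    sc = standard_conv_params(c_in=128, c_out=256, k=3)
    ghost = ghost_module_params(c_in=128, c_out=256, k=3, s=2, d=3)
    print(sc, ghost, round(sc / ghost, 2))  # 294912 148608 1.98
```

With s = 2, the weight count roughly halves. This is consistent with the GhostNet paper's observation that the compression ratio approaches s, because the cheap depthwise term is small next to the primary convolution.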

3.1.2. GSConv-Optimized Neck

- Depth Separable Convolution (DSC) and Standard Convolution (SC)

- B.

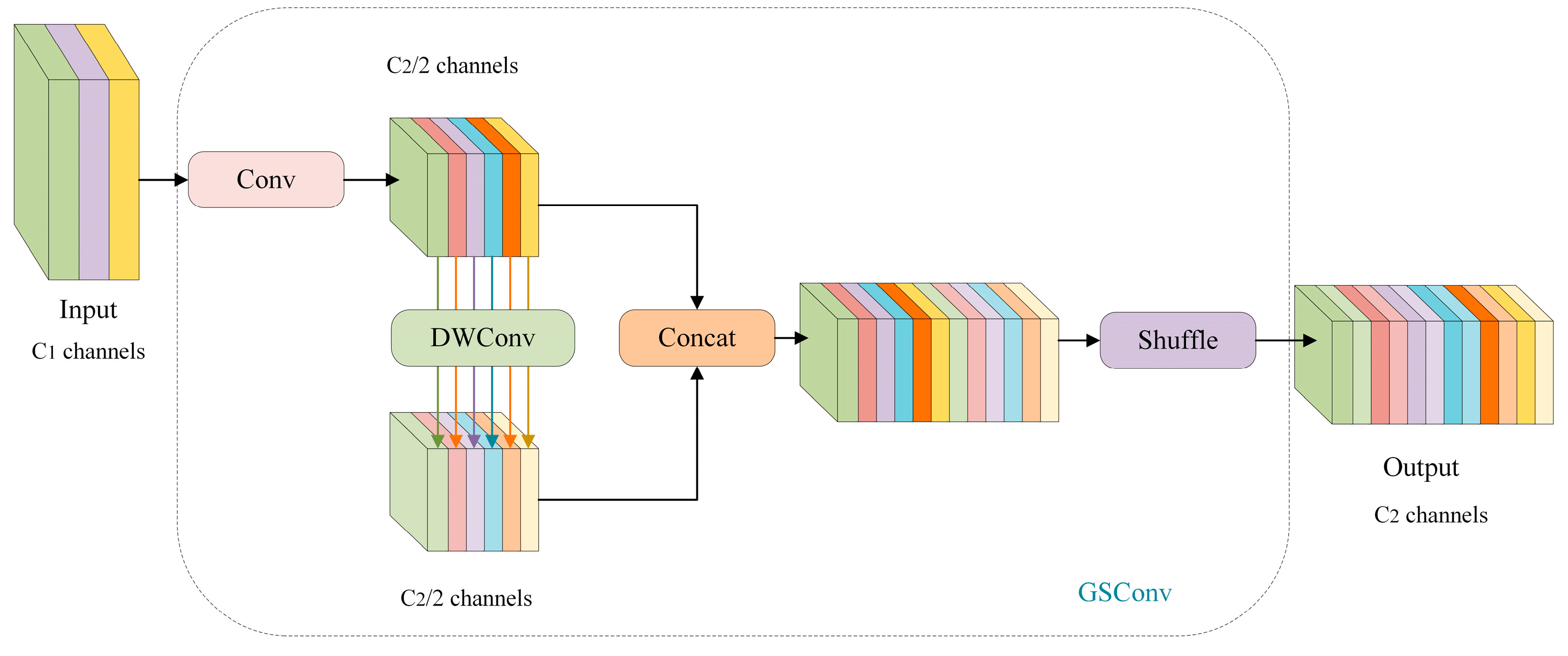

- GSConv

- C.

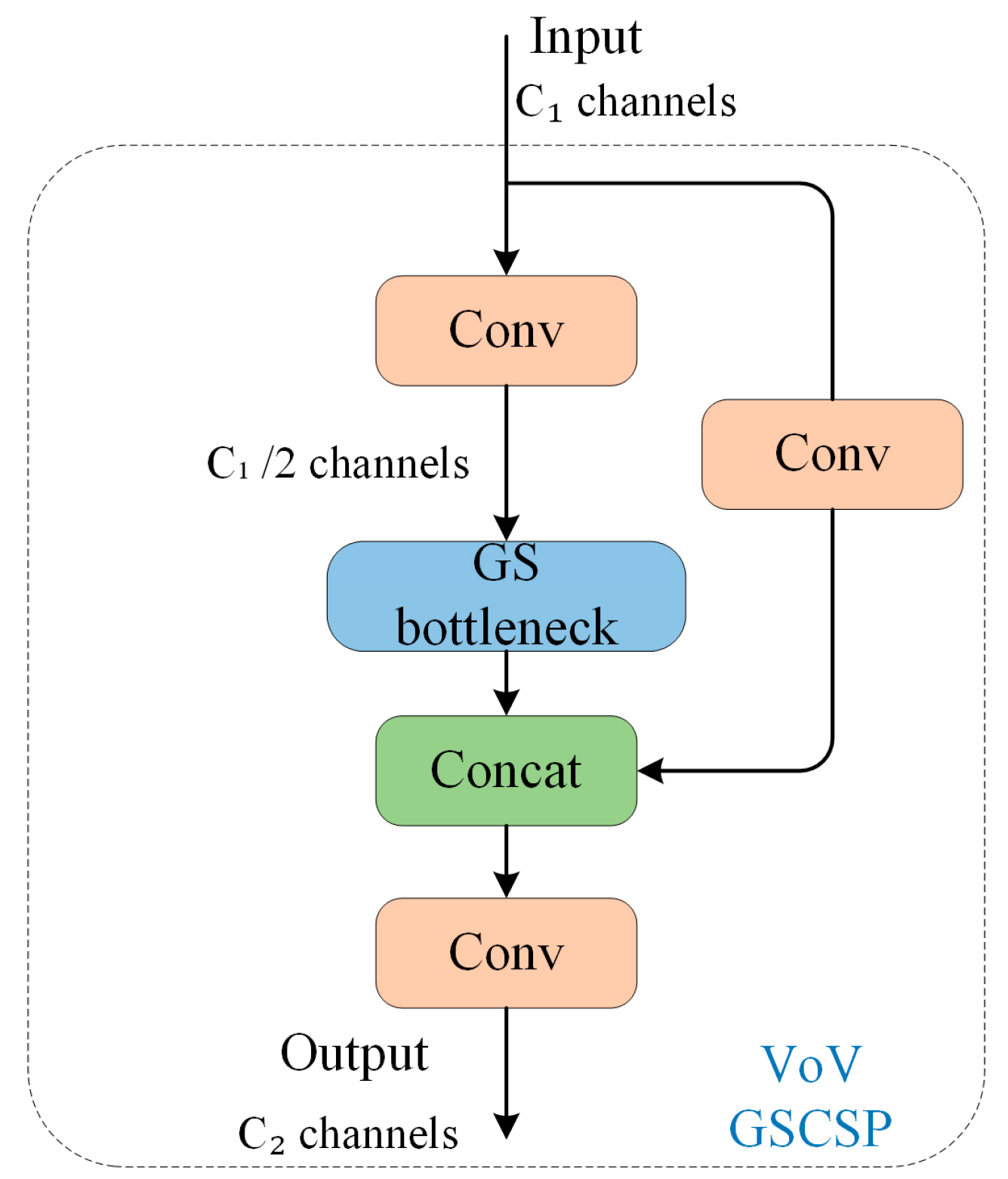

- VoV-GSCSP
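To compare standard convolution (SC), depthwise separable convolution (DSC), and GSConv on equal terms, the sketch below counts weights for each. It assumes the GSConv structure described in the Slim-neck paper:

- a standard convolution produces half of the output channels;
- a depthwise convolution is applied to that half;
- the two halves are concatenated and channel-shuffled, which adds no weights.

The 5×5 depthwise kernel (`dw_k`) and the simple interleaving order used in `channel_shuffle` are assumptions. The reference code may permute channels differently. Biases and BatchNorm are ignored, and all helper names are hypothetical.

```python
def sc_params(c_in: int, c_out: int, k: int) -> int:
    """Standard convolution: every output channel sees every input channel."""
    return k * k * c_in * c_out


def dsc_params(c_in: int, c_out: int, k: int) -> int:
    """Depthwise separable convolution: k x k depthwise + 1 x 1 pointwise."""
    return k * k * c_in + c_in * c_out


def gsconv_params(c_in: int, c_out: int, k: int, dw_k: int = 5) -> int:
    """GSConv under the stated assumptions: SC to c_out // 2 channels, then a
    dw_k x dw_k depthwise conv on those channels; concat + shuffle add no weights."""
    half = c_out // 2
    return sc_params(c_in, half, k) + dw_k * dw_k * half


def channel_shuffle(channels: list, groups: int = 2) -> list:
    """Interleave channel groups: view as groups x (n / groups), transpose, flatten."""
    per_group = len(channels) // groups
    return [channels[g * per_group + i] for i in range(per_group) for g in range(groups)]


if __name__ == "__main__":
    c_in, c_out, k = 128, 256, 3  # illustrative sizes, not from the paper
    print(sc_params(c_in, c_out, k), dsc_params(c_in, c_out, k), gsconv_params(c_in, c_out, k))
    print(channel_shuffle(["sc0", "sc1", "dw0", "dw1"]))
```

Under these assumptions:

- GSConv costs about half as much as SC;
- DSC is cheaper still;
- the shuffle spreads the densely mixed SC channels among the cheaper depthwise channels.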

3.2. SIoU Loss Function

3.2.1. Existing Loss Function Analysis

3.2.2. SIoU Loss

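- Angle loss, defined as follows:

$$\Lambda = 1 - 2\sin^{2}\left(\arcsin\left(\frac{C_h}{\sigma}\right) - \frac{\pi}{4}\right)$$

$$C_h = \max\left(b_{c_y}^{gt}, b_{c_y}\right) - \min\left(b_{c_y}^{gt}, b_{c_y}\right), \qquad \sigma = \sqrt{\left(b_{c_x}^{gt} - b_{c_x}\right)^{2} + \left(b_{c_y}^{gt} - b_{c_y}\right)^{2}}$$

where $C_h$ is the height difference between the center point of the ground-truth box and the center point of the predicted box, and $\sigma$ is the distance between the two center points. $(b_{c_x}^{gt}, b_{c_y}^{gt})$ are the coordinates of the center of the ground-truth box, and $(b_{c_x}, b_{c_y})$ are the coordinates of the center of the predicted box.

- Distance loss, defined as follows:

$$\Delta = \sum_{t=x,y}\left(1 - e^{-\gamma\rho_t}\right), \qquad \rho_x = \left(\frac{b_{c_x}^{gt} - b_{c_x}}{c_w}\right)^{2}, \qquad \rho_y = \left(\frac{b_{c_y}^{gt} - b_{c_y}}{c_h}\right)^{2}, \qquad \gamma = 2 - \Lambda$$

where $c_w$ and $c_h$ are the width and height of the minimum enclosing rectangle of the ground-truth box and the predicted box.

- Shape loss, defined as follows:

$$\Omega = \sum_{t=w,h}\left(1 - e^{-\omega_t}\right)^{\theta}, \qquad \omega_w = \frac{\left|w - w^{gt}\right|}{\max\left(w, w^{gt}\right)}, \qquad \omega_h = \frac{\left|h - h^{gt}\right|}{\max\left(h, h^{gt}\right)}$$

where $(w, h)$ and $(w^{gt}, h^{gt})$ are the width and height of the predicted box and the ground-truth box, respectively, and $\theta$ controls the degree of attention paid to the shape loss.

- The SIoU loss function is defined as follows:

$$L_{SIoU} = 1 - IoU + \frac{\Delta + \Omega}{2}$$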
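The angle, distance, and shape terms described above can be checked numerically. Below is a minimal pure-Python sketch of the SIoU loss for a single predicted/ground-truth box pair. It rests on three assumptions not stated in this section:

- boxes are given as (cx, cy, w, h);
- θ = 4;
- a small `eps` guards each division.

A training implementation would operate on batched tensors with autograd. This sketch only evaluates the loss value, and its function names are hypothetical.

```python
import math

Box = tuple[float, float, float, float]  # (cx, cy, w, h), an assumed box format


def iou(p: Box, g: Box, eps: float = 1e-9) -> float:
    """Plain IoU of two center-format boxes."""
    iw = max(0.0, min(p[0] + p[2] / 2, g[0] + g[2] / 2) - max(p[0] - p[2] / 2, g[0] - g[2] / 2))
    ih = max(0.0, min(p[1] + p[3] / 2, g[1] + g[3] / 2) - max(p[1] - p[3] / 2, g[1] - g[3] / 2))
    inter = iw * ih
    return inter / (p[2] * p[3] + g[2] * g[3] - inter + eps)


def angle_cost(p: Box, g: Box, eps: float = 1e-9) -> float:
    """Lambda = 1 - 2 sin^2(arcsin(C_h / sigma) - pi/4)."""
    dx, dy = g[0] - p[0], g[1] - p[1]
    sigma = math.hypot(dx, dy) + eps         # distance between the two centers
    c_h_diff = abs(dy)                       # C_h = max(cy) - min(cy)
    sin_alpha = min(c_h_diff / sigma, 1.0)   # clamp against rounding error
    return 1.0 - 2.0 * math.sin(math.asin(sin_alpha) - math.pi / 4) ** 2


def distance_cost(p: Box, g: Box, lam: float, eps: float = 1e-9) -> float:
    """Delta = sum over x, y of (1 - exp(-gamma * rho_t)), with gamma = 2 - Lambda."""
    # c_w, c_h: size of the smallest rectangle enclosing both boxes.
    c_w = max(p[0] + p[2] / 2, g[0] + g[2] / 2) - min(p[0] - p[2] / 2, g[0] - g[2] / 2)
    c_h = max(p[1] + p[3] / 2, g[1] + g[3] / 2) - min(p[1] - p[3] / 2, g[1] - g[3] / 2)
    rho_x = ((g[0] - p[0]) / (c_w + eps)) ** 2
    rho_y = ((g[1] - p[1]) / (c_h + eps)) ** 2
    gamma = 2.0 - lam
    return (1.0 - math.exp(-gamma * rho_x)) + (1.0 - math.exp(-gamma * rho_y))


def shape_cost(p: Box, g: Box, theta: float = 4.0) -> float:
    """Omega = sum over w, h of (1 - exp(-omega_t)) ** theta."""
    omega_w = abs(p[2] - g[2]) / max(p[2], g[2])
    omega_h = abs(p[3] - g[3]) / max(p[3], g[3])
    return (1.0 - math.exp(-omega_w)) ** theta + (1.0 - math.exp(-omega_h)) ** theta


def siou_loss(p: Box, g: Box, theta: float = 4.0, eps: float = 1e-9) -> float:
    """L_SIoU = 1 - IoU + (Delta + Omega) / 2."""
    lam = angle_cost(p, g, eps)
    delta = distance_cost(p, g, lam, eps)
    omega = shape_cost(p, g, theta)
    return 1.0 - iou(p, g, eps) + (delta + omega) / 2.0


if __name__ == "__main__":
    gt = (10.0, 10.0, 4.0, 8.0)
    for pred in [(10.0, 10.0, 4.0, 8.0), (11.0, 10.0, 4.0, 8.0), (13.0, 13.0, 3.0, 6.0)]:
        print(pred, round(siou_loss(pred, gt), 4))
```

Identical boxes give a loss that is numerically zero. The angle term changes the weight γ inside the distance term:

- a prediction offset purely horizontally gets Λ ≈ 0, so γ ≈ 2;
- a 45° offset gets Λ ≈ 1, so γ ≈ 1.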

4. Experiments

4.1. Experimental Environment and Parameter Description

4.2. Datasets

4.3. Evaluation Metrics

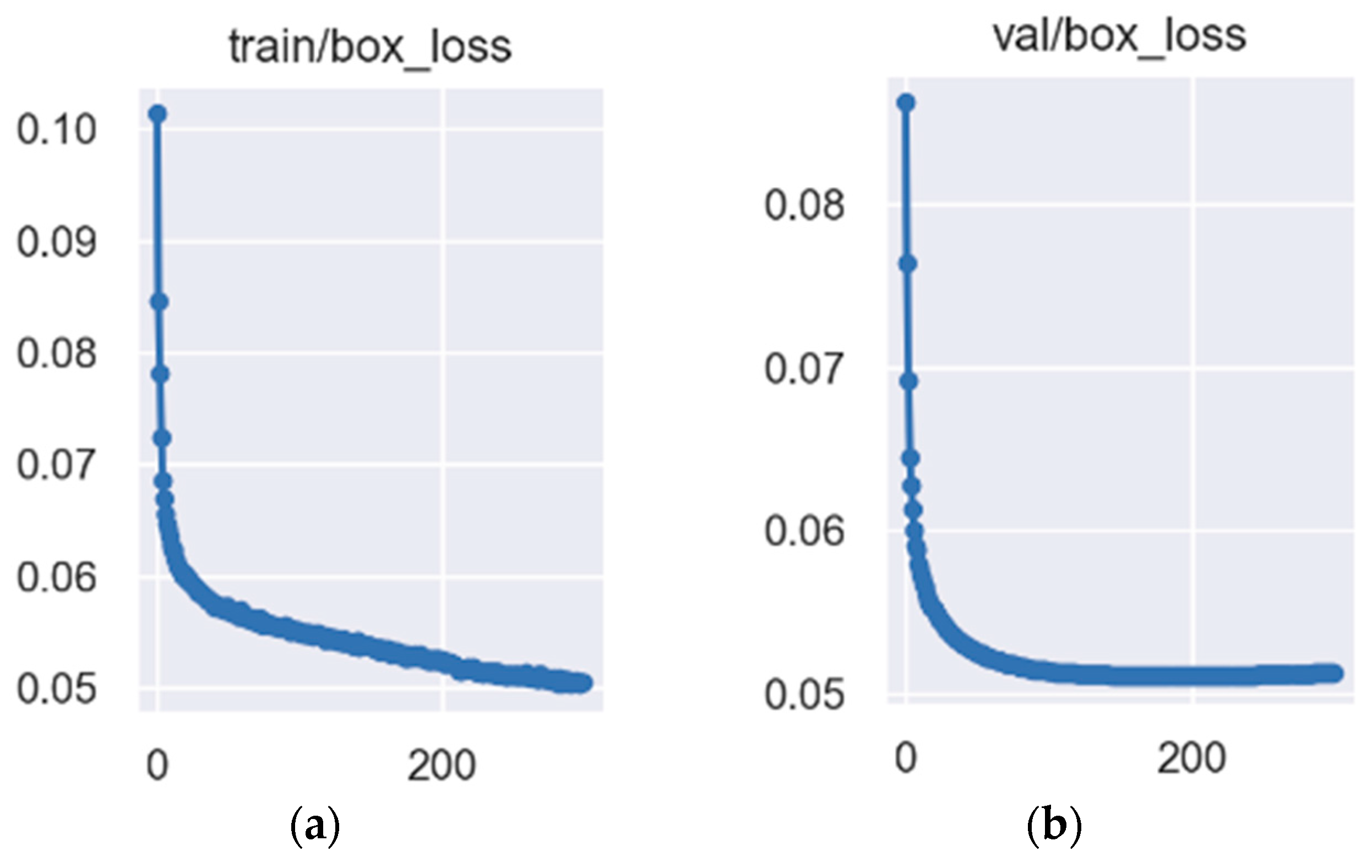
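The result tables report precision (P), recall (R), mAP0.5, and mAP0.5:0.95. The sketch below uses the standard definitions, assuming:

- P = TP / (TP + FP) and R = TP / (TP + FN);
- AP is computed by all-point interpolation of the precision-recall curve (one common convention; others, such as 101-point interpolation, also exist);
- mAP0.5:0.95 is the mean AP over IoU thresholds from 0.50 to 0.95 in steps of 0.05.

The paper's exact evaluation protocol is not reproduced here, and the helper names are hypothetical.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of predicted boxes that are correct."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of ground-truth objects that are detected."""
    return tp / (tp + fn) if tp + fn else 0.0


def average_precision(recalls, precisions) -> float:
    """All-point interpolated AP; recalls must be sorted in ascending order."""
    mrec = [0.0] + list(recalls) + [1.0]
    mpre = [0.0] + list(precisions) + [0.0]
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas under the envelope.
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


# IoU thresholds used for mAP0.5:0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def map_50_95(ap_per_threshold) -> float:
    """Mean of AP values computed at each threshold in IOU_THRESHOLDS."""
    if len(ap_per_threshold) != len(IOU_THRESHOLDS):
        raise ValueError("expected one AP value per IoU threshold")
    return sum(ap_per_threshold) / len(ap_per_threshold)


if __name__ == "__main__":
    print(precision(8, 2), recall(8, 8))
    print(average_precision([0.5, 1.0], [1.0, 0.5]))
```

Because mAP0.5:0.95 also averages over stricter IoU thresholds, it rewards tighter box localization than mAP0.5 does.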

4.4. Experimental Results

4.4.1. Ablation Experiments

4.4.2. Comparison of Detection Performance with Other Algorithms

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Lan, X.; Zhang, S.; Yuen, P.C. Robust Joint Discriminative Feature Learning for Visual Tracking. In Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence (IJCAI-16), New York, NY, USA, 9–15 July 2016; pp. 3403–3410.

- Ma, A.J.; Li, J.; Yuen, P.C.; Li, P. Cross-domain person reidentification using domain adaptation ranking SVMs. IEEE Trans. Image Process. 2015, 24, 1599–1613.

- Ma, A.J.; Yuen, P.C.; Zou, W.W.; Lai, J.H. Supervised spatio-temporal neighborhood topology learning for action recognition. IEEE Trans. Circuits Syst. Video Technol. 2013, 23, 1447–1460.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Jocher, G.; Changyu, L.; Hogan, A.; Yu, L.; Rai, P.; Sullivan, T. Ultralytics/Yolov5: Initial Release. (Version v1.0). 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 20 June 2023).

- Li, J.; Wang, H.; Xu, Y.; Liu, F. Road Object Detection of YOLO Algorithm with Attention Mechanism. Front. Signal Process. 2021, 5, 9–16.

- Thakkar, H.; Tambe, N.; Thamke, S.; Gaidhane, V.K. Object Tracking by Detection using YOLO and SORT. Int. J. Sci. Res. Comput. Sci. Eng. Inf. Technol. 2020.

- Jin, Y.; Wen, Y.; Liang, J. Embedded real-time pedestrian detection system using YOLO optimized by LNN. In Proceedings of the 2020 International Conference on Electrical, Communication, and Computer Engineering (ICECCE), Istanbul, Turkey, 12–13 June 2020; pp. 1–5.

- Wang, G.; Ding, H.; Yang, Z.; Li, B.; Wang, Y.; Bao, L. TRC-YOLO: A real-time detection method for lightweight targets based on mobile devices. IET Comput. Vis. 2022, 16, 126–142.

- Zhao, Z.; Hao, K.; Ma, X.; Liu, X.; Zheng, T.; Xu, J.; Cui, S. SAI-YOLO: A lightweight network for real-time detection of driver mask-wearing specification on resource-constrained devices. Comput. Intell. Neurosci. 2021, 2021, 4529107.

- Guo, Y.; Chen, S.; Zhan, R.; Wang, W.; Zhang, J. LMSD-YOLO: A Lightweight YOLO Algorithm for Multi-Scale SAR Ship Detection. Remote Sens. 2022, 14, 4801.

- Zhang, M.; Xu, S.; Song, W.; He, Q.; Wei, Q. Lightweight underwater object detection based on YOLO v4 and multi-scale attentional feature fusion. Remote Sens. 2021, 13, 4706.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. ShuffleNet V2: Practical guidelines for efficient CNN architecture design. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 116–131.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1580–1589.

- Chen, H.-Y.; Su, C.-Y. An enhanced hybrid MobileNet. In Proceedings of the 2018 9th International Conference on Awareness Science and Technology (iCAST), Fukuoka, Japan, 19–21 September 2018; pp. 308–312.

- Su, J.; Faraone, J.; Liu, J.; Zhao, Y.; Thomas, D.B.; Leong, P.H.; Cheung, P.Y. Redundancy-reduced MobileNet acceleration on reconfigurable logic for ImageNet classification. In Applied Reconfigurable Computing. Architectures, Tools, and Applications, Proceedings of the 14th International Symposium, ARC 2018, Santorini, Greece, 2–4 May 2018; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; Volume 14, pp. 16–28.

- Tan, S.; Lu, G.; Jiang, Z.; Huang, L. Improved YOLOv5 Network Model and Application in Safety Helmet Detection. In Proceedings of the 2021 IEEE International Conference on Intelligence and Safety for Robotics (ISR), Tokoname, Japan, 4–6 March 2021; pp. 330–333.

- Li, H.; Li, J.; Wei, H.; Liu, Z.; Zhan, Z.; Ren, Q. Slim-neck by GSConv: A better design paradigm of detector architectures for autonomous vehicles. arXiv 2022, arXiv:2206.02424.

- Zheng, Z.; Wang, P.; Ren, D.; Liu, W.; Ye, R.; Hu, Q.; Zuo, W. Enhancing geometric factors in model learning and inference for object detection and instance segmentation. IEEE Trans. Cybern. 2021, 52, 8574–8586.

- Yu, J.; Jiang, Y.; Wang, Z.; Cao, Z.; Huang, T. UnitBox: An advanced object detection network. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 516–520.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12993–13000.

| Model | GhostNet | GSConv | SIoU | mAP0.5:0.95 | Params | FLOPs |
|---|---|---|---|---|---|---|
| Model 1 | | | | 0.316 | 21.04 M | 47.9 G |
| Model 2 | √ | | | 0.319 | 12.12 M | 20.0 G |
| Model 3 | √ | √ | | 0.318 | 12.55 M | 17.3 G |
| Model 4 | √ | √ | √ | 0.321 | 12.55 M | 17.3 G |
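The relative savings implied by the GhostNet/GSConv/SIoU ablation table can be computed directly from its parameter and FLOPs columns. The short sketch below compares Model 1 (none of the three modules enabled) with Model 4 (all three enabled). It uses only the numbers in that table. The `ABLATION` dictionary and the `relative_reduction` helper are hypothetical names for illustration.

```python
# Values copied from the ablation table (parameters in millions, FLOPs in G).
ABLATION = {
    "Model 1": {"map": 0.316, "params_m": 21.04, "gflops": 47.9},
    "Model 4": {"map": 0.321, "params_m": 12.55, "gflops": 17.3},
}


def relative_reduction(before: float, after: float) -> float:
    """Fractional reduction from `before` to `after` (0.25 means 25% smaller)."""
    return (before - after) / before


base, final = ABLATION["Model 1"], ABLATION["Model 4"]
param_cut = relative_reduction(base["params_m"], final["params_m"])
flops_cut = relative_reduction(base["gflops"], final["gflops"])
map_gain = final["map"] - base["map"]
print(f"Params: -{param_cut:.1%}, FLOPs: -{flops_cut:.1%}, mAP0.5:0.95: +{map_gain:.3f}")
```

Running it prints `Params: -40.4%, FLOPs: -63.9%, mAP0.5:0.95: +0.005`.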

| Model | mAP0.5 | mAP0.5:0.95 | P | R |
|---|---|---|---|---|
| Model 1 | 0.601 | 0.316 | 0.779 | 0.38 |
| Model 2 | 0.597 | 0.319 | 0.834 | 0.33 |
| Model 3 | 0.598 | 0.318 | 0.85 | 0.319 |
| Model 4 | 0.599 | 0.321 | 0.854 | 0.317 |

| Model | mAP0.5 | mAP0.5:0.95 | P | R |
|---|---|---|---|---|
| YOLOv5 + GhostNet + GSConv + SIoU | 0.599 | 0.321 | 0.854 | 0.317 |
| YOLOv5 + MobileNetV3 | 0.473 | 0.185 | 0.668 | 0.408 |
| YOLOv5 + ShuffleNetV2 | 0.473 | 0.186 | 0.654 | 0.414 |
| YOLOv5 + EfficientLite | 0.505 | 0.204 | 0.689 | 0.437 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, M.; Chen, S.; Sun, C.; Fang, S.; Han, J.; Wang, X.; Yun, H. An Improved Lightweight Dense Pedestrian Detection Algorithm. Appl. Sci. 2023, 13, 8757. https://doi.org/10.3390/app13158757
