Trust Model of Privacy-Concerned, Emotionally Aware Agents in a Cooperative Logistics Problem

Abstract

1. Introduction

- A bouquet of flowers, especially if the handler is seen in a suspicious way due to personal circumstances, e.g., already married, too young/old, etc.;

- A stroller for a baby, especially if the handler’s work colleagues do not know about it;

- A set of masks when there is a mask shortage in a pandemic situation;

- A piece of clothing inconsistent with the perceived gender role of the handler;

- Any kind of item with a political/ideological meaning.

2. State of the Art

2.1. Computational Representation of Emotions

2.2. Computational Representation of Trust

2.3. Computational Representation of Privacy Issues in Social Interactions

3. Proposed Emotional Model

3.1. Sources of Emotions

- Happy: for instance, when the encountered agent walks straight and looks toward its front side;

- Sad: for instance, when the encountered agent has a curved back and looks down at the floor;

- Anger: for instance, when the encountered agent raises its shoulders, its hands form fists and its movements are fast and rigid;

- Fear: for instance, when the encountered agent stands still and its hands and legs shake;

- Surprise: for instance, when the encountered agent stands still and its head leans back as it raises its hands.

1. Others: when the emotion perceived in response to the encountered agent is anger, a fear emotion is produced;

2. Alien privacy: when a privacy issue is associated with the other agent involved in the meeting (which may be due to the object carried by the other agent), a surprise emotion is produced;

3. Own privacy: when a privacy issue is associated with the subject agent involved in the meeting (which may be due to the place of the meeting or the object carried by the subject agent), an anger emotion is produced;

4. Positive rewards of performance: when the subject agent successfully accomplishes its task, a joy emotion is produced;

5. Negative rewards of performance: when the subject agent poorly accomplishes its task, a sadness emotion is produced.
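The five sources listed above amount to a lookup from source to produced emotion. A minimal Python sketch of that mapping follows; the names `Source`, `EMOTION_BY_SOURCE` and `emotions_for` are hypothetical and chosen only for illustration, and the sketch says nothing about how the resulting emotions are later combined.

```python
from enum import Enum


class Source(Enum):
    """Sources of emotion listed in this section (identifiers are illustrative)."""
    OTHERS_ANGER = "others"            # anger perceived in the encountered agent
    ALIEN_PRIVACY = "alien_privacy"    # privacy issue tied to the other agent
    OWN_PRIVACY = "own_privacy"        # privacy issue tied to the subject agent
    POSITIVE_REWARD = "positive_reward"
    NEGATIVE_REWARD = "negative_reward"


# Emotion produced by each source, as stated in the list above.
EMOTION_BY_SOURCE = {
    Source.OTHERS_ANGER: "fear",
    Source.ALIEN_PRIVACY: "surprise",
    Source.OWN_PRIVACY: "anger",
    Source.POSITIVE_REWARD: "joy",
    Source.NEGATIVE_REWARD: "sadness",
}


def emotions_for(sources):
    """Return the emotions produced by the given sources, in order."""
    return [EMOTION_BY_SOURCE[s] for s in sources]


if __name__ == "__main__":
    print(emotions_for([Source.OWN_PRIVACY, Source.POSITIVE_REWARD]))
```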

3.2. The PAD Levels of Emotions

3.3. PAD Changes Due to the Source of Emotion

- The number of tasks for which the agent is responsible at a given moment (in our logistic problem, its own boxes to be moved plus delegated boxes), as a high number of tasks causes a sense of a lack of control of the situation. Each task beyond the first decreases dominance by 0.05; on the other hand, having no current task increases dominance by 0.1, and having just one task increases it by 0.05;

- The overall achievement/performance of the agent across all executions (not just the instant reward for the current goal), as poor performance causes a sense of a lack of control (forcing the agent to accept offers of help from untrusted agents): an accumulated reward per task below the average reward causes a decrease of 0.1 in dominance, while a greater-than-average reward causes an increase of 0.1.
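The two dominance rules above can be written as a short worked example. The sketch below is illustrative only and rests on three assumptions not stated in the text: with two or more tasks only the per-task penalty applies (the one-task bonus is not added), a reward exactly equal to the average leaves dominance unchanged, and dominance is clamped to [−1, 1]. All function names are hypothetical.

```python
def dominance_delta_from_tasks(num_tasks: int) -> float:
    """Dominance change caused by the current number of tasks."""
    if num_tasks == 0:
        return 0.1           # no current task
    if num_tasks == 1:
        return 0.05          # just one task
    # Assumption: each task beyond the first costs 0.05, with no bonus added.
    return -0.05 * (num_tasks - 1)


def dominance_delta_from_performance(reward_per_task: float,
                                     average_reward: float) -> float:
    """Dominance change caused by accumulated performance."""
    if reward_per_task < average_reward:
        return -0.1
    if reward_per_task > average_reward:
        return 0.1
    return 0.0               # assumption: equal rewards change nothing


def update_dominance(dominance: float, num_tasks: int,
                     reward_per_task: float, average_reward: float) -> float:
    """Apply both deltas and clamp to [-1, 1] (the clamping is an assumption)."""
    new_value = (dominance
                 + dominance_delta_from_tasks(num_tasks)
                 + dominance_delta_from_performance(reward_per_task, average_reward))
    return max(-1.0, min(1.0, new_value))


if __name__ == "__main__":
    # Three tasks and below-average reward: 0.0 - 0.10 - 0.10
    print(round(update_dominance(0.0, 3, 0.5, 1.0), 2))
```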

3.4. Influence of Personality on Emotions and Performance Ability

- Because anger is associated with the highest positive values of dominance in Table 1, a psychotic personality promotes anger by causing low-level changes in dominance through a small softening constant ( instead of ) when the value is positive.

4. Proposed Trust Model

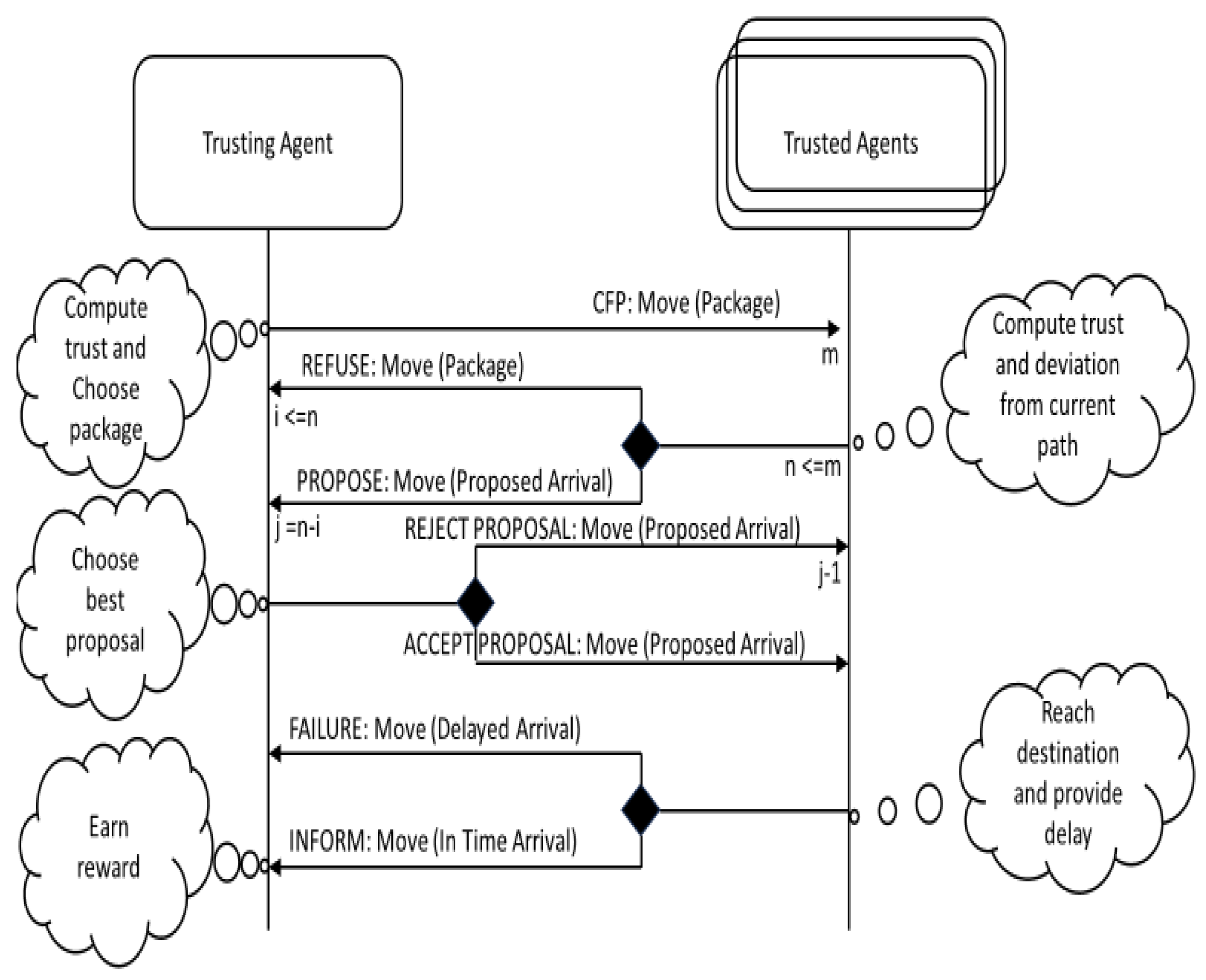

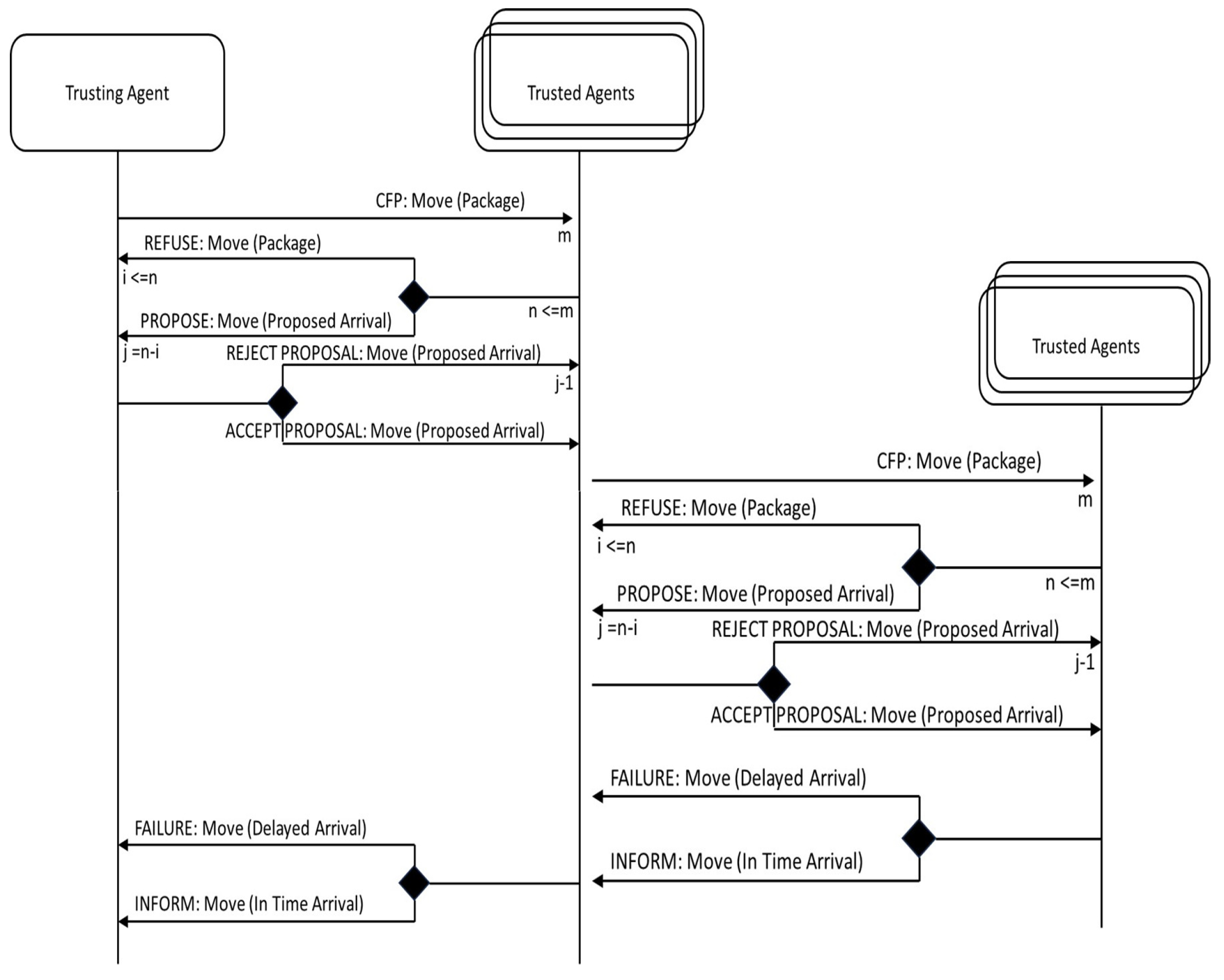

1. The decision to request cooperation, where our agent becomes the trusting agent, as it delegates a task to another (trusted) agent, taking some risks (in our logistic problem, trusting another agent to move a box towards its destination), with no associated certainty or guarantee of the future behavior of the other agent, although afterward, the trusting agent receives delayed feedback about the behavior of the trusted agent (in our logistic problem, the trusting agent learns whether the box reached the destination in a given time or not).

2. The decision to answer a cooperation request from another agent, where our agent becomes the trusting agent, as it carries out a delegated task for another (trusted) agent, taking some risks (in our case, trusting the other agent to reach the destination in a given time). Again, there is no associated certainty or guarantee of the future behavior of the other agent, although afterward, the trusting agent receives delayed feedback about the behavior of the trusted agent (in our logistic problem, the trusting agent will learn whether the moving task was performed in time or not).

- The mood (current feeling) of the trusting agent: positive emotions (joy and surprise) of the agent encourage trusting decisions, whereas negative (sadness and fear) and antisocial (anger) emotions discourage trusting decisions, with a bonus/malus of 0.1 of trust required;

- The privacy issues of the agent involved in the trusting decision (in our logistic problem, the level of privacy associated with the box to be delegated). If privacy issues are involved, then 0.1 less trust is assigned to the other agent;

- How much the other agent is trusted: Trust is computed based on the previous performance of the other agent in interactions with the subject agent (in our logistic problem, the level of previous success of the other agent performing moving tasks of the subject agent). Success (delivery of the box without delay) is associated with an increase of 0.1 in trust, whereas each cycle of delay translates to a 0.05 decrease in trust;

- How much the agent needs help (in our logistic problem, the number of boxes already carried): For each box already being carried, 0.05 less trust is assigned to the other agent.
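The four factors above can be combined into a single yes/no decision to request cooperation. The sketch below is one possible reading, not the authors' implementation: it assumes the mood shifts the required trust by 0.1 around the basic threshold of 0.5 given in Section 6, that the privacy and carried-box adjustments are subtracted from the trust held in the other agent exactly as worded in the list, and that the comparison is "greater than or equal". The function names are hypothetical.

```python
BASE_THRESHOLD = 0.5                      # basic trust threshold (Section 6)
POSITIVE_MOODS = {"joy", "surprise"}
NEGATIVE_MOODS = {"sadness", "fear", "anger"}


def required_trust(mood):
    """Trust required for a trusting decision, shifted by the current mood."""
    if mood in POSITIVE_MOODS:
        return BASE_THRESHOLD - 0.1       # bonus: less trust is required
    if mood in NEGATIVE_MOODS:
        return BASE_THRESHOLD + 0.1       # malus: more trust is required
    return BASE_THRESHOLD                 # neutral or unknown mood


def should_request_cooperation(trust_in_other, mood, box_is_private, boxes_carried):
    """Decide whether to propose delegating a box to the encountered agent."""
    effective_trust = trust_in_other
    if box_is_private:
        effective_trust -= 0.1            # privacy issue: 0.1 less trust
    effective_trust -= 0.05 * boxes_carried   # 0.05 less trust per carried box
    # Assumption: the decision is a simple ">=" comparison.
    return effective_trust >= required_trust(mood)


if __name__ == "__main__":
    print(should_request_cooperation(0.5, "joy", False, 0))
    print(should_request_cooperation(0.5, "anger", False, 0))
```

In this reading, a joyful agent with default trust of 0.5 in a stranger proposes the delegation, while an angry one does not.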

- The mood (current feeling) of the trusting agent: Positive emotions (joy and surprise) of the agent encourage trusting decisions, whereas negative (sadness and fear) and antisocial (anger) emotions discourage trusting decisions, with a bonus/malus of 0.1 of trust required;

- Privacy issues involved in the trusting decision for the agent (in our logistic problem, the level of privacy associated with the box to be moved): If privacy issues are involved for the subject agent, then 0.1 less trust is assigned to the other agent;

- How much the other agent is trusted, where trust is computed based on the previous performance of the other agent in interactions with the subject agent (in our logistic problem, the level of success of the other agent in previously moving boxes of the subject agent): success (delivery of the box without delay) is associated with an increase of 0.1 in trust, whereas each cycle of delay translates to a 0.05 decrease in trust;

- How much the other agent may help (in our logistic problem, the number of boxes already carried and assigned boxes with their time requests and relative paths to their destination): for each box already being carried, 0.05 more trust is required to accept the cooperation offer.
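The trust update and the acceptance rule described above can be sketched as follows. This is a hedged illustration: the clamping of trust to [0, 1], the "greater than or equal" comparison, and the names `update_trust` and `should_accept_request` are assumptions; the numeric steps (0.1, 0.05) and the 0.5 threshold come from the text.

```python
BASE_THRESHOLD = 0.5                      # basic trust threshold (Section 6)
POSITIVE_MOODS = {"joy", "surprise"}
NEGATIVE_MOODS = {"sadness", "fear", "anger"}


def update_trust(trust, delay_cycles):
    """Update trust after delayed feedback on a delegated box."""
    if delay_cycles == 0:
        delta = 0.1                       # delivered without delay
    else:
        delta = -0.05 * delay_cycles      # 0.05 less per cycle of delay
    # Assumption: trust is kept within [0, 1].
    return min(1.0, max(0.0, trust + delta))


def should_accept_request(trust_in_other, mood, box_is_private, boxes_carried):
    """Decide whether to accept a cooperation request from another agent."""
    required = BASE_THRESHOLD
    if mood in POSITIVE_MOODS:
        required -= 0.1
    elif mood in NEGATIVE_MOODS:
        required += 0.1
    required += 0.05 * boxes_carried      # 0.05 more trust per carried box
    effective_trust = trust_in_other - (0.1 if box_is_private else 0.0)
    return effective_trust >= required


if __name__ == "__main__":
    trust = update_trust(0.5, delay_cycles=0)     # one on-time delivery
    print(round(trust, 2), should_accept_request(trust, "fear", False, 0))
```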

5. FIPA Protocols and BDI Reasoning

- A random fixed personality chosen among three possible personalities: neurotic, psychotic or extroverted;

- An initial mood (current feeling) derived from the neutral (0) PAD values of pleasure, arousal and dominance;

- An initial location (randomly chosen anywhere in the existing grid);

- An initial desire to be .

- Transformation of the closest package among all pending packages into a current package belief;

- Transformation of all other pending packages into beliefs;

- Dropping of the desire and adoption of a desire.
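The first two steps above, picking the closest pending package as the current one and keeping the rest as beliefs, can be sketched as below. The sketch assumes packages are identified by grid positions and that "closest" means Manhattan distance on the grid; neither is stated in the text, and the names are hypothetical.

```python
def manhattan(a, b):
    """Manhattan distance between two (x, y) grid cells (assumed metric)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def split_pending_packages(agent_pos, pending):
    """Return (current_package, other_packages) for the given agent position.

    The closest pending package becomes the current package; all others
    remain as plain package beliefs. Returns (None, []) if nothing is pending.
    """
    if not pending:
        return None, []
    closest_index = min(range(len(pending)),
                        key=lambda i: manhattan(agent_pos, pending[i]))
    current = pending[closest_index]
    others = [p for i, p in enumerate(pending) if i != closest_index]
    return current, others


if __name__ == "__main__":
    print(split_pending_packages((0, 0), [(5, 5), (1, 2), (9, 0)]))
```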

- The farthest package is chosen as a candidate to be delegated;

- The level of trust in the agent encountered in the same cell is determined;

- Trust modifiers are computed based on emotions and personality;

- A decision is made to delegate the candidate package in cases of sufficient trust in the encountered agent.
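The four delegation steps above can be read as a small pipeline. The following sketch is illustrative: it assumes Manhattan distance for "farthest", a dictionary of trust values keyed by agent identifier, and a single additive `modifier` standing in for the emotion- and personality-based trust modifiers, whose exact form is not given here. The default trust of 0.5 for unknown agents and the threshold of 0.5 follow Section 6.

```python
INITIAL_TRUST = 0.5      # initial trust in an unknown agent (Section 6)
BASE_THRESHOLD = 0.5     # basic trust threshold to cooperate (Section 6)


def manhattan(a, b):
    """Manhattan distance between two (x, y) grid cells (assumed metric)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def decide_delegation(agent_pos, packages, trust_table, other_id, modifier=0.0):
    """Return the package to delegate to `other_id`, or None.

    `modifier` is a hypothetical placeholder for the combined effect of
    emotions and personality on the trust placed in the other agent.
    """
    if not packages:
        return None
    # Step 1: the farthest package is the candidate to be delegated.
    candidate = max(packages, key=lambda p: manhattan(agent_pos, p))
    # Step 2: trust in the agent encountered in the same cell.
    trust = trust_table.get(other_id, INITIAL_TRUST)
    # Steps 3 and 4: apply the modifiers and compare against the threshold.
    return candidate if trust + modifier >= BASE_THRESHOLD else None


if __name__ == "__main__":
    print(decide_delegation((0, 0), [(1, 1), (4, 4)], {"b": 0.7}, "b"))
```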

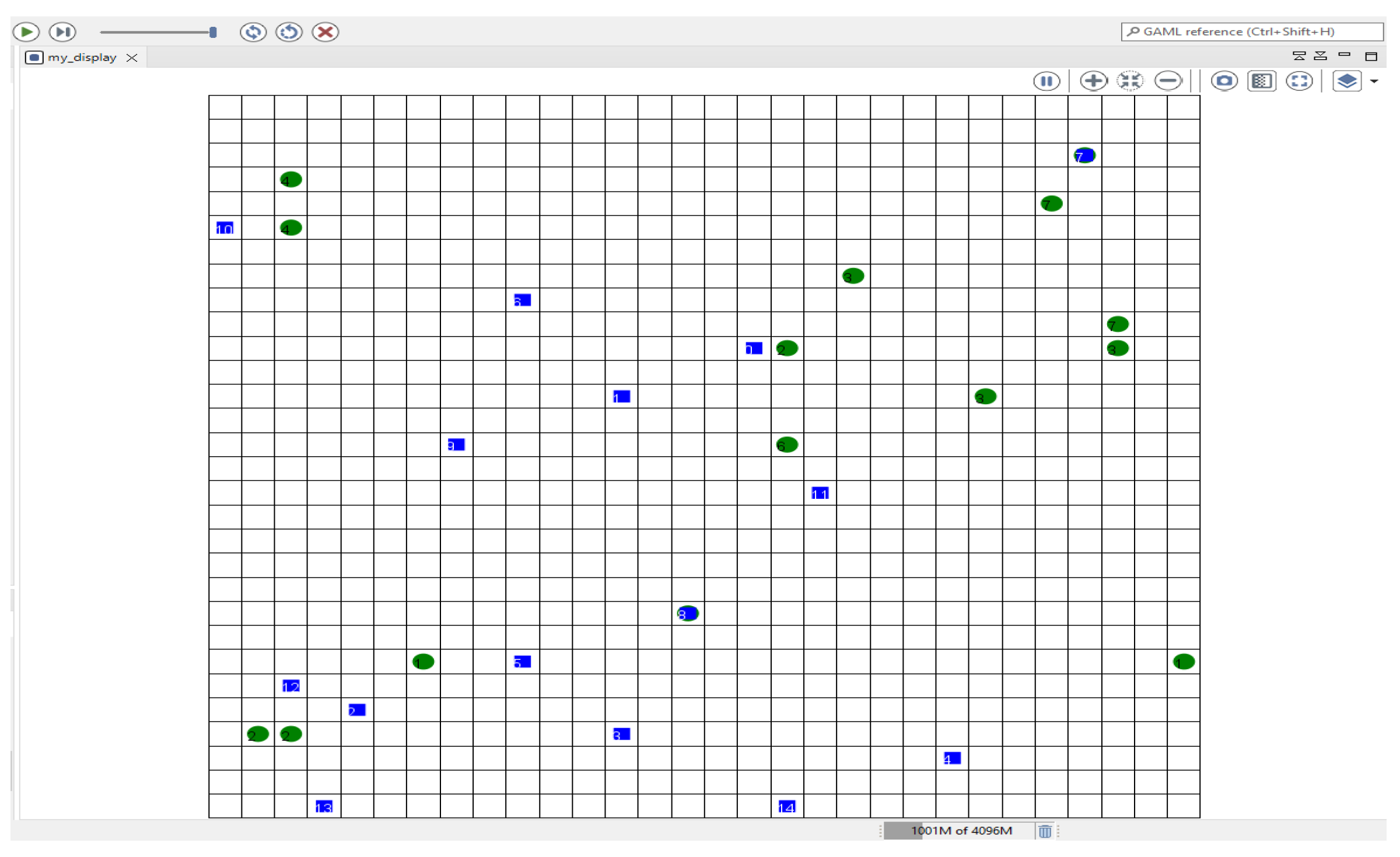

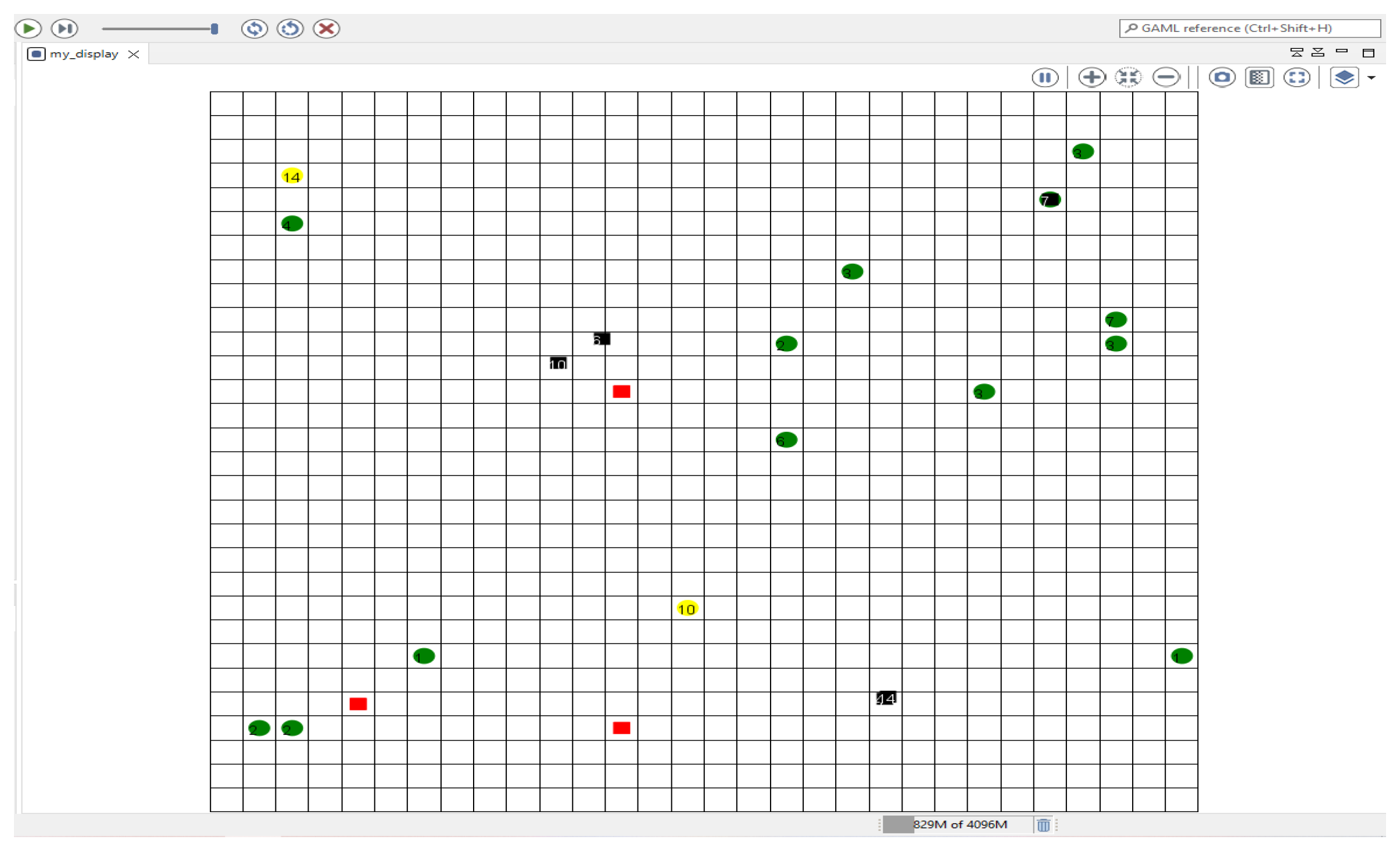

6. Problem Definition in the GAMA Platform

- Whether to include trust in the simulation or not (variable: true or false);

- Whether to include emotions in the simulation or not (variable: true or false);

- Number of packages (fixed at 15);

- Number of carrier agents (fixed at 15);

- Percentage of initially idle carrier agents (variable: 0%, 20%, 40%, 60% or 80%);

- Size of the square grid, in number of cells (fixed at 30);

- Probability of a cell/box being private (variable: 0.0, 0.2, 0.4, 0.6 or 0.8);

- Probability of being neurotic (fixed at 33%);

- Probability of being psychotic (fixed at 33%);

- Penalty associated with privacy disclosure (fixed at 2.0);

- Reward for reaching the target in time (fixed at 1.0);

- Penalty for a delay in reaching the target (fixed at 2.0);

- Basic trust threshold to cooperate (fixed at 0.5);

- Initial trust in an unknown agent (fixed at 0.5).
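For reference, the parameters above can be gathered into a single configuration object. This is a Python sketch for illustration, not the GAML definition used in the GAMA platform; the field names are hypothetical, and the defaults chosen for the variable parameters (trust and emotions enabled, 20% idle agents, privacy probability 0.2) are arbitrary picks from the listed ranges.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SimulationConfig:
    """Simulation parameters listed above (field names are illustrative)."""
    use_trust: bool = True               # variable: true or false
    use_emotions: bool = True            # variable: true or false
    num_packages: int = 15
    num_carriers: int = 15
    idle_fraction: float = 0.2           # variable: 0.0, 0.2, 0.4, 0.6 or 0.8
    grid_size: int = 30
    privacy_probability: float = 0.2     # variable: 0.0, 0.2, 0.4, 0.6 or 0.8
    p_neurotic: float = 0.33
    p_psychotic: float = 0.33
    privacy_penalty: float = 2.0
    on_time_reward: float = 1.0
    delay_penalty: float = 2.0
    trust_threshold: float = 0.5
    initial_trust: float = 0.5


IDLE_FRACTIONS = (0.0, 0.2, 0.4, 0.6, 0.8)


def idle_sweep(base):
    """Yield one configuration per idle-agent fraction, others unchanged."""
    for fraction in IDLE_FRACTIONS:
        yield replace(base, idle_fraction=fraction)


if __name__ == "__main__":
    for cfg in idle_sweep(SimulationConfig()):
        print(cfg.idle_fraction, cfg.privacy_probability)
```

A frozen dataclass keeps each run's parameters immutable, so a sweep over one variable cannot accidentally alter the fixed values.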

7. Experimental Results

- A trust model without emotions (denoted as ): the current feeling does not modify how much trust is required to propose and to accept a proposal for delegation of a task (happy and surprised emotions decrease the trust requirement, whereas sadness, anger and fearful emotions increase the trust requirement);

- No trust model at all (denoted as ): No agent trusts any other agent, and no cooperation takes place (no objects are delegated to other agents to be carried towards their destinations). All agents carry the initially assigned objects to their destinations by themselves, without any way to decrease the corresponding delays. This is the worst case, serving as the benchmark to show the improvement achieved by the other alternatives.

- The rewards for any alternative can be expected to increase as the percentage of idle agents increases; however, rewards appear to reach a saturation point between 20% and 40%, beyond which no significant increase is perceived;

- Independent of the percentage of idle agents, the no-trust alternative obtains considerably fewer rewards than the other two alternatives (no emotions and emotional trust);

- When the percentage of idle agents is very low (20%), only a few boxes can be delegated, causing results that significantly differ from those obtained with greater percentages. This is especially true for the no-trust alternative;

- Except when the percentage of idle agents is very low (20%), the use of emotions slightly increases the rewards obtained by agents (the emotional trust alternative slightly outperforms the no-emotions alternative). Therefore, in these cases, using emotions in the trust model causes some improvement, although the difference is minimal;

- When the percentage of idle agents is very low (20%), the use of emotions clearly leads to fewer rewards, with an apparent decrease in the quality of decisions made by the trust model (the no-emotions alternative clearly outperforms the emotional trust alternative).

- Currently carrying a private box increases the chances of proposing a delegation to another agent whenever a meeting takes place, as the box may not be private for the other agent (corresponding to a smaller burden for the other agent to carry it to its destination);

- Both private boxes and cells cause a punishment reward whenever a meeting takes place;

- Both private boxes and cells cause a decrease in the pleasure level of the agent (PAD variable) whenever a meeting takes place;

- Both private boxes and cells cause an increase in the arousal level of the agent (PAD variable) whenever a meeting takes place.

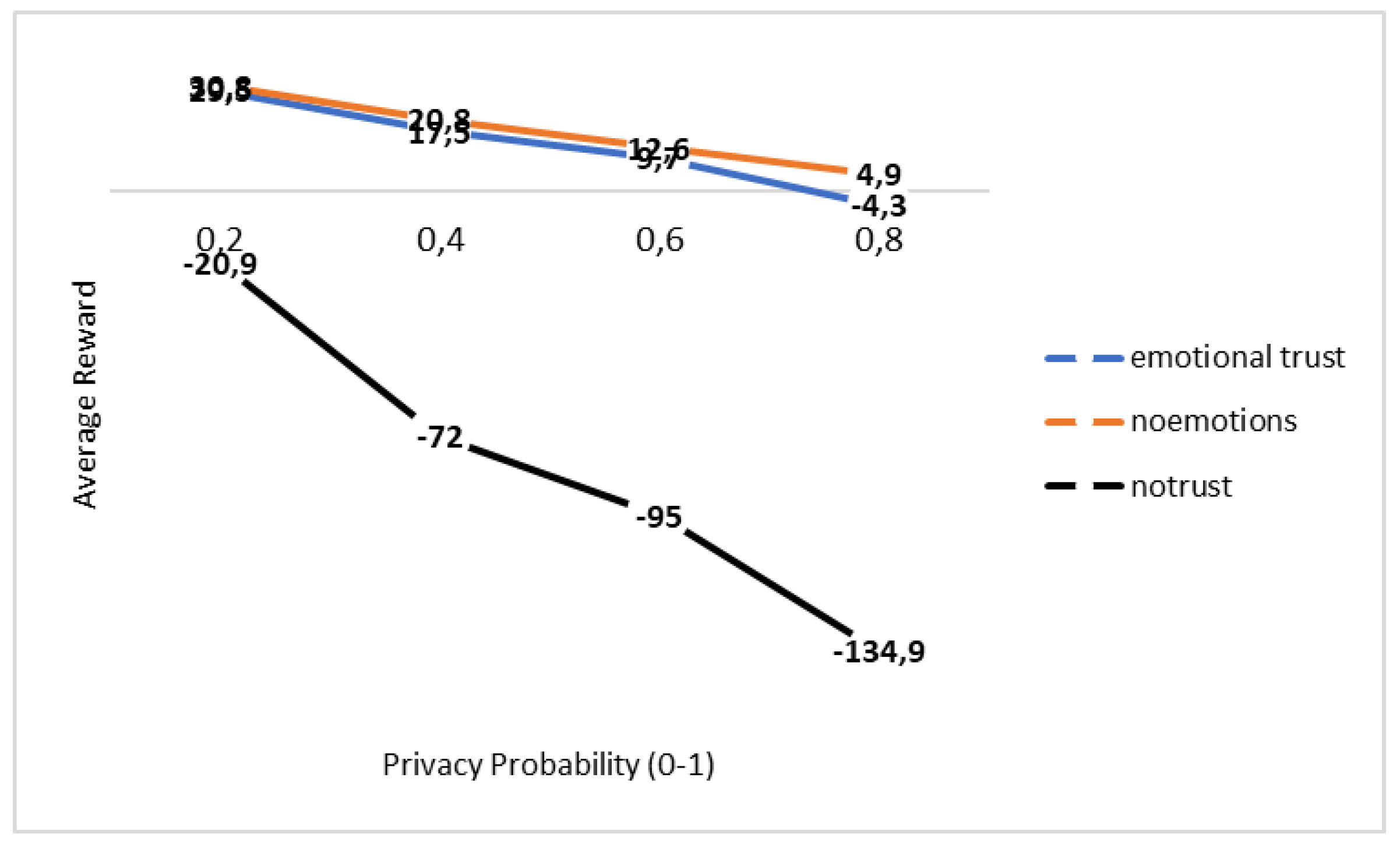

- As the privacy probability increases, the rewards associated with any alternative decrease;

- Independent of the value of privacy probability, the no-trust alternative results in considerably fewer rewards than the other two alternatives (no emotions and emotional trust);

- Except when the privacy probability is very high (0.8), the use of emotions slightly decreases the rewards obtained by agents (the emotional trust line is slightly below the no-emotions line). It therefore appears that, in these cases, using emotions in the trust model causes some decline, but the difference is very small;

- When the privacy probability is very high (0.8), the use of emotions appears to obtain worse rewards, and it seems to decrease the quality of the decisions taken by the trust model (the no-emotions alternative slightly outperforms the emotional trust alternative).

8. Conclusions

- We proposed a particular way to include privacy in an emotional model that is compliant with psychological theories and previous practical approaches to these theories, as explained in Section 1;

- We proposed a particular way to include privacy in the cooperation decisions of a trust model;

- We suggested a set of particular values for all variables that form our privacy-sensitive emotional model;

- We implemented our model in the reasoning of symbolic agents in GAML (the programming language of the GAMA agent platform) according to the belief, desire and intention (BDI) deliberative paradigm, with agents communicating through the IEEE FIPA standard;

- We also defined a cooperative logistic problem to test our model.

- Finally, we executed agent simulations that generated two different comparisons. These comparisons allowed us to observe how emotions and trust contribute, in the defined cooperative logistic problem, to both of our goals: time savings and privacy protection.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Emotion | Pleasure | Arousal | Dominance |
|---|---|---|---|
| joy | 0.75 | 0.48 | 0.35 |
| sad | −0.63 | 0.27 | −0.33 |
| surprise | 0.4 | 0.67 | −0.13 |
| fearful | −0.64 | 0.6 | −0.43 |
| angry | −0.51 | 0.59 | 0.25 |
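The table above fixes one PAD point per emotion, so a PAD state can be labeled by looking for the closest of those points. The sketch below is only an illustration of how the table might be used: nearest-neighbor labeling with Euclidean distance is an assumption rather than a rule stated in this article, and the names `PAD_BY_EMOTION` and `nearest_emotion` are hypothetical.

```python
import math

# (pleasure, arousal, dominance) per emotion, copied from the table above.
PAD_BY_EMOTION = {
    "joy": (0.75, 0.48, 0.35),
    "sad": (-0.63, 0.27, -0.33),
    "surprise": (0.4, 0.67, -0.13),
    "fearful": (-0.64, 0.6, -0.43),
    "angry": (-0.51, 0.59, 0.25),
}


def nearest_emotion(pad):
    """Return the tabulated emotion whose PAD point is closest to `pad`.

    Assumption: closeness is measured with Euclidean distance in PAD space.
    """
    return min(PAD_BY_EMOTION,
               key=lambda name: math.dist(pad, PAD_BY_EMOTION[name]))


if __name__ == "__main__":
    print(nearest_emotion((0.7, 0.5, 0.3)))
    print(nearest_emotion((-0.6, 0.3, -0.3)))
```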


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Carbo, J.; Molina, J.M. Trust Model of Privacy-Concerned, Emotionally Aware Agents in a Cooperative Logistics Problem. Appl. Sci. 2023, 13, 8681. https://doi.org/10.3390/app13158681
