Depth Map Super-Resolution Reconstruction Based on Multi-Channel Progressive Attention Fusion Network

Abstract

1. Introduction

- (1)

- A novel multi-channel progressive attention fusion network is proposed, incorporating local residual connections and global residual connections to predict the high-frequency residual information of the image, which is more conducive to the recovery of depth map details.

- (2)

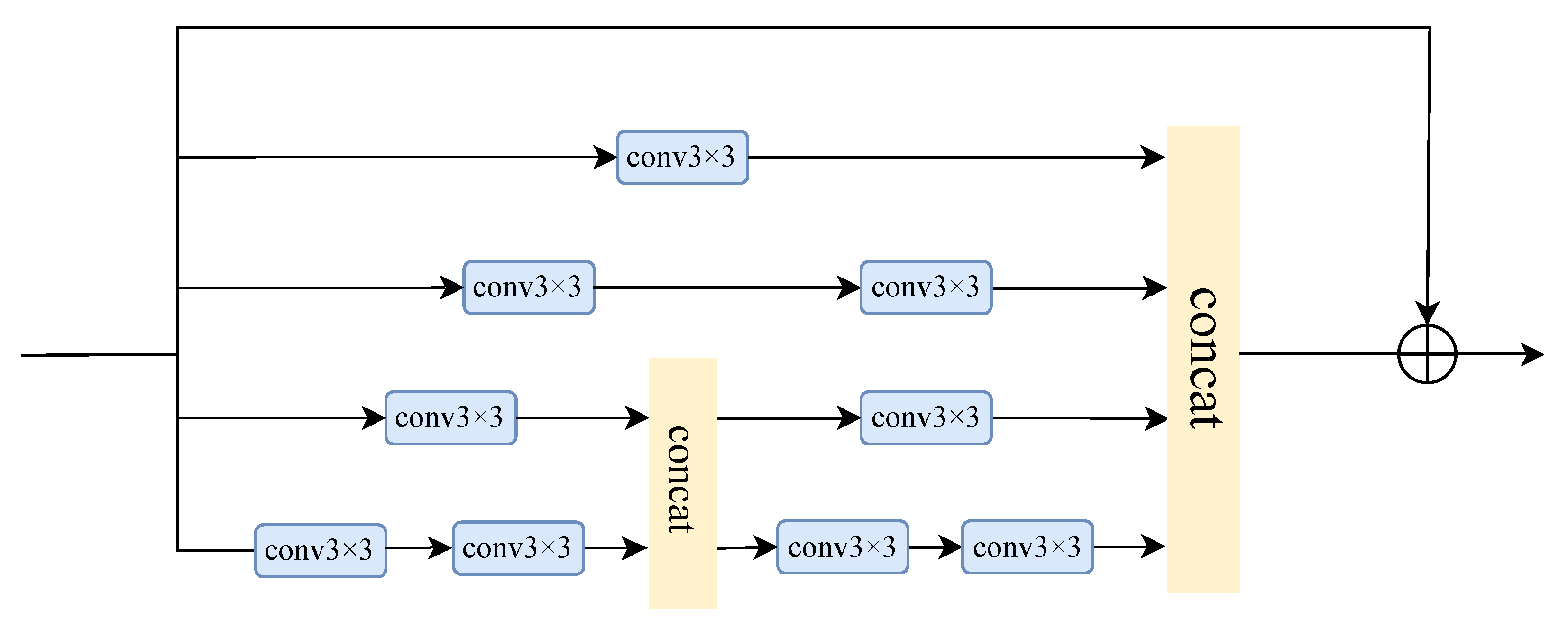

- The multi-scale feature optimization residual module is utilized to enhance the network’s multi-scale feature representation capability through multiple intertwined paths.

- (3)

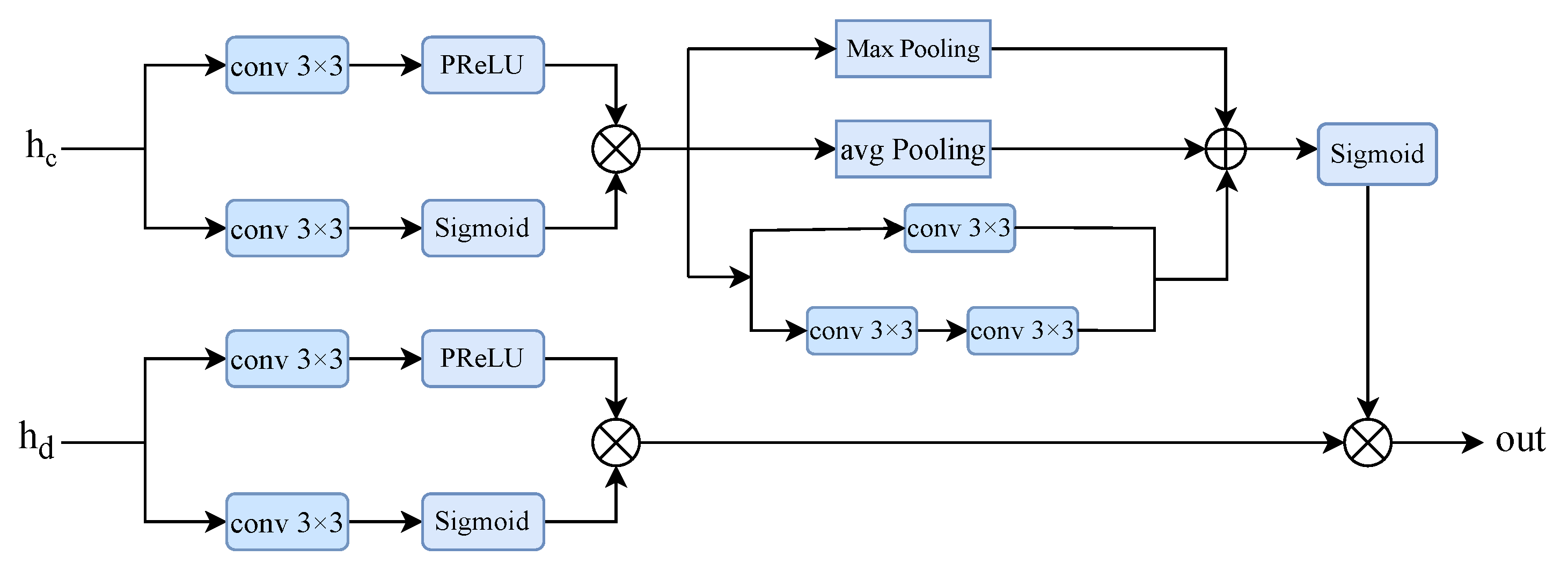

- We propose an attention-based multi-branch feature fusion module that can adaptively fuse features from both the depth and color images.
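To make two of the ideas in the contributions concrete, the following is a minimal toy sketch, not the authors' network. It assumes that the attention weights for the depth and color branches come from a softmax over each branch's global average (a hand-written stand-in for learned attention layers), and that the global residual connection simply adds the predicted high-frequency residual to an upsampled low-resolution depth map. All function names (`global_average`, `attention_fuse`, `add_global_residual`) are hypothetical.

```python
import math


def global_average(feature_map):
    """Mean activation of a 2-D feature map stored as a list of rows."""
    values = [v for row in feature_map for v in row]
    return sum(values) / len(values)


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(depth_feat, color_feat):
    """Blend two same-sized maps with branch weights that sum to 1.

    Assumption: the weights are a softmax over each branch's global
    average. A real network would learn this scoring with trainable layers.
    """
    w_depth, w_color = softmax([global_average(depth_feat),
                                global_average(color_feat)])
    fused = [[w_depth * d + w_color * c for d, c in zip(d_row, c_row)]
             for d_row, c_row in zip(depth_feat, color_feat)]
    return fused, (w_depth, w_color)


def add_global_residual(upsampled_depth, residual):
    """Global residual connection: coarse upsampled depth plus predicted detail."""
    return [[u + r for u, r in zip(u_row, r_row)]
            for u_row, r_row in zip(upsampled_depth, residual)]


# Toy inputs: both branches have the same mean, so they receive equal weight.
depth_feat = [[0.2, 0.4], [0.6, 0.8]]
color_feat = [[0.8, 0.6], [0.4, 0.2]]
fused, weights = attention_fuse(depth_feat, color_feat)

# The network only has to predict the residual, not the full depth map.
upsampled = [[1.0, 1.0], [2.0, 2.0]]
residual = [[0.1, -0.1], [0.0, 0.2]]
restored = add_global_residual(upsampled, residual)
```

Because the output is the upsampled input plus a residual, the trainable part only needs to model the missing high-frequency detail, which is the motivation stated in contribution (1).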

2. Related Works

2.1. Single DSR Technology

2.2. Color Image-Guided DSR Technology

3. Proposed Method

3.1. Overview

3.2. Attention-Based Multi-Branch Feature Fusion Module

3.3. Multi-Scale Feature Optimization Module

3.4. Loss Function

4. Experimental Results

4.1. Experimental Setting

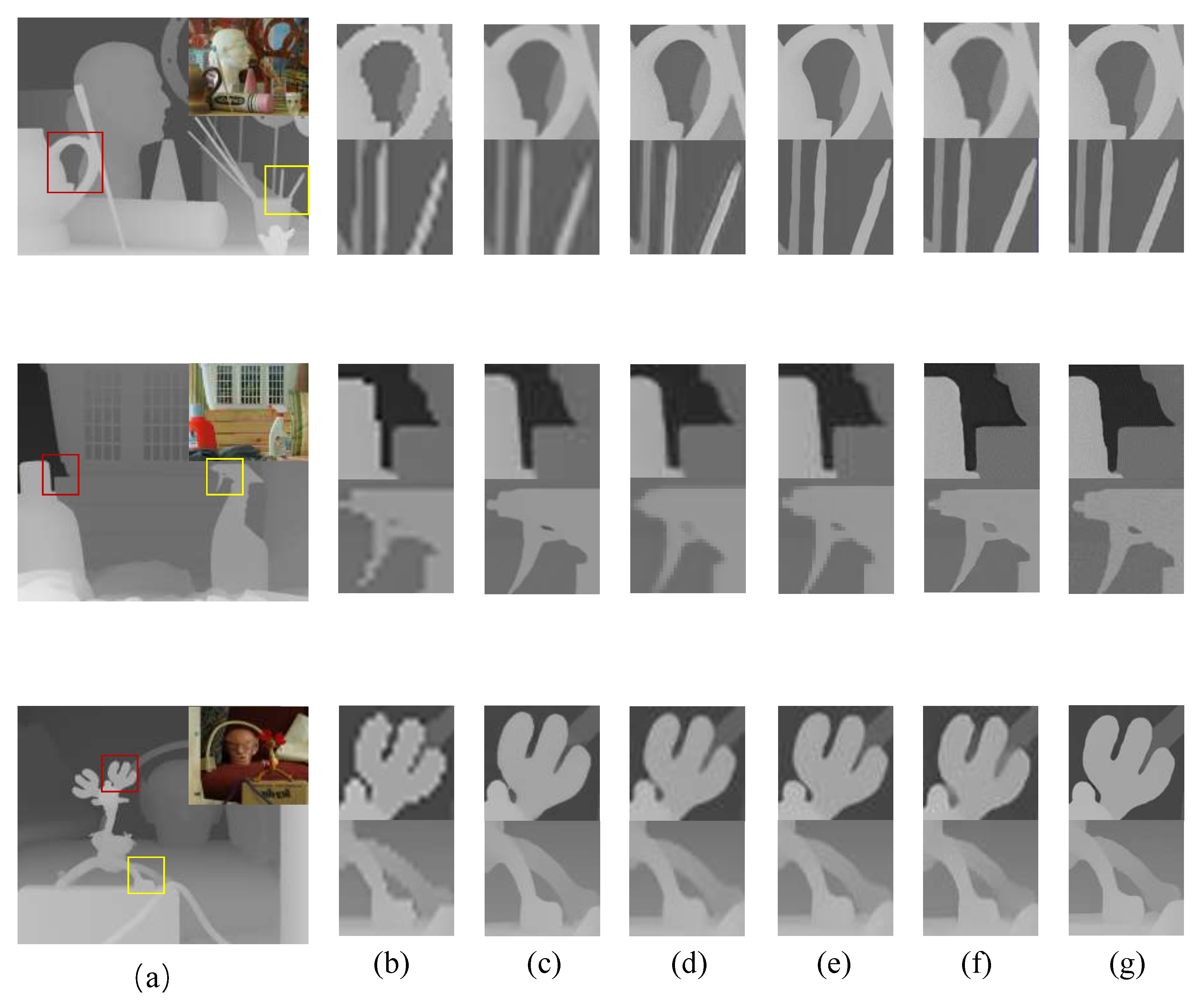

4.2. Evaluation

4.2.1. Evaluation under Noise-Free Conditions

4.2.2. Evaluation under Noisy Conditions

4.3. Ablation Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Izadi, S.; Kim, D.; Hilliges, O.; Molyneaux, D.; Newcombe, R.; Kohli, P.; Shotton, J.; Hodges, S.; Freeman, D.; Davison, A.; et al. KinectFusion: Real-time 3D reconstruction and interaction using a moving depth camera. In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology, Santa Barbara, CA, USA, 16–19 October 2011; pp. 559–568.

2. Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3146–3154.

3. Sinha, G.; Shahi, R.; Shankar, M. Human computer interaction. In Proceedings of the IEEE/CVF Conference on 3rd International Conference on Emerging Trends in Engineering and Technology, Goa, India, 19–21 November 2010; pp. 1–4.

4. Keys, R. Cubic convolution interpolation for digital image processing. IEEE Trans. Acoust. Speech Signal Process. 1981, 29, 1153–1160.

5. Kopf, J.; Cohen, M.F.; Lischinski, D.; Uyttendaele, M. Joint bilateral upsampling. ACM Trans. Graph. (ToG) 2007, 26, 96-es.

6. Yang, S.; Cao, N.; Guo, B.; Li, G. Depth map super-resolution based on edge-guided joint trilateral upsampling. Vis. Comput. 2022, 38, 883–895.

7. Mac Aodha, O.; Campbell, N.D.; Nair, A.; Brostow, G.J. Patch based synthesis for single depth image super-resolution. In Proceedings of the Computer Vision—ECCV 2012: 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Proceedings, Part III 12. Springer: Berlin/Heidelberg, Germany, 2012; pp. 71–84.

8. Li, Y.; Min, D.; Do, M.N.; Lu, J. Fast guided global interpolation for depth and motion. In Proceedings of the Computer Vision—ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part III 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 717–733.

9. Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 295–307.

10. Li, J.; Fang, F.; Mei, K.; Zhang, G. Multi-scale residual network for image super-resolution. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 517–532.

11. Qin, J.; Huang, Y.; Wen, W. Multi-scale feature fusion residual network for single image super-resolution. Neurocomputing 2020, 379, 334–342.

12. Mei, Y.; Fan, Y.; Zhou, Y.; Huang, L.; Huang, T.S.; Shi, H. Image super-resolution with cross-scale non-local attention and exhaustive self-exemplars mining. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 5690–5699.

13. Song, X.; Dai, Y.; Qin, X. Deeply supervised depth map super-resolution as novel view synthesis. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 2323–2336.

14. Huang, L.; Zhang, J.; Zuo, Y.; Wu, Q. Pyramid-structured depth map super-resolution based on deep dense-residual network. IEEE Signal Process. Lett. 2019, 26, 1723–1727.

15. Xian, C.; Qian, K.; Zhang, Z.; Wang, C.C. Multi-scale progressive fusion learning for depth map super-resolution. arXiv 2020, arXiv:2011.11865.

16. Xie, J.; Feris, R.S.; Sun, M.T. Edge-guided single depth image super resolution. IEEE Trans. Image Process. 2015, 25, 428–438.

17. Zhao, L.; Bai, H.; Liang, J.; Wang, A.; Zhao, Y. Single depth image super-resolution with multiple residual dictionary learning and refinement. In Proceedings of the 2017 IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 739–744.

18. Zhou, D.; Wang, R.; Lu, J.; Zhang, Q. Depth image super resolution based on edge-guided method. Appl. Sci. 2018, 8, 298.

19. Song, X.; Dai, Y.; Zhou, D.; Liu, L.; Li, W.; Li, H.; Yang, R. Channel attention based iterative residual learning for depth map super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 5631–5640.

20. Ye, X.; Sun, B.; Wang, Z.; Yang, J.; Xu, R.; Li, H.; Li, B. Depth super-resolution via deep controllable slicing network. In Proceedings of the 28th ACM International Conference on Multimedia, Virtual Event, 12–16 October 2020; pp. 1809–1818.

21. Diebel, J.; Thrun, S. An application of Markov random fields to range sensing. Adv. Neural Inf. Process. Syst. 2005, 18, 291–298.

22. Wang, Z.; Ye, X.; Sun, B.; Yang, J.; Xu, R.; Li, H. Depth upsampling based on deep edge-aware learning. Pattern Recognit. 2020, 103, 107274.

23. Hui, T.W.; Loy, C.C.; Tang, X. Depth map super-resolution by deep multi-scale guidance. In Proceedings of the Computer Vision—ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part III 14. Springer: Berlin/Heidelberg, Germany, 2016; pp. 353–369.

24. Zuo, Y.; Wu, Q.; Fang, Y.; An, P.; Huang, L.; Chen, Z. Multi-scale frequency reconstruction for guided depth map super-resolution via deep residual network. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 297–306.

25. Zhong, Z.; Liu, X.; Jiang, J.; Zhao, D.; Chen, Z.; Ji, X. High-resolution depth maps imaging via attention-based hierarchical multi-modal fusion. IEEE Trans. Image Process. 2021, 31, 648–663.

26. Chen, C.; Lin, Z.; She, H.; Huang, Y.; Liu, H.; Wang, Q.; Xie, S. Color image-guided very low-resolution depth image reconstruction. Signal Image Video Process. 2023, 17, 2111–2120.

27. Guo, J.; Xiong, R.; Ou, Y.; Wang, L.; Liu, C. Depth Image Super-resolution via Two-Branch Network. In Proceedings of the Cognitive Systems and Information Processing: 6th International Conference, ICCSIP 2021, Suzhou, China, 20–21 November 2021; Revised Selected Papers 6. Springer: Berlin/Heidelberg, Germany, 2022; pp. 200–212.

28. Sun, B.; Ye, X.; Li, B.; Li, H.; Wang, Z.; Xu, R. Learning scene structure guidance via cross-task knowledge transfer for single depth super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7792–7801.

29. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

30. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

31. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

32. Scharstein, D.; Szeliski, R. A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. Int. J. Comput. Vis. 2002, 47, 7–42.

33. Butler, D.J.; Wulff, J.; Stanley, G.B.; Black, M.J. A naturalistic open source movie for optical flow evaluation. In Proceedings of the Computer Vision—ECCV 2012: 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Proceedings, Part VI 12. Springer: Berlin/Heidelberg, Germany, 2012; pp. 611–625.

34. Kiechle, M.; Hawe, S.; Kleinsteuber, M. A joint intensity and depth co-sparse analysis model for depth map super-resolution. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 1545–1552.

35. Lai, W.S.; Huang, J.B.; Ahuja, N.; Yang, M.H. Deep Laplacian pyramid networks for fast and accurate super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 624–632.

36. Yang, J.; Ye, X.; Li, K.; Hou, C.; Wang, Y. Color-guided depth recovery from RGB-D data using an adaptive autoregressive model. IEEE Trans. Image Process. 2014, 23, 3443–3458.

37. Kim, J.; Lee, J.K.; Lee, K.M. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1646–1654.

38. Zuo, Y.; Fang, Y.; Yang, Y.; Shang, X.; Wang, B. Residual dense network for intensity-guided depth map enhancement. Inf. Sci. 2019, 495, 52–64.

39. Bansal, A.; Jonna, S.; Sahay, R.R. PAG-Net: Progressive attention guided depth super-resolution network. arXiv 2019, arXiv:1911.09878.

40. Ye, X.; Sun, B.; Wang, Z.; Yang, J.; Xu, R.; Li, H.; Li, B. PMBANet: Progressive multi-branch aggregation network for scene depth super-resolution. IEEE Trans. Image Process. 2020, 29, 7427–7442.

41. Liu, P.; Zhang, Z.; Meng, Z.; Gao, N.; Wang, C. PDR-Net: Progressive depth reconstruction network for color guided depth map super-resolution. Neurocomputing 2022, 479, 75–88.

42. Ferstl, D.; Reinbacher, C.; Ranftl, R.; Rüther, M.; Bischof, H. Image guided depth upsampling using anisotropic total generalized variation. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 993–1000.

| Method | Cones 2× | Teddy 2× | Tsukuba 2× | Venus 2× | Cones 4× | Teddy 4× | Tsukuba 4× | Venus 4× | Cones 8× | Teddy 8× | Tsukuba 8× | Venus 8× |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bicubic | 2.51 | 1.93 | 5.82 | 1.31 | 3.52 | 2.86 | 8.67 | 1.91 | 5.36 | 4.04 | 12.31 | 2.76 |
| JID [34] | 1.58 | 1.27 | 3.83 | 0.75 | 3.23 | 1.98 | 6.13 | 1.02 | 4.92 | 2.94 | 9.57 | 1.37 |
| SRCNN [9] | 1.76 | 1.35 | 3.82 | 0.84 | 2.98 | 1.94 | 5.91 | 1.11 | 4.94 | 2.92 | 9.15 | 1.45 |
| LapSRN [35] | 0.98 | 0.97 | 1.72 | 0.72 | 2.85 | 1.68 | 5.34 | 0.77 | 4.67 | 2.96 | 8.94 | 1.34 |
| MSG-Net [23] | 1.13 | 0.99 | 2.20 | 0.59 | 2.95 | 1.78 | 5.21 | 0.78 | 5.23 | 3.18 | 10.25 | 1.18 |
| MFR-SR [24] | 1.27 | 1.08 | 2.65 | 0.54 | 2.43 | 1.75 | 5.63 | 0.85 | 4.73 | 2.49 | 8.03 | 1.25 |
| RDN-GDE [38] | 0.88 | 0.85 | 1.41 | 0.56 | 2.38 | 1.58 | 3.73 | 0.73 | 4.66 | 2.88 | 7.79 | 1.09 |
| DSDMSR [13] | 1.04 | 0.83 | 1.80 | 0.53 | 2.19 | 1.54 | 4.15 | 0.78 | 4.70 | 3.06 | 7.43 | 1.28 |
| Ours | 0.69 | 0.74 | 1.13 | 0.39 | 1.72 | 1.26 | 2.94 | 0.53 | 4.28 | 2.12 | 7.11 | 0.76 |

| Method | Art 2× | Art 4× | Art 8× | Art 16× | Books 2× | Books 4× | Books 8× | Books 16× | Moebius 2× | Moebius 4× | Moebius 8× | Moebius 16× |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bicubic | 2.57 | 3.85 | 5.53 | 8.37 | 1.01 | 1.58 | 2.27 | 3.36 | 0.91 | 1.35 | 2.06 | 2.97 |
| JID [34] | 1.16 | 1.84 | 2.77 | 10.86 | 0.59 | 0.93 | 1.14 | 9.15 | 0.61 | 0.87 | 1.37 | 10.3 |
| SRCNN [9] | 0.98 | 2.09 | 4.75 | 7.81 | 0.39 | 0.94 | 2.15 | 3.24 | 0.45 | 0.97 | 2.01 | 2.82 |
| LapSRN [35] | 0.88 | 1.79 | 2.73 | 6.31 | 0.78 | 0.94 | 1.29 | 2.35 | 0.77 | 0.95 | 1.33 | 2.37 |
| AR [36] | 3.07 | 3.99 | 4.68 | 6.87 | 1.38 | 1.94 | 2.05 | 2.84 | 0.98 | 1.23 | 1.73 | 2.56 |
| VDSR [37] | 1.32 | 2.07 | 3.24 | 6.66 | 0.48 | 0.83 | 1.72 | 2.14 | 0.54 | 0.91 | 1.57 | 2.15 |
| MSG-Net [23] | 0.66 | 1.47 | 3.01 | 4.57 | 0.37 | 0.67 | 1.03 | 1.80 | 0.60 | 0.76 | 1.29 | 1.63 |
| MFR-SR [24] | 0.71 | 1.54 | 2.71 | 4.35 | 0.42 | 0.63 | 1.05 | 1.78 | 0.42 | 0.72 | 1.10 | 1.73 |
| DRN [15] | 0.66 | 1.59 | 2.57 | 4.83 | 0.54 | 0.83 | 1.19 | 1.70 | 0.52 | 0.86 | 1.21 | 1.87 |
| RDN-GDE [38] | 0.56 | 1.47 | 2.60 | 4.16 | 0.36 | 0.62 | 1.00 | 1.68 | 0.38 | 0.69 | 1.06 | 1.65 |
| PAG-Net [39] | 0.33 | 1.15 | 2.08 | 3.68 | 0.26 | 0.46 | 0.81 | 1.38 | - | - | - | - |
| PMBANet [40] | 0.61 | 1.42 | 1.92 | 2.44 | 0.41 | 0.95 | 1.10 | 1.58 | 0.39 | 0.91 | 1.23 | 1.58 |
| PDR-Net [41] | - | 1.63 | 1.92 | 2.37 | - | 1.10 | 1.35 | 1.66 | - | 1.03 | 1.17 | 1.54 |
| Ours | 0.27 | 1.07 | 1.87 | 2.53 | 0.21 | 0.39 | 0.76 | 1.24 | 0.22 | 0.52 | 0.87 | 1.57 |

| Method | Reindeer 2× | Reindeer 4× | Reindeer 8× | Reindeer 16× | Laundry 2× | Laundry 4× | Laundry 8× | Laundry 16× | Dolls 2× | Dolls 4× | Dolls 8× | Dolls 16× |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bicubic | 1.92 | 2.80 | 3.99 | 5.86 | 1.60 | 2.40 | 3.45 | 5.07 | 0.89 | 1.29 | 1.95 | 2.62 |
| JID [34] | 0.91 | 1.47 | 2.19 | 4.15 | 0.72 | 1.19 | 1.77 | 3.47 | 0.73 | 0.96 | 1.26 | 2.06 |
| SRCNN [9] | 0.81 | 1.87 | 3.87 | 5.64 | 0.67 | 1.74 | 3.45 | 5.04 | 0.61 | 0.95 | 1.52 | 2.54 |
| LapSRN [35] | 0.80 | 1.31 | 1.92 | 4.56 | 0.78 | 1.12 | 1.67 | 3.79 | 0.77 | 0.98 | 1.42 | 2.28 |
| AR [36] | 2.99 | 3.09 | 4.33 | 4.99 | 2.39 | 2.43 | 3.01 | 4.47 | 1.01 | 1.23 | 1.65 | 2.23 |
| VDSR [37] | 1.01 | 1.50 | 2.28 | 4.17 | 0.72 | 1.19 | 1.59 | 3.20 | 0.63 | 0.89 | 1.31 | 2.09 |
| MSG-Net [23] | 0.66 | 1.47 | 2.46 | 4.57 | 0.79 | 1.12 | 1.51 | 4.26 | 0.68 | 0.92 | 1.47 | 1.86 |
| MFR-SR [24] | 0.65 | 1.23 | 2.06 | 3.74 | 0.61 | 1.11 | 1.75 | 3.01 | 0.60 | 0.89 | 1.22 | 1.74 |
| DRN [15] | 0.59 | 1.11 | 1.80 | 3.11 | 0.52 | 0.92 | 1.52 | 2.97 | 0.58 | 0.91 | 1.31 | 1.87 |
| RDN-GDE [38] | 0.51 | 1.17 | 2.05 | 3.58 | 0.48 | 0.96 | 1.63 | 2.86 | 0.56 | 0.88 | 1.21 | 1.71 |
| PAG-Net [39] | 0.31 | 0.85 | 1.46 | 2.52 | 0.30 | 0.71 | 1.27 | 1.88 | - | - | - | - |
| PMBANet [40] | 0.41 | 1.17 | 1.74 | 2.17 | 0.38 | 0.99 | 1.54 | 1.78 | 0.36 | 0.96 | 1.24 | 1.42 |
| PDR-Net [41] | - | 1.44 | 1.67 | 1.99 | - | 1.29 | 1.52 | 1.90 | - | 1.03 | 1.22 | 1.45 |
| Ours | 0.27 | 0.79 | 1.38 | 2.17 | 0.25 | 0.73 | 1.21 | 1.74 | 0.37 | 0.84 | 1.15 | 1.53 |

| Method | Cones 2× | Teddy 2× | Tsukuba 2× | Venus 2× | Cones 4× | Teddy 4× | Tsukuba 4× | Venus 4× | Cones 8× | Teddy 8× | Tsukuba 8× | Venus 8× |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bicubic | 4.81 | 4.51 | 7.07 | 4.25 | 5.60 | 5.02 | 9.53 | 4.52 | 6.88 | 5.70 | 13.06 | 4.91 |
| TGV [42] | 3.36 | 2.67 | 7.20 | 1.58 | 4.31 | 3.20 | 10.10 | 1.91 | 7.74 | 4.93 | 16.08 | 2.65 |
| SRCNN [9] | 2.32 | 2.10 | 4.49 | 1.28 | 4.10 | 3.32 | 7.88 | 2.69 | 6.35 | 5.32 | 12.13 | 3.94 |
| DGN [23] | 2.29 | 2.14 | 4.34 | 1.55 | 3.57 | 3.20 | 7.90 | 2.77 | 5.45 | 4.62 | 11.09 | 3.95 |
| MFR-SR [24] | 2.09 | 1.98 | 3.59 | 1.25 | 3.31 | 2.72 | 6.63 | 1.57 | 5.01 | 3.79 | 9.95 | 1.99 |
| RDN-GDE [38] | 2.02 | 1.97 | 3.70 | 1.11 | 3.13 | 2.82 | 6.56 | 1.59 | 4.84 | 3.93 | 9.78 | 2.46 |
| Ours | 1.85 | 1.69 | 3.48 | 0.97 | 2.88 | 2.49 | 6.37 | 1.34 | 4.67 | 3.52 | 9.51 | 1.57 |

| MSFO | AMBFF | RMSE |
|---|---|---|
| No | No | 2.57 |
| No | Yes | 2.24 |
| Yes | No | 2.32 |
| Yes | Yes | 2.15 |
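The ablation table reports RMSE for each module combination. As a reading aid, here is a minimal sketch of how RMSE between a reconstructed and a ground-truth depth map is conventionally computed, together with the relative error reduction each configuration achieves over the baseline. The helper names (`rmse`, `relative_reduction`) and the dictionary labels are illustrative; the four RMSE values are copied from the table, and the evaluation details (dataset, scale factor) are not restated here.

```python
import math


def rmse(predicted, ground_truth):
    """Root-mean-square error between two equally sized 2-D maps (lists of rows)."""
    squared = [(p - g) ** 2
               for p_row, g_row in zip(predicted, ground_truth)
               for p, g in zip(p_row, g_row)]
    return math.sqrt(sum(squared) / len(squared))


def relative_reduction(baseline, value):
    """Fractional error reduction of `value` with respect to `baseline`."""
    return (baseline - value) / baseline


# RMSE values copied from the ablation table; the keys are illustrative labels.
ablation = {
    "baseline (no MSFO, no AMBFF)": 2.57,
    "AMBFF only": 2.24,
    "MSFO only": 2.32,
    "MSFO + AMBFF": 2.15,
}

baseline = ablation["baseline (no MSFO, no AMBFF)"]
for name, value in ablation.items():
    print(f"{name}: RMSE {value:.2f}, reduction {relative_reduction(baseline, value):.1%}")
```

With the table's values, this gives reductions of roughly 12.8% for AMBFF alone, 9.7% for MSFO alone, and 16.3% for both modules together, so the two modules appear to be complementary rather than redundant.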

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, J.; Huang, Q. Depth Map Super-Resolution Reconstruction Based on Multi-Channel Progressive Attention Fusion Network. Appl. Sci. 2023, 13, 8270. https://doi.org/10.3390/app13148270
