Addressing Business Process Deviations through the Evaluation of Alternative Pattern-Based Models

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

3.1. Element Characterization and Notations

3.1.1. State Token

- K is the set of KPIs.

- P is the set of products.

- R is the set of the available resources.

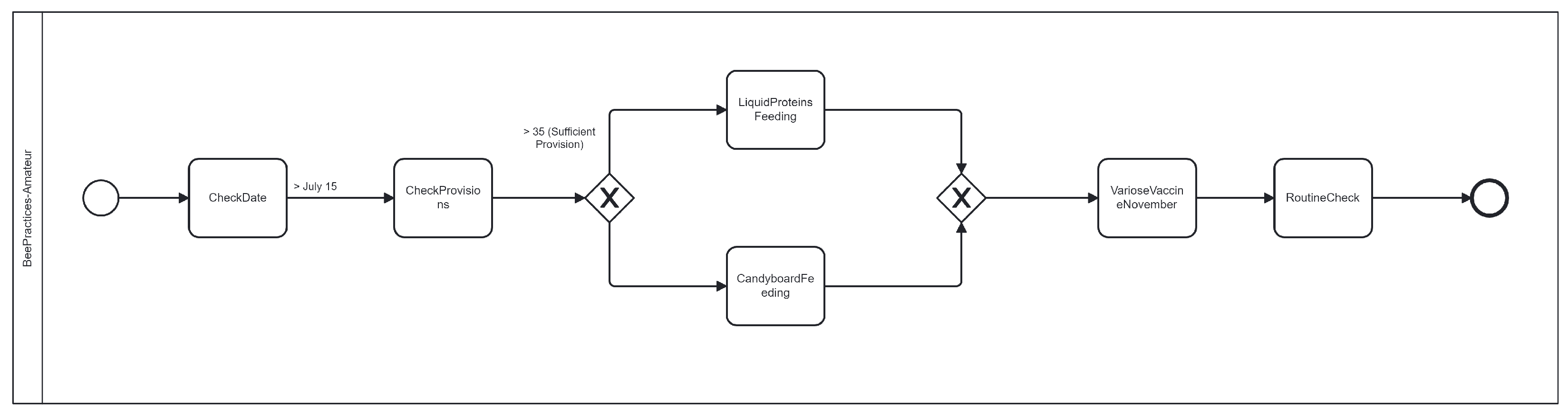

- V represents a set of system variables that are distinct from the variables included in the KPI, product, and resource sets. V is a limited set of system variables determined during the design phase of the model, and their selection depends on the particular business domain. For instance, in the apiculture domain, examples could include temperature, weight, and weather conditions, while other domains may feature a different set of variables during execution.
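As an illustration of the four components listed above, the state token can be sketched as a small record type. The class name, field names, and the apiculture values below are assumptions made for this example and are not taken from the paper's notation.

```python
from dataclasses import dataclass, field


@dataclass
class StateToken:
    """Snapshot of the process state (illustrative field names)."""
    kpis: dict[str, float] = field(default_factory=dict)       # K: KPI name -> value
    products: frozenset[str] = frozenset()                      # P: products present
    resources: frozenset[str] = frozenset()                     # R: available resources
    variables: dict[str, float] = field(default_factory=dict)  # V: domain variables


# Hypothetical token for a beehive process; every value is made up.
token = StateToken(
    kpis={"cost": 12.0, "duration": 3.5},
    products=frozenset({"honey_frame"}),
    resources=frozenset({"beekeeper", "smoker"}),
    variables={"temperature": 34.0, "weight": 41.2},
)
```

Keeping K and V as name-to-value mappings and P and R as sets mirrors the way the constraints discussed later test values in K and V and membership in R and P.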

3.1.2. Task/Activity

Task Characterization

- Constraints: A constraint defines a condition, expressed as a Boolean expression, that must be true at entry, during execution, and at exit of task A. Constraints mostly apply to elements K and V of the state token. For example, a constraint may combine a condition on a KPI belonging to K with a condition on a state variable belonging to V.

- Resources: Resources can be either local or global, and specifying them for each task or activity helps manage them more efficiently. Resource management is not the object of this study; however, it is crucial to ensuring normal execution of the workflow. As reported in [55], resources can be classified in various ways. This study focuses on the behaviour of a resource rather than its type, namely allocation, utilization, and consumption. The availability of a resource r of type T is determined by verifying whether r is present in the set of resources of type T available to the process. The availability of the resources needed by A is represented as a Boolean expression, which must be true to enter task A. This expression mostly applies to elements R and P of the state token. It expresses which resources are allocated by task A and are consequently no longer available to other tasks during the execution of task A.

- Inputs: The input stands for the necessary condition on the state token that is true at task entry. These conditions mainly apply to K and V.

- Outputs: The output stands for the necessary condition on the state token that is true at task exit. These conditions apply to K, P, V, and R. The task also defines the transformation applied to the state token prior to entry, which yields the state token after exit. This expresses the changes in KPIs, such as duration and cost, as well as the impact on resources and the product resulting from the task. The resources expression indicates which resources are kept by A during its execution, but it does not indicate which will be available again at the exit of task A. The consumption of resources by task A is therefore expressed as part of the outputs.
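The four elements of the task characterization can be sketched as predicates and a transformation over the state token. This is a minimal sketch under stated assumptions: the names `Token`, `Task`, `can_enter`, `run`, and the feeding example are hypothetical, and the check that constraints hold during execution is not modelled.

```python
from dataclasses import dataclass, replace
from typing import Callable


@dataclass(frozen=True)
class Token:
    kpis: dict            # K
    products: frozenset   # P
    resources: frozenset  # R
    variables: dict       # V


Predicate = Callable[[Token], bool]


@dataclass(frozen=True)
class Task:
    name: str
    constraint: Predicate                # checked here at entry and at exit only
    needs: frozenset                     # resources that must be available at entry
    input_ok: Predicate                  # necessary condition at entry
    transform: Callable[[Token], Token]  # effect of the task on the state token
    output_ok: Predicate                 # necessary condition at exit

    def can_enter(self, t: Token) -> bool:
        return self.constraint(t) and self.needs <= t.resources and self.input_ok(t)

    def run(self, t: Token) -> Token:
        if not self.can_enter(t):
            raise ValueError(f"{self.name}: entry conditions not met")
        out = self.transform(t)
        if not (self.constraint(out) and self.output_ok(out)):
            raise ValueError(f"{self.name}: exit conditions not met")
        return out


def feed(t: Token) -> Token:
    # Hypothetical effect: cost rises, the syrup is consumed, a product appears.
    return replace(
        t,
        kpis={**t.kpis, "cost": t.kpis["cost"] + 20.0},
        resources=t.resources - {"syrup"},
        products=t.products | {"fed_hive"},
    )


feed_task = Task(
    name="feed_hive",
    constraint=lambda t: t.kpis["cost"] <= 100.0,
    needs=frozenset({"beekeeper", "syrup"}),
    input_ok=lambda t: t.variables["temperature"] >= 10.0,
    transform=feed,
    output_ok=lambda t: "fed_hive" in t.products,
)

start = Token(
    kpis={"cost": 10.0},
    products=frozenset(),
    resources=frozenset({"beekeeper", "syrup"}),
    variables={"temperature": 18.0},
)
after = feed_task.run(start)
```

In this sketch the beekeeper is only allocated and is available again at exit, whereas the syrup is consumed, which is why consumption appears in the transformation rather than in the entry check.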

Output State Token Estimation

- Sequential execution: In a sequential configuration, as shown in Figure 2, the output state token of the first task is estimated and becomes the input state token of the following task. Then, as described above, the output state token of that task can be estimated (cf. Output State Token Estimation in Section 3.1.2). This generalizes directly to a sequence of n tasks.

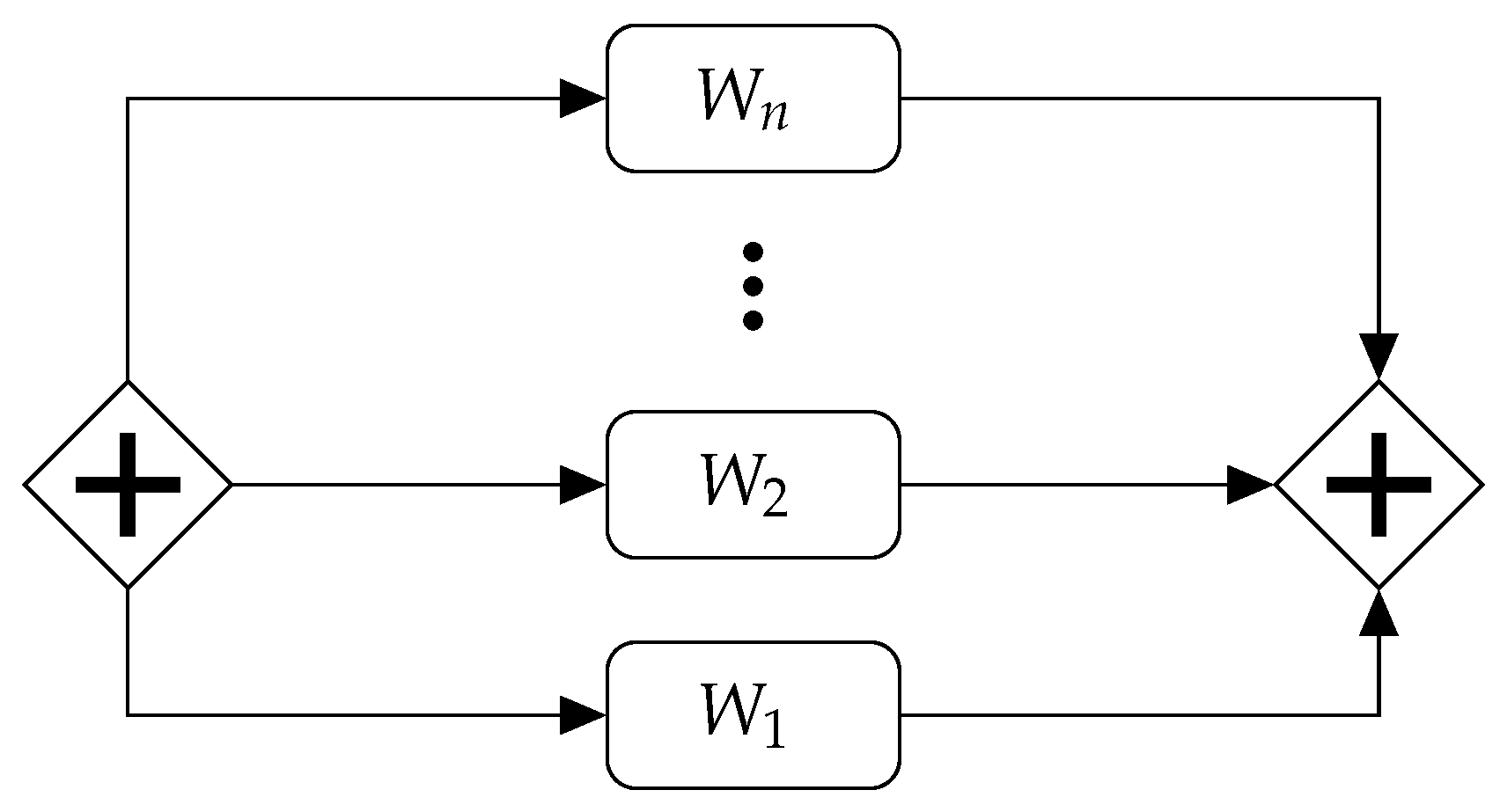

- Parallel execution: In the parallel execution configuration, as illustrated in Figure 3, all paths are considered. Each input state token is divided into several parts (one for each branch) such that recombining all parts reconstitutes the original input state token. Next, the estimated output state token for each branch is computed, and their recombination yields the corresponding estimated output state token for the parallel execution. The estimated state token for the entire parallel execution is then obtained by computing a simple superset of the union of all the estimated state tokens. Instead of individually evaluating the transformation of each input state token, an estimation is made for the set of state tokens associated with each part (the input of each branch). This does not change the rest of the process.

- Exclusive execution: In the case of exclusive execution, as shown in Figure 4, each branch is executed with the subset of the input state tokens that satisfy the entry condition of the branch. At the end, a simple superset of the union of all the estimated output tokens obtained from each branch is computed.
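The three configurations above can be sketched over a list of candidate state tokens. This is a simplified sketch, not the authors' estimator: a token is reduced to a flat dictionary, each parallel branch is assumed to own a disjoint set of keys so that recombination is a plain dictionary merge, and the exact union is returned where the text allows any superset of it. All function names are hypothetical.

```python
from typing import Callable

Token = dict[str, float]
Step = Callable[[Token], Token]
Condition = Callable[[Token], bool]


def sequential(steps: list[Step], tokens: list[Token]) -> list[Token]:
    # The estimated output of one task becomes the input of the next.
    for step in steps:
        tokens = [step(t) for t in tokens]
    return tokens


def exclusive(branches: list[tuple[Condition, Step]], tokens: list[Token]) -> list[Token]:
    # Each branch only sees the tokens that satisfy its entry condition.
    out: list[Token] = []
    for condition, step in branches:
        out.extend(step(t) for t in tokens if condition(t))
    return out


def parallel(branches: list[tuple[set[str], Step]], tokens: list[Token]) -> list[Token]:
    # Split each token into one part per branch, transform, then recombine.
    out: list[Token] = []
    for t in tokens:
        merged: Token = {}
        for keys, step in branches:
            part = {k: v for k, v in t.items() if k in keys}
            merged.update(step(part))
        out.append(merged)
    return out


def add_cost(amount: float) -> Step:
    return lambda t: {**t, "cost": t["cost"] + amount}


def use_stock(t: Token) -> Token:
    return {**t, "stock": t["stock"] - 1.0}


def reorder(t: Token) -> Token:
    return {**t, "cost": t["cost"] + 30.0, "stock": 10.0}


tokens = [{"cost": 10.0, "stock": 5.0}, {"cost": 50.0, "stock": 0.0}]

seq_out = sequential([add_cost(5.0), add_cost(2.0)], tokens)
xor_out = exclusive(
    [(lambda t: t["stock"] > 0, use_stock), (lambda t: t["stock"] <= 0, reorder)],
    tokens,
)
and_out = parallel([({"cost"}, add_cost(5.0)), ({"stock"}, use_stock)], tokens[:1])
```

Working on a list of tokens rather than a single token reflects the fact that an estimate at a node is a set of possible state tokens.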

3.1.3. Evaluation of BPMN Models

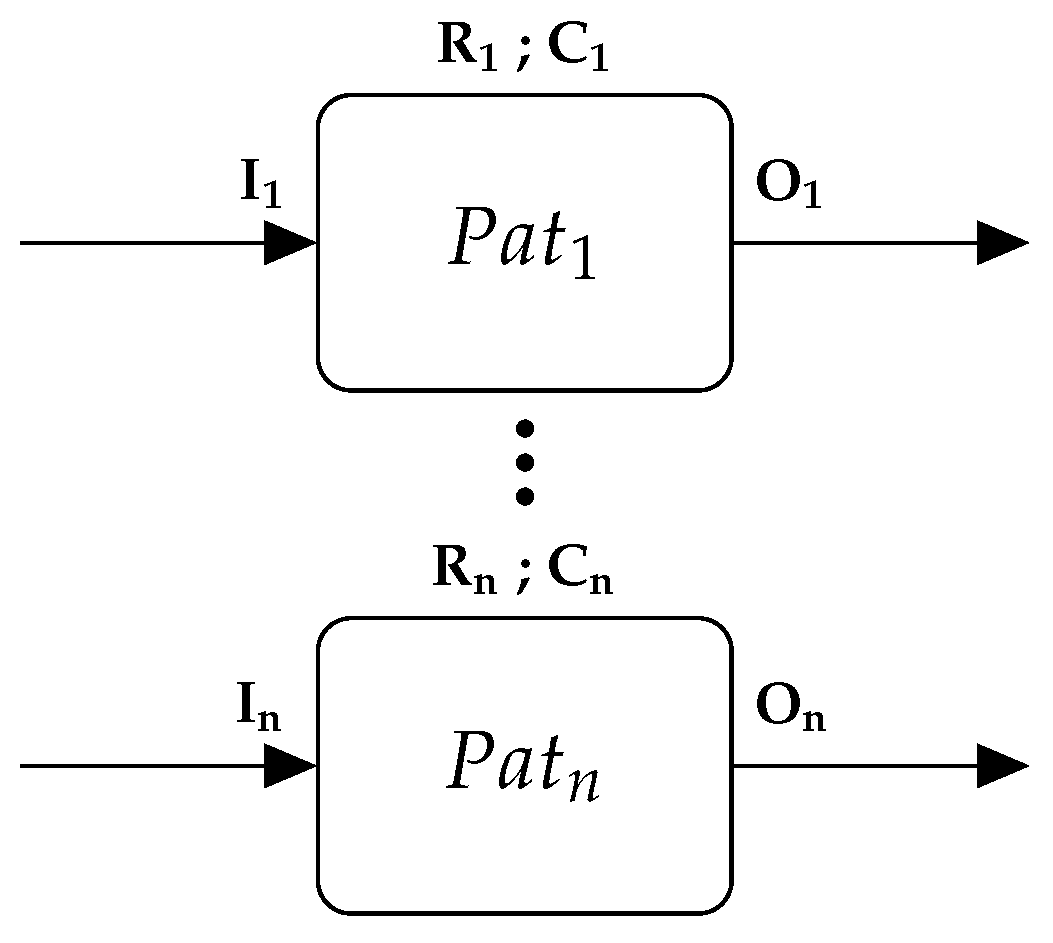

3.1.4. Library of Patterns

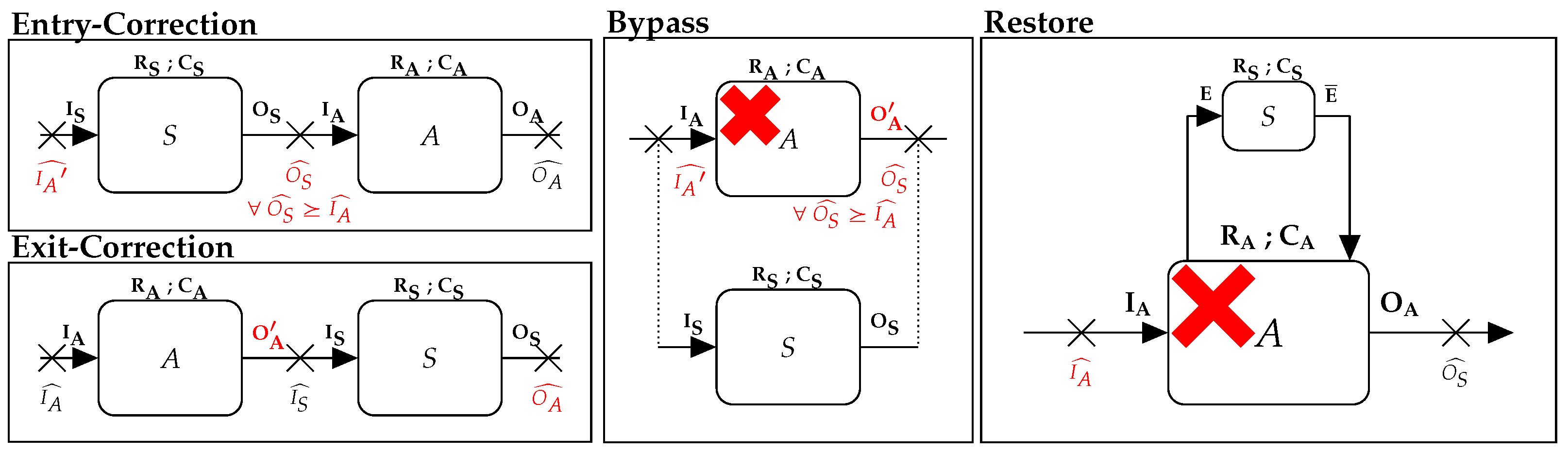

3.1.5. Repairing Typology

3.1.6. Repairing Mechanism

- 1.

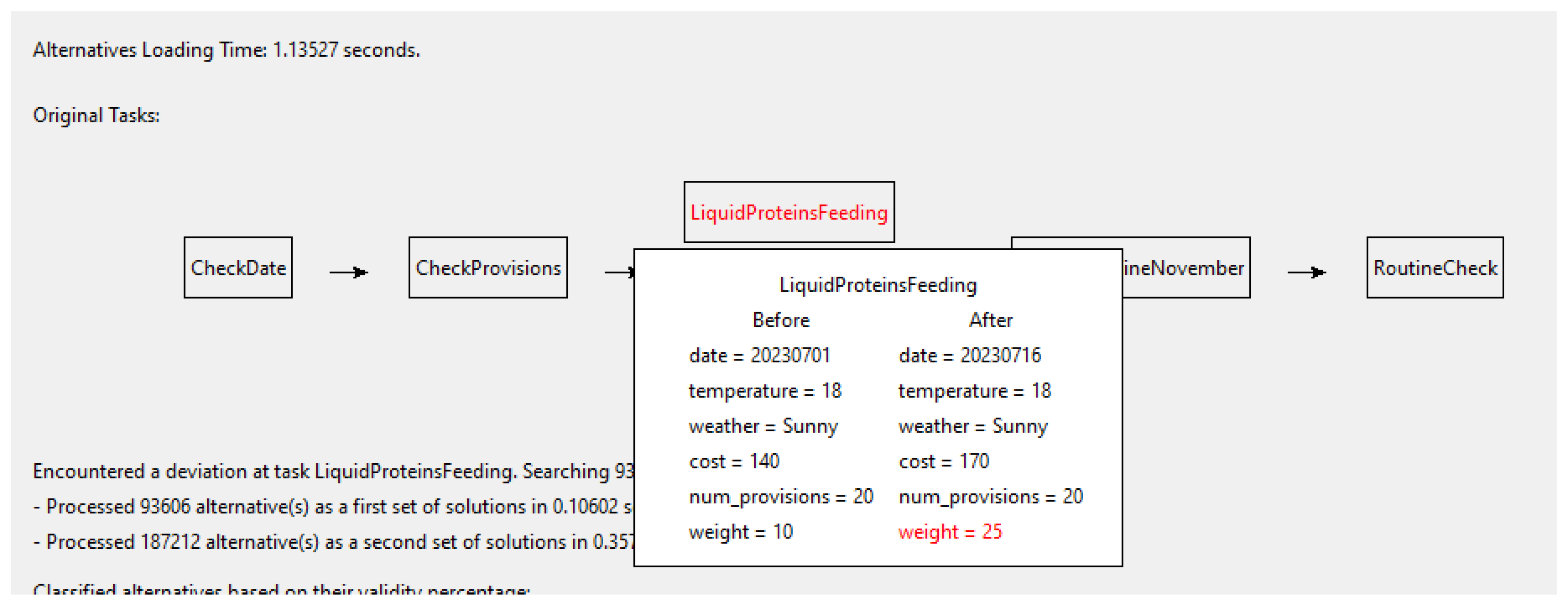

- Deviation detection:This paper assumes that a deviation has already been detected and focuses on the repair mechanism. Before calculating the state token, it is important to highlight three types of deviations: at the entry, during the execution and at the exit of a task (cf. Figure 6).

2. State token calculation: The subsequent step computes all estimated state tokens that would be correct if no deviation had been encountered. All estimated state tokens (at the entry and exit of tasks and of AND and XOR gates) between the deviation point D and the exit of the model are computed. In the following, these points are called nodes. The estimated state tokens of the nodes are compared with the estimated state token of the exit point of each pattern from the library to assess their compatibility and identify potential points of return for each pattern in the main model (cf. Matching output requirements in step 3 below). This step is repeated for each advanced computational iteration, and the number of computed nodes is reduced with each iteration. As the computational method becomes more complex, each computation becomes more time-consuming, but the number of elements to compute decreases. The reduction in the number of computed elements is expected to outweigh the increase in computation time per element.

3. Library search: The stage is set for exploring the library of patterns containing workflows that may serve as solutions for the identified deviation. This process involves three phases: validating constraints, matching input requirements, and matching output requirements. At each phase, a significant number of incompatible patterns are swiftly discarded, resulting in a refined and condensed set of candidate solutions.

- Validating constraints: This is the first step of the elimination process, in which patterns that do not meet the required global constraints, as defined under Constraints in Section 3.1.2 and in Table 2, are swiftly removed.

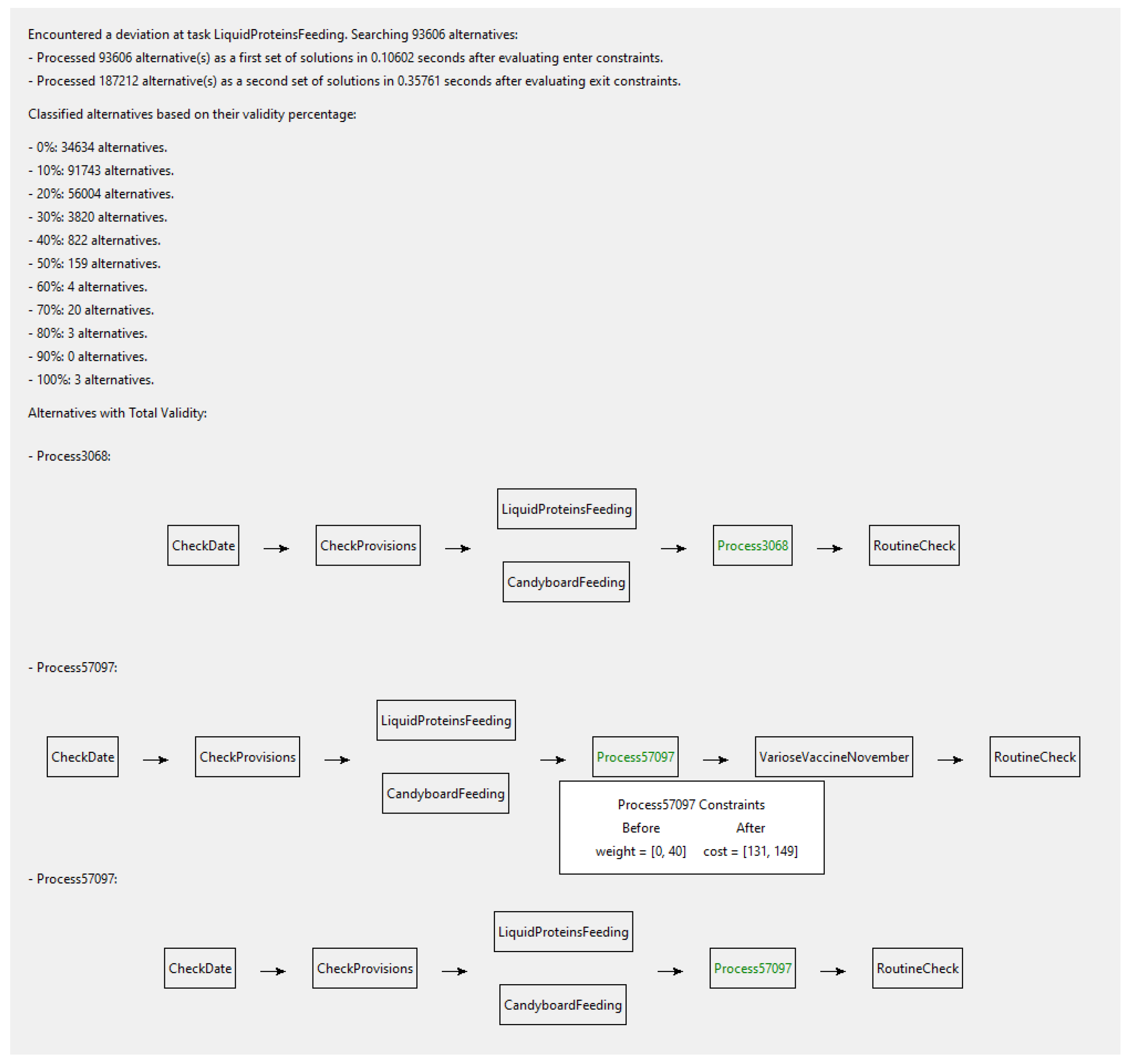

- Matching input requirements: The state token is evaluated against the inputs of each pattern. Recalling Task Characterization in Section 3.1.2 and Table 2, the input requirements are constraints that must be verified against the state token. Two methods for matching the input requirements are distinguished:

  - Method 1: Only patterns that strictly satisfy the constraints are kept in the potential solution set; all others are eliminated. This method is highly restrictive, and it may overlook candidates. Moreover, the selected cases do not necessarily represent valid solutions.

  - Method 2: Patterns that partially satisfy the constraints are retained, while those outside a set threshold value are discarded. The ratio of satisfaction can be expressed as a percentage and compared to a threshold value determined by the domain expert during the design phase. Patterns falling below the threshold value are discarded. This method is less restrictive, as it is less likely to miss solutions, but it requires more computation.

  The difference between the two methods lies in the number of patterns retained in the potential set. Method 1 selects few patterns and discards many, while Method 2 retains more solutions in the solution set. In practice, either method or a combination of the two can be applied to each constraint. For example, exceeding a cost constraint by €4 may be tolerable in the car industry, whereas exceeding it by the same amount in electronic chip manufacturing may not be acceptable.

- Matching output requirements: At this stage, the remaining workflow patterns are validated against the estimated state tokens from the deviation point to the end of the model, using the methods described in Output State Token Estimation in Section 3.1.2 and in Section 3.1.3. Each candidate now consists of a workflow pattern combined with a potential injection point within the model (a couple formed by a pattern and a node). In simpler terms, the same pattern can be injected (the pattern's input and output) at the deviation point, or it can bypass one or more blocks within the main model.

4. Advanced techniques: At this stage, with a smaller set of candidates, more sophisticated computational techniques can be used to further refine the analysis. For instance, methods such as probability theory, fuzzy logic, and simulation tools can be considered as viable options. These techniques can help identify the most effective solution patterns among the reduced set of candidates.

5. Solution ranking: Once the set of candidates has been reduced to combinations of workflows with corresponding returning points, each solution is evaluated so that the solutions can be ranked and presented to the user. This evaluation is critical in selecting the solution(s) that meet the BP requirements while minimizing any potential negative effects on the system, and it supports the decision-making process. To this end, a BP specialist can set an objective function based on KPIs such as quality, granularity, cost, and interoperability. Because this function is subjective, the KPIs and their weights can differ across industries and specialists, highlighting the need for customized solutions. The objective function can include KPIs such as granularity and overquality, providing an additional layer of evaluation. Granularity helps determine whether the proposed solutions are too general or too specific by analysing the number of blocks bypassed in the main model, while overquality assesses whether a solution produces a higher output or quality than required, which may not be preferable to other solutions.

6. Solution selection: The solution selection process can be either manual or automatic, depending on the configuration. Automatic selection involves the system choosing the top-ranked solution based on the objective function, while manual selection involves presenting all the relevant performance indicators and the objective function to the decision maker for each solution. Regardless of the selection method, the chosen solution must not only solve the local problem but also align with the global objective.

7. Solution injection: After a preferred solution has been selected, manually or automatically, it is injected into the executing process. There are two modes of injection: non-recursive deviation and recursive deviation. The former is for isolated incidents, while the latter involves an iterative approach in which the system proposes an optimization at the main model level based on historical data. This enables the system to learn from past experiences, adapt to new circumstances, and continually improve its performance.
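The first two phases of the library search and the ranking step can be sketched as follows. This is an illustrative sketch only: the `Pattern` fields, the satisfaction ratio computed as the fraction of satisfied input requirements, and the weighted-sum objective where lower is better are all assumptions, since the text leaves the exact form of the objective function to the BP specialist. Output matching against the nodes of the main model is omitted.

```python
from dataclasses import dataclass
from typing import Callable

Token = dict[str, float]
Check = Callable[[Token], bool]


@dataclass
class Pattern:
    name: str
    global_constraints: list[Check]
    input_requirements: list[Check]
    estimated_output: Token  # estimated state token at the pattern's exit


def satisfaction_ratio(checks: list[Check], token: Token) -> float:
    # Fraction of requirements satisfied by the token (1.0 if there are none).
    if not checks:
        return 1.0
    return sum(1 for check in checks if check(token)) / len(checks)


def search(library: list[Pattern], token: Token, threshold: float = 1.0) -> list[Pattern]:
    # Phase 1: discard patterns that violate any global constraint.
    kept = [p for p in library if all(c(token) for c in p.global_constraints)]
    # Phase 2: threshold 1.0 behaves like Method 1, lower values like Method 2.
    return [p for p in kept
            if satisfaction_ratio(p.input_requirements, token) >= threshold]


def rank(candidates: list[Pattern], weights: dict[str, float]) -> list[Pattern]:
    # Assumed objective: weighted sum of estimated KPIs, lower is better.
    def objective(p: Pattern) -> float:
        return sum(w * p.estimated_output.get(k, 0.0) for k, w in weights.items())
    return sorted(candidates, key=objective)


token = {"cost": 40.0, "temperature": 30.0}
within_budget: Check = lambda t: t["cost"] <= 100.0

library = [
    Pattern("A", [within_budget],
            [lambda t: t["temperature"] >= 20.0, lambda t: t["cost"] <= 50.0],
            {"cost": 70.0, "duration": 4.0}),
    Pattern("B", [within_budget],
            [lambda t: t["temperature"] >= 35.0, lambda t: t["cost"] <= 50.0],
            {"cost": 55.0, "duration": 6.0}),
    Pattern("C", [lambda t: t["cost"] <= 30.0], [], {"cost": 20.0, "duration": 1.0}),
]

strict = [p.name for p in search(library, token, threshold=1.0)]
relaxed = [p.name for p in search(library, token, threshold=0.5)]
ranked = [p.name for p in rank(search(library, token, 0.5),
                               {"cost": 1.0, "duration": 5.0})]
```

With these made-up values, the strict setting keeps only pattern A, the relaxed setting also keeps B, and C is removed in the first phase regardless of the threshold.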

4. Experiments and Results

5. Discussion and Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Russell, N.; van der Aalst, W.M.; ter Hofstede, A.; Edmond, D. Workflow Patterns; MIT Press Ltd.: Cambridge, MA, USA, 2016; Volume 8, p. 384.

2. Nqampoyi, V.; Seymour, L.F.; Laar, D.S. Effective Business Process Management Centres of Excellence. In Research and Practical Issues of Enterprise Information Systems; Tjoa, A.M., Xu, L.D., Raffai, M., Novak, N.M., Eds.; Springer: Cham, Switzerland, 2016; pp. 207–222.

3. IEEE Std 1320.1-1998; IEEE Standard for Functional Modeling Language - Syntax and Semantics for IDEF0. IEEE: Piscataway, NJ, USA, 1998; pp. 1–116.

4. Van der Aalst, W.M.P. Everything You Always Wanted to Know About Petri Nets, but Were Afraid to Ask. In Business Process Management; Hildebrandt, T., van Dongen, B.F., Röglinger, M., Mendling, J., Eds.; Springer: Cham, Switzerland, 2019; pp. 3–9.

5. Davis, W.S. Tools and Techniques for Structured Systems Analysis and Design; Addison-Wesley Longman Publishing Co., Inc.: Reading, MA, USA, 1983.

6. Grigorova, K.; Mironov, K. Comparison of business process modeling standards. Int. J. Eng. Sci. Manag. Res. 2014, 1, 1–8.

7. Specification, O.A. OMG Systems Modeling Language (OMG SysML™), V1. 0. 2007. Available online: https://www.omg.org/spec/SysML/1.0/PDF (accessed on 10 June 2023).

8. Cimino, M.G.; Vaglini, G. An Interval-Valued Approach to Business Process Simulation Based on Genetic Algorithms and the BPMN. Information 2014, 5, 319–356.

9. White, S.A.; Miers, D. BPMN Modeling and Reference Guide: Understanding and Using BPMN; Future Strategies Inc.: Toronto, ON, Canada, 2008.

10. El Kassis, M.; Trousset, F.; Daclin, N.; Zacharewicz, G. Business Process Web-based Platform for Multi modeling and Distributed Simulation. In Proceedings of the I3M 2022-International Multidisciplinary Modeling & Simulation Multiconference, Rome, Italy, 19–21 September 2022.

11. OMG. Business Process Model and Notation (BPMN), Version 2.0. 2011. Available online: https://www.omg.org/spec/BPMN/2.0/PDF (accessed on 10 June 2023).

12. Freund, J.; Rücker, B. Real-Life BPMN: Includes an Introduction to DMN. 2019. Available online: https://www.infomath-bib.de/tmp/data2/Real-Life%20BPMN%20-%20edition%204.pdf (accessed on 10 June 2023).

13. Bendraou, R.; da Silva, M.A.A.; Gervais, M.-P.; Blanc, X. Support for Deviation Detections in the Context of Multi-Viewpoint-Based Development Processes. In Proceedings of the CAiSE Forum, Luxembourg, 5–9 June 2012; pp. 23–31.

14. Rabah-Chaniour, S.; Zacharewicz, G.; Chapurlat, V. Process Centered Digital Twin. GDR MACS (2022). Online Oral Presentation. Available online: https://action-jn.sciencesconf.org/resource/page/id/5 (accessed on 31 May 2022).

15. Speakman, S.; Tadesse, G.A.; Cintas, C.; Ogallo, W.; Akumu, T.; Oshingbesan, A. Detecting Systematic Deviations in Data and Models. Computer 2023, 56, 82–92.

16. Kady, C.; Chedid, A.M.; Kortbawi, I.; Yaacoub, C.; Akl, A.; Daclin, N.; Trousset, F.; Pfister, F.; Zacharewicz, G. IoT-Driven Workflows for Risk Management and Control of Beehives. Diversity 2021, 13, 13070296.

17. Hodge, V.; Austin, J. A survey of outlier detection methodologies. Artif. Intell. Rev. 2004, 22, 85–126.

18. Tariq, Z.; Charles, D.; McClean, S.; McChesney, I.; Taylor, P. Anomaly Detection for Service-Oriented Business Processes Using Conformance Analysis. Algorithms 2022, 15, 257.

19. Ni, W.; Zhao, G.; Liu, T.; Zeng, Q.; Xu, X. Predictive Business Process Monitoring Approach Based on Hierarchical Transformer. Electronics 2023, 12, 1273.

20. Ducq, Y.; Vallespir, B. Definition and aggregation of a performance measurement system in three aeronautical workshops using the ECOGRAI method. Prod. Plan. Control 2005, 16, 163–177.

21. Heguy, X.; Zacharewicz, G.; Ducq, Y.; Tazi, S.; Vallespir, B. A performance measurement extension for BPMN: One step further quantifying interoperability in process model. In Enterprise Interoperability VIII: Smart Services and Business Impact of Enterprise Interoperability; Springer: Cham, Switzerland, 2019; pp. 333–345.

22. Ougaabal, K.; Zacharewicz, G.; Ducq, Y.; Tazi, S. Visual Workflow Process Modeling and Simulation Approach Based on Non-Functional Properties of Resources. Appl. Sci. 2020, 10, 4664.

23. Kherbouche, O.M.; Ahmad, A.; Basson, H. Using model checking to control the structural errors in BPMN models. In Proceedings of the IEEE 7th International Conference on Research Challenges in Information Science (RCIS), Paris, France, 29–31 May 2013; pp. 1–12.

24. Mallek, S.; Daclin, N.; Chapurlat, V. The application of interoperability requirement specification and verification to collaborative processes in industry. Comput. Ind. 2012, 63, 643–658.

25. Da Silva, A.S.; Ma, H.; Zhang, M. A GP approach to QoS-aware web service composition including conditional constraints. In Proceedings of the 2015 IEEE Congress on Evolutionary Computation (CEC), Sendai, Japan, 25–28 May 2015; pp. 2113–2120.

26. Zhang, S.; Paik, I. An efficient algorithm for web service selection based on local selection in large scale. In Proceedings of the 2017 IEEE 8th International Conference on Awareness Science and Technology (iCAST), Taichung, Taiwan, 8–10 November 2017; pp. 188–193.

27. Borgida, A.; Murata, T. Tolerating exceptions in workflows: A unified framework for data and processes. In Proceedings of the International Joint Conference on Work Activities Coordination and Collaboration, San Francisco, CA, USA, 22–25 February 1999; pp. 59–68.

28. Svendsen, A. Application Reconfiguration Based on Variability Transformations; Technical Report 2009-566 School of Computing; Queen’s University Kingston: Kingston, ON, Canada, 2009; p. 4.

29. Gao, H.; Huang, W.; Yang, X.; Duan, Y.; Yin, Y. Toward service selection for workflow reconfiguration: An interface-based computing solution. Future Gener. Comput. Syst. 2018, 87, 298–311.

30. Rosa, M.L.; Aalst, W.M.V.D.; Dumas, M.; Milani, F.P. Business process variability modeling: A survey. ACM Comput. Surv. (CSUR) 2017, 50, 1–45.

31. Gottschalk, F.; Van der Aalst, W.M.; Jansen-Vullers, M.H. Configurable process models–a foundational approach. In Reference Modeling; Springer: Cham, Switzerland, 2007; pp. 59–78.

32. Becker, J.; Delfmann, P.; Knackstedt, R. Adaptive reference modeling: Integrating configurative and generic adaptation techniques for information models. In Reference Modeling: Efficient Information Systems Design through Reuse of Information Models; Springer: Cham, Switzerland, 2007; pp. 27–58.

33. Bayer, J.; Kettemann, S.; Muthig, D. Principles of Software Product Lines and Process Variants; Technical Report; 2004. Available online: https://citeseerx.ist.psu.edu/document?repid=rep1&type=pdf&doi=13e31abc3bbbf13af8a71157b4761839d7587bab (accessed on 10 June 2023).

34. Hallerbach, A.; Bauer, T.; Reichert, M. Issues in modeling process variants with provop. In Proceedings of the Business Process Management Workshops: BPM 2008 International Workshops, Milano, Italy, 1–4 September 2008; Revised Papers 6; Springer: Cham, Switzerland, 2009; pp. 56–67.

35. Haidar, H.; Daclin, N.; Zacharewicz, G.; Doumeingts, G. Enterprise Modeling and Simulation. In Body of Knowledge for Modeling and Simulation: A Handbook by the Society for Modeling and Simulation International; Springer: Cham, Switzerland, 2023; pp. 221–247.

36. Hauder, M.; Pigat, S.; Matthes, F. Research Challenges in Adaptive Case Management: A Literature Review. In Proceedings of the 2014 IEEE 18th International Enterprise Distributed Object Computing Conference Workshops and Demonstrations, Ulm, Germany, 1–2 September 2014; pp. 98–107.

37. Herranz, C.; Martín-Moreno Banegas, L.; Dana Muzzio, F.; Siso-Almirall, A.; Roca, J.; Cano, I. A Practice-Proven Adaptive Case Management Approach for Innovative Health Care Services (Health Circuit): Cluster Randomized Clinical Pilot and Descriptive Observational Study. J. Med. Internet Res. 2023, 25, e47672.

38. Badakhshan, P.; Conboy, K.; Grisold, T.; vom Brocke, J. Agile business process management: A systematic literature review and an integrated framework. Bus. Process Manag. J. 2020, 26, 1505–1523.

39. Abrahamsson, P.; Conboy, K.; Wang, X. ‘Lots done, more to do’: The current state of agile systems development research. Eur. J. Inf. Syst. 2009, 18, 281–284.

40. Conboy, K. Agility from first principles: Reconstructing the concept of agility in information systems development. Inf. Syst. Res. 2009, 20, 329–354.

41. Russell, N.; van der Aalst, W.M.; Ter Hofstede, A.H. Exception Handling Patterns in Process-Aware Information Systems; BPM Cent. Rep. BPM-06 BPMcenter. Org; 2006; Volume 208. Available online: https://www.researchgate.net/profile/Nick_Russell2/publication/228618501_Exception_handling_patterns_in_process-aware_information_systems/links/0fcfd50850ad0af074000000/Exception-handling-patterns-in-process-aware-information-systems.pdf (accessed on 10 June 2023).

42. Adams, M.; Ter Hofstede, A.H.; Van Der Aalst, W.M.; Edmond, D. Dynamic, extensible and context-aware exception handling for workflows. In Proceedings of the Move to Meaningful Internet Systems 2007: CoopIS, DOA, ODBASE, GADA, and IS: OTM Confederated International Conferences CoopIS, DOA, ODBASE, GADA, and IS 2007, Vilamoura, Portugal, 25–30 November 2007; pp. 95–112.

43. Jasinski, A.; Qiao, Y.; Fallon, E.; Flynn, R. A Workflow Management Framework for the Dynamic Generation of Workflows that is Independent of the Application Environment. In Proceedings of the 2021 IFIP/IEEE International Symposium on Integrated Network Management (IM), Virtual Conference, 17–21 May 2021; pp. 152–160.

44. Andree, K.; Ihde, S.; Pufahl, L. Exception handling in the context of fragment-based case management. In Proceedings of the Enterprise, Business-Process and Information Systems Modeling: 21st International Conference, BPMDS 2020, 25th International Conference, EMMSAD 2020, CAiSE 2020, Grenoble, France, 8–9 June 2020; pp. 20–35.

45. Górski, T.; Woźniak, A.P. Optimization of Business Process Execution in Services Architecture: A Systematic Literature Review. IEEE Access 2021, 9, 111833–111852.

46. Xie, L.; Luo, J.; Qiu, J.; Pershing, J.A.; Li, Y.; Chen, Y. Availability “weak point” analysis over an SOA deployment framework. In Proceedings of the NOMS 2008 - 2008 IEEE Network Operations and Management Symposium, Salvador, Brazil, 7–11 April 2008; pp. 473–480.

47. Mennes, R.; Spinnewyn, B.; Latré, S.; Botero, J.F. GRECO: A Distributed Genetic Algorithm for Reliable Application Placement in Hybrid Clouds. In Proceedings of the 2016 5th IEEE International Conference on Cloud Networking (Cloudnet), Pisa, Italy, 3–5 October 2016; pp. 14–20.

48. Dyachuk, D.; Deters, R. Service Level Agreement Aware Workflow Scheduling. In Proceedings of the IEEE International Conference on Services Computing (SCC 2007), Salt Lake City, UT, USA, 9–13 July 2007; pp. 715–716.

49. Dyachuk, D.; Deters, R. Ensuring Service Level Agreements for Service Workflows. In Proceedings of the 2008 IEEE International Conference on Services Computing, Honolulu, HI, USA, 8–11 July 2008; Volume 2, pp. 333–340.

50. Zhong, Y.; Li, W.; Wang, X.; Fan, J. An approach to agent-coalition-based Automatic Web Service Composition. In Proceedings of the 2012 IEEE 16th International Conference on Computer Supported Cooperative Work in Design (CSCWD), Wuhan, China, 23–25 May 2012; pp. 37–42.

51. Qiqing, F.; Xiaoming, P.; Qinghua, L.; Yahui, H. A Global QoS Optimizing Web Services Selection Algorithm Based on MOACO for Dynamic Web Service Composition. In Proceedings of the 2009 International Forum on Information Technology and Applications, Chengdu, China, 15–17 May 2009; Volume 1, pp. 37–42.

52. Hashmi, K.; AlJafar, H.; Malik, Z.; Alhosban, A. A bat algorithm based approach of QoS optimization for long term business pattern. In Proceedings of the 2016 7th International Conference on Information and Communication Systems (ICICS), Irbid, Jordan, 5–7 April 2016; pp. 27–32.

53. Kalasapur, S.; Kumar, M.; Shirazi, B.A. Dynamic Service Composition in Pervasive Computing. IEEE Trans. Parallel Distrib. Syst. 2007, 18, 907–918.

54. Ukor, R.; Carpenter, A. Flexible Service Selection Optimization Using Meta-Metrics. In Proceedings of the 2009 Congress on Services - I, Los Angeles, CA, USA, 6–10 July 2009; pp. 593–598.

55. Russell, N.; Van Der Aalst, W.M.; Ter Hofstede, A.H.; Edmond, D. Workflow resource patterns: Identification, representation and tool support. In Proceedings of the CAiSE, Porto, Portugal, 13–17 June 2005; Springer: Cham, Switzerland, 2005; Volume 5, pp. 216–232.

56. Bocciarelli, P.; D’Ambrogio, A. A BPMN extension for modeling non functional properties of business processes. In Proceedings of the 2011 Symposium on Theory of Modeling & Simulation: DEVS Integrative M&S Symposium, Boston, MA, USA, 4–9 April 2011; pp. 160–168.

| State Token | Annotation |
|---|---|
| Actual state token before entry of task A | |
| Actual state token after exit of task A | |
| Estimated state token after exit of task A | |

| Task Characterization | Annotation | Task Entry | During Execution | Task Exit |
|---|---|---|---|---|
| Resources | | True | True | … |
| Constraints | | True | True | True |
| Input | | True | … | … |
| Output | | … | … | True |

| Generated Patterns | Patterns without Duplication | Total Elapsed Time (s) |
|---|---|---|
| 1000 | 837 | 0.010 |
| 10,000 | 9930 | 0.153 |
| 100,000 | 93,606 | 1.589 |
| 1,000,000 | 905,217 | 26.291 |
| 2,000,000 | 1,809,169 | 55.908 |

| Number of Patterns | 100% Matching | 70% Matching | 50% Matching | 30% Matching |
|---|---|---|---|---|
| 1000 (837) | 4 | 8 | 114 | 843 |
| 10,000 (9930) | 6 | 26 | 280 | 5966 |
| 100,000 (93,606) | 9 | 59 | 475 | 13,754 |
| 1,000,000 (905,217) | 31 | 130 | 1174 | 25,149 |
| 2,000,000 (1,809,169) | 64 | 274 | 2387 | 50,177 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kady, C.; Jalloul, K.; Trousset, F.; Yaacoub, C.; Akl, A.; Daclin, N.; Zacharewicz, G. Addressing Business Process Deviations through the Evaluation of Alternative Pattern-Based Models. Appl. Sci. 2023, 13, 7722. https://doi.org/10.3390/app13137722
