1. Introduction

Speech enhancement refers to a set of signal processing techniques aimed at improving the quality and intelligibility of speech signals by suppressing interference, such as background noise and reverberation. External noise and room reverberation degrade the speech signals captured by microphones and lower the signal-to-noise ratio (SNR). This impairs human listening as well as the accuracy of human–computer interaction systems such as speech recognition and speech wake-up applications [1]. Therefore, the study of speech enhancement techniques is critical for recovering clean speech and improving overall system performance.

Traditional single-channel speech enhancement algorithms rely on time-domain, frequency-domain, and spatial-domain signal processing or on higher-order statistics. However, these methods are difficult to adapt to changing acoustic scenarios [2]. Over the past decade, deep learning-based algorithms have achieved significant success in speech enhancement. In particular, several methods based on time–frequency-domain feature mapping have made marked progress in noise reduction [3,4,5]. Nevertheless, these approaches typically do not model the phase of the speech signal. Unlike time–frequency-domain methods that regress only the magnitude of the short-time Fourier transform (STFT), some recent methods [6,7] reconstruct the speech signal by directly processing the time-domain waveform. The Deep Complex Convolution Recurrent Network for Phase-Aware Speech Enhancement (DCCRN) [8] uses complex-valued features as the input to the neural network and shows great potential for noise reduction. DCCRN simulates complex-valued operations with a complex network built from real-valued layers, and its input consists of the real and imaginary (RI) parts of the complex STFT. By jointly considering the magnitude and phase of the STFT, DCCRN significantly improves speech enhancement performance.
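To make the RI input concrete, the following NumPy sketch computes real and imaginary STFT features for a 1 s signal. The Hann window, 512-sample frames, and 256-sample hop are illustrative assumptions, not DCCRN's configuration. The point it shows is that the RI pair retains the phase, so each windowed frame can be recovered exactly, whereas a magnitude-only spectrum with the phase discarded cannot.

```python
import numpy as np

FRAME, HOP = 512, 256  # illustrative STFT settings (assumed)

def stft_ri(x):
    """Return RI features of shape (2, n_frames, FRAME // 2 + 1) and the windowed frames."""
    window = np.hanning(FRAME)
    n_frames = 1 + (len(x) - FRAME) // HOP
    frames = np.stack([x[i * HOP : i * HOP + FRAME] * window for i in range(n_frames)])
    spec = np.fft.rfft(frames, axis=-1)
    return np.stack([spec.real, spec.imag]), frames

rng = np.random.default_rng(0)
x = rng.standard_normal(16000)  # 1 s of noise at 16 kHz as a stand-in signal
ri, windowed = stft_ri(x)

# RI features keep the phase: the inverse FFT returns the windowed frames exactly
from_ri = np.fft.irfft(ri[0] + 1j * ri[1], n=FRAME, axis=-1)

# magnitude-only features drop it: a zero-phase reconstruction differs from the input
magnitude = np.hypot(ri[0], ri[1])
from_magnitude = np.fft.irfft(magnitude, n=FRAME, axis=-1)

print(ri.shape)  # (2, 61, 257)
```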

In addition, multichannel speech enhancement generally outperforms single-channel speech enhancement because the additional microphones provide spatial information [9]. Multichannel approaches fall into two main types: time-domain and frequency-domain approaches. Classic signal processing techniques such as beamforming have been widely used, including the minimum variance distortionless response (MVDR) beamformer [10] and the generalized sidelobe canceller (GSC) [11], which minimize the output energy while preserving the signal from the target direction. A beamformer requires a target steering vector [12], which in most cases is derived from a direction of arrival (DOA) estimate [13]. However, DOA estimation is not error-free, and estimation errors can significantly degrade beamforming performance.
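The MVDR solution and its dependence on the steering vector can be sketched in NumPy for a single frequency bin. The two-microphone geometry (3 cm spacing, as in the simulations described later), a speed of sound of 343 m/s, the far-field steering model with angles measured from broadside, and the example noise covariance are all assumptions for illustration.

```python
import numpy as np

C = 343.0  # speed of sound in m/s (assumed)

def steering_vector(freq_hz, theta_deg, mic_x):
    """Far-field steering vector of a linear array; theta measured from broadside (assumed)."""
    delays = mic_x * np.sin(np.deg2rad(theta_deg)) / C
    return np.exp(-2j * np.pi * freq_hz * delays)

def mvdr_weights(R, d):
    """w = R^{-1} d / (d^H R^{-1} d): minimizes w^H R w subject to w^H d = 1."""
    Rinv_d = np.linalg.solve(R, d)
    return Rinv_d / (d.conj() @ Rinv_d)

mic_x = np.array([0.0, 0.03])  # two microphones, 3 cm apart
freq = 1000.0                  # one STFT bin in Hz (illustrative)
d_target = steering_vector(freq, 0.0, mic_x)
d_noise = steering_vector(freq, 60.0, mic_x)

# noise covariance: one strong interferer at 60 degrees plus weak sensor noise (assumed)
R = 10.0 * np.outer(d_noise, d_noise.conj()) + 0.01 * np.eye(2)
w = mvdr_weights(R, d_target)

gain_target = abs(w.conj() @ d_target)  # about 1: distortionless towards the target
gain_noise = abs(w.conj() @ d_noise)    # small: the interferer is attenuated
print(gain_target, gain_noise)
```

If `d_target` is computed from a wrong DOA, the distortionless constraint is enforced in the wrong direction, which is the sensitivity to DOA errors mentioned above.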

Recently, time-domain convolutional denoising autoencoders (TCDAEs) [9] have been designed; they exploit the delays between multichannel signals to extract features directly from the time-domain signals using an encoder–decoder network. Although TCDAEs outperform single-channel DAEs [14], they use a limited set of noise types, which results in suboptimal performance. Wang et al. proposed a complex spectral mapping approach combined with MVDR beamforming, which computes the beamforming filter coefficients from the covariance matrices of the speech and noise [15]. Alternatively, Li et al. [16] proposed neural network adaptive beamforming (NAB), which learns beamforming filters without estimating the DOA or computing a covariance matrix. NAB outperforms conventional beamforming methods, but it applies the adaptive neural network in the time–frequency domain. The filter-and-sum network (FaSNet) by Luo et al. performs time-domain filter-and-sum beamforming at the frame level and directly estimates the beamforming filters with a neural network [17]. The estimated filters are then applied to the time-domain input signal of each frame, as in conventional filter-and-sum beamforming.

In this study, we propose a dual-channel speech enhancement technique based on complex time-domain mapping, inspired by neural network adaptive beamforming and complex time-domain operations. To fully exploit the information in the two channels in the time domain, we developed a complex neural network adaptive beamformer (CNAB) that predicts complex time-domain beamforming filter coefficients. During training, the coefficients are updated according to changes in the noisy data, in contrast to the fixed filters used in previous studies [18,19]. The real and imaginary (RI) parts of the filter coefficients estimated by the CNAB are applied to the input of each channel through complex convolution, and the filtered channels are summed. The resulting complex time-domain beamforming output is then fed to the complex fully convolutional network (CFCN) to predict the complex time-domain representation of the enhanced speech. The primary contributions of this study are as follows:

To enable complex time-domain operations and prepare the network input, we used the Hilbert transform to construct a complex (analytic) signal.

We developed a complex neural network adaptive beamforming technique that utilizes complex inputs to perform speech enhancement.

We developed a complex fully convolutional network that takes the output of the CNAB as input to enhance speech.

By combining all of these components, we designed and tested a dual-channel complex enhancement network that performs speech denoising in the time domain.
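The complex filter-and-sum step of the CNAB can be written with four real-valued convolutions per channel, because (h_r + j h_i) * (x_r + j x_i) = (h_r * x_r − h_i * x_i) + j (h_r * x_i + h_i * x_r). The sketch below assumes full-mode convolution on a single 160-sample frame with 25-tap filters (values taken from Section 3.2) and checks the result against NumPy's native complex convolution; it illustrates the arithmetic only, not the authors' implementation.

```python
import numpy as np

def complex_conv_real_ops(x_r, x_i, h_r, h_i):
    """(h_r + j h_i) * (x_r + j x_i) computed with four real convolutions."""
    y_r = np.convolve(x_r, h_r) - np.convolve(x_i, h_i)
    y_i = np.convolve(x_i, h_r) + np.convolve(x_r, h_i)
    return y_r, y_i

def complex_filter_and_sum(x_r, x_i, h_r, h_i):
    """Filter each channel with its own complex filter and sum over channels.
    Inputs have shape (channels, samples); filters have shape (channels, taps)."""
    out_r, out_i = 0.0, 0.0
    for c in range(x_r.shape[0]):
        y_r, y_i = complex_conv_real_ops(x_r[c], x_i[c], h_r[c], h_i[c])
        out_r = out_r + y_r
        out_i = out_i + y_i
    return out_r, out_i

rng = np.random.default_rng(1)
x_r, x_i = rng.standard_normal((2, 2, 160))  # two channels, one 160-sample frame (RI parts)
h_r, h_i = rng.standard_normal((2, 2, 25))   # one 25-tap complex filter per channel
y_r, y_i = complex_filter_and_sum(x_r, x_i, h_r, h_i)

# reference: the same operation with NumPy's complex arithmetic
reference = sum(np.convolve(x_r[c] + 1j * x_i[c], h_r[c] + 1j * h_i[c]) for c in range(2))
print(y_r.shape)  # (184,)
```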

The rest of the paper is structured as follows. Section 2 presents the proposed algorithm, covering the preparation of complex inputs, the loss function, and the structures of the CNAB and the CFCN. In Section 3, we describe the experimental settings, including dataset creation, the test scenarios, and the network parameters. Section 4 presents the experimental results and discusses the performance of the proposed network compared with the baseline and other models in various scenarios. Finally, we draw conclusions and suggest topics for future research in Section 5.

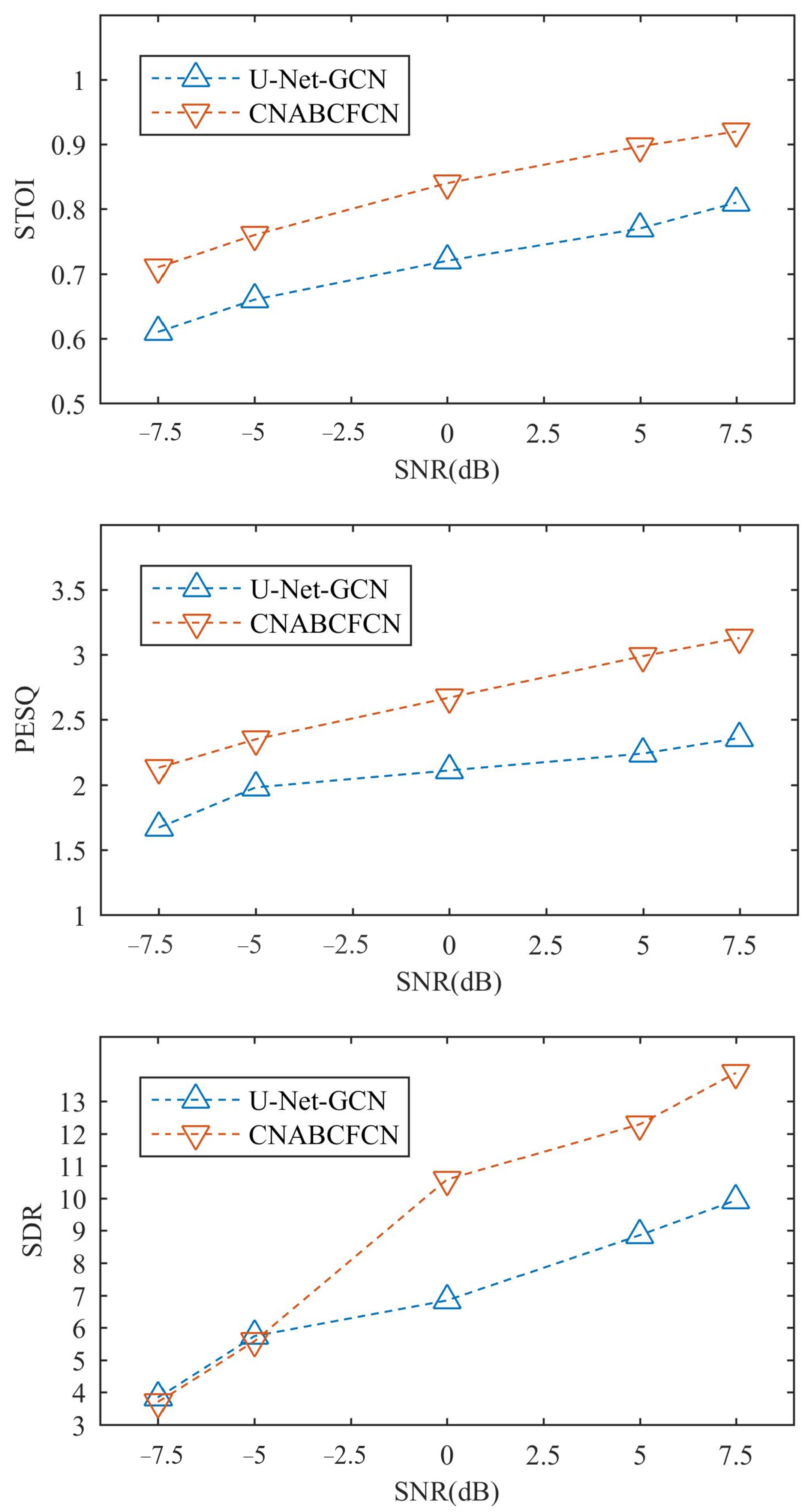

3. Experimental Settings

This section presents the dataset used in the experiments and the procedure used to create the two-channel dataset. We also describe the hyperparameter settings of each layer of the CNABCFCN model. The network parameters were selected experimentally by comparing the scale-invariant signal-to-distortion ratio (SI-SDR) achieved by models with different hyperparameters.
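SI-SDR, the metric used to compare configurations in this section, can be computed as below. This is the common zero-mean formulation with an optimally scaled reference; the exact implementation used for the reported numbers is not stated here, so treat it as a sketch.

```python
import numpy as np

def si_sdr(estimate, reference, eps=1e-8):
    """Scale-invariant SDR in dB between two 1D signals."""
    estimate = estimate - np.mean(estimate)
    reference = reference - np.mean(reference)
    # optimal scaling of the reference onto the estimate
    alpha = np.dot(estimate, reference) / (np.dot(reference, reference) + eps)
    target = alpha * reference
    residual = estimate - target
    return 10 * np.log10((np.dot(target, target) + eps) / (np.dot(residual, residual) + eps))

rng = np.random.default_rng(0)
clean = rng.standard_normal(16000)
noise = rng.standard_normal(16000)
scaled_score = si_sdr(0.5 * clean, clean)          # very high: rescaling is not penalized
noisy_score = si_sdr(clean + 0.1 * noise, clean)   # about 20 dB
print(scaled_score, noisy_score)
```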

3.1. Generation of the Two-Channel Dataset

In the experiments, we trained and evaluated the proposed speech enhancement model using the Deep Noise Suppression (DNS) Challenge dataset [23]. The clean speech was derived from LibriVox, a public English audiobook dataset, and the noise was selected from AudioSet and Freesound. All audio signals were sampled at 16 kHz; the clean and noise clips were 31 s and 10 s long, respectively. We used the script provided by the DNS Challenge to generate 75 h of audio, with each clip lasting 6 s. In total, we generated 36,400 clips (approximately 60 h) for the training set, 4550 clips (approximately 8 h) for the validation set, and 4050 clips (approximately 7 h) for the test set.
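As a consistency check on the split, the clip counts and durations (6 s per clip) add up as follows:

$$
\begin{aligned}
36{,}400 + 4550 + 4050 &= 45{,}000 \text{ clips},\\
45{,}000 \times 6\,\text{s} &= 270{,}000\,\text{s} = 75\,\text{h},\\
36{,}400 \times 6\,\text{s} \approx 60.7\,\text{h},\quad 4550 \times 6\,\text{s} &\approx 7.6\,\text{h},\quad 4050 \times 6\,\text{s} = 6.75\,\text{h}.
\end{aligned}
$$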

To produce the dual-channel datasets, we used the image method [24] and studied how single-direction and multiple-direction target sources affect speech enhancement performance. We tested two scenarios.

Scenario I: In the first scenario, the target source was located in a single direction. As shown in Figure 4, the target source was close to the reference microphone and on the same horizontal line as the microphones. The target position is marked with the red box, and the noise directions were uniformly sampled from 0 to 90 degrees at 15-degree intervals.

Scenario II: In the second scenario, shown in Figure 5, the azimuth angle of the target source, indicated by the red dotted lines, ranged from −45° to 45°. The azimuth angle of the noise ranged from −90° to 90° and was evenly distributed at 15-degree intervals. This scenario creates a so-called acoustic fence, in which speech inside the region of interest (ROI) passes with minimal distortion.
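The noise-direction grids of both scenarios, and the inter-microphone delays they imply for the 3 cm microphone spacing used in the simulation, can be tabulated as below. The far-field model, the 343 m/s speed of sound, and measuring angles from broadside are assumptions; under them the delay never exceeds 0.03 / 343 × 16,000 ≈ 1.4 samples, so the spatial cues between the two channels are sub-sample to about one sample at 16 kHz.

```python
import numpy as np

C, FS, SPACING = 343.0, 16000, 0.03  # speed of sound (assumed), sampling rate, mic spacing

scenario1_noise_deg = np.arange(0, 91, 15)    # Scenario I noise: 0..90 degrees
scenario2_noise_deg = np.arange(-90, 91, 15)  # Scenario II noise: -90..90 degrees

def tdoa_samples(theta_deg):
    """Far-field delay between the two microphones, in samples.
    Assumes theta is measured from broadside (0 = perpendicular to the mic axis)."""
    return SPACING * np.sin(np.deg2rad(theta_deg)) / C * FS

for theta in scenario2_noise_deg:
    print(f"{int(theta):+4d} deg -> {tdoa_samples(theta):+.3f} samples")
```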

The target speech and noise sources were located 1 m from the microphones, and the two microphones were spaced 3 cm apart. In the simulated reverberant room, which measured 10 m × 7 m × 3 m (width × depth × height), each source signal was convolved with two different room impulse responses (RIRs), one per microphone. The SNRs of the training mixtures were drawn randomly from −5 to 10 dB in 1 dB steps. In the test set, the SNRs were set to −5, 0, 5, 10, and 20 dB. The clean speech captured by the reference microphone was used as the label for the CNABCFCN model.
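The two-channel simulation can be sketched end to end: a simplified image-source RIR for the 10 m × 7 m × 3 m room, spatialization of speech and noise at two microphones 3 cm apart, and mixing at a random training SNR. Everything beyond the room size, microphone spacing, 1 m source distance, and SNR range is an assumption: the wall reflection coefficient, image order, integer-sample delays, source and microphone placements, and measuring the SNR on the reference channel with one gain applied to both channels. Random noise stands in for the DNS clips, and a real setup would more likely use a dedicated RIR simulator.

```python
import itertools
import numpy as np

C, FS = 343.0, 16000  # speed of sound (assumed) and sampling rate

def image_method_rir(room, src, mic, beta=0.7, max_order=2, length=4000):
    """Simplified shoebox image-source RIR (Allen-Berkley image positions).
    Assumptions: one reflection coefficient for all walls, delays rounded to
    whole samples, 1/(4*pi*r) spherical spreading."""
    room, src, mic = (np.asarray(v, dtype=float) for v in (room, src, mic))
    rir = np.zeros(length)
    orders = range(-max_order, max_order + 1)
    for n in itertools.product(orders, repeat=3):
        for q in itertools.product((0, 1), repeat=3):
            n_arr, q_arr = np.array(n), np.array(q)
            image = (1 - 2 * q_arr) * src + 2 * n_arr * room
            n_refl = int(np.sum(np.abs(n_arr - q_arr) + np.abs(n_arr)))
            dist = np.linalg.norm(image - mic)
            delay = int(round(dist / C * FS))
            if delay < length:
                rir[delay] += beta ** n_refl / (4 * np.pi * dist)
    return rir

def mix_at_snr(speech, noise, snr_db, ref=0):
    """Scale (channels, samples) noise so the SNR on the reference channel equals snr_db.
    One gain for all channels keeps the noise's inter-channel cues (assumed convention)."""
    p_speech = np.mean(speech[ref] ** 2)
    p_noise = np.mean(noise[ref] ** 2)
    gain = np.sqrt(p_speech / (p_noise * 10.0 ** (snr_db / 10.0)))
    return speech + gain * noise, gain * noise

room = [10.0, 7.0, 3.0]                        # room size from Section 3.1
mics = [[4.985, 3.5, 1.5], [5.015, 3.5, 1.5]]  # 3 cm spacing; placement assumed
speech_pos = [5.0, 4.5, 1.5]                   # about 1 m from the array; assumed
noise_pos = [5.707, 4.207, 1.5]                # about 1 m away at 45 degrees; assumed

def spatialize(sig, pos):
    """Convolve a mono signal with the RIR from pos to each microphone."""
    return np.stack([np.convolve(sig, image_method_rir(room, pos, m))[: len(sig)] for m in mics])

rng = np.random.default_rng(0)
clean, noise = rng.standard_normal((2, 16000))  # stand-ins for DNS speech and noise clips
speech_img = spatialize(clean, speech_pos)
noise_img = spatialize(noise, noise_pos)

snr = rng.choice(np.arange(-5, 11))  # training SNRs: -5..10 dB in 1 dB steps
mixture, scaled_noise = mix_at_snr(speech_img, noise_img, snr)
label = speech_img[0]                # clean speech at the reference microphone
```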

3.2. Configurations of CNAB

In this study, each 6 s simulated speech signal, sampled at 16 kHz, was divided into 1 s segments of 16,000 samples each. The Hilbert transform was applied to each input segment to obtain the real and imaginary parts, which were used as inputs to the complex shared-LSTM. Because both the real and imaginary parts are time-domain signals, an LSTM was used to extract features from them directly, as LSTMs are well suited to modeling temporal dependencies.
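The segmentation and Hilbert-transform step can be sketched with NumPy alone. The analytic signal x + jH{x} is computed with the same FFT-based construction that `scipy.signal.hilbert` uses (zero the negative frequencies, double the positive ones). The function names, the (segments, channels, RI, samples) layout, and applying the transform separately to each channel are assumptions.

```python
import numpy as np

FS = 16000
SEG = FS  # 1 s segments of 16,000 samples

def analytic_signal(x):
    """x + j*H{x} along the last axis via the FFT."""
    n = x.shape[-1]
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1 : n // 2] = 2.0
    else:
        h[1 : (n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x, axis=-1) * h, axis=-1)

def make_complex_segments(x):
    """(channels, samples) -> (n_segments, channels, 2, SEG) holding real and imaginary parts."""
    n_seg = x.shape[-1] // SEG
    segs = x[:, : n_seg * SEG].reshape(x.shape[0], n_seg, SEG).transpose(1, 0, 2)
    z = analytic_signal(segs)
    return np.stack([z.real, z.imag], axis=2)

t = np.arange(6 * FS) / FS
# two-channel 6 s test signal: a 100 Hz tone with a small inter-channel delay
x = np.stack([np.cos(2 * np.pi * 100 * t), np.cos(2 * np.pi * 100 * (t - 1e-4))])
feats = make_complex_segments(x)
print(feats.shape)  # (6, 2, 2, 16000)
```

For a cosine, the imaginary part produced this way is the matching sine, which is a quick sanity check of the transform.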

Table 1 summarizes the parameters of the CNAB model. As listed in Table 1, the input segment has dimensions (B, 2, 16,000), where B is the batch size and the middle dimension of 2 holds the real and imaginary parts. Each segment of 16,000 samples was divided into 100 frames of 10 ms (160 samples each), which served as the time steps of the complex shared-LSTM input. A complex shared-LSTM layer was composed of two 512-cell LSTM layers. The output at the last time step was used as input to the two complex split-LSTM layers. Finally, a complex linear layer estimated the beamforming filter coefficients, each filter having 25 taps.
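The tensor bookkeeping described for Table 1, and one way to build a complex layer from two real-valued layers, are sketched below. The combination rule (f_r(x_r) − f_i(x_i)) + j(f_r(x_i) + f_i(x_r)) is the one DCCRN uses for its complex layers; whether the CNAB follows exactly this rule is an assumption. The random 512-dimensional features are hypothetical stand-ins for the last-time-step output of the split-LSTM layers, and the weights are random rather than trained.

```python
import numpy as np

B, L, FRAME = 4, 16000, 160
N_STEPS = L // FRAME  # 100 time steps of 10 ms each

def frame_ri(x):
    """(B, 2, L) real/imaginary input -> real and imaginary (B, 100, 160) sequences."""
    frames = x.reshape(x.shape[0], 2, N_STEPS, FRAME)
    return frames[:, 0], frames[:, 1]

def complex_layer(f_r, f_i, x_r, x_i):
    """Build a complex layer from two real layers f_r and f_i:
    (f_r(x_r) - f_i(x_i)) + j (f_r(x_i) + f_i(x_r))."""
    return f_r(x_r) - f_i(x_i), f_r(x_i) + f_i(x_r)

rng = np.random.default_rng(0)
x = rng.standard_normal((B, 2, L))
seq_r, seq_i = frame_ri(x)

# hypothetical last-time-step features and a complex linear layer producing 25 taps
feat_r, feat_i = rng.standard_normal((2, B, 512))
W_r, W_i = 0.01 * rng.standard_normal((2, 512, 25))
taps_r, taps_i = complex_layer(lambda v: v @ W_r, lambda v: v @ W_i, feat_r, feat_i)
print(seq_r.shape, taps_r.shape)  # (4, 100, 160) (4, 25)
```

For linear layers the rule reproduces ordinary complex matrix multiplication exactly, which is why the same pair of real weight matrices can represent one complex weight matrix.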

3.3. CFCN Configurations

The CFCN model is responsible for processing single-channel features after beamforming and estimating the final clean speech. The CFCN configuration is presented in

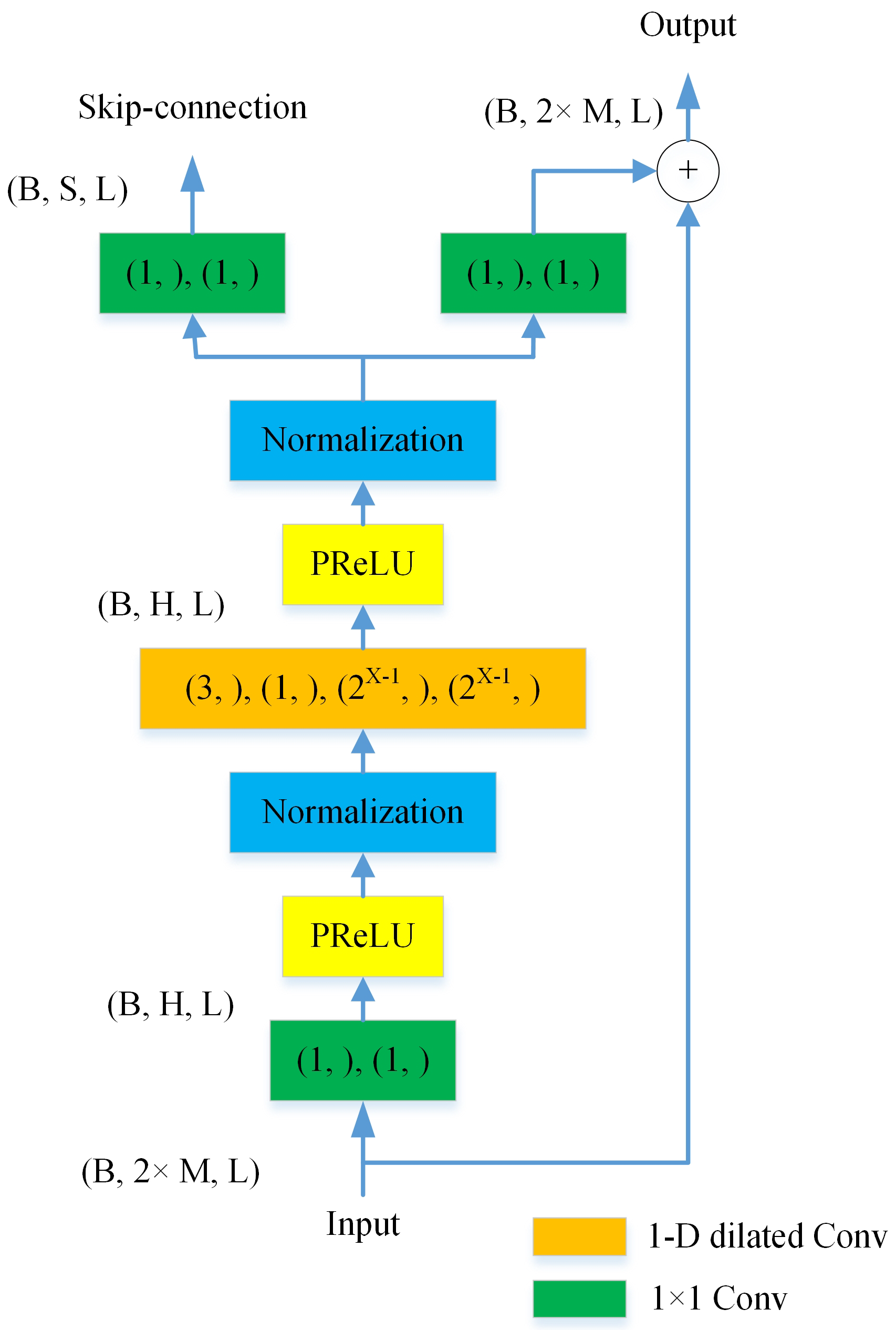

Table 2. The model consists of eight 1D dilated Convs forming a block, and this process is repeated three times; the detailed structure of 1D Conv is provided in

Figure 6. Dilated convolution is known for modeling long-term temporal dependencies through large receptive fields. The use of complex operations can convert all Convs into complex ones, but this process does not substantially improve noise reduction performance and may increase computational complexity. A suitable number of complex Convs for balancing performance and complexity is, therefore, crucial.
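The receptive field of such a stack grows with the sum of the dilations: for stride-1 layers it equals 1 + Σ (k − 1)·d. The kernel size (3) and the per-block dilations (1, 2, 4, ..., 128) below are assumptions in the style of Conv-TasNet, since the text above does not state them; the impulse test confirms the formula by measuring how far a single input sample spreads through the stack.

```python
import numpy as np

def receptive_field(kernel_size, dilations):
    """Receptive field, in input time steps, of stacked stride-1 dilated 1D convolutions."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)

def dilated_conv1d(x, kernel, dilation):
    """Full-mode dilated convolution: insert dilation - 1 zeros between kernel taps."""
    holed = np.zeros((len(kernel) - 1) * dilation + 1)
    holed[::dilation] = kernel
    return np.convolve(x, holed)

KERNEL = 3                          # assumed kernel size
block = [2 ** i for i in range(8)]  # eight dilated Convs: 1, 2, ..., 128 (assumed)
dilations = block * 3               # the block is repeated three times
rf = receptive_field(KERNEL, dilations)

# an impulse pushed through the stack spreads over exactly rf samples
y = np.array([1.0])
for d in dilations:
    y = dilated_conv1d(y, np.ones(KERNEL), d)
print(rf, len(y))  # 1531 1531
```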

In Table 3, we provide SI-SDR results for different numbers of complex Convs in the CFCN, which show that using more complex Convs does not always improve performance. We therefore converted the last 1D dilated Conv of each repetition into a complex one, as marked by the gray rectangle in Figure 3, and adopted this configuration for the CFCN.

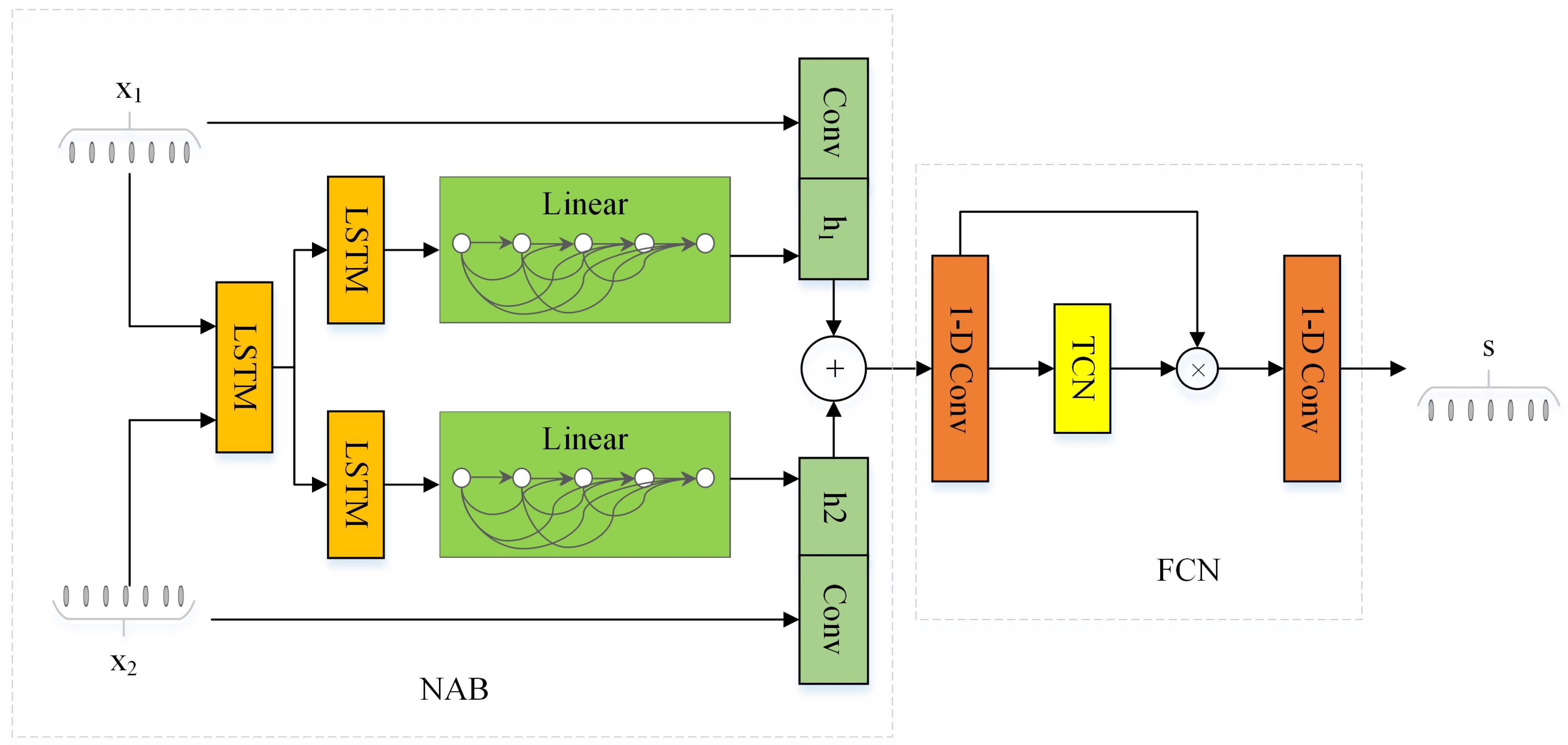

3.4. Baseline NABFCN System

We compared our network with a baseline system, NABFCN, to demonstrate the potential of the complex network. NABFCN is an end-to-end system whose structure is similar to that of CNABCFCN, except that it accepts real-valued inputs. All layers of NABFCN are conventional real-valued LSTMs and convolutions, and the framework of NABFCN is illustrated in Figure 7. The input noisy speech segment has the same length as that of the CNAB, L = 16,000 samples. The NAB model is also composed of three LSTM layers and two linear layers, whose input and output parameters are consistent with those of the CNAB and are summarized in Table 4. To be consistent with the length of the filter, the size of the linear layer was chosen as

.

The linear layer of the NAB produces beamforming filter coefficients, which are convolved with each 160-sample time-domain frame to generate the NAB output. The FCN model used in NABFCN has a structure similar to that of the CFCN but contains no complex 1D Convs. Because the output of NABFCN is a real signal, its loss function uses only real-valued information to calculate the loss value as follows:

where

and

denote clean speech and enhanced speech, respectively.
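The frame-level filtering that produces the NAB output can be sketched as follows, using the 160-sample frames and 25-tap filters from Section 3.2. The overlap-add of each frame's full convolution output, the per-channel filter layout, and the function name are assumptions. When the filters are identical in every frame, the result reduces to ordinary time-invariant filter-and-sum, which serves as a sanity check; when they differ per frame, as when a network predicts them, the filtering becomes time-varying.

```python
import numpy as np

FRAME, TAPS = 160, 25

def framewise_filter_and_sum(x, filters):
    """x: (channels, samples); filters: (n_frames, channels, TAPS), one set per frame.
    Each frame of each channel is convolved with that frame's filter, the channels are
    summed, and the per-frame outputs are overlap-added (assumed reconstruction scheme)."""
    n_ch, n_samples = x.shape
    n_frames = n_samples // FRAME
    out = np.zeros(n_frames * FRAME + TAPS - 1)
    for t in range(n_frames):
        seg = x[:, t * FRAME : (t + 1) * FRAME]
        y = sum(np.convolve(seg[c], filters[t, c]) for c in range(n_ch))
        out[t * FRAME : t * FRAME + FRAME + TAPS - 1] += y
    return out[:n_samples]

rng = np.random.default_rng(0)
x = rng.standard_normal((2, 16000))  # one 1 s two-channel segment (random stand-in)

# sanity check: the same filters in all 100 frames equal plain filter-and-sum
fixed = rng.standard_normal((2, TAPS))
y_fixed = framewise_filter_and_sum(x, np.broadcast_to(fixed, (100, 2, TAPS)))
reference = sum(np.convolve(x[c], fixed[c]) for c in range(2))[:16000]

# frame-varying filters, as an adaptive beamformer would predict them (random here)
y_adaptive = framewise_filter_and_sum(x, rng.standard_normal((100, 2, TAPS)))
```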

To evaluate the benefits of the complex time-domain features and complex neural networks developed in this work, we kept the network parameters of NABFCN and CNABCFCN consistent.