Multiclass Apple Varieties Classification Using Machine Learning with Histogram of Oriented Gradient and Color Moments

Abstract

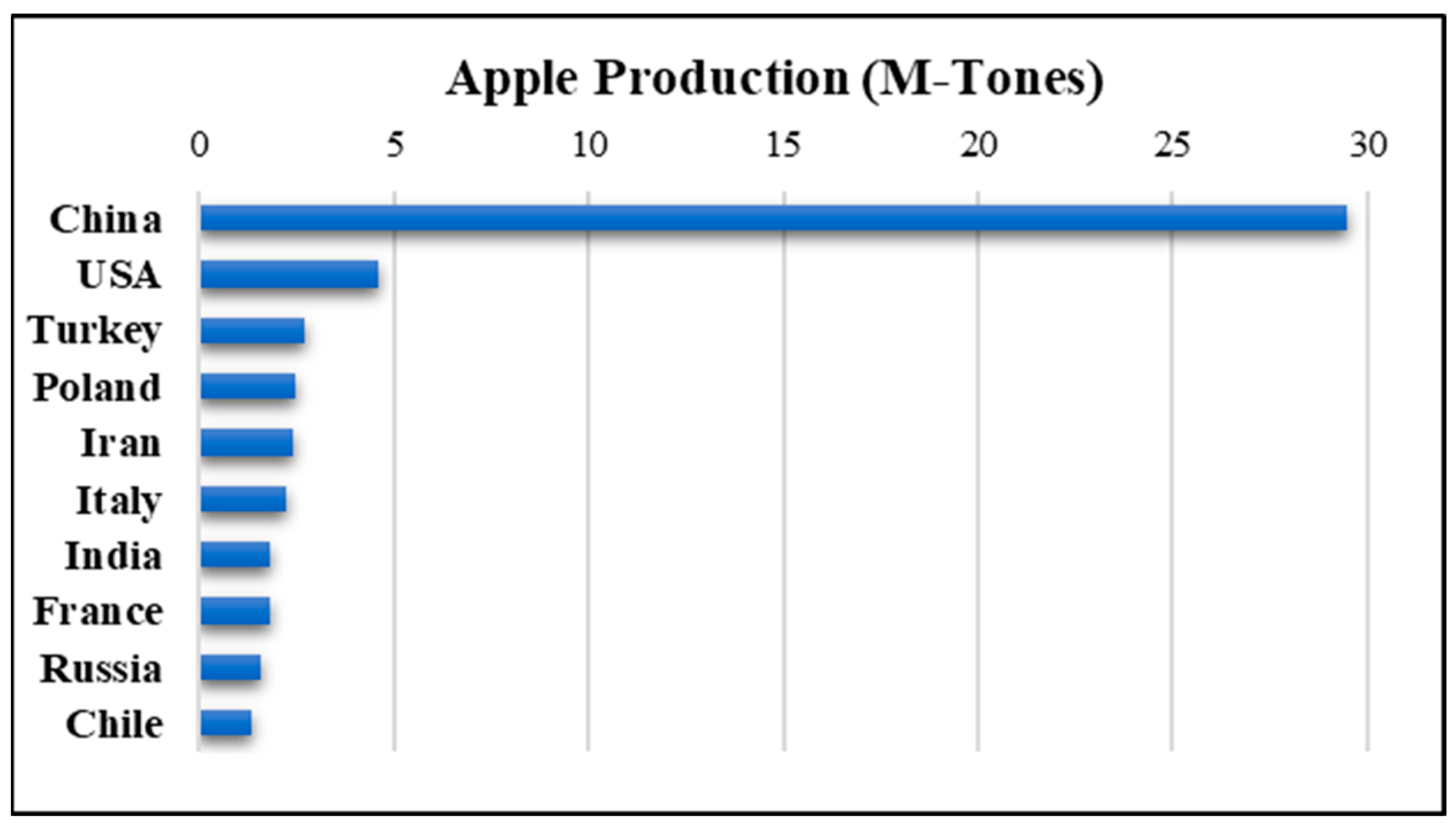

1. Introduction

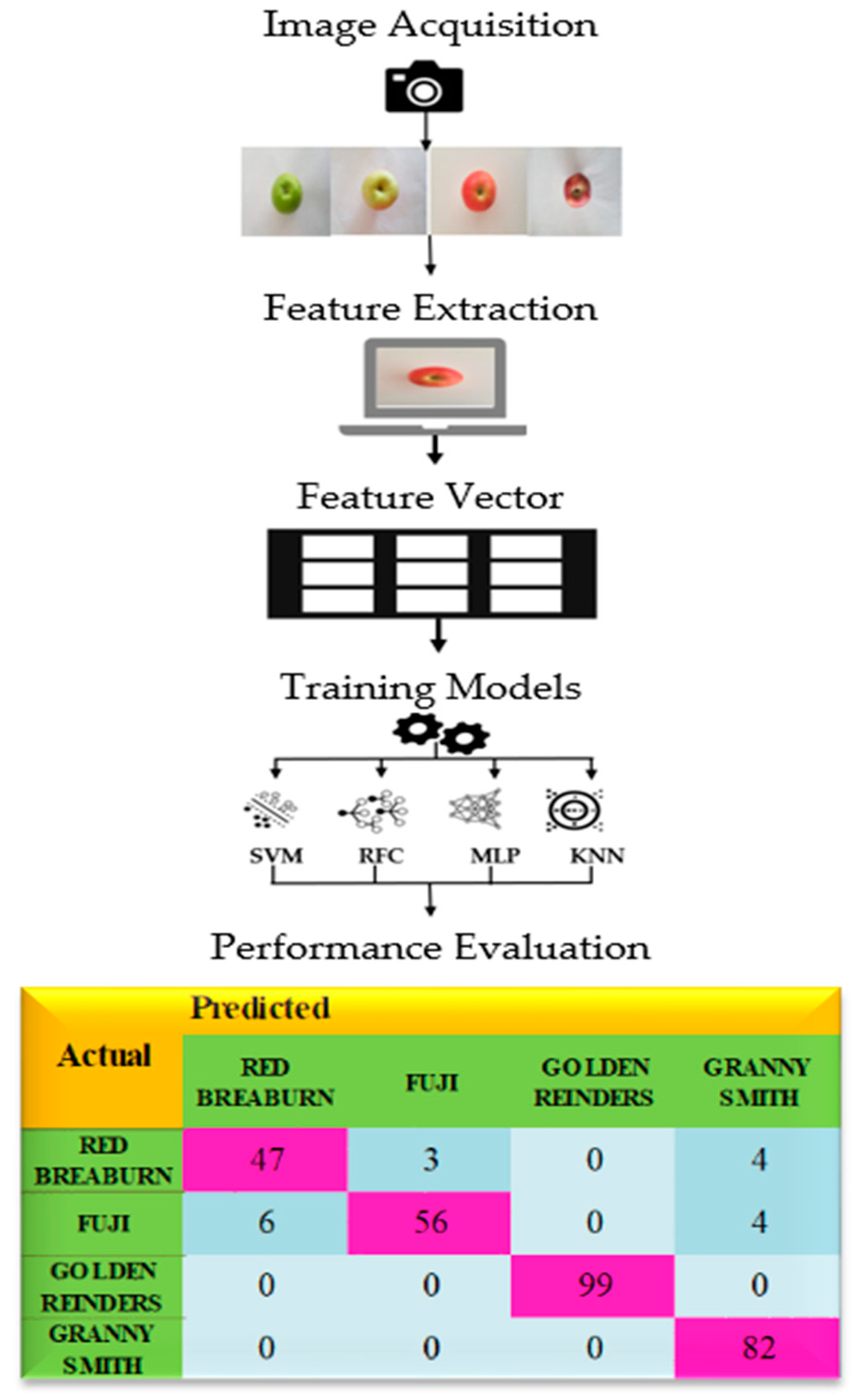

2. Materials and Methods

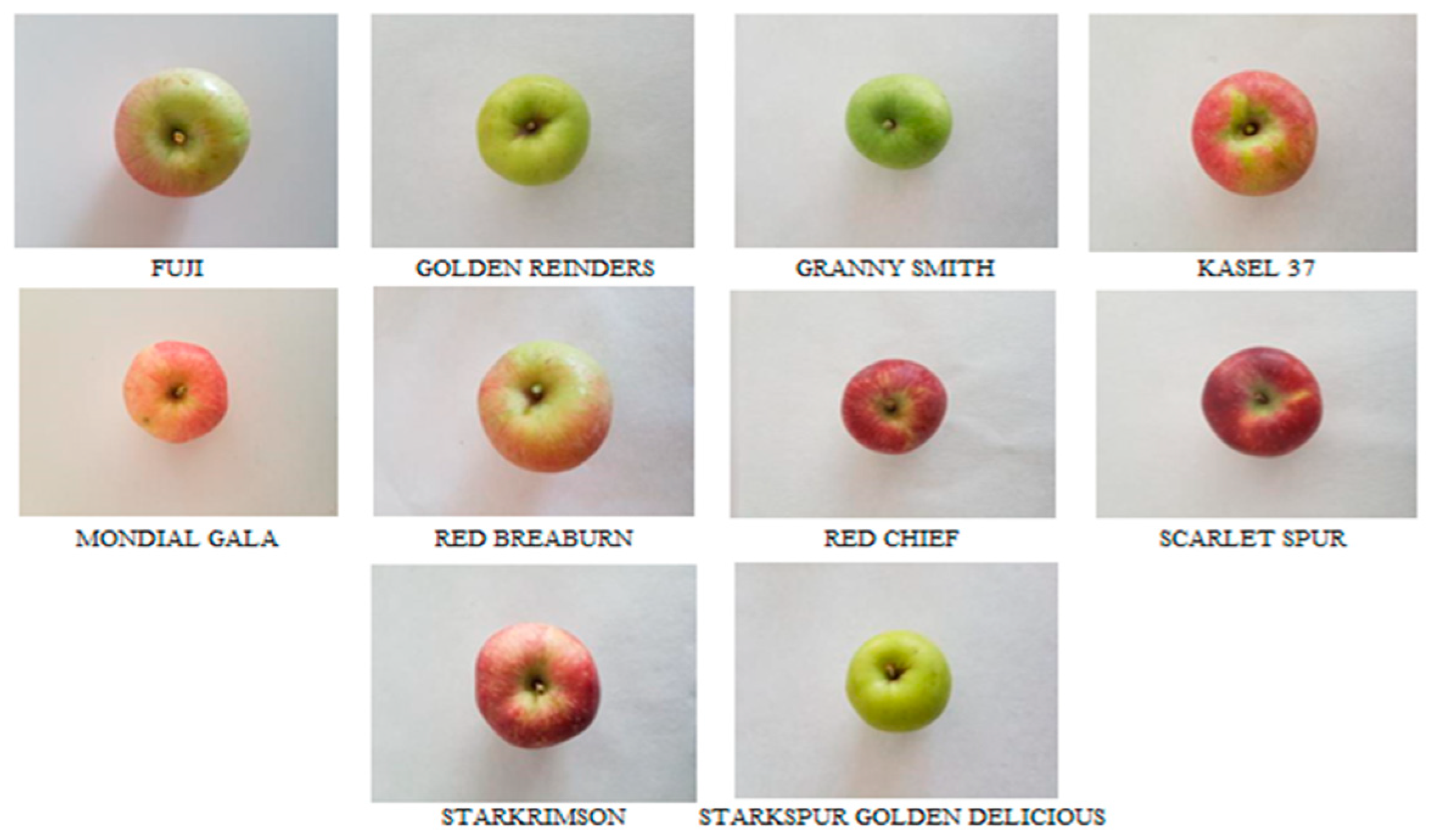

2.1. Image Acquisition

2.2. Image Features Extraction

2.2.1. Histogram of Oriented Gradient HOG

2.2.2. Color Moments

2.3. Features Used for Training

2.4. Machine Learning Classification Models

2.5. Performance Measures

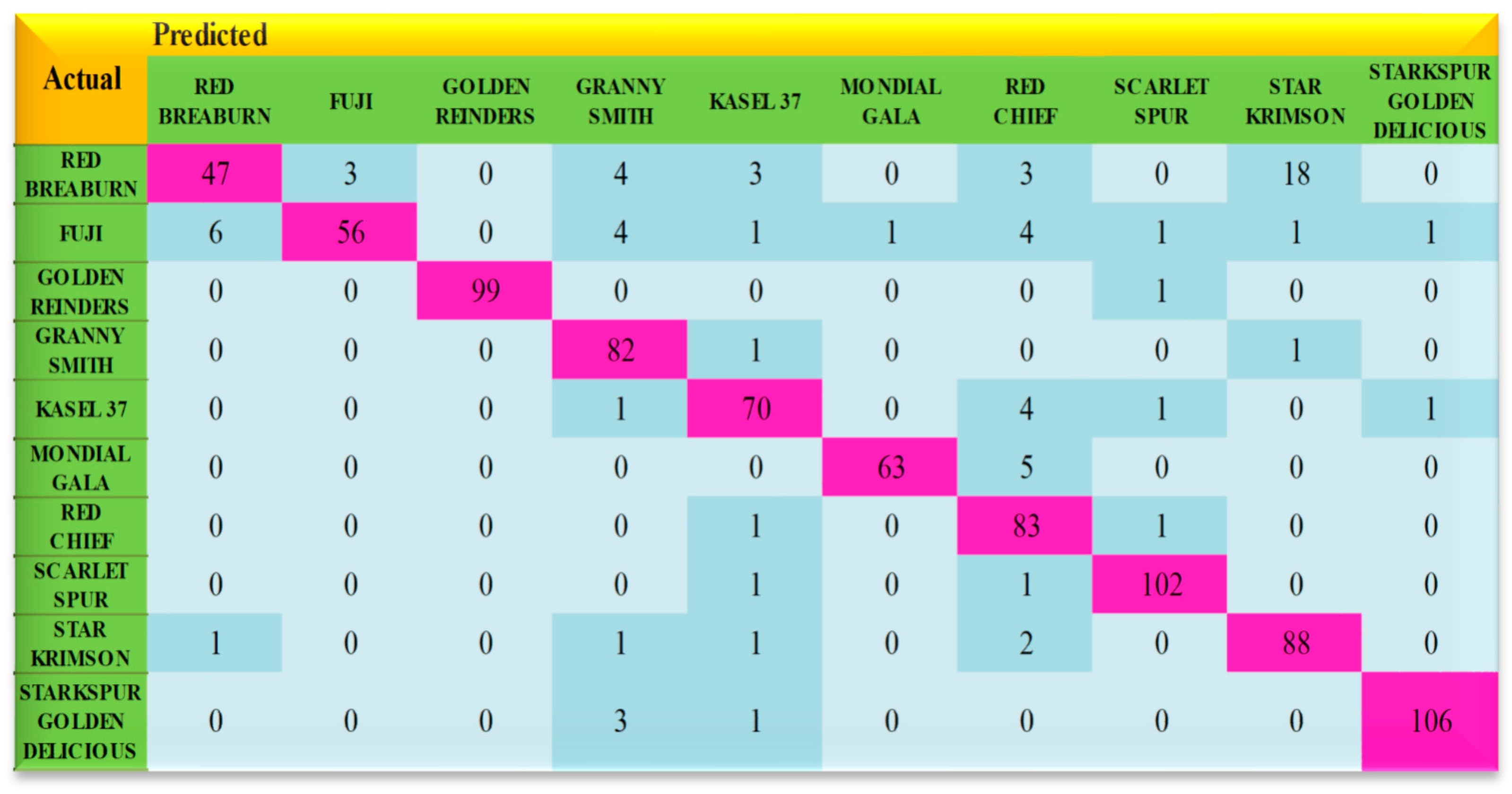

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Özbek, S. Special Fruiting; No: 128, 1978 Textbook: 11; Ç.Ü. Faculty of Agriculture Publications: Adana, Turkey, 1978. (In Turkish) [Google Scholar]

- Wang, N.; Joost, W.; Zhang, F.S. Towards sustainable intensification of apple production in China-Yield gaps and nutrient use efficiency in apple farming systems. J. Integr. Agric. 2016, 15, 716–725. [Google Scholar] [CrossRef]

- Tijero, V.; Girardi, F.; Botton, A. Fruit Development and Primary Metabolism in Apple. Agronomy 2016, 11, 1160. [Google Scholar] [CrossRef]

- Chen, X.; Feng, T.; Zhang, Y.; He, T.; Feng, J.; Zhang, C. Genetic Diversity of Volatile Components in Xinjiang Wild Apple (Malus sieversii). J. Genet. Genom. 2007, 34, 171–179. [Google Scholar] [CrossRef] [PubMed]

- Popa, L.; Ciupercă, R.; Nedelcu, A.; Voicu, E.; Ştefan, V.; Petcu, A. Researches regarding apples sorting process by their size. INMATEH-Agric. Eng. 2014, 43–42, 97–102. [Google Scholar]

- Atay, A.N.; Atay, E. Innovational trends in apple breeding and cultivar management. Yüzüncü Yil. Univ. J. Agric. Sci. 2018, 28, 234–240. [Google Scholar]

- Bayav, A.; Konak, K.; Karamürsel, D.; Öztürk, F.P. Potential of Apple Production, Marketing and Export in Turkey. GAP IV. Agric. Congr. 2005, 1, 427–437. [Google Scholar]

- İşçi, M. Determination of Susceptibility Levels of Some Common Insecticides against Codling Moth (Cydia pomonella (L) Lep.: Tortricidae) Using in Apple Orchards of Isparta. Ph.D. Thesis, Süleyman Demirel University Graduate School of Applied and Natural Sciences Department of Plant Protection, Isparta, Türkiye, 2014. [Google Scholar]

- FAOSTAT. Food and Agriculture Organization of the United Nations. Available online: http://www.fao.org/faostat/en/#data/QC (accessed on 23 February 2021).

- Veringă, D.; Vintilă, M.; Popa, L.; Ştefan, V.; Petcu, A.S. Determination of the relaxation time at static compression of Idared apples variety. INMATEH-Agric. Eng. J. 2015, 47, 75–80. [Google Scholar]

- Lampridi, M.G.; Sørensen, C.G.; Bochtis, D. Agricultural sustainability: A review of concepts and methods. Sustainability 2019, 11, 5120. [Google Scholar] [CrossRef]

- Veringă, D.; Vintilă, M.; Popa, L.; Ştefan, V.; Petcu, A.S. Determination of the relaxation period at static compression of golden delicios apples variety. INMATEH-Agric. Eng. J. 2016, 48, 61–66. [Google Scholar]

- Sabanci, K.; Ünlerşen, M.F. Different apple varieties classification using KNN and MLP algorithms. Int. J. Intell. Syst. Appl. Eng. 2016, 8, 17–20. [Google Scholar] [CrossRef]

- Ronald, M.; Evans, M. Classification of selected apple fruit varieties using Naive Bayes. Indian J. Comput. Sci. Eng. (IJCSE) 2016, 7, 13–19. [Google Scholar]

- Bhargava, A.; Bansal, A. Classification and Grading of Multiple Varieties of Apple Fruit. Food Anal. Methods 2021, 14, 1359–1368. [Google Scholar] [CrossRef]

- Huang, Y.; Yang, Y.; Sun, Y.; Zhou, H.; Chen, K. Identification of Apple Varieties Using a Multichannel Hyperspectral Imaging System. Sensors 2020, 20, 5120. [Google Scholar] [CrossRef] [PubMed]

- Li, J.; Xie, S.; Chen, Z.; Liu, H.; Kang, J.; Fan, Z.; Li, W. A Shallow Convolutional Neural Network for Apple Classification. IEEE Access 2020, 8, 111683–111692. [Google Scholar] [CrossRef]

- De Goma, J.C.; Quilas, C.A.M.; Valerio, M.A.B.; Young, J.J.P.; Sauli, Z. Fruit recognition using surface and geometric information. J. Telecommun. Electron. Comput. Eng. (JTEC) 2018, 10, 39–42. [Google Scholar]

- Patel, C.C.; Chaudhari, V.K. Comparative Analysis of Fruit Categorization Using Different Classifiers. In Advanced Engineering Optimization through Intelligent Techniques; Springer: Berlin/Heidelberg, Germany, 2019; pp. 153–164. [Google Scholar]

- Nosseir, A.; Ahmed, S.E.A. Automatic Classification for Fruits’ Types and Identification of Rotten Ones using KNN and SVM. Int. J. Online Biomed. Eng. 2019, 15, 47. [Google Scholar] [CrossRef]

- Jana, S.; Parekh, R. Shape-based fruit recognition and classification. In Proceedings of the Computational Intelligence, Communications, and Business Analytics: First International Conference—CICBA 2017, Kolkata, India, 24–25 March 2017; Revised Selected Papers, Part II. Springer: Singapore; pp. 184–196. [Google Scholar]

- Macanha, P.A.; Eler, D.M.; Garcia, R.E.; Junior, W.E. Handwritten feature descriptor methods applied to fruit classification. In Advances in Intelligent Systems and Computing; Information Technology—New Generations; Latifi, S., Ed.; Springer: Cham, Switzerland, 2018; Volume 558, pp. 699–705. [Google Scholar]

- Ghazal, S.; Qureshi, W.; Khan, U.; Iqbal, J.; Rashid, N.; Tiwana, M. Analysis of visual features and classifiers for fruit classification problem. Comput. Electron. Agric. 2021, 187, 106267. [Google Scholar] [CrossRef]

- Kumari, R.S.S.; Gomathy, V. Fruit Classification using Statistical Features in SVM Classifier. In Proceedings of the 4th International Conference on Electrical Energy Systems (ICEES), Chennai, India, 7–9 February 2018; pp. 526–529. [Google Scholar] [CrossRef]

- Kumar, G.; Bhatia, P.K. A detailed review of feature extraction in image processing systems. In Proceedings of the Fourth International Conference on Advanced Computing & Communication Technologies, Washington, DC, USA, 8–9 February 2014; pp. 5–12. [Google Scholar]

- Jiang, X. Feature extraction for image recognition and computer vision. In Proceedings of the 2nd IEEE International Conference on Computer Science and Information Technology, Beijing, China, 8–11 August 2009; pp. 1–15. [Google Scholar] [CrossRef]

- Liang, H.; Sun, X.; Sun, Y.; Gao, Y. Text feature extraction based on deep learning: A review. EURASIP J. Wirel. Commun. Netw. 2017, 2017, 211. [Google Scholar] [CrossRef]

- Mutlag, W.K.; Ali, S.K.; Aydam, Z.M.; Taher, B.H. Feature extraction methods: A review. J. Phys. Conf. Ser. 2020, 1591, 012028. [Google Scholar] [CrossRef]

- Calzada-Ledesma, V.; Puga-Soberanes, H.J.; Rojas-Domínguez, A.; Ornelas-Rodriguez, M.; Carpio, M.; Gómez, C.G. A comparison of image texture descriptors for pattern classification. In Fuzzy Logic Augmentation of Neural and Optimization Algorithms: Theoretical Aspects and Real Applications, Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2018; Volume 749, pp. 291–303. [Google Scholar]

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), San Diego, CA, USA, 20–25 June 2005; IEEE Computer Society Press: Piscataway, NJ, USA, 2005; pp. 886–889. [Google Scholar]

- Kodituwakku, S.R.; Selvarajah, S. Comparison of color features for image retrieval. Indian J. Comput. Sci. Eng. 2011, 1, 207–211. [Google Scholar]

- Wang, Z.; Zhuang, Z.; Liu, Y.; Ding, F.; Tang, M. Color classification and texture recognition system of solid wood panels. Forests 2021, 12, 1154. [Google Scholar] [CrossRef]

- Liakos, K.G.; Busato, P.; Moshou, D.; Pearson, S.; Bochtis, D. Machine Learning in Agriculture: A Review. Sensors 2018, 18, 2674. [Google Scholar] [CrossRef] [PubMed]

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Han, J.; Kamber, M.; Pei, J. Data Mining Concepts and Techniques, 3rd ed.; Morgan Kaufmann Publishers: Burlington, MA, USA, 2012. [Google Scholar]

- Kujawa, S.; Niedbała, G. Artificial Neural Networks in Agriculture. Agriculture 2021, 11, 497. [Google Scholar] [CrossRef]

- Zeng, X.; Martinez, T.R. Distribution-balanced stratified cross-validation for accuracy estimation. J. Exp. Theor. Artif. Intell. 2000, 12, 1–12. [Google Scholar] [CrossRef]

- Chicco, D.; Jurman, G. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genom. 2020, 21, 6. [Google Scholar] [CrossRef]

- Rajasekar, L.; Sharmila, D. Performance analysis of soft computing techniques for the automatic classification of fruits dataset. Soft Comput. 2019, 23, 2773–2788. [Google Scholar] [CrossRef]

- Adige, S.; Kurban, R.; Durmuş, A.; Uslu, V.V. Classification of apple images using support vector machines and deep residual networks. Neural Comput. Appl. 2023, 35, 12073–12087. [Google Scholar] [CrossRef]

- Bhatt, A.K.; Pant, D. Automatic apple grading model development based on back propagation neural network and machine vision, and its performance evaluation. AI Soc. 2015, 30, 45–56. [Google Scholar] [CrossRef]

- Sofu, M.; Er, O.; Kayacan, M.; Cetişli, B. Design of an automatic apple sorting system using machine vision. Comput. Electron. Agric. 2016, 127, 395–405. [Google Scholar] [CrossRef]

| Class Data | Total Images | Training | Validation | Test |

|---|---|---|---|---|

| Red Braeburn | 579 | 405 | 87 | 87 |

| Fuji | 477 | 335 | 70 | 72 |

| Golden Reinders | 621 | 435 | 93 | 93 |

| Granny Smith | 590 | 413 | 89 | 88 |

| Kasel 37 | 525 | 368 | 78 | 79 |

| Mondial Gala | 477 | 334 | 71 | 72 |

| Red Chief | 612 | 429 | 91 | 92 |

| Scarlet Spur | 640 | 448 | 96 | 96 |

| Starkrimson | 618 | 433 | 92 | 93 |

| Starkspur Golden Delicious | 669 | 468 | 100 | 101 |

| Model | Performance Metrics | ||||||||

|---|---|---|---|---|---|---|---|---|---|

| Accuracy | Precision | Recall | Specificity | F1-Score | AUC-ROC | AUC-PR | Cohen’s Kappa | MCC | |

| SVM | 98.17 | 98.2 | 97.97 | 97.3 | 98.07 | 99.85 | 98.89 | 97.96 | 97.96 |

| RFC | 96.67 | 96.62 | 96.35 | 97.14 | 96.46 | 99.94 | 99.42 | 96.29 | 96.3 |

| MLP | 98.62 | 98.59 | 98.41 | 98.67 | 98.48 | 99.99 | 99.9 | 98.47 | 98.47 |

| KNN | 91.28 | 91.42 | 90.4 | 94 | 90.38 | 99.06 | 95.55 | 90.29 | 90.38 |

| Task | Models and Accuracy | References |

|---|---|---|

| Recognize fruits and vegetables | KNN, 97.5% | [39] |

| Classify six apple varieties | SVM, 96% | [40] |

| Apple grading | ANN, 96% | [41] |

| Automatic apple sorting | Decision Tree, 73–96% | [42] |

| Classify six apple varieties | SVM, 95.27% | [15] |

| Classify seven fruit types | Naive Bayes, 95% | [21] |

| Classify 15 fruit types | MLP, 97% | [22] |

| Classify fruit types | NN, 99–100% | [23] |

| Classify ten fruit types | SVM, 95.3% | [24] |

| Classify six fruit types | SVM, 91.67% | [19] |

| Identify fruit types | KNN, 96% | [20] |

| Detect rotten fruits | SVM, 98% | [20] |

| Classify ten apple varieties | MLP, 98.62% | Proposed Method |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Taner, A.; Mengstu, M.T.; Selvi, K.Ç.; Duran, H.; Kabaş, Ö.; Gür, İ.; Karaköse, T.; Gheorghiță, N.-E. Multiclass Apple Varieties Classification Using Machine Learning with Histogram of Oriented Gradient and Color Moments. Appl. Sci. 2023, 13, 7682. https://doi.org/10.3390/app13137682

Taner A, Mengstu MT, Selvi KÇ, Duran H, Kabaş Ö, Gür İ, Karaköse T, Gheorghiță N-E. Multiclass Apple Varieties Classification Using Machine Learning with Histogram of Oriented Gradient and Color Moments. Applied Sciences. 2023; 13(13):7682. https://doi.org/10.3390/app13137682

Chicago/Turabian StyleTaner, Alper, Mahtem Teweldemedhin Mengstu, Kemal Çağatay Selvi, Hüseyin Duran, Önder Kabaş, İbrahim Gür, Tuğba Karaköse, and Neluș-Evelin Gheorghiță. 2023. "Multiclass Apple Varieties Classification Using Machine Learning with Histogram of Oriented Gradient and Color Moments" Applied Sciences 13, no. 13: 7682. https://doi.org/10.3390/app13137682

APA StyleTaner, A., Mengstu, M. T., Selvi, K. Ç., Duran, H., Kabaş, Ö., Gür, İ., Karaköse, T., & Gheorghiță, N.-E. (2023). Multiclass Apple Varieties Classification Using Machine Learning with Histogram of Oriented Gradient and Color Moments. Applied Sciences, 13(13), 7682. https://doi.org/10.3390/app13137682