LTAnomaly: A Transformer Variant for Syslog Anomaly Detection Based on Multi-Scale Representation and Long Sequence Capture

Abstract

1. Introduction

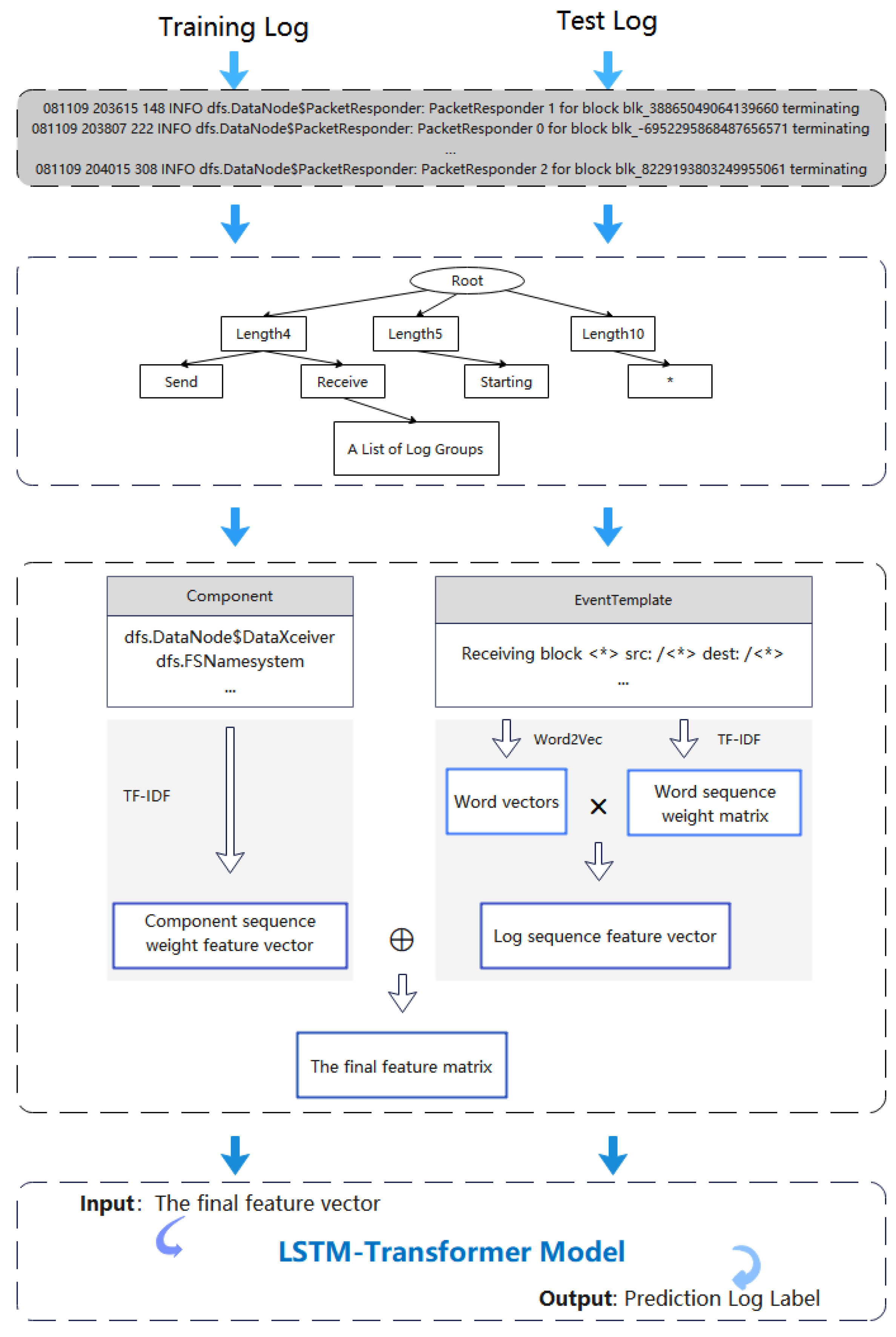

- This paper presents a novel log representation that incorporates component values. It captures both the component-value information of each log and the semantic information of the log sequence, and it models the potential relationships among these features. This improves the utilization of log information and the accuracy of anomaly detection.

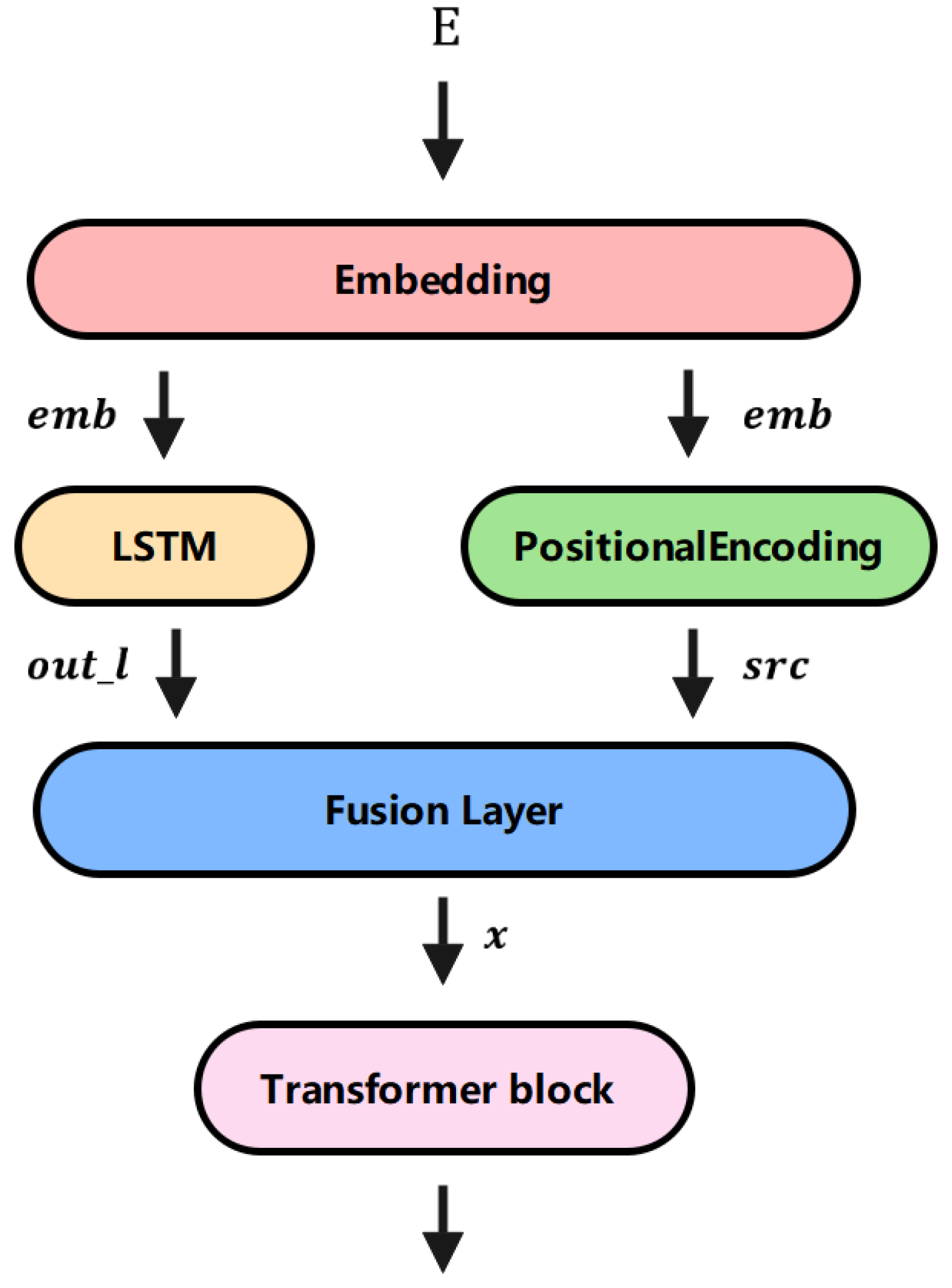

- This paper proposes LTAnomaly, a log anomaly detection model based on LSTM and Transformer. LTAnomaly models the temporal dimension with an LSTM and captures global contextual information with the Transformer's attention mechanism. As the model processes the sequence step by step, each step is also informed by global information, which strengthens the modeling of dependencies among features. This makes the method effective for log anomaly detection.

- We compare LTAnomaly with six baseline models on the public HDFS and BGL log datasets and conduct two groups of ablation experiments. The experimental results show that our model achieves the highest F1-measure among the compared log anomaly detection methods on both datasets and takes less time than the Transformer-based HitAnomaly.
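The contribution above pairs sequential LSTM processing with Transformer attention so that each step is also informed by global context. This section does not give the exact layer arrangement, so the following is only a minimal sketch of the attention part. It assumes the hidden states have already been produced by an LSTM and that queries, keys, and values are the hidden states themselves; a real Transformer layer would add learned projections, multiple heads, and feed-forward sublayers. The names `softmax` and `self_attention` and the sample states are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    # Subtract the maximum score before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(hidden: List[Vector]) -> Tuple[List[Vector], List[List[float]]]:
    """Scaled dot-product self-attention with Q = K = V = hidden (no learned projections).

    Returns the context vectors and the attention weight matrix.
    """
    d = len(hidden[0])
    outputs: List[Vector] = []
    weights: List[List[float]] = []
    for q in hidden:
        # Similarity of this step's query to every step's key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in hidden]
        w = softmax(scores)
        weights.append(w)
        # Context vector: attention-weighted average over the whole sequence.
        outputs.append([sum(w[j] * hidden[j][i] for j in range(len(hidden)))
                        for i in range(d)])
    return outputs, weights


# Hypothetical LSTM hidden states for a sequence of three log events.
lstm_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
context, attn = self_attention(lstm_states)
```

Each output is a softmax-weighted average of all hidden states, so a step processed early in the sequence can still be influenced by steps that come later.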

2. Related Work

2.1. Log Parsing and Preprocessing

2.2. Anomaly Detection

3. Proposed Models

3.1. Overview

3.2. Log Parsing and Preprocessing

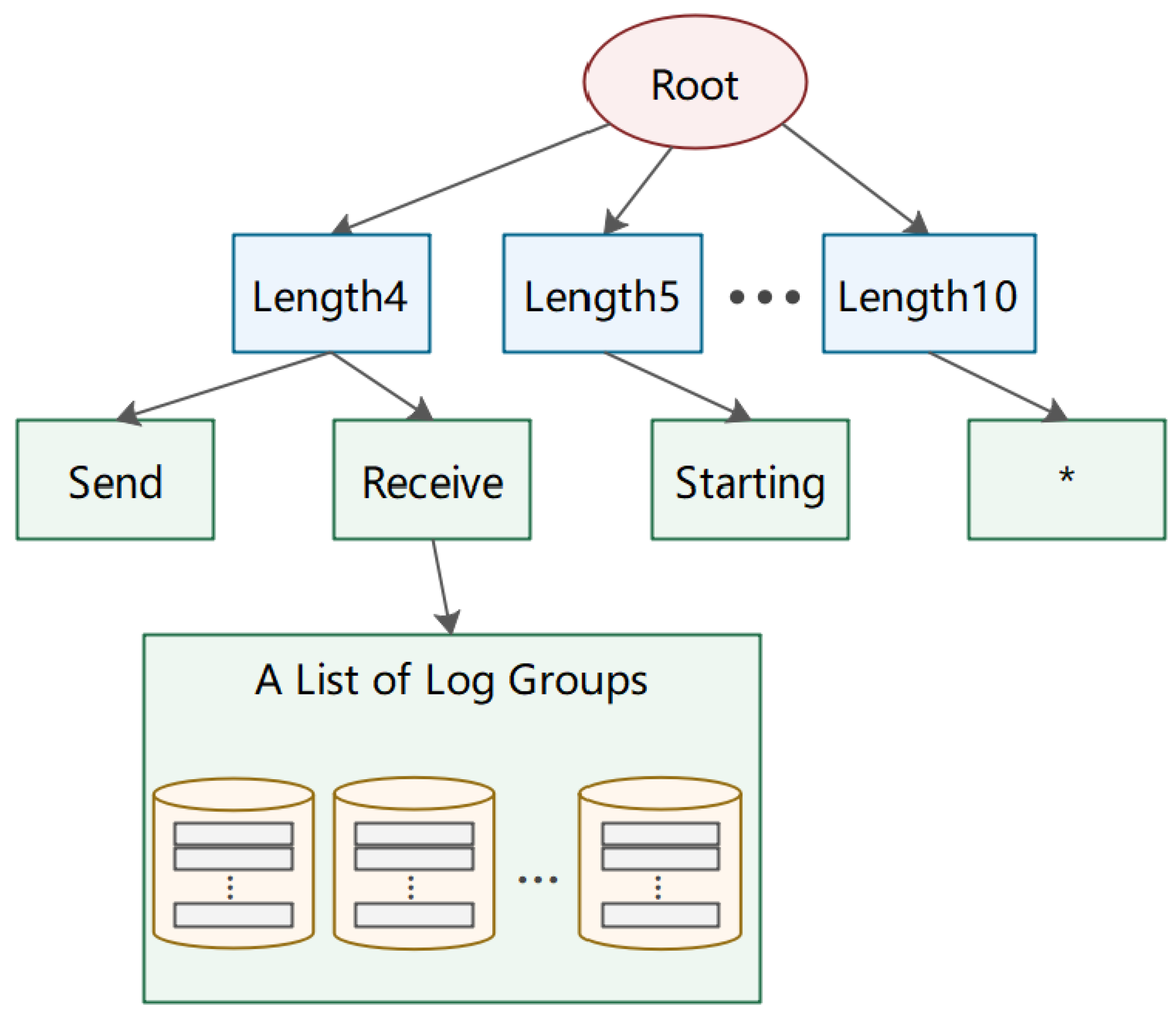

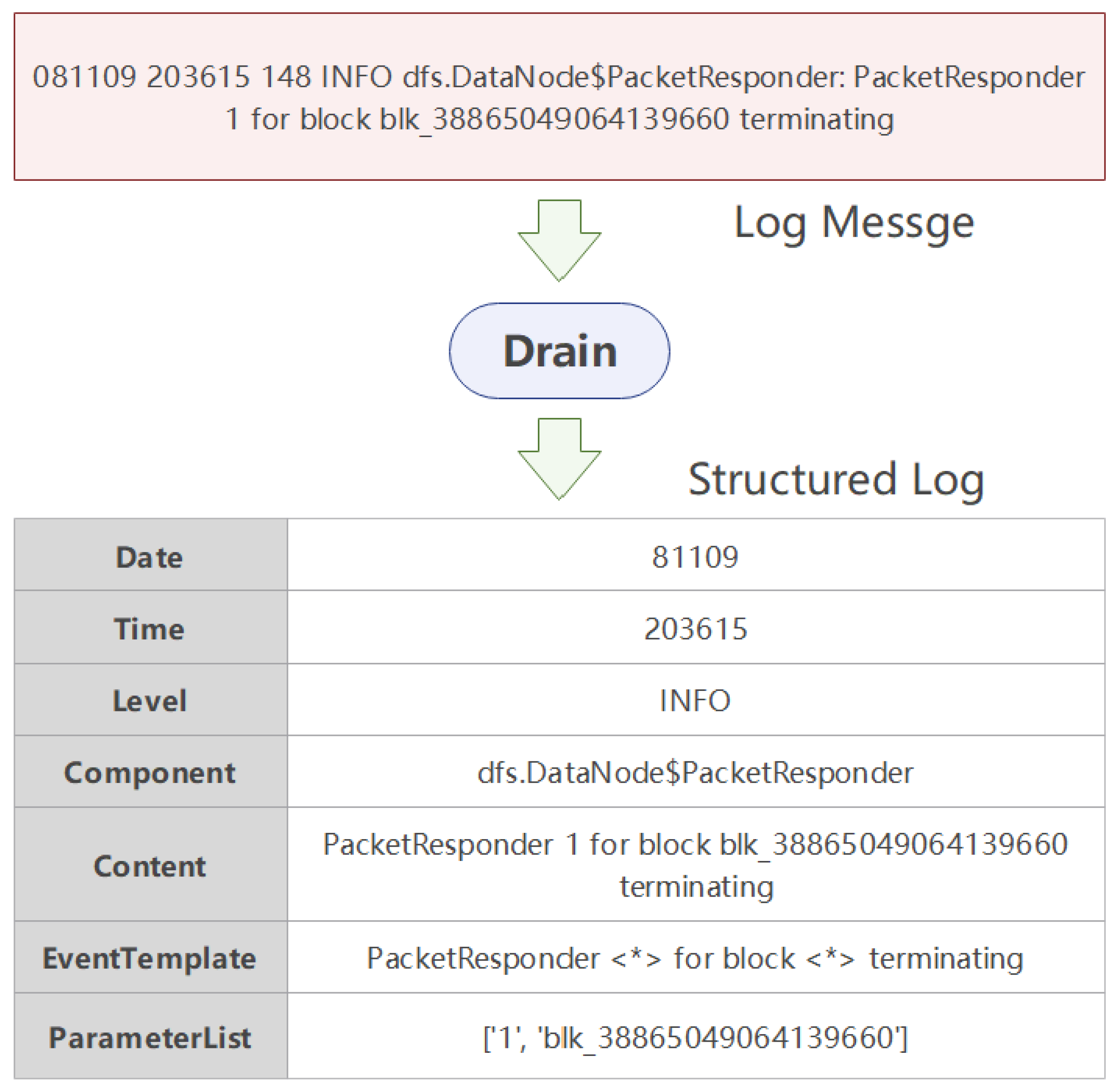

3.2.1. Log Parsing

3.2.2. Log Sequence Feature Matrix Generation

- Log sequence vector: Each dataset has its own session identifier; in the HDFS dataset, the block_id identifies a specific sequence of operations. Log events are grouped according to these identifiers, so that log entries generated by concurrent processes are separated into distinct single-threaded sequences. Each row in Figure 4 is a log execution sequence, and each number (ID) in the sequence denotes a log event. All logs in a given execution sequence share the same block_id, and logs with identical block_ids are arranged in order to form a log sequence vector. This grouping makes it possible to detect anomalies in which the log execution order is incorrect.
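The grouping step can be sketched in Python as follows. This is a minimal illustration, not the authors' code: it assumes the logs have already been parsed into (message, event ID) pairs in timestamp order and that HDFS block identifiers match the pattern `blk_-?\d+`. A message that mentions several blocks is appended to each block's sequence. The function name `build_sequences` and the sample event IDs are hypothetical.

```python
import re
from typing import Dict, List, Tuple

# HDFS block IDs look like "blk_-1608999687919862906" or "blk_7503483334202473044".
BLOCK_ID = re.compile(r"blk_-?\d+")


def build_sequences(parsed_logs: List[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group (message, event_id) pairs into one event-ID sequence per block_id.

    The input is assumed to be in timestamp order, so appending keeps each
    block's events in execution order. Dicts preserve insertion order (3.7+).
    """
    sequences: Dict[str, List[int]] = {}
    for message, event_id in parsed_logs:
        # dict.fromkeys de-duplicates a block mentioned twice in one message.
        for block in dict.fromkeys(BLOCK_ID.findall(message)):
            sequences.setdefault(block, []).append(event_id)
    return sequences


logs = [
    ("Receiving block blk_-1608999687919862906 src: /10.250.19.102:54106", 5),
    ("Receiving block blk_7503483334202473044 src: /10.250.10.6:40524", 5),
    ("PacketResponder 1 for block blk_-1608999687919862906 terminating", 9),
]
print(build_sequences(logs))
# {'blk_-1608999687919862906': [5, 9], 'blk_7503483334202473044': [5]}
```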

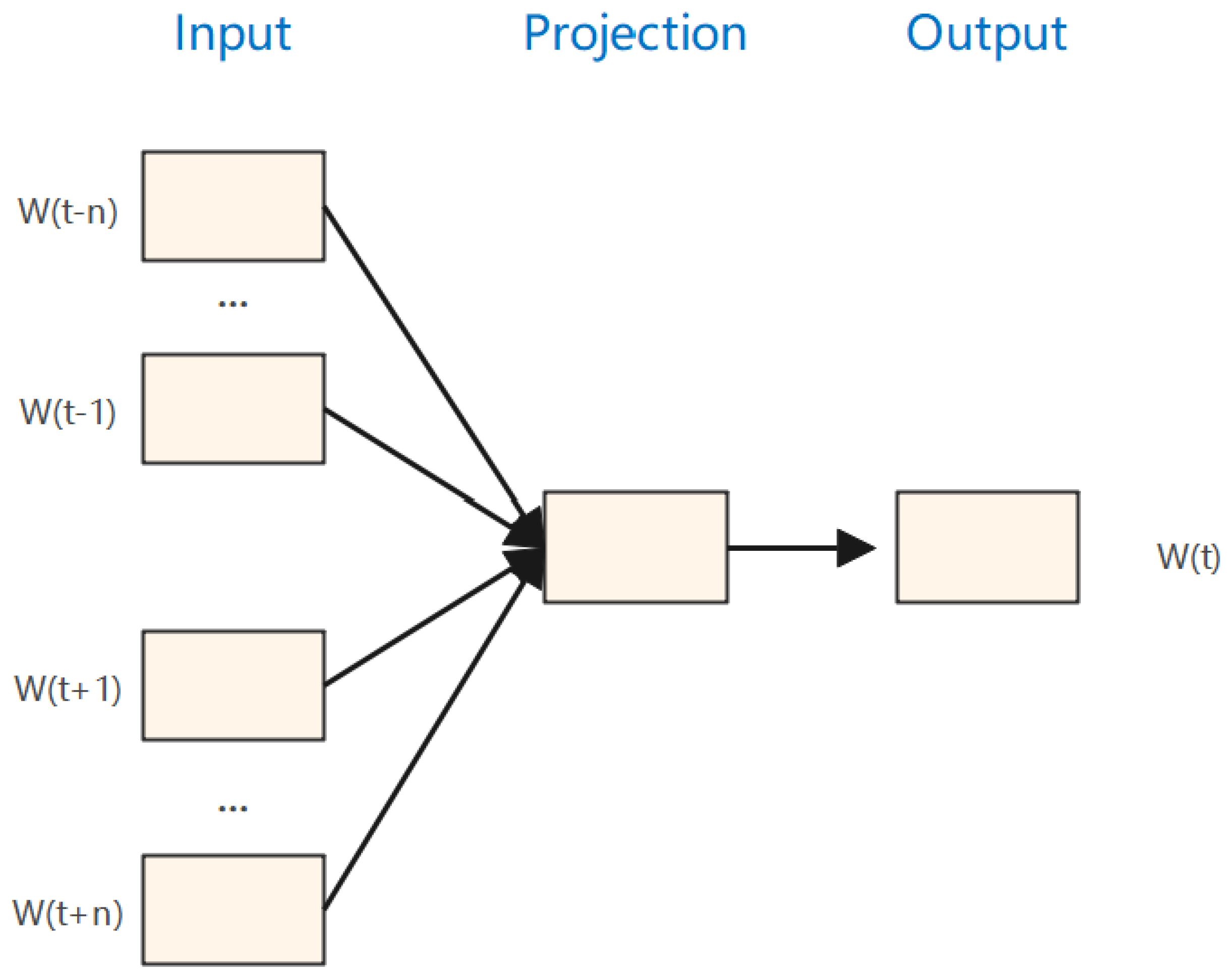

- Word vector: Word2Vec is an effective word-embedding method that takes each word's context into account during training, establishing a language model f(x) = y between a target word (x) and its context (y). In this way, the target word can be predicted from its context words, or vice versa. LTAnomaly uses Word2Vec to process the semantic information in log messages. Because Word2Vec takes words as input, the log template content must first be split into words, by spaces or other delimiters. For instance, “PacketResponder <*> for block <*> terminating” is divided into four words: “PacketResponder”, “for”, “block”, and “terminating” (the <*> parameter placeholders are discarded). Then, Word2Vec predicts target words from context words. As shown in Figure 5, the n words preceding and the n words following the target word are one-hot encoded. Next, a hidden layer and a softmax layer are used to predict the target word, yielding a word vector for each word. The word vectors of all words form a word vector matrix V, ordered by their appearance in the log sequence vector.

- Word sequence weight matrix: TF-IDF is a popular weighting technique used in both information retrieval and data mining, and it provides a fine-grained measure of the importance of words in a sentence. A word's weight grows with the number of times it occurs in a log sequence, but decreases if the word occurs frequently across all log sequences. For instance, if the word “Block” occurs repeatedly in a particular log event, it may describe that log event well. In contrast, if “Block” occurs in all log events, it cannot be used to distinguish among them, so its weight should be reduced. Therefore, we measure a word's significance within a log sequence by its term frequency (TF) and its prevalence across log sequences by its inverse document frequency (IDF). These are calculated as $\mathrm{TF}_w = \frac{n_w}{\sum_k n_k}$ and $\mathrm{IDF}_w = \log\frac{S}{S_w}$, where $n_w$ is the number of occurrences of word $w$, the sum $\sum_k n_k$ runs over all words in the log sequence, and $S$ and $S_w$ denote the total number of log sequences and the number of log sequences containing $w$, respectively. The TF-IDF weight of each word is computed as TF × IDF, and the TF-IDF weights of all log sequences constitute the word sequence weight matrix W.

- Log sequence feature matrix: To produce the log sequence feature matrix, we multiply the word vector matrix V by the word sequence weight matrix W to obtain the log sequence feature vector $E_w$, i.e., $E_w = V \times W$. As a result, the log sequence feature matrix captures not only anomalies in the log execution order but also the semantic information of the log messages, representing log messages more accurately than existing log representations.
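The Word2Vec step described in the word-vector item can be illustrated with an untrained continuous bag-of-words (CBOW) forward pass. This sketch assumes whitespace tokenization with the `<*>` placeholders dropped, a context window of n words on each side, and small random initial weights. It omits training by backpropagation, which a practical system would typically delegate to a library such as gensim's `Word2Vec` class (where `sg=0` selects CBOW). The helper names `tokenize_template`, `cbow_pairs`, and `TinyCBOW` are hypothetical.

```python
import math
import random
from typing import Dict, List, Tuple


def tokenize_template(template: str) -> List[str]:
    # Split on whitespace and drop the "<*>" parameter placeholders.
    return [tok for tok in template.split() if tok != "<*>"]


def cbow_pairs(tokens: List[str], n: int) -> List[Tuple[List[str], str]]:
    # For each target word, take up to n words before it and n words after it.
    pairs = []
    for i, target in enumerate(tokens):
        context = tokens[max(0, i - n):i] + tokens[i + 1:i + 1 + n]
        if context:
            pairs.append((context, target))
    return pairs


class TinyCBOW:
    """Untrained CBOW forward pass: one-hot context -> hidden layer -> softmax."""

    def __init__(self, vocab: List[str], dim: int, seed: int = 0):
        rng = random.Random(seed)
        vocab = list(dict.fromkeys(vocab))  # unique words, first-seen order
        self.index: Dict[str, int] = {w: i for i, w in enumerate(vocab)}
        # W_in (|vocab| x dim): after training, row i is the vector of vocab[i].
        self.w_in = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in vocab]
        # W_out (dim x |vocab|): projects the hidden layer onto the vocabulary.
        self.w_out = [[rng.uniform(-0.5, 0.5) for _ in vocab] for _ in range(dim)]

    def predict(self, context: List[str]) -> List[float]:
        dim = len(self.w_out)
        # A one-hot vector times W_in selects one row; CBOW averages those rows.
        hidden = [0.0] * dim
        for word in context:
            row = self.w_in[self.index[word]]
            hidden = [h + r / len(context) for h, r in zip(hidden, row)]
        scores = [sum(hidden[d] * self.w_out[d][j] for d in range(dim))
                  for j in range(len(self.index))]
        # Softmax over the vocabulary gives the probability of each target word.
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        return [e / total for e in exps]

    def vector(self, word: str) -> List[float]:
        return self.w_in[self.index[word]]


tokens = tokenize_template("PacketResponder <*> for block <*> terminating")
pairs = cbow_pairs(tokens, n=1)  # pairs[1] == (["PacketResponder", "block"], "for")
model = TinyCBOW(tokens, dim=8)
probs = model.predict(pairs[1][0])
```

After training, stacking `model.vector(w)` for each word in order of appearance would give the word vector matrix V.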
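The TF-IDF weighting and the multiplication by the word vector matrix can be sketched as below. It assumes the standard, unsmoothed definitions TF_w = n_w / Σ_k n_k (counts within one log sequence) and IDF_w = log(S / S_w). It also assumes that the columns of V are word vectors and that each column of W holds one log sequence's TF-IDF weights, so that each column of V × W is a TF-IDF-weighted sum of word vectors. The function names and the toy vectors are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def tfidf_weights(sequences: List[List[str]]) -> List[Dict[str, float]]:
    """TF-IDF weight of every word in every log sequence (one dict per sequence)."""
    s = len(sequences)
    # S_w: number of log sequences that contain word w at least once.
    doc_freq = Counter(w for seq in sequences for w in set(seq))
    weights = []
    for seq in sequences:
        counts = Counter(seq)
        total = sum(counts.values())  # sum_k n_k for this sequence
        weights.append({w: (n / total) * math.log(s / doc_freq[w])
                        for w, n in counts.items()})
    return weights


def sequence_feature(weights: Dict[str, float],
                     vectors: Dict[str, List[float]]) -> List[float]:
    """One column of V x W: the TF-IDF-weighted sum of the sequence's word vectors."""
    dim = len(next(iter(vectors.values())))
    feature = [0.0] * dim
    for word, w in weights.items():
        feature = [f + w * v for f, v in zip(feature, vectors[word])]
    return feature


sequences = [["block", "received", "block", "terminating"], ["block", "deleting"]]
W = tfidf_weights(sequences)
vectors = {"block": [1.0, 0.0], "received": [0.0, 1.0],
           "terminating": [1.0, 1.0], "deleting": [2.0, 0.0]}
E = [sequence_feature(w, vectors) for w in W]
```

Because “block” appears in every sequence, its IDF and therefore its weight are 0, which mirrors the “Block” example in the text. Many implementations add smoothing to the IDF term so that such words are down-weighted rather than zeroed.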

3.2.3. Component Sequence Matrix Generation

3.2.4. Final Feature Matrix Generation

3.3. Anomaly Detection

4. Experiments

4.1. Datasets

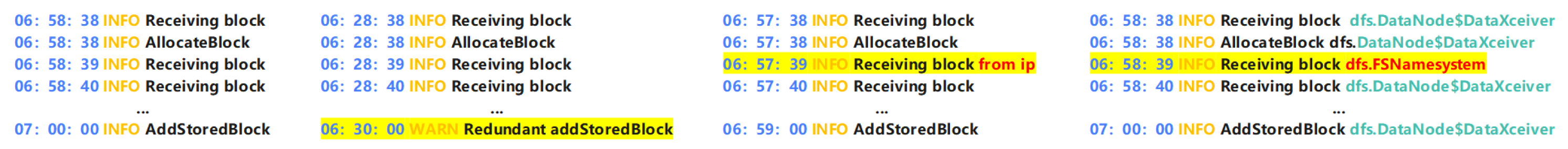

Log Anomaly Characteristics

4.2. Evaluation Metrics
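The result tables report precision, recall, and F1-measure. Assuming the standard definitions, with anomalous log sequences as the positive class, these metrics can be computed as follows; the function names are hypothetical.

```python
from typing import Tuple


def f1_from_pr(precision: float, recall: float) -> float:
    # Harmonic mean of precision and recall; defined as 0 when both are 0.
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Metrics from true positives, false positives, and false negatives."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_from_pr(precision, recall)


# 90 anomalies caught, 10 false alarms, 10 anomalies missed.
print(precision_recall_f1(90, 10, 10))
print(round(f1_from_pr(0.98, 0.99), 3))  # 0.985
```

The second call reproduces the F1-measure reported for LTAnomaly on HDFS from its rounded precision and recall.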

4.3. Experimental Results

4.3.1. HDFS Dataset Evaluation

4.3.2. BGL Dataset Evaluation

4.4. Ablation Study

4.5. Explainability

4.6. Robustness

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Khan, S.; Parkinson, S. Eliciting and Utilising Knowledge for Security Event Log Analysis: An Association Rule Mining and Automated Planning Approach. Expert Syst. Appl. 2018, 113, 116–127.

- Meng, W.; Liu, Y.; Zhu, Y.; Zhang, S.; Pei, D.; Liu, Y.; Chen, Y.; Zhang, R.; Tao, S.; Sun, P.; et al. Loganomaly: Unsupervised Detection of Sequential and Quantitative Anomalies in Unstructured Logs. In Proceedings of the 28th International Joint Conference on Artificial Intelligence, IJCAI’19, Macao, China, 10–16 August 2019; AAAI Press: Washington, DC, USA, 2019; pp. 4739–4745.

- Gao, P.; Xiao, X.; Li, D.; Li, Z.; Jee, K.; Wu, Z.; Kim, C.H.; Kulkarni, S.R.; Mittal, P. SAQL: A Stream-Based Query System for Real-Time Abnormal System Behavior Detection. In Proceedings of the 27th USENIX Conference on Security Symposium, SEC’18, Berkeley, CA, USA, 15–17 August 2018; USENIX Association: Baltimore, MD, USA, 2018; pp. 639–656.

- Gao, P.; Xiao, X.; Li, Z.; Jee, K.; Xu, F.; Kulkarni, S.R.; Mittal, P. AIQL: Enabling Efficient Attack Investigation from System Monitoring Data. In Proceedings of the 2018 USENIX Conference on Usenix Annual Technical Conference, USENIX ATC ’18, Boston, MA, USA, 11–13 July 2018; USENIX Association: Boston, MA, USA, 2018; pp. 113–125.

- Xu, W.; Huang, L.; Fox, A.; Patterson, D.; Jordan, M.I. Detecting Large-Scale System Problems by Mining Console Logs. In Proceedings of the ACM SIGOPS 22nd Symposium on Operating Systems Principles, SOSP ’09, New York, NY, USA, 11–14 October 2009; Association for Computing Machinery: New York, NY, USA, 2009; pp. 117–132.

- Lou, J.G.; Fu, Q.; Yang, S.; Xu, Y.; Li, J. Mining Invariants from Console Logs for System Problem Detection. In Proceedings of the 2010 USENIX Conference on USENIX Annual Technical Conference, USENIXATC’10, Berkeley, CA, USA, 23–25 June 2010; USENIX Association: Boston, MA, USA, 2010; p. 24.

- He, P.; Zhu, J.; He, S.; Li, J.; Lyu, M.R. Towards Automated Log Parsing for Large-Scale Log Data Analysis. IEEE Trans. Dependable Secur. Comput. 2018, 15, 931–944.

- Du, M.; Li, F.; Zheng, G.; Srikumar, V. DeepLog: Anomaly Detection and Diagnosis from System Logs through Deep Learning. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, CCS ’17, Dallas, TX, USA, 30 October–3 November 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 1285–1298.

- Zhang, X.; Xu, Y.; Lin, Q.; Qiao, B.; Zhang, H.; Dang, Y.; Xie, C.; Yang, X.; Cheng, Q.; Li, Z.; et al. Robust Log-Based Anomaly Detection on Unstable Log Data. In Proceedings of the 2019 27th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Foundations of Software Engineering, ESEC/FSE, Tallinn, Estonia, 26–30 August 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 807–817.

- Liang, Y.; Zhang, Y.; Xiong, H.; Sahoo, R. Failure Prediction in IBM BlueGene/L Event Logs. In Proceedings of the Seventh IEEE International Conference on Data Mining (ICDM 2007), Omaha, NE, USA, 28–31 October 2007; pp. 583–588.

- Zhang, K.; Xu, J.; Min, M.R.; Jiang, G.; Pelechrinis, K.; Zhang, H. Automated IT system failure prediction: A deep learning approach. In Proceedings of the 2016 IEEE International Conference on Big Data (Big Data), Washington, DC, USA, 5–8 December 2016; pp. 1291–1300.

- Huang, S.; Liu, Y.; Fung, C.; He, R.; Zhao, Y.; Yang, H.; Luan, Z. HitAnomaly: Hierarchical Transformers for Anomaly Detection in System Log. IEEE Trans. Netw. Serv. Manag. 2020, 17, 2064–2076.

- Zhou, J.; Qian, Y.; Zou, Q.; Liu, P.; Xiang, J. DeepSyslog: Deep Anomaly Detection on Syslog Using Sentence Embedding and Metadata. IEEE Trans. Inf. Forensics Secur. 2022, 17, 3051–3061.

- Cheng, S.W.; Pei, D.; Wang, C.J. Error Log Clustering of Internet Software. J. Chin. Comput. Syst. 2018, 39, 865–870.

- Vaarandi, R. A data clustering algorithm for mining patterns from event logs. In Proceedings of the 3rd IEEE Workshop on IP Operations & Management (IPOM 2003) (IEEE Cat. No.03EX764), Kansas City, MO, USA, 1–3 October 2003; pp. 119–126.

- Makanju, A.; Zincir-Heywood, A.N.; Milios, E.E. A Lightweight Algorithm for Message Type Extraction in System Application Logs. IEEE Trans. Knowl. Data Eng. 2012, 24, 1921–1936.

- Du, M.; Li, F. Spell: Streaming Parsing of System Event Logs. In Proceedings of the 2016 IEEE 16th International Conference on Data Mining (ICDM), Barcelona, Spain, 12–15 December 2016; pp. 859–864.

- Du, M.; Li, F. Spell: Online Streaming Parsing of Large Unstructured System Logs. IEEE Trans. Knowl. Data Eng. 2019, 31, 2213–2227.

- He, P.; Zhu, J.; Zheng, Z.; Lyu, M.R. Drain: An Online Log Parsing Approach with Fixed Depth Tree. In Proceedings of the 2017 IEEE International Conference on Web Services (ICWS), Honolulu, HI, USA, 25–30 June 2017; pp. 33–40.

- Zhang, S.; Meng, W.; Bu, J.; Yang, S.; Liu, Y.; Pei, D.; Xu, J.; Chen, Y.; Dong, H.; Qu, X.; et al. Syslog processing for switch failure diagnosis and prediction in datacenter networks. In Proceedings of the 2017 IEEE/ACM 25th International Symposium on Quality of Service (IWQoS), Vilanova i la Geltru, Spain, 14–16 June 2017; pp. 1–10.

- Studiawan, H.; Sohel, F.; Payne, C. Automatic Event Log Abstraction to Support Forensic Investigation. In Proceedings of the Australasian Computer Science Week Multiconference, ACSW ’20, Melbourne, Australia, 4–6 February 2020; Association for Computing Machinery: New York, NY, USA, 2020.

- Studiawan, H.; Payne, C.N.; Sohel, F. Automatic Graph-Based Clustering for Security Logs. In Proceedings of the Advanced Information Networking and Applications (AINA), Matsue, Japan, 27–29 March 2019.

- Zhu, J.; He, S.; Liu, J.; He, P.; Xie, Q.; Zheng, Z.; Lyu, M.R. Tools and Benchmarks for Automated Log Parsing. In Proceedings of the 2019 IEEE/ACM 41st International Conference on Software Engineering: Software Engineering in Practice (ICSE-SEIP), Montréal, QC, Canada, 27 May 2019; pp. 121–130.

- Doreswamy, H.; Hooshmand, M.K.; Gad, I. Feature Selection Approach Using Ensemble Learning for Network Anomaly Detection. CAAI Trans. Intell. Technol. 2020, 5, 283–293.

- Xu, H.; Pang, G.; Wang, Y.; Wang, Y. Deep Isolation Forest for Anomaly Detection. IEEE Trans. Knowl. Data Eng. 2023, 1–14.

- Zeng, C.; Jiang, Y.; Zheng, L.; Li, J.; Li, L.; Li, H.; Shen, C.; Zhou, W.; Li, T.; Duan, B.; et al. FIU-Miner: A fast, integrated, and user-friendly system for data mining in distributed environment. In Proceedings of the 19th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Chicago, IL, USA, 11–14 August 2013.

- Mondal, T.; Pramanik, P.; Bhattacharya, I.; Boral, N.; Ghosh, S. Analysis and Early Detection of Rumors in a Post Disaster Scenario. Inf. Syst. Front. 2018, 20, 961–979.

- Troudi, A.; Zayani, C.A.; Jamoussi, S.; Amor, I.A. A New Mashup Based Method for Event Detection from Social Media. Inf. Syst. Front. 2018, 20, 981–992.

- Shukla, A.K.; Srivastav, S.; Kumar, S.; Muhuri, P.K. UInDeSI4.0: An efficient Unsupervised Intrusion Detection System for network traffic flow in Industry 4.0 ecosystem. Eng. Appl. Artif. Intell. 2023, 120, 105848.

- Malki, A.; Atlam, E.S.; Gad, I. Machine learning approach of detecting anomalies and forecasting time-series of IoT devices. Alex. Eng. J. 2022, 61, 8973–8986.

- Chen, M.; Zheng, A.; Lloyd, J.; Jordan, M.; Brewer, E. Failure diagnosis using decision trees. In Proceedings of the International Conference on Autonomic Computing, New York, NY, USA, 17–18 May 2004; pp. 36–43.

- Farshchi, M.; Schneider, J.G.; Weber, I.; Grundy, J. Experience report: Anomaly detection of cloud application operations using log and cloud metric correlation analysis. In Proceedings of the 2015 IEEE 26th International Symposium on Software Reliability Engineering (ISSRE), Gaithersburg, MD, USA, 2–5 November 2015; pp. 24–34.

- Zhang, S.; Liu, Y.; Meng, W.; Luo, Z.; Bu, J.; Yang, S.; Liang, P.; Pei, D.; Xu, J.; Zhang, Y.; et al. PreFix: Switch Failure Prediction in Datacenter Networks. Proc. ACM Meas. Anal. Comput. Syst. 2018, 2, 1–29.

- Bertero, C.; Roy, M.; Sauvanaud, C.; Trédan, G. Experience report: Log mining using natural language processing and application to anomaly detection. In Proceedings of the 2017 IEEE 28th International Symposium on Software Reliability Engineering (ISSRE), Toulouse, France, 23–26 October 2017; pp. 351–360.

- Yang, R.; Qu, D.; Qian, Y.; Dai, Y.; Zhu, S. An online log template extraction method based on hierarchical clustering. EURASIP J. Wirel. Commun. Netw. 2019, 2019, 135.

- Ruff, L.; Vandermeulen, R.A.; Görnitz, N.; Binder, A.; Müller, E.; Müller, K.R.; Kloft, M. Deep semi-supervised anomaly detection. arXiv 2019, arXiv:1906.02694.

- Pang, G.; Shen, C.; Cao, L.; Hengel, A.V.D. Deep Learning for Anomaly Detection: A Review. ACM Comput. Surv. 2021, 54, 1–38.

- Brown, A.; Tuor, A.; Hutchinson, B.; Nichols, N. Recurrent Neural Network Attention Mechanisms for Interpretable System Log Anomaly Detection. In Proceedings of the First Workshop on Machine Learning for Computing Systems, MLCS’18, Tempe, AZ, USA, 12 June 2018; Association for Computing Machinery: New York, NY, USA, 2018.

- Catillo, M.; Pecchia, A.; Villano, U. AutoLog: Anomaly detection by deep autoencoding of system logs. Expert Syst. Appl. 2022, 191, 116263.

- Li, S.; Jin, X.; Xuan, Y.; Zhou, X.; Chen, W.; Wang, Y.X.; Yan, X. Enhancing the locality and breaking the memory bottleneck of transformer on time series forecasting. Adv. Neural Inf. Process. Syst. 2019, 32, 1–11.

- Rae, J.W.; Potapenko, A.; Jayakumar, S.M.; Lillicrap, T.P. Compressive transformers for long-range sequence modelling. arXiv 2019, arXiv:1911.05507.

- Gulati, A.; Qin, J.; Chiu, C.C.; Parmar, N.; Zhang, Y.; Yu, J.; Han, W.; Wang, S.; Zhang, Z.; Wu, Y.; et al. Conformer: Convolution-augmented transformer for speech recognition. arXiv 2020, arXiv:2005.08100.

- He, P.; Zhu, J.; He, S.; Li, J.; Lyu, M.R. An Evaluation Study on Log Parsing and Its Use in Log Mining. In Proceedings of the 2016 46th Annual IEEE/IFIP International Conference on Dependable Systems and Networks (DSN), Toulouse, France, 28 June–1 July 2016; pp. 654–661.

- Lin, Q.; Zhang, H.; Lou, J.G.; Zhang, Y.; Chen, X. Log Clustering Based Problem Identification for Online Service Systems. In Proceedings of the 2016 IEEE/ACM 38th International Conference on Software Engineering Companion (ICSE-C), Austin, TX, USA, 14–22 May 2016; pp. 102–111.

- Wang, X.; Cao, Q.; Wang, Q.; Cao, Z.; Zhang, X.; Wang, P. Robust log anomaly detection based on contrastive learning and multi-scale MASS. J. Supercomput. 2022, 78, 17491–17512.

- Chen, Y.; Luktarhan, N.; Lv, D. LogLS: Research on System Log Anomaly Detection Method Based on Dual LSTM. Symmetry 2022, 14, 454.

| Technique | Precision | Recall | F1-Measure |
|---|---|---|---|
| DeepLog | 0.92 | 0.95 | 0.934 |
| LogCluster | 0.96 | 0.83 | 0.890 |
| LogRobust | 0.96 | 0.96 | 0.970 |
| HitAnomaly | 0.99 | 0.97 | 0.979 |
| CL2MLog | 0.96 | 0.98 | 0.970 |
| LogLS | 0.96 | 0.98 | 0.970 |
| LTAnomaly | 0.98 | 0.99 | 0.985 |

| Model | Number of Logs | Time Consumption |
|---|---|---|
| DeepLog | 787,095 | 2 h 17 m 29 s |
| HitAnomaly | 787,095 | 4 h 29 m 56 s |
| LTAnomaly | 787,095 | 3 h 22 m 6 s |

| Technique | Precision | Recall | F1-Measure |
|---|---|---|---|
| DeepLog | 0.91 | 0.71 | 0.797 |
| LogCluster | 0.42 | 0.87 | 0.541 |
| LogRobust | 0.91 | 0.78 | 0.840 |
| HitAnomaly | 0.95 | 0.90 | 0.924 |
| CL2MLog | 0.91 | 0.97 | 0.939 |
| LogLS | 0.68 | 0.99 | 0.809 |
| LTAnomaly | 0.97 | 0.98 | 0.975 |

| Dataset | W | C | Precision | Recall | F1-Measure |
|---|---|---|---|---|---|
| BGL | - | O | 0.95 | 0.91 | 0.929 |
| BGL | O | - | 0.79 | 0.86 | 0.823 |
| BGL | - | - | 0.97 | 0.98 | 0.975 |
| HDFS | - | O | 0.97 | 0.98 | 0.975 |
| HDFS | O | - | 0.83 | 0.89 | 0.859 |
| HDFS | - | - | 0.98 | 0.99 | 0.985 |

| Dataset | Technique | Precision | Recall | F1-Measure |
|---|---|---|---|---|
| BGL | LSTM | 0.92 | 0.86 | 0.889 |
| BGL | Transformer | 0.95 | 0.91 | 0.929 |
| BGL | LTAnomaly | 0.97 | 0.98 | 0.975 |
| HDFS | LSTM | 0.96 | 0.98 | 0.970 |
| HDFS | Transformer | 0.99 | 0.97 | 0.979 |
| HDFS | LTAnomaly | 0.98 | 0.99 | 0.985 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Han, D.; Sun, M.; Li, M.; Chen, Q. LTAnomaly: A Transformer Variant for Syslog Anomaly Detection Based on Multi-Scale Representation and Long Sequence Capture. Appl. Sci. 2023, 13, 7668. https://doi.org/10.3390/app13137668
