1. Introduction

Internet shopping is becoming increasingly popular, leading to a rapid increase in the number of postal parcels that need to be processed and shipped [1]. High-throughput parcel sorting lines have been developed and deployed in logistics centers to automate most of the work of redistributing incoming parcels to their destinations [2]. However, manual work is still required to separate the incoming parcels from the pile and place them on the conveyor belt, forming a bottleneck in otherwise highly elaborate sorting centers. These bottlenecks tend to worsen when demand increases, especially during holidays. Rising unit labor costs, higher hourly compensation levels, and the falling cost of robots together shorten the time that upcoming robotic installations need to reach a return on investment [3]. From an economic point of view, these aspects strongly motivate the research and development of new approaches to tackle processes that traditionally require human resources.

This seemingly easy and dull job of taking parcels from the pile and placing them on the belt poses several challenges that make its automation difficult. First, packages come in various sizes, shapes, and colors, so any computer vision algorithm, if used, must be highly flexible and not tied to any particular object type. Second, the mix of soft and hard objects, some being very thin, poses challenges to the mechanical design of their transfer mechanisms. Third, objects are piled and partially occluded, so the order in which they can be picked must be taken into account. Fourth, the resulting system has to provide high throughput and work reliably without the need for manual intervention.

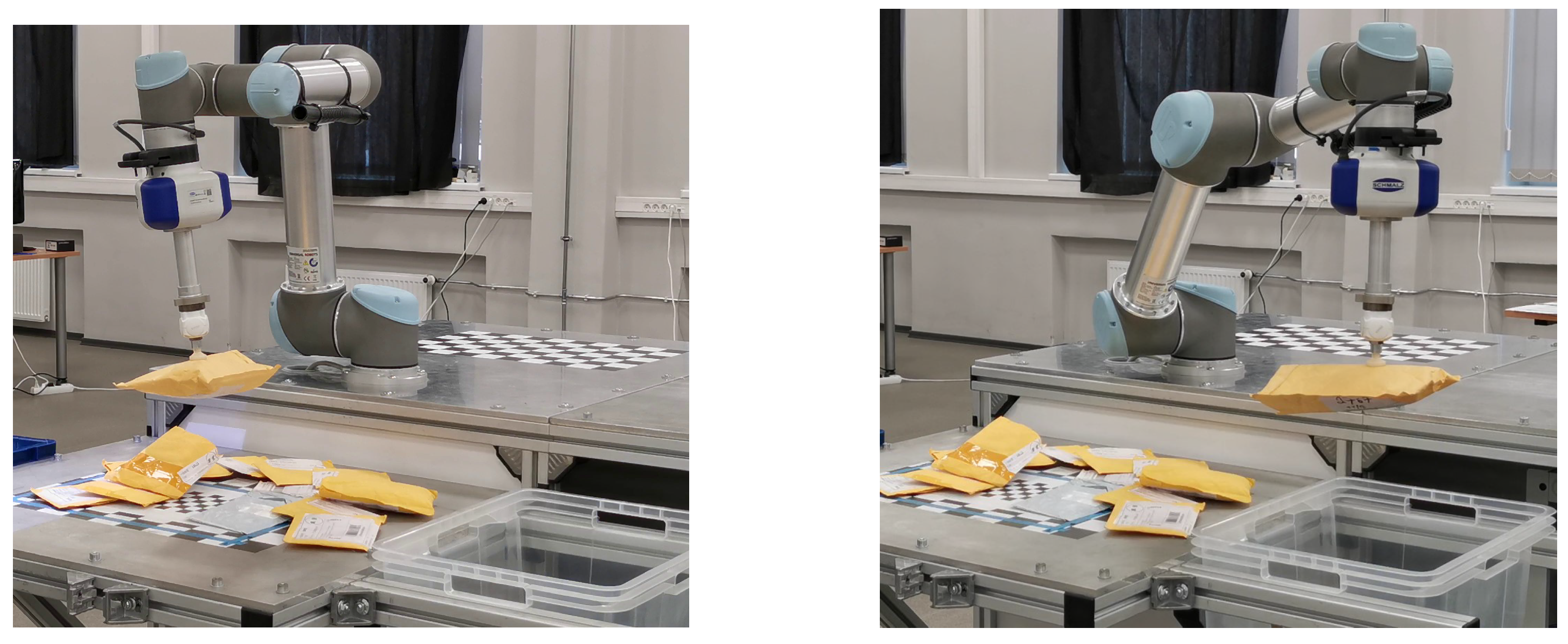

The main scientific contribution of this article lies in the evaluation of an AI-based robot solution for automating the parcel-handling process. The proposed architecture tackles the challenges posed by diverse parcel characteristics, ensuring adaptability, efficiency, and reliability in parcel-sorting centers. The developed system, its design choices, and its implementation in the physical setup are shown and described in detail. Various aspects crucial for the implementation of similar systems are discussed, and possible shortcomings are identified. A Universal Robots UR5 is employed together with hardware-agnostic robot control and a computer-vision system for detecting parcels and moving them from an unstructured pile to the required location, as illustrated in Figure 1. The solution can handle arbitrarily shaped objects, such as postal parcels, without additional training, and the achieved results show the reliability of the developed system in different scenarios.

2. Related Work

For several decades, robotic systems have played a crucial role in automating various systems [4]. These systems are traditionally deployed in static and known environments where robotic motions can be fully preprogrammed or rely on simple input devices for an adjustment in the grasping position, typically varying only in two dimensions [5]. Robotic systems in such environments have several prerequisites that are frequently not feasible to comply with due to different limitations of the manufacturing plant. A typical limitation is the availability of space to divide and position objects on one level, which is also the case for the problem addressed in this article. In the post office parcel-handling context, the sorting process can be fully automated; however, it requires a substantially large space in the factory or even redesigning the whole process, where an industrial robot system, if used at all, could then be deployed with a rather simple 2D vision system [6,7].

In situations where space cannot be allocated to create structured conditions for the robotic system, uncertainty inevitably arises in the robot’s workspace. This makes it challenging to grasp objects positioned in this region and necessitates the use of so-called smart industrial robot control [8]. While there are many application areas for smart industrial robot control [9], grasping objects in unstructured or weakly structured environments [10] remains one of the greatest challenges in modern robotics [8]. The grasping pipeline typically relies on an additional computer vision system that realizes computer-vision-based control. This control method can combine several different approaches at both the system-architecture design level and the learning-strategy level [11,12]. Different scenarios or combinations of conditions in the region of interest have been the main driver in the development of various approaches to the grasping problem, which depend mainly on the level of uncertainty in the region of interest and the type of the objects. Although a system can be made flexible enough to sustain the required level of performance across a range of scenarios, such flexibility can negatively affect performance efficiency and maintainability [13].

Deep-learning-based approaches can be divided into two branches according to whether they work with known or unknown objects, i.e., model-based and model-free methods, respectively [14]. Even though model-based methods have become quite effective [15], they require previous knowledge about the objects of interest, which can become quite cumbersome when the training data need to be acquired. Recently, this aspect has been addressed by synthetic data generation methods [16] and sim-to-real transfer techniques [17]. Nevertheless, the goal of all grasping techniques is to estimate the grasp pose of the object. For model-based methods, several steps can precede the grasp pose estimate, such as object detection [18] and segmentation with respective post-processing steps depending on the application scenario [19], or various approaches that directly estimate the 6D pose [20,21] of the object, from which the grasp pose can be derived.

On the other hand, model-free approaches search for the grasp pose directly without relying on prior knowledge of the object for training the system. These methods are highly desirable as they can generalize to previously unseen objects. They circumvent the pose estimation step and directly estimate the grasp pose by identifying a graspable spot in the region of interest [8].

One important model-free grasping method is Dex-Net [22]. To train this method, grasps across 1500 3D object models were analyzed, resulting in a dataset of 2.8 million point clouds, suction grasps, and robustness labels (Dex-Net 3.0). The dataset was then used to train a grasp quality convolutional neural network (GQ-CNN) to classify robust suction targets in single object point clouds. The trained model was able to generalize to basic (prismatic or cylindrical), typical (more complex geometry), and adversarial (with few available suction-grasp points) objects with success rates of 98%, 82%, and 58%, respectively. A more recent implementation of Dex-Net [23] (Dex-Net 4.0) containing five million synthetic depth images was used to train policies for a parallel-jaw and vacuum gripper, resulting in a 95% accuracy in gripping novel objects. In [24], a generative grasping convolutional neural network (GG-CNN) was proposed, where the quality and pose of grasps are predicted at every pixel, achieving an 83% grasp success rate on unseen objects with adversarial geometry. These methods are well suited for closed-loop control up to 50 Hz, enabling successful manipulation in dynamic environments where objects are moving. Furthermore, multiple grasp candidates are provided simultaneously, and the highest quality candidate ensures a relatively high accuracy in previously unknown environments.

A significant challenge is grasping objects in a cluttered real-world scene. In such a setting, not only the object to be grasped must be analyzed, but also its surroundings, so that the robot does not collide with them. GraspNet [25] addresses this challenge and learns to generate diverse grasps covering the whole graspable surface. Gripper collisions are avoided by considering them during training and by predicting grasps directly without relying on accurate segmentation.

Several object manipulation systems applicable to post office automation have been developed in the past. A system for package handling automation employing a robot arm with a vacuum gripper is described in [26]. Mailpiece singulation, i.e., removing items one by one from a moving conveyor, was described in [27]. The authors considered a bin-picking task, where a mix of objects with different shapes and weights should be grasped and removed, and designed a system comprising a range imaging camera, a sparse data range imaging algorithm, a grasp target selection algorithm, and a vacuum gripper. An implementation of a system for object manipulation was presented in [28] and showcased for two use cases: fish picking and postal parcel sorting. It employs model-free object localization based on Mask R-CNN for object detection and for finding a grasp point for each segmented instance. A context-aware model for parcel sorting and delivery was proposed in [29]. The system includes the detection and localization of the parcel, recognizing the barcode and reading the delivery address, and performing robotic manipulation to transfer the parcel to the designated delivery box.

The literature review reveals several promising strategies for addressing this challenge. However, the reviewed works lack essential implementation specifics and comprehensive architectural recommendations for real-world systems. This article presents a robotic system designed for post office package handling. It provides a detailed account of the key system components and offers insights into implementation aspects in physical systems.

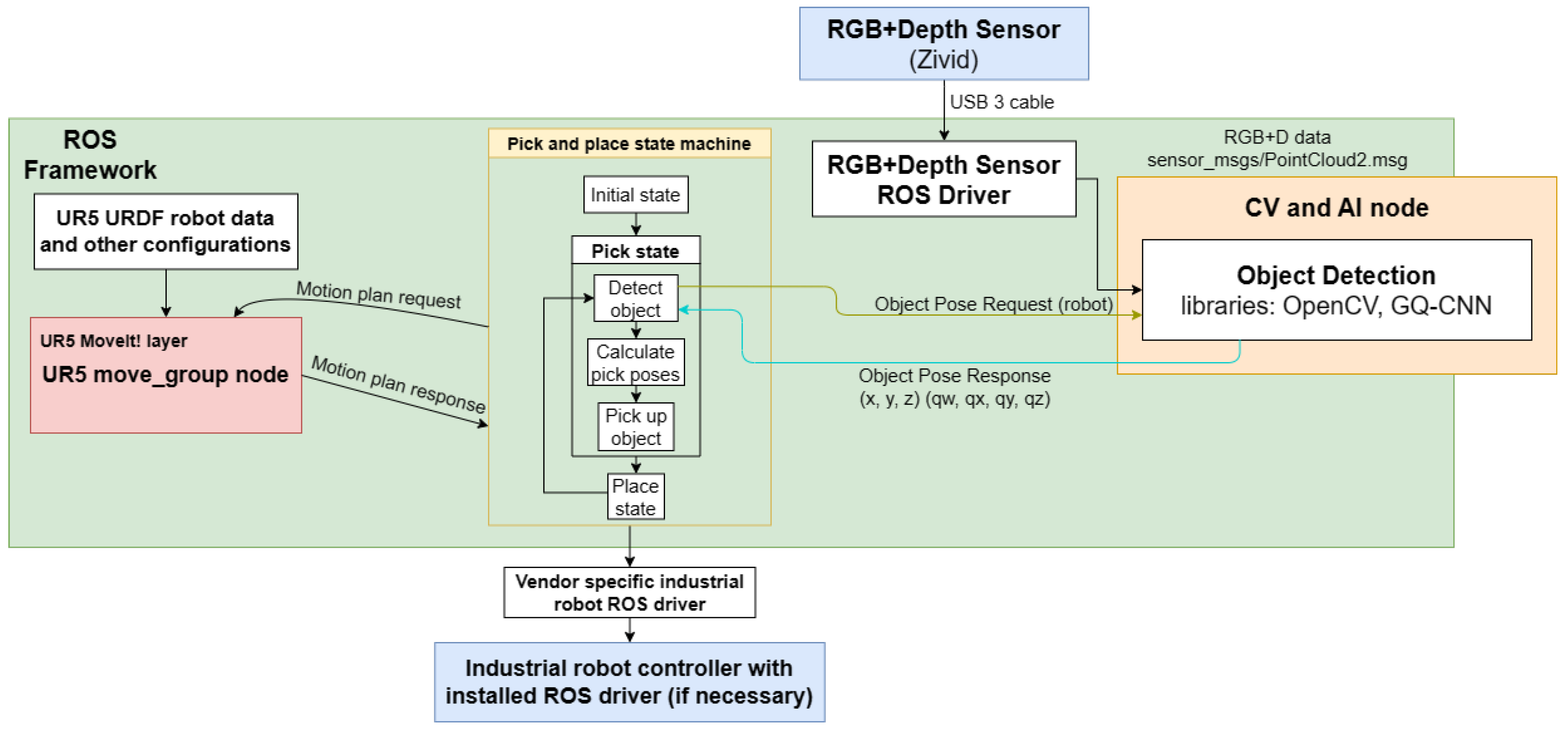

3. Architecture

The proposed robotic system for post office package handling, as illustrated in Figure 2, consists of multiple modules and builds upon the TRINITY use-case demonstration of the artificial-intelligence-based stereo vision system [30]. The whole system is based on the robot operating system (ROS) [31] framework, which is used for communication between modules and for controlling the system. The system includes a 6-DOF robot arm with a gripper, an RGB-Depth sensor, and two processing units for computer-vision-based and robotic-motion-based computations.

The workflow of the system can be described as follows: first, upon request, a 3D image of the workspace is captured with the RGB-Depth sensor and sent to a grasp quality convolutional neural network (GQ-CNN) Dex-Net 4.0 [23] for finding a grasp pose.

Second, when a pose is found, it is sent to a robot control state machine as a position (X, Y, Z coordinates) and an orientation (a W, X, Y, Z quaternion). The robot control state machine is a set of states that describes the whole process in a clear and well-defined way with predictable outcomes. It consists of multiple robot functions, such as moving to a desired pose or picking and releasing a desired object. It is implemented using the State Machine Asynchronous C++ (SMACC) [32] package.
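The pose message described above can be pictured with a small container type. The following is only a sketch: the class name `GraspPose`, its field names, and the convention that the gripper approaches along the local z-axis are illustrative assumptions and are not taken from the system's code. Note that the system's (W, X, Y, Z) ordering differs from the ROS `geometry_msgs/Quaternion` message, whose fields are ordered x, y, z, w, so an explicit conversion is needed whenever the two are mixed.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class GraspPose:
    """Hypothetical container: position plus quaternion in (w, x, y, z) order."""
    x: float
    y: float
    z: float
    qw: float
    qx: float
    qy: float
    qz: float

    def normalized(self) -> "GraspPose":
        """Return a copy whose quaternion has unit length."""
        norm = math.sqrt(self.qw**2 + self.qx**2 + self.qy**2 + self.qz**2)
        if norm == 0.0:
            raise ValueError("quaternion must be non-zero")
        return GraspPose(self.x, self.y, self.z,
                         self.qw / norm, self.qx / norm,
                         self.qy / norm, self.qz / norm)

    def approach_axis(self) -> tuple:
        """Rotate the local z-axis (0, 0, 1) by the (unit) quaternion.

        Assumes the gripper approaches along its local z-axis.
        """
        w, x, y, z = self.qw, self.qx, self.qy, self.qz
        return (2 * (x * z + w * y),
                2 * (y * z - w * x),
                1 - 2 * (x * x + y * y))


# A 180-degree rotation about the x-axis points the tool straight down.
top_down = GraspPose(0.4, 0.1, 0.05, 0.0, 2.0, 0.0, 0.0).normalized()
```

Normalizing before use guards against small numerical drift in the quaternion, which motion planners typically reject or silently renormalize.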

Third, after receiving a pose, a grasp path is planned and, if successful, the state machine goes on through a full pick and place cycle. The robot control is implemented using the MoveIt! Motion Planning Framework [33], which manages the planning of paths and communication with the hardware. On the hardware side, the communication is handled by a robot-specific ROS driver from ROS-Industrial (ROS-I) in conjunction with a low-level controller provided by the industrial robot. After the object is placed in a predefined location, the process starts anew.
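The three workflow steps can be condensed into a toy transition function. This is a plain-Python sketch and not SMACC code: the state names, the `step` function, and the choice to return to waiting for a new pose when planning fails are assumptions made for illustration.

```python
from enum import Enum, auto


class State(Enum):
    WAIT_FOR_POSE = auto()   # waiting for the vision unit to deliver a grasp pose
    PLAN_GRASP = auto()      # planning a path to the received pose
    PICK = auto()            # moving to the pose and activating the gripper
    PLACE = auto()           # moving to the predefined location and releasing


def step(state, pose_available, plan_ok):
    """Return the next state for one tick of the pick and place cycle."""
    if state is State.WAIT_FOR_POSE:
        return State.PLAN_GRASP if pose_available else State.WAIT_FOR_POSE
    if state is State.PLAN_GRASP:
        # Assumption: a failed plan sends the machine back to wait for a new pose.
        return State.PICK if plan_ok else State.WAIT_FOR_POSE
    if state is State.PICK:
        return State.PLACE
    return State.WAIT_FOR_POSE  # after PLACE the process starts anew


def run_cycle(events):
    """Feed (pose_available, plan_ok) pairs through the machine and record states."""
    state = State.WAIT_FOR_POSE
    trace = [state]
    for pose_available, plan_ok in events:
        state = step(state, pose_available, plan_ok)
        trace.append(state)
    return trace
```

Keeping the transitions in one pure function makes every outcome enumerable, which mirrors the predictability argument made for using a state machine.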

3.1. Hardware Setup

The system was built and tested with the Universal Robots UR5 6-DOF robot arm [34] with an attached Schmalz vacuum gripper [35] for gripping the objects. An image of the work environment can be seen in Figure 3, which shows the depth sensor at the top, the robot on the right, and the parcels to be transferred in the middle.

3.1.1. Grippers

Choosing the right gripper for object manipulation is one of the main prerequisites for the successful automation of pick and place tasks. Many parameters should be considered, but usually the task itself mainly determines the gripper type. The two main gripping strategies are vacuum gripping and parallel gripping. Specifically for the robot system proposed in this article, a vacuum gripping technique enabled by Schmalz Rob-Set UR was used as it supports the system’s hardware and software requirements and is well documented. Additionally, a wide variety of different vacuum caps were also included in the hardware infrastructure, which allow for rapid testing when different kinds of object material are introduced in the pick and place task, essentially also allowing us to experimentally determine the most suitable vacuum cap for parcel handling.

3.1.2. Depth Sensors

The information about the dynamically changing environment, specifically the grasping pose of randomly dropped objects, which can vary in six dimensions, was acquired using a depth sensor. Two different depth sensors (Zivid One M and Intel RealSense D415) that vary in such parameters as precision, field of view, working range, weight, and dimensions were considered.

After examining initial experiments and the nature of computer vision techniques required to handle post office packages, it was determined that the system relies on a high precision of the depth information. The common point precision of an Intel RealSense D415 is 1–3 mm, whereas, for a Zivid One M depth camera, it is around 60 µm. For this reason, the Zivid camera was chosen for this robotic system. Zivid uses structured light for 3D information capture. It operates with a resolution of 1920 × 1200 at a frame rate of up to 12 frames per second and utilizes USB 3.0 SuperSpeed as the connection interface. The Zivid camera is ideal for high-precision tasks when it can be mounted statically in a position where its field of view covers most of the workspace, as the comparatively large dimensions (226 mm × 86 mm × 165 mm) and weight (2 kg) of this camera would restrict some movements of the industrial robot if mounted on it.

3.1.3. Industrial Robot

One of the main hardware components of the system is the industrial robot. An industrial collaborative robot (Universal Robots UR5) was used, which is ideal for automating low/medium-weight processing tasks. It has a maximum payload of 5 kg, a maximum joint speed of 180 degrees/second, an 850 mm reach radius, which can be improved with gripper modifications, and a pose repeatability of ±0.1 mm. Nevertheless, as the system is built upon ROS, the robot control module can be adjusted for use with robots from different manufacturers [36].

3.1.4. Processing Units

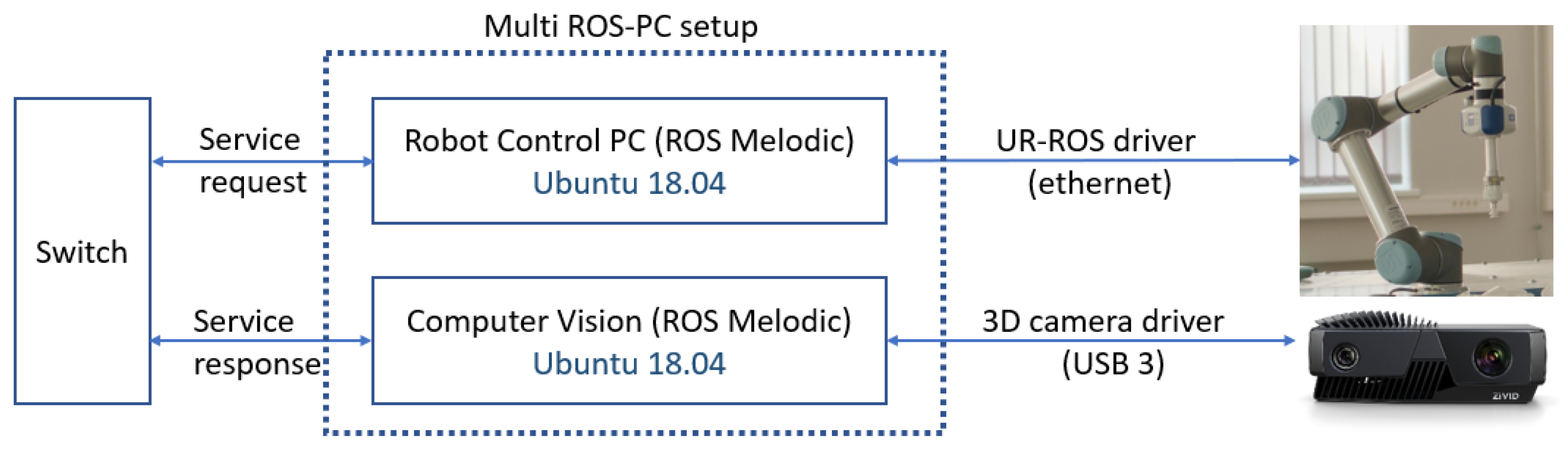

As depicted in Figure 4, the proposed system is composed of two processing units, one for robot control and the other for computer vision, which were configured as a multi-ROS-PC setup. This was done to make the units computationally independent from one another, so that no unexpected interruptions would occur. To ensure that the robot control is not affected by system latencies, the robot control PC was set up with real-time capabilities by using Ubuntu 18.04 with an enabled real-time kernel. The real-time kernel has compatibility issues with NVIDIA GPU drivers, which are needed for computer vision tasks, which is why a two-unit setup was used. The robot control PC runs ROS Melodic. It has two Ethernet cards to ensure a direct connection with the robot’s controller and the ROS multi-master ecosystem. Apart from the two Ethernet cards, it can be an ordinary desktop computer, as its main task is to generate feasible trajectories for the industrial robot, and there are no other processes that require high-performance capabilities. In this case, it had the following specifications: Intel Core i7-8400K CPU @ 3.70 GHz and 16 GB RAM.

The processing unit for computer-vision-related computations, on the other hand, requires a graphics processing unit, both for running the Zivid camera software and for grasp point detection. It runs on regular Ubuntu 18.04, also with ROS Melodic. In this case, the computing capabilities can have a big influence on the overall cycle times of the pick and place process. The computer vision PC has the following specifications: AMD Ryzen 5 3600 @ 3.60 GHz, 32 GB RAM, and NVIDIA GeForce RTX 3060 Ti.

3.2. Software Ecosystem

ROS. The whole system communication was conducted using ROS Melodic Morenia, as it is the ROS version compatible with Ubuntu Linux 18.04. All further described packages are also compatible with this ROS version.

State Machine Asynchronous C++. The state machine control of the system was carried out using the SMACC library for ROS. Conceptually, SMACC defines each individual state machine component as Orthogonal, which also includes a Client. Clients define connections outside of the state machine. In the state machine, transitions between the states are triggered by Events [32].

Possible alternatives for controlling the system in this manner include ROS packages such as SMACH [37] and behavior trees [38]. Behavior trees offer functionality similar to that of state machines. They make it possible to develop more complex systems, such as those with uncertainties, but this added capability can also make the regular development process more complex than necessary, especially when debugging. For this reason, state machines were chosen for the system.

As a different option for implementing state machines, the SMACH package was explored. The previously mentioned SMACC package is based on the SMACH package, the only real difference being that SMACC can be developed in C++ but SMACH is developed in the Python programming language. Since the overall system is all in C++, the SMACC package was chosen for easier development.

Data acquisition. An important factor when choosing any hardware that needs to work with an open-source framework, such as ROS, is the hardware’s support for this framework. Both sensors mentioned in Section 3.1.2, the Intel RealSense and the Zivid, can be used with ROS through dedicated ROS drivers. These drivers provide convenience functions such as capturing an image or a video stream, changing capture settings, and so on.

It is also important to note that, when using additional sensors in such systems, a special calibration process called hand–eye calibration is required in order to have all the physical system units operate in a combined coordinate space. This requires acquiring data with both the sensor and the actuator at the same time and then calculating coordinate transformations between them.
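Once hand-eye calibration has produced the camera-to-robot transformation, every detected point has to be mapped into the robot base frame before it can be used as a motion target. The sketch below shows that mapping with a 4 × 4 homogeneous matrix in plain Python; the function name `transform_point` and all numeric values (a camera 1 m above the base origin, looking straight down) are made up for illustration and are not the calibration result of the described system.

```python
def transform_point(T, p):
    """Apply a 4x4 homogeneous transform T (nested lists, row-major) to a 3D point p."""
    x, y, z = p
    return tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3]
                 for r in range(3))


# Hypothetical calibration result: camera frame rotated 180 degrees about the
# base x-axis (so the camera looks down) and offset by (0.5, 0.0, 1.0) metres.
T_BASE_CAMERA = [
    [1.0,  0.0,  0.0, 0.5],
    [0.0, -1.0,  0.0, 0.0],
    [0.0,  0.0, -1.0, 1.0],
    [0.0,  0.0,  0.0, 1.0],
]

# A point seen 0.9 m in front of the camera ends up about 0.1 m above the base plane.
point_in_camera = (0.1, 0.2, 0.9)
point_in_base = transform_point(T_BASE_CAMERA, point_in_camera)
```

In a ROS system this bookkeeping is usually delegated to the tf transform tree, but the underlying operation is the matrix product shown here.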

MoveIt! The path planning and execution were carried out using the MoveIt! Motion Planning Framework. It is a collection of tools for robot motion planning, visualization, and execution, which can be used in C++ and Python. It employs such tools as the Open Motion Planning Library (OMPL) [39], which provides a set of sampling-based motion planners to choose from for a particular task, and it comes with a set of functions to use for robot control. In addition, it provides the option to visualize a digital twin of the system for easy offline development and can also provide useful information about the planned paths in both offline and online scenarios.

UR driver. As with the sensors, the choice of the robot itself is strongly influenced by its support for the framework used. Universal Robots supports the use of its robots in the ROS framework by providing the UR ROS driver. This includes all the necessary tools for connecting to, communicating with, and controlling the robot, which makes this robot a convenient choice for the development of such systems.

3.3. Communication and Synchronization

Since the whole system is controlled by a state machine, the system functions by transitioning from one definite state to the next. As mentioned in Section 3.1.4, the robot control and workspace sensing are processed on two different units. This adds an extra layer of complexity to the system in the form of communication and synchronization.

The signal to start detection can be sent as soon as the robot is no longer blocking the workspace, which happens several states before the robot is ready to begin a new cycle. Since grasp point detection takes an unpredictable amount of time, the robot can either run ahead of or fall behind the vision unit with respect to the moment the new grasp point becomes available. To make the process more time efficient, the communication between processing units happens in parallel with the robot’s motion. When a new grasp point is detected, the point is not sent as a message; rather, it is set as a new value on the ROS parameter server together with a tag named new, which indicates that the robot has not yet used this point. Once the robot has reached a state where it can act upon a new point, it checks this tag, which can be either true or false. Depending on which part of the system gets there first, the robot either waits until a pose with the tag set to true appears, or reaches the starting pose of the new cycle and immediately starts working on it. In this way, the two parts of the system work in a coordinated yet independent manner.
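The handshake described above can be sketched without ROS by using an in-memory key-value store in place of the parameter server. Everything named here (`ParamStore`, the keys `grasp/pose` and `grasp/new`, and both helper functions) is a hypothetical stand-in; in a ROS 1 Python node the reads and writes would presumably go through `rospy.get_param` and `rospy.set_param` instead.

```python
import threading


class ParamStore:
    """In-memory stand-in for a shared key-value parameter server."""

    def __init__(self):
        self._lock = threading.Lock()
        self._values = {}

    def set(self, key, value):
        with self._lock:
            self._values[key] = value

    def get(self, key, default=None):
        with self._lock:
            return self._values.get(key, default)


def publish_grasp(store, pose):
    """Vision side: store the pose first, then raise the 'new' tag."""
    store.set("grasp/pose", pose)
    store.set("grasp/new", True)  # set last so a reader never sees a stale pose


def try_take_grasp(store):
    """Robot side: return the pose if it is unused, otherwise None."""
    if not store.get("grasp/new", False):
        return None  # nothing fresh yet; the caller keeps waiting
    pose = store.get("grasp/pose")
    store.set("grasp/new", False)  # mark the pose as consumed
    return pose


store = ParamStore()
first_poll = try_take_grasp(store)            # robot arrives first and finds nothing
publish_grasp(store, (0.4, 0.1, 0.05, 0.0, 1.0, 0.0, 0.0))
second_poll = try_take_grasp(store)           # the fresh pose is handed over once
third_poll = try_take_grasp(store)            # and is not reused afterwards
```

One caveat of this simple scheme is that a pose published between the robot's read and its reset of the tag would be marked as used without ever being executed; this is harmless only if, as in the described workflow, detection is requested once per cycle.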

3.4. Grasp Point Detection

As the postal parcels have irregular shapes (see Figure 5a), the Dex-Net 4.0 [23] library was selected for grasp point calculation, as it delivers robust performance in the proposed application. It was configured to use the grasp quality convolutional neural network (GQ-CNN) method for grasp detection and to deliver grasps for a system with a suction gripper, for which it is well suited. The camera intrinsics were obtained directly from the camera using its ROS driver, and the calibration was performed beforehand. The chosen Zivid camera natively produces images at 1920 × 1200 resolution, and such images take approximately 10 s to process with Dex-Net. To reduce the processing time, the images were first cropped to show only the region of interest (ROI) where the packages reside. Afterward, they were downscaled to 640 × 480.
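Cropping and downscaling change the pinhole camera parameters that relate pixels to 3D rays, and a grasp pixel found in the small image has to be mapped back to the full-resolution frame. The sketch below shows the standard bookkeeping under stated assumptions: the function names, the ROI, and the intrinsic values are hypothetical, half-pixel centre offsets are ignored, and the source does not state how the described system handles this step.

```python
def crop_and_scale_intrinsics(fx, fy, cx, cy, roi, out_size):
    """Adjust pinhole intrinsics for a crop followed by a resize.

    roi is (x0, y0, width, height) in full-resolution pixels;
    out_size is (width, height) of the downscaled image.
    """
    x0, y0, w, h = roi
    out_w, out_h = out_size
    sx, sy = out_w / w, out_h / h
    # Cropping shifts the principal point; resizing scales all four values.
    return fx * sx, fy * sy, (cx - x0) * sx, (cy - y0) * sy


def to_full_res_pixel(u, v, roi, out_size):
    """Map a pixel (u, v) of the downscaled ROI back to the original image."""
    x0, y0, w, h = roi
    out_w, out_h = out_size
    return x0 + u * w / out_w, y0 + v * h / out_h


# Hypothetical numbers: a 1280 x 960 ROI inside the 1920 x 1200 frame keeps the
# 4:3 aspect ratio, so the resize to 640 x 480 is a uniform factor of 0.5.
ROI = (320, 120, 1280, 960)
OUT = (640, 480)
small_intrinsics = crop_and_scale_intrinsics(2760.0, 2760.0, 960.0, 600.0, ROI, OUT)
full_res_pixel = to_full_res_pixel(320, 240, ROI, OUT)
```

Choosing an ROI with the same aspect ratio as the target resolution avoids anisotropic scaling, which would otherwise distort the apparent shape of the parcels.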

The output quality of Dex-Net is greatly enhanced if a segmentation mask is provided in its input showing precisely the region occupied by objects (the objects do not need to be segmented individually). To produce such a mask, a depth image of the empty table was taken during the calibration phase. While the system is operating, the current workspace data are compared with the empty-table depth image, and only pixels that lie at least 2 millimeters (the minimal parcel height) above the table are kept. Such a setup allows Dex-Net to produce reliable grasps; see Figure 5.
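The background-subtraction mask can be sketched in a few lines, assuming that the mask keeps pixels raised by at least the minimal parcel height above the empty table. The function name `object_mask`, the use of metres, and the treatment of zero or non-finite depth as missing data are assumptions for illustration; the camera is taken to look down at the table, so objects appear closer (smaller depth) than the table.

```python
import math


def object_mask(table_depth, scene_depth, min_height_m=0.002):
    """Return a binary mask of pixels at least min_height_m above the empty table.

    Both inputs are equally sized 2D lists of depth values in metres,
    measured from a camera looking down at the table.
    """
    mask = []
    for table_row, scene_row in zip(table_depth, scene_depth):
        row = []
        for t, s in zip(table_row, scene_row):
            # Assumption: missing depth is encoded as 0 or as a non-finite value.
            valid = math.isfinite(t) and math.isfinite(s) and t > 0 and s > 0
            row.append(1 if valid and (t - s) >= min_height_m else 0)
        mask.append(row)
    return mask


# Toy 2 x 3 example: heights above the table are 0 mm, 1 mm, 50 mm in the
# first row; the second row contains a missing measurement and a thin envelope.
table = [[1.000, 1.000, 1.000],
         [1.000, 1.000, 1.000]]
scene = [[1.000, 0.999, 0.950],
         [float("nan"), 0.995, 1.000]]
mask = object_mask(table, scene)
```

The threshold makes the dependence on sensor precision explicit: a camera whose depth noise is comparable to 2 mm could not separate an envelope from the table surface in this way.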

3.5. Robot Control

The control system consists of multiple states that manage different robot actions. The whole robot movement process is divided into several states, such as moving to a predefined start position, moving to an ever-changing grasp position, activating the gripper, and so on.

Trajectory planning for movement in these states was performed using MoveIt!, which is connected to the robot and gripper drivers with the movegroup interface. In the MoveIt! setup of the system, the planners best suited for the job can be chosen from the ones provided in the OMPL. The physical setup of the system is also represented digitally in the MoveIt! setup. Both the table and the robot have a 3D model, which represents the system in real-time. This provides the necessary information for the planner to plan safe, collision-free trajectories. This also allows the system operator to see both the planned trajectories and found object coordinates before they are acted upon.

4. Results and Discussion

The described system was implemented in the lab environment and validated for post office parcel handling in multiple scenarios, illustrated in Figure 6. The experiments were conducted in five different scenarios, each with a different number of packages, and the system was tested for its ability to clear the workspace. The physical setup of the robot is depicted in Figure 3. The robot is located near the table holding a pile of realistic parcels (see Figure 5a), which should be moved to a container standing nearby. The camera is mounted approximately 1 m above the table.

Five scenarios, consisting of 1, 5, 10, 15 and 20 parcels in the workspace, were used for testing. Each scenario was tested 10 times, and the packages were randomly distributed in the workspace. The packages were piled on top of each other when possible to replicate real-life setups as closely as possible. The attempts for each scenario were counted and compared to the expected number, which was set to one attempt per package in the scenario. Afterward, the average deviation from the expected number of attempts was calculated and, lastly, an overall average deviation was calculated to obtain the mean error of the system. The results can be seen in Table 1.

According to the results, the 1-package scenario showed a perfect score, the 5-package scenario required an average of 5.3 attempts, the 10-package scenario an average of 10.4 attempts, the 15-package scenario an average of 16 attempts, and the 20-package scenario an average of 21.3 attempts. Overall, the system achieved a 100% success rate for emptying the workspace, meaning that all the packages were successfully handled; however, an average of 4.37% additional grasp attempts can be observed when compared to the ideal of one attempt per package. Counting only one attempt per package, 94.1% of the grasps were successful. Even though some grasps did not succeed, the system could recover and grasp the object on the second try.
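The deviation metric described above can be written out explicitly. The sketch below uses only the rounded per-scenario averages quoted in the text, and the aggregation formulas (an unweighted mean of per-scenario percentages, and parcels divided by attempts as the grasp success rate) are assumptions about how such figures could be derived. Table 1 remains the authoritative source, and values computed from rounded inputs need not reproduce the reported 4.37% and 94.1% exactly.

```python
# Average attempts per scenario, keyed by the number of parcels (from the text).
AVG_ATTEMPTS = {1: 1.0, 5: 5.3, 10: 10.4, 15: 16.0, 20: 21.3}


def extra_attempt_pct(parcels, attempts):
    """Percentage of attempts beyond the ideal of one attempt per parcel."""
    return (attempts - parcels) / parcels * 100.0


def summarize(avg_attempts):
    """Return per-scenario deviations, their unweighted mean, and a success rate."""
    per_scenario = {n: extra_attempt_pct(n, a) for n, a in avg_attempts.items()}
    mean_extra = sum(per_scenario.values()) / len(per_scenario)
    # Assumption: every parcel is eventually picked exactly once, so the number
    # of successful grasps equals the number of parcels.
    success_pct = sum(avg_attempts) / sum(avg_attempts.values()) * 100.0
    return per_scenario, mean_extra, success_pct


per_scenario, mean_extra, success_pct = summarize(AVG_ATTEMPTS)
```

Whether the mean is taken over scenarios or weighted by parcel count changes the aggregate, which is one reason to report the per-scenario values alongside it.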

While testing, it was observed that most of the failed attempts can be attributed to a grasp pose located on an uneven part where the vacuum gripper cannot achieve the required vacuum to grasp the desired package. This issue could be addressed by a more flexible vacuum cap or increasing the vacuum, whereas the available hardware in this experimental setup was already adjusted as much as possible for these experiments. A customized vacuum system for a specific use-case and package materials could increase the average grasping success rates.

To the best of the authors’ knowledge, there is no relevant literature available on the post office parcel-handling process; however, the available similar systems working with unknown and arbitrarily placed objects show similar precision ratings [22,23,24]. Furthermore, the developed system experimentally demonstrates the viability of the robotic system for deployment in complex scenarios, where the objects can be very similar but can also differ in size and material.

In a real use case, there would be a conveyor belt in place of the container. The system was tested with different pile configurations and parcel types, and, in all cases, the system worked reliably, as can be seen in the video demonstration (https://www.youtube.com/watch?v=djxrbQZtiKk, accessed on 20 June 2023).

During the initial stages of development, several issues, which were later fixed, were encountered:

Very thin parcels such as envelopes were undetectable with low-precision depth cameras. Therefore, the high-precision Zivid camera was chosen.

The distance calibration from the camera to the table has to be precise; otherwise, the pickup point may be seemingly inside the table and the robot driver refuses to grasp at that location as it might see it as a potential collision.

The package could have wrinkles or bends at the grasp point and the suction gripper would therefore be unable to hold the object. In addition, the detected grasp point and the gripper approach direction could turn out to be physically impossible for the robot, of which Dex-Net was not aware (this case mostly occurs with large, bulky parcels near the edges of the pickup zone). To deal with these cases, recovery actions were implemented. In such cases, the system tries to grasp again at a newly calculated point. Due to randomized grasp point selection, the system can eventually achieve a successful grasp, although some time might be wasted.

After these fixes, the system always recovered from failures in the experiments and was able to transfer the packages.
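The recovery behavior described in this section, retrying at a newly calculated and randomly selected grasp point, can be sketched as a bounded retry loop. The function `pick_with_recovery`, its two callbacks, and the `max_attempts` limit are hypothetical; the source does not state whether the real system caps the number of retries.

```python
import random


def pick_with_recovery(detect_candidates, try_grasp, max_attempts=5, rng=None):
    """Retry grasping at freshly computed points until one succeeds.

    detect_candidates() returns a list of grasp candidates for the current scene;
    try_grasp(grasp) returns True when the grasp held.
    Returns (grasp, attempts); grasp is None if no attempt succeeded.
    """
    rng = rng or random.Random()
    attempts = 0
    while attempts < max_attempts:
        candidates = detect_candidates()  # recomputed after every failure
        if not candidates:
            break  # nothing graspable is visible
        grasp = rng.choice(candidates)    # randomized selection avoids repeating a bad point
        attempts += 1
        if try_grasp(grasp):
            return grasp, attempts
    return None, attempts
```

A usage example with a simulated gripper that fails twice before holding:

```python
outcomes = iter([False, False, True])
grasp, attempts = pick_with_recovery(lambda: ["edge", "center"],
                                     lambda g: next(outcomes),
                                     rng=random.Random(0))
```

Bounding the retries trades a guaranteed upper limit on wasted cycle time against the possibility of leaving a parcel for manual handling.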

5. Conclusions

In this paper, an AI-based robotic solution for automating the task of parcel placement was presented, and a series of choices and solutions for implementing such a system with currently available hardware and software components were proposed. The architecture of the proposed system is described in detail. It comprises a Universal Robots UR5 robotic arm, a Zivid RGB-D camera, multiple processing units, Dex-Net grasp pose estimation software, and the robot control system components, all successfully integrated under the ROS framework.

The architecture details of the proposed solution and insights that will facilitate building similar systems have been given. The choices presented in this paper lead to a well-functioning system. It was found that Dex-Net grasp detection provides a high precision of the grasp point. The solution can handle arbitrarily shaped objects, such as postal parcels, without additional training. It easily integrates within any workflow under the ROS framework and successfully addresses the identified challenges. With this solution, installed at parcel delivery centers, human workers can be freed to perform more pleasant work.