In this section, we first present a brief introduction to our overall EC-GAN architecture. Then, we describe the method for the disentanglement of latent space and facial expression control. Finally, we describe the loss functions applied in EC-GAN.

In general, the dataset contains two parts: a ground truth face image $I_{gt}$ and its corresponding emotion label $c_{gt}$. We denote the partially masked version as $I_m$. $M$ is a binary matrix in which 1 indicates the observed region and 0 indicates the missing region to be inpainted; thus, $I_m = I_{gt} \odot M$, where $\odot$ represents the Hadamard product. The generated image in our model is denoted by $I_{gen}$, and the final completed result $I_{out}$ is calculated by

$$I_{out} = I_{gt} \odot M + I_{gen} \odot (1 - M).$$
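The masking and compositing step can be illustrated with a minimal NumPy sketch. It assumes the usual inpainting convention, in which observed pixels are kept from the ground truth and missing pixels are taken from the generator output; the function names `mask_image` and `composite` and the toy values are hypothetical.

```python
import numpy as np

def mask_image(image, mask):
    """Keep observed pixels (mask == 1) and zero out the region to inpaint."""
    return image * mask

def composite(ground_truth, generated, mask):
    """Take known pixels from the ground truth and missing pixels from the generator."""
    return ground_truth * mask + generated * (1 - mask)

# Toy 2x2 single-channel example (values are illustrative only).
ground_truth = np.array([[1.0, 2.0], [3.0, 4.0]])
generated = np.array([[9.0, 8.0], [7.0, 6.0]])
mask = np.array([[1.0, 0.0], [1.0, 0.0]])  # right column is missing

masked = mask_image(ground_truth, mask)
completed = composite(ground_truth, generated, mask)
```

Here the left column of `completed` comes from the ground truth and the right column from the generator output, so the generator only influences the masked region.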

3.1. EC-GAN Overall Image Completion Framework

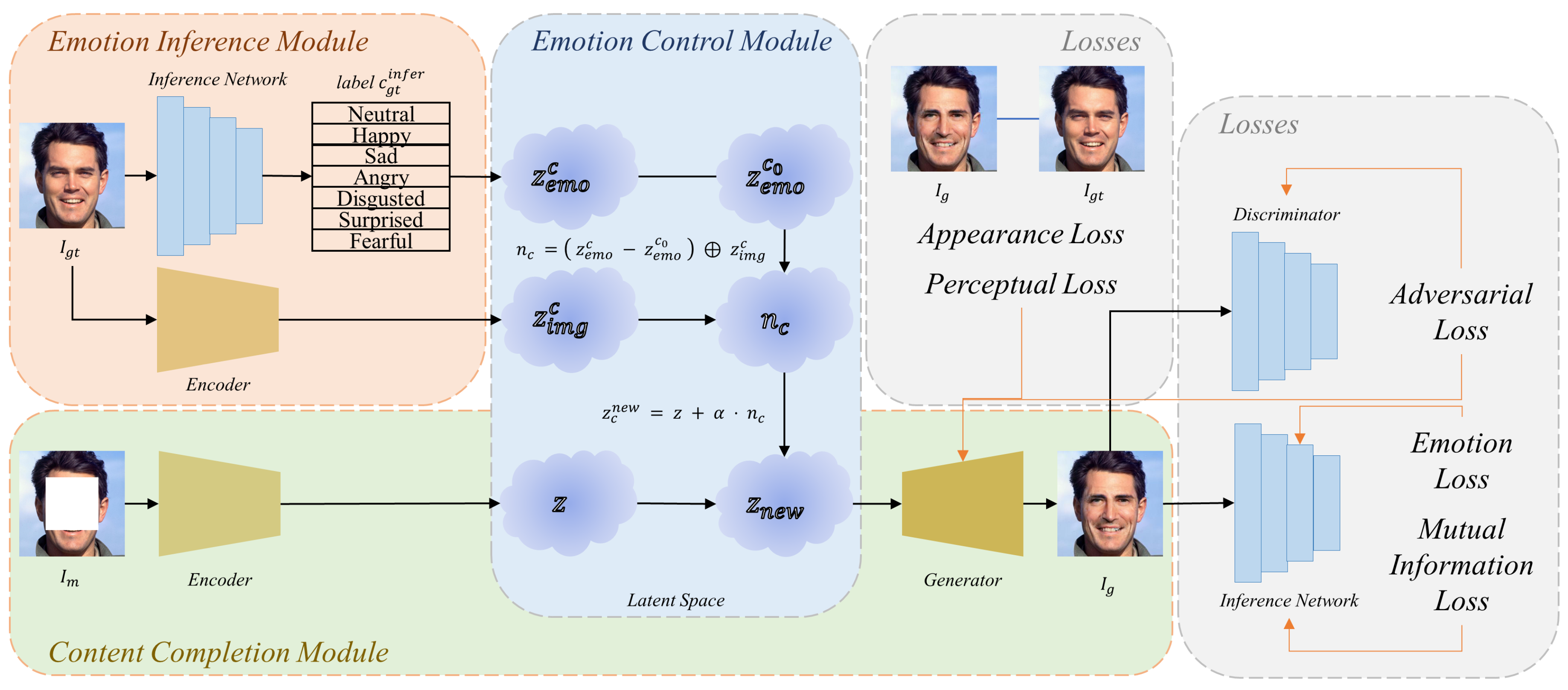

The overall architecture of EC-GAN is illustrated in Figure 1. The content completion module (pale green background) contains an encoder $E$ and a generator $G$. The main network of our generator is similar to PIC-Net [13], but we modify the encoder and generator so that they can handle the additional label information. The encoder maps the visible parts $I_m$ into the native latent space, and the corresponding latent codes are denoted by $z$. The generator reconstructs the image from $z$.

To disentangle the emotion semantics from the native latent space, we propose the emotion inference module (light orange background in Figure 1), which contains two parallel paths: an emotion semantics path and an image content path. The emotion semantics path uses an inference network to infer the emotion labels $\hat{c}_{gt}$ of the ground truth and calculates the information entropy of the emotion, denoted by $z_e$, while the image content path maps the ground truth $I_{gt}$ to the latent codes of content $z_c$. Once the generator $G$ produces a result sample $I_{gen}$, the emotion semantics path maximizes the mutual information between the inferred ground truth emotion labels $\hat{c}_{gt}$ and the generated sample $I_{gen}$, which disentangles the emotion semantics from the latent space (disentanglement between $z_e$ and $z_c$).

Then, the final latent codes are obtained by combining $z_e$ and $z_c$ in the emotion control module (pale blue background in Figure 1). Our model can therefore calculate the emotion vectors $n$, which the emotion control module uses to move the latent codes in the content completion module from $z$ to the edited codes $z'$. More details are given in Section 3.3.

The encoder $E$ in the content completion module is optimized by minimizing the following loss function:

where $x$ is the data sample from the generative distribution and $z$ is the latent variable drawn from the prior distribution. In our EC-GAN model, the input data sample $x$ is the ground truth image $I_{gt}$, and the label $c$ is the inferred ground truth emotion label $\hat{c}_{gt}$. The generator $G$ and the discriminator $D$ of the GAN minimize the loss functions $\mathcal{L}_G$ and $\mathcal{L}_D$, respectively:

Therefore, the combined loss function of our content completion module is:

3.2. The Disentangled Emotion Inference Module

Since the encoder $E$ is built from multiple convolutional layers at different scales, it entangles all the facial features in the latent space, which prevents the generator $G$ from extracting the emotion information from the latent codes. To generate images with customized emotions, we need to disentangle the emotion semantics from the latent space [37]. To address this problem, we designed an emotion inference module that uses mutual information [41] to encode the emotion semantics independently in the latent space.

The emotion inference module contains two parallel paths. One path is the inference network, which infers the labels of images and then calculates the entropy of emotions; the other is the encoder, which embeds the remaining image information into the latent space. The outputs of the two paths are combined in the emotion control module, which is described in Section 3.3. The emotion inference network is always trained from scratch for each number of emotion categories. Meanwhile, to save computation, the emotion inference module shares its architecture and parameters with the discriminator $D$, except for the last output layer.

First, the emotion inference network obtains the inferred emotion labels $\hat{c}_{gt}$ of the ground truth $I_{gt}$ and the inferred generation labels $\hat{c}_{gen}$ of the constructed image $I_{gen}$. To obtain the emotion semantics, we optimize the inference network in a supervised manner. Using the given emotion label $c_{gt}$, we apply the L1 loss to optimize the emotion loss of the inference module:
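A minimal sketch of an L1 emotion loss of this kind is given here. It assumes one-hot ground truth labels and a mean reduction; how the authors reduce the loss and how they weight the ground truth and generated terms is not specified here, and the helper names `one_hot` and `l1_emotion_loss` are hypothetical.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Convert integer class indices to one-hot rows."""
    out = np.zeros((len(labels), num_classes))
    out[np.arange(len(labels)), labels] = 1.0
    return out

def l1_emotion_loss(predicted, target):
    """Mean absolute error between predicted label scores and one-hot targets."""
    return float(np.mean(np.abs(predicted - target)))

# Two samples, three emotion categories (illustrative values).
target = one_hot(np.array([0, 2]), num_classes=3)
predicted = np.array([[0.8, 0.1, 0.1], [0.2, 0.2, 0.6]])
loss = l1_emotion_loss(predicted, target)
```

The same function could be applied to the labels inferred from the ground truth image and to those inferred from the generated image, with the two terms added together.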

Next, according to information theory, mutual information measures how much knowing one random variable reduces the uncertainty about another. If the two variables are independent, the mutual information is zero. In contrast, if two variables are strongly correlated, then one of them can be predicted from the other. Consequently, we propose to maximize the mutual information between the inferred emotion labels $c$ and the generation distribution to strengthen their correlation. In the following, the inferred label $\hat{c}_{gt}$ is written as $c$ for convenience.
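The two limiting cases described above can be checked numerically on small discrete distributions. The sketch below computes mutual information from a joint probability table; the function name `mutual_information` and the toy tables are illustrative and are not part of EC-GAN.

```python
import numpy as np

def mutual_information(joint):
    """Mutual information (in nats) of a discrete joint distribution p(a, b)."""
    joint = np.asarray(joint, dtype=float)
    pa = joint.sum(axis=1, keepdims=True)   # marginal p(a), shape (A, 1)
    pb = joint.sum(axis=0, keepdims=True)   # marginal p(b), shape (1, B)
    nonzero = joint > 0                     # skip zero cells, where p * log p -> 0
    ratio = joint[nonzero] / (pa @ pb)[nonzero]
    return float(np.sum(joint[nonzero] * np.log(ratio)))

# Independent variables: the joint table is the product of its marginals.
independent = np.outer([0.5, 0.5], [0.3, 0.7])
# Perfectly correlated variables: one determines the other.
dependent = np.array([[0.5, 0.0], [0.0, 0.5]])

mi_independent = mutual_information(independent)
mi_dependent = mutual_information(dependent)
```

For the independent table the result is zero up to floating-point error, while for the perfectly correlated table it equals the entropy of either variable, $\log 2$ nats.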

We set the inferred emotion labels $c$ as part of the input of the generator $G$ in advance and refer to this part as the emotion entropy, or the latent codes of emotion semantics $z_e$. The latent codes of emotions $z_e$ are calculated by a replication strategy in which we expand the $m$-dimensional label to match the latent space dimension, which is written as the mapping function:

where $m$ is the number of emotion categories and $d$ is the dimension of the latent space. By doing so, the generator $G$ can produce samples that contain the information from the inferred emotion labels $c$ and that change expression as $c$ changes. Then, we can calculate the mutual information $I(c; I_{gen})$.
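One possible reading of the replication strategy is to tile the label vector until it fills the latent dimension. The sketch below makes that assumption explicit; the exact replication scheme used by the authors (tiling versus repeating each entry, and the handling of a latent dimension that is not a multiple of the number of categories) is not recoverable from the text, and `replicate_labels` is a hypothetical name.

```python
import numpy as np

def replicate_labels(labels, latent_dim):
    """Tile an m-dimensional label vector until it fills latent_dim entries."""
    labels = np.asarray(labels, dtype=float)
    m = labels.shape[-1]
    repeats = -(-latent_dim // m)        # ceiling division
    tiled = np.tile(labels, repeats)     # repeat along the last axis
    return tiled[..., :latent_dim]       # truncate to the latent dimension

# Three emotion categories expanded to an 8-dimensional latent code.
z_e = replicate_labels([0.0, 1.0, 0.0], latent_dim=8)
```

Because every copy of the label occupies a fixed set of latent positions, changing the label changes a predictable part of the generator input.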

However, the mutual information term $I(c; I_{gen})$ is hard to maximize directly because it requires the posterior $P(c \mid I_{gen})$ [36]. Therefore, we approximate it with a lower bound by defining an auxiliary distribution $Q(c \mid I_{gen})$:

In fact, the entropy of the inferred emotion labels $H(c)$ can be treated as a constant because it depends heavily on the ground truth labels $c_{gt}$. Thus, the mutual information loss can be defined as follows and minimized during training:
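For reference, the standard variational lower bound of this kind, as used in InfoGAN, is sketched below. Writing $x$ for the generated sample and $Q(c \mid x)$ for the auxiliary distribution is an assumption made here for illustration, and the authors' exact formulation may differ in notation or in additional terms.

```latex
\begin{aligned}
I(c; x) &= H(c) - H(c \mid x) \\
        &= \mathbb{E}_{x}\Big[\mathbb{E}_{c' \sim P(c \mid x)}\big[\log P(c' \mid x)\big]\Big] + H(c) \\
        &= \mathbb{E}_{x}\Big[D_{\mathrm{KL}}\big(P(\cdot \mid x)\,\|\,Q(\cdot \mid x)\big)
           + \mathbb{E}_{c' \sim P(c \mid x)}\big[\log Q(c' \mid x)\big]\Big] + H(c) \\
        &\ge \mathbb{E}_{x}\Big[\mathbb{E}_{c' \sim P(c \mid x)}\big[\log Q(c' \mid x)\big]\Big] + H(c).
\end{aligned}
```

The inequality holds because the KL divergence is non-negative. With $H(c)$ treated as a constant, maximizing the bound amounts to minimizing the negative expected log-likelihood $-\mathbb{E}_{c,\,x}\big[\log Q(c \mid x)\big]$.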

With the above analysis, we can divide the latent space into two independent parts: one represents the emotion semantics $z_e$, which comes from the inference module, and the other represents the remaining image semantics $z_c$, which comes from the encoder. The process is visualized in Figure 2.

3.3. Emotion Control Module

Now that the emotion inference module has disentangled the emotion semantics from the native latent space, we can edit and modify these semantics independently. To do this, we propose an emotion control module to customize the expression of the generated face.

During training, the latent codes of each emotion from the emotion inference module are recorded as $z_e^{i}$ for each emotion $i$, and the neutral expression codes are denoted by $z_e^{neu}$. Take the emotion happy as an example: when the smile gradually fades from the face, it presents a calm face, which is considered neutral. Thus, we regard the neutral expression as the starting point for all other expressions. Then, the emotion vector can be calculated by:

As a result, we can conveniently edit the original latent codes $z$ using the following linear transformation:

where setting the directional parameter $\alpha > 0$ moves the generation in the positive direction, e.g., from neutral to smiling. Unlike InterFaceGAN, our model changes only the latent codes of emotions, which were separated from the native latent space in Section 3.2, while the other facial semantics are preserved. The process is visualized in Figure 3.
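The emotion control step can be sketched as follows. The sketch assumes that an emotion vector is the difference between the mean recorded code of an emotion and the mean neutral code, and that editing adds a scaled copy of that vector to the emotion part of the latent code, in the style of InterFaceGAN. Both the averaging and the names `emotion_vector`, `edit_emotion_codes`, and `alpha` are assumptions rather than the authors' exact definitions.

```python
import numpy as np

def emotion_vector(emotion_codes, neutral_codes):
    """Direction from the mean neutral code to the mean code of one emotion."""
    return np.mean(emotion_codes, axis=0) - np.mean(neutral_codes, axis=0)

def edit_emotion_codes(z_emotion, direction, alpha):
    """Move the emotion part of the latent code along `direction` by step `alpha`."""
    return z_emotion + alpha * direction

# Toy 4-dimensional emotion codes, two recorded samples per class.
neutral = np.array([[0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 1.0, 0.0]])
happy = np.array([[1.0, 0.0, 0.0, 0.0], [1.0, 0.0, 0.0, 0.0]])

n_happy = emotion_vector(happy, neutral)
z_start = neutral[0]
z_half = edit_emotion_codes(z_start, n_happy, alpha=0.5)   # halfway to happy
z_full = edit_emotion_codes(z_start, n_happy, alpha=1.0)   # reaches the happy code
```

Only the emotion codes are modified in this sketch; the content codes would be passed to the generator unchanged, which is what keeps the other facial semantics fixed.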

3.4. Loss Function

In this section, we summarize the losses used to train our model. The joint loss is composed of five parts. In addition to the content completion loss $\mathcal{L}_{cc}$, the emotion inference loss $\mathcal{L}_{emo}$, and the mutual information loss $\mathcal{L}_{MI}$ described earlier, we also introduce the appearance loss [13] $\mathcal{L}_{app}$ and the perceptual loss [25] $\mathcal{L}_{per}$ to further improve the photorealism and the local semantic consistency of the completed results. Concretely, $\mathcal{L}_{cc}$ regularizes the consistency between pairs of distributions, $\mathcal{L}_{emo}$ ensures the correctness of the emotion inference, and $\mathcal{L}_{MI}$ encourages the encoder to disentangle the latent space. $\mathcal{L}_{app}$ adds more fidelity to the generation outputs, and $\mathcal{L}_{per}$ measures the distance between the features of the ground truth and those of the generated images.
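A joint objective with several terms is commonly formed as a weighted sum. The sketch below shows that pattern only; the term names, the example values, and the weights are hypothetical and are not taken from the paper.

```python
def joint_loss(losses, weights=None):
    """Weighted sum of named loss terms; terms without a weight default to 1.0."""
    weights = weights or {}
    return sum(weights.get(name, 1.0) * value for name, value in losses.items())

# Illustrative per-term values for one training step.
terms = {
    "content": 0.9,
    "emotion": 0.2,
    "mutual_info": 0.1,
    "appearance": 0.5,
    "perceptual": 0.3,
}
total_unweighted = joint_loss(terms)
total_weighted = joint_loss(terms, {"appearance": 2.0})
```

Keeping the terms in a dictionary makes it easy to log each component separately and to tune one weight without touching the others.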

The appearance loss is calculated as

and the perceptual loss is calculated as follows

where $\phi_l(I_{gt})$ and $\phi_l(I_{gen})$ are the extracted feature stacks for layer $l$.
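A perceptual loss of this general form can be sketched on precomputed feature stacks. The choice of an L1 distance, the unweighted sum over layers, and the name `perceptual_loss` are assumptions; the norm and the layer weights used by the authors are not shown here, and in practice the features would come from a pretrained network rather than from toy arrays.

```python
import numpy as np

def perceptual_loss(features_real, features_fake):
    """Sum over layers of the mean absolute difference between feature stacks."""
    return sum(
        float(np.mean(np.abs(real - fake)))
        for real, fake in zip(features_real, features_fake)
    )

# Toy feature stacks for two layers, shaped (channels, height, width).
features_gt = [np.ones((4, 8, 8)), np.zeros((8, 4, 4))]
features_gen = [np.zeros((4, 8, 8)), np.full((8, 4, 4), 0.5)]
loss = perceptual_loss(features_gt, features_gen)
```

Averaging within each layer before summing keeps layers with many activations from dominating the total.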

The full loss in our model is