Fault Diagnosis Method of Rolling Bearing Based on CBAM_ResNet and ACON Activation Function

Abstract

1. Introduction

- (1) A novel CNN named CBAM_ResNet is proposed, and the ACON activation function is used in it. The method does not rely on expert experience for fault diagnosis, and it achieves 100% diagnostic accuracy on the experimental data.

- (2) The effects of realistic conditions such as noise and load variation on the method are considered. Compared with other published fault diagnosis methods under the same conditions, our method performs better.

- (3) Two optimization techniques are employed: embedding the CBAM attention mechanism into the residual block and introducing the ACON activation function. Together they improve fault diagnosis performance, maintaining high diagnostic accuracy under noise interference and generalizing across load variations.

2. Materials and Methods

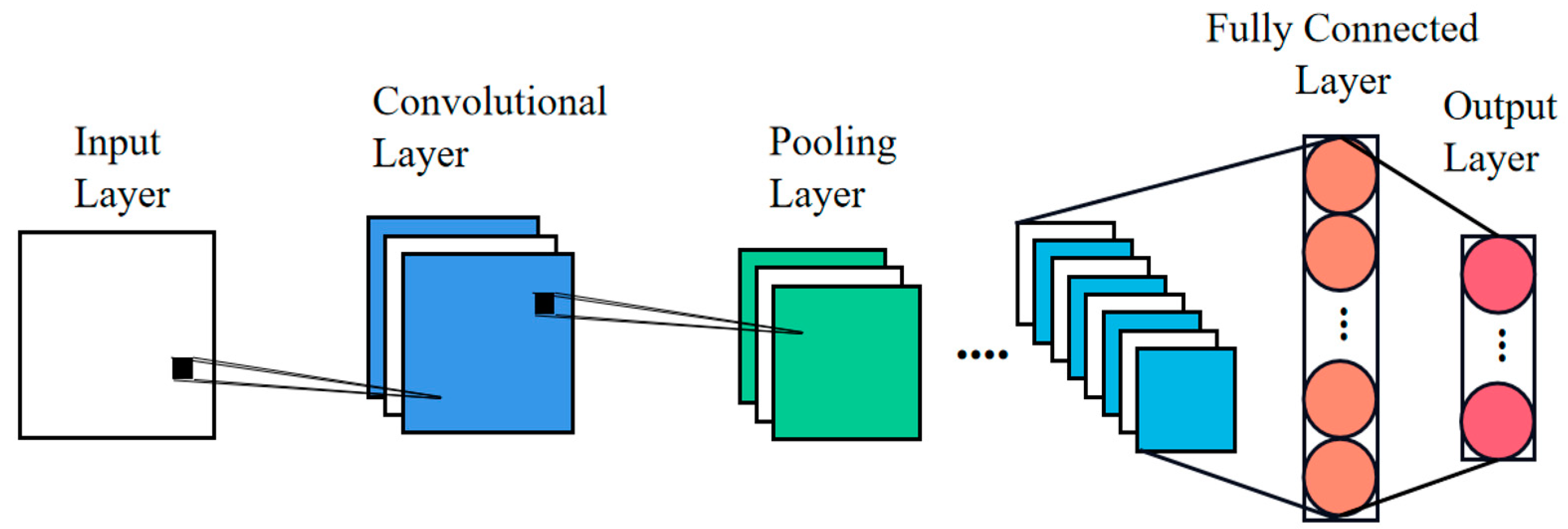

2.1. CNN

2.1.1. Convolution Layer

2.1.2. Pooling Layer

2.1.3. ReLU Activation Function

2.1.4. Fully Connected Layer

2.2. Residual Network

2.3. ACON Activation Function

2.3.1. ACON-C
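
As a minimal sketch, assuming the ACON-C definition given by Ma et al. in the cited "Activate or Not" paper, f(x) = (p1 − p2)·x·σ(β(p1 − p2)·x) + p2·x, a scalar version can be written in plain Python. The function name `acon_c` and the default parameter values are illustrative assumptions, not the settings used in this paper; in a network, p1, p2, and β would be learnable parameters.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def acon_c(x: float, p1: float = 1.0, p2: float = 0.0, beta: float = 1.0) -> float:
    """Scalar ACON-C: (p1 - p2) * x * sigmoid(beta * (p1 - p2) * x) + p2 * x."""
    d = (p1 - p2) * x
    return d * sigmoid(beta * d) + p2 * x


# With p1 = 1 and p2 = 0 the function reduces to Swish, x * sigmoid(beta * x).
print(acon_c(1.0))
# A large beta approaches max(p1 * x, p2 * x); beta = 0 gives the mean of the two.
print(acon_c(-2.0, p1=1.0, p2=0.25, beta=50.0))
```

The switching factor β is what lets the unit move between a linear response and a ReLU-like response, which is the "activate or not" behavior the name refers to.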

2.3.2. Meta ACON-C

2.4. CBAM

2.4.1. Channel Attention Module

2.4.2. Spatial Attention Module

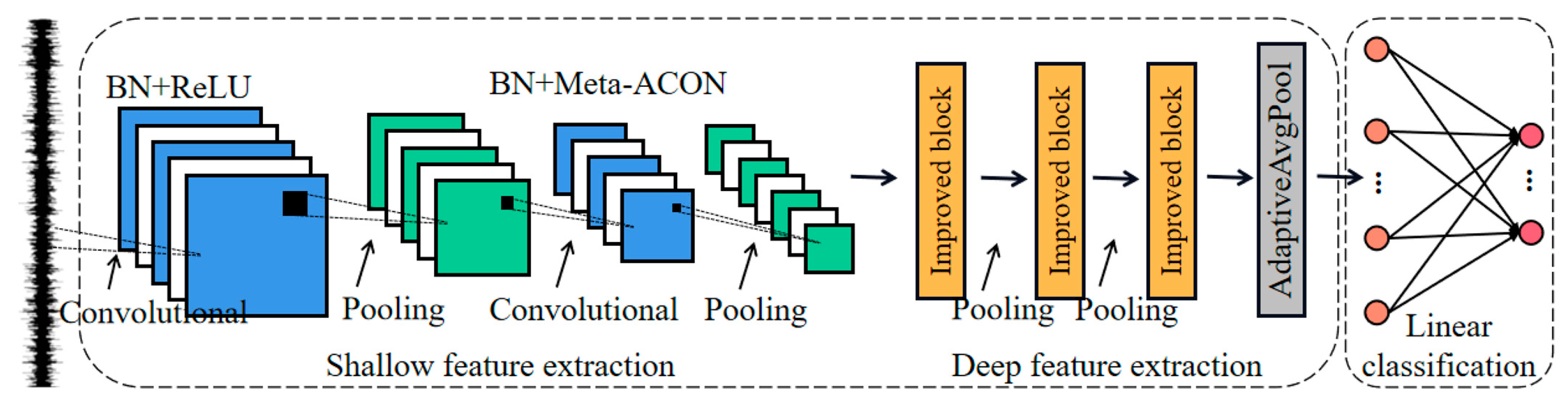

2.5. Proposed Methodology

2.5.1. Proposed Model

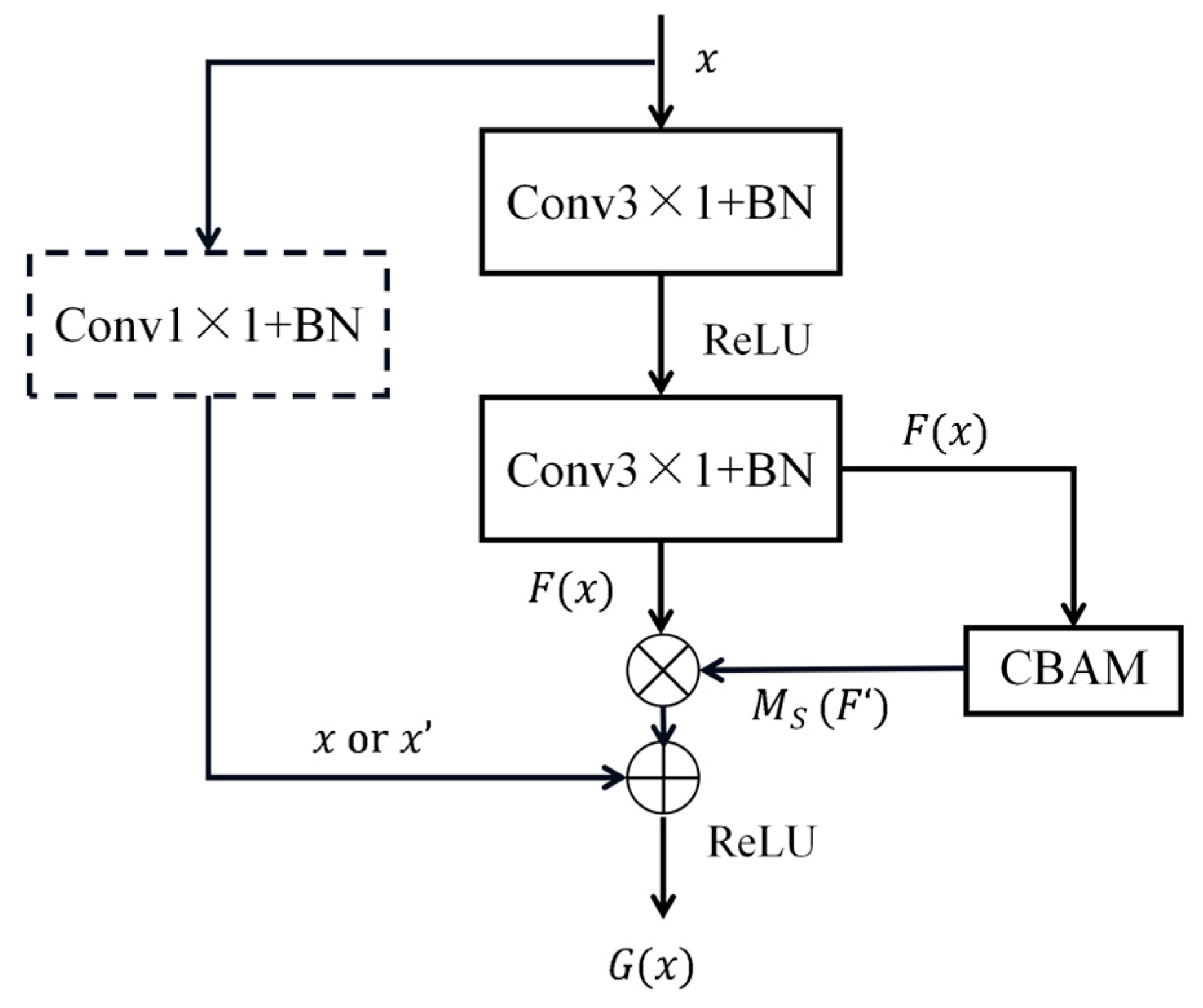

2.5.2. Improved Residual Block

2.5.3. Model Parameters

2.6. Experimental Data

3. Experimental Results and Discussion

- Step 1: Use sliding window sampling to build the training, validation, and testing sets.

- Step 2: Construct the neural network model described in Section 2.5.

- Step 3: Feed samples from the training set into the model; forward and backward propagation update the model parameters.

- Step 4: At the end of each iteration, use the validation set to observe and record the fault diagnosis results of the current model.

- Step 5: Check whether the total number of iterations has been reached. If so, stop iterating and save the model; otherwise, repeat Steps 3 and 4.

- Step 6: Input samples from the testing set, which are not involved in the training process, into the saved model.

- Step 7: Obtain the fault diagnosis results.
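
The sliding window sampling in Step 1 can be sketched as follows. This is an illustrative version only: the function name `sliding_windows`, the toy signal, and the window and stride values are assumptions, since the actual segment length and overlap are not given in this part of the text.

```python
from typing import List, Sequence


def sliding_windows(signal: Sequence[float], window: int, stride: int) -> List[List[float]]:
    """Cut a 1-D signal into overlapping segments of length `window`."""
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    last_start = len(signal) - window
    return [list(signal[i:i + window]) for i in range(0, last_start + 1, stride)]


# Toy signal: 10 points, window of 4, stride of 2 -> starts at 0, 2, 4, 6.
segments = sliding_windows(list(range(10)), window=4, stride=2)
print(len(segments))   # 4
print(segments[-1])    # [6, 7, 8, 9]
```

A stride smaller than the window produces overlapping segments, which increases the number of samples obtained from a vibration record of fixed length.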

3.1. Diagnostic Results of the Proposed Model

3.2. Diagnostic Results under Noise Interference
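
Noise levels such as the 2 to 10 dB settings reported in the conclusions are commonly produced by adding noise scaled to a target signal-to-noise ratio, SNR(dB) = 10·log10(P_signal / P_noise). The sketch below assumes additive white Gaussian noise, which is not confirmed by this text; the function names and the sine test signal are hypothetical.

```python
import math
import random
from typing import List, Sequence


def add_noise(signal: Sequence[float], snr_db: float, rng: random.Random) -> List[float]:
    """Add zero-mean Gaussian noise so that the expected SNR equals snr_db."""
    signal_power = sum(v * v for v in signal) / len(signal)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    sigma = math.sqrt(noise_power)
    return [v + rng.gauss(0.0, sigma) for v in signal]


def measured_snr_db(clean: Sequence[float], noisy: Sequence[float]) -> float:
    """SNR in dB computed from a clean signal and its noisy version."""
    signal_power = sum(c * c for c in clean)
    noise_power = sum((n - c) ** 2 for c, n in zip(clean, noisy))
    return 10.0 * math.log10(signal_power / noise_power)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
clean = [math.sin(2 * math.pi * 5 * t / 1000) for t in range(20000)]
for snr in (2, 4, 6, 8, 10):
    noisy = add_noise(clean, snr, rng)
    print(snr, round(measured_snr_db(clean, noisy), 2))
```

Lower SNR values correspond to stronger noise, so 2 dB is the hardest of the five settings.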

3.3. Diagnostic Results under Variable Load

3.4. Discussion

- (1) Base: the CBAM attention mechanism is not embedded in the residual block, and the Meta ACON-C activation function is not used.

- (2) Base + A: the CBAM attention mechanism is embedded in the residual block, but the Meta ACON-C activation function is not used.

- (3) Base + B: the Meta ACON-C activation function is used in the second feature extraction layer of the model, but the CBAM attention mechanism is not embedded in the residual block.

- (4) Base + A + B: the proposed model, which uses both techniques.

3.4.1. Discussion 1

3.4.2. Discussion 2

4. Conclusions

- (1) To diagnose the location and severity of bearing faults, a fault diagnosis model based on CBAM_ResNet and the ACON activation function is proposed. It achieves 100% diagnostic accuracy on the experimental data when noise interference and variable loads are not considered.

- (2) Under five noise levels from 2 to 10 dB in increments of 2 dB, the average diagnostic accuracy of our method is 97.68%, which is 7.10, 6.24, and 2.78 percentage points higher than the three other published methods, respectively.

- (3) In six groups of experiments with 10 dB noise in the test set and a variable load domain, the diagnostic accuracy of our method is 93.93%, exceeding the comparison methods by 3.18, 4.68, and 3.32 percentage points, respectively.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Miao, Y.; Zhao, M.; Lin, J.; Lei, Y. Application of an improved maximum correlated kurtosis deconvolution method for fault diagnosis of rolling element bearings. Mech. Syst. Signal Process. 2017, 92, 173–195.

- Sharma, S.; Tiwari, S.K. A novel feature extraction method based on weighted multi-scale fluctuation based dispersion entropy and its application to the condition monitoring of rotary machines. Mech. Syst. Signal Process. 2022, 171, 108909.

- Khadim, M.S.; Kuldeep, S.; Vinod, K.G. Health monitoring and fault diagnosis in induction motor—A review. Int. J. Adv. Res. Elect. Electron. Instrum. Eng. 2014, 3, 6549–6565.

- Shao, H.; Jiang, H.; Lin, Y. A novel method for intelligent fault diagnosis of rolling bearings using ensemble deep auto-encoders. Mech. Syst. Signal Process. 2018, 102, 278–297.

- Liu, R.; Yang, B.; Enrico, Z.; Chen, X. Artificial intelligence for fault diagnosis of rotating machinery: A review. Mech. Syst. Signal Process. 2018, 108, 33–47.

- Habbouche, H.; Amirat, Y.; Benkedjouh, T.; Benbouzid, M. Bearing Fault Event-Triggered Diagnosis Using a Variational Mode Decomposition-Based Machine Learning Approach. IEEE Trans. Energy Conver. 2022, 37, 466–474.

- Jaouher, B.A.; Nader, F.; Lotfi, S.; Brigitte, C.-M.; Farhat, F. Application of empirical mode decomposition and artificial neural network for automatic bearing fault diagnosis based on vibration signals. Appl. Acoust. 2015, 89, 16–27.

- Hou, X.; Hu, P.; Du, W.; Gong, X.; Wang, H.; Meng, F. Fault diagnosis of rolling bearing based on multi-scale one-dimensional convolutional neural network. IOP Conf. Ser. Mater. Sci. Eng. 2021, 1, 1207.

- Hu, B.; Liu, J.; Zhao, R.; Xu, Y.; Huo, T. A New Fault Diagnosis Method for Unbalanced Data Based on 1DCNN and L2-SVM. Appl. Sci. 2022, 12, 9880.

- Tang, G.; Pang, B.; Tian, T.; Zhou, C. Fault Diagnosis of Rolling Bearings Based on Improved Fast Spectral Correlation and Optimized Random Forest. Appl. Sci. 2018, 8, 1859.

- Xiao, M.; Liao, Y.; Bartos, P.; Filip, M.; Geng, G.; Jiang, Z. Fault diagnosis of rolling bearing based on back propagation neural network optimized by cuckoo search algorithm. Multimed. Tools Appl. 2021, 81, 1567–1587.

- Tian, A.; Zhang, Y.; Ma, C.; Chen, H.; Sheng, W.; Zhou, S. Noise-robust machinery fault diagnosis based on self-attention mechanism in wavelet domain. Measurement 2023, 207, 112327.

- Saucedo-Dorantes, J.J.; Arellano-Espitia, F.; Delgado-Prieto, M.; Osornio-Rios, R.A. Diagnosis Methodology Based on Deep Feature Learning for Fault Identification in Metallic, Hybrid and Ceramic Bearings. Sensors 2021, 21, 5832.

- Yang, J.; Liu, J.; Xie, J.; Wang, C.; Ding, T. Conditional GAN and 2-D CNN for Bearing Fault Diagnosis with Small Samples. IEEE Trans. Instrum. Meas. 2021, 70, 1–12.

- Wen, L.; Li, X.; Gao, L.; Zhang, Y. A New Convolutional Neural Network-Based Data-Driven Fault Diagnosis Method. IEEE Trans. Ind. Electron. 2018, 65, 5990–5998.

- Jin, G.; Zhu, T.; Akram, M.W.; Jin, Y.; Zhu, C. An Adaptive Anti-Noise Neural Network for Bearing Fault Diagnosis under Noise and Varying Load Conditions. IEEE Access 2020, 8, 74793–74807.

- Cao, J.; He, Z.; Wang, J.; Yu, P. An Antinoise Fault Diagnosis Method Based on Multiscale 1DCNN. Shock Vib. 2020, 2020, 8819313.

- Zhang, W.; Peng, G.; Li, C.; Chen, Y.; Zhang, Z. A New Deep Learning Model for Fault Diagnosis with Good Anti-Noise and Domain Adaptation Ability on Raw Vibration Signals. Sensors 2017, 17, 425.

- Liu, Z.; Wang, H.; Liu, J.; Qin, Y.; Peng, D. Multitask Learning Based on Lightweight 1DCNN for Fault Diagnosis of Wheelset Bearings. IEEE Trans. Instrum. Meas. 2021, 70, 1–11.

- Zhang, W.; Li, C.; Peng, G.; Chen, Y.; Zhang, Z. A deep convolutional neural network with new training methods for bearing fault diagnosis under noisy environment and different working load. Mech. Syst. Signal Process. 2018, 100, 439–453.

- Fawaz, H.I.; Forestier, G.; Weber, J.; Idoumghar, L.; Muller, P.A. Deep learning for time series classification: A review. Data Min. Knowl. Disc. 2019, 33, 917–963.

- Hao, X.; Zheng, Y.; Lu, L.; Pan, H. Research on Intelligent Fault Diagnosis of Rolling Bearing Based on Improved Deep Residual Network. Appl. Sci. 2021, 11, 10889.

- Feng, Z.; Wang, S.; Yu, M. A fault diagnosis for rolling bearing based on multilevel denoising method and improved deep residual network. Digit. Signal Process. 2023, 140, 104106.

- Lv, H.; Chen, J.; Pan, T.; Zhang, T.; Feng, Y.; Liu, S. Attention mechanism in intelligent fault diagnosis of machinery: A review of technique and application. Measurement 2022, 199, 111594.

- Tong, J.; Tang, S.; Wu, Y.; Pan, H.; Zheng, J. A fault diagnosis method of rolling bearing based on improved deep residual shrinkage networks. Measurement 2023, 206, 112282.

- Wang, X.; Liu, X.; Wang, J.; Xiong, X.; Bi, S.; Deng, Z. Improved Variational Mode Decomposition and One-Dimensional CNN Network with Parametric Rectified Linear Unit (PReLU) Approach for Rolling Bearing Fault Diagnosis. Appl. Sci. 2022, 12, 9324.

- Zhang, T.; Liu, S.; Wei, Y.; Zhang, H. A novel feature adaptive extraction method based on deep learning for bearing fault diagnosis. Measurement 2021, 185, 110030.

- Huang, Y.; Liao, A.; Hu, D.; Shi, W.; Zheng, S. Multi-scale convolutional network with channel attention mechanism for rolling bearing fault diagnosis. Measurement 2022, 203, 111935.

- Ruan, D.; Wang, J.; Yan, J.; Gühmann, G. CNN parameter design based on fault signal analysis and its application in bearing fault diagnosis. Adv. Eng. Inform. 2023, 55, 101877.

- Zhang, X.; He, C.; Lu, Y.; Chen, B.; Zhu, L.; Zhang, L. Fault diagnosis for small samples based on attention mechanism. Measurement 2022, 187, 110242.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Ma, N.; Zhang, X.; Liu, M.; Sun, J. Activate or Not: Learning Customized Activation. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 8028–8038.

- Xi, X.; Wu, Y.; Xia, C.; He, S. Feature fusion for object detection at one map. Image Vision Comput. 2022, 123, 104466.

- Chen, X. The Advance of Deep Learning and Attention Mechanism. In Proceedings of the 2022 International Conference on Electronics and Devices, Computational Science (ICEDCS), Marseille, France, 20–22 September 2022; pp. 318–321.

- Zhao, M.; Zhong, S.; Fu, X.; Tang, B.; Pecht, M. Deep Residual Shrinkage Networks for Fault Diagnosis. IEEE Trans. Ind. Inform. 2020, 16, 4681–4690.

- Liu, J.; Wang, X.; Wu, S.; Wan, L.; Xie, F. Wind turbine fault detection based on deep residual networks. Expert Syst. Appl. 2023, 213, 119102.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Installing Previous Versions of PyTorch. Available online: https://pytorch.org/get-started/previous-versions/ (accessed on 15 June 2023).

- Smith, W.A.; Randall, R.B. Rolling element bearing diagnostics using the Case Western Reserve University data: A benchmark study. Mech. Syst. Signal Process. 2015, 64–65, 100–131.

- Sharma, S.; Tiwari, S.K.; Singh, S. Integrated approach based on flexible analytical wavelet transform and permutation entropy for fault detection in rotary machines. Measurement 2021, 169, 108389.

- Wang, W. Study on Motor Fault Diagnosis Method Based on Multi-Scale Convolutional Neural Network. Master’s Thesis, China University of Mining and Technology, Suzhou, China, 2020.

- Li, X.; Jia, X.; Zhang, W.; Ma, H.; Luo, Z.; Li, X. Intelligent cross-machine fault diagnosis approach with deep auto-encoder and domain adaptation. Neurocomputing 2020, 383, 235–247.

- Zhang, Z.; Chen, H.; Li, S.; An, Z.; Wang, J. A novel geodesic flow kernel based domain adaptation approach for intelligent fault diagnosis under varying working condition. Neurocomputing 2020, 376, 54–64.

- Qian, W.; Li, S.; Yi, P.; Zhang, K. A novel transfer learning method for robust fault diagnosis of rotating machines under variable working conditions. Measurement 2019, 138, 514–525.

- Maaten, L.; Hinton, G. Visualizing High-Dimensional Data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

| Network Structure | Parameter Setting | Output Size | Activation Function |
|---|---|---|---|
| Conv_1 | k = 64, s = 16, p = 24 | 32 × 64 | ReLU |
| MaxPooling_1 | k = s = 2 | 32 × 32 | - |
| Conv_2 | k = 3, s = 1, p = 1 | 32 × 32 | Meta ACON-C |
| MaxPooling_2 | k = s = 2 | 32 × 16 | - |
| Improved block with CBAM | - | 32 × 16 | ReLU |
| MaxPooling_3 | k = s = 2 | 32 × 8 | - |
| Improved block with CBAM | - | 64 × 8 | ReLU |
| MaxPooling_4 | k = s = 2 | 64 × 4 | - |
| Improved block with CBAM | - | 128 × 4 | ReLU |
| Adaptive AvgPooling | - | 128 × 1 | - |
| Fully connected layer | in = 128, out = 10 | 10 | - |
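
The output sizes in the first rows of the table above can be checked with the standard output-length formula for 1-D convolution and pooling, floor((L + 2p − k) / s) + 1. The sketch below assumes that k, s, and p denote kernel size, stride, and padding, and that the input segment has 1024 points; that input length is an assumption chosen because it reproduces the listed sizes, and it is not stated in the table.

```python
def out_len(length: int, kernel: int, stride: int, padding: int = 0) -> int:
    """Output length of a 1-D convolution or pooling layer (no dilation)."""
    return (length + 2 * padding - kernel) // stride + 1


INPUT_LEN = 1024  # assumption: segment length, not stated in the table

# (name, kernel, stride, padding, output channels) for layers with explicit k, s, p.
layers = [
    ("Conv_1", 64, 16, 24, 32),
    ("MaxPooling_1", 2, 2, 0, 32),
    ("Conv_2", 3, 1, 1, 32),
    ("MaxPooling_2", 2, 2, 0, 32),
]

length = INPUT_LEN
shapes = {}
for name, k, s, p, channels in layers:
    length = out_len(length, k, s, p)
    shapes[name] = (channels, length)
    print(f"{name}: {channels} x {length}")
```

Under these assumptions the printed shapes are 32 x 64, 32 x 32, 32 x 32, and 32 x 16, matching the corresponding rows.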

| Motor Load | 0HP | 1HP | 2HP | 3HP |
|---|---|---|---|---|
| Approx. Speed | 1797 r/min | 1772 r/min | 1750 r/min | 1730 r/min |

| Fault Position | Fault Size (Inches) | Load: 0HP | Load: 1HP | Load: 2HP | Load: 3HP |
|---|---|---|---|---|---|
| Normal | / | *+ | *+ | *+ | *- |
| IRF | 0.007 | *+ | *+ | *+ | *- |
| IRF | 0.014 | *+ | *+ | *+ | *- |
| IRF | 0.021 | *+ | *+ | *+ | *- |
| IRF | 0.028 | *- | *- | *- | *- |
| BF | 0.007 | *+ | *+ | *+ | *- |
| BF | 0.014 | *+ | *+ | *+ | *- |
| BF | 0.021 | *+ | *+ | *+ | *- |
| BF | 0.028 | *- | *- | *- | *- |
| ORF | 0.007 | *+ | *+ | *+ | *- |
| ORF | 0.014 | *+ | *+ | *+ | *- |
| ORF | 0.021 | *+ | *+ | *+ | *- |
| ORF | 0.028 | - | - | - | - |

| Fault Size (Inches) | Fault Position | Train/Val/Test | Fault Type Label |
|---|---|---|---|
| None | Normal | 240/80/80 | 0 |
| 0.007 | IRF | 240/80/80 | 1 |
| 0.007 | RBF | 240/80/80 | 2 |
| 0.007 | ORF | 240/80/80 | 3 |
| 0.014 | IRF | 240/80/80 | 4 |
| 0.014 | RBF | 240/80/80 | 5 |
| 0.014 | ORF | 240/80/80 | 6 |
| 0.021 | IRF | 240/80/80 | 7 |
| 0.021 | RBF | 240/80/80 | 8 |
| 0.021 | ORF | 240/80/80 | 9 |

| Load Condition of Data | Accuracy (%) |
|---|---|
| 0 HP | 100 |
| 1 HP | 100 |
| 2 HP | 100 |
| 0–2 HP | 100 |

| Reference | Domain of Input Data | End-to-End | Average Accuracy (%) |
|---|---|---|---|
| Wang et al. [41] | Time | Yes | 90.58 |
| Hou et al. [8] | Frequency | No | 91.44 |
| Zhang et al. [15] | Time | Yes | 94.90 |
| Proposed model | Time | Yes | 97.68 |

| Fault Type Label | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|
| 0 | 98.76 | 100 | 99.38 |
| 1 | 100 | 100 | 100 |
| 2 | 100 | 95 | 97.43 |
| 3 | 100 | 100 | 100 |
| 4 | 84.21 | 100 | 91.43 |
| 5 | 91.95 | 100 | 95.81 |
| 6 | 100 | 75 | 85.71 |
| 7 | 100 | 100 | 100 |
| 8 | 98.76 | 100 | 99.38 |
| 9 | 100 | 100 | 100 |
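
The F1 column in the table above follows from the precision and recall columns through the usual harmonic mean, F1 = 2PR / (P + R). As a small worked check (the helper name `f1_score` is illustrative), a few rows can be recomputed in plain Python:

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units in, same units out)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (label, precision %, recall %) taken from the table above.
rows = [(0, 98.76, 100.0), (4, 84.21, 100.0), (5, 91.95, 100.0), (6, 100.0, 75.0)]
for label, p, r in rows:
    print(label, round(f1_score(p, r), 2))
```

For these four labels the recomputed values are 99.38, 91.43, 95.81, and 85.71, in agreement with the table. Label 6 shows how a recall of 75% pulls the F1 score down even when precision is 100%.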

| Domain Type | Source Domain | Target Domain |
|---|---|---|
| Description | Labeled signal samples under a single load | Unlabeled signal samples under another load |
| Domain details | Train samples of 0 HP | Test samples of 1 HP; test samples of 2 HP |
| Domain details | Train samples of 2 HP | Test samples of 1 HP; test samples of 0 HP |
| Domain details | Train samples of 1 HP | Test samples of 0 HP; test samples of 2 HP |

Objective of the experiments: generalize the fault diagnosis knowledge learned from the source domain to the target domain, and diagnose samples in the target domain.
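
The source and target domains listed above amount to every ordered pair of distinct loads among 0 HP, 1 HP, and 2 HP, which gives the six groups of variable-load experiments. A short sketch that enumerates them (variable names are illustrative):

```python
from itertools import permutations

loads = ["0 HP", "1 HP", "2 HP"]

# Every ordered (source, target) pair with different loads.
tasks = list(permutations(loads, 2))
for source, target in tasks:
    print(f"train on {source} -> test on {target}")
print(len(tasks))  # 6
```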

| Model Name | Optimization Technique: Embedding CBAM | Optimization Technique: Using Meta ACON-C |
|---|---|---|
| Base | × | × |
| Base + A | √ | × |
| Base + B | × | √ |
| Base + A + B (proposed model) | √ | √ |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Qin, H.; Pan, J.; Li, J.; Huang, F. Fault Diagnosis Method of Rolling Bearing Based on CBAM_ResNet and ACON Activation Function. Appl. Sci. 2023, 13, 7593. https://doi.org/10.3390/app13137593
