1. Introduction

The automated recognition of plant nematodes is of significant value for pest control, soil ecology, biogeography, habitat conservation, and climate maintenance [1,2,3]. However, accurate identification is challenging owing to their high phenotypic diversity [4] and ambiguous diagnostic features [5]. Traditional identification of plant nematodes relies on manually examining their morphological and molecular characteristics [6,7,8,9]. Because manual identification is time-consuming, it is unsuitable for large-scale ecological data and difficult for non-specialists. Therefore, developing automated methods that identify nematodes efficiently and reliably is crucial.

Automated classification approaches in biology combine computer-vision algorithms and machine-learning techniques [10,11]. Convolutional neural networks (CNNs) [12,13,14], which extract features automatically, have greatly improved diagnostic accuracy [15,16] by replacing tedious and imprecise manual feature extraction. Nematologists have demonstrated that automated solutions that identify plant diseases from images using machine learning can significantly increase diagnostic accuracy [17]. In recent years, researchers have gradually shifted to using CNNs to identify plant nematodes [18,19,20,21,22]. Thevenoux et al. [20] applied computer-vision algorithms to identify G. pallida and G. rostochiensis with an accuracy of 91%; their automated morphological identification approach is promising but was optimized to recognize only Globodera species. Abade et al. [21] proposed NemaNet, a neural network with specialized configurations for classifying the overall nematode structure, which achieved an accuracy of 98.82%. Qing et al. [22] proposed an online nematode recognition system that combines digital image-processing methods and neural networks for classification. This system is a valuable tool for users without nematology experience; however, its recognition accuracy needs to be improved for genera with similar morphologies. Because these methods may be limited by their datasets and by the diversity of nematodes, they lack universality, generalization, and interpretability.

Cutting-edge machine-learning techniques can identify plant nematodes with high accuracy; however, further improving their accuracy and interpretability remains challenging. Here, we summarize the difficulties in classifying plant nematodes to point out potential directions for improvement: (1) The inter-specific similarity of nematodes is exceptionally high. Moreover, owing to biodiversity and other factors, female and male nematodes have different characteristics, which makes it difficult to determine whether specimens belong to the same species. (2) Because neural networks have limited interpretability, it is difficult to match the key features that a network relies on with those used by experts. (3) Datasets covering multiple categories, features, and hierarchies are lacking, whereas training neural networks requires a large amount of data.

This study uses deep-learning technology to design a multimodal, multi-feature joint recognition method for plant nematodes. Drawing on the way nematode experts perform identification, we combine target-recognition and classification models to simulate the experts' recognition process and construct an interpretable network structure. Features from multiple parts of each nematode are combined for recognition, forming an automatic decision-making identification system for plant nematodes.

The main contributions of this study to improving plant nematode identification methods are as follows:

- (1) We propose a method for extracting the key features of plant nematodes.
- (2) We propose a multi-feature joint training scheme to improve the recognition accuracy of plant nematodes.
- (3) We construct an interpretable, multimodal, and intelligent decision-making model for plant nematodes.

2. Materials and Methods

2.1. Data Acquisition

This study constructed a plant nematode dataset of 23 species with approximately 18,000 images for joint identification from multiple body parts. Images were captured using a ZEISS Axio Imager Z1 at a uniform resolution of 1388 × 1040 pixels. The details of this dataset are presented in Table 1. Because the field of view is limited at high magnification, capturing a complete nematode in a single image is difficult; therefore, different parts of each nematode, such as the head and tail, must be captured separately under a high-magnification microscope. Because some species had relatively few images, we used data augmentation methods such as flipping, rotation, scaling, cropping, translation, and affine transformation to address the data imbalance, keeping the number of images per species approximately balanced to avoid over-fitting during model training. We identified 23 species by the female tail (for Meloidogyne and Globodera, the J2 juvenile tail was used), with 6900 images; 23 species were jointly identified by the head and female tail, with 13,800 images; and 11 species were jointly identified by the head, female tail, and male tail, with 9900 images.
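The class-balancing step can be illustrated with a minimal sketch. It assumes images are small grayscale arrays stored as nested lists and uses only flips and 90° rotations; the paper additionally used scaling, cropping, translation, and affine transforms, and the helper names and the `target_per_species` parameter are hypothetical rather than taken from the authors' code.

```python
import random
from typing import Callable, Dict, List

Image = List[List[int]]  # grayscale image as rows of pixel values (illustrative)


def hflip(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def vflip(img: Image) -> Image:
    """Mirror the image top to bottom."""
    return [row[:] for row in img[::-1]]


def rot90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


AUGMENTATIONS: List[Callable[[Image], Image]] = [hflip, vflip, rot90]


def balance_species(
    dataset: Dict[str, List[Image]], target_per_species: int, seed: int = 0
) -> Dict[str, List[Image]]:
    """Top up under-represented species with augmented copies (hypothetical helper).

    Species that already have at least `target_per_species` images are kept as-is.
    """
    rng = random.Random(seed)
    balanced: Dict[str, List[Image]] = {}
    for species, images in dataset.items():
        out = list(images)
        # Only augment when there is at least one original image to start from.
        while images and len(out) < target_per_species:
            source = rng.choice(images)          # pick an original image
            augment = rng.choice(AUGMENTATIONS)  # pick a random transform
            out.append(augment(source))
        balanced[species] = out
    return balanced
```

Augmenting only from original images, never from already augmented copies, keeps each synthetic image one transform away from a real specimen.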

The plant nematode samples were obtained through port interception, domestic collection, and domestic and international exchanges. Permanent slide specimens or live samples of plant nematodes and closely related species were included. After morphological and molecular identification, images of the nematodes were collected under a microscope using a 40× objective lens.

The procedure for converting a plant nematode sample into an image dataset is as follows:

- (1) Laboratory sample extraction: Nematodes were extracted from woodchips, plant materials, and soil samples using a modified Baermann funnel technique for 24 h.
- (2) Temporary slide construction: A small drop of fixative was placed at the center of a clean glass slide. The desired specimens were lifted with a “nematode pick” under a dissecting microscope and placed in the fixative. With the slide under the dissecting microscope, the nematodes were arranged at the center of the slide at the bottom of the drop. Next, a cover glass (18 mm wide) was gently placed over the drop using forceps or by supporting it with a needle, and excess fixative was carefully removed with filter paper. The slides were then observed under a compound microscope.
- (3) Microscope analysis: At low magnification, the field of view was divided at a fixed focal length, after which the focus was adjusted; plant nematodes were then searched for manually in each field of view before returning to the site of interest.

2.2. Key Feature Region Extraction Method

With the help of experts' empirical knowledge for labeling, we trained feature identification networks such as Faster-RCNN [23], SSD [24], and YOLO [25]. These networks are mainly used to locate key features such as the head, female tail, and male tail, which are also the regions experts focus on during morphological identification. The system relies on joint identification with general, representative features and deliberately excludes features that identify only very few nematode groups, such as vulval flaps.

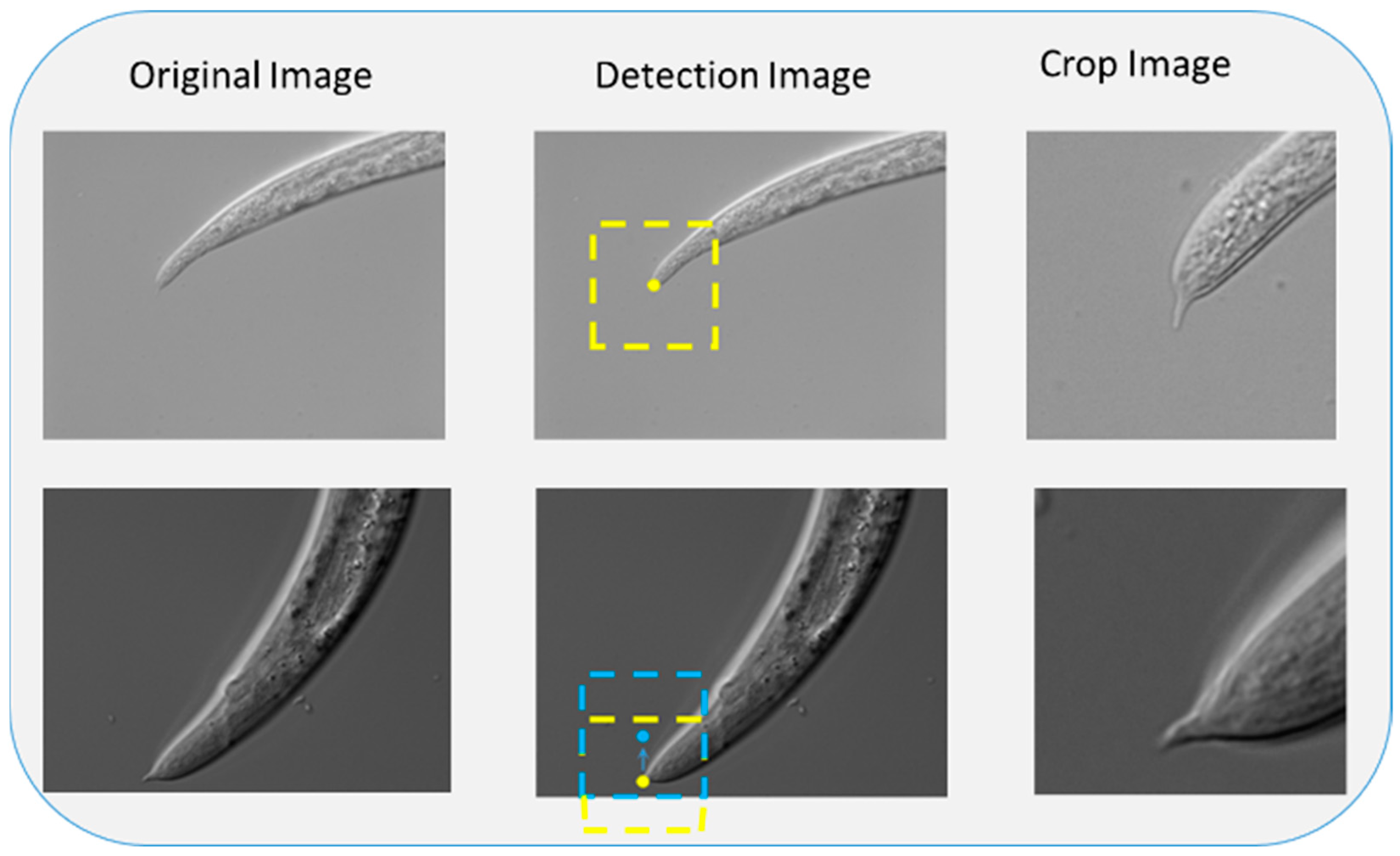

The detailed procedures for feature recognition and image cropping are described below. First, the feature points of the input image are located by the feature identification network:

$$(x, y) = D(I),$$

where $I$ denotes the input image, $D$ denotes the target-detection model, and $(x, y)$ denotes the center of the feature points. Based on the selected centers, the image is cropped into fixed-size local patch images.

Figure 1 presents the cropping process of the patch image. Different cropping sizes can be determined based on the specific classification task.
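The fixed-size cropping around a detected center can be sketched as below. This is an illustration under stated assumptions: the function name `crop_box` is hypothetical, the defaults use the 1388 × 1040 image resolution and the 512 × 512 crop size reported in this paper, and windows near the border are shifted inward rather than padded, which the paper does not specify.

```python
from typing import Tuple


def crop_box(
    center: Tuple[float, float],
    image_size: Tuple[int, int] = (1388, 1040),
    patch: int = 512,
) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of a patch x patch window centred on `center`.

    The window is shifted, not shrunk, so that it stays inside the image
    (assumed border handling; the paper does not specify it).
    """
    width, height = image_size
    if patch > width or patch > height:
        raise ValueError("patch larger than image")
    x, y = center
    left = int(round(x - patch / 2))
    top = int(round(y - patch / 2))
    left = min(max(left, 0), width - patch)  # clamp horizontally
    top = min(max(top, 0), height - patch)   # clamp vertically
    return left, top, left + patch, top + patch
```

The returned box can be passed to an image library's crop routine, for example Pillow's `Image.crop`, which takes a (left, upper, right, lower) tuple.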

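The cropped images were fed into a classification model for training, and the classification model was optimized using the cross-entropy loss function:

$$\mathcal{L} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{C} y_{i,c}\,\log \hat{y}_{i,c},$$

where $N$ is the number of samples, $C$ is the number of categories, $y_{i,c}$ is the one-hot label of sample $i$ for category $c$, and $\hat{y}_{i,c}$ is the predicted probability of that category.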
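As a worked illustration of the loss above, the following sketch computes the mean cross-entropy for a batch of predicted probability vectors. Because the labels are one-hot, only the log-probability of the true category contributes for each sample. The function name and the small `eps` guard against log(0) are our own assumptions.

```python
import math
from typing import Sequence


def cross_entropy(probs: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Mean categorical cross-entropy over N samples.

    probs[i][c] is the predicted probability of category c for sample i and
    labels[i] is the index of the true category, so y_{i,c} is one-hot and only
    the true-category term of the inner sum is non-zero.
    """
    if len(probs) != len(labels) or not labels:
        raise ValueError("need one label per prediction and at least one sample")
    eps = 1e-12  # guard against log(0)
    total = 0.0
    for p, y in zip(probs, labels):
        total -= math.log(max(p[y], eps))
    return total / len(labels)
```

For example, a single sample predicted as [0.5, 0.5] with true category 0 gives a loss of ln 2.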

2.3. Multi-Feature Joint Identification Strategy

Multi-feature nematode identification strategies are urgently needed because single-feature strategies entail the following challenges: (1) The female tails of nematodes of different families or genera are exceedingly similar. (2) The female tails of nematodes of the same genus and different species are exceedingly similar. In both cases, the neural network cannot accurately identify nematode species when only the female tail picture is provided because the difference between features is negligible. Naturally, experts introduce multiple feature parts when single features cannot distinguish nematodes, and such differentiated features provide the basis for morphological classification.

Likewise, we drew on the classification strategies of experts and combined images of multiple parts as input to train the neural network. The number of selected features varies for different nematode species. Cropped images of different features are sampled from the dataset and concatenated. We used the head and female tail of nematodes for double-image concatenation and the nematode head, female tail, and male tail for triple-image concatenation. As shown in

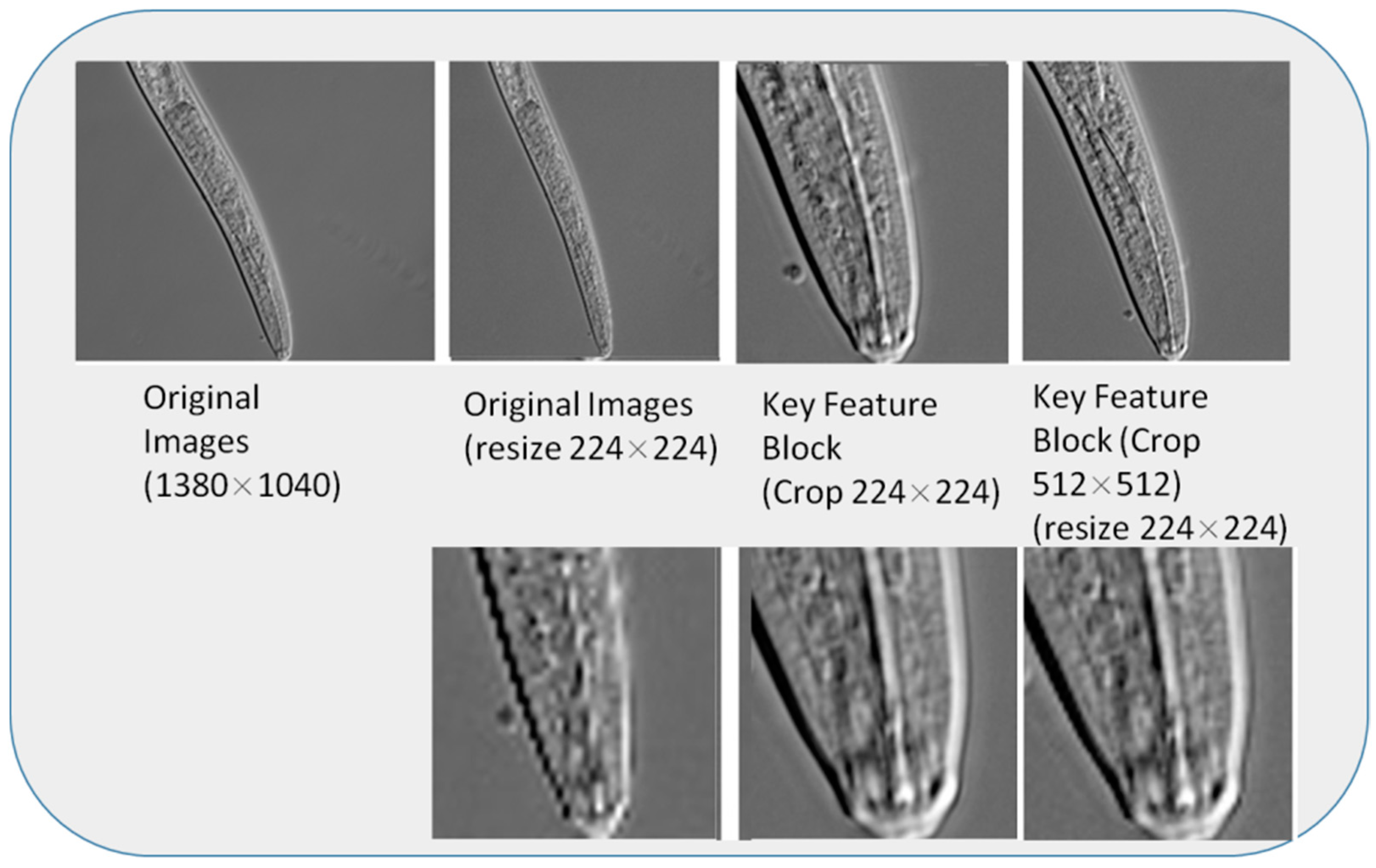

Figure 2, the concatenated images were used as the training input for the classification model. Moreover, attention should be paid to the image sizes provided to the network. When the concatenated image size is too large, excessive GPU resources will be needed for model classification. Therefore, in the image cropping stage, we set the image patch size for concatenation as 224 × 224. The sample sizes used for training were 224 × 448 and 448 × 448 for double-image and triple-image concatenations, respectively.
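The double- and triple-image layouts can be sketched as follows, using small nested lists in place of 224 × 224 patches. The paper gives only the output sizes (224 × 448 and 448 × 448), so the specific arrangement here, with patches side by side for two parts and a 2 × 2 grid whose fourth cell is left blank for three parts, is an assumption, as are all function names.

```python
from typing import List

Patch = List[List[int]]  # square grayscale patch, e.g. 224 x 224 (illustrative)


def blank(size: int) -> Patch:
    """An all-zero square patch used to fill an unused grid cell."""
    return [[0] * size for _ in range(size)]


def hconcat(a: Patch, b: Patch) -> Patch:
    """Place two patches of equal height side by side."""
    return [ra + rb for ra, rb in zip(a, b)]


def concat_parts(parts: List[Patch]) -> Patch:
    """Join 1 to 3 part patches, assumed ordered head, female tail, male tail.

    1 part  -> s x s
    2 parts -> s x 2s   (side by side)
    3 parts -> 2s x 2s  (2 x 2 grid, bottom-right cell left blank; assumed layout)
    """
    if not 1 <= len(parts) <= 3:
        raise ValueError("expected 1 to 3 patches")
    size = len(parts[0])
    if len(parts) == 1:
        return [row[:] for row in parts[0]]
    top = hconcat(parts[0], parts[1])
    if len(parts) == 2:
        return top
    bottom = hconcat(parts[2], blank(size))
    return top + bottom  # stack the two grid rows vertically
```

With s = 224 this yields exactly the 224 × 448 and 448 × 448 sample sizes stated above.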

However, images of nematodes are taken at different objective magnifications. At higher magnification, nematode images contain more detail but less information about the structure of the nematode body. If the crop size is too small, the features of some nematodes are cropped incompletely (e.g., the X. brevicollum head and the B. mucronatus male tail), and similar features of different nematodes are easily confused (e.g., the juvenile tails of G. rostochiensis, M. incognita, and M. mali). Therefore, in the image cropping stage, we first set the cropping size to 512 × 512 and then scaled the image to 224 × 224 using bicubic interpolation [26].

Figure 3 presents the results of scaling under different cropping sizes. Compared with the original images, the directly scaled images show significant aliasing, with blurred details and loss of gradient information. In contrast, when the cropped image is scaled, aliasing is reduced and the image remains detailed enough for visual perception.
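A little arithmetic helps explain why cropping before resizing reduces aliasing. The sketch below (the function name is hypothetical) compares the downscaling factors needed to reach 224 × 224 from a full 1388 × 1040 micrograph and from a 512 × 512 crop.

```python
from typing import Dict


def scale_summary(src_w: int, src_h: int, dst: int = 224) -> Dict[str, float]:
    """Downscaling factors and pixel ratio for resizing src_w x src_h to dst x dst."""
    return {
        "scale_x": src_w / dst,                        # horizontal shrink factor
        "scale_y": src_h / dst,                        # vertical shrink factor
        "pixel_ratio": (src_w * src_h) / (dst * dst),  # input pixels per output pixel
    }


full = scale_summary(1388, 1040)  # whole micrograph resized directly
crop = scale_summary(512, 512)    # 512 x 512 crop resized
print({k: round(v, 2) for k, v in full.items()})
# {'scale_x': 6.2, 'scale_y': 4.64, 'pixel_ratio': 28.77}
print({k: round(v, 2) for k, v in crop.items()})
# {'scale_x': 2.29, 'scale_y': 2.29, 'pixel_ratio': 5.22}
```

The full image must be shrunk by about 6.2× horizontally and 4.6× vertically, which also distorts its aspect ratio, whereas the crop is shrunk by about 2.3× in both directions, so far less fine detail has to be discarded. A 512 × 512 input also carries about 5.2 times as many pixels as a 224 × 224 input, which is consistent with the resized inputs being cheaper to train on.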

2.4. Intelligent Expert Decision-Making System

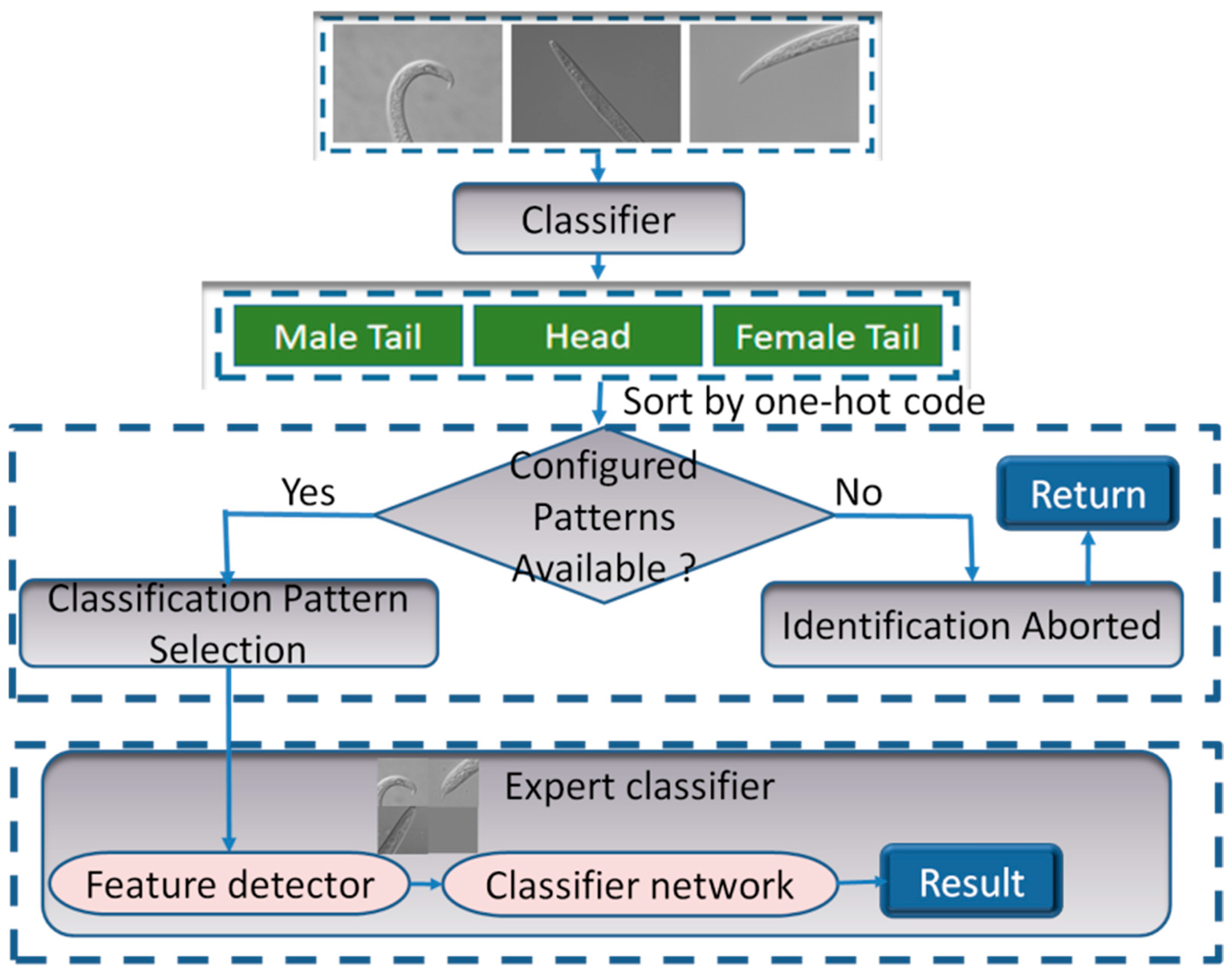

An intelligent expert identification system was designed, consisting of an intelligent identification-pattern selection module and an expert classifier, as shown in Figure 4. Briefly, the entire pipeline is as follows:

- (1) Determining the number of input images.
- (2) Identifying the pattern of the input image sequence.
- (3) Selecting the classification model that corresponds to the input pattern.
- (4) Extracting and cropping the key feature regions.
- (5) Combining the multiple features into a joint image.
- (6) Recognizing the joint image and returning the identification results.

The intelligent identification-pattern selection module was implemented using a classification network that recognizes which body part each input image shows. Currently, the input patterns for nematode identification are single images (the head, female tail, or male tail), double images (a combination of the head and female tail), and triple images (a combination of the head, female tail, and male tail). After the part shown in each image is recognized, it is encoded as an integer label (0 for the head, 1 for the female tail, and 2 for the male tail), and the resulting label sequence is sorted to derive the input pattern. If the input pattern matches one of the configured patterns, the corresponding expert classifier is selected for identification. Otherwise, the identification process is interrupted, and the user is informed that no corresponding identification pattern exists.
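The pattern-selection logic described above can be sketched as a lookup keyed on the sorted part labels. The registry contents and classifier names are hypothetical placeholders; in the actual system each entry would correspond to a trained expert classifier.

```python
from typing import Dict, List, Optional, Tuple

HEAD, FEMALE_TAIL, MALE_TAIL = 0, 1, 2  # part labels as described in the text

# Hypothetical registry: sorted part-label tuple -> name of the expert classifier.
PATTERNS: Dict[Tuple[int, ...], str] = {
    (HEAD,): "single_head",
    (FEMALE_TAIL,): "single_female_tail",
    (MALE_TAIL,): "single_male_tail",
    (HEAD, FEMALE_TAIL): "double_head_female_tail",
    (HEAD, FEMALE_TAIL, MALE_TAIL): "triple_head_female_male_tail",
}


def select_expert(part_labels: List[int]) -> Optional[str]:
    """Map the parts recognised in an input image sequence to an expert classifier.

    part_labels holds one label per input image, as predicted by the part
    classifier. Returns None when no configured pattern matches; the caller
    should then stop and tell the user that no identification pattern exists.
    """
    pattern = tuple(sorted(part_labels))  # order of the uploaded images does not matter
    return PATTERNS.get(pattern)
```

Sorting before the lookup means a user can submit the head and tail images in either order and still reach the same expert classifier.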

The expert classifier is an expert classification network that consists primarily of a feature detector module and a classification network. The feature detector is responsible for image pre-processing: it removes redundant features and extracts the feature regions that experts focus on. Because the classification network is trained purely inductively from data, without constraints from external rules, the features extracted by the CNN may not be those that experts consider important, which can lead to inaccurate classification. Therefore, a feature detector was introduced to extract the experts' attention regions in advance. Acting as an external attention mechanism, the feature detector prevents non-critical features from influencing the classification task by actively focusing on discriminative features. For the classification network, current state-of-the-art architectures such as VGG, ResNet, MobileNet, and EfficientNet can be selected. For training, weights pre-trained on ImageNet should be used to accelerate training and improve model performance.
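The division of labor inside the expert classifier can be sketched by composing the stages as interchangeable functions. This is a minimal sketch under stated assumptions: `detect`, `crop_and_resize`, `combine`, and `classify` are hypothetical callables standing in for the YOLO-style feature detector, the crop-and-resize step, the multi-part concatenation, and an ImageNet-pretrained backbone, respectively.

```python
from typing import Callable, List, Sequence, Tuple

Image = List[List[int]]          # illustrative image type
Box = Tuple[int, int, int, int]  # (left, top, right, bottom)


def expert_classify(
    images: Sequence[Image],
    detect: Callable[[Image], Box],                  # feature detector (e.g. a YOLO-style model)
    crop_and_resize: Callable[[Image, Box], Image],  # e.g. 512 x 512 crop -> 224 x 224
    combine: Callable[[List[Image]], Image],         # multi-part concatenation
    classify: Callable[[Image], str],                # ImageNet-pretrained backbone
) -> str:
    """Hypothetical expert classifier: detector-guided cropping, then classification.

    The detector restricts the classifier's input to expert-relevant regions,
    acting as the external attention mechanism described in the text.
    """
    patches = [crop_and_resize(img, detect(img)) for img in images]
    return classify(combine(patches))
```

Keeping the detector separate from the classifier is what allows any backbone (VGG, ResNet, MobileNet, EfficientNet) to be swapped in without changing which regions the classifier sees.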

3. Results

3.1. Experimental Environment and Evaluation Criteria

The experiments were implemented on a Linux machine with Ubuntu 20.04, two Intel(R) Xeon(R) Silver 4214 CPUs, four Nvidia® RTX 2080 Ti 12 GB GPUs, and 256 GB of DDR4 RAM.

We used a batch size of 8 and trained for 1000 epochs, sampling 4000 images from the dataset in each epoch. The performance of different models, including MobileNet, ShuffleNet, VGG16, ResNet101, DenseNet121, Xception, ConvNeXt, NemaNet, ViT, and EfficientNetB6 [27,28,29,30], was assessed by comparing their accuracies. We divided the dataset into training and validation sets at a ratio of 9:1. The root mean square propagation (RMSProp) algorithm was used for optimization, with an initial learning rate of 2 × 10⁻⁵. With this training strategy, the models achieved better peak accuracy. Accuracy was selected as the primary evaluation metric for model performance in this study.
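For reference, the RMSProp update and the schedule arithmetic implied by these settings can be sketched as follows. Only the learning rate (2 × 10⁻⁵), batch size, images per epoch, and epoch count come from the text; the decay rate `rho = 0.9` and `eps = 1e-8` are assumed values, and the scalar form simplifies the per-parameter update that deep-learning frameworks apply.

```python
import math
from typing import Tuple


def rmsprop_step(
    theta: float, grad: float, sq_avg: float,
    lr: float = 2e-5, rho: float = 0.9, eps: float = 1e-8,
) -> Tuple[float, float]:
    """One RMSProp update for a single scalar parameter.

    sq_avg is the running average of squared gradients. Only lr comes from
    the text; rho and eps are assumed defaults.
    """
    sq_avg = rho * sq_avg + (1.0 - rho) * grad * grad
    theta = theta - lr * grad / (math.sqrt(sq_avg) + eps)
    return theta, sq_avg


# Schedule implied by the text: 4000 sampled images per epoch, batch size 8, 1000 epochs.
IMAGES_PER_EPOCH, BATCH_SIZE, EPOCHS = 4000, 8, 1000
steps_per_epoch = IMAGES_PER_EPOCH // BATCH_SIZE  # 500 optimizer steps per epoch
total_steps = steps_per_epoch * EPOCHS            # 500,000 optimizer steps overall
```

Dividing each gradient by the root of its running squared average gives every parameter a step of roughly `lr`, regardless of the raw gradient scale, which is why a very small learning rate such as 2 × 10⁻⁵ is a reasonable choice when fine-tuning pre-trained weights.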

3.2. Performance of the Feature Extraction under Different Cropping Strategies

We used generic target-detection networks, namely Faster-RCNN, SSD512, and YOLOv4, to test the feature extraction performance, evaluating them in terms of detection accuracy and speed. Because the feature maps of different sizes in the SSD512 model operate independently, the same target could be detected repeatedly by detection boxes of different sizes. Faster-RCNN, a two-stage target-detection network, was more accurate than SSD512 but slower than the other two models. The YOLOv4 backbone, Darknet53, uses residual connections for feature extraction, and its multi-scale prediction significantly improved the detection of small targets, giving a mean average precision of 99.4%. YOLOv4 also had the highest detection speed, at 38 frames per second. Therefore, we used YOLOv4 in the proposed system to obtain more accurate key features of nematodes for the subsequent feature-cropping stage.

After obtaining the key features with the feature extractor, we cropped the key feature regions as part of data pre-processing before the identification stage. In the single-image classification task, we compared the classification performance under four image pre-processing strategies: (1) Original: train with the original image size. (2) Resize: rescale the image to 512 × 512. (3) Crop: crop 224 × 224 and 512 × 512 patches using the target-detection method. (4) Crop + Resize: crop a 512 × 512 patch using the target-detection method and then rescale it to 224 × 224.

Table 2 presents the performance of the expert classification network under different cropping strategies. In the single-image classification task, the approach with the 512 × 512 cropping size performed the best. More importantly, the cropping plus resizing approach did not entail excessive performance loss and was 422% faster in training than the direct cropping approach.

In the double- and triple-image classification tasks, we compared only the cropping and cropping-plus-resizing strategies. In the double-image task, the two methods showed no significant difference in performance. In the triple-image task, training with the smaller images was 400% faster than with the larger images and gave significantly better performance.

The expert classification network therefore used the strategy of first cropping the image to 512 × 512 pixels and then rescaling it to 224 × 224 pixels.

3.3. Performance of Multi-Feature Joint Classification Network

To test the performance of the system, we set up two classification tasks: single-feature and multi-feature joint recognition tasks.

- (1) Single-feature recognition task: Nematodes were classified based on the tail characteristics of female nematodes. A single-image, 23-species classification task was set up with 6900 images.
- (2) Multi-feature joint recognition task: (a) For nematodes classified by joint recognition of head and female tail features, a double-image, 23-species classification task was set up with 13,800 images, using the same nematode categories as the single-feature recognition task. (b) For nematodes classified by joint recognition of head, female tail, and male tail features, a triple-image, 11-species classification task was set up with 9900 images. For comparison, an additional double-image, 11-species classification task was set up with 6600 images.
- (3) Dataset partitioning strategy: For the single-feature classification task, the dataset was randomly divided into training and testing sets at a ratio of 8:2. For the multi-feature joint classification tasks, the images of each part were randomly divided into training and testing sets at a ratio of 8:2, and joint samples were formed by randomly sampling and combining images from the corresponding training or testing sets of the different parts. After training, model performance was evaluated on the test set. Ten tests were conducted, with the test set resampled after each test, and the average accuracy was taken as the final test accuracy; the training set was not resampled. In addition, so that the single- and double-image results could be compared directly, the dual-image joint classification task used the same dataset partitioning as the single-feature classification task.
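The partitioning and repeated test sampling described in item (3) can be sketched as follows. The data layout (part, then species, then image IDs), the function names, and the `evaluate` callback are hypothetical; the sketch only shows how per-part 8:2 splits, same-species joint sampling, and averaging over ten resampled test sets fit together.

```python
import random
from typing import Callable, Dict, List, Tuple

# Hypothetical layout: part name -> species name -> list of image IDs.
PartIndex = Dict[str, Dict[str, List[str]]]
JointSample = Tuple[str, Tuple[str, ...]]  # (species, one image ID per part)


def split_parts(index: PartIndex, train_frac: float = 0.8, seed: int = 0) -> Tuple[PartIndex, PartIndex]:
    """Split each part's images of each species into train/test sets (8:2 by default)."""
    rng = random.Random(seed)
    train: PartIndex = {}
    test: PartIndex = {}
    for part, by_species in index.items():
        train[part], test[part] = {}, {}
        for species, ids in by_species.items():
            shuffled = ids[:]
            rng.shuffle(shuffled)
            cut = int(len(shuffled) * train_frac)
            train[part][species] = shuffled[:cut]
            test[part][species] = shuffled[cut:]
    return train, test


def sample_joint(split: PartIndex, parts: List[str], n: int, rng: random.Random) -> List[JointSample]:
    """Draw n joint samples, each combining one image of every part from the same species."""
    species = sorted(split[parts[0]])
    samples: List[JointSample] = []
    for _ in range(n):
        sp = rng.choice(species)
        combo = tuple(rng.choice(split[p][sp]) for p in parts)
        samples.append((sp, combo))
    return samples


def mean_test_accuracy(
    evaluate: Callable[[List[JointSample]], float],
    test: PartIndex, parts: List[str], n: int, runs: int = 10, seed: int = 1,
) -> float:
    """Resample the test set `runs` times and average the accuracies.

    `evaluate` is a placeholder that scores an already trained model on a list
    of joint samples; the training set is not resampled here.
    """
    rng = random.Random(seed)
    accs = [evaluate(sample_joint(test, parts, n, rng)) for _ in range(runs)]
    return sum(accs) / len(accs)
```

Because images are split per part before any combination is formed, no individual test image can appear in a training pair, even though the joint samples themselves are freshly recombined on each test run.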

For cropping, the images were first cropped to 512 × 512 patches and then rescaled to 224 × 224. We tested our system on multiple backbone networks, covering lightweight CNNs, state-of-the-art CNNs, and transformer-based models: MobileNet, ShuffleNet, VGG16, ResNet, DenseNet, Xception, EfficientNet, ConvNeXt, NemaNet, and the vision transformer. The classification accuracy obtained for each model is shown in Table 3. For the single-feature task, we compared each original model with our expert classification model using that network as its classifier. As shown in Table 3, which reports the accuracy of the 23-species classification task for the classic and expert models, the classification accuracy of our expert models was on average 6.9% higher than that of the original models. For our dataset and target task, EfficientNetB6 performed best, with an accuracy of 84.1% when used in the expert classifier.

For the multi-feature joint classification tasks, we compared the 23-species single-feature and dual-feature expert classification models, as well as the 11-species dual-feature and triple-feature joint expert classification models. In the 23-species tasks, the accuracy of dual-feature classification was on average 17.6% higher than that of single-feature classification (see Table 4). In the 11-species tasks, the accuracy of triple-feature joint classification was on average 1.24% higher than that of dual-feature joint classification. These results show that introducing additional features helps the classifier distinguish nematode categories: multi-feature joint classification aligns with expert classification experience and can be effectively combined with deep-learning methods. The further gain of triple-image over double-image joint recognition indicates that more features make nematodes easier to classify, which is also consistent with expert knowledge.

4. Discussion

The successful application of deep-learning techniques in various fields has promoted the automated intelligent identification of plant nematodes, although research in this area is still at a preliminary, exploratory stage. Notably, most current automated nematode identification methods are based on single features and limited datasets, resulting in low classification accuracies [17,18,19,20,21,22,23].

Herein, we directly created a multi-feature plant nematode dataset from nematode samples and developed a standard procedure for image preparation. The proposed multimodal, multi-feature nematode identification method captures key features, and appropriate cropping sizes are proposed. Notably, the intelligent expert decision-making identification system can classify nematodes using one to three features as input, and the more features are used, the higher the accuracy. The multi-feature joint training model proposed in this article simulates the recognition logic of domain experts and is therefore highly interpretable. The experimental results show that the expert model achieves high accuracy. Moreover, because the expert models combine samples from different parts, they reduce the number of samples required for model training. The proposed approach significantly outperforms existing nematode identification systems and achieves new state-of-the-art accuracy. In addition, the method reduces the time cost of image recognition to the millisecond level and can be applied directly to nematode identification scenarios to assist practitioners in this field.

The advent of deep-learning technologies has resolved several difficulties associated with image classification; however, issues in online nematode image classification persist. In this study, the problems encountered in nematode classification serve as examples for exploring feasible solutions and promoting the application of deep-learning technology to nematology. However, the scope of this study can be further expanded in the following respects. First, the expert model is unsuitable for classification tasks involving nematodes with very similar morphological characteristics (such as P. loosi Loof and P. coffeae). Second, different nematode types have different landmarks, whereas the proposed expert classification model builds its joint identification only from the head, female tail, and male tail; hence, it cannot fully describe the characteristics of plant nematodes. Third, owing to limited training samples and unpredictable sources of user samples, the deep-learning-based classification model is prone to overfitting, which limits its generalization and makes it difficult to apply in real classification scenarios.

With gradual developments in deep-learning technology, nematologists are increasingly studying its application to the field of nematodes. However, the research in this field is currently in its preliminary stage, and considerable improvements are yet to be made. In particular, future research directions can be summarized as follows: (1) In terms of network model structure and deep-learning algorithm improvements, future research can focus on model structures with a strong generalization ability, better robustness, and stronger interpretability, combined with more advanced deep-learning algorithms, to further improve the recognition accuracy of nematode species. (2) In terms of practical application requirements, developing independent high-precision image recognition methods for individual nematode species is also a worthy prospect for in-depth research.

Author Contributions

Y.Z. (Ying Zhu) conceived this work, collected image data, analyzed data, wrote this manuscript. Y.Z. (Yi Zhu) developed software and analyzed data. Y.Z. (Ying Zhu), P.W., J.Z., Y.Z. (Yi Zhu), J.X. and X.O. revised this manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Key R&D Program of Zhejiang (No. 2022C03114); the National Natural Science Foundation of China (No. 62174121); the Ningbo Public Welfare Science and Technology Project (No. 2021S024); the National Natural Science Foundation of China (No. 62005128); the Ningbo Science and Technology Innovation Project (No. 2020Z019); the Scientific Research Project of the General Administration of Customs (No. 2020HK161); the Ningbo Science and Technology Innovation Project (No. 2022Z075); and the Scientific Research Fund of Zhejiang Provincial Education Department (No. Y202147558).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no known competing financial interest or personal relationships that could have influenced the work reported in this paper. The sponsors had no role in the design, execution, interpretation, or writing of the study.

References

- Coomans, A. Present status and future of nematode systematics. Nematology 2002, 4, 573–582.

- Jones, J.; Gheysen, G.; Fenoll, C. Genomics and Molecular Genetics of Plant-Nematode Interactions; Springer: Dordrecht, The Netherlands, 2011.

- van den Hoogen, J.; Geisen, S.; Routh, D.; Ferris, H.; Traunspurger, W.; Wardle, D.A.; de Goede, R.G.M.; Adams, B.J.; Ahmad, W.; Andriuzzi, W.S.; et al. Soil nematode abundance and functional group composition at a global scale. Nature 2019, 572, 194–198.

- Nadler, S. Species delimitation and nematode biodiversity: Phylogenies rule. Nematology 2002, 4, 615–625.

- Derycke, S.; Fonseca, G.; Vierstraete, A.; Vanfleteren, J.; Vincx, M.; Moens, T. Disentangling taxonomy within the Rhabditis (Pellioditis) marina (Nematoda, Rhabditidae) species complex using molecular and morphological tools. Zool. J. Linn. Soc. 2008, 152, 1–15.

- Giblin-Davis, R.M.; Kanzaki, N.; Ye, W.; Center, B.J.; Thomas, W.K. Morphology and systematics of Bursaphelenchus gerberae n. sp. (Nematoda: Parasitaphelenchidae), a rare associate of the palm weevil, Rhynchophorus palmarum in Trinidad. Zootaxa 2006, 1189, 39–53.

- Liao, J.L.; Zhang, L.H.; Feng, Z.X. A reliable identification of Bursaphelenchus xylophilus by rDNA amplification. Nematol. Mediterr. 2001, 29, 131–135.

- Hillis, D.M.; Dixon, M.T. Ribosomal DNA: Molecular evolution and phylogenetic inference. Q. Rev. Biol. 1991, 66, 411–453.

- Castagnone, C.; Abad, P.; Castagnone-Sereno, P. Satellite DNA-based species-specific identification of single individuals of the pinewood nematode Bursaphelenchus xylophilus (Nematoda: Aphelenchoididae). Eur. J. Plant Pathol. 2005, 112, 191–193.

- Deepak, S.; Ameer, P.M. Brain tumor classification using deep CNN features via transfer learning. Comput. Biol. Med. 2019, 111, 103345.

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Corrigendum: Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 546, 686.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Khan, S.; Islam, N.; Jan, Z.; Din, I.U.; Rodrigues, J.J.C. A novel deep learning-based framework for the detection and classification of breast cancer using transfer learning. Pattern Recognit. Lett. 2019, 125, 1–6.

- Khan, A.; Sohail, A.; Zahoora, U.; Qureshi, A.S. A survey of the recent architectures of deep convolutional neural networks. Artif. Intell. Rev. 2019, 53, 5455–5516.

- Kaur, S.; Pandey, S.; Goel, S. Plants disease identification and classification through leaf images: A Survey. Arch. Comput. Methods Eng. 2019, 26, 507–530.

- Thyagharajan, K.K.; Kiruba, R. A review of visual descriptors and classification techniques used in leaf species identification. Arch. Comput. Methods Eng. 2019, 26, 933–960.

- Chen, L.; Strauch, M.; Daub, M.; Jiang, X.; Jansen, M.; Luigs, H.G.; Schultz-Kuhlmann, S.; Krüssel, S.; Merhof, D. A CNN framework based on line annotations for detecting nematodes in microscopic images. In Proceedings of the 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI), Iowa City, IA, USA, 3–7 April 2020; pp. 508–512.

- Lu, X.; Wang, Y.; Fung, S.; Qing, X. I-Nema: A biological image dataset for nematode recognition. arXiv 2021, arXiv:2103.08335.

- Wang, L.; Kong, S.; Pincus, Z.; Fowlkes, C. Celeganser: Automated analysis of nematode morphology and age. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 968–969.

- Thevenoux, R.; Van Linh, L.E.; Villessèche, H.; Buisson, A.; Beurton-Aimar, M.; Grenier, E.; Folcher, L.; Parisey, N. Image based species identification of Globodera quarantine nematodes using computer vision and deep learning. Comput. Electron. Agric. 2021, 186, 106058.

- Abade, A.; Porto, L.F.; Ferreira, P.A.; de Barros Vidal, F. NemaNet: A convolutional neural network model for identification of soybean nematodes. Biosyst. Eng. 2022, 213, 39–62.

- Qing, X.; Wang, Y.; Lu, X.; Li, H.; Wang, X.; Li, H.; Xie, X. NemaRec: A deep learning-based web application for nematode image identification and ecological indices calculation. Eur. J. Soil Biol. 2022, 110, 103408.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2023, arXiv:2207.02696.

- Xing, F.; Xie, Y.; Su, H.; Liu, F.; Yang, L. Deep Learning in microscopy image analysis: A survey. IEEE Trans. Neural Netw. Learn. Syst. 2017, 29, 4550–4568.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

- Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. ShuffleNet V2: Practical guidelines for efficient CNN architecture design. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 122–138.

- Cao, Y.; Liu, S.; Peng, Y.; Li, J. DenseUNet: Densely connected UNet for electron microscopy image segmentation. IET Image Process. 2020, 14, 2682–2689.

- Zheng, L.; Shen, L.; Tian, L.; Wang, S.; Wang, J.; Tian, Q. Scalable person re-identification: A benchmark. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1116–1124.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).