A Lightweight Multi-View Learning Approach for Phishing Attack Detection Using Transformer with Mixture of Experts

Abstract

1. Introduction

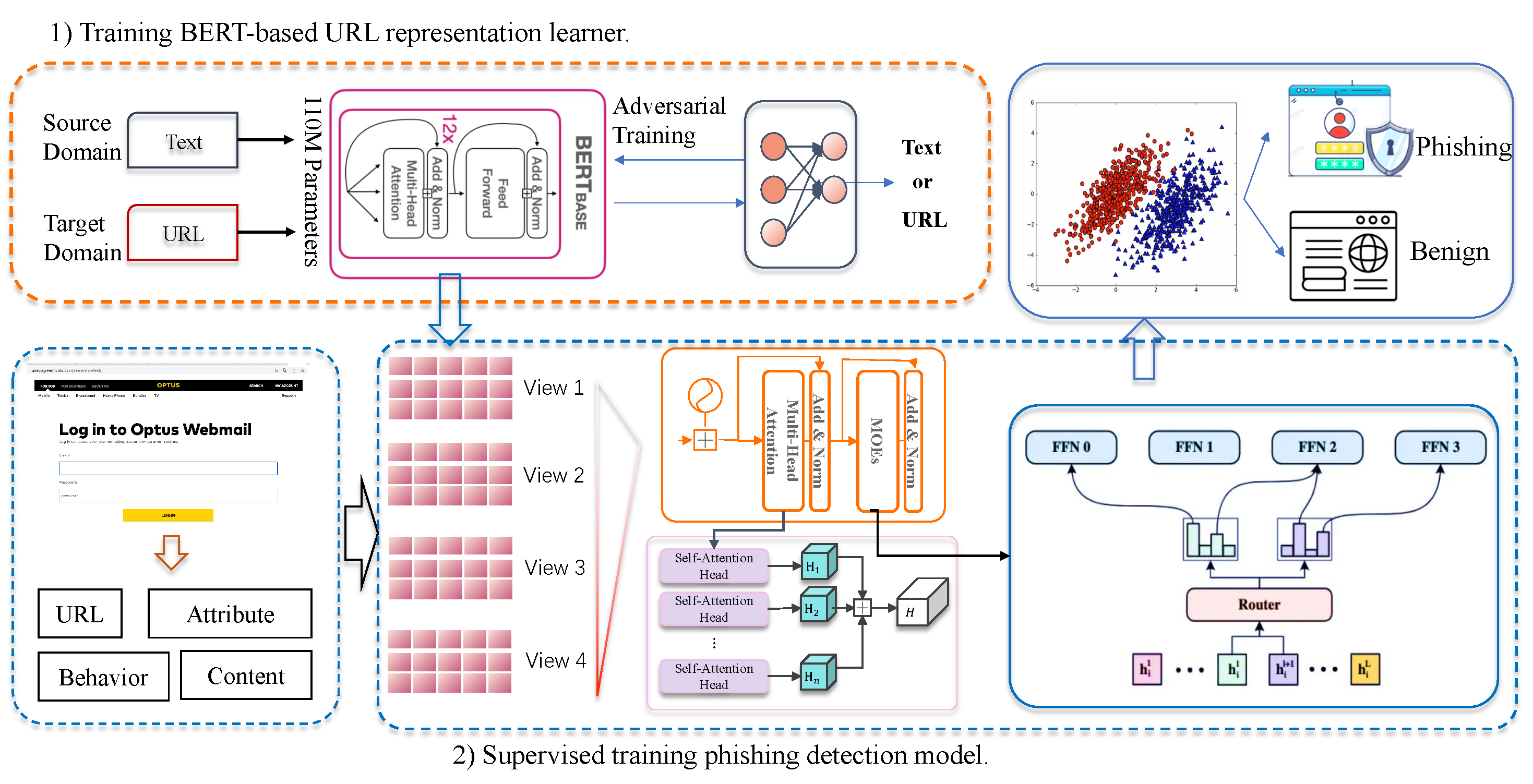

- The proposed method employs adversarial post-training to transform a pretrained language model into a powerful URL feature extractor. This approach allows us to transfer the general knowledge learned from massive data by the pretrained language model to URL representation learning at a low cost, resulting in semantic-aware URL embeddings.

- Our research introduces three highly informative website features that are easily obtained yet possess significant discriminative value. These features encompass descriptions of website attributes, content, and behavior, which, in conjunction with the website’s URL, form the multi-view information of the website. This allows us to capture phishing attack cues from different perspectives, enabling accurate and evasion-resistant phishing detection.

- We propose utilizing a Transformer network with a mixture-of-experts mechanism to learn the multi-view information of webpages. By dynamically allocating weights to different views and adaptively learning the relevance of features within each view, our method effectively considers the relationships both between and within the constructed views of the webpages.

- The proposed method demonstrates superior performance when evaluated on real phishing websites, outperforming state-of-the-art techniques in accuracy while requiring less labeled data. Furthermore, it maintains a recognition precision of over 96% even after 3 months, showcasing its robustness and effectiveness in detecting phishing attacks.
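The mixture-of-experts idea in the third contribution above can be illustrated with a minimal sketch. It assumes a linear gate and top-k routing in the style of the sparsely gated mixture-of-experts layer; the function names, toy experts, gate weights, and the choice of k are all hypothetical and are not taken from the paper's implementation.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_logits, k):
    """Keep the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_forward(x, gate_weights, experts, k=2):
    """Weighted sum of the outputs of the k experts selected for embedding x."""
    # Linear gate (assumption): one logit per expert, computed as a dot product.
    gate_logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    routes = top_k_route(gate_logits, k)
    out = [0.0] * len(x)
    for idx, weight in routes.items():
        y = experts[idx](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, routes


# Toy experts standing in for small feed-forward networks (hypothetical).
experts = [
    lambda v: [2.0 * a for a in v],
    lambda v: [a + 1.0 for a in v],
    lambda v: [-a for a in v],
]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]  # hypothetical gate
url_view = [0.5, 1.5]  # hypothetical 2-dimensional view embedding

output, routes = moe_forward(url_view, gate_weights, experts, k=2)
print(routes)  # expert index -> routing weight
print(output)
```

In this toy setup each view embedding is sent only to its best-scoring experts, so different views can rely on different experts while the gate decides how much each one contributes.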

2. Related Work

2.1. URL-Based Methods

2.2. Methods Leveraging Multi-View Information

3. Method

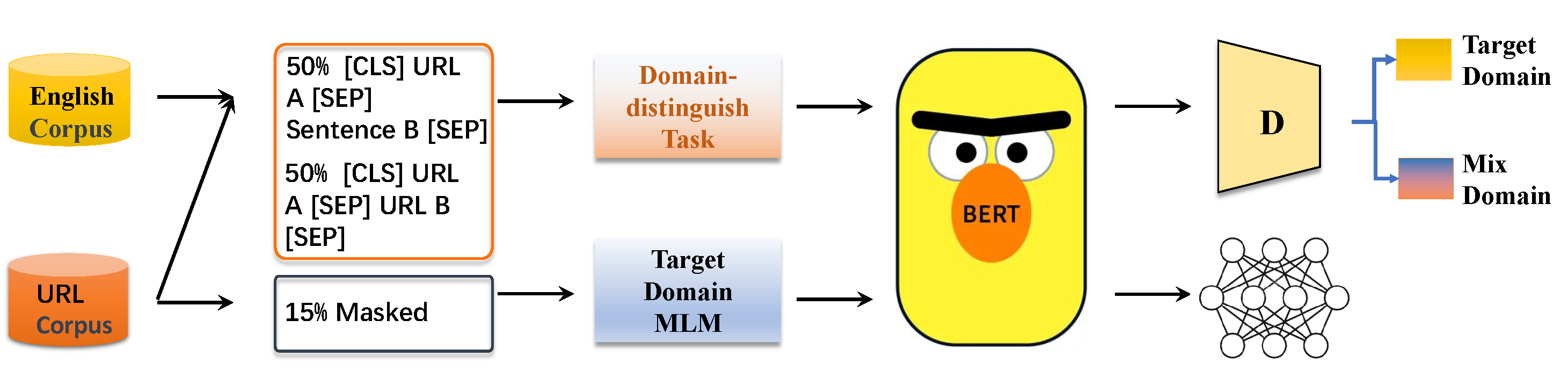

3.1. BERT-Based Universal URL Feature Learning

3.1.1. Problem Definition and Notations

3.1.2. Background of BERT

3.1.3. Domain-Distinguish Task

3.1.4. Target Domain MLM

3.1.5. Adversarial Training

3.2. Additional Views

3.3. Training with Multi-View Features

3.3.1. Computation with MoE

3.3.2. Routing

4. Experiments

4.1. Implementation Details

4.2. Dataset

4.3. Baseline Approaches

4.4. Experimental Setting

4.5. Evaluation Metrics

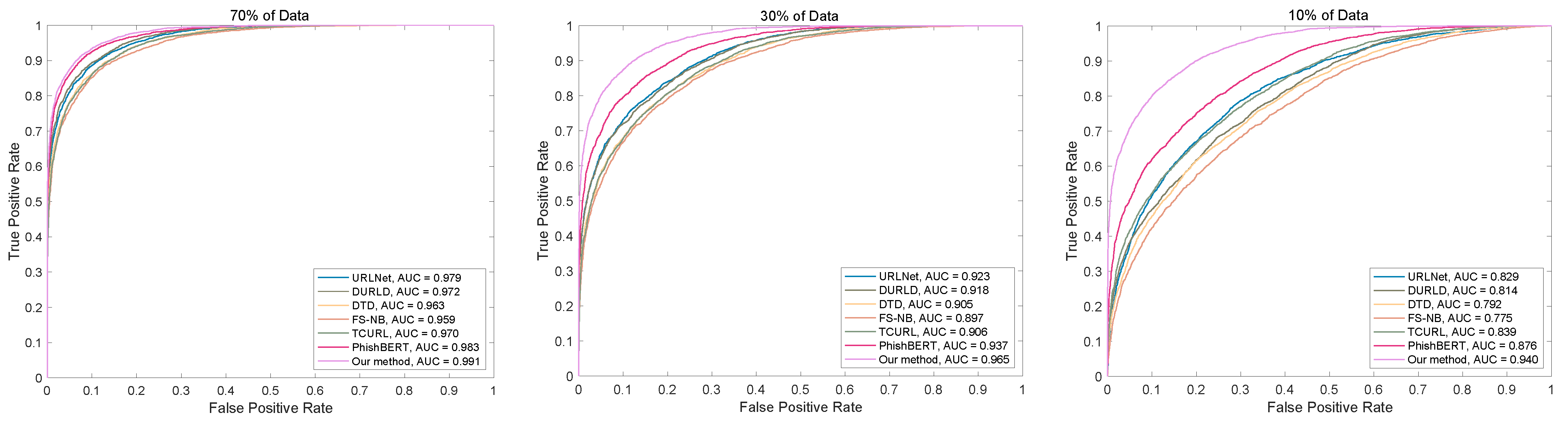
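The result tables in this paper report Accuracy, TPR, FPR, AUC, and F1-Score. As a reminder of how the threshold-based metrics relate to each other, the sketch below computes them from confusion-matrix counts using their standard definitions. It assumes that phishing is the positive class; the function name and the example counts are hypothetical. AUC is omitted because it requires ranked prediction scores rather than counts.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard binary metrics from confusion-matrix counts.

    tp/fn: phishing pages detected/missed (positive class, by assumption).
    tn/fp: legitimate pages passed/flagged.
    """
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    tpr = tp / (tp + fn) if (tp + fn) else 0.0        # recall / sensitivity
    fpr = fp / (fp + tn) if (fp + tn) else 0.0        # false alarm rate
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    f1 = (2 * precision * tpr / (precision + tpr)) if (precision + tpr) else 0.0
    return {
        "accuracy": accuracy,
        "tpr": tpr,
        "fpr": fpr,
        "precision": precision,
        "f1": f1,
    }


# Hypothetical counts, for illustration only.
metrics = classification_metrics(tp=90, fp=5, tn=95, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Because F1 depends on precision, it can fall well below accuracy when the positive class is rare, which is why both are worth reporting on imbalanced data.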

4.6. Evaluation on Balanced Dataset

4.7. Evaluation on Unbalanced Dataset

4.8. Data Drift Evaluation

4.9. Ablation Study

- 1: This scheme removes perspectives other than the URL and utilizes only our adversarially trained BERTurl model.

- 2: This scheme utilizes the original English BERT to obtain the initial representation of the URL and combines it with several other perspective features used in this study.

- 3: This scheme utilizes the fine-tuned BERT for the URL, in conjunction with other perspective features used in this study.

- 4.

5. Discussion

5.1. Competitive Analysis

- Our model incorporates multiple perspectives by extracting various features from a webpage, including its URL, domain registration, privacy requests, loading time, and redirection information, all of which are closely related to phishing websites. By considering these features, our model captures the varied aspects and complex patterns associated with phishing attempts, thereby improving performance and handling small-sample learning and class-imbalance scenarios more effectively.

- Furthermore, we utilize a Transformer network with mixture of experts to integrate multi-perspective features for supervised learning in phishing detection tasks. The combination of Transformer’s attention mechanism and mixture of experts allows the model to extract essential knowledge from multi-perspective information, accurately learn class distribution boundaries in class-imbalanced data, and particularly focus on highly expressive and stable features, thereby enhancing its long-term detection capability.

- URLNet [21], DURLD [46], and PhishBERT [27] have demonstrated the importance of utilizing URLs. However, these methods are constrained by the limited scope of learning from labeled data. To address this limitation, we propose an unsupervised URL feature learning method based on BERT. This method incorporates adversarial and domain-aware learning, taking resource efficiency into consideration and leveraging the powerful representation capabilities of pretrained models. Our approach not only enables us to learn the structural and semantic information of URLs but also allows us to acquire expanded knowledge from models trained on massive amounts of text. These contributions serve as a solid foundation for the performance of our proposed model.
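The unsupervised, BERT-based URL feature learning mentioned above includes a target-domain masked language modeling (MLM) step (Section 3.1.4). The sketch below shows what MLM masking over URL tokens can look like. It assumes the masking recipe from the original BERT paper (select about 15% of tokens; of those, 80% become `[MASK]`, 10% become a random token, 10% stay unchanged). Whether the authors use these exact ratios is not stated here, and the regex tokenizer is a toy stand-in for BERT's WordPiece tokenizer.

```python
import random
import re

MASK = "[MASK]"


def tokenize_url(url):
    """Toy tokenizer (assumption): alphanumeric runs and single punctuation marks."""
    return re.findall(r"[A-Za-z0-9]+|[^A-Za-z0-9\s]", url)


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (masked_tokens, labels); labels[i] is None where no prediction is needed."""
    rng = random.Random(seed)
    masked = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue                       # token not selected for prediction
        labels[i] = tok                    # the model must recover this token
        roll = rng.random()
        if roll < 0.8:
            masked[i] = MASK               # 80%: replace with the mask token
        elif roll < 0.9:
            masked[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token in place
    return masked, labels


tokens = tokenize_url("http://login.example.com/secure?id=42")
vocab = sorted(set(tokens))  # hypothetical vocabulary built from this one URL
masked, labels = mask_tokens(tokens, vocab, mask_prob=0.15, seed=0)
print(tokens)
print(masked)
```

Training the model to recover the hidden tokens needs no phishing labels, which is what lets this step use large amounts of unlabeled URLs.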

5.2. Analysis of Limitations and Scalability

5.2.1. Scalability

5.2.2. Limitations

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| URL | Uniform Resource Locator |
| ROC | Receiver Operating Characteristic |
| AUC | Area Under the Curve |

References

- [1] Zabihimayvan, M.; Doran, D. Fuzzy rough set feature selection to enhance phishing attack detection. In Proceedings of the 2019 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), New Orleans, LA, USA, 23–26 June 2019; pp. 1–6.

- [2] Basnet, R.; Mukkamala, S.; Sung, A.H. Detection of phishing attacks: A machine learning approach. Soft Comput. Appl. Ind. 2008, 226, 373–383.

- [3] Al-Ahmadi, S. A deep learning technique for web phishing detection combined URL features and visual similarity. Int. J. Comput. Netw. Commun. (IJCNC) 2020, 12, 41–54.

- [4] Cui, Q.; Jourdan, G.V.; Bochmann, G.V.; Couturier, R.; Onut, I.V. Tracking phishing attacks over time. In Proceedings of the 26th International Conference on World Wide Web, Perth, Australia, 3–7 April 2017; pp. 667–676.

- [5] Goel, D.; Jain, A.K. Mobile phishing attacks and defence mechanisms: State of art and open research challenges. Comput. Secur. 2018, 73, 519–544.

- [6] Prakash, P.; Kumar, M.; Kompella, R.R.; Gupta, M. Phishnet: Predictive blacklisting to detect phishing attacks. In Proceedings of the 2010 Proceedings IEEE INFOCOM, San Diego, CA, USA, 14–19 March 2010; pp. 1–5.

- [7] Sarker, B.; Sarker, B.; Podder, P.; Robel, M. Progression of Internet Banking System in Bangladesh and its Challenges. Int. J. Comput. Appl. 2020, 177, 11–15.

- [8] Okereafor, K.; Adelaiye, O. Randomized cyber attack simulation model: A cybersecurity mitigation proposal for post covid-19 digital era. Int. J. Recent Eng. Res. Dev. (IJRERD) 2020, 5, 61–72.

- [9] Moghimi, M.; Varjani, A.Y. New rule-based phishing detection method. Expert Syst. Appl. 2016, 53, 231–242.

- [10] Adewole, K.S.; Akintola, A.G.; Salihu, S.A.; Faruk, N.; Jimoh, R.G. Hybrid rule-based model for phishing URLs detection. In Emerging Technologies in Computing, Proceedings of the Second International Conference, iCETiC 2019, London, UK, 19–20 August 2019; Proceedings 2; Springer: Cham, Switzerland, 2019; pp. 119–135.

- [11] Blum, A.; Wardman, B.; Solorio, T.; Warner, G. Lexical feature based phishing URL detection using online learning. In Proceedings of the 3rd ACM Workshop on Artificial Intelligence and Security, Chicago, IL, USA, 8 October 2010; pp. 54–60.

- [12] Saxe, J.; Berlin, K. eXpose: A character-level convolutional neural network with embeddings for detecting malicious URLs, file paths and registry keys. arXiv 2017, arXiv:1702.08568.

- [13] Afroz, S.; Greenstadt, R. Phishzoo: Detecting phishing websites by looking at them. In Proceedings of the 2011 IEEE Fifth International Conference on Semantic Computing, Palo Alto, CA, USA, 18–21 September 2011; pp. 368–375.

- [14] Liu, R.; Lin, Y.; Yang, X.; Ng, S.H.; Divakaran, D.M.; Dong, J.S. Inferring phishing intention via webpage appearance and dynamics: A deep vision based approach. In Proceedings of the 31st USENIX Security Symposium (USENIX Security 22), Boston, MA, USA, 10–12 August 2022; pp. 1633–1650.

- [15] Mahajan, R.; Siddavatam, I. Phishing website detection using machine learning algorithms. Int. J. Comput. Appl. 2018, 181, 45–47.

- [16] Ahammad, S.H.; Kale, S.D.; Upadhye, G.D.; Pande, S.D.; Babu, E.V.; Dhumane, A.V.; Bahadur, M.D.K.J. Phishing URL detection using machine learning methods. Adv. Eng. Softw. 2022, 173, 103288.

- [17] Heidari, A.; Jamali, M.A.J.; Navimipour, N.J.; Akbarpour, S. A QoS-Aware Technique for Computation Offloading in IoT-Edge Platforms Using a Convolutional Neural Network and Markov Decision Process. IT Prof. 2023, 25, 24–39.

- [18] Heidari, A.; Navimipour, N.J.; Unal, M. A Secure Intrusion Detection Platform Using Blockchain and Radial Basis Function Neural Networks for Internet of Drones. IEEE Internet Things J. 2023, 10, 8445–8454.

- [19] Catillo, M.; Pecchia, A.; Villano, U. A Deep Learning Method for Lightweight and Cross-Device IoT Botnet Detection. Appl. Sci. 2023, 13, 837.

- [20] Nwakanma, C.I.; Ahakonye, L.A.C.; Njoku, J.N.; Odirichukwu, J.C.; Okolie, S.A.; Uzondu, C.; Ndubuisi Nweke, C.C.; Kim, D.S. Explainable Artificial Intelligence (XAI) for Intrusion Detection and Mitigation in Intelligent Connected Vehicles: A Review. Appl. Sci. 2023, 13, 1252.

- [21] Le, H.; Pham, Q.; Sahoo, D.; Hoi, S.C. URLNet: Learning a URL representation with deep learning for malicious URL detection. arXiv 2018, arXiv:1802.03162.

- [22] Tajaddodianfar, F.; Stokes, J.W.; Gururajan, A. Texception: A character/word-level deep learning model for phishing URL detection. In Proceedings of the ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 2857–2861.

- [23] Jiang, J.; Chen, J.; Choo, K.K.R.; Liu, C.; Liu, K.; Yu, M.; Wang, Y. A deep learning based online malicious URL and DNS detection scheme. In Security and Privacy in Communication Networks, Proceedings of the 13th International Conference, Secure Comm 2017, Niagara Falls, ON, Canada, 22–25 October 2017; Proceedings 13; Springer: Cham, Switzerland, 2018; pp. 438–448.

- [24] Alshehri, M.; Abugabah, A.; Algarni, A.; Almotairi, S. Character-level word encoding deep learning model for combating cyber threats in phishing URL detection. Comput. Electr. Eng. 2022, 100, 107868.

- [25] Aljabri, M.; Mirza, S. Phishing attacks detection using machine learning and deep learning models. In Proceedings of the 2022 7th International Conference on Data Science and Machine Learning Applications (CDMA), Riyadh, Saudi Arabia, 1–3 March 2022; pp. 175–180.

- [26] Patgiri, R.; Biswas, A.; Nayak, S. deepBF: Malicious URL detection using learned bloom filter and evolutionary deep learning. Comput. Commun. 2023, 200, 30–41.

- [27] Wang, Y.; Zhu, W.; Xu, H.; Qin, Z.; Ren, K.; Ma, W. A Large-Scale Pretrained Deep Model for Phishing URL Detection. In Proceedings of the ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- [28] Xuan, C.D.; Nguyen, H.D.; Tisenko, V.N. Malicious URL detection based on machine learning. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 148–153.

- [29] Wu, T.; Wang, M.; Xi, Y.; Zhao, Z. Malicious URL Detection Model Based on Bidirectional Gated Recurrent Unit and Attention Mechanism. Appl. Sci. 2022, 12, 12367.

- [30] Abdul Samad, S.R.; Balasubaramanian, S.; Al-Kaabi, A.S.; Sharma, B.; Chowdhury, S.; Mehbodniya, A.; Webber, J.L.; Bostani, A. Analysis of the Performance Impact of Fine-Tuned Machine Learning Model for Phishing URL Detection. Electronics 2023, 12, 1642.

- [31] Ozcan, A.; Catal, C.; Donmez, E.; Senturk, B. A hybrid DNN–LSTM model for detecting phishing URLs. Neural Comput. Appl. 2023, 35, 4957–4973.

- [32] Tan, C.C.L.; Chiew, K.L.; Yong, K.S.; Sebastian, Y.; Than, J.C.M.; Tiong, W.K. Hybrid phishing detection using joint visual and textual identity. Expert Syst. Appl. 2023, 220, 119723.

- [33] Opara, C.; Wei, B.; Chen, Y. HTMLPhish: Enabling phishing web page detection by applying deep learning techniques on HTML analysis. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8.

- [34] Pandey, P.; Mishra, N. Phish-Sight: A new approach for phishing detection using dominant colors on web pages and machine learning. Int. J. Inf. Secur. 2023, 1–11.

- [35] Aljofey, A.; Jiang, Q.; Rasool, A.; Chen, H.; Liu, W.; Qu, Q.; Wang, Y. An effective detection approach for phishing websites using URL and HTML features. Sci. Rep. 2022, 12, 8842.

- [36] Benavides-Astudillo, E.; Fuertes, W.; Sanchez-Gordon, S.; Rodriguez-Galan, G.; Martínez-Cepeda, V.; Nuñez-Agurto, D. Comparative Study of Deep Learning Algorithms in the Detection of Phishing Attacks Based on HTML and Text Obtained from Web Pages. In International Conference on Applied Technologies, Proceedings of the 4th International Conference, ICAT 2022, Quito, Ecuador, 23–25 November 2022; Springer: Cham, Switzerland, 2022; pp. 386–398.

- [37] Paturi, R.; Swathi, L.; Pavithra, K.S.; Mounika, R.; Alekhya, C. Detection of Phishing Attacks using Visual Similarity Model. In Proceedings of the 2022 International Conference on Applied Artificial Intelligence and Computing (ICAAIC), Salem, India, 9–11 May 2022; pp. 1355–1361.

- [38] Ariyadasa, S.; Fernando, S.; Fernando, S. Combining Long-Term Recurrent Convolutional and Graph Convolutional Networks to Detect Phishing Sites Using URL and HTML. IEEE Access 2022, 10, 82355–82375.

- [39] Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- [40] Du, C.; Sun, H.; Wang, J.; Qi, Q.; Liao, J. Adversarial and domain-aware BERT for cross-domain sentiment analysis. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 June 2020; pp. 4019–4028.

- [41] Tzeng, E.; Hoffman, J.; Saenko, K.; Darrell, T. Adversarial discriminative domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7167–7176.

- [42] Shi, Y.; Chen, G.; Li, J. Malicious domain name detection based on extreme machine learning. Neural Process. Lett. 2018, 48, 1347–1357.

- [43] Xue, F.; Shi, Z.; Wei, F.; Lou, Y.; Liu, Y.; You, Y. Go wider instead of deeper. AAAI Conf. Artif. Intell. 2022, 36, 8779–8787.

- [44] Bengio, Y. Deep learning of representations: Looking forward. In Statistical Language and Speech Processing, Proceedings of the First International Conference, SLSP 2013, Tarragona, Spain, 29–31 July 2013; Proceedings 1; Springer: Cham, Switzerland, 2013; pp. 1–37.

- [45] Shazeer, N.; Mirhoseini, A.; Maziarz, K.; Davis, A.; Le, Q.; Hinton, G.; Dean, J. Outrageously large neural networks: The sparsely-gated mixture-of-experts layer. arXiv 2017, arXiv:1701.06538.

- [46] Srinivasan, S.; Vinayakumar, R.; Arunachalam, A.; Alazab, M.; Soman, K. DURLD: Malicious URL detection using deep learning-based character level representations. In Malware Analysis Using Artificial Intelligence and Deep Learning; Springer: Berlin/Heidelberg, Germany, 2021; pp. 535–554.

- [47] Castell-Uroz, I.; Poissonnier, T.; Manneback, P.; Barlet-Ros, P. URL-based Web tracking detection using deep learning. In Proceedings of the 2020 16th International Conference on Network and Service Management (CNSM), Izmir, Turkey, 2–6 November 2020; pp. 1–5.

- [48] Rajalakshmi, R.; Aravindan, C. A Naive Bayes approach for URL classification with supervised feature selection and rejection framework. Comput. Intell. 2018, 34, 363–396.

- [49] Wang, C.; Chen, Y. TCURL: Exploring hybrid transformer and convolutional neural network on phishing URL detection. Knowl.-Based Syst. 2022, 258, 109955.

| Metric | URLNet | DURLD | DTD | FS-NB | TCURL | PhishBERT | Our Method |
|---|---|---|---|---|---|---|---|
| Accuracy | 0.9793 | 0.9704 | 0.9767 | 0.9718 | 0.9748 | 0.9856 | 0.9861 |
| TPR | 0.9839 | 0.9795 | 0.9698 | 0.9681 | 0.9714 | 0.9852 | 0.9896 |
| FPR | 0.0253 | 0.0387 | 0.0164 | 0.0245 | 0.0218 | 0.0140 | 0.0174 |
| AUC | 0.9843 | 0.9813 | 0.9751 | 0.9736 | 0.9787 | 0.9905 | 0.9932 |
| F1-Score | 0.9794 | 0.9707 | 0.9765 | 0.9717 | 0.9747 | 0.9856 | 0.9861 |

| Data Portion | Metric | URLNet | DURLD | DTD | FS-NB | TCURL | PhishBERT | Our Method |
|---|---|---|---|---|---|---|---|---|
| 70% of Data | Accuracy | 0.9756 | 0.9752 | 0.9661 | 0.9679 | 0.9737 | 0.9810 | 0.9853 |
| | TPR | 0.9698 | 0.9620 | 0.9558 | 0.9565 | 0.9619 | 0.9730 | 0.9839 |
| | FPR | 0.0239 | 0.0236 | 0.0330 | 0.0311 | 0.0252 | 0.0183 | 0.0146 |
| | F1-Score | 0.8687 | 0.8659 | 0.8244 | 0.8322 | 0.8589 | 0.8950 | 0.9176 |
| 30% of Data | Accuracy | 0.9175 | 0.9084 | 0.8962 | 0.9063 | 0.8918 | 0.9237 | 0.9567 |
| | TPR | 0.8908 | 0.8809 | 0.8596 | 0.8870 | 0.8632 | 0.8991 | 0.9395 |
| | FPR | 0.0801 | 0.0891 | 0.1005 | 0.0919 | 0.1056 | 0.0741 | 0.0417 |
| | F1-Score | 0.6425 | 0.6155 | 0.5796 | 0.6118 | 0.5704 | 0.6623 | 0.7832 |
| 10% of Data | Accuracy | 0.7887 | 0.7927 | 0.7673 | 0.7438 | 0.8080 | 0.8458 | 0.8878 |
| | TPR | 0.7320 | 0.7348 | 0.7059 | 0.7060 | 0.7658 | 0.8000 | 0.8708 |
| | FPR | 0.2062 | 0.2020 | 0.2271 | 0.2528 | 0.1882 | 0.1500 | 0.1107 |
| | F1-Score | 0.3657 | 0.3711 | 0.3355 | 0.3145 | 0.3990 | 0.4634 | 0.5637 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Y.; Ma, W.; Xu, H.; Liu, Y.; Yin, P. A Lightweight Multi-View Learning Approach for Phishing Attack Detection Using Transformer with Mixture of Experts. Appl. Sci. 2023, 13, 7429. https://doi.org/10.3390/app13137429
