Biosignals Monitoring of First Responders for Cognitive Load Estimation in Real-Time Operation

Abstract

Featured Application

1. Introduction

2. Theoretical Framework

2.1. Biosignals

- Awareness: The degree to which respondents are aware that their emotions are being captured. Physiological emotion signals are considered more implicit than self-reporting, which relies on the user’s subjective experience.

- Obtrusiveness: The user’s experience of the medium. Sensors attached to the human body, such as electromyography (EMG) or electrooculography (EOG) electrodes, have been reported to be obtrusive.

- Invasiveness: The feasibility of use in real-life settings. Standard computer equipment (webcams for recording facial expressions, keyboard pressure or mouse clicks taken from log files) is considered non-invasive, in contrast with extra equipment (professional cameras or artificial labs) or long self-report questionnaires.

- Task relevance: Measurement is applied in parallel with the user’s task (real-time) without interrupting the learning process. Task irrelevance is the main flaw of self-reporting.

- Electromyography (EMG): a muscle’s response to stimulation by a nerve.

- Electroencephalography (EEG): brain activity.

- Galvanic Skin Response (GSR), also called Electrodermal Activity (EDA) or Skin Conductance (SC): electrical activity in the skin.

- Electrocardiogram (ECG): heart activity (heart rate, inter-beat interval, heart rate variability).

- Electrooculogram (EOG): pupil size and eye movement.

- Blood Volume Pulse (BVP): relative amount of blood in an area.

- Respiration: respiration rate and depth of breath.
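The ECG entry in the list above names heart rate, inter-beat interval, and heart rate variability. As a minimal sketch of how these relate, the following Python functions derive mean heart rate and two common variability indices (SDNN and RMSSD) from a list of inter-beat intervals in milliseconds. The function names and the choice of these two indices are assumptions made for illustration; the text does not state which variability measure is used.

```python
import math


def heart_rate_bpm(ibi_ms):
    """Mean heart rate in beats per minute from inter-beat intervals (ms)."""
    mean_ibi = sum(ibi_ms) / len(ibi_ms)
    return 60000.0 / mean_ibi  # 60 000 ms per minute


def sdnn(ibi_ms):
    """Sample standard deviation of the inter-beat intervals (ms)."""
    mean_ibi = sum(ibi_ms) / len(ibi_ms)
    variance = sum((x - mean_ibi) ** 2 for x in ibi_ms) / (len(ibi_ms) - 1)
    return math.sqrt(variance)


def rmssd(ibi_ms):
    """Root mean square of successive interval differences (ms)."""
    diffs = [b - a for a, b in zip(ibi_ms, ibi_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


if __name__ == "__main__":
    intervals = [800, 810, 790, 800]  # illustrative values, not measured data
    print(heart_rate_bpm(intervals), sdnn(intervals), rmssd(intervals))
```

Both indices need at least two intervals; a real pipeline would also have to reject ectopic beats and motion artefacts before computing them.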

2.2. Cognitive Load

- Cardiovascular and respiratory measurements: heart rate, heart rate variability, respiratory measurements, blood volume pulse, inter-beat interval, and other cardiovascular features.

- Eye activity: pupil dilation, blink rate, fixation, eye-tracking, and other ocular indices. Pupil diameter can be used as an indicator of informational mental strain [46]. Protective eye reflexes first regulate the amount of incoming light; beyond that, increasing mental strain widens the pupils. Parasympathetic activity decreases pupil diameter, whereas sympathetic activity increases it.

- Electrodermal and temperature measurements: Electrodermal activity (EDA) sensors measure sympathetic nervous system arousal and features related to stress, engagement, and excitement. The infrared thermopile sensor reads skin temperature.

- Brain activity: electroencephalography (EEG) measures electrical potential from the cortex, while functional magnetic resonance imaging (fMRI) and functional near-infrared spectroscopy (fNIRS) measure the changes in blood flow that occur with brain activity.

3. Materials and Methods

3.1. Physiological Variables Selection

3.2. Sensors

3.2.1. Chest Band

3.2.2. Wristband

3.2.3. HoloLens

3.2.4. Facial Information

3.3. System Integration

- Docker-phone receives the data of the wristband and chest band sent by the phone.

- Docker-holo receives the data sent by the HoloLens.

- Docker-serial receives the data of the facial information sent by the microcontroller.

- Docker-mongo runs the MongoDB database where all the incoming data are stored.

- Docker-flask runs a Flask web interface for monitoring all the processes externally.
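The division of labour among the containers listed above can be sketched in a few lines of Python. This is a toy in-process model and not the authors' implementation: `InMemoryStore`, `ingest`, `status`, and the source keys are hypothetical names, and a plain list stands in for the MongoDB database.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List

# Hypothetical mapping from data source to the receiving container named in the text.
RECEIVERS = {
    "phone": "Docker-phone",      # wristband and chest band data relayed by the phone
    "hololens": "Docker-holo",    # data sent by the HoloLens
    "serial": "Docker-serial",    # facial information sent by the microcontroller
}


@dataclass
class InMemoryStore:
    """Stand-in for the database run by Docker-mongo (not a real MongoDB driver)."""
    documents: List[Dict[str, Any]] = field(default_factory=list)

    def insert(self, doc: Dict[str, Any]) -> None:
        self.documents.append(doc)


def ingest(store: InMemoryStore, source: str, timestamp: float,
           payload: Dict[str, Any]) -> Dict[str, Any]:
    """Tag an incoming message with its receiver and store it."""
    if source not in RECEIVERS:
        raise ValueError(f"unknown source: {source}")
    doc = {"receiver": RECEIVERS[source], "source": source,
           "timestamp": timestamp, "payload": payload}
    store.insert(doc)
    return doc


def status(store: InMemoryStore) -> Dict[str, int]:
    """Per-receiver document counts, the kind of summary a monitoring page might show."""
    counts = {name: 0 for name in RECEIVERS.values()}
    for doc in store.documents:
        counts[doc["receiver"]] += 1
    return counts


if __name__ == "__main__":
    store = InMemoryStore()
    ingest(store, "phone", 0.0, {"heart_rate": 72})
    ingest(store, "hololens", 0.1, {"gaze": [0.1, 0.2]})
    print(status(store))
```

Keeping one receiver per data source means a failure in one input path does not stop the others from writing to the shared store, which is the usual motivation for this kind of split.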

4. Experimental Tests

4.1. In-Lab Scenario

4.2. Practice Scenario

5. Results

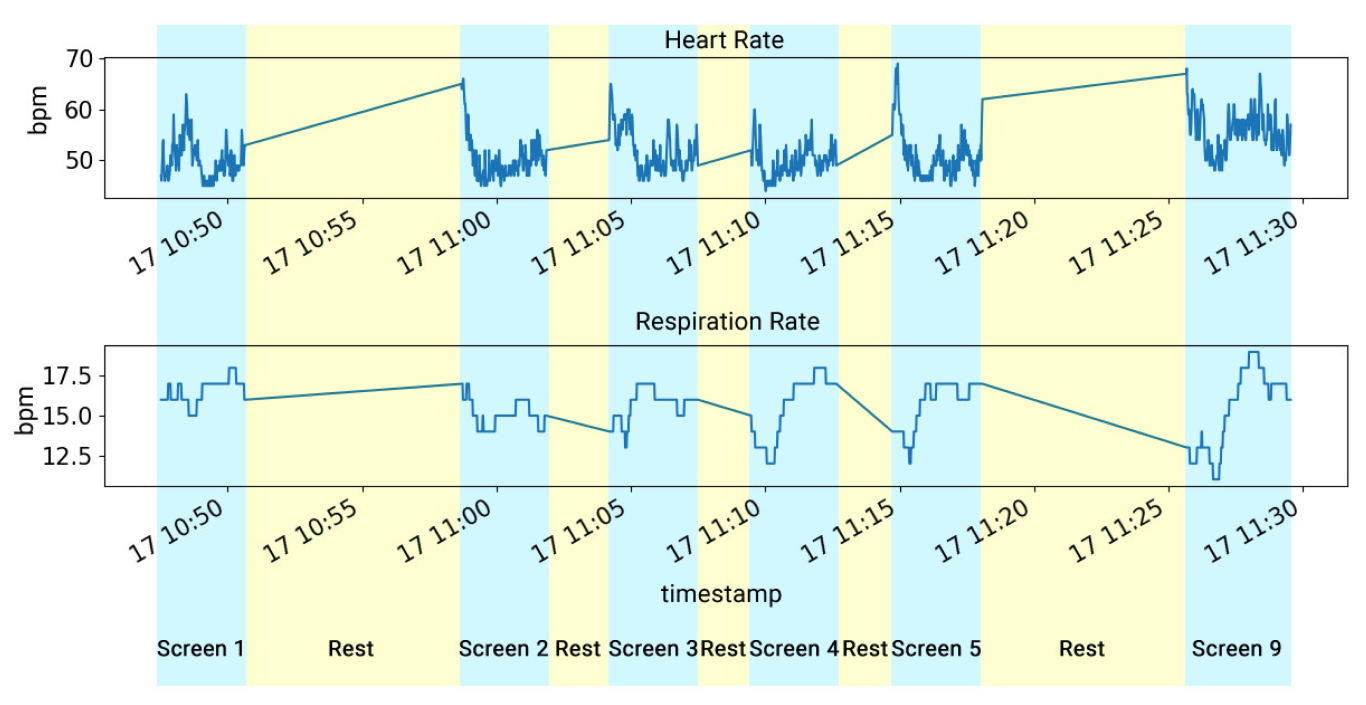

5.1. In-Lab Tests

5.2. Practice Tests

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Lv, Z.; Li, Y. Wearable Sensors for Vital Signs Measurement: A Survey. J. Sens. Actuator Netw. 2022, 11, 19.

- Lucke, J.A.; De Gelder, J.; Blomaard, L.C.; Heringhaus, C.; Alsma, J.; Schuit, S.C.E.K.N.; Brink, A.; Anten, S.; Blauw, G.J.; De Groot, B.; et al. Vital signs and impaired cognition in older emergency department patients: The APOP study. PLoS ONE 2019, 14, e0218596.

- Kebe, M.; Gadhafi, R.; Mohammad, B.; Sanduleanu, M.; Saleh, H.; Al-Qutayri, M. Human vital signs detection methods and potential using radars: A Review. Sensors 2020, 20, 1454.

- Stuart, T.; Hanna, J.; Gutruf, P. Wearable devices for continuous monitoring of biosignals: Challenges and opportunities. APL Bioeng. 2022, 6, 021502.

- Leenen, J.P.; Leerentveld, C.; van Dijk, J.D.; van Westreenen, H.L.; Schoonhoven, L.; Patijn, G.A. Current evidence for continuous vital signs monitoring by wearable wireless devices in hospitalized adults: Systematic Review. J. Med. Internet Res. 2020, 22, e18636.

- Ray, T.; Choi, J.; Reeder, J.; Lee, S.P.; Aranyosi, A.J.; Ghaffari, R.; Rogers, J.A. Soft, skin-interfaced wearable systems for sports science and analytics. Curr. Opin. Biomed. Eng. 2019, 9, 47–56.

- Dunn, J.; Kidzinski, L.; Runge, R.; Witt, D.; Hicks, J.L.; Rose, S.M.S.-F.; Li, X.; Bahmani, A.; Delp, S.L.; Hastie, T.; et al. Wearable sensors enable personalized predictions of clinical laboratory measurements. Nat. Med. 2021, 27, 1105–1112.

- Grothe, J.; Tucker, S.; Blake, A.; Achutan, C.; Medcalf, S.; Suwondo, T.; Fruhling, A.; Yoder, A. Exploring First Responders’ Use and Perceptions on Continuous Health and Environmental Monitoring. Int. J. Environ. Res. Public Health 2023, 20, 4787.

- Prabhu, M.; Sai Shibu, N.B.; Rao, S.N. Rescutrack: An edge computing-enabled Vitals Monitoring System for first responders. In Proceedings of the 2022 IEEE 3rd Global Conference for Advancement in Technology (GCAT), Bangalore, India, 7–9 October 2022.

- Katiyar, K.; Kumari, P.; Srivastava, A. Interpretation of Biosignals and Application in Healthcare. In Information and Communication Technology (ICT) Frameworks in Telehealth; Mittal, M., Battineni, G., Eds.; TELe-Health; Springer: Cham, Switzerland, 2022.

- Pluntke, U.; Gerke, S.; Sridhar, A.; Weiss, J.; Michel, B. Evaluation and classification of physical and psychological stress in firefighters using heart rate variability. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019.

- Longo, L.; Wickens, C.D.; Hancock, G.; Hancock, P.A. Human Mental Workload: A Survey and a Novel Inclusive Definition. Front. Psychol. 2022, 13, 883321.

- Reimer, B.; Mehler, B. The impact of cognitive workload on physiological arousal in young adult drivers: A field study and simulation validation. Ergonomics 2011, 54, 932–942.

- Picard, R.W.; Vyzas, E.; Healey, J. Toward machine emotional intelligence: Analysis of affective physiological state. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 1175–1191.

- Chen, S.; Epps, J. Automatic classification of eye activity for cognitive load measurement with emotion interference. Comput. Methods Programs Biomed. 2013, 110, 111–124.

- Greenberg, K.; Zheng, R. Cognitive load theory and its measurement: A study of secondary tasks in relation to working memory. J. Cogn. Psychol. 2022, 34, 497–515.

- Rai, A.A.; Ahirwal, M.K. Electroencephalogram-Based Cognitive Load Classification during Mental Arithmetic Task; Lecture Notes in Electrical Engineering; Springer: Singapore, 2022; pp. 479–487.

- Ayres, P.; Lee, J.Y.; Paas, F.; van Merrienboer, J.J.G. The Validity of Physiological Measures to Identify Differences in Intrinsic Cognitive Load. Front. Psychol. 2021, 12, 702538.

- Cooper, G. Cognitive load theory as an aid for instructional design. Australas. J. Educ. Technol. 1990, 6, 108–113.

- Biondi, F.N.; Saberi, B.; Graf, F.; Cort, J.; Pillai, P.; Balasingam, B. Distracted worker: Using pupil size and blink rate to detect cognitive load during manufacturing tasks. Appl. Ergon. 2023, 106, 103867.

- Sweller, J.; van Merriënboer, J.J.G.; Paas, F. Cognitive Architecture and Instructional Design: 20 Years Later. Educ. Psychol. Rev. 2019, 31, 261–292.

- Van Merriënboer, J.J.G.; Kirschner, P.A. Ten Steps to Complex Learning: A Systematic Approach to Four-Component Instructional Design, 3rd ed.; Routledge: New York, NY, USA, 2018.

- Workman, M. Cognitive styles and the effects of stress from cognitive load and time pressures on judgemental decision making with learning simulations: Implications for HRD. Int. J. Hum. Resour. Dev. Manag. 2016, 16, 30–46.

- Lyell, D.; Magrabi, F.; Coiera, E. The Effect of Cognitive Load and Task Complexity on Automation Bias in Electronic Prescribing. Hum. Factors J. Hum. Factors Ergon. Soc. 2018, 60, 1008–1021.

- Schaefer, M.; Rumpel, F.; Sadrieh, A.; Reimann, M.; Denke, C. Personal involvement is related to increased search motivation and associated with activity in left BA44—A pilot study. Front. Hum. Neurosci. 2015, 9, 144.

- Fruhling, A.; Reisher, E. Assessing Decision Makers’ cognitive Load for a First Responder Health Monitoring System. SAIS Proceedings, 30. 2022. Available online: https://aisel.aisnet.org/cgi/viewcontent.cgi?article=1029&context=sais2022 (accessed on 18 June 2023).

- Hudlicka, E. To feel or not to feel: The role of affect in human–computer interaction. Int. J. Hum. Comput. Stud. 2003, 59, 1–32.

- Larraga-García, B.; Quintana-Díaz, M.; Gutiérrez, Á. Simulation-Based Education in Trauma Management: A Scoping Review. Int. J. Environ. Res. Public Health 2022, 19, 13546.

- Lillywhite, B.; Wolbring, G. Emergency and Disaster Management, Preparedness, and Planning (EDMPP) and the ‘Social’: A Scoping Review. Sustainability 2022, 14, 13519.

- Khanal, S.; Medasetti, U.S.; Mashal, M.; Savage, B.; Khadka, R. Virtual and Augmented Reality in the Disaster Management Technology: A Literature Review of the Past 11 years. Front. Virtual Real. 2022, 3, 843195.

- Regal, G.; Murtinger, M.; Schrom-Feiertag, H. Augmented CBRNE Responder-Directions for Future Research. In Proceedings of the 13th Augmented Human International Conference (AH2022), Winnipeg, MB, Canada, 26–27 May 2022; Association for Computing Machinery: New York, NY, USA, 2022; Article 10; pp. 1–4.

- Reuter, C.; Ludwig, T.; Pipek, V. Ad Hoc Participation in Situation Assessment: Supporting Mobile Collaboration in Emergencies. ACM Trans. Comput.-Hum. Interact. 2014, 21, 1–26.

- Buchner, J.; Buntins, K.; Kerres, M. The impact of augmented reality on cognitive load and performance: A systematic review. J. Comput. Assist. Learn. 2021, 38, 285–303.

- Steffen, J.H.; Gaskin, J.E.; Meservy, T.O.; Jenkins, J.L. The Missing Framework for Virtually Assisted Activities. In Proceedings of the International Conference on Information Systems, Seoul, Republic of Korea, 10–13 December 2017; Available online: https://dblp.org/rec/conf/icis/SteffenGMJ17 (accessed on 18 June 2023).

- Mirbabaie, M.; Fromm, J. Reducing the Cognitive Load of Decision-Makers in Emergency Management through Augmented Reality. In Proceedings of the 27th European Conference on Information Systems (ECIS), Stockholm & Uppsala, Sweden, 8–14 June 2019; Available online: https://aisel.aisnet.org/ecis2019_rip/50 (accessed on 18 June 2023).

- Mayer, R.E.; Fiorella, L. Principles for Reducing Extraneous Processing in Multimedia Learning: Coherence, Signaling, Redundancy, Spatial Contiguity, and Temporal Contiguity Principles. In The Cambridge Handbook of Multimedia Learning, 2nd ed.; Mayer, R.E., Ed.; Cambridge University Press: Cambridge, UK, 2014; pp. 279–315.

- Ortony, A.; Clore, G.L.; Collins, A. The Cognitive Structure of Emotions; Cambridge University Press: Cambridge, UK, 1988.

- Feidakis, M. A Review of Emotion-Aware Systems for e-Learning in Virtual Environments. In Formative Assessment, Learning Data Analytics and Gamification; Elsevier: Amsterdam, The Netherlands, 2016; pp. 217–242.

- Feidakis, M.; Rangoussi, M.; Kasnesis, P.; Patrikakis, C.; Kogias, D.; Charitopoulos, A. Affective Assessment in Distance Learning: A Semi-explicit Approach. Int. J. Technol. Learn. 2019, 26, 19–34.

- Owen, E.; Sweller, J. What do students learn while solving mathematics problems? J. Educ. Psychol. 1985, 77, 272–284.

- Paas, F.G.W.C. Training strategies for attaining transfer of problem-solving skill in statistics: A cognitive-load approach. J. Educ. Psychol. 1992, 84, 429–434.

- Naismith, L.M.; Cheung, J.J.H.; Ringsted, C.; Cavalcanti, R.B. Limitations of subjective cognitive load measures in simulation-based procedural training. Med. Educ. 2015, 49, 805–814.

- Brian Gore. NASA-TLX—Task Load Index. Available online: https://humansystems.arc.nasa.gov/groups/TLX/ (accessed on 15 December 2020).

- Galy, E.; Cariou, M.; Mélan, C. What is the relationship between mental workload factors and cognitive load types? Int. J. Psychophysiol. 2012, 83, 269–275.

- Ayres, P. Something old, something new from cognitive load theory. Comput. Human Behav. 2020, 113, 106503.

- Chen, S.; Epps, J. Using Task-Induced Pupil Diameter and Blink Rate to Infer Cognitive Load. Human Comput. Interact. 2014, 29, 390–413.

- Kimura, T.; Takemura, N.; Nakashima, Y.; Kobori, H.; Nagahara, H.; Numao, M.; Shinohara, K. Warmer Environments Increase Implicit Mental Workload Even If Learning Efficiency Is Enhanced. Front. Psychol. 2020, 11, 568.

- Ashworth, E.T.; Cotter, J.D.; Kilding, A.E. Impact of elevated core temperature on cognition in hot environments within a military context. Eur. J. Appl. Physiol. 2021, 121, 1061–1071.

- Saitis, C.; Parvez, M.Z.; Kalimeri, K. Cognitive Load Assessment from EEG and Peripheral Biosignals for the Design of Visually Impaired Mobility Aids. Wirel. Commun. Mob. Comput. 2018, 2018, 8971206.

- Setz, C.; Arnrich, B.; Schumm, J.; La Marca, R.; Tröster, G.; Ehlert, U. Discriminating Stress From Cognitive Load Using a Wearable EDA Device. IEEE Trans. Inf. Technol. Biomed. 2009, 14, 410–417.

- Boucsein, W. Electrodermal Activity; Springer: Boston, MA, USA, 2012.

- Trutschel, U.; Heinze, C.; Sirois, B.; Golz, M.; Sommer, D.; Edwards, D. Heart Rate Measures Reflect the Interaction of Low Mental Workload and Fatigue during Driving Simulation. In Proceedings of the 4th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Portsmouth, UK, 17–19 October 2012; pp. 261–264.

- Solhjoo, S.; Haigney, M.C.; McBee, E.; van Merrienboer, J.J.G.; Schuwirth, L.; Artino, A.R., Jr.; Battista, A.; Ratcliffe, T.A.; Lee, H.D.; Durning, S.J. Heart Rate and Heart Rate Variability Correlate with Clinical Reasoning Performance and Self-Reported Measures of Cognitive Load. Sci. Rep. 2019, 9, 14668.

- Hidalgo-Muñoz, A.R.; Béquet, A.J.; Astier-Juvenon, M.; Pépin, G.; Fort, A.; Jallais, C.; Tattegrain, H.; Gabaude, C. Respiration and Heart Rate Modulation Due to Competing Cognitive Tasks While Driving. Front. Hum. Neurosci. 2019, 12, 525.

- Criée, C.; Sorichter, S.; Smith, H.; Kardos, P.; Merget, R.; Heise, D.; Berdel, D.; Köhler, D.; Magnussen, H.; Marek, W.; et al. Body plethysmography—Its principles and clinical use. Respir. Med. 2011, 105, 959–971.

- Singh, G.; Tee, A.; Trakoolwilaiwan, T.; Taha, A.; Olivo, M. Method of respiratory rate measurement using a unique wearable platform and an adaptive optical-based approach. Intensiv. Care Med. Exp. 2020, 8, 15.

- Chu, M.; Nguyen, T.; Pandey, V.; Zhou, Y.; Pham, H.N.; Bar-Yoseph, R.; Radom-Aizik, S.; Jain, R.; Cooper, D.M.; Khine, M. Respiration rate and volume measurements using wearable strain sensors. NPJ Digit. Med. 2019, 2, 8.

- Brunken, R.; Plass, J.L.; Leutner, D. Direct Measurement of Cognitive Load in Multimedia Learning. Educ. Psychol. 2003, 38, 53–61.

- Lallé, S.; Conati, C.; Carenini, G. Prediction of individual learning curves across information visualizations. User Model. User-Adapt. Interact. 2016, 26, 307–345.

- Anderson, E.W.; Potter, K.C.; Matzen, L.E.; Shepherd, J.F.; Preston, G.A.; Silva, C.T. A User Study of Visualization Effectiveness Using EEG and Cognitive Load. Comput. Graph. Forum 2011, 30, 791–800.

- Cole, M.J.; Gwizdka, J.; Liu, C.; Belkin, N.J.; Zhang, X. Inferring user knowledge level from eye movement patterns. Inf. Process. Manag. 2013, 49, 1075–1091.

- Sonkusare, S.; Ahmedt-Aristizabal, D.; Aburn, M.J.; Nguyen, V.T.; Pang, T.; Frydman, S.; Denman, S.; Fookes, C.; Breakspear, M.; Guo, C.C. Detecting changes in facial temperature induced by a sudden auditory stimulus based on deep learning-assisted face tracking. Sci. Rep. 2019, 9, 4729.

- Dias, R.D.; Ngo-Howard, M.C.; Boskovski, M.T.; Zenati, M.; Yule, S.J. Systematic review of measurement tools to assess surgeons’ intraoperative cognitive workload. Br. J. Surg. 2018, 105, 491–501.

- Cardone, D.; Perpetuini, D.; Filippini, C.; Mancini, L.; Nocco, S.; Tritto, M.; Rinella, S.; Giacobbe, A.; Fallica, G.; Ricci, F.; et al. Classification of Drivers’ Mental Workload Levels: Comparison of Machine Learning Methods Based on ECG and Infrared Thermal Signals. Sensors 2022, 22, 7300.

- Perpetuini, D.; Filippini, C.; Nocco, S.; Tritto, M.; Cardone, D.; Merla, A. A Machine Learning Approach to Classify Driver Mental Workload as Assessed by Electroencephalography through Infrared Thermal Imaging. In Proceedings of the 2022 E-Health and Bioengineering Conference (EHB), Iasi, Romania, 17–18 November 2022; pp. 1–4.

- Lin, C.J.; Lukodono, R.P. Classification of mental workload in Human-robot collaboration using machine learning based on physiological feedback. J. Manuf. Syst. 2022, 65, 673–685.

- Kasnesis, P.; Toumanidis, L.; Burrello, A.; Chatzigeorgiou, C.; Patrikakis, C.Z. Multi-Head Cross-Attentional PPG and Motion Signal Fusion for Heart Rate Estimation. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Rhodes Island, Greece, 4–10 June 2023.

- Palumbo, A. Microsoft HoloLens 2 in Medical and Healthcare Context: State of the Art and Future Prospects. Sensors 2022, 22, 7709.

- Swani, L.; Tyagi, P. Dockerization (Replacement of VMs). Int. Res. J. Eng. Technol. 2017. Available online: www.irjet.net (accessed on 4 January 2023).

| Device | Sensor Measurement | Sensor Frequency (Hz) | Framework Measurement | Framework Frequency (Hz) |
|---|---|---|---|---|
| Chest Band | Temperature | 1 | Temperature | 1 |
| | Respiration Rate | 25 | Respiration Rate | 1 |
| | ECG | 250 | Heart Rate | 1 |
| Wristband | Accelerometer | 32 | Heart Rate | 0.5 |
| | PPG | 64 | Temperature | 1 |
| | EDA | 4 | GSR | 1 |
| HoloLens | Eye Gaze | 30 | Eye Gaze | 30 |
| Facial | Temperature | 10 | Temperature | 10 |
| | EMG | 1000 | EMG | 1000 |
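The table above lists some signals at a higher rate on the sensor than in the framework, for example respiration rate at 25 Hz against 1 Hz. The text does not say how the lower-rate values are produced. One simple possibility, shown here purely as an assumption, is to average consecutive blocks of samples; `block_average` is a hypothetical helper name and it only handles integer rate ratios.

```python
def block_average(samples, in_hz, out_hz):
    """Downsample by averaging consecutive blocks of in_hz // out_hz samples.

    Assumes in_hz is an integer multiple of out_hz. A trailing partial
    block is dropped rather than averaged over fewer samples.
    """
    if out_hz <= 0 or in_hz % out_hz != 0:
        raise ValueError("in_hz must be a positive integer multiple of out_hz")
    block = in_hz // out_hz
    usable = len(samples) - len(samples) % block
    return [sum(samples[i:i + block]) / block for i in range(0, usable, block)]


if __name__ == "__main__":
    # Two seconds of synthetic 25 Hz data (a ramp, not real respiration data).
    raw = [float(i) for i in range(50)]
    print(block_average(raw, in_hz=25, out_hz=1))
```

Derived quantities such as heart rate from a 250 Hz ECG need beat detection rather than plain averaging, so this sketch applies only to signals that are already in the target unit.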

| Scenario | Profession | Number (Male) | Number (Female) | Age (Years, Mean ± Std) | Experience (Years, Mean ± Std) |
|---|---|---|---|---|---|
| In-Lab Scenario | Doctors | 6 | 18 | 47.50 ± 7.33 | 20.38 ± 7.67 |
| | Nurses | 9 | 20 | 45.34 ± 4.94 | 22.14 ± 5.40 |
| | Technicians | 2 | 6 | 46.59 ± 7.63 | 19.32 ± 7.53 |
| | Firefighters | 5 | 0 | 39.40 ± 7.91 | 9.60 ± 9.85 |
| Practice Scenario | Doctors | 1 | 2 | 41.67 ± 5.73 | 15.00 ± 7.12 |
| | Nurses | 3 | 6 | 42.33 ± 6.86 | 18.89 ± 6.23 |
| | Technicians | 8 | 0 | 40.75 ± 10.49 | 13.88 ± 8.18 |
| | Firefighters | 5 | 0 | 33.33 ± 2.87 | 1.67 ± 0.47 |

| | RR | HR | GSR | Body Temperature | Eye Gaze |
|---|---|---|---|---|---|
| NASA-TLX | 0.8252 | 0.7248 | 0.5334 | 0.3195 | 0.7605 |
| Performance | 0.5344 | 0.6741 | 0.6356 | 0.5968 | 0.5344 |

| | RR | HR | GSR | Body Temperature | Eye Gaze |
|---|---|---|---|---|---|
| NASA-TLX | 0.8976 | 0.5020 | 0.6465 | 0.6651 | 0.8608 |
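The two tables above carry no caption in this text. If their entries are read as correlation coefficients between each biosignal and the NASA-TLX or performance score (an assumption, not something the text states), a value of that kind could be computed as in the sketch below. `pearson_r` is a hypothetical helper, and Pearson's coefficient is chosen here only as the most common option.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (spread_x * spread_y)


if __name__ == "__main__":
    # Made-up numbers for illustration only, not data from the study.
    respiration_rate = [14.0, 16.0, 18.0, 21.0]
    tlx_score = [30.0, 42.0, 55.0, 70.0]
    print(pearson_r(respiration_rate, tlx_score))
```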

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gutiérrez, Á., Blanco, P., Ruiz, V., Chatzigeorgiou, C., Oregui, X., Álvarez, M., Navarro, S., Feidakis, M., Azpiroz, I., Izquierdo, G., Larraga-García, B., Kasnesis, P., Olaizola, I. G., & Álvarez, F. (2023). Biosignals Monitoring of First Responders for Cognitive Load Estimation in Real-Time Operation. Applied Sciences, 13(13), 7368. https://doi.org/10.3390/app13137368