Load Forecasting Based on LVMD-DBFCM Load Curve Clustering and the CNN-IVIA-BLSTM Model

Abstract

1. Introduction

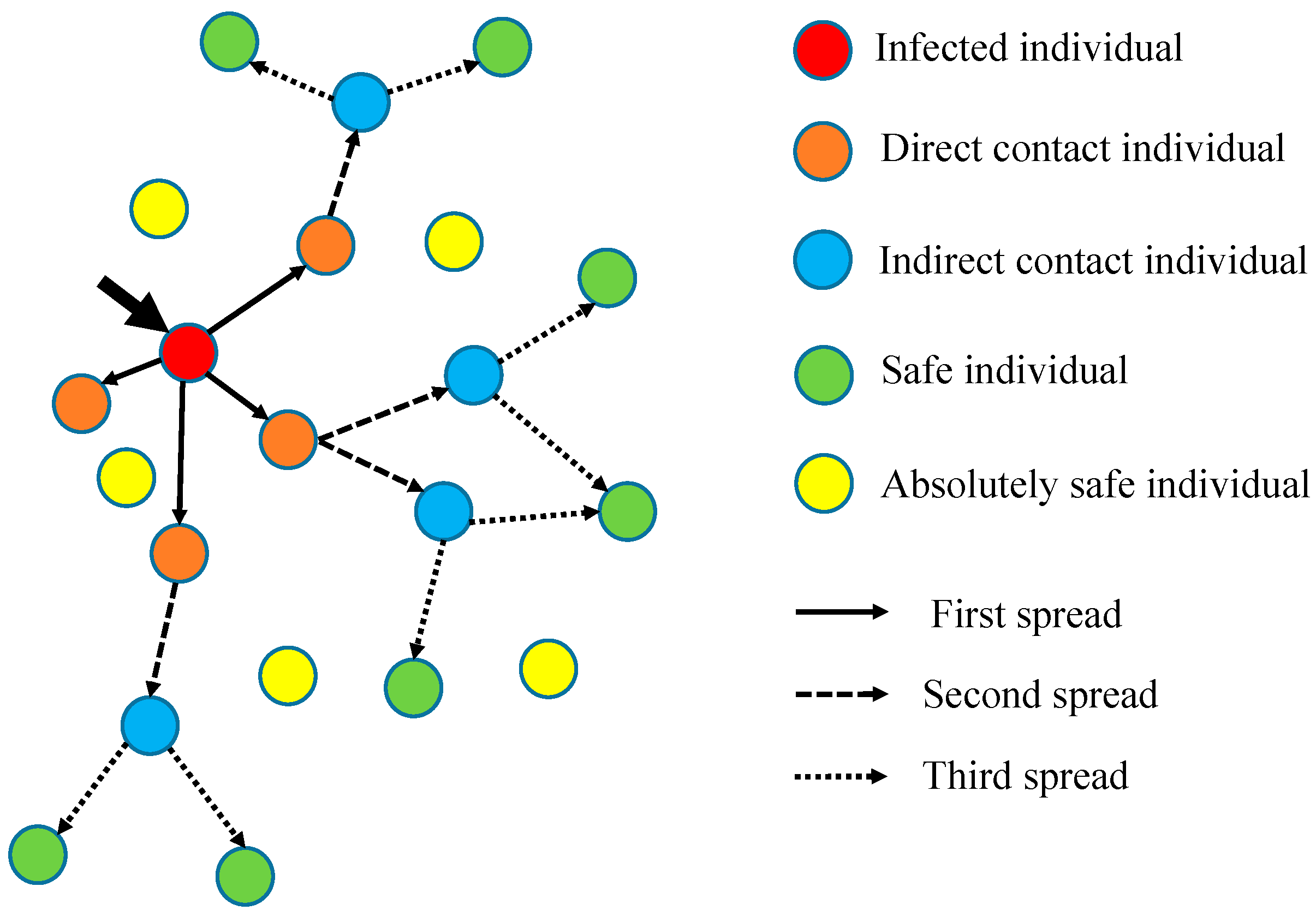

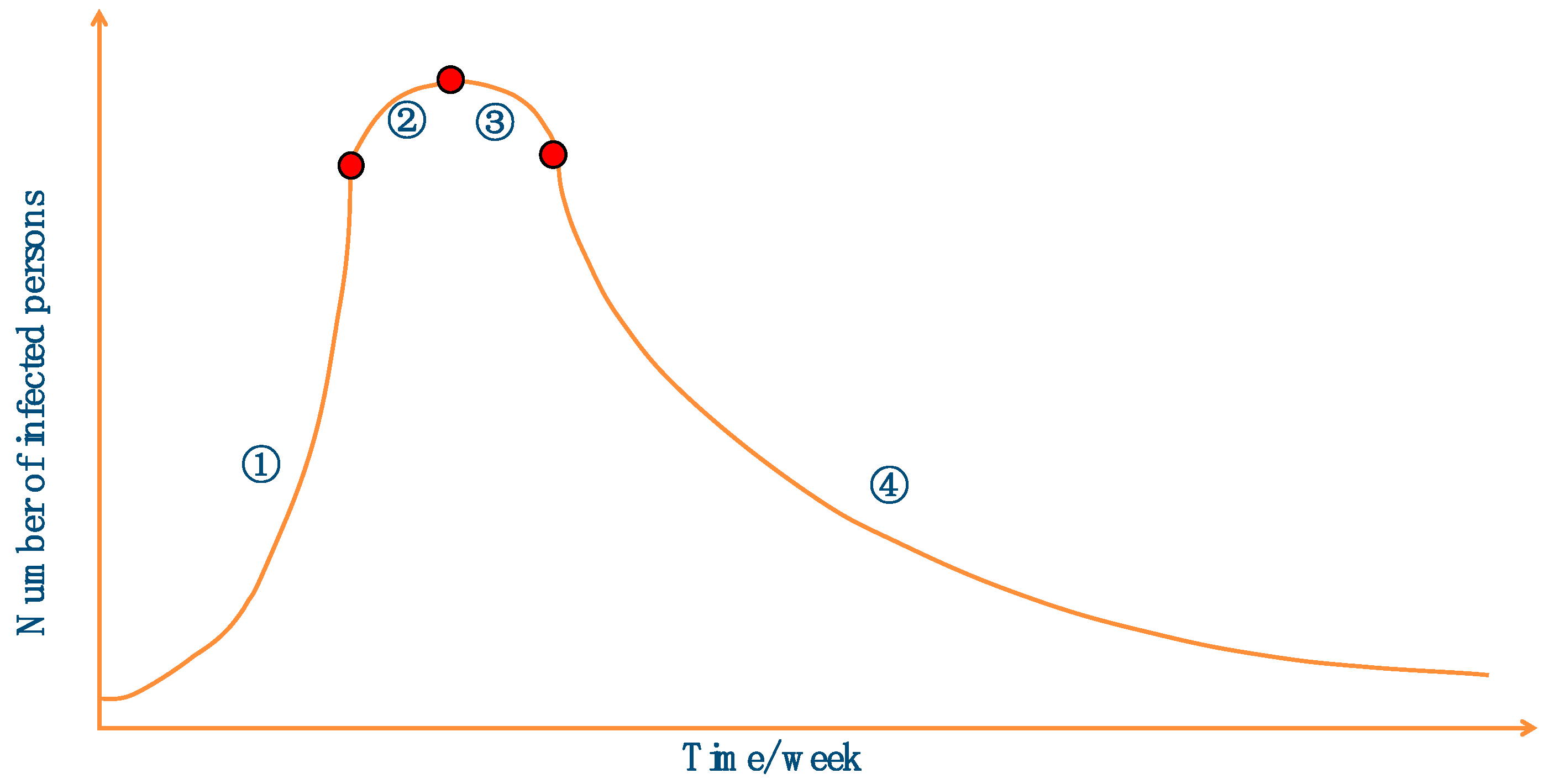

2. Influenza Virus Immunity Optimization Algorithm

2.1. Biological Characteristics

- The initial number of infected individuals is small, and they are randomly distributed in the population. The category to which an individual currently belongs and the corresponding update formula are determined based on the distance between the individual and the infected individual;

- The total population size is kept constant, and absolutely safe individuals move randomly to different locations in the population. The number and location of the other individuals change dynamically as the iterations proceed;

- Individuals in the population have three states: infected, uninfected, and immune. Infected individuals become immune after infecting other individuals and cannot be infected again. A population is considered immune when 80% or more of its individuals are immune;

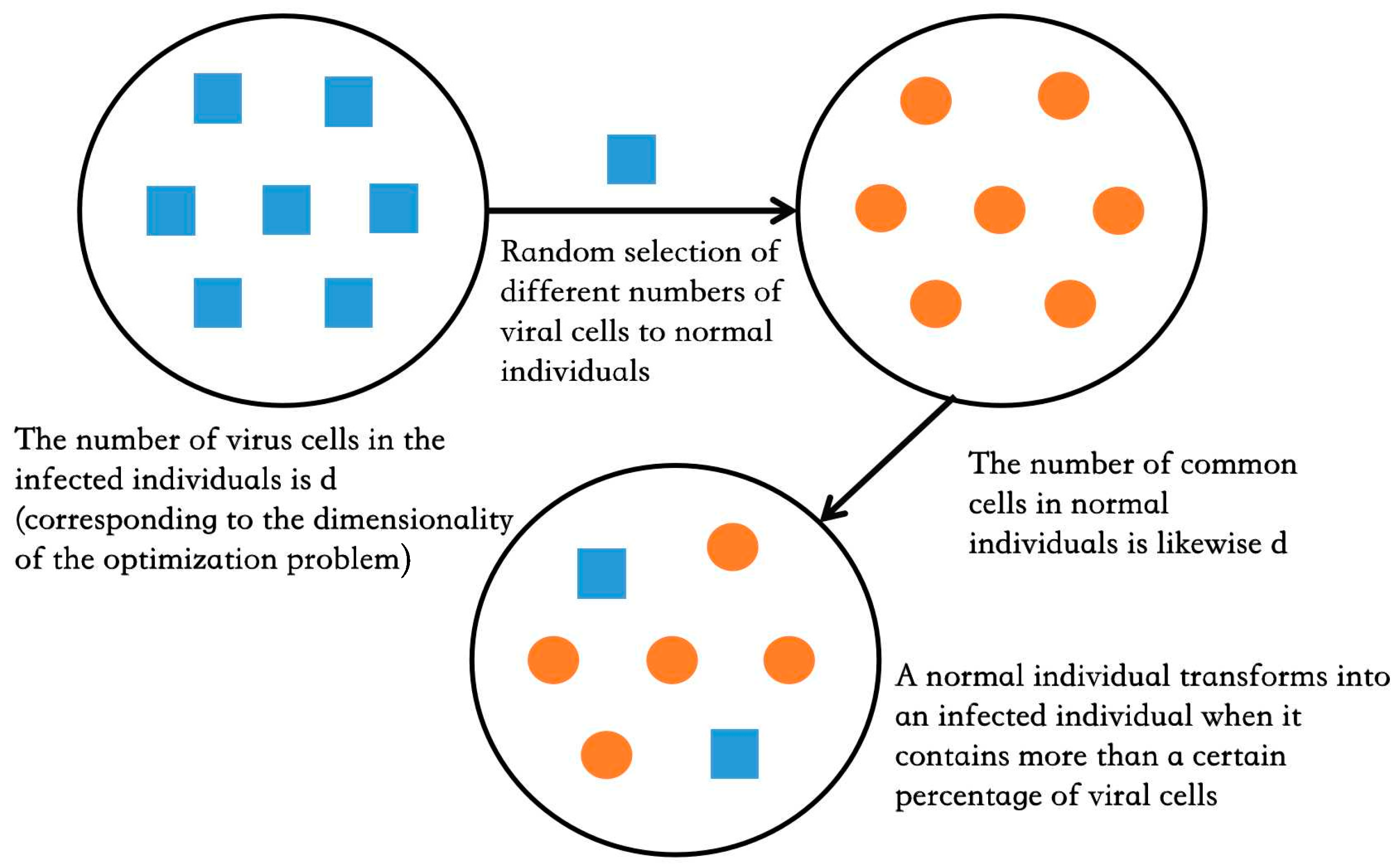

- Individuals are infected by contracting virus cells from an infected person, and the process of virus cell exchange is shown in Figure 2. The influenza virus cells and the diseased cells are taken as the smallest units within an individual to represent the dimension of the optimization problem.
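The state rules in the list above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the names `State`, `is_population_immune`, and `after_infecting_others` are hypothetical, and only the three states and the 80% threshold come from the text.

```python
from enum import Enum


class State(Enum):
    """The three individual states named in the text."""
    UNINFECTED = 0
    INFECTED = 1
    IMMUNE = 2


def is_population_immune(states, threshold=0.8):
    """Return True when the immune share reaches the threshold (80% in the text)."""
    immune = sum(1 for s in states if s is State.IMMUNE)
    return immune / len(states) >= threshold


def after_infecting_others(state):
    """An infected individual becomes immune once it has infected others."""
    return State.IMMUNE if state is State.INFECTED else state


# Illustrative population: 8 immune, 1 infected, 1 uninfected.
population = [State.IMMUNE] * 8 + [State.INFECTED, State.UNINFECTED]
print(is_population_immune(population))
```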

2.2. Mathematical Model

- Infected individuals are updated by Equation (5):

- Extremely susceptible individuals are updated by Equation (6):

- Susceptible individuals are updated by Equation (7):

- Secure individuals are updated by Equation (8):

- Absolutely safe individuals are updated by Equation (9):

| Algorithm 1 IVIA pseudo-code |
|---|
| Input: |
| n: The number of individuals in the population |
| Nmax: The maximum number of iterations |
| m: Initial number of infected individuals (usually set to 1) |
| L: Maximum safe contact distance |
| R: Herd immunity ratio |
| C: The distance between individuals |
| lb, ub: Search boundaries |
| 1: Population initialization |
| 2: Setting parameters |
| 3: while (t < Nmax) |
| 4: Calculate fitness value and sort |
| 5: for i = 1:n |
| 6: if (C < 0.2L) then |
| 7: Use Equation (5) to update the location of the infected individuals |
| 8: Record the current status of the individual |
| 9: else if (0.2L < C < 0.5L) then |
| 10: Use Equation (6) to update the location of the extremely susceptible individuals |
| 11: Record the current status of the individual |
| 12: else if (0.5L < C < 0.8L) then |
| 13: Use Equation (7) to update the location of the susceptible individuals |
| 14: Record the current status of the individual |
| 15: else if (0.8L < C < L) then |
| 16: Use Equation (8) to update the location of the secure individuals |
| 17: Record the current status of the individual |
| 18: else |
| 19: Use Equation (9) to update the location of the absolutely safe individuals |
| 20: end if |
| 21: Recalculate fitness value |
| 22: end for |
| 23: Calculate immunity rate |
| 24: t = t + 1 |
| 25: end while |
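
The branching inside the for-loop of Algorithm 1 can be sketched as follows. This is a hedged illustration only: the thresholds 0.2L, 0.5L, 0.8L, and L come from the pseudo-code, while `category`, `one_pass`, and the identity update rules are hypothetical stand-ins, because Equations (5) to (9) are not reproduced in this text. Distances that fall exactly on a threshold are assigned to the next band here, which is an assumption.

```python
import math

GROUPS = ["infected", "extremely susceptible", "susceptible",
          "secure", "absolutely safe"]


def category(c, L):
    """Map the distance c to an infected individual onto the five groups."""
    if c < 0.2 * L:
        return "infected"               # updated by Equation (5)
    if c < 0.5 * L:
        return "extremely susceptible"  # updated by Equation (6)
    if c < 0.8 * L:
        return "susceptible"            # updated by Equation (7)
    if c < L:
        return "secure"                 # updated by Equation (8)
    return "absolutely safe"            # updated by Equation (9)


def one_pass(population, infected, L, updates):
    """One pass of the for-loop: classify each individual, update it, record its status.

    `updates` maps a group name to a callable (individual, infected) -> new position.
    """
    new_population, status = [], []
    for individual in population:
        group = category(math.dist(individual, infected), L)
        new_population.append(updates[group](individual, infected))
        status.append(group)
    return new_population, status


# Hypothetical placeholder: every group keeps its position unchanged.
updates = {g: (lambda ind, src: list(ind)) for g in GROUPS}
population = [[1.0, 0.0], [3.0, 0.0], [6.0, 0.0], [9.0, 0.0], [12.0, 0.0]]
new_pop, status = one_pass(population, [0.0, 0.0], 10.0, updates)
print(status)
```

Passing the update rules in as callables keeps the control flow separate from the equations, so the real update formulas can be substituted without changing the loop.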

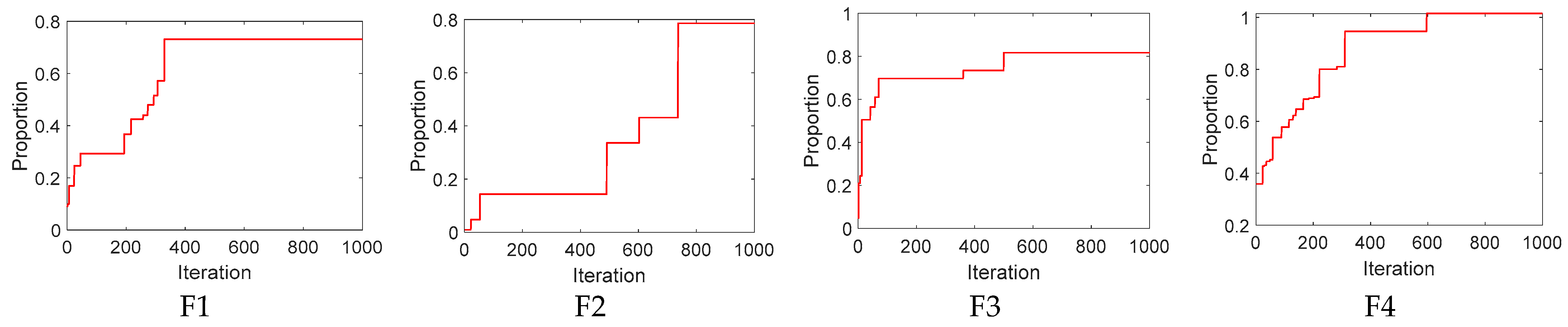

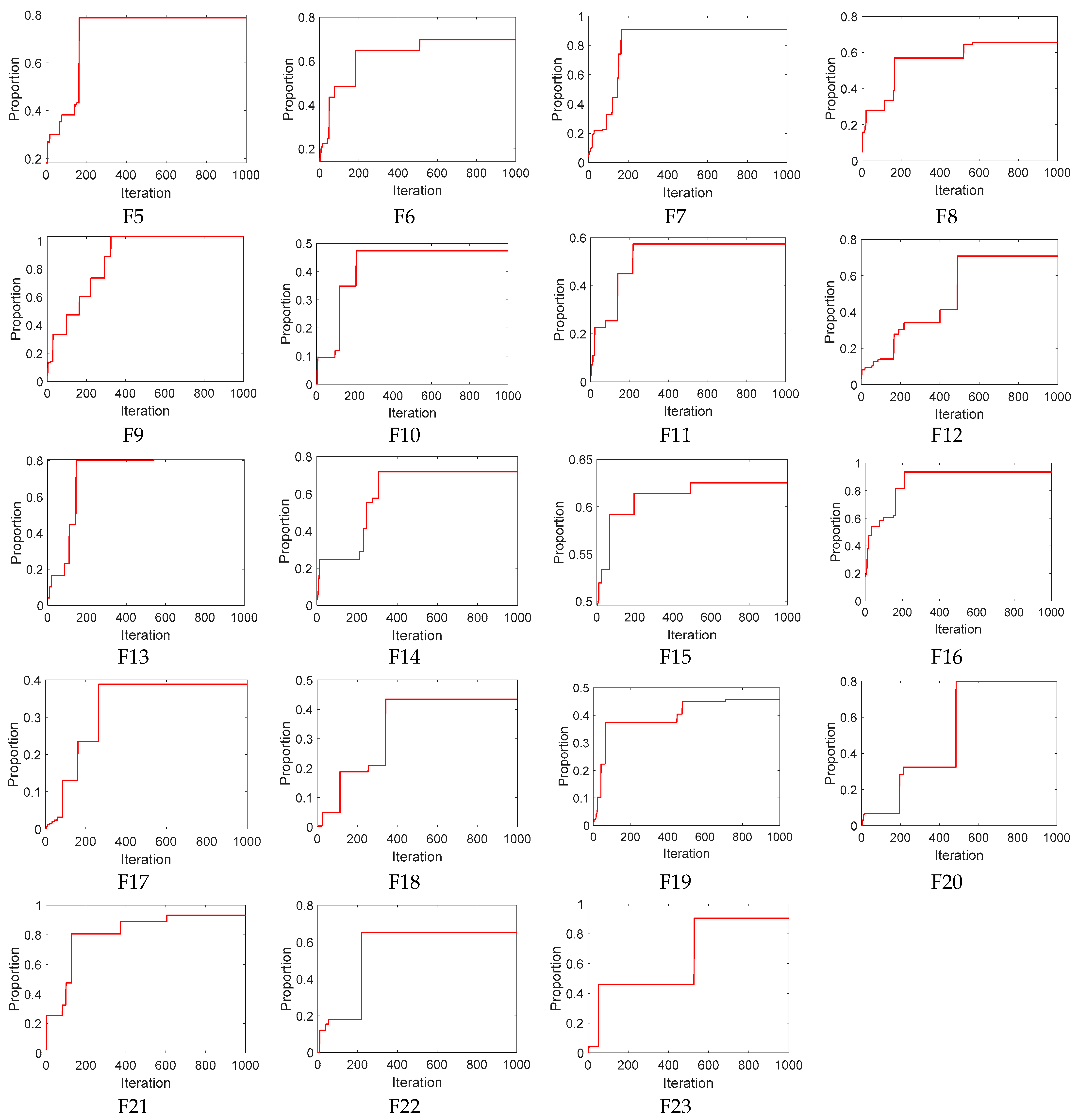

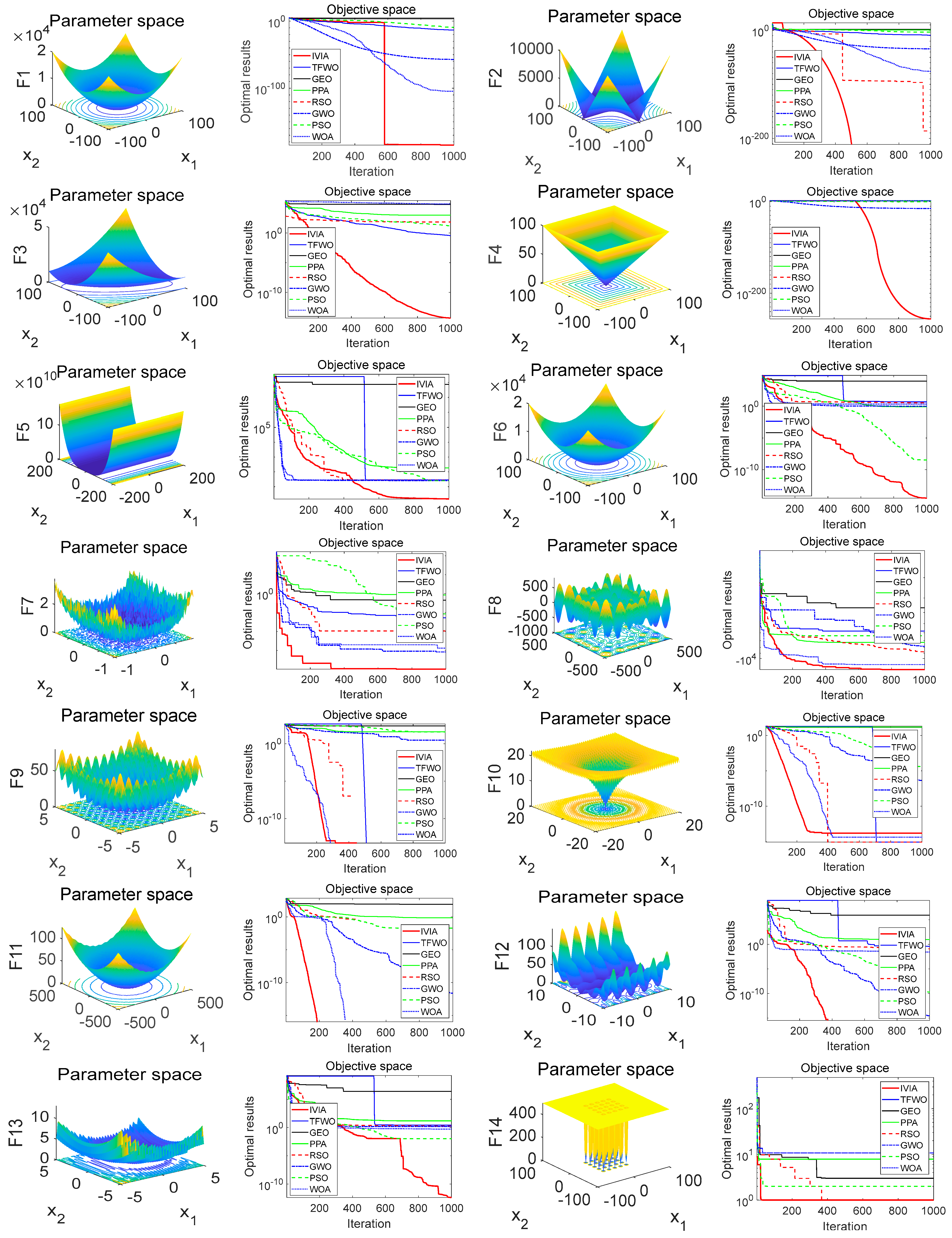

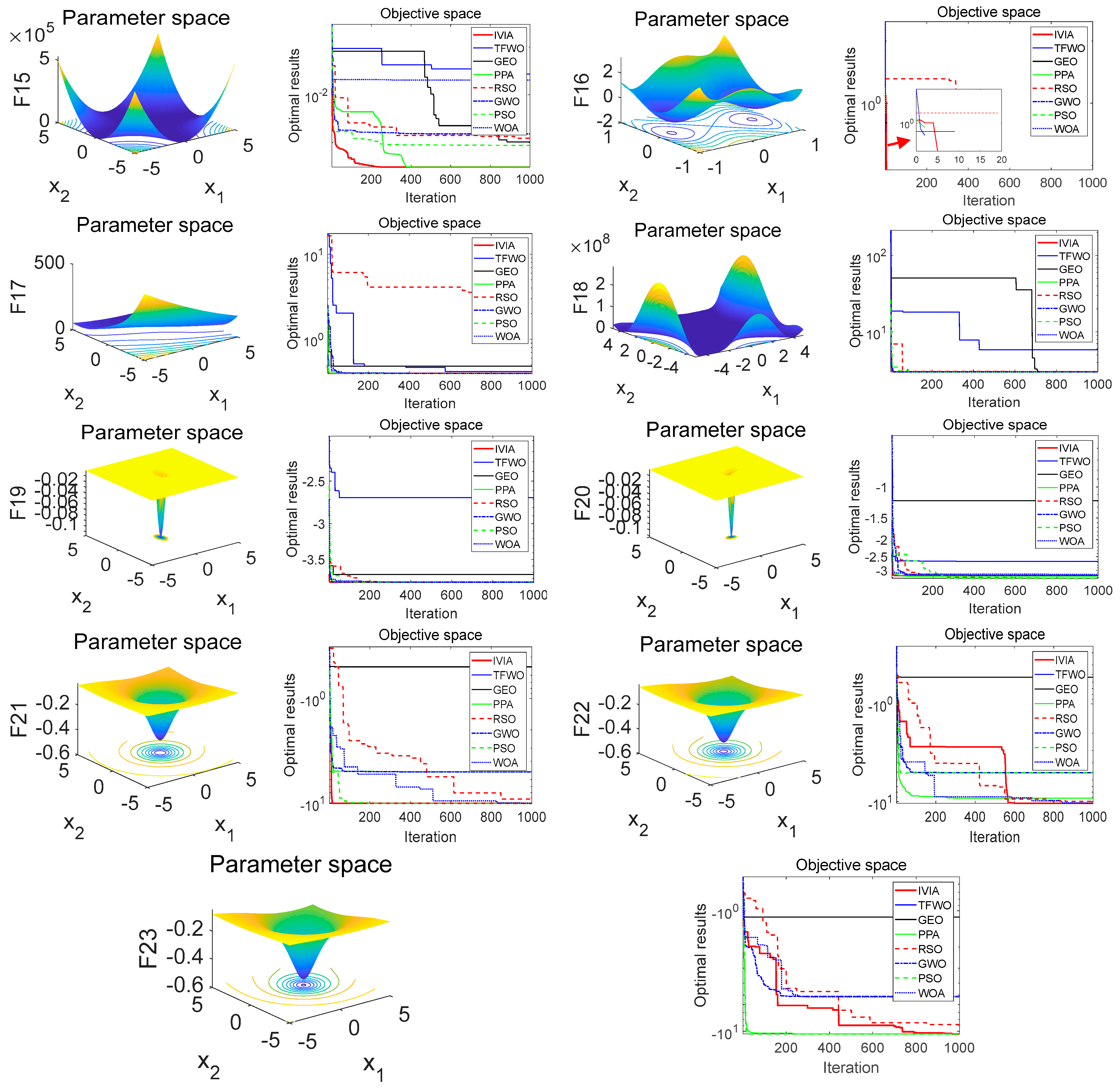

2.3. Algorithm Testing

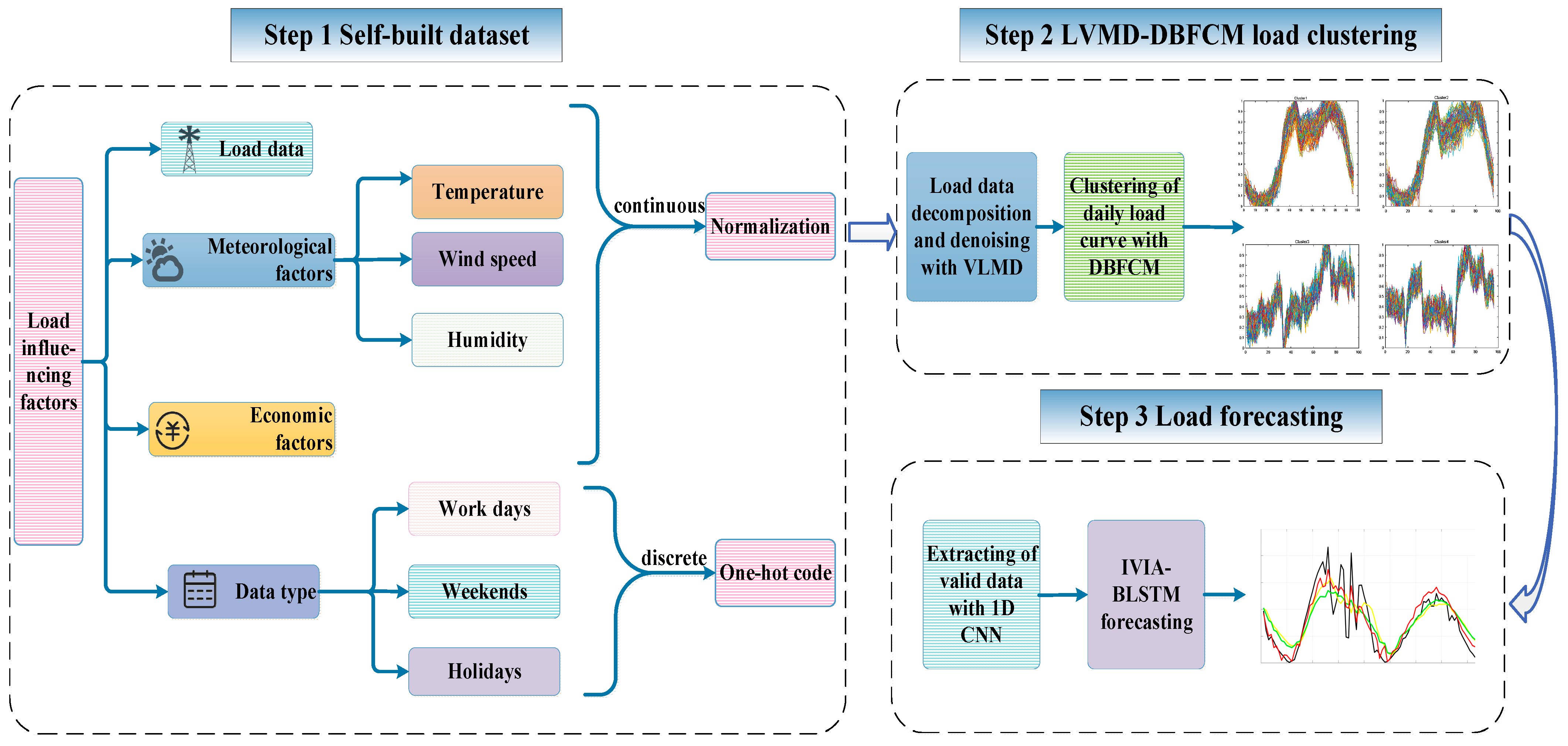

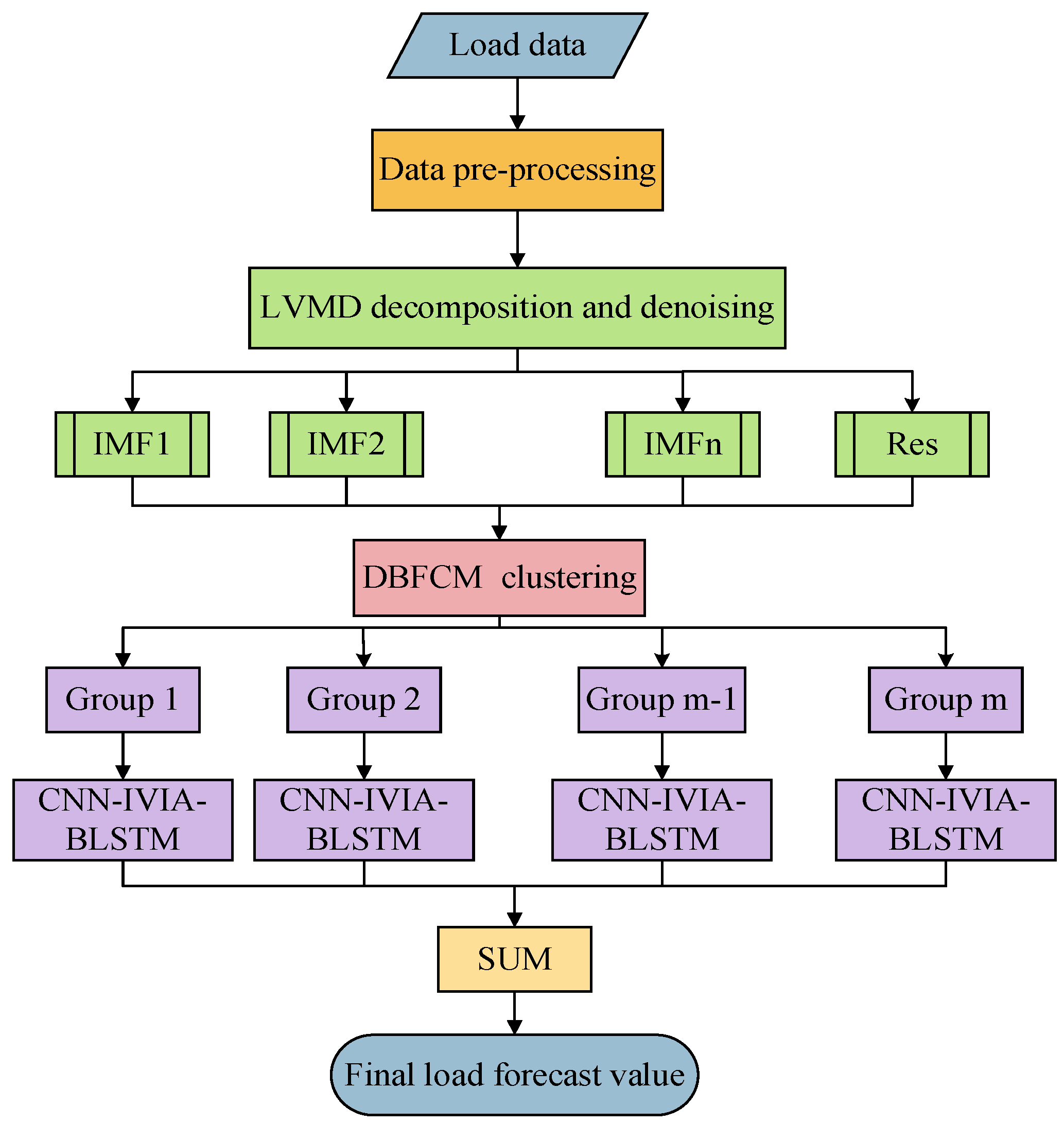

3. Methodology

3.1. Self-Built Dataset

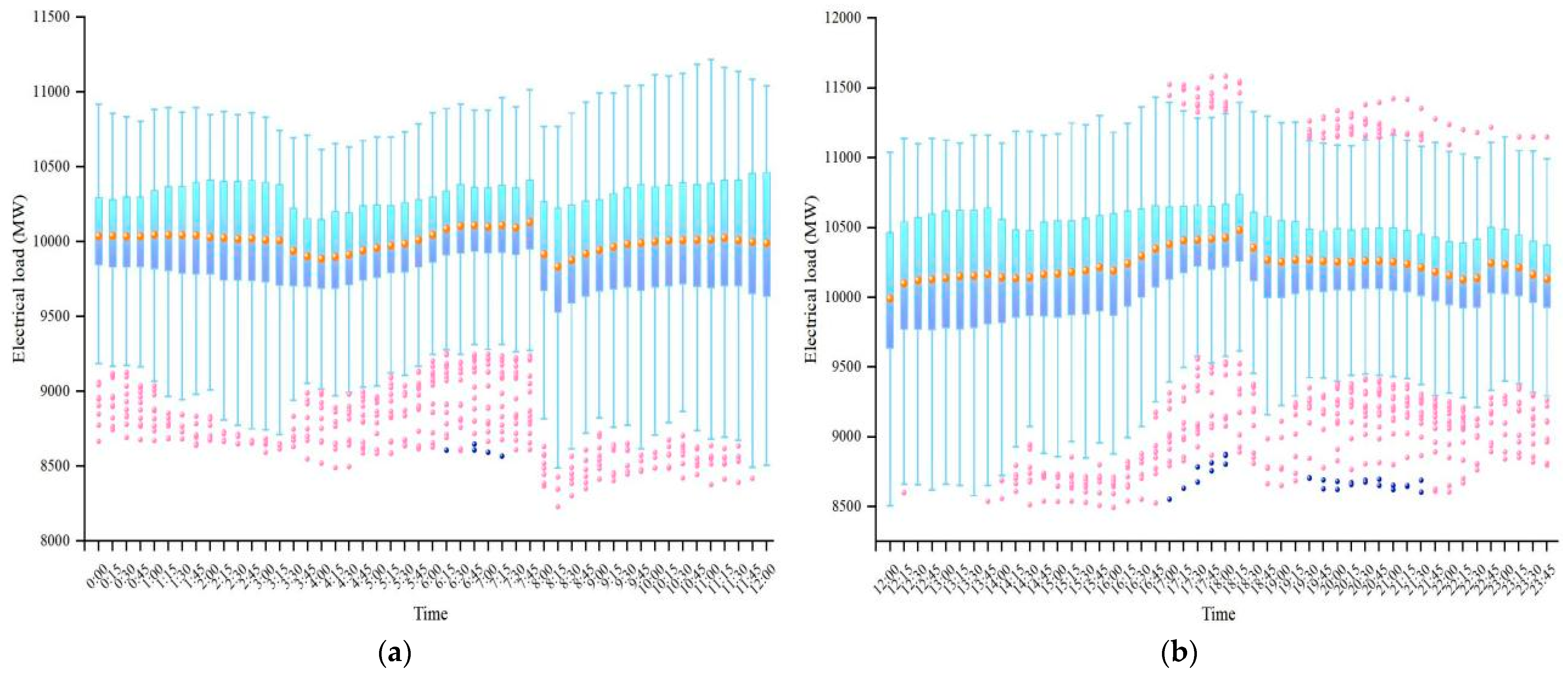

3.1.1. Dataset Visualization

3.1.2. Data Preprocessing

- Abnormal data recognition and correction

- Data normalization
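The two preprocessing steps can be illustrated with a small sketch. The text does not state which detection rule or normalization the authors use, so everything here is an assumption: a k-sigma rule with neighbour averaging for abnormal points, and min-max scaling to [0, 1]. The function names are hypothetical.

```python
def correct_outliers(series, k=3.0):
    """Replace points more than k standard deviations from the mean
    by the average of their neighbours (assumed rule, not from the text)."""
    mean = sum(series) / len(series)
    std = (sum((x - mean) ** 2 for x in series) / len(series)) ** 0.5
    fixed = list(series)
    for i, x in enumerate(series):
        if std > 0 and abs(x - mean) > k * std:
            left = series[i - 1] if i > 0 else series[i + 1]
            right = series[i + 1] if i < len(series) - 1 else series[i - 1]
            fixed[i] = (left + right) / 2
    return fixed


def min_max_normalize(series):
    """Scale the series to [0, 1]; also return the bounds for later inversion."""
    lo, hi = min(series), max(series)
    if hi == lo:
        return [0.0 for _ in series], lo, hi
    return [(x - lo) / (hi - lo) for x in series], lo, hi


def denormalize(scaled, lo, hi):
    """Map normalized values back to the original scale."""
    return [lo + s * (hi - lo) for s in scaled]


raw = [10.0] * 10 + [1000.0] + [10.0] * 9   # one obvious spike
clean = correct_outliers(raw)
scaled, lo, hi = min_max_normalize([100.0, 150.0, 200.0])
print(clean[10], scaled)
```

Keeping the bounds returned by `min_max_normalize` matters in forecasting, because predictions made on the normalized scale have to be mapped back before error metrics are computed in load units.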

3.2. LVMD-DBFCM Imbalanced Data Clustering

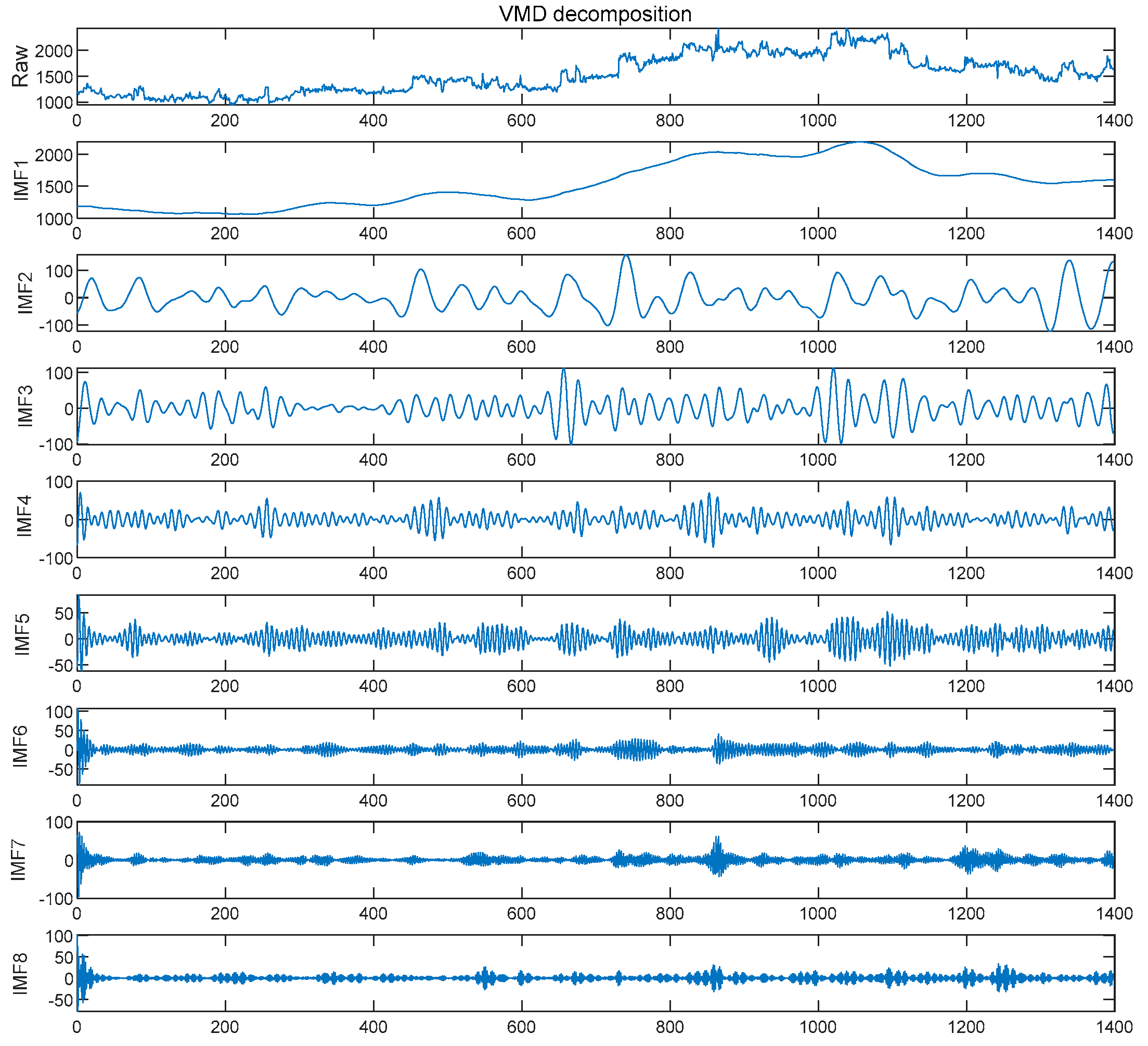

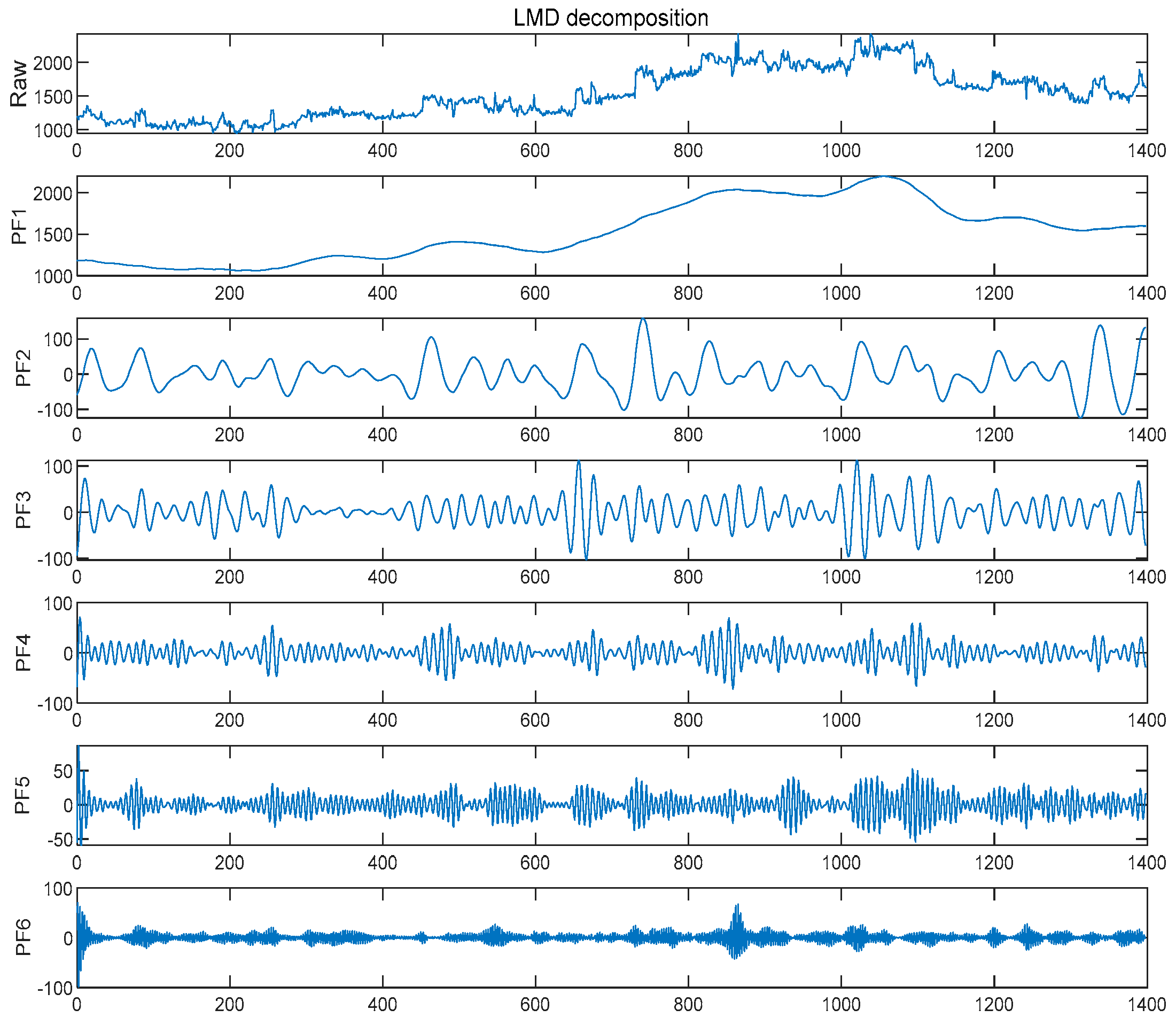

3.2.1. LVMD Double Decomposition Modal Strategy

- VMD Variational Mode Decomposition

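- Initialize the modes $\{u_k^1\}$, the center frequencies $\{\omega_k^1\}$, and the Lagrange multiplier $\lambda^1$, and let $n = 0$.

- Set $n = n + 1$, and update $u_k$ and $\omega_k$.

- Set $k = k + 1$, and repeat the previous step until $k = K$.

- Update $\lambda$ according to Equation (12).

- Repeat steps 2–4 until the convergence condition of Equation (13) is satisfied.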

- After decomposition, the component judged to contain the least time information is regarded as random noise and eliminated. The data are then reconstructed from the remaining modal components, and the reconstructed data are the denoised load data.

- LMD Local Mean Decomposition

- First, find all the extreme points contained in the data series, and then calculate the mean and envelope of the adjacent extreme points according to Equations (14) and (15).

- The local mean function is removed from the original signal, and the resulting signal is demodulated using Equations (17) and (18):

- The PF component is the product of the envelope signal and the pure FM signal. After the PF component is stripped from the original signal, the above steps are repeated on the new signal until the residual component is a monotonic function. The final result of LMD is shown in Equation (20):

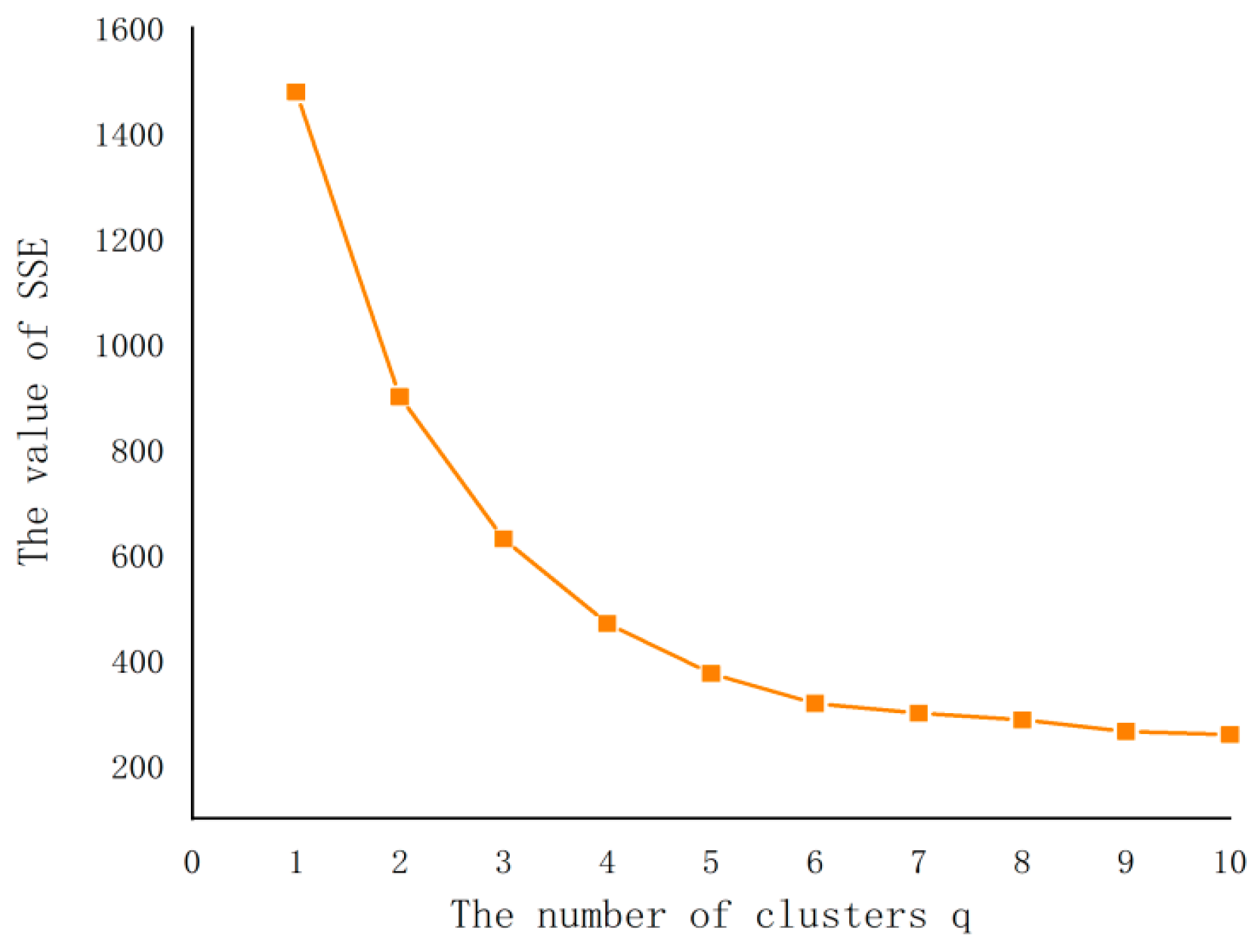

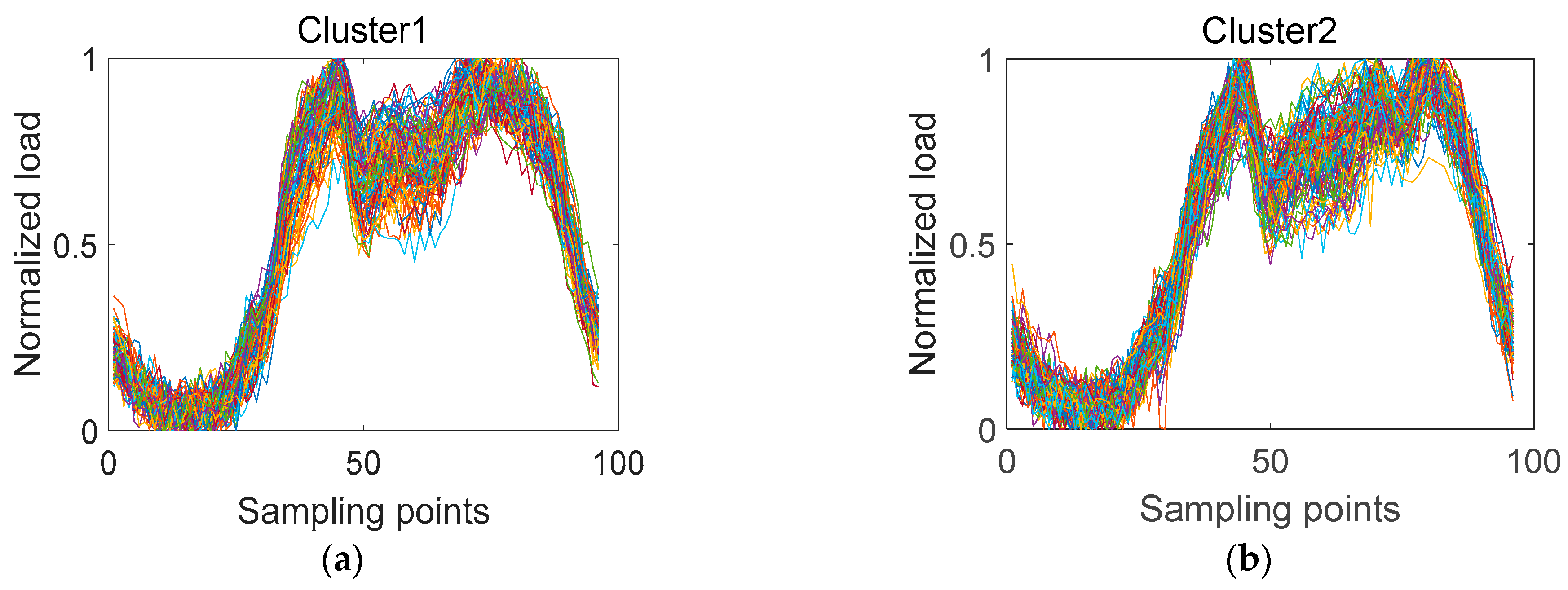
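Two steps of this subsection lend themselves to a short sketch: rebuilding the signal from the modes that remain after the noise component is dropped, and computing the local mean and envelope estimate from adjacent extreme points. The code assumes the standard LMD definitions (half the sum and half the absolute difference of neighbouring extrema), which may differ in detail from Equations (14) and (15). The function names are hypothetical, and how the noise component is chosen is left to the caller.

```python
def reconstruct_without(modes, noise_index):
    """Sum all decomposed modes except the one judged to be noise."""
    kept = [m for k, m in enumerate(modes) if k != noise_index]
    return [sum(values) for values in zip(*kept)]


def local_extrema(x):
    """Values of the interior local maxima and minima of x, in order."""
    return [x[i] for i in range(1, len(x) - 1)
            if (x[i] - x[i - 1]) * (x[i + 1] - x[i]) < 0]


def local_means_and_envelopes(extrema):
    """Local mean and envelope estimate for each pair of adjacent extrema."""
    pairs = list(zip(extrema, extrema[1:]))
    means = [(a + b) / 2 for a, b in pairs]
    envelopes = [abs(a - b) / 2 for a, b in pairs]
    return means, envelopes


modes = [[1.0, 2.0, 3.0], [0.5, 0.5, 0.5], [0.1, -0.1, 0.1]]
denoised = reconstruct_without(modes, noise_index=2)

extrema = local_extrema([0, 2, 0, -1, 0, 3, 1])
means, envelopes = local_means_and_envelopes(extrema)
print(denoised, extrema, means, envelopes)
```

In a full LMD implementation these piecewise values are smoothed (typically by moving averages) into continuous local mean and envelope functions before demodulation; that step is omitted here.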

3.2.2. DBFCM Double Weights Fuzzy C-Means Algorithm

- FCM Fuzzy C-means Algorithm

- DBFCM Double Weights Fuzzy C-means Algorithm

- The affiliation (membership) matrix defines the class volume, which is then introduced as a constraint into the objective function of the traditional FCM algorithm, as shown in Equation (24):

- The load curve is feature-weighted by introducing a weighting factor into the traditional Euclidean distance. The DBFCM is used to perform cluster analysis on the load curves decomposed and reconstructed by LVMD, in order to better determine the optimal number of clusters.
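As a rough illustration of the feature-weighting idea, the sketch below combines a weighted Euclidean distance with the standard FCM membership update. It is not the DBFCM itself: the class-volume constraint of Equation (24) is not implemented, and the names `weighted_distance` and `fcm_memberships`, the weight vector `w`, and the fuzzifier `m = 2` are assumptions made for the example.

```python
def weighted_distance(x, c, w):
    """Euclidean distance with one weight per feature."""
    return sum(wk * (xk - ck) ** 2 for xk, ck, wk in zip(x, c, w)) ** 0.5


def fcm_memberships(points, centers, w, m=2.0):
    """Standard FCM membership update: u_ij is proportional to d_ij^(-2/(m-1))."""
    memberships = []
    for x in points:
        d = [weighted_distance(x, c, w) for c in centers]
        if any(dj == 0 for dj in d):
            # A point that coincides with a center belongs fully to it.
            row = [1.0 if dj == 0 else 0.0 for dj in d]
        else:
            row = [dj ** (-2.0 / (m - 1.0)) for dj in d]
        total = sum(row)
        memberships.append([r / total for r in row])
    return memberships


points = [[0.0, 0.0], [1.0, 0.0], [0.5, 0.0]]
centers = [[0.0, 0.0], [1.0, 0.0]]
U = fcm_memberships(points, centers, w=[1.0, 1.0])
print(U)
```

Raising the weight of a feature stretches distances along it, so curves that differ in that feature are separated more strongly during clustering.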

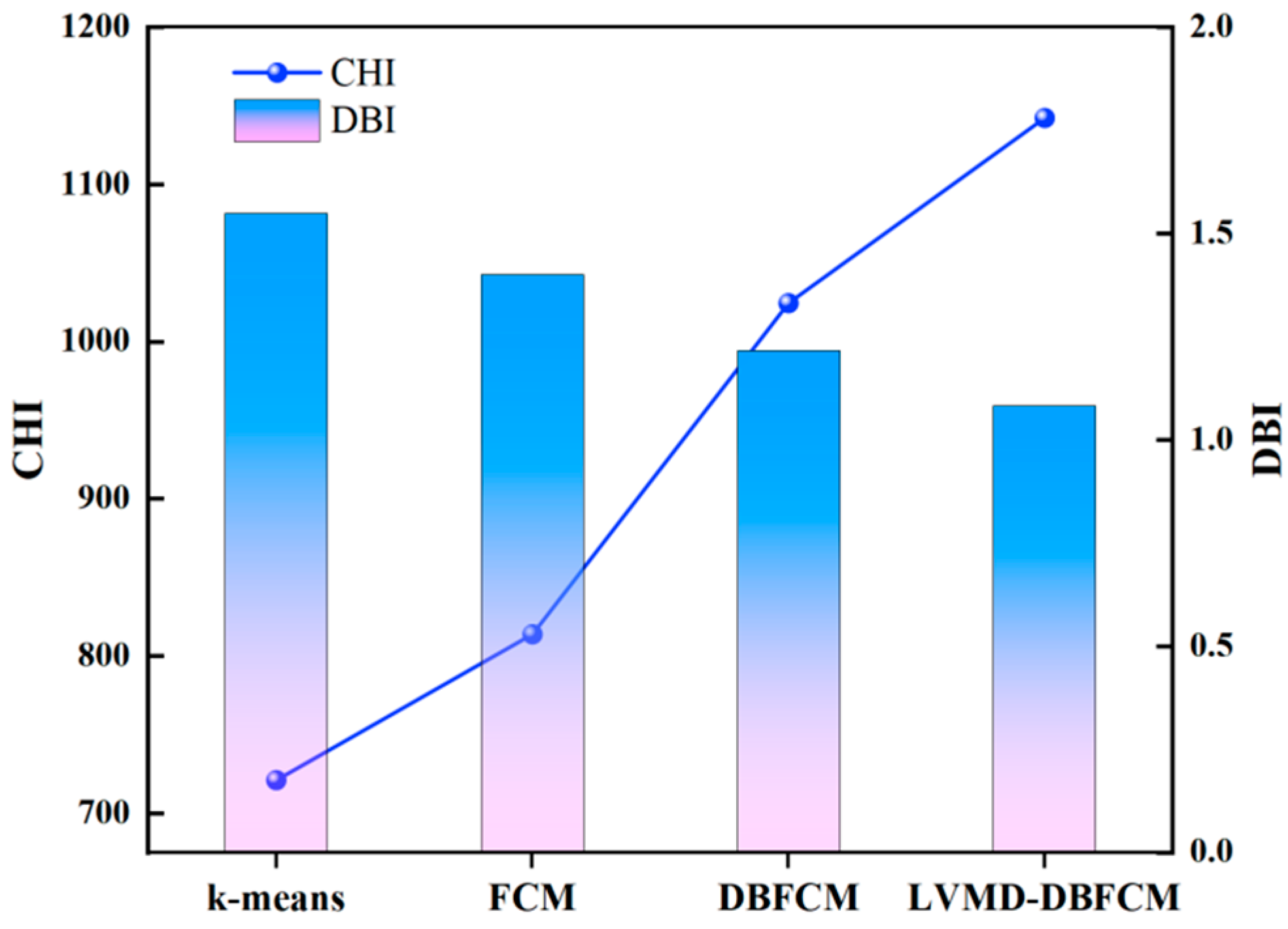

3.2.3. Clustering Validity Quality Evaluation

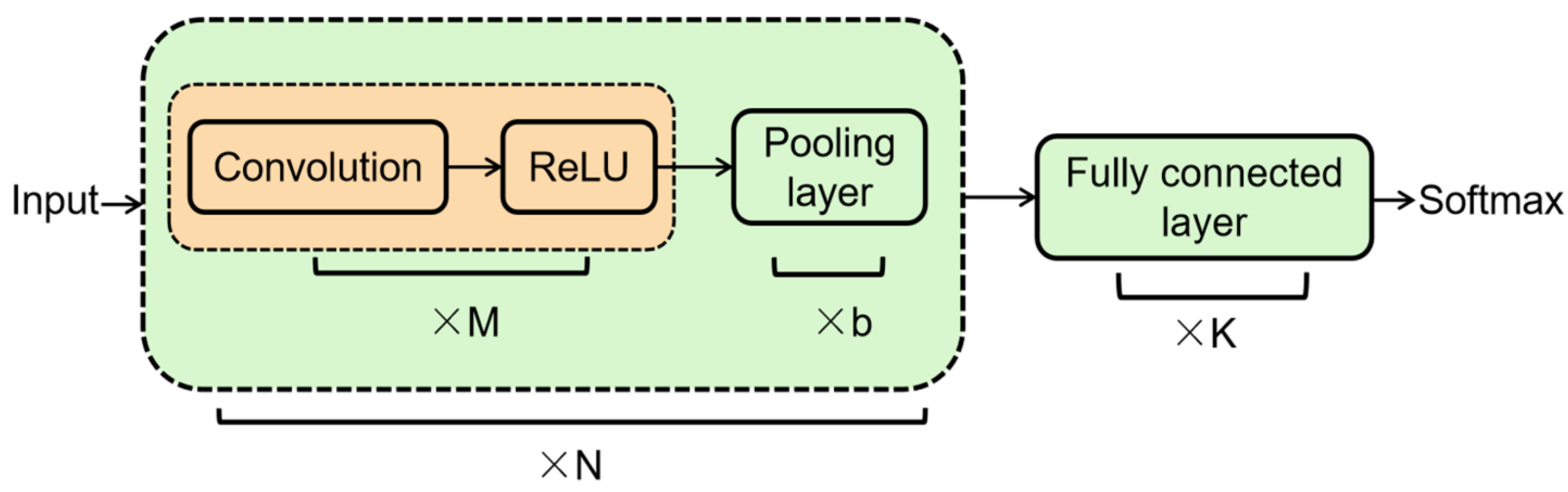
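The clustering results later in the article are compared by CHI and DBI. Assuming these denote the Calinski-Harabasz index and the Davies-Bouldin index in their usual definitions, a minimal pure-Python sketch looks as follows. The function names and the toy data are illustrative only.

```python
import math


def centroid(points):
    """Component-wise mean of a list of points."""
    return [sum(col) / len(points) for col in zip(*points)]


def group(points, labels):
    """Collect points by cluster label."""
    clusters = {}
    for p, label in zip(points, labels):
        clusters.setdefault(label, []).append(p)
    return list(clusters.values())


def chi(points, labels):
    """Calinski-Harabasz index: between-cluster over within-cluster dispersion."""
    clusters = group(points, labels)
    n, k = len(points), len(clusters)
    overall = centroid(points)
    between = sum(len(c) * math.dist(centroid(c), overall) ** 2 for c in clusters)
    within = sum(math.dist(p, centroid(c)) ** 2 for c in clusters for p in c)
    return (between / (k - 1)) / (within / (n - k))


def dbi(points, labels):
    """Davies-Bouldin index: mean worst-case similarity between clusters."""
    clusters = group(points, labels)
    cents = [centroid(c) for c in clusters]
    scatter = [sum(math.dist(p, ce) for p in c) / len(c)
               for c, ce in zip(clusters, cents)]
    k = len(clusters)
    return sum(
        max((scatter[i] + scatter[j]) / math.dist(cents[i], cents[j])
            for j in range(k) if j != i)
        for i in range(k)
    ) / k


points = [[0.0, 0.0], [0.0, 2.0], [10.0, 0.0], [10.0, 2.0]]
good = [0, 0, 1, 1]   # compact, well separated clusters
bad = [0, 1, 0, 1]    # clusters that mix the two groups
print(chi(points, good), dbi(points, good))
print(chi(points, bad), dbi(points, bad))
```

A higher CHI and a lower DBI both indicate more compact and better separated clusters, which is the direction of improvement to look for when comparing methods.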

3.3. The Proposed CNN-IVIA-BLSTM Forecasting Model

3.3.1. CNN: Convolutional Neural Network

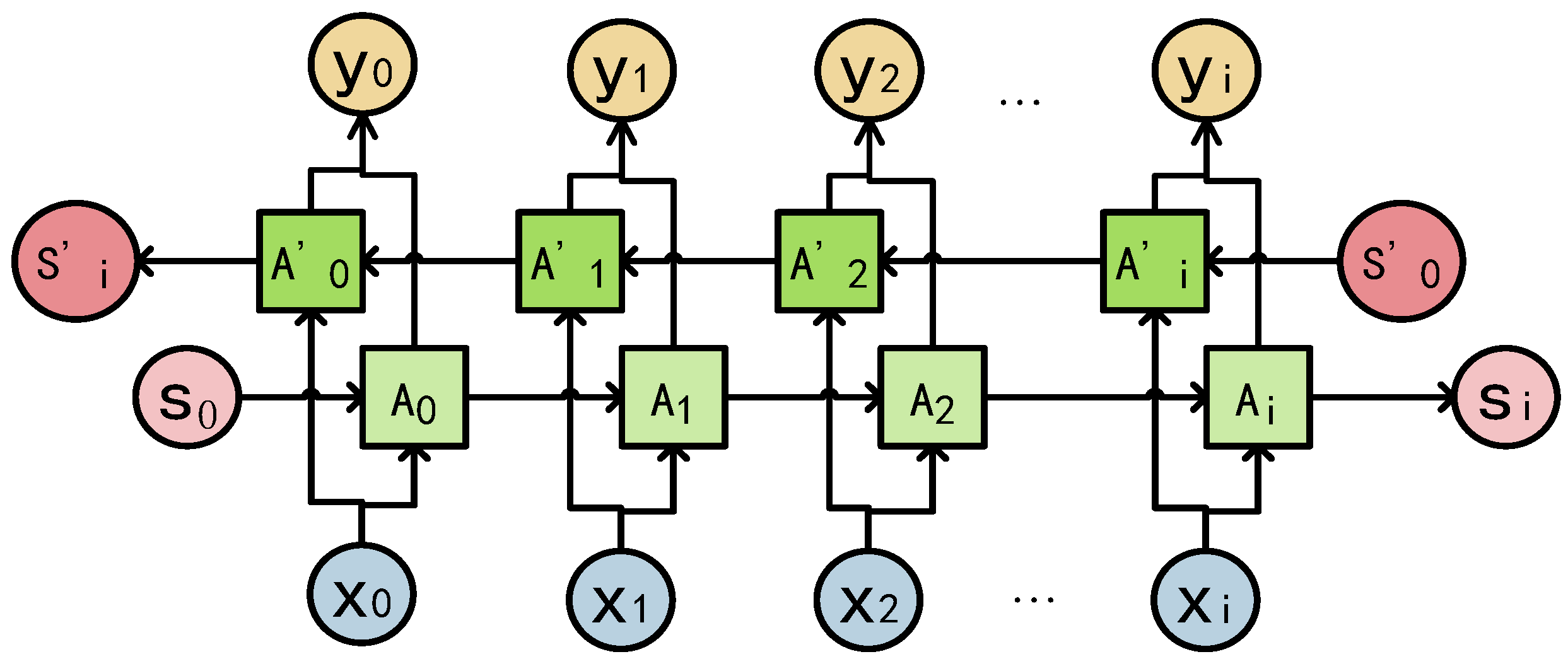

3.3.2. BLSTM Bidirectional Long Short-Term Memory Network

3.3.3. Hybrid Forecasting Model

- The selected information is used as the model input.

- LVMD decomposes and denoises the original sequence, and DBFCM performs clustering.

- The IVIA population size N, the maximum number of iterations M, and the initial search ranges of the parameters (the number of hidden-layer neurons H, the number of training epochs E, the learning rate, and the regularization factor) are set. The root mean square error (RMSE) is used as the objective function of the optimization algorithm, and finally, the model coupling the influenza virus immunization algorithm with the bidirectional long short-term memory network is developed.

- The 1D CNN reads the load sequence with a sliding time window of 10 and a step size of 1 for feature extraction.

- Each component is fed separately into a CNN-IVIA-BLSTM prediction model, which yields m prediction models.

- Finally, the predicted values of the m prediction models are combined to obtain the predicted values of the load.
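The windowing step in the list above can be made concrete with a short sketch. The window length of 10 and the step size of 1 come from the text. Pairing each window with the value that immediately follows it as the prediction target is an assumption, and `sliding_windows` is a hypothetical helper name.

```python
def sliding_windows(series, window=10, step=1):
    """Split a load sequence into overlapping input windows and next-step targets."""
    inputs, targets = [], []
    for start in range(0, len(series) - window, step):
        inputs.append(series[start:start + window])
        targets.append(series[start + window])   # assumed target: the next value
    return inputs, targets


series = list(range(15))            # stand-in for a normalized load sequence
X, y = sliding_windows(series)
print(len(X), X[0], y[0])
```

With a step size of 1, consecutive windows share nine of their ten values, so a sequence of length T yields T − 10 training samples.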

3.3.4. Power Load Forecast Evaluation Indicator
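
The forecasting results in this article are reported as RMSE, MAE, and MAPE. Assuming the usual definitions of these indicators, they can be computed as in the sketch below. The sample values are made up for illustration and are not taken from the dataset.

```python
def rmse(actual, predicted):
    """Root mean square error."""
    return (sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)) ** 0.5


def mae(actual, predicted):
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def mape(actual, predicted):
    """Mean absolute percentage error, in percent (actual values must be non-zero)."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


actual = [100.0, 200.0, 400.0]      # illustrative values only
predicted = [110.0, 190.0, 400.0]
print(rmse(actual, predicted), mae(actual, predicted), mape(actual, predicted))
```

RMSE and MAE are expressed in the units of the load, while MAPE is scale-free, which is why it is convenient for comparing models across datasets.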

4. Case Analysis

4.1. Analysis of Data Processing Results

4.1.1. Data Denoising and Decomposition

4.1.2. Analysis of the Clustering Results

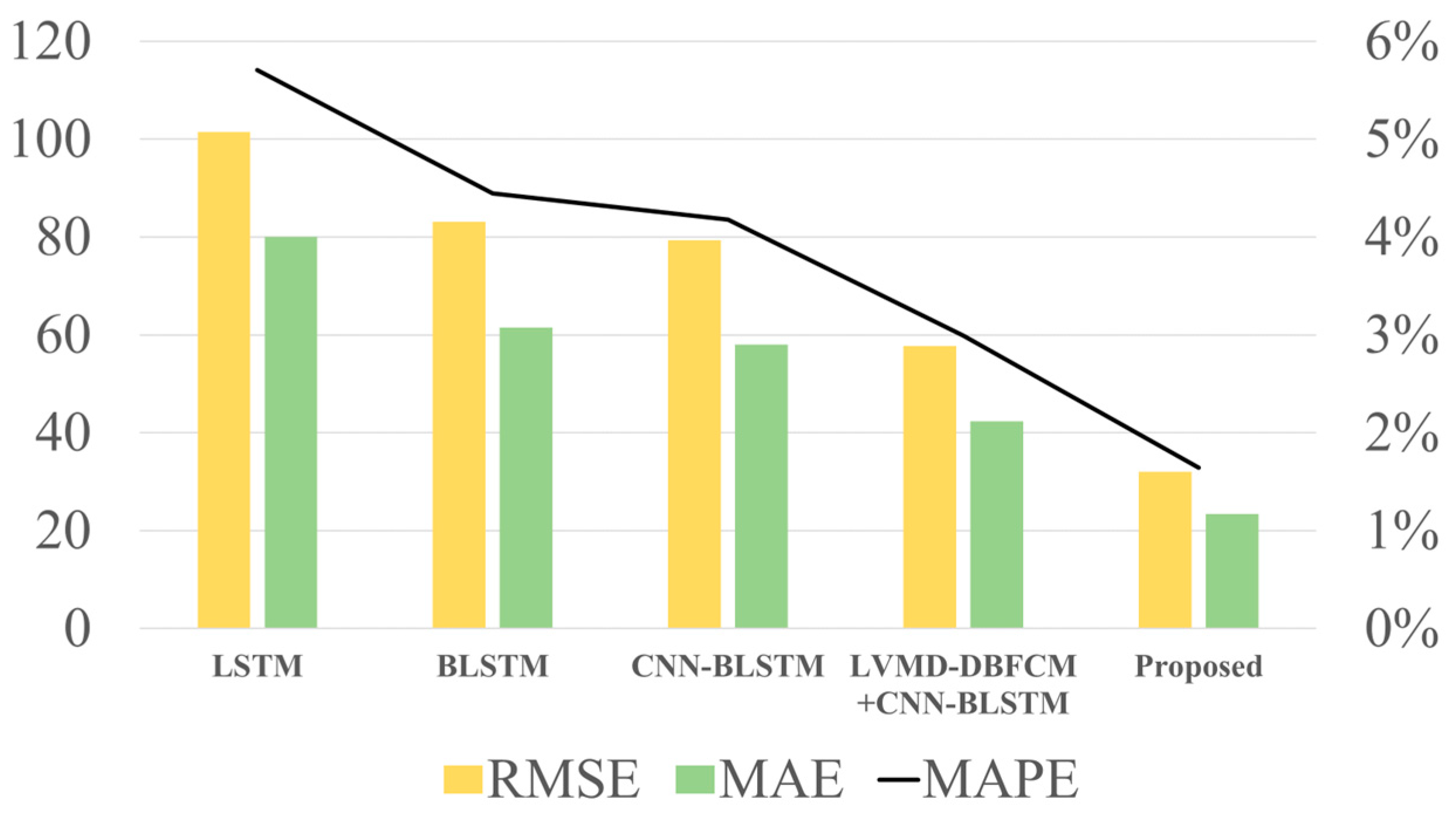

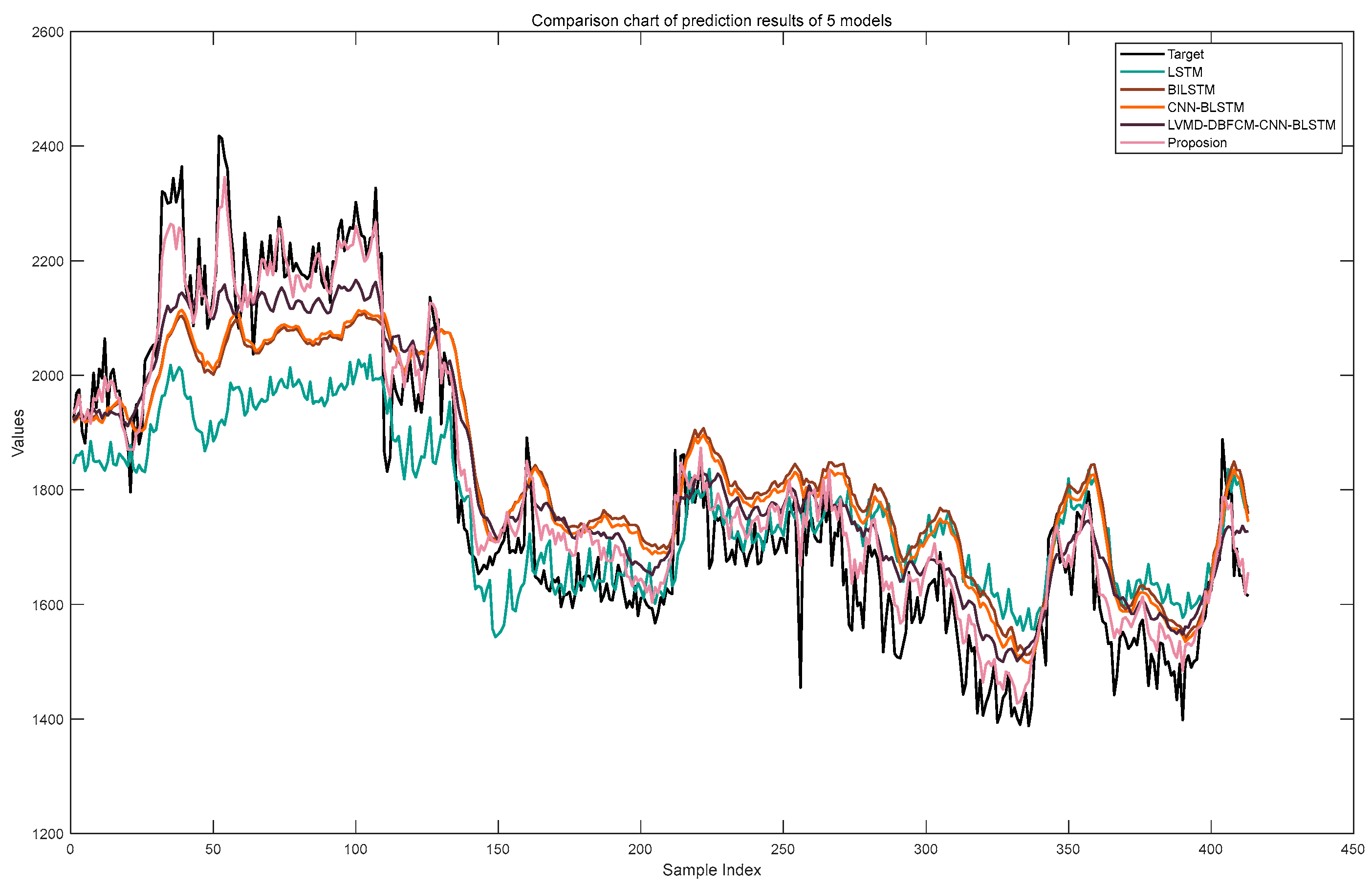

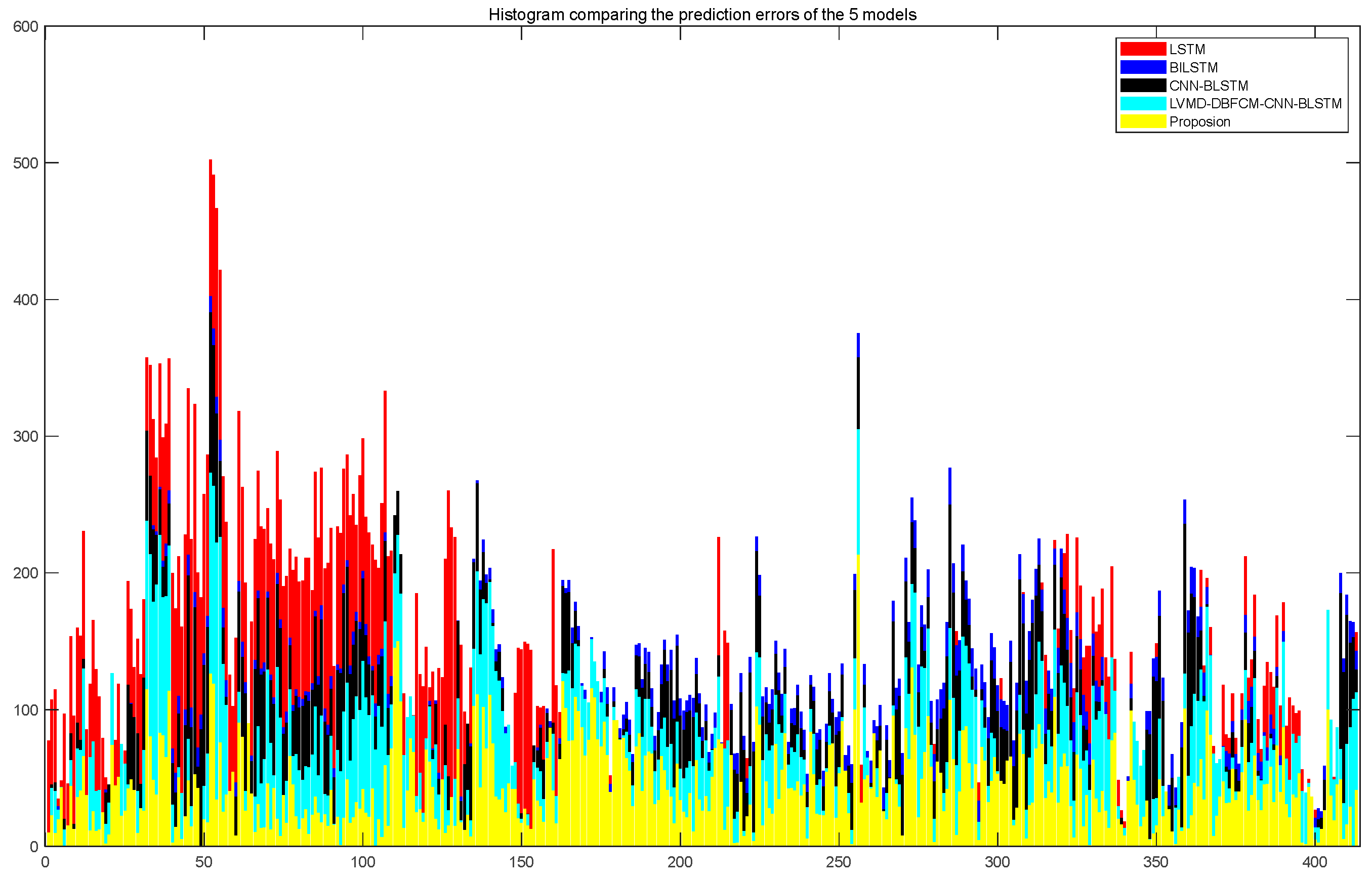

4.2. Analysis of the Prediction Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Function | Dimension | Search Space | Minimum |
|---|---|---|---|
| F1 | 30 | [−100, 100] | 0 |
| F2 | 30 | [−10, 10] | 0 |
| F3 | 30 | [−100, 100] | 0 |
| F4 | 30 | [−100, 100] | 0 |
| F5 | 30 | [−30, 30] | 0 |
| F6 | 30 | [−100, 100] | 0 |
| F7 | 30 | [−128, 128] | 0 |
| F8 | 30 | [−500, 500] | −12,569.5 |
| F9 | 30 | [−5.12, 5.12] | 0 |
| F10 | 30 | [−32, 32] | 0 |
| F11 | 30 | [−600, 600] | 0 |
| F12 | 30 | [−50, 50] | 0 |
| F13 | 30 | [−50, 50] | 0 |
| F14 | 2 | [−65.56, 65.56] | 1 |
| F15 | 4 | [−5, 5] | 0.0003075 |
| F16 | 2 | [−5, 5] | −1.0316 |
| F17 | 2 | [−5, 5] | 0.398 |
| F18 | 2 | [−2, 2] | 3 |
| F19 | 3 | [1, 3] | −3.86 |
| F20 | 6 | [0, 1] | −3.32 |
| F21 | 4 | [0, 10] | −10.1532 |
| F22 | 4 | [0, 10] | −10.4028 |
| F23 | 4 | [0, 10] | −10.5364 |

| F | Metric | IVIA | TFWO | GEO | PPA | RSO | GWO | PSO | WOA |
|---|---|---|---|---|---|---|---|---|---|
| F1 | optimal | 0.000 × 10^0 | 0.000 × 10^0 | 5.988 × 10^−6 | 0.000 × 10^0 | 0.000 × 10^0 | 0.000 × 10^0 | 8.428 × 10^−5 | 0.000 × 10^0 |
| F1 | average | 1.820 × 10^−248 | 4.900 × 10^−1 | 2.136 × 10^−1 | 1.598 × 10^−6 | 9.458 × 10^−102 | 1.985 × 10^−47 | 6.789 × 10^−12 | 9.486 × 10^−71 |
| F1 | worst | 1.245 × 10^−162 | 3.500 × 10^−11 | 5.121 × 10^−1 | 2.185 × 10^−1 | 1.169 × 10^−13 | 8.942 × 10^−10 | 8.628 × 10^−18 | 6.839 × 10^−27 |
| F2 | optimal | 0.000 × 10^0 | 0.000 × 10^0 | 0.000 × 10^0 | 0.000 × 10^0 | 0.000 × 10^0 | 6.843 × 10^−36 | 6.880 × 10^−10 | 1.642 × 10^−104 |
| F2 | average | 9.840 × 10^−53 | 1.249 × 10^−8 | 4.878 × 10^−6 | 9.785 × 10^−7 | 1.854 × 10^−36 | 6.842 × 10^−13 | 6.874 × 10^−7 | 4.859 × 10^−47 |
| F2 | worst | 2.350 × 10^−30 | 1.895 × 10^−6 | 1.236 × 10^−4 | 8.545 × 10^−5 | 1.561 × 10^−18 | 6.842 × 10^−6 | 6.420 × 10^−4 | 6.465 × 10^−28 |
| F3 | optimal | 0.000 × 10^0 | 2.855 × 10^−1 | 1.565 × 10^1 | 1.585 × 10^−7 | 1.265 × 10^−6 | 6.872 × 10^−3 | 4.265 × 10^−2 | 1.063 × 10^3 |
| F3 | average | 4.185 × 10^−11 | 1.522 × 10^0 | 5.016 × 10^2 | 1.855 × 10^−5 | 1.295 × 10^−6 | 3.000 × 10^1 | 2.326 × 10^1 | 3.064 × 10^3 |
| F3 | worst | 1.855 × 10^−8 | 5.489 × 10^0 | 1.000 × 10^5 | 1.855 × 10^−3 | 1.486 × 10^−3 | 1.014 × 10^2 | 6.019 × 10^1 | 1.036 × 10^4 |
| F4 | optimal | 9.459 × 10^−77 | 1.125 × 10^0 | 2.153 × 10^0 | 2.126 × 10^0 | 3.165 × 10^−3 | 2.894 × 10^−24 | 8.486 × 10^−2 | 3.492 × 10^−1 |
| F4 | average | 5.895 × 10^−34 | 2.459 × 10^0 | 4.153 × 10^0 | 3.124 × 10^0 | 2.989 × 10^0 | 1.987 × 10^−14 | 1.425 × 10^0 | 9.482 × 10^−1 |
| F4 | worst | 2.349 × 10^−19 | 3.157 × 10^0 | 8.166 × 10^0 | 5.156 × 10^0 | 6.362 × 10^0 | 6.850 × 10^−7 | 6.895 × 10^0 | 3.843 × 10^0 |
| F5 | optimal | 9.646 × 10^−10 | 2.360 × 10^−1 | 1.465 × 10^1 | 1.946 × 10^0 | 1.235 × 10^0 | 2.169 × 10^0 | 9.892 × 10^−1 | 1.979 × 10^0 |
| F5 | average | 5.419 × 10^−8 | 6.523 × 10^0 | 5.988 × 10^2 | 1.249 × 10^1 | 1.565 × 10^1 | 1.249 × 10^1 | 1.468 × 10^1 | 9.162 × 10^0 |
| F5 | worst | 1.242 × 10^−2 | 2.104 × 10^1 | 1.275 × 10^4 | 1.991 × 10^1 | 1.035 × 10^2 | 5.616 × 10^1 | 2.317 × 10^1 | 3.216 × 10^1 |
| F6 | optimal | 2.355 × 10^−18 | 3.985 × 10^−4 | 8.852 × 10^−2 | 1.289 × 10^−3 | 1.965 × 10^−6 | 3.687 × 10^−1 | 7.985 × 10^−10 | 6.485 × 10^−2 |
| F6 | average | 9.475 × 10^−10 | 9.125 × 10^−1 | 8.049 × 10^−2 | 1.597 × 10^−1 | 1.547 × 10^−1 | 9.006 × 10^−1 | 7.864 × 10^−7 | 9.717 × 10^−1 |
| F6 | worst | 9.855 × 10^−5 | 1.836 × 10^0 | 3.598 × 10^0 | 1.099 × 10^0 | 6.218 × 10^−1 | 2.663 × 10^0 | 6.843 × 10^−2 | 2.097 × 10^0 |
| F7 | optimal | 1.968 × 10^−7 | 0.000 × 10^0 | 3.942 × 10^−2 | 3.975 × 10^−6 | 4.885 × 10^−3 | 3.946 × 10^−3 | 9.847 × 10^−5 | 3.550 × 10^−5 |
| F7 | average | 2.495 × 10^−3 | 1.698 × 10^−1 | 3.965 × 10^−1 | 3.735 × 10^−1 | 1.125 × 10^−2 | 8.972 × 10^−1 | 8.885 × 10^−1 | 4.165 × 10^−1 |
| F7 | worst | 6.515 × 10^−1 | 1.317 × 10^0 | 1.569 × 10^0 | 1.032 × 10^0 | 3.339 × 10^−1 | 1.398 × 10^0 | 1.763 × 10^0 | 1.223 × 10^0 |
| F8 | optimal | −1.256 × 10^4 | −1.024 × 10^4 | −5.663 × 10^3 | −1.124 × 10^4 | −1.035 × 10^4 | −9.176 × 10^3 | −1.034 × 10^4 | −1.224 × 10^4 |
| F8 | average | −1.156 × 10^4 | −9.563 × 10^3 | −4.983 × 10^3 | −8.832 × 10^3 | −8.865 × 10^3 | −5.863 × 10^3 | −6.856 × 10^3 | −8.936 × 10^3 |
| F8 | worst | −1.055 × 10^4 | −7.934 × 10^3 | −3.527 × 10^3 | −7.295 × 10^3 | −5.235 × 10^3 | −4.330 × 10^3 | −5.845 × 10^3 | −7.532 × 10^3 |
| F9 | optimal | 0.000 × 10^0 | 4.946 × 10^−6 | 9.533 × 10^−7 | 0.000 × 10^0 | 1.968 × 10^−22 | 6.427 × 10^−3 | 6.848 × 10^−8 | 7.842 × 10^−28 |
| F9 | average | 5.649 × 10^−5 | 1.568 × 10^−3 | 3.798 × 10^−3 | 1.765 × 10^−3 | 1.765 × 10^−6 | 3.499 × 10^−5 | 3.492 × 10^−4 | 9.787 × 10^−9 |
| F9 | worst | 3.476 × 10^−2 | 1.065 × 10^0 | 9.833 × 10^−1 | 3.177 × 10^−2 | 1.685 × 10^−1 | 1.297 × 10^0 | 1.379 × 10^−1 | 3.790 × 10^−3 |
| F10 | optimal | 9.852 × 10^−21 | 4.682 × 10^−11 | 1.649 × 10^−4 | 6.842 × 10^−8 | 9.845 × 10^−6 | 5.400 × 10^−16 | 6.463 × 10^−5 | 6.842 × 10^−15 |
| F10 | average | 1.855 × 10^−8 | 4.190 × 10^−3 | 1.515 × 10^−1 | 6.850 × 10^−3 | 9.815 × 10^−2 | 8.943 × 10^−3 | 3.163 × 10^−3 | 8.463 × 10^−8 |
| F10 | worst | 1.642 × 10^−5 | 1.684 × 10^−1 | 1.331 × 10^0 | 1.146 × 10^0 | 3.855 × 10^−1 | 6.315 × 10^−1 | 9.198 × 10^−1 | 6.318 × 10^−3 |
| F11 | optimal | 5.989 × 10^−9 | 9.847 × 10^−5 | 5.646 × 10^−3 | 1.685 × 10^−7 | 9.946 × 10^−5 | 3.489 × 10^−2 | 3.965 × 10^−4 | 3.787 × 10^−9 |
| F11 | average | 3.500 × 10^−8 | 3.490 × 10^−1 | 3.654 × 10^1 | 4.846 × 10^−4 | 1.654 × 10^−3 | 3.326 × 10^−1 | 1.038 × 10^0 | 9.779 × 10^−5 |
| F11 | worst | 1.985 × 10^−4 | 2.165 × 10^0 | 1.068 × 10^2 | 3.168 × 10^0 | 5.486 × 10^−1 | 1.235 × 10^0 | 1.380 × 10^0 | 3.480 × 10^−3 |
| F12 | optimal | 5.648 × 10^−12 | 2.165 × 10^−6 | 6.846 × 10^1 | 6.546 × 10^−7 | 6.468 × 10^−7 | 4.893 × 10^−8 | 4.896 × 10^−13 | 1.687 × 10^−3 |
| F12 | average | 6.942 × 10^−8 | 1.656 × 10^−3 | 6.845 × 10^2 | 8.646 × 10^−5 | 6.579 × 10^−4 | 7.893 × 10^8 | 4.987 × 10^−10 | 6.447 × 10^−1 |
| F12 | worst | 1.685 × 10^−4 | 9.924 × 10^−2 | 1.007 × 10^4 | 1.634 × 10^−1 | 6.846 × 10^−2 | 3.589 × 10^−3 | 4.983 × 10^−7 | 1.349 × 10^0 |
| F13 | optimal | 6.115 × 10^−8 | 4.216 × 10^−5 | 2.647 × 10^1 | 5.242 × 10^−2 | 5.464 × 10^−4 | 4.685 × 10^−4 | 4.165 × 10^−7 | 4.198 × 10^−8 |
| F13 | average | 4.359 × 10^−20 | 6.410 × 10^−3 | 1.464 × 10^2 | 5.825 × 10^0 | 1.351 × 10^−2 | 3.199 × 10^−3 | 4.147 × 10^−4 | 4.168 × 10^−6 |
| F13 | worst | 4.642 × 10^−8 | 1.416 × 10^0 | 1.001 × 10^4 | 2.353 × 10^1 | 3.861 × 10^0 | 2.056 × 10^0 | 1.325 × 10^0 | 9.650 × 10^−3 |
| F14 | optimal | 1.000 × 10^0 | 1.000 × 10^0 | 1.001 × 10^0 | 1.024 × 10^0 | 1.115 × 10^0 | 1.259 × 10^0 | 1.000 × 10^0 | 1.000 × 10^0 |
| F14 | average | 1.001 × 10^0 | 1.674 × 10^0 | 1.009 × 10^0 | 2.196 × 10^0 | 1.351 × 10^0 | 1.950 × 10^0 | 1.350 × 10^0 | 1.268 × 10^0 |
| F14 | worst | 1.007 × 10^0 | 2.419 × 10^0 | 2.486 × 10^0 | 1.117 × 10^1 | 2.652 × 10^0 | 1.286 × 10^1 | 2.149 × 10^0 | 1.635 × 10^8 |
| F15 | optimal | 3.000 × 10^−4 | 5.419 × 10^−4 | 1.684 × 10^−3 | 6.554 × 10^−4 | 3.948 × 10^−4 | 9.157 × 10^−4 | 9.165 × 10^−3 | 3.917 × 10^−4 |
| F15 | average | 3.425 × 10^−4 | 8.646 × 10^−3 | 1.682 × 10^−3 | 6.834 × 10^−3 | 6.422 × 10^−2 | 2.925 × 10^−3 | 6.117 × 10^−3 | 9.164 × 10^−3 |
| F15 | worst | 6.453 × 10^−3 | 6.550 × 10^−1 | 1.954 × 10^−1 | 9.846 × 10^−2 | 9.648 × 10^−1 | 1.896 × 10^−1 | 1.430 × 10^−2 | 9.168 × 10^−2 |
| F16 | optimal | −1.032 × 10^0 | −9.186 × 10^−1 | −1.021 × 10^0 | −1.001 × 10^0 | −9.425 × 10^−1 | −9.168 × 10^−1 | −8.250 × 10^−2 | −1.000 × 10^0 |
| F16 | average | −1.000 × 10^0 | −8.634 × 10^−1 | −9.648 × 10^−1 | −9.545 × 10^−1 | −8.217 × 10^−1 | −2.198 × 10^−1 | −1.625 × 10^−8 | −5.491 × 10^−1 |
| F16 | worst | 4.618 × 10^−1 | −5.462 × 10^−1 | −6.139 × 10^−1 | −8.316 × 10^−1 | −7.717 × 10^−1 | 1.198 × 10^−1 | 1.032 × 10^0 | −1.493 × 10^−1 |
| F17 | optimal | 3.981 × 10^−3 | 4.856 × 10^−2 | 4.404 × 10^−1 | 4.040 × 10^−1 | 3.990 × 10^−2 | 4.299 × 10^−1 | 4.399 × 10^−1 | 3.992 × 10^−1 |
| F17 | average | 4.040 × 10^−2 | 4.000 × 10^−2 | 6.004 × 10^−1 | 6.040 × 10^−1 | 8.036 × 10^−1 | 6.498 × 10^−1 | 9.413 × 10^−1 | 1.065 × 10^0 |
| F17 | worst | 5.033 × 10^−1 | 9.911 × 10^−1 | 1.032 × 10^0 | 2.030 × 10^0 | 1.603 × 10^0 | 2.064 × 10^0 | 2.319 × 10^0 | 1.916 × 10^0 |
| F18 | optimal | 3.000 × 10^0 | 3.005 × 10^0 | 3.016 × 10^0 | 3.149 × 10^0 | 3.001 × 10^0 | 3.198 × 10^0 | 3.616 × 10^0 | 3.264 × 10^0 |
| F18 | average | 3.015 × 10^0 | 3.147 × 10^0 | 3.656 × 10^0 | 3.648 × 10^0 | 3.004 × 10^0 | 3.616 × 10^0 | 4.086 × 10^0 | 3.949 × 10^0 |
| F18 | worst | 3.146 × 10^0 | 3.142 × 10^0 | 4.411 × 10^0 | 4.245 × 10^0 | 3.133 × 10^0 | 4.691 × 10^0 | 4.620 × 10^0 | 5.017 × 10^0 |
| F19 | optimal | −3.860 × 10^0 | −3.861 × 10^0 | −3.195 × 10^0 | −3.619 × 10^0 | −3.812 × 10^0 | −3.852 × 10^0 | −3.801 × 10^0 | −3.859 × 10^0 |
| F19 | average | −3.677 × 10^0 | −3.852 × 10^0 | −2.870 × 10^0 | −3.595 × 10^0 | −3.816 × 10^0 | −3.742 × 10^0 | −3.550 × 10^0 | −3.726 × 10^0 |
| F19 | worst | −3.165 × 10^0 | −3.832 × 10^0 | −8.456 × 10^−1 | −3.455 × 10^0 | −3.795 × 10^0 | −3.516 × 10^0 | −2.949 × 10^0 | −3.030 × 10^0 |
| F20 | optimal | −3.320 × 10^0 | −3.146 × 10^0 | −3.307 × 10^0 | −3.032 × 10^0 | −2.919 × 10^0 | −1.982 × 10^0 | −2.098 × 10^0 | −3.156 × 10^0 |
| F20 | average | −2.996 × 10^0 | −2.920 × 10^0 | −3.019 × 10^0 | −2.533 × 10^0 | −1.398 × 10^0 | −1.089 × 10^0 | −9.497 × 10^−1 | −1.979 × 10^0 |
| F20 | worst | −2.649 × 10^0 | −2.520 × 10^0 | −2.120 × 10^0 | 1.298 × 10^0 | −5.945 × 10^−1 | 1.685 × 10^−1 | −6.430 × 10^−2 | −1.189 × 10^0 |
| F21 | optimal | −1.015 × 10^1 | −1.007 × 10^1 | −1.002 × 10^1 | −1.015 × 10^1 | −8.103 × 10^0 | −1.002 × 10^1 | −9.162 × 10^0 | −1.006 × 10^1 |
| F21 | average | −9.122 × 10^0 | −9.004 × 10^0 | −9.654 × 10^0 | −1.012 × 10^1 | −4.137 × 10^0 | −8.981 × 10^0 | −7.198 × 10^0 | −9.682 × 10^0 |
| F21 | worst | −7.596 × 10^0 | −5.642 × 10^0 | −8.514 × 10^0 | −9.632 × 10^0 | −6.517 × 10^−1 | −1.192 × 10^0 | −1.192 × 10^0 | −8.336 × 10^0 |
| F22 | optimal | −1.040 × 10^1 | −1.032 × 10^1 | −1.035 × 10^1 | −1.040 × 10^1 | −1.039 × 10^1 | −1.032 × 10^1 | −9.198 × 10^0 | −1.006 × 10^1 |
| F22 | average | −1.015 × 10^1 | −8.250 × 10^0 | −1.015 × 10^1 | −5.365 × 10^0 | −3.349 × 10^0 | −9.195 × 10^0 | −6.927 × 10^0 | −8.198 × 10^0 |
| F22 | worst | −1.002 × 10^1 | −4.820 × 10^0 | −9.671 × 10^0 | −2.389 × 10^−1 | 8.924 × 10^−2 | −4.928 × 10^0 | −1.162 × 10^0 | −1.265 × 10^−1 |
| F23 | optimal | −1.052 × 10^1 | −9.525 × 10^0 | −1.051 × 10^1 | −8.449 × 10^0 | −1.046 × 10^1 | −1.000 × 10^1 | −9.198 × 10^0 | −9.062 × 10^0 |
| F23 | average | −1.025 × 10^1 | −6.489 × 10^0 | −1.050 × 10^1 | −4.168 × 10^0 | −6.265 × 10^0 | −5.984 × 10^0 | −3.198 × 10^−1 | −6.894 × 10^0 |
| F23 | worst | −9.219 × 10^0 | −1.220 × 10^0 | −9.647 × 10^0 | −1.608 × 10^−1 | −2.487 × 10^−1 | −1.963 × 10^−1 | 1.625 × 10^−2 | −1.820 × 10^0 |

| Methods | CHI | DBI |
|---|---|---|
| K-means | 721.201 | 1.549 |
| FCM | 814.014 | 1.401 |
| DBFCM | 1024.743 | 1.216 |
| LVMD-DBFCM | 1142.509 | 1.083 |

| Models | RMSE | MAE | MAPE |
|---|---|---|---|
| LSTM | 101.4817 | 80.0980 | 5.7087% |
| BLSTM | 83.1498 | 61.4826 | 4.4460% |
| CNN-BLSTM | 79.3340 | 58.0245 | 4.1782% |
| LVMD-DBFCM+CNN-BLSTM | 57.7316 | 42.3669 | 2.9946% |
| Proposed | 31.9942 | 23.3691 | 1.6421% |
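
To read the error table above in relative terms, the RMSE values can be converted into percentage reductions with respect to the proposed model. The figures below are copied from the table. The helper `relative_reduction` is a hypothetical name introduced only for this arithmetic.

```python
# RMSE values copied from the comparison table.
rmse_by_model = {
    "LSTM": 101.4817,
    "BLSTM": 83.1498,
    "CNN-BLSTM": 79.3340,
    "LVMD-DBFCM+CNN-BLSTM": 57.7316,
    "Proposed": 31.9942,
}


def relative_reduction(baseline, proposed):
    """Percentage by which `proposed` is lower than `baseline`."""
    return 100.0 * (baseline - proposed) / baseline


for name, value in rmse_by_model.items():
    if name != "Proposed":
        cut = relative_reduction(value, rmse_by_model["Proposed"])
        print(f"{name}: RMSE reduced by {cut:.2f}%")
```

The same calculation applies to the MAE and MAPE columns if a relative comparison of those indicators is needed.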


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, L.; Wang, J.; Guo, Z.; Zheng, T. Load Forecasting Based on LVMD-DBFCM Load Curve Clustering and the CNN-IVIA-BLSTM Model. Appl. Sci. 2023, 13, 7332. https://doi.org/10.3390/app13127332
