Review of Scanning and Pixel Array-Based LiDAR Point-Cloud Measurement Techniques to Capture 3D Shape or Motion

Abstract

1. Introduction

2. 3D LiDAR Fundamentals

2.1. Photon-to-Digital Conversion

2.2. LiDAR Measurement Data

2.3. LiDAR Multiple Returns

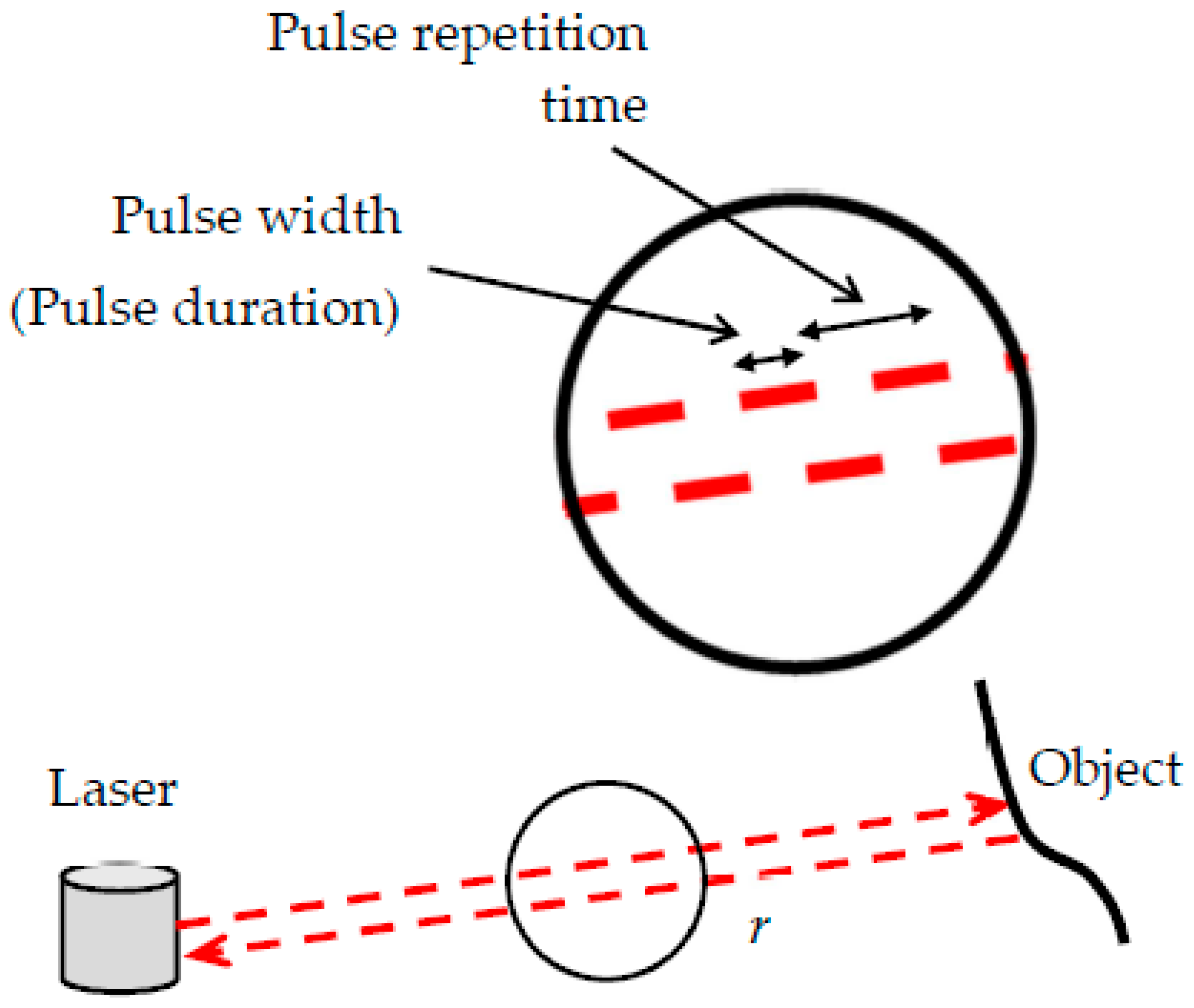

2.4. Pulse Repetition Frequency

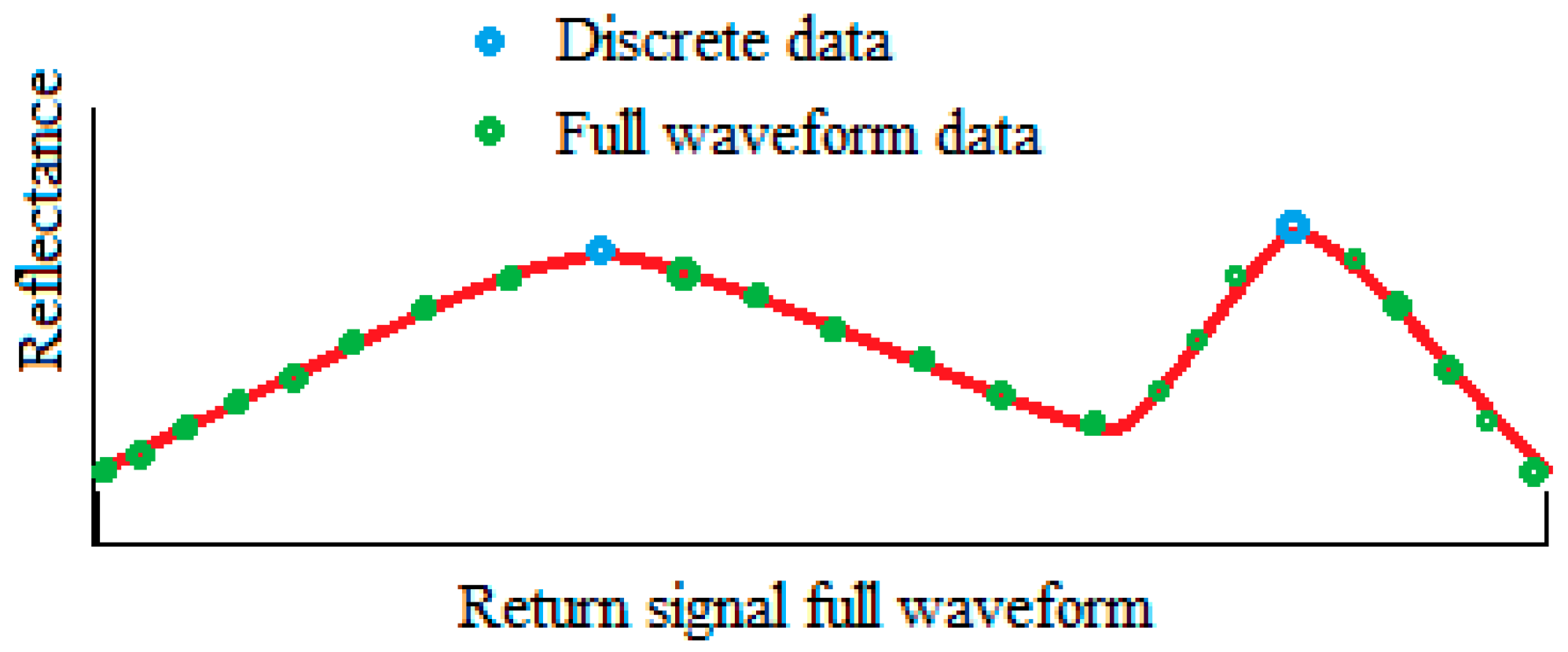
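A direct consequence of the pulse repetition frequency (PRF) is the maximum unambiguous range. If only one pulse is in flight at a time, its echo must return before the next pulse is emitted, so r_max = c/(2·PRF). The sketch below evaluates this limit under that single-pulse-in-the-air assumption, using the vacuum speed of light; the function name `max_unambiguous_range` is illustrative and not taken from any LiDAR library.

```python
# Minimal sketch: maximum unambiguous range of a pulsed LiDAR for a given PRF.
# Assumption: only one pulse is in flight at a time, so the echo must arrive
# before the next pulse is emitted.

C = 299_792_458.0  # speed of light in vacuum, m/s


def max_unambiguous_range(prf_hz: float) -> float:
    """Return the largest range (m) whose echo arrives before the next pulse."""
    if prf_hz <= 0:
        raise ValueError("PRF must be positive")
    pulse_period = 1.0 / prf_hz      # time between consecutive pulses, s
    return C * pulse_period / 2.0    # halve it: the light travels out and back


if __name__ == "__main__":
    for prf in (100e3, 500e3, 2e6):
        print(f"PRF {prf / 1e3:7.0f} kHz -> {max_unambiguous_range(prf):8.1f} m")
```

Doubling the PRF doubles the point rate but halves the unambiguous range, which is one reason long-range instruments trade point density for range; systems that can resolve multiple pulses in the air are able to exceed this simple limit.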

2.5. Discrete and Full-Waveform LiDAR

2.6. Multispectral LiDAR

3. LiDAR Range Measurement

3.1. Pulse-Time Range Measurement

- r: Measured distance

- c: Speed of light

- Δt: Round trip time-of-flight
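With these symbols, the pulse-time range follows from r = c·Δt/2, because the pulse travels to the target and back. A minimal sketch, assuming the vacuum speed of light unless a refractive index `n` is supplied; the helper name `pulse_time_range` is hypothetical.

```python
# Minimal sketch of pulse-time (direct time-of-flight) ranging: r = c * dt / 2.
# Assumption: light travels at the vacuum speed divided by an optional
# refractive index n (n = 1.0 by default; air is roughly 1.0003).

C_VACUUM = 299_792_458.0  # speed of light in vacuum, m/s


def pulse_time_range(dt_s: float, n: float = 1.0) -> float:
    """Convert a round-trip time of flight (s) into a one-way distance (m)."""
    if dt_s < 0:
        raise ValueError("time of flight cannot be negative")
    c = C_VACUUM / n          # propagation speed in the medium
    return c * dt_s / 2.0     # divide by 2: the pulse travels out and back


if __name__ == "__main__":
    # A round trip of 667 ns corresponds to a target about 100 m away.
    print(f"667 ns round trip -> {pulse_time_range(667e-9):.2f} m")
    # One nanosecond of timing uncertainty maps to about 15 cm of range.
    print(f"1 ns timing step  -> {pulse_time_range(1e-9) * 100:.1f} cm")
```

The 1 ns case shows why pulsed systems need very fine timing electronics to reach millimetre-level precision: every nanosecond of timing error corresponds to roughly 15 cm of range.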

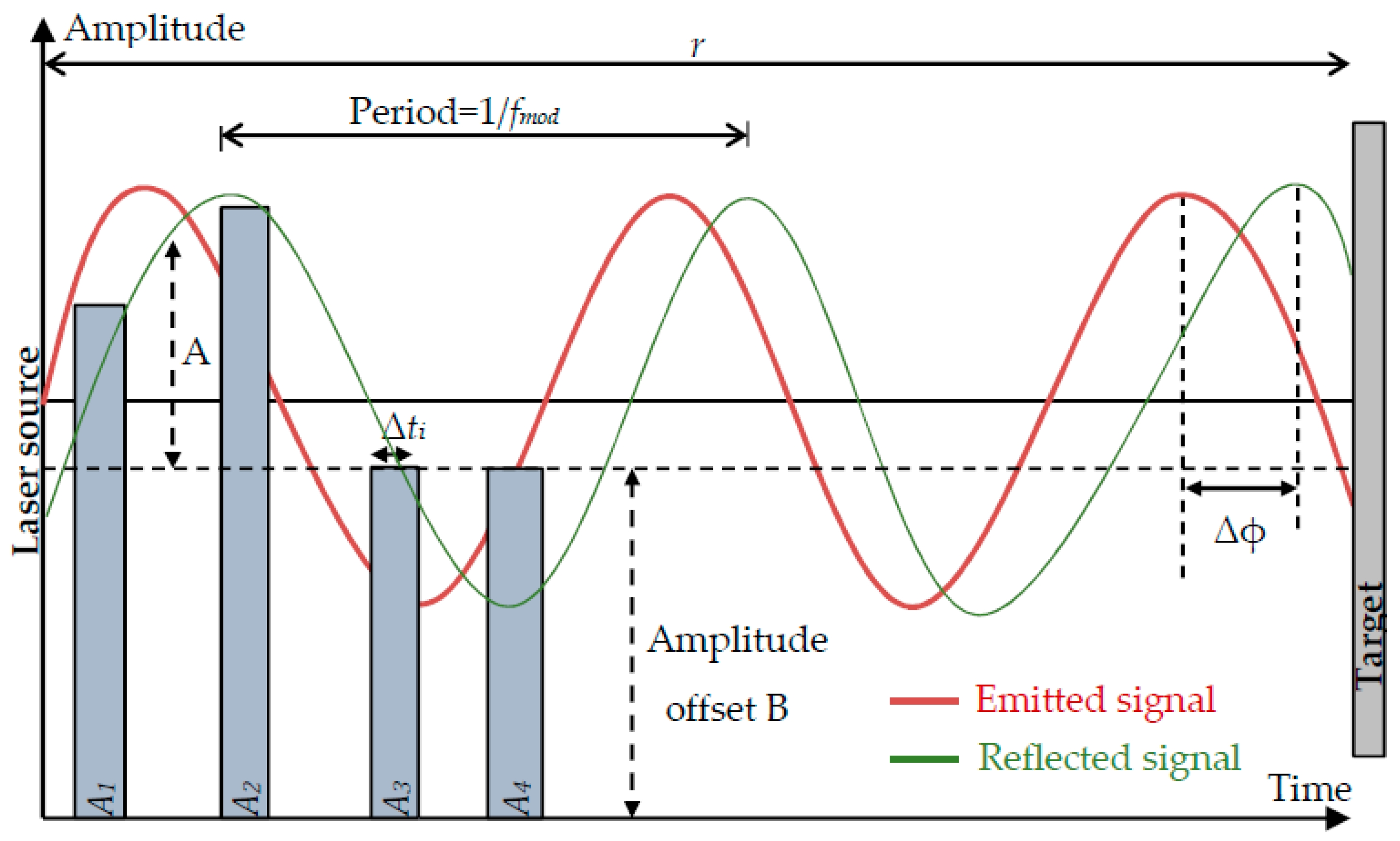

3.2. Phase-Shift Range Measurement

- Δt: Round trip time-of-flight

- Nc: Number of full wavelengths

- T: Period of the modulation signal

- Δφ: Phase angle (phase shift)
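In the phase-shift method the round-trip time is recovered from the measured phase as Δt = T·(Nc + Δφ/2π), and the range again follows from r = c·Δt/2. Because a single measurement yields only Δφ, a distance is unambiguous only up to c·T/2; Nc must be known from elsewhere (for example, a coarser measurement). The sketch below assumes Nc is given and that the signal is modulated at frequency f = 1/T; all function names are illustrative.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def phase_shift_tof(delta_phi: float, n_cycles: int, period_s: float) -> float:
    """Round-trip time from dt = T * (Nc + delta_phi / (2*pi))."""
    if not 0.0 <= delta_phi < 2.0 * math.pi:
        raise ValueError("delta_phi must lie in [0, 2*pi)")
    if n_cycles < 0:
        raise ValueError("number of full cycles cannot be negative")
    return period_s * (n_cycles + delta_phi / (2.0 * math.pi))


def phase_shift_range(delta_phi: float, n_cycles: int, mod_freq_hz: float) -> float:
    """One-way distance (m), assuming Nc is already known."""
    period_s = 1.0 / mod_freq_hz
    return C * phase_shift_tof(delta_phi, n_cycles, period_s) / 2.0


def unambiguous_range(mod_freq_hz: float) -> float:
    """Largest distance measurable with Nc = 0, i.e. c * T / 2."""
    return C / (2.0 * mod_freq_hz)


if __name__ == "__main__":
    f = 10e6  # assumed 10 MHz modulation frequency
    print(f"unambiguous range at 10 MHz: {unambiguous_range(f):.2f} m")
    # A quarter-cycle phase shift after two full cycles:
    print(f"range for Nc=2, dphi=pi/2: {phase_shift_range(math.pi / 2, 2, f):.2f} m")
```

Raising the modulation frequency improves the phase-to-distance sensitivity but shortens the unambiguous range, which is why phase-shift scanners and ToF cameras are typically limited to shorter ranges than pulsed systems.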

4. LiDAR Photon Imaging

4.1. Linear-Mode LiDAR

4.2. Geiger-Mode LiDAR

4.3. Single-Photon LiDAR (SPL)

5. LiDAR 3D Point-Cloud Acquisition Techniques

5.1. Laser Scanning

5.1.1. Terrestrial Laser Scanner

5.1.2. Solid-State LiDAR Scanners

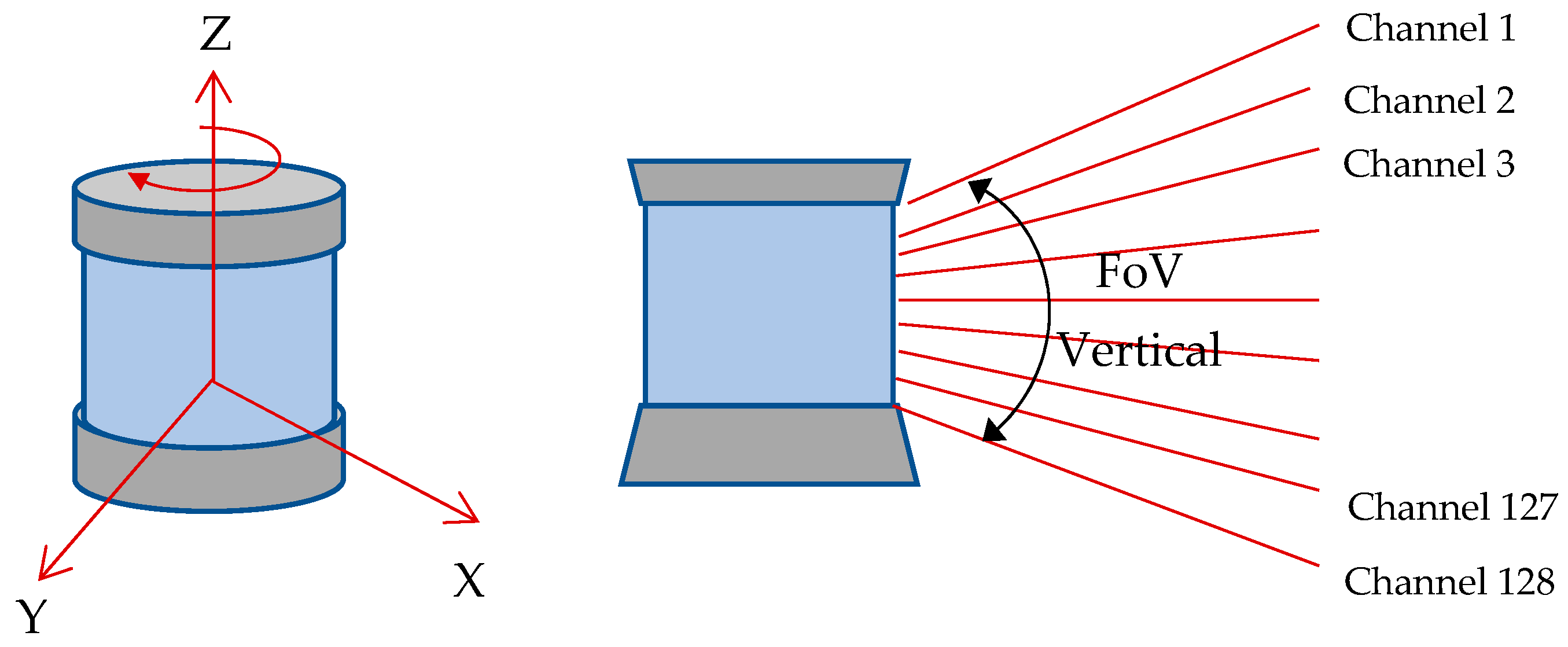

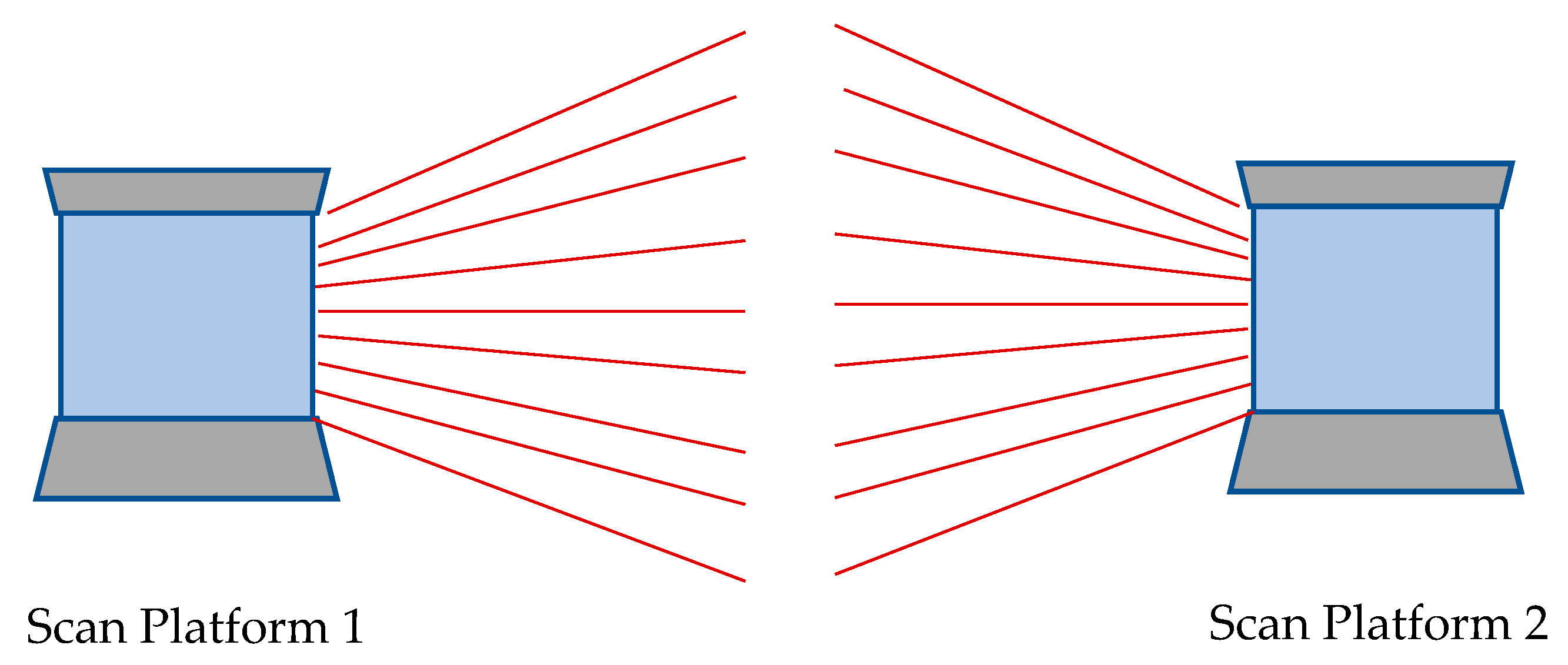

5.1.3. Multi-Beam LiDAR (MBL)

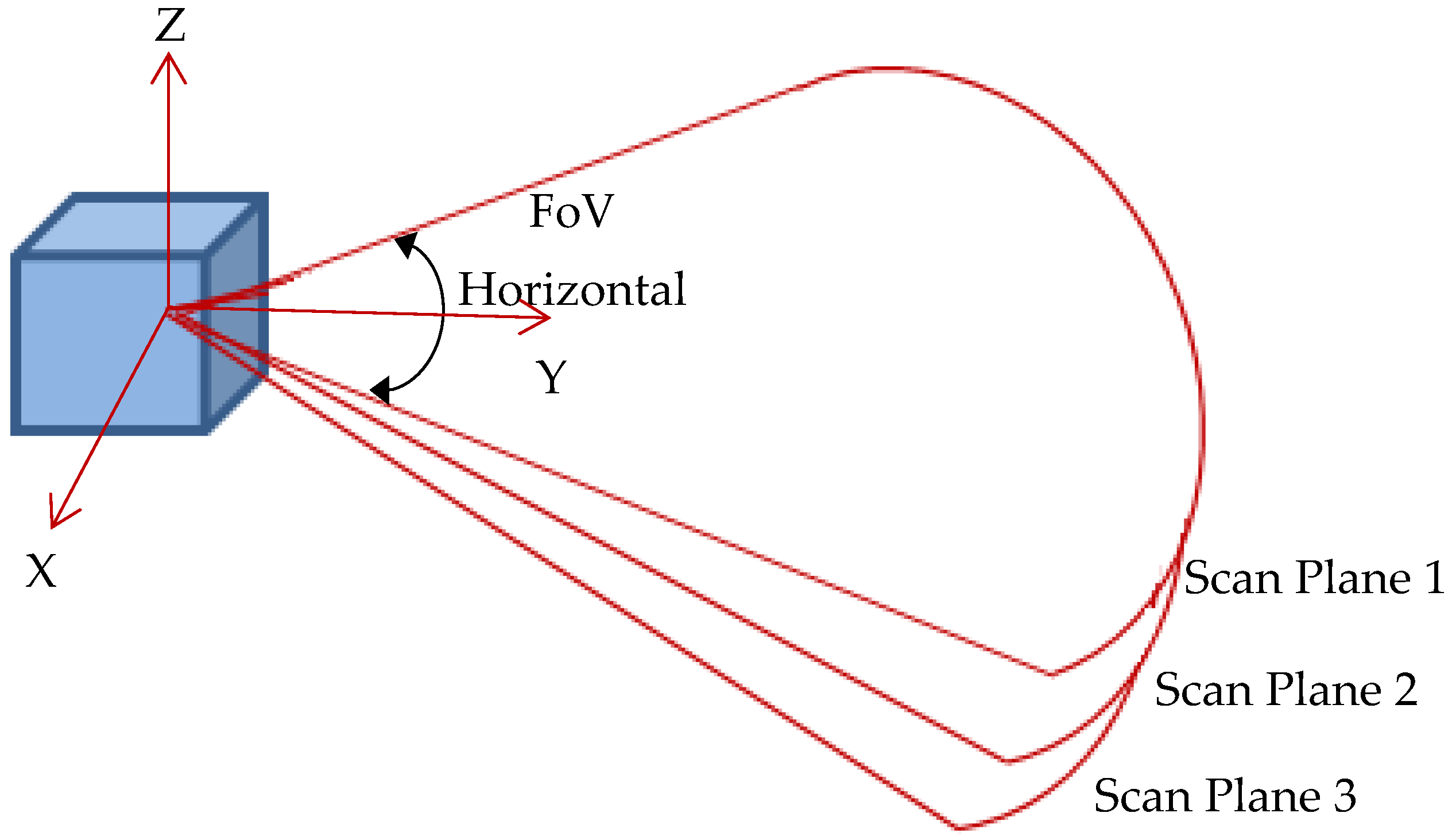

5.1.4. Multi-Layer LiDAR

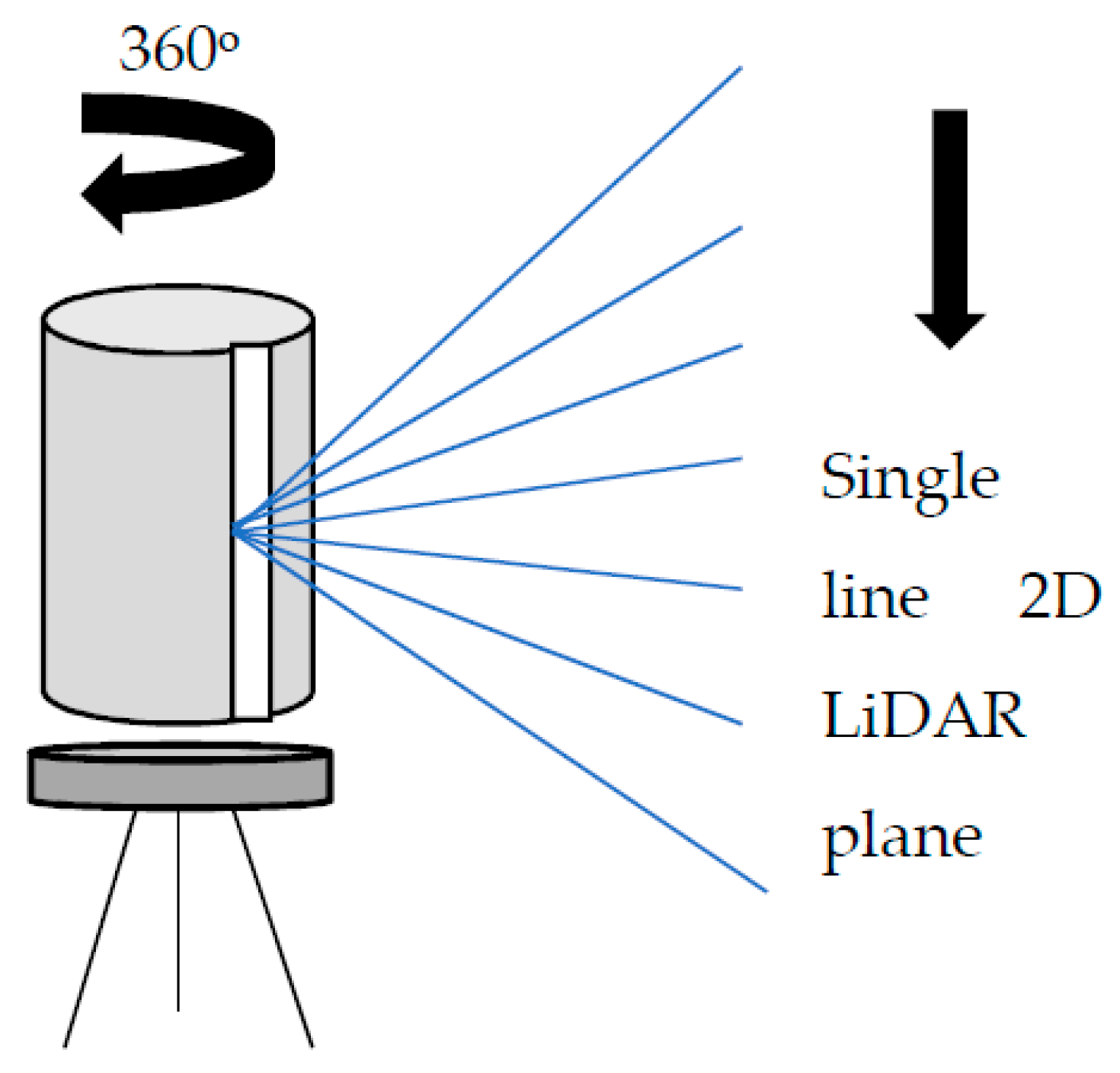

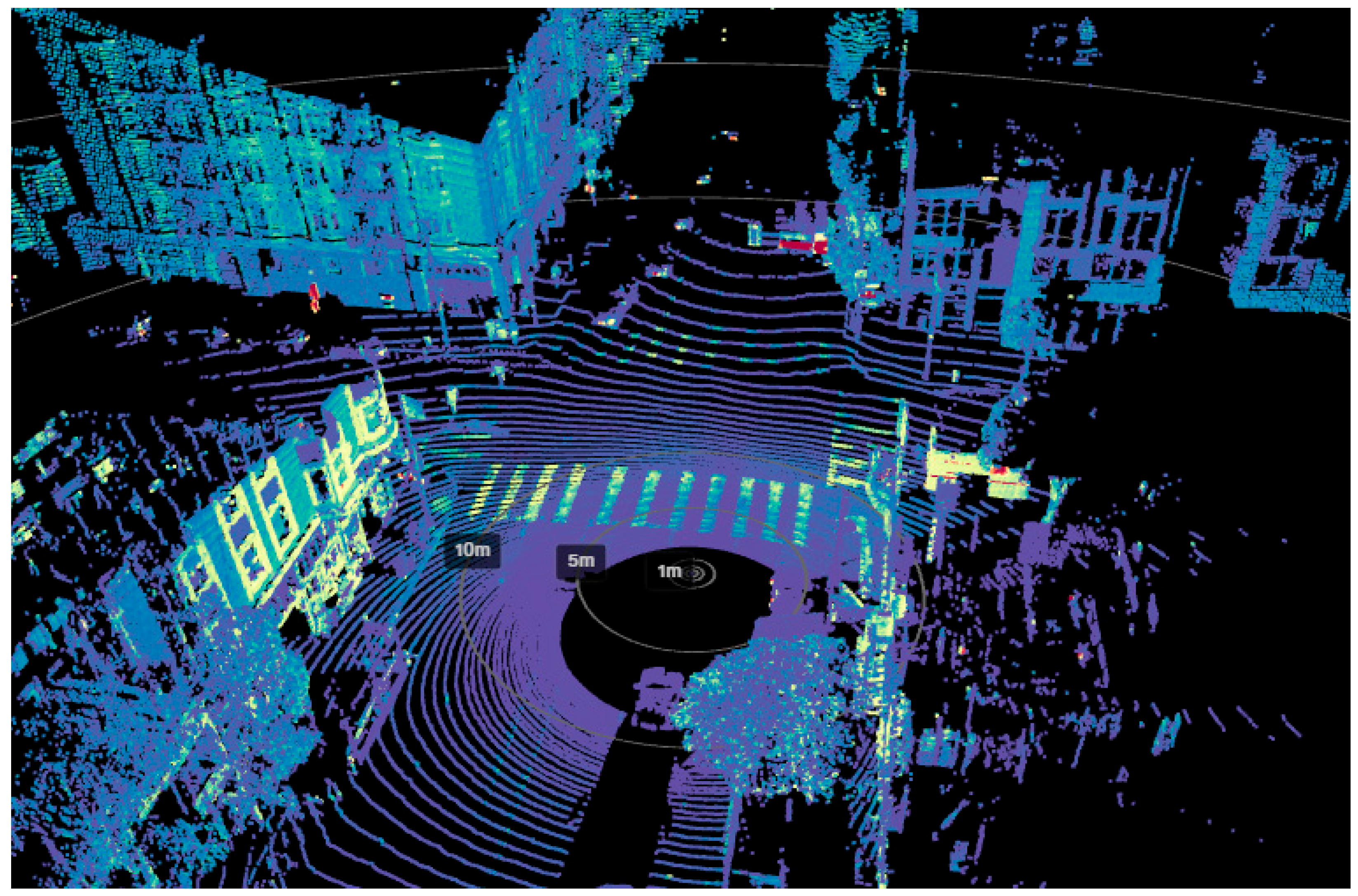

5.1.5. Rotating 2D LiDAR

5.2. Solid-State LiDAR Camera

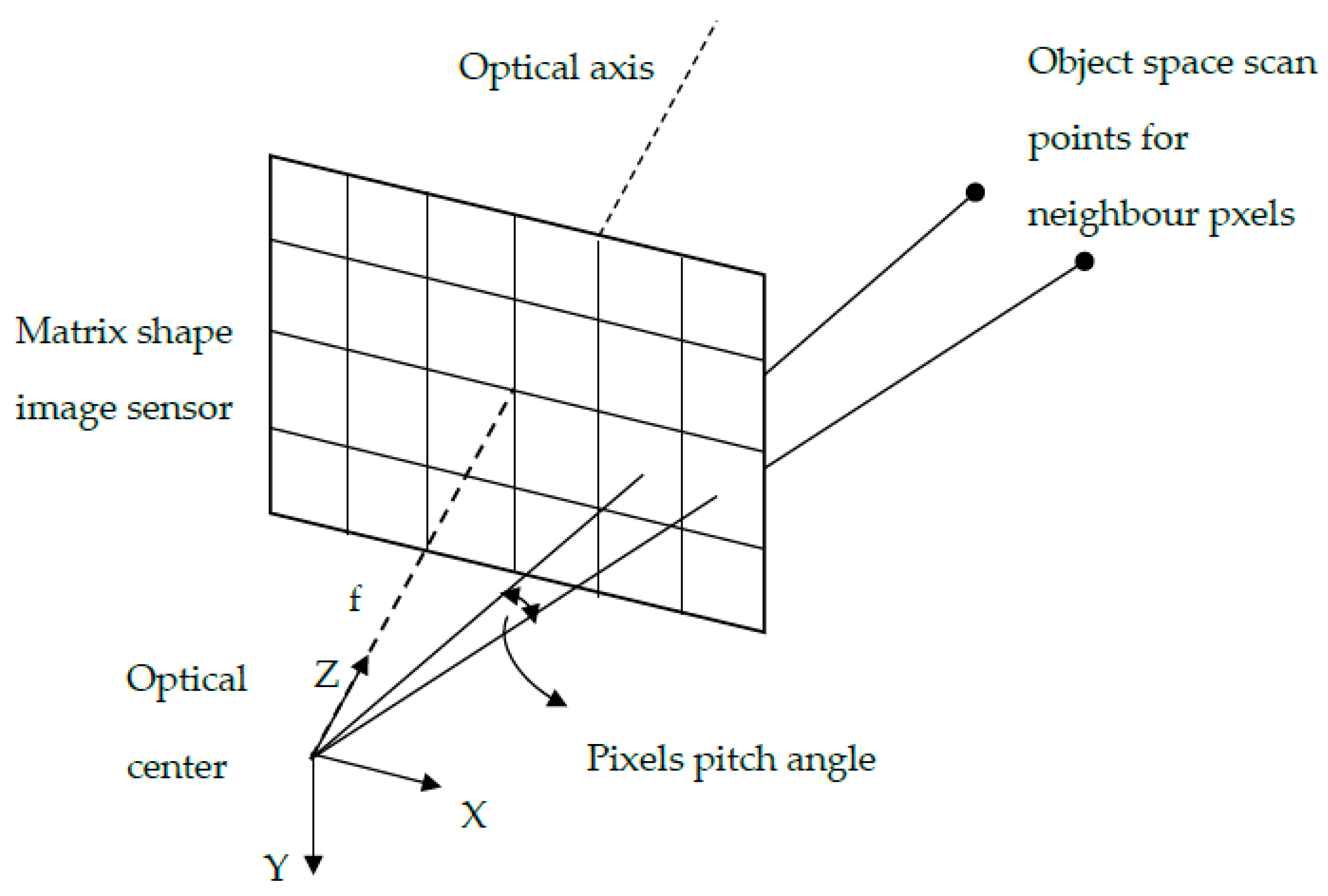

5.2.1. ToF Camera (AMCW)

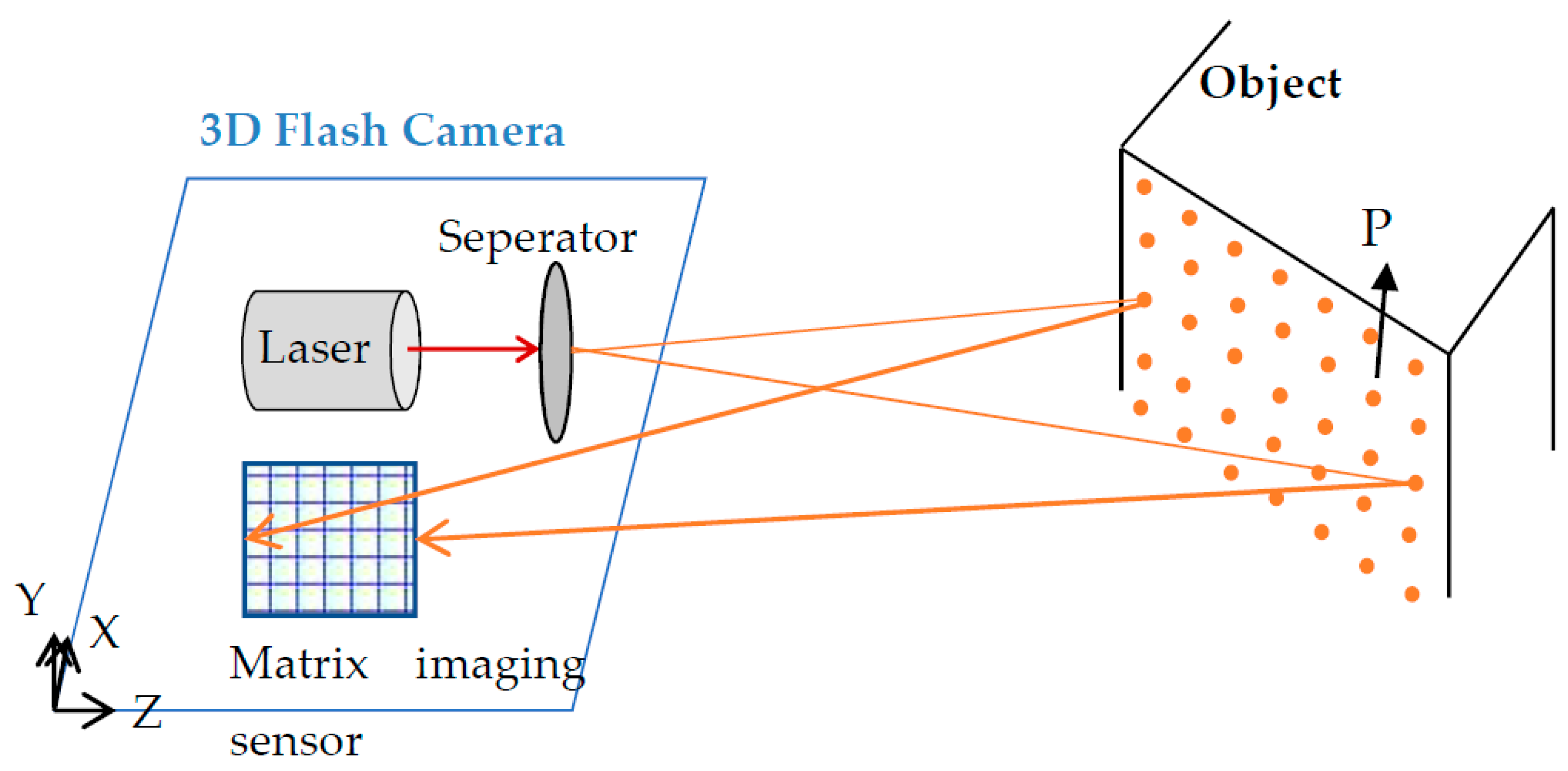

5.2.2. 3D Flash LiDAR Camera

6. Discussion

6.1. Mobile Measurement

6.2. Interference Effect

6.3. Point-Cloud Specifications

7. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Brand/Model | LW (nm) | FoV (H° × V°) | Range Principle | Max. Range | Measuring Speed | Range Accuracy |
|---|---|---|---|---|---|---|
| Leica/BLK360 | 830 | 360 × 270 | Phase-shift | 45 m | 680,000 pts/s | 7 mm@20 m |
| Leica/RTC360 | 1550 | 360 × 300 | Phase-shift | 130 m | 2 M pts/s | 0.5 mm@20 m |
| Leica/ScanStation P40 | 1550 | 360 × 290 | Phase-shift | 270 m | 1 M pts/s | 0.5 mm@50 m |
| Z + F/IMAGER 5016 | 1500 | 360 × 320 | Phase-shift | 365 m | 1.1 M pts/s | 1 mm + 10 ppm |
| RIEGL/VZ-6000 | N/A | 360 × 60 | Pulsed | 6000 m | 222,000 pts/s | 15 mm@150 m |
| RIEGL/VZ-4000 | N/A | 360 × 60 | Pulsed | 4000 m | 222,000 pts/s | 15 mm@150 m |
| RIEGL/VZ-400i | N/A | 360 × 100 | Pulsed | 800 m | 500,000 pts/s | 5 mm@100 m |
| FARO/Focus Pre 350 | 1553.5 | 360 × 300 | Phase-shift | 350 m | 2 M pts/s | 1 mm@25 m |
| OPTECH/Polaris | 1550 | 360 × 120 | Pulsed | 2000 m | 2 MHz | 5 mm@100 m |
| Topcon/GLS-2200 | 1064 | 360 × 270 | Pulsed | 500 m | 120,000 pts/s | 3.1 mm@150 m |
| Trimble/TX8 | 1500 | 360 × 317 | Pulsed | 340 m | 1 M pts/s | <2 mm |
| PENTAX/S-3180V | N/A | 360 × 320 | Pulsed | 187.3 m | 1.016 M pts/s | <1 mm |
| Maptek/XR3 | N/A | 360 × 90 | Pulsed | 2400 m | 400,000 pts/s | 5 mm@65 m |
| GVI/LiPod | 903 | 360 × 30 | Pulsed | 100 m | 600,000 pts/s | <3 cm |
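Most accuracy entries in the table above are quoted at a single distance (for example, 7 mm@20 m), whereas the Z + F IMAGER 5016 entry, 1 mm + 10 ppm, grows with range. Interpreting the ppm term as parts per million of the measured distance (an assumption about the manufacturer's convention), the bound at distance d is 1 mm + 10·10⁻⁶·d. A minimal sketch with a hypothetical helper:

```python
def distance_dependent_error_mm(constant_mm: float, ppm: float, distance_m: float) -> float:
    """Error bound (mm) for a specification of the form 'a mm + b ppm'.

    The ppm term is taken as parts per million of the measured distance,
    converted from metres to millimetres.
    """
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    proportional_mm = distance_m * 1000.0 * ppm * 1e-6
    return constant_mm + proportional_mm


if __name__ == "__main__":
    # Evaluate '1 mm + 10 ppm' at distances used elsewhere in the table.
    for d in (20.0, 50.0, 100.0, 150.0):
        print(f"{d:6.1f} m -> {distance_dependent_error_mm(1.0, 10.0, d):.2f} mm")
```

At 20, 50, 100, and 150 m the bound is 1.2, 1.5, 2.0, and 2.5 mm, which makes the entry roughly comparable with the single-distance values, although manufacturers do not always define the accuracy figure in the same way.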

| Brand/Model | LW (nm) | LO | FoV (H° × V°) | Max. Range | RR | Measuring Speed | Range Accuracy | PC |
|---|---|---|---|---|---|---|---|---|
| Velodyne/Velabit | 903 | MEMS | 90 × 70 | 100 m | N/A | N/A | N/A | 3 W |
| Hesai/PandarGT-L60 | 1550 | MEMS | 60 × 20 | 300 m@10% | 20 Hz | 945,000 pts/s | 2 cm | 30 W |
| Livox/Mid-40 | 905 | OPA | Circular 38.4° | 260 m | N/A | 100,000 pts/s | 2 cm | 10 W |
| Livox/Avia | 905 | OPA | 70.4 × 77.2 (circular) | 450 m | N/A | 240,000 pts/s | 2 cm | 9 W |
| Intel RealSense/L515 | 860 | MEMS | 70 × 55 | 9 m | 30 fps | 23 M pts/s | 14 mm | 3.5 W |
| Quanergy/S3-2NSI-S00 | 905 | OPA | 50 × 4 | 10 m@80% | 10 Hz | N/A | 5 cm | 6 W |
| Leishen/LS21F | 1550 | MEMS | 60 × 25 | 250 m@5% | 30 Hz | 4 M pts/s | 5 cm | 35 W |

| Brand/Model | LW (nm) | Channels | FoV (H° × V°) | Max. Range | RR | Measuring Speed | Range Accuracy | PC |
|---|---|---|---|---|---|---|---|---|
| Velodyne/HDL-32E | 905 | 32 | 360 × 40 | 100 m | 20 Hz | 1.39 M pts/s | 2 cm | 12 W |
| Ouster/OS2-128 | 865 | 128 | 360 × 22.5 | 240 m | 20 Hz | 2,621,440 pts/s | 2.5 cm | 24 W |
| HESAI/Pandar 64 | 905 | 64 | 360 × 40 | 200 m | 20 Hz | 1,152,000 pts/s | 2 cm | 22 W |
| Quanergy/M8-Ultra | 905 | 8 | 360 × 20 | 200 m | 20 Hz | 1.3 M pts/s | 3 cm | 16 W |
| LSLiDAR/C32 | 905 | 32 | 360 × 31 | 150 m | 20 Hz | 600,000 pts/s | 3 cm | 9 W |
| LSLiDAR/CH32 | 905 | 32 | 120 × 22.25 | 200 m | 20 Hz | 426,000 pts/s | 2 cm | 10 W |
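For multi-beam sensors, the listed measuring speed and rotation rate (RR) give a rough estimate of how densely each channel samples the horizontal field of view: points per sweep ≈ measuring speed / RR, and horizontal step ≈ horizontal FoV / (points per sweep / channels). The sketch below applies this to rows of the table, assuming one return per laser firing and uniform firing across the FoV; if a quoted rate counts dual returns, the actual step is roughly twice as coarse. The `MultiBeamSpec` class and helper names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MultiBeamSpec:
    """Hypothetical container for one row of the multi-beam LiDAR table."""
    name: str
    channels: int
    fov_h_deg: float
    points_per_s: float
    rotation_hz: float


def points_per_sweep(spec: MultiBeamSpec) -> float:
    """Points collected during one sweep of the horizontal field of view."""
    return spec.points_per_s / spec.rotation_hz


def horizontal_step_deg(spec: MultiBeamSpec) -> float:
    """Approximate horizontal angular spacing between samples of one channel."""
    samples_per_channel = points_per_sweep(spec) / spec.channels
    return spec.fov_h_deg / samples_per_channel


TABLE_ROWS = [
    MultiBeamSpec("Velodyne/HDL-32E", 32, 360.0, 1.39e6, 20.0),
    MultiBeamSpec("Ouster/OS2-128", 128, 360.0, 2_621_440, 20.0),
    MultiBeamSpec("HESAI/Pandar 64", 64, 360.0, 1_152_000, 20.0),
    MultiBeamSpec("LSLiDAR/C32", 32, 360.0, 600_000, 20.0),
    MultiBeamSpec("LSLiDAR/CH32", 32, 120.0, 426_000, 20.0),
]

if __name__ == "__main__":
    for row in TABLE_ROWS:
        print(f"{row.name:18s} {points_per_sweep(row):9.0f} pts/sweep  "
              f"~{horizontal_step_deg(row):.3f} deg/step")
```

For the Ouster OS2-128 row this gives 131,072 points per sweep, or 1024 samples per channel, i.e. about 0.35° between horizontally adjacent points.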

| Brand/Model | LW (nm) | Scan Planes | FoV (H° × V°) | Range Principle | Max. Range | RR | Measuring Speed | PC |
|---|---|---|---|---|---|---|---|---|
| SICK/MRS1000 | 850 | 4 | 275 × 7.5 | Pulse-time | 30 m | 50 Hz | 165,000 pts/s | 30 W |
| SICK/MRS6000 | 870 | 24 | 275 × 15 | Pulse-time | 75 m | 10 Hz | N/A | 20 W |
| PEPPERL + FUCHS/R2300 | 905 | 4 | 100 × 9 | Pulse-time | 10 m | 90 kHz | 50,000 pts/s | 8 W |

| Brand/Model | LW (nm) | FoV (H° × V°) | Max. Range | Measuring Speed | Range Accuracy | Return Signals | PC |
|---|---|---|---|---|---|---|---|
| Acuity Technologies/AL-500 | 905 | 360 × 120 | 300 m | 200,000 pts/s | 8 mm | 4 | 12 W |
| Acuity Technologies/AL-500AIR | 905 | 120 × 120 | 300 m | 200,000 pts/s | 8 mm | 4 | 6 W |

| Brand/Model | LW (nm) | Pixel Array | FoV (H° × V°) | Range Principle | Max. Range | Frame Rate | Range Accuracy | PC |
|---|---|---|---|---|---|---|---|---|
| Terabee/3Dcam | IR | 80 × 60 | 74 × 57 | Phase-shift (indoor) | 4 m | 30 fps | 1% of distance | 4 W |
| LUCID/Helios2+ | 850 | 640 × 480 | 69 × 51 | Phase-shift (indoor) | 8.3 m | 103 fps | 4 mm@1.5 m | 12 W |
| Panasonic/HC4W | 940 | 640 × 480 | 90 × 70 | Pulsed (outdoor) | 4 m | 30 fps | N/A | 5.4 W |
| Odos Imaging/Swift-E | 850 | 640 × 480 | 43 × 33 | Pulsed (outdoor) | 6 m | 44 fps | 1 cm | 30 W |

| Brand/Model | LW (nm) | Pixel Array | FoV (H° × V°) | Range Principle | Imaging Technique | Max. Range | Frame Rate | Range Accuracy | PC |
|---|---|---|---|---|---|---|---|---|---|
| ASC/GSFL-16K | 1570 | 128 × 128 | 3 × 60 | Pulse-time | LMAPD | 1000 m | 20 Hz | 10 cm | 30 W |
| Ball Aerospace/GM-Camera | 1000 | 128 × 32 | N/A | Pulse-time | GMAPD | 6 km | 90 kHz | 7.5 cm | 18 W |
| Ouster/ES2 | 880 | N/A | 26 × 13 | Pulse-time | SPAD | 450 m | 30 Hz | 5 cm | 18 W |
| Leica/SPL100 | 532 | 10 × 10 beamlets | 20 × 30 | Pulse-time | SPL | 4500 m | 25 Hz | 10 cm | 5 W |
| Leddar Tech/Leddar Pixel | 905 | N/A | 177.5 × 16 | Pulse-time | LMAPD | 56 m | 20 Hz | <3 cm | 20 W |

| Point-Cloud Acquisition | Technique | Max. Range | Measuring Speed | PC | Mobility | Main Application Area |
|---|---|---|---|---|---|---|
| Scanning | Mechanical LiDAR (TLS) | 6 km | 222,000 pts/s | N/A | No | 3D modeling of objects, historical relics, buildings, and scenes |
| | Solid-state LiDAR (MEMS) | 300 m | 945,000 pts/s | N/A | Yes | Autonomous driving, smart cities, indoor mapping, logistics, delivery, transportation, robotics |
| | Solid-state LiDAR (OPA) | 450 m | 240,000 pts/s | 9 W | Yes | Robot and vehicle navigation, mapping, powerline surveying, smart cities, security |
| | Multi-beam LiDAR | 200 m | 1.3 M pts/s | 18 W | Yes | Autonomous cars and trucks, robotaxis, robotics, indoor and outdoor mobile mapping |
| | Multi-layer LiDAR | 75 m | 10 Hz | 20 W | Yes | Mobility and navigation services, gap-free measurement, mining |
| | Rotating 2D LiDAR | 300 m | 200,000 pts/s | 6 W | Yes | Vehicle navigation and obstacle detection, crime scene reconstruction, forest inventory, drone and airborne mapping |
| Pixel array | ToF camera (AMCW) | 8 m | 40 fps | 12 W | Yes | Indoor navigation, mapping, and modeling, BIM applications |
| | 3D Flash LiDAR camera | 6 km | 90 kHz | 18 W | Yes | Outdoor navigation and modeling, space research, robotic automation, heavy industry |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Altuntas, C. Review of Scanning and Pixel Array-Based LiDAR Point-Cloud Measurement Techniques to Capture 3D Shape or Motion. Appl. Sci. 2023, 13, 6488. https://doi.org/10.3390/app13116488
