Multi-Stage Prompt Tuning for Political Perspective Detection in Low-Resource Settings

Abstract

1. Introduction

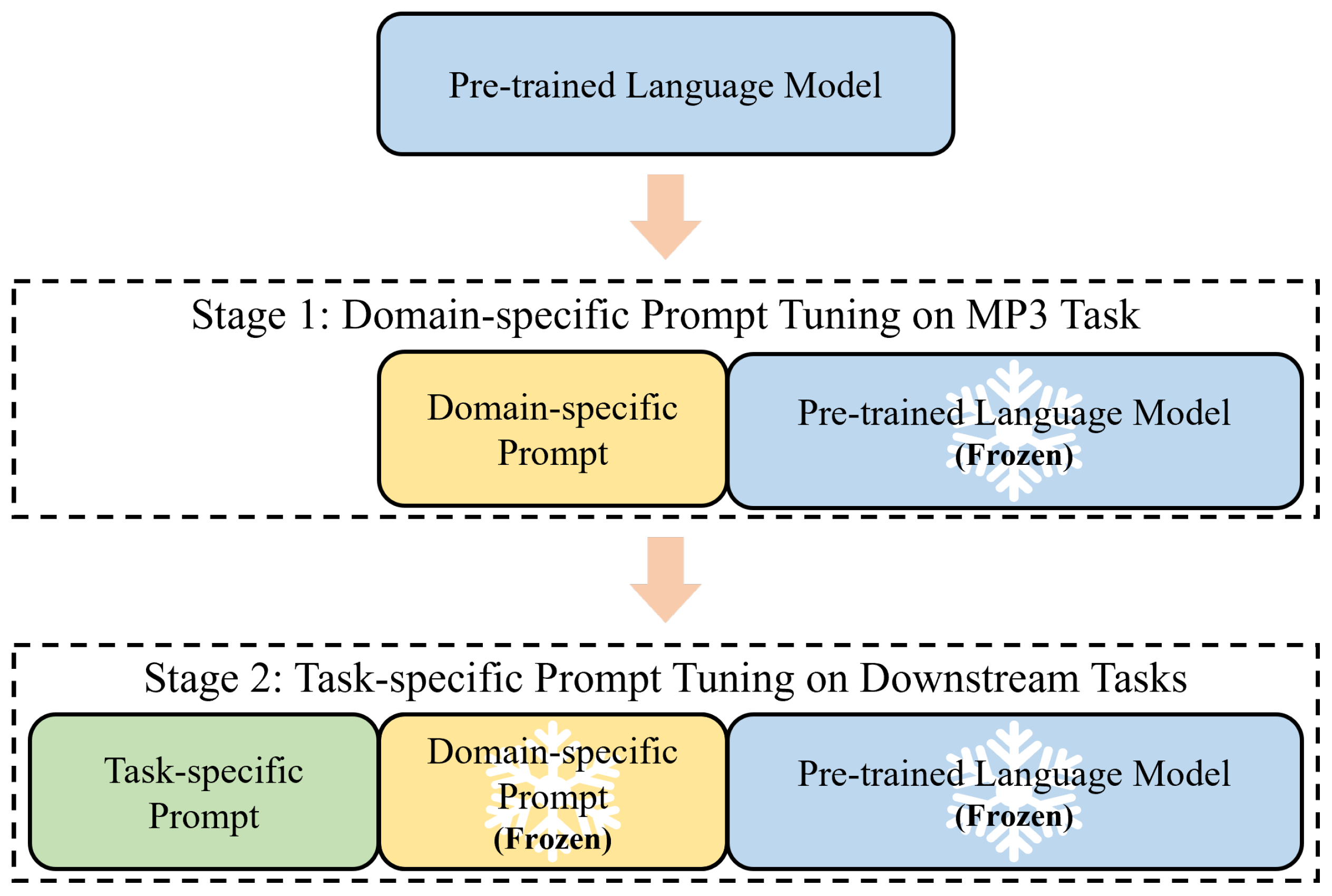

- We develop a multi-stage continuous prompt tuning framework for political perspective detection in low-resource settings.

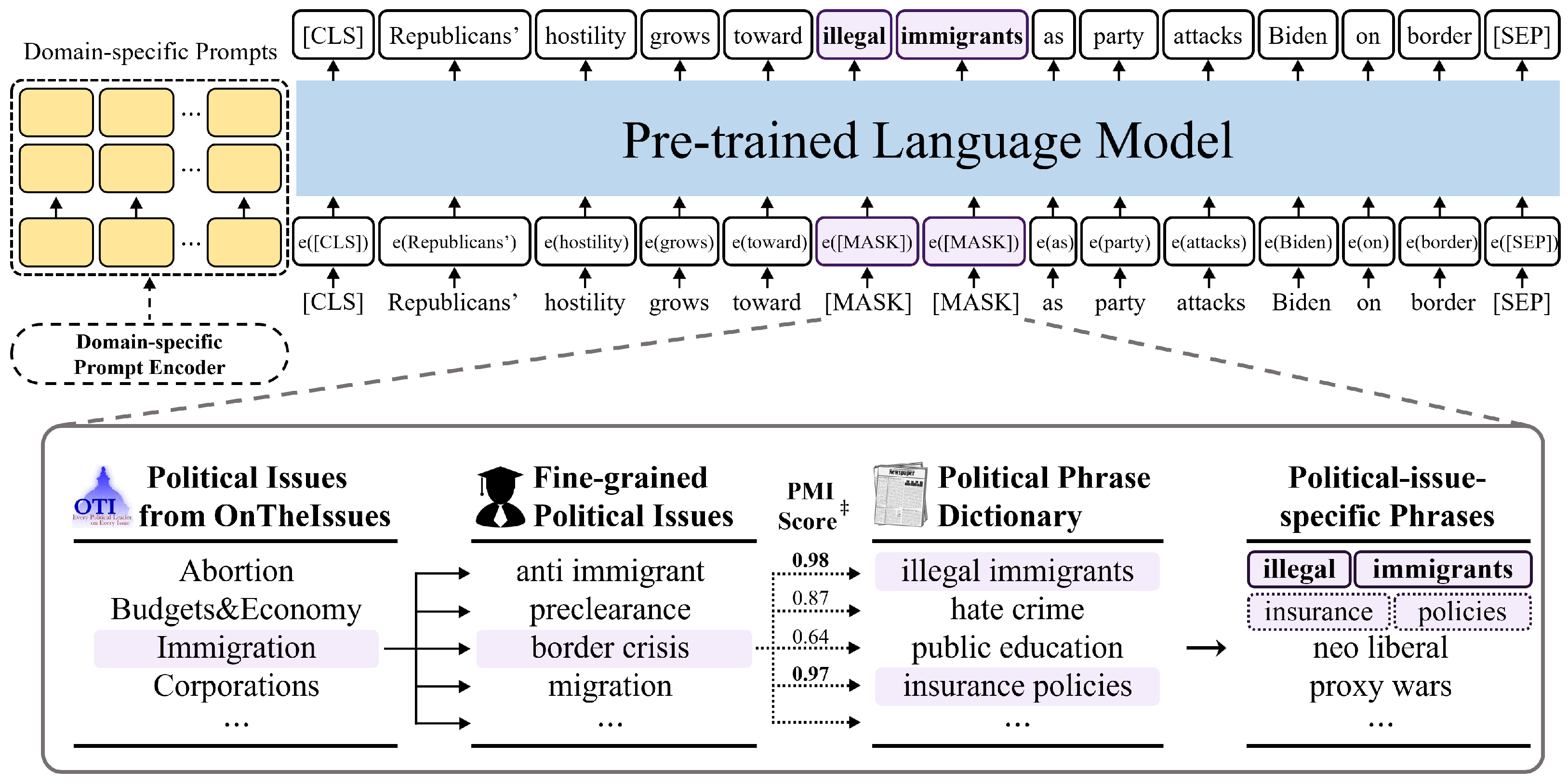

- We propose a domain-specific prompt tuning method that adapts pre-trained language models to the political domain through the masked political phrase prediction (MP3) task. For the MP3 task, we construct political-issue-specific phrases and mask them in the news corpus.

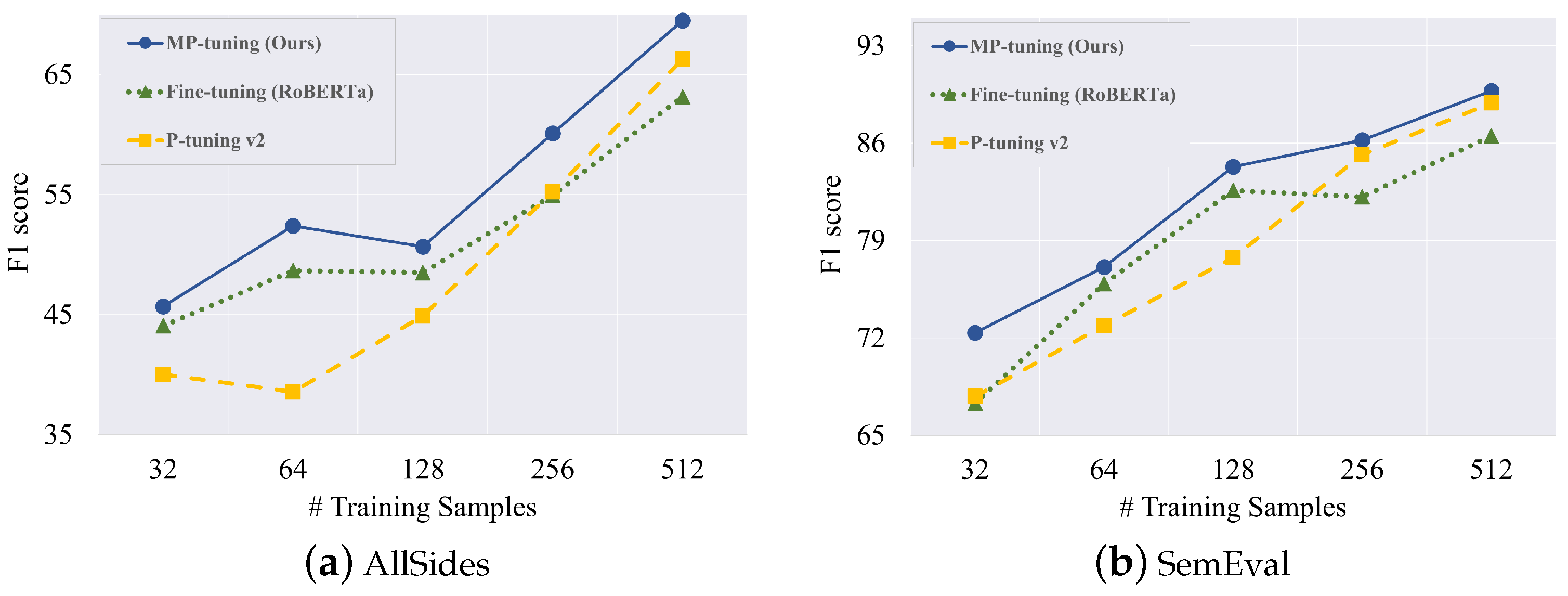

- Our evaluation shows that the proposed model outperforms strong baselines on political perspective detection tasks in few-shot settings.
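The phrase masking behind the MP3 task in the second contribution can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `mask_phrases`, the example phrase list, and the word-level (whitespace) masking are assumptions made here; only the `<mask>` token string comes from RoBERTa's vocabulary.

```python
import re

MASK = "<mask>"  # RoBERTa's mask token string


def mask_phrases(text, phrases, mask_token=MASK):
    """Replace each phrase occurrence with one mask token per word.

    Returns the masked text and the list of masked surface forms,
    which would serve as prediction targets. Hypothetical helper.
    """
    if not phrases:
        return text, []
    # Try longer phrases first so a phrase wins over its own sub-phrase.
    ordered = sorted(phrases, key=len, reverse=True)
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(p) for p in ordered) + r")\b",
        flags=re.IGNORECASE,
    )
    targets = []

    def _substitute(match):
        surface = match.group(0)
        targets.append(surface)
        return " ".join([mask_token] * len(surface.split()))

    return pattern.sub(_substitute, text), targets


# Illustrative phrases only; the real phrase list is built from the corpus.
masked, targets = mask_phrases(
    "The senator criticized gun control and praised tax cuts.",
    ["gun control", "tax cuts"],
)
print(masked)   # The senator criticized <mask> <mask> and praised <mask> <mask>.
print(targets)  # ['gun control', 'tax cuts']
```

A real pipeline would mask at the subword level with the model's tokenizer; the whitespace split is used here only to keep the sketch dependency-free.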

2. Preliminary

2.1. Transformers

2.2. Pre-Trained Language Models Based on Transformer

2.3. Prompt-Based Learning Methods

2.3.1. Discrete Prompting

2.3.2. Prompt Tuning

3. Methodology

3.1. Domain-Specific Prompt Tuning on MP3 Task

3.1.1. Domain-Specific Prompt Tuning

3.1.2. Masked Political Phrase Prediction Task (MP3)

3.2. Task-Specific Prompt Tuning on Downstream Tasks

4. Experimental Setup

4.1. Unlabeled News Corpus

4.2. Downstream Task Datasets

4.2.1. SemEval

4.2.2. AllSides

4.3. Baselines

4.3.1. Fine-Tuning Methods

- MAN [24] utilizes pre-training tasks that integrate social and linguistic information and conducts fine-tuning for political perspective detection.

4.3.2. Prompt-Based Learning Methods

- Lester’s prompt tuning [26] uses trainable continuous prompts as an alternative to text prompts. Independent continuous prompts are trained directly for each target task. The backbone of this model is the RoBERTa (large) model used in our experiments.

- P-tuning v2 [15] is the deep prompt tuning method this study adopts. Continuous prompts are applied to each layer of the pre-trained model. The backbone of this model is the RoBERTa (large) model used in our experiments.

- MP-tuning is our proposed multi-stage prompt tuning framework, which uses the RoBERTa (large) model as a backbone.
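To make the difference between the two prompt tuning baselines concrete, the sketch below counts trainable prompt parameters for an input-level prompt (as in Lester's prompt tuning) and for per-layer prompts (as in P-tuning v2). The prompt length of 20 is an assumed value, not taken from the paper, and the deep-prompt count assumes one key prefix and one value prefix per layer without any reparameterization network. The RoBERTa (large) dimensions (24 layers, hidden size 1024) are the published model configuration.

```python
def shallow_prompt_params(prompt_len, hidden):
    """Trainable parameters when prompts are added only at the input layer."""
    return prompt_len * hidden


def deep_prompt_params(prompt_len, hidden, num_layers):
    """Trainable parameters when each layer gets its own prompt.

    Assumes one key prefix and one value prefix per layer.
    """
    return num_layers * 2 * prompt_len * hidden


HIDDEN = 1024     # RoBERTa (large) hidden size
NUM_LAYERS = 24   # RoBERTa (large) Transformer layers
PROMPT_LEN = 20   # assumed prompt length, for illustration only

print(shallow_prompt_params(PROMPT_LEN, HIDDEN))           # 20480
print(deep_prompt_params(PROMPT_LEN, HIDDEN, NUM_LAYERS))  # 983040
```

Under these assumptions both variants train far fewer parameters than the frozen backbone, which has several hundred million.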

4.4. Implementation Details

4.5. Evaluation Results

4.6. Ablation Study

5. Analysis

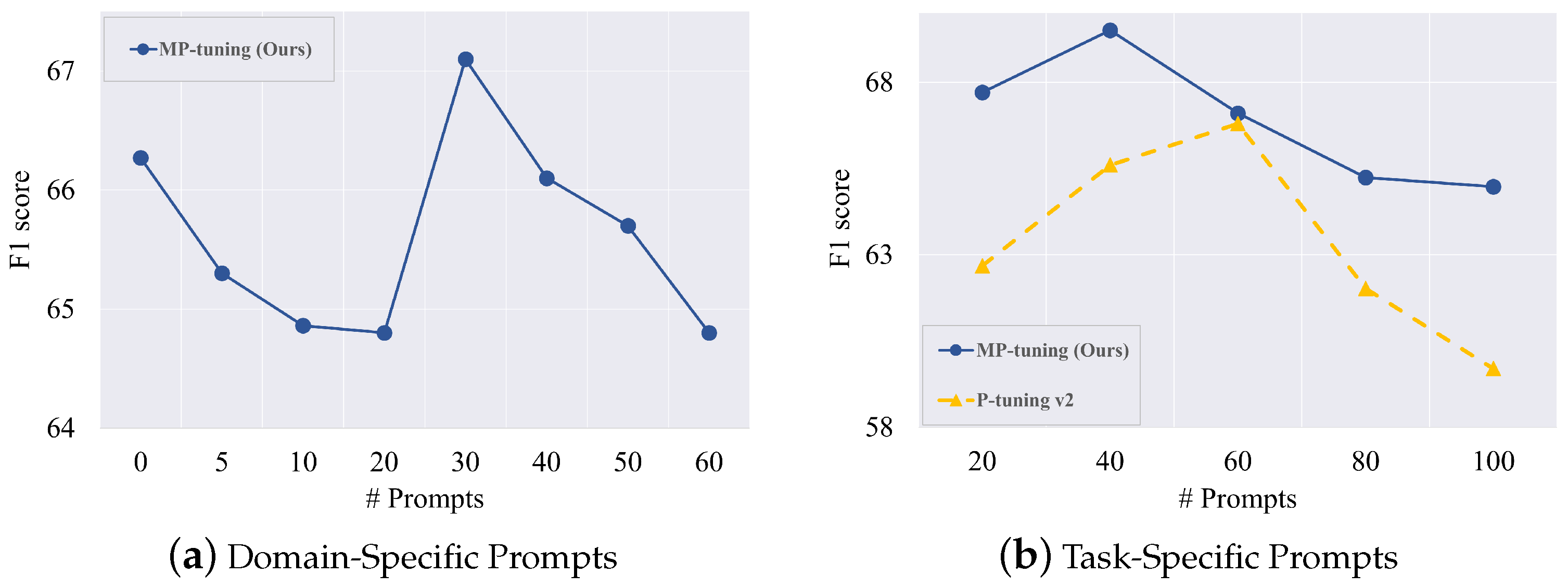

5.1. Analysis on Domain-Specific Prompt

5.2. Analysis on Task-Specific Prompt

6. Related Work

6.1. Political Perspective Detection

6.2. Prompt-Based Learning

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Elfardy, H.; Diab, M.T.; Callison-Burch, C. Ideological Perspective Detection Using Semantic Features. In Proceedings of the Fourth Joint Conference on Lexical and Computational Semantics (*SEM 2015), Denver, CO, USA, 4–7 June 2015; pp. 137–146.

- Li, C.; Goldwasser, D. Encoding Social Information with Graph Convolutional Networks for Political Perspective Detection in News Media. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics (ACL), Florence, Italy, 28 July–2 August 2019; pp. 2594–2604.

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. In Proceedings of the Annual Conference on Neural Information Processing Systems (NeurIPS), Online, 6–12 December 2020; pp. 1877–1901.

- Schick, T.; Schütze, H. Exploiting Cloze-Questions for Few-Shot Text Classification and Natural Language Inference. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics (EACL), Online, 19–23 April 2021; pp. 255–269.

- Li, X.L.; Liang, P. Prefix-Tuning: Optimizing Continuous Prompts for Generation. In Proceedings of the Joint Conference of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (ACL/IJCNLP), Dublin, Ireland, 1–6 August 2021; pp. 4582–4597.

- Razeghi, Y.; Logan, R.L., IV; Gardner, M.; Singh, S. Impact of Pretraining Term Frequencies on Few-Shot Numerical Reasoning. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 840–854.

- Kiesel, J.; Mestre, M.; Shukla, R.; Vincent, E.; Adineh, P.; Corney, D.; Stein, B.; Potthast, M. SemEval-2019 Task 4: Hyperpartisan News Detection. In Proceedings of the 13th International Workshop on Semantic Evaluation (SemEval), Minneapolis, MN, USA, 6–7 June 2019; pp. 829–839.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Annual Conference on Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692.

- Schick, T.; Schütze, H. It’s Not Just Size That Matters: Small Language Models Are Also Few-Shot Learners. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT), Online, 6–11 June 2021; pp. 2339–2352.

- Shin, T.; Razeghi, Y.; Logan, R.L., IV; Wallace, E.; Singh, S. AutoPrompt: Eliciting Knowledge from Language Models with Automatically Generated Prompts. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 4222–4235.

- Gao, T.; Fisch, A.; Chen, D. Making Pre-Trained Language Models Better Few-Shot Learners. In Proceedings of the Joint Conference of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (ACL/IJCNLP), Dublin, Ireland, 1–6 August 2021; pp. 3816–3830.

- Liu, X.; Zheng, Y.; Du, Z.; Ding, M.; Qian, Y.; Yang, Z.; Tang, J. GPT understands, too. arXiv 2021, arXiv:2103.10385.

- Liu, X.; Ji, K.; Fu, Y.; Tam, W.; Du, Z.; Yang, Z.; Tang, J. P-Tuning: Prompt Tuning Can Be Comparable to Fine-tuning Across Scales and Tasks. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (ACL), Dublin, Ireland, 22–27 May 2022; pp. 61–68.

- Schucher, N.; Reddy, S.; de Vries, H. The Power of Prompt Tuning for Low-Resource Semantic Parsing. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (ACL), Dublin, Ireland, 22–27 May 2022; pp. 148–156.

- Zhang, Z.; Han, X.; Liu, Z.; Jiang, X.; Sun, M.; Liu, Q. ERNIE: Enhanced Language Representation with Informative Entities. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics (ACL), Florence, Italy, 28 July–2 August 2019; pp. 1441–1451.

- Sun, Y.; Wang, S.; Li, Y.; Feng, S.; Chen, X.; Zhang, H.; Tian, X.; Zhu, D.; Tian, H.; Wu, H. ERNIE: Enhanced Representation through Knowledge Integration. arXiv 2019, arXiv:1904.09223.

- Joshi, M.; Chen, D.; Liu, Y.; Weld, D.S.; Zettlemoyer, L.; Levy, O. SpanBERT: Improving Pre-training by Representing and Predicting Spans. Trans. Assoc. Comput. Linguist. (TACL) 2020, 8, 64–77.

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed Representations of Words and Phrases and their Compositionality. In Proceedings of the Annual Conference on Neural Information Processing Systems (NeurIPS), Lake Tahoe, NV, USA, 5–10 December 2013; pp. 3111–3119.

- Roy, S.; Goldwasser, D. Weakly Supervised Learning of Nuanced Frames for Analyzing Polarization in News Media. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 7698–7716.

- Church, K.W.; Hanks, P. Word Association Norms, Mutual Information and Lexicography. Comput. Linguist. 1990, 16, 22–29.

- Feng, S.; Chen, Z.; Li, Q.; Luo, M. Knowledge Graph Augmented Political Perspective Detection in News Media. arXiv 2021, arXiv:2108.0386.

- Li, C.; Goldwasser, D. Using Social and Linguistic Information to Adapt Pretrained Representations for Political Perspective Identification. In Proceedings of the Joint Conference of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (ACL/IJCNLP), Dublin, Ireland, 1–6 August 2021; pp. 4569–4579.

- Zhang, W.; Feng, S.; Chen, Z.; Lei, Z.; Li, J.; Luo, M. KCD: Knowledge Walks and Textual Cues Enhanced Political Perspective Detection in News Media. In Proceedings of the 2022 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL), Seattle, WA, USA, 10–15 July 2022; pp. 4129–4140.

- Lester, B.; Al-Rfou, R.; Constant, N. The Power of Scale for Parameter-Efficient Prompt Tuning. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), Punta Cana, Dominican Republic, 7–11 November 2021; pp. 3045–3059.

- Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. In Proceedings of the International Conference on Learning Representations (ICLR), New Orleans, LA, USA, 6–9 May 2019.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Proceedings of the Annual Conference on Neural Information Processing Systems (NeurIPS), Vancouver, BC, Canada, 8–14 December 2019; pp. 8024–8035.

- Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. Transformers: State-of-the-Art Natural Language Processing. In Proceedings of the Conference on Empirical Methods in Natural Language Processing: System Demonstrations (EMNLP Demos), Online, 16–20 November 2020; pp. 38–45.

- Lin, W.-H.; Wilson, T.; Wiebe, J.; Hauptmann, A. Which Side are You on? Identifying Perspectives at the Document and Sentence Levels. In Proceedings of the 10th Conference on Computational Natural Language Learning (CoNLL), New York, NY, USA, 8–9 June 2006; pp. 109–116.

- Cabot, P.-L.H.; Dankers, V.; Abadi, D.; Fischer, A.; Shutova, E. The Pragmatics behind Politics: Modelling Metaphor, Framing and Emotion in Political Discourse. In Findings of the Association for Computational Linguistics: EMNLP 2020, Online, 16–20 November 2020; pp. 4479–4488.

- Jiang, Z.; Xu, F.F.; Araki, J.; Neubig, G. How Can We Know What Language Models Know? Trans. Assoc. Comput. Linguist. (TACL) 2020, 8, 423–438.

- Vu, T.; Lester, B.; Constant, N.; Al-Rfou, R.; Cer, D. SPoT: Better Frozen Model Adaptation through Soft Prompt Transfer. In Proceedings of the Joint Conference of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (ACL/IJCNLP), Dublin, Ireland, 1–6 August 2021; pp. 5039–5059.

| Dataset | # Samples | # Classes | Class Distribution |
|---|---|---|---|
| SemEval | 645 | 2 | 407/238 |
| AllSides | 10,385 | 3 | 4164/3931/2290 |
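The class imbalance in the dataset statistics is one reason to report a class-averaged score alongside accuracy. The sketch below assumes that MaF in the result tables denotes macro-averaged F1 and evaluates a hypothetical majority-class predictor on the SemEval class distribution (407/238). The integer labels are placeholders; which class is the larger one is not stated here.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the gold labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# SemEval class distribution from the table: 407 vs. 238 samples.
y_true = [0] * 407 + [1] * 238
# Hypothetical baseline that always predicts the larger class.
y_pred = [0] * 645

print(round(accuracy(y_true, y_pred), 4))  # 0.631
print(round(macro_f1(y_true, y_pred), 4))  # 0.3869
```

The baseline reaches about 63% accuracy while its macro F1 stays below 0.39, because the minority class contributes an F1 of zero.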

| Setting | Model | SemEval Acc | SemEval MaF | AllSides Acc | AllSides MaF |
|---|---|---|---|---|---|
| Fine-tuning | BERT * | 86.92 | 80.71 | 80.80 | 79.71 |
| Fine-tuning | RoBERTa * | 87.08 | 81.34 | 81.80 | 80.51 |
| Fine-tuning | MAN † | 84.66 | 83.09 | 81.41 | 80.44 |
| Prompting | Lester’s prompt tuning * | 82.72 | 81.35 | 76.42 | 74.38 |
| Prompting | P-tuning v2 * | 90.06 | 87.12 | 81.18 | 79.27 |
| Prompting | MP-tuning (Ours) | 91.47 | 88.92 | 83.21 | 82.54 |

| Method | SemEval Acc | SemEval MaF | AllSides Acc | AllSides MaF |
|---|---|---|---|---|
| MP-tuning (Ours) | 91.47 | 88.92 | 83.21 | 82.54 |
| w/o MP3 | 91.10 | 87.72 | 82.90 | 81.84 |
| w/o DP | 90.81 | 87.09 | 81.52 | 79.97 |
| w/o MP3, DP | 90.06 | 87.12 | 81.18 | 79.27 |
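The ablation rows are easier to compare as drops relative to the full model. The short script below recomputes those differences from the table values; the dictionary layout and the `drop_from_full` helper are illustrative choices, and "DP" is taken here to mean the domain-specific prompt, which is an assumption based on the section titles.

```python
# Columns: SemEval Acc, SemEval MaF, AllSides Acc, AllSides MaF.
ABLATION = {
    "MP-tuning (Ours)": (91.47, 88.92, 83.21, 82.54),
    "w/o MP3": (91.10, 87.72, 82.90, 81.84),
    "w/o DP": (90.81, 87.09, 81.52, 79.97),
    "w/o MP3, DP": (90.06, 87.12, 81.18, 79.27),
}


def drop_from_full(variant, table=ABLATION, full="MP-tuning (Ours)"):
    """Per-column score drop (in points) of a variant versus the full model."""
    return tuple(round(f - v, 2) for f, v in zip(table[full], table[variant]))


for name in ("w/o MP3", "w/o DP", "w/o MP3, DP"):
    print(name, drop_from_full(name))
# w/o MP3 (0.37, 1.2, 0.31, 0.7)
# w/o DP (0.66, 1.83, 1.69, 2.57)
# w/o MP3, DP (1.41, 1.8, 2.03, 3.27)
```

Removing both components gives the largest drop on three of the four columns, and that row matches the P-tuning v2 scores in the main results table.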

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, K.-M.; Lee, M.; Won, H.-S.; Kim, M.-J.; Kim, Y.; Lee, S. Multi-Stage Prompt Tuning for Political Perspective Detection in Low-Resource Settings. Appl. Sci. 2023, 13, 6252. https://doi.org/10.3390/app13106252
