DMS-YOLOv5: A Decoupled Multi-Scale YOLOv5 Method for Small Object Detection

Abstract

1. Introduction

- (1) Making the feature extraction network excessively deep causes small, low-resolution objects to lose their own feature information. Enlarging the receptive field through pooling also reduces image resolution and discards image detail.

- (2) In complex scenes, small objects are easily confused with the background, which makes their feature information difficult to extract.

- (3) Multiple downsampling operations in the detection head network of the object detection algorithm cause a loss of small object details.

- (4) The coupled network in the object detection algorithm processes classification and bounding box regression simultaneously, which weakens the performance of both tasks.

- (5) The bounding box regression loss function in object detection algorithms has different spatial sensitivity to objects of different scales, which lowers regression accuracy for small objects and makes adjacent objects easy to miss.
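Challenge (3) can be made concrete with a little arithmetic. The sketch below assumes a 640 × 640 input and the output strides 8, 16, and 32 of the standard YOLOv5 detection heads, plus a hypothetical stride-4 level for comparison. The 12-pixel object size and the helper names `footprint` and `grid_size` are illustrative choices, not values or code from the paper.

```python
def footprint(object_px: float, stride: int) -> float:
    """Side length, in feature-map cells, that an object covers at a given stride."""
    return object_px / stride


def grid_size(input_px: int, stride: int) -> int:
    """Number of cells along one side of the feature map at a given stride."""
    return input_px // stride


INPUT_PX = 640   # assumed input resolution
OBJECT_PX = 12   # hypothetical small object, 12 x 12 pixels

for stride in (4, 8, 16, 32):
    side = grid_size(INPUT_PX, stride)
    cells = footprint(OBJECT_PX, stride)
    print(f"stride {stride:>2}: grid {side}x{side}, object spans {cells:.2f} cells per side")
```

At stride 32 the object covers less than half a cell per side, so its details are mixed with the surrounding background, whereas a finer grid keeps it spread over several cells.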

- (1)

- This paper proposes an improved small object detection method based on the official version of the originla YOLOv5s algorithm, namely DMS-YOLOv5.

- (2)

- The receptive field module is introduced into the feature extraction network to increase the network’s receptive field, allowing the network to focus more on small objects with small size and low resolution and complete the extraction of multi-level semantic information.

- (3)

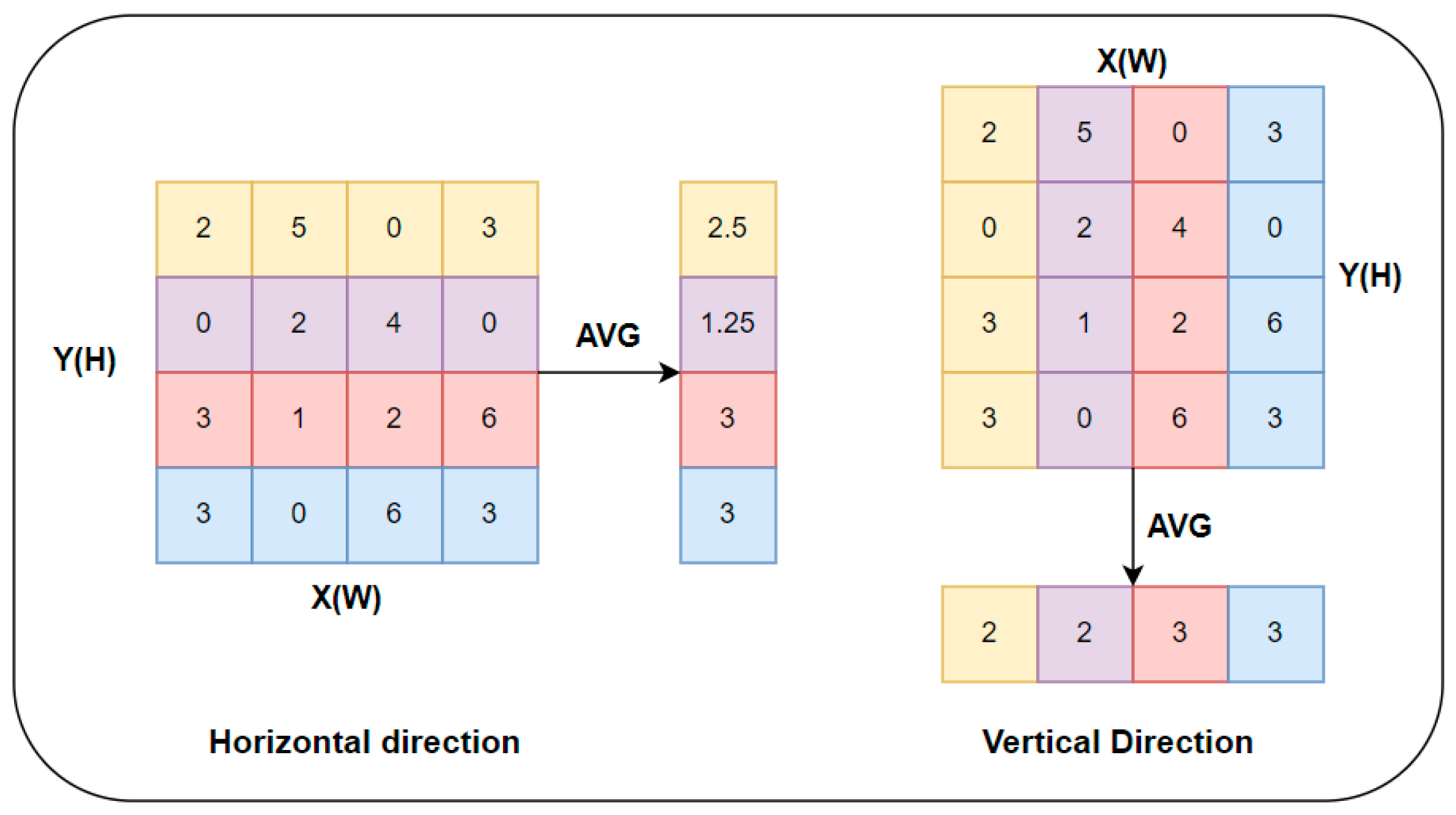

- The CA attention mechanism is introduced, which combines spatial and channel attention information, to reduce the interference of background information on object features and enhance the network’s ability to focus on object information.

- (4)

- A small object detection layer is added to compensate for the problem of losing small object details during multiple downsampling operations.

- (5)

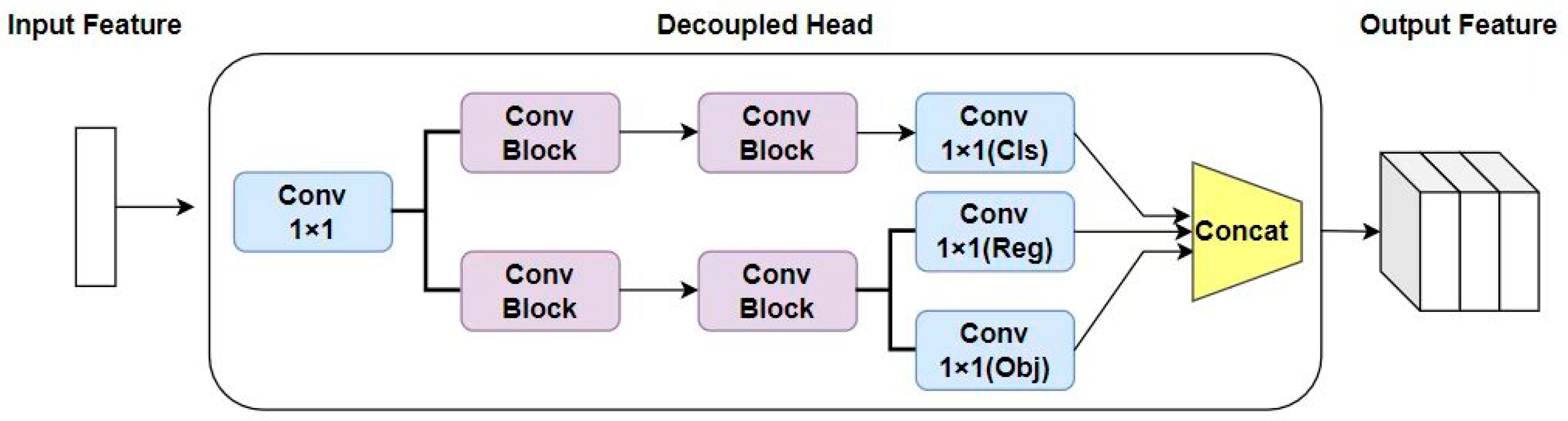

- The decoupling network is introduced into the detection head network to process classification and bounding box regression tasks separately, thereby improving the detection accuracy and efficiency of both tasks.

- (6)

- The bounding box loss function and non-maximum suppression algorithm are improved to alleviate the missed detection problem caused by the concentrated distribution of small objects and mutual occlusion between objects.
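Contribution (6) concerns the bounding box loss. As a minimal sketch, assuming the improved loss belongs to the DIoU/CIoU family named in the results tables (YOLOv5_DIoU, YOLOv5_CIoU), the pure-Python functions below compute both losses for axis-aligned boxes given as (x1, y1, x2, y2) with positive width and height. The function names and the box format are illustrative assumptions, not the authors' code.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def center_penalty(a: Box, b: Box) -> float:
    """Squared center distance over the squared diagonal of the smallest enclosing box."""
    d2 = ((a[0] + a[2]) / 2 - (b[0] + b[2]) / 2) ** 2 + ((a[1] + a[3]) / 2 - (b[1] + b[3]) / 2) ** 2
    cw = max(a[2], b[2]) - min(a[0], b[0])
    ch = max(a[3], b[3]) - min(a[1], b[1])
    c2 = cw ** 2 + ch ** 2
    return d2 / c2 if c2 > 0 else 0.0


def diou_loss(pred: Box, target: Box) -> float:
    """DIoU loss: 1 - IoU + normalized center distance."""
    return 1.0 - iou(pred, target) + center_penalty(pred, target)


def ciou_loss(pred: Box, target: Box) -> float:
    """CIoU loss: DIoU loss plus an aspect-ratio consistency term alpha * v."""
    i = iou(pred, target)
    w_p, h_p = pred[2] - pred[0], pred[3] - pred[1]
    w_t, h_t = target[2] - target[0], target[3] - target[1]
    v = (4 / math.pi ** 2) * (math.atan(w_t / h_t) - math.atan(w_p / h_p)) ** 2
    denom = (1.0 - i) + v
    alpha = v / denom if denom > 0 else 0.0
    return 1.0 - i + center_penalty(pred, target) + alpha * v


print(diou_loss((0, 0, 2, 2), (1, 1, 3, 3)))
print(ciou_loss((0, 0, 4, 2), (1, 1, 3, 3)))
```

Unlike a plain IoU loss, both losses stay informative for boxes that do not overlap at all, because the center-distance term still grows as the boxes move apart.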

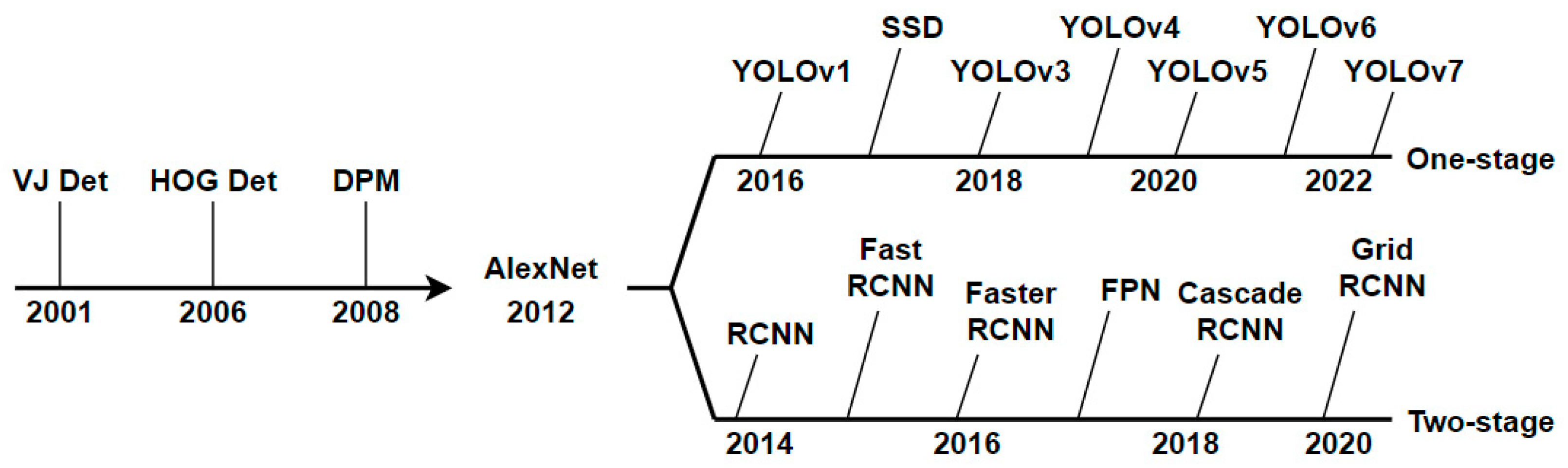

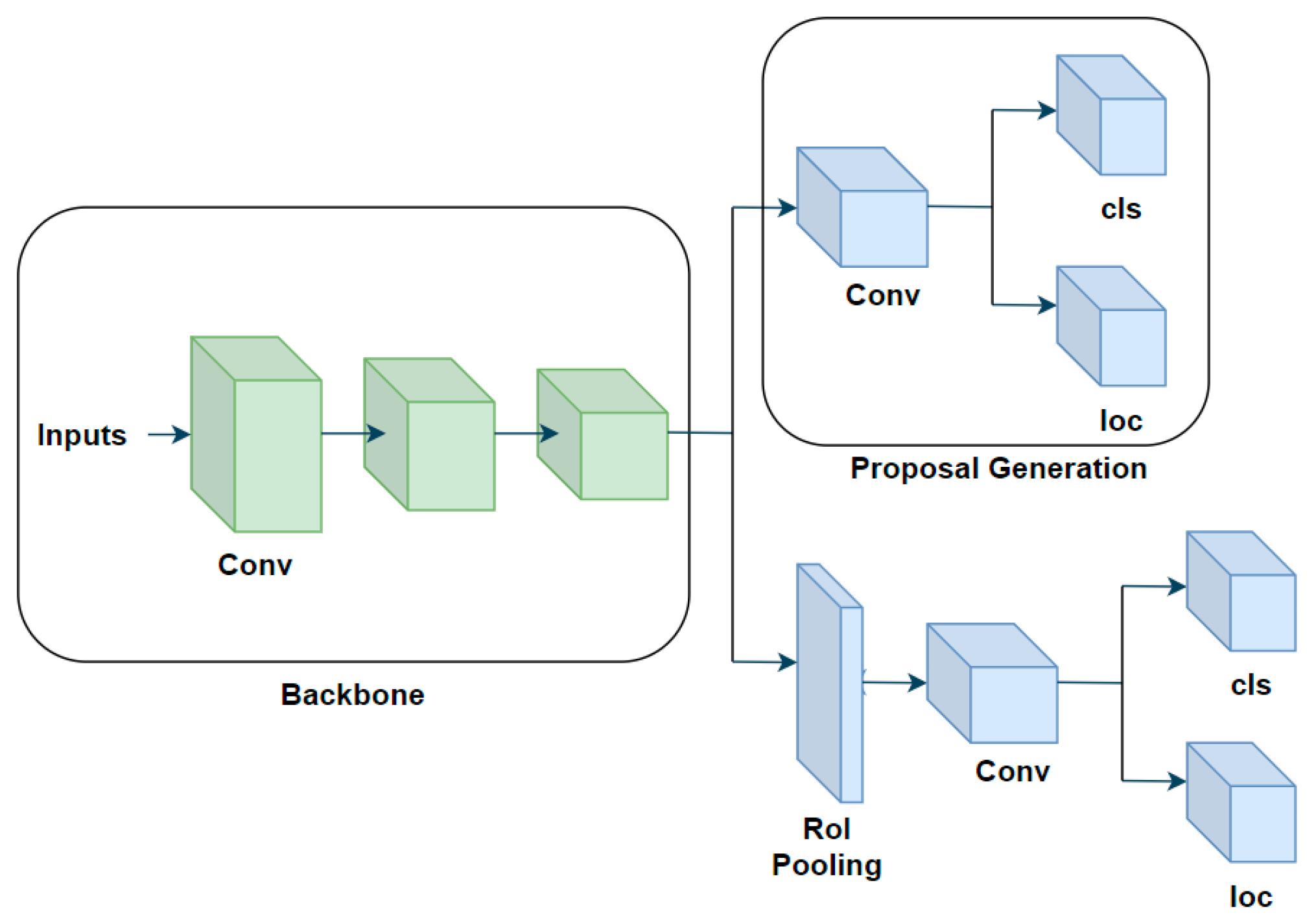

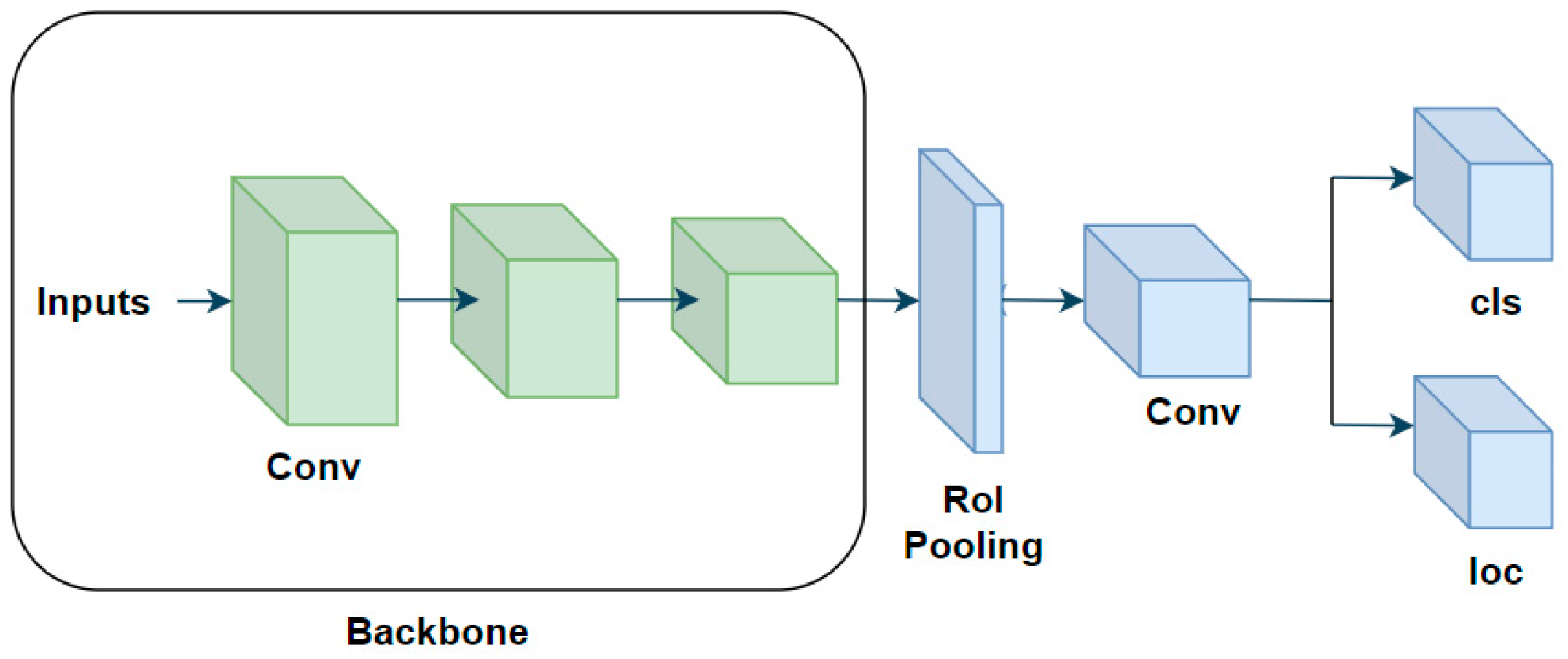

2. Related Work

3. Methods

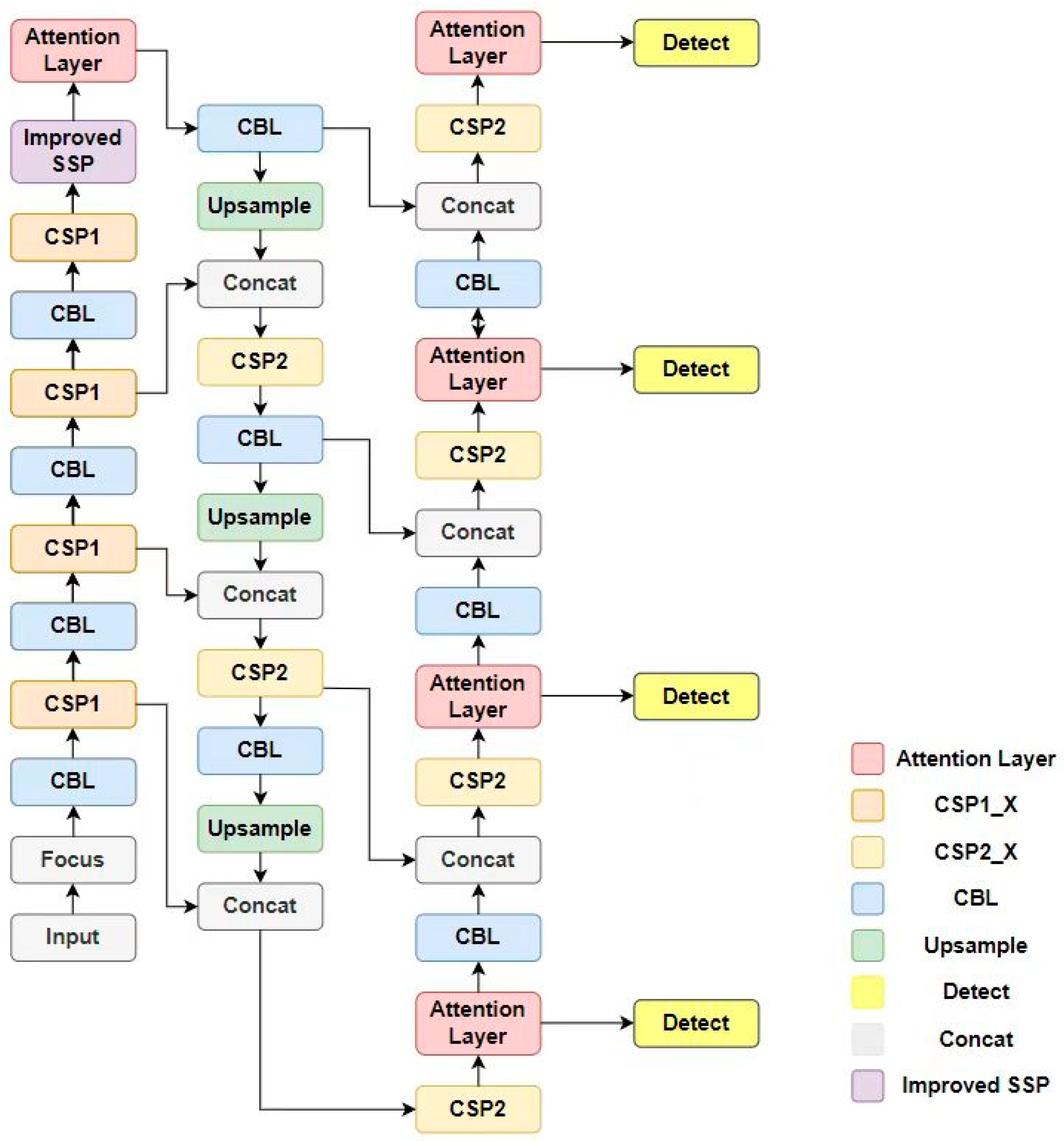

3.1. Overview of the Network Architecture

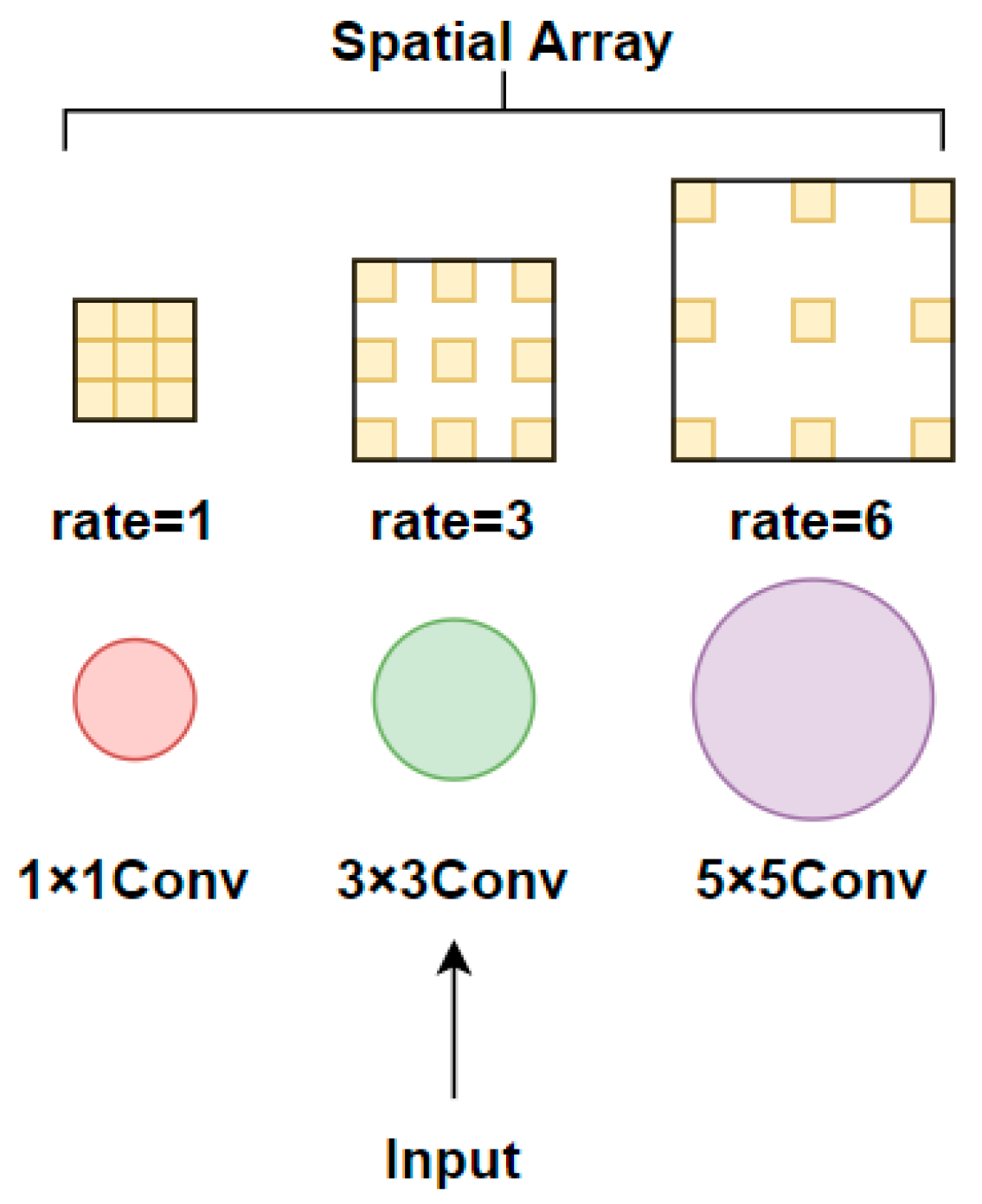

3.2. Receptive Field Module

3.3. Attention Mechanism Module

3.4. Multi-Head Small Object Detection Layer

3.5. Decoupled Network Module

3.6. Bounding Box Regression Loss Function

4. Experiments

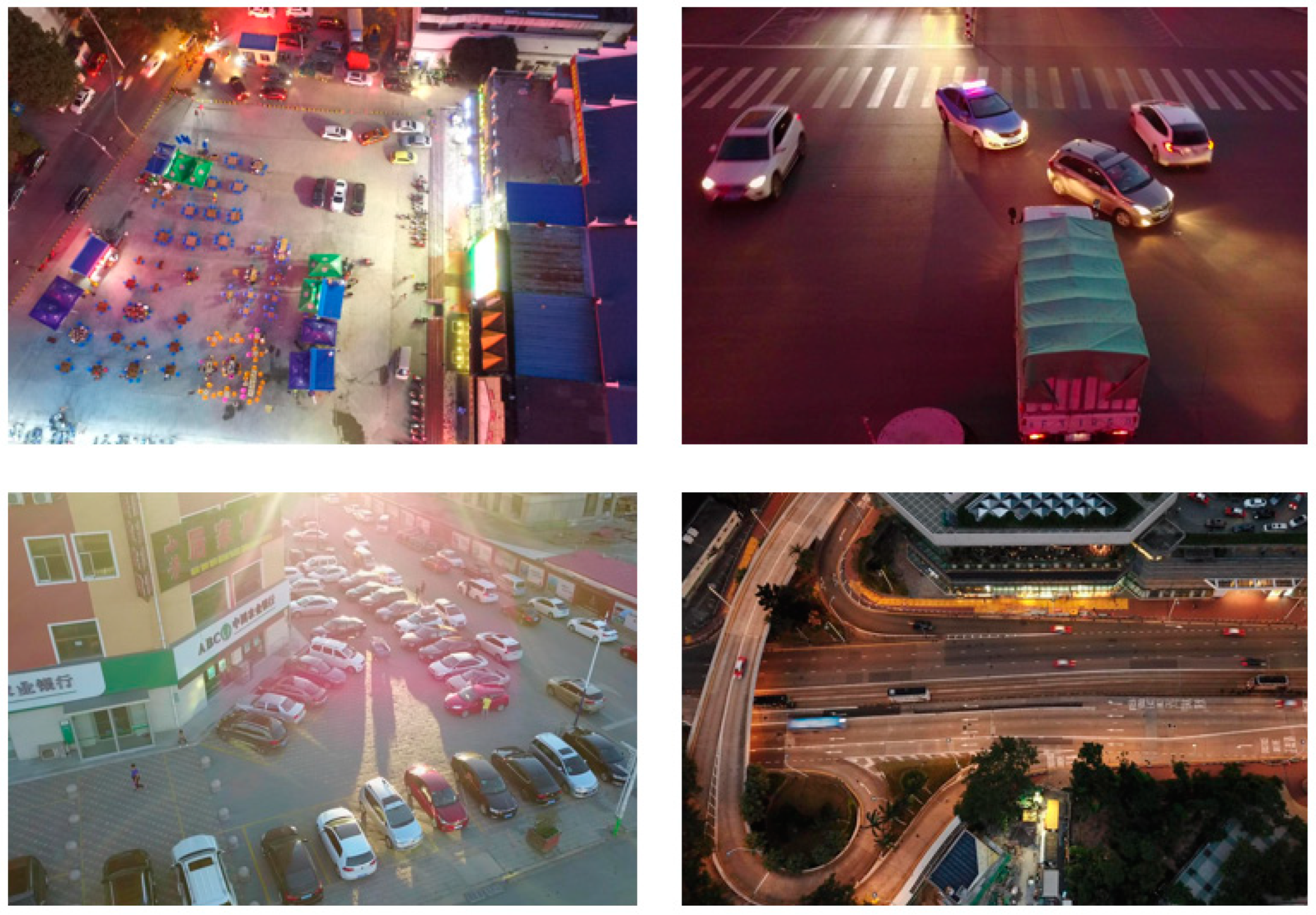

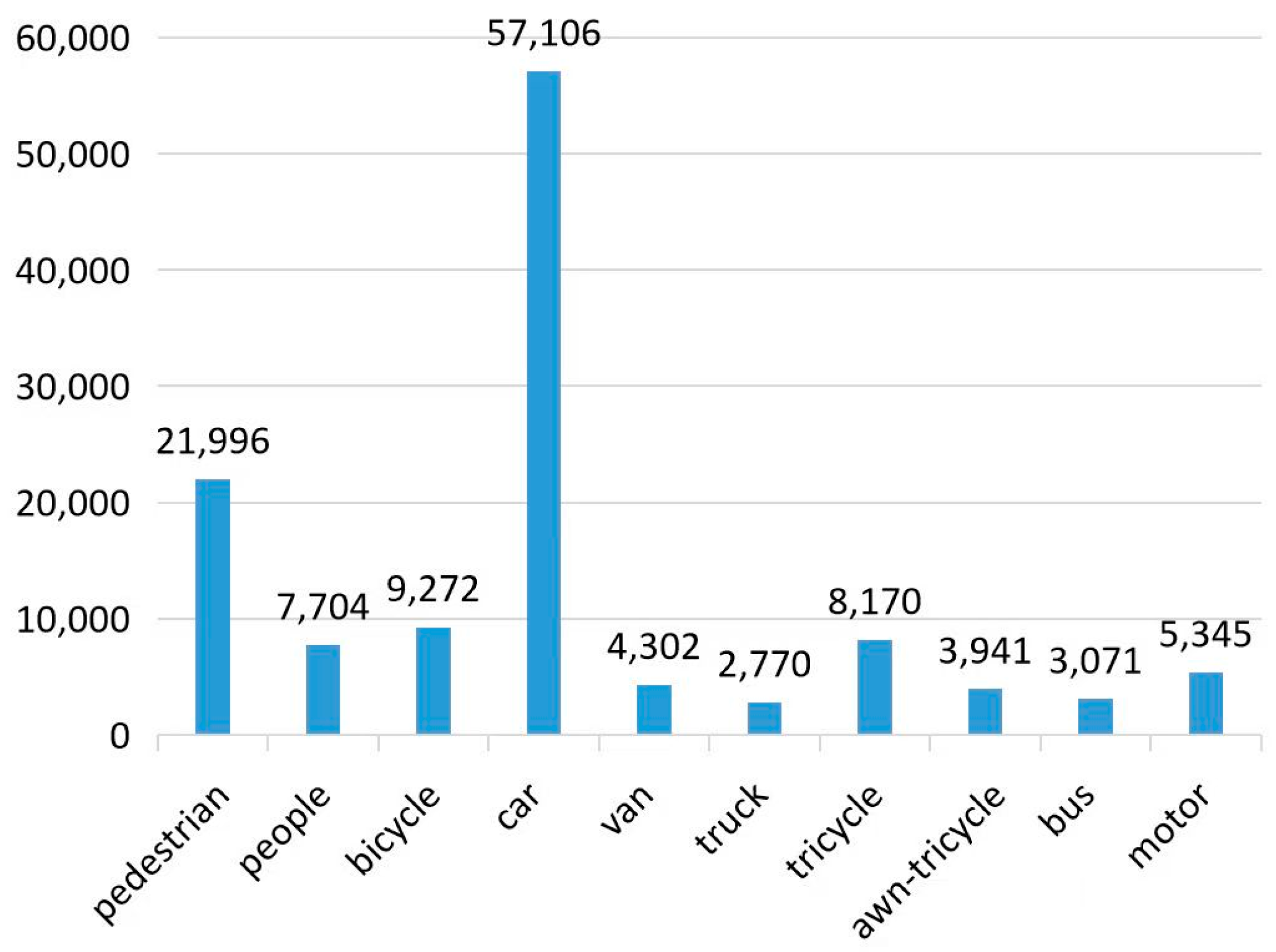

4.1. Datasets

4.2. Experimental Preparation

4.3. Experimental Results and Analysis

4.3.1. Results and Analysis of Receptive Field Module

4.3.2. Results and Analysis of Attention Mechanism Module

4.3.3. Results and Analysis of Small Object Detection Layer

4.3.4. Results and Analysis of Decoupled Network

4.3.5. Results and Analysis of Bounding Box Loss Function and NMS Algorithm

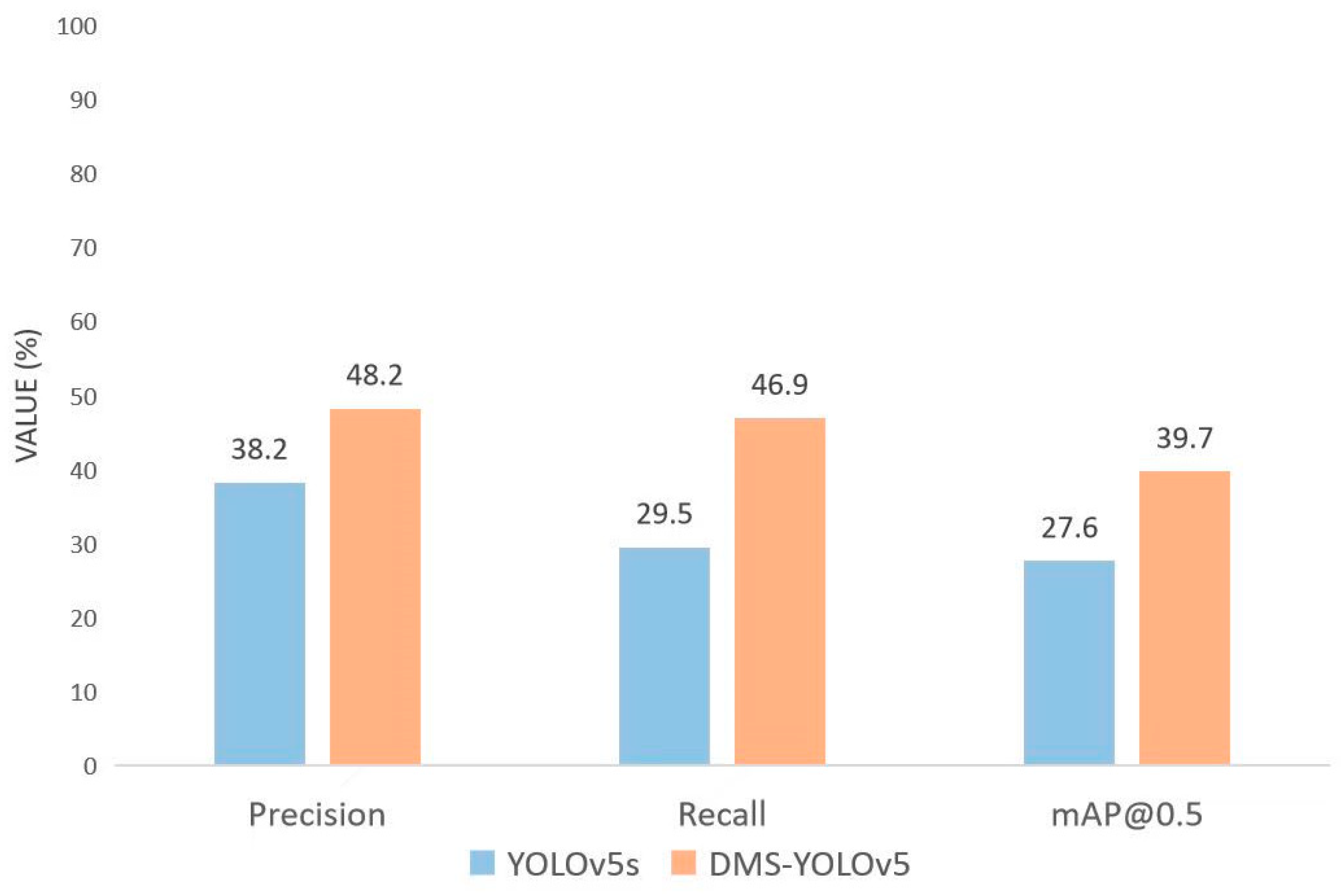

4.3.6. Ablation Experiments and Results Analysis

- (1) The multi-scale receptive field in the feature extraction network supports feature extraction for objects of different scales. On small object datasets in particular, it directs more attention to low-resolution small objects.

- (2) Introducing the coordinate attention (CA) mechanism, which captures both channel and spatial information, further reduces the parameter count and computational complexity of the attention mechanism.

- (3) The small object detection layer addresses the loss of detail in low-resolution small objects caused by the large downsampling factor.

- (4) The decoupled network in the detection head processes classification and bounding box regression in separate branches, improving the detection accuracy and efficiency of both tasks.

- (5) Improving the bounding box loss function and the NMS algorithm alleviates the missed detections caused by densely clustered small objects and mutual occlusion between objects.
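Item (5) also covers the NMS step. The sketch below assumes that "D-NMS" in the results table denotes DIoU-NMS, in which a candidate is suppressed only when its IoU with the kept box, minus the normalized center-distance penalty, reaches the threshold. The function names, the box format (x1, y1, x2, y2), and the example boxes are hypothetical and chosen for illustration.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def diou(a: Box, b: Box) -> float:
    """IoU minus the squared center distance normalized by the enclosing-box diagonal."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    iou = inter / union if union > 0 else 0.0
    d2 = ((a[0] + a[2]) / 2 - (b[0] + b[2]) / 2) ** 2 + ((a[1] + a[3]) / 2 - (b[1] + b[3]) / 2) ** 2
    c2 = (max(a[2], b[2]) - min(a[0], b[0])) ** 2 + (max(a[3], b[3]) - min(a[1], b[1])) ** 2
    return iou - (d2 / c2 if c2 > 0 else 0.0)


def diou_nms(boxes: Sequence[Box], scores: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # A candidate survives only if its DIoU with the kept box is below the threshold.
        order = [i for i in order if diou(boxes[best], boxes[i]) < threshold]
    return keep


boxes = [(0, 0, 10, 10), (1, 0, 11, 10), (4, 0, 14, 10)]
scores = [0.9, 0.8, 0.7]
print(diou_nms(boxes, scores, threshold=0.4))
```

With a threshold of 0.4 the call returns `[0, 2]`. The second box nearly coincides with the first and is suppressed. The third box has a plain IoU of about 0.43 with the first, which standard NMS would suppress at this threshold, but the center-distance penalty lowers its DIoU to about 0.37, so it is kept as a likely separate, adjacent object.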

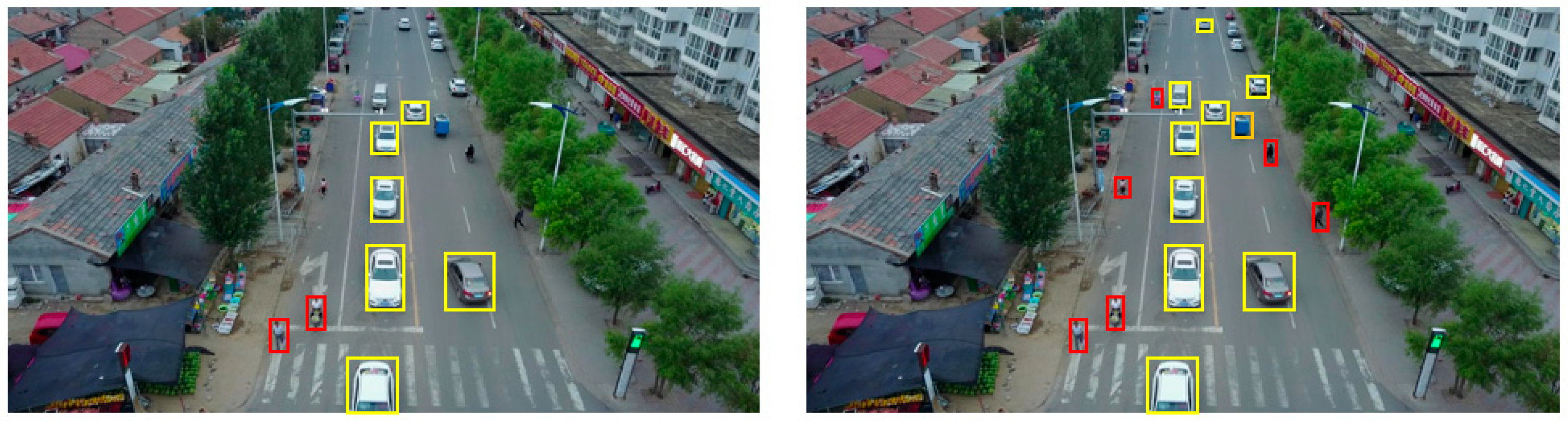

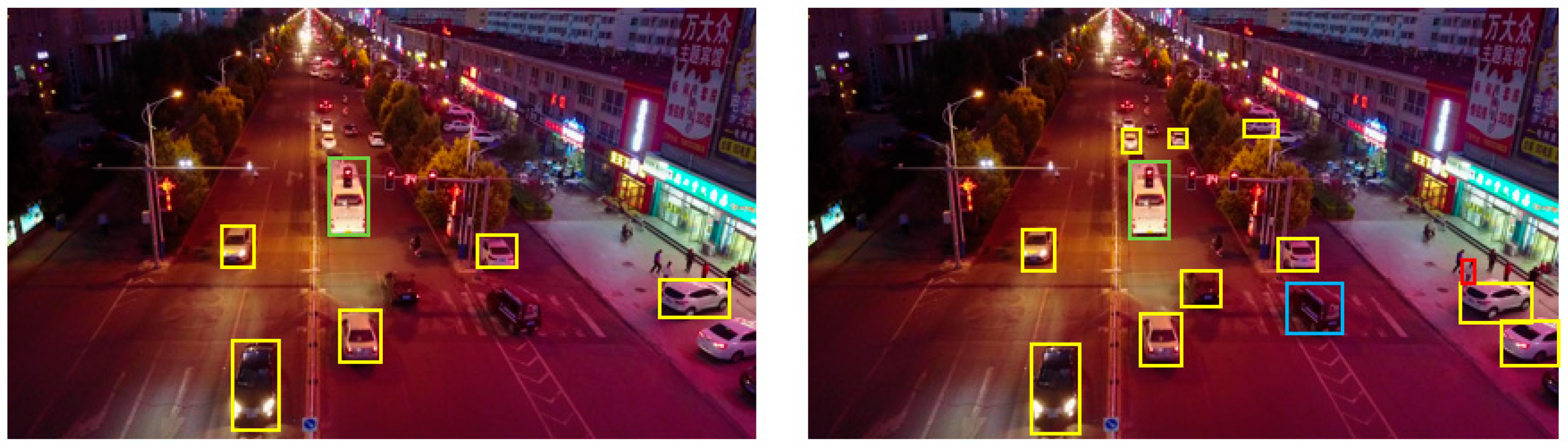

4.4. Method Performance Demonstration

4.5. Comparative Experiments with Similar Methods

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Method | mAP_0.5 (%) | Precision (%) | Recall (%) |
|---|---|---|---|
| YOLOv5 | 27.6 | 38.2 | 29.5 |
| YOLOv5_RFB | 28.8 | 38.1 | 31.3 |

| Method | mAP_0.5 (%) | Precision (%) | Recall (%) |
|---|---|---|---|
| YOLOv5 | 27.6 | 38.2 | 29.5 |
| YOLOv5_CA | 30.1 | 39.9 | 35.1 |

| Method | mAP_0.5 (%) | Precision (%) | Recall (%) |
|---|---|---|---|
| YOLOv5 | 27.6 | 38.2 | 29.5 |
| YOLOv5_4P | 31.0 | 41.2 | 36.6 |

| Method | mAP_0.5 (%) | Precision (%) | Recall (%) |
|---|---|---|---|
| YOLOv5 | 27.6 | 38.2 | 29.5 |
| YOLOv5_Decoupled640 | 32.7 | 42.7 | 39.1 |
| YOLOv5_Decoupled1280 | 35.6 | 46.5 | 42.2 |

| Method | mAP_0.5 (%) | Precision (%) | Recall (%) |
|---|---|---|---|
| YOLOv5 | 27.6 | 38.2 | 29.5 |
| YOLOv5_DIoU | 28.8 | 39.1 | 29.9 |
| YOLOv5_CIoU | 29.4 | 39.0 | 30.2 |
| YOLOv5_CIoU_D-NMS | 31.8 | 40.2 | 37.1 |

| Method | RFB | CA | 4P | Decoupled | Loss | mAP_0.5 (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|---|---|---|---|
| YOLOv5 | | | | | | 27.6 | 38.2 | 29.5 |
| 1 | √ | | | | | 28.8 | 38.1 | 31.3 |
| 2 | √ | √ | | | | 32.2 | 40.3 | 35.9 |
| 3 | √ | √ | √ | | | 35.7 | 42.5 | 39.1 |
| 4 | √ | √ | √ | √ | | 38.9 | 45.6 | 44.4 |
| 5 | √ | √ | √ | √ | √ | 39.7 | 48.2 | 46.9 |
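The ablation table is easier to read as per-step gains. The short script below copies the mAP_0.5 column from the table and computes the increase contributed by each added component. It assumes the check marks accumulate in column order (RFB, then CA, 4P, Decoupled, Loss); the row labels and the helper name `incremental_gains` are illustrative.

```python
# (configuration label, mAP_0.5 in percent), copied from the ablation table
ablation = [
    ("YOLOv5", 27.6),
    ("+ RFB", 28.8),
    ("+ CA", 32.2),
    ("+ 4P", 35.7),
    ("+ Decoupled", 38.9),
    ("+ Loss", 39.7),
]


def incremental_gains(rows):
    """Return (label, gain over the previous row) pairs, rounded to one decimal."""
    return [
        (label, round(score - prev, 1))
        for (_, prev), (label, score) in zip(rows, rows[1:])
    ]


gains = incremental_gains(ablation)
total = round(ablation[-1][1] - ablation[0][1], 1)

for label, gain in gains:
    print(f"{label:<12} {gain:+.1f}")
print(f"{'total':<12} {total:+.1f}")
```

Under that reading, the CA, 4P, and decoupled-head steps each add more than 3 points of mAP_0.5, and the full configuration improves on the baseline by 12.1 points.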

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gao, T.; Wushouer, M.; Tuerhong, G. DMS-YOLOv5: A Decoupled Multi-Scale YOLOv5 Method for Small Object Detection. Appl. Sci. 2023, 13, 6124. https://doi.org/10.3390/app13106124
