Design of a Cargo-Carrying Analysis System for Mountain Orchard Transporters Based on RGB-D Data

Abstract

1. Introduction

2. Materials and Methods

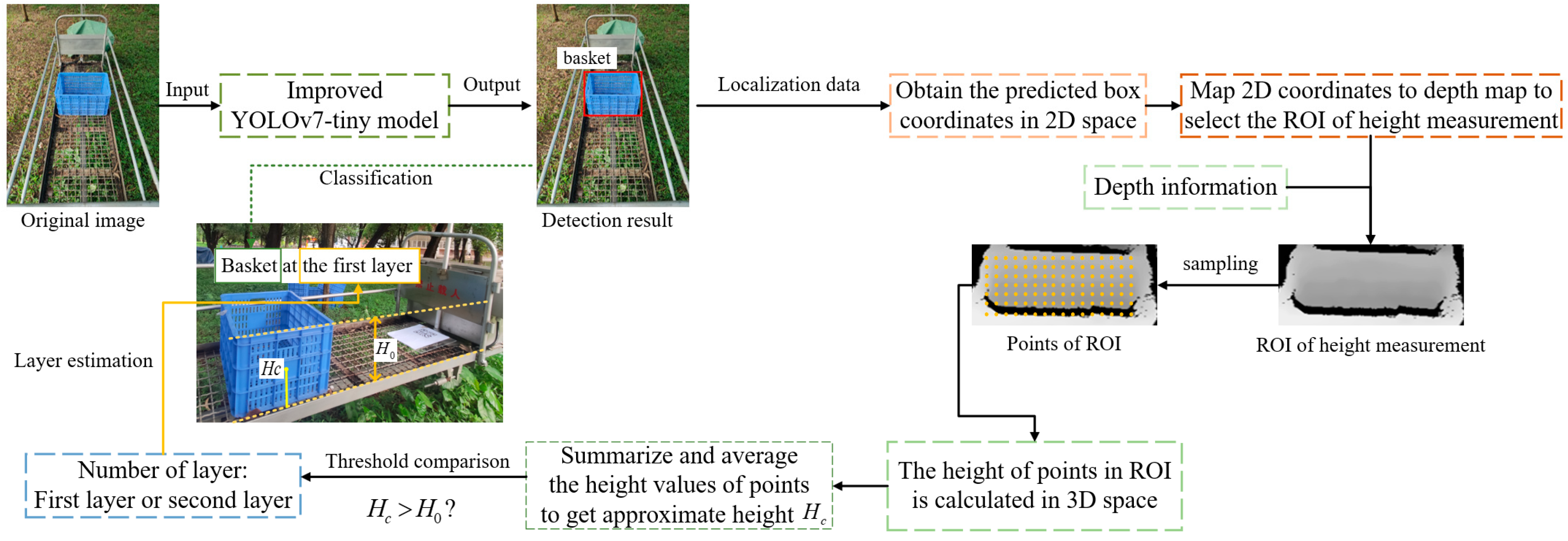

2.1. Overview of the Cargo-Carrying Analysis System

2.2. Acquisition System and Data Construction

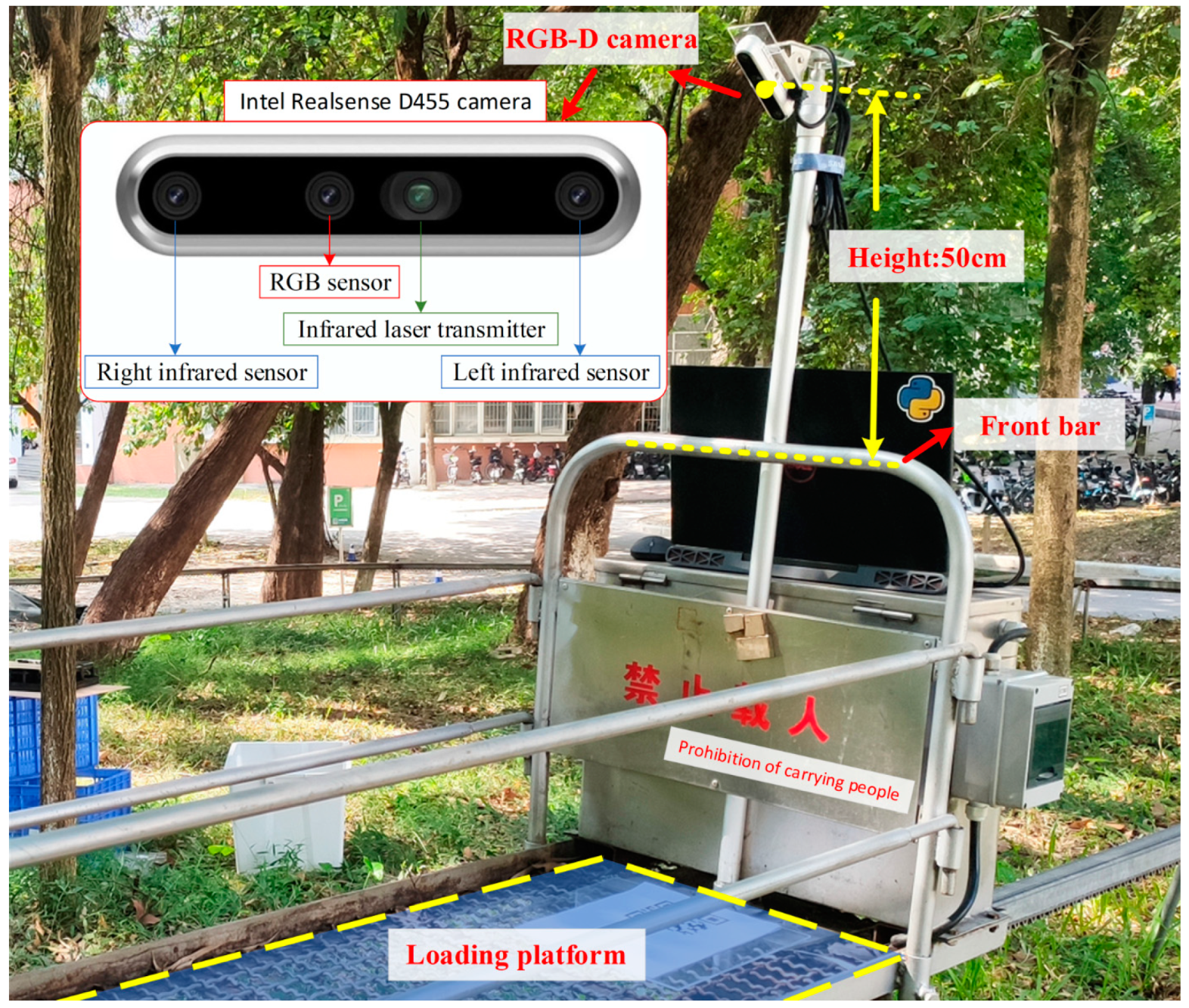

2.2.1. Data Acquisition System and Condition

2.2.2. Construction of the Object Detection Dataset

3. Model Construction and Volume Measurement Strategy and Deployment

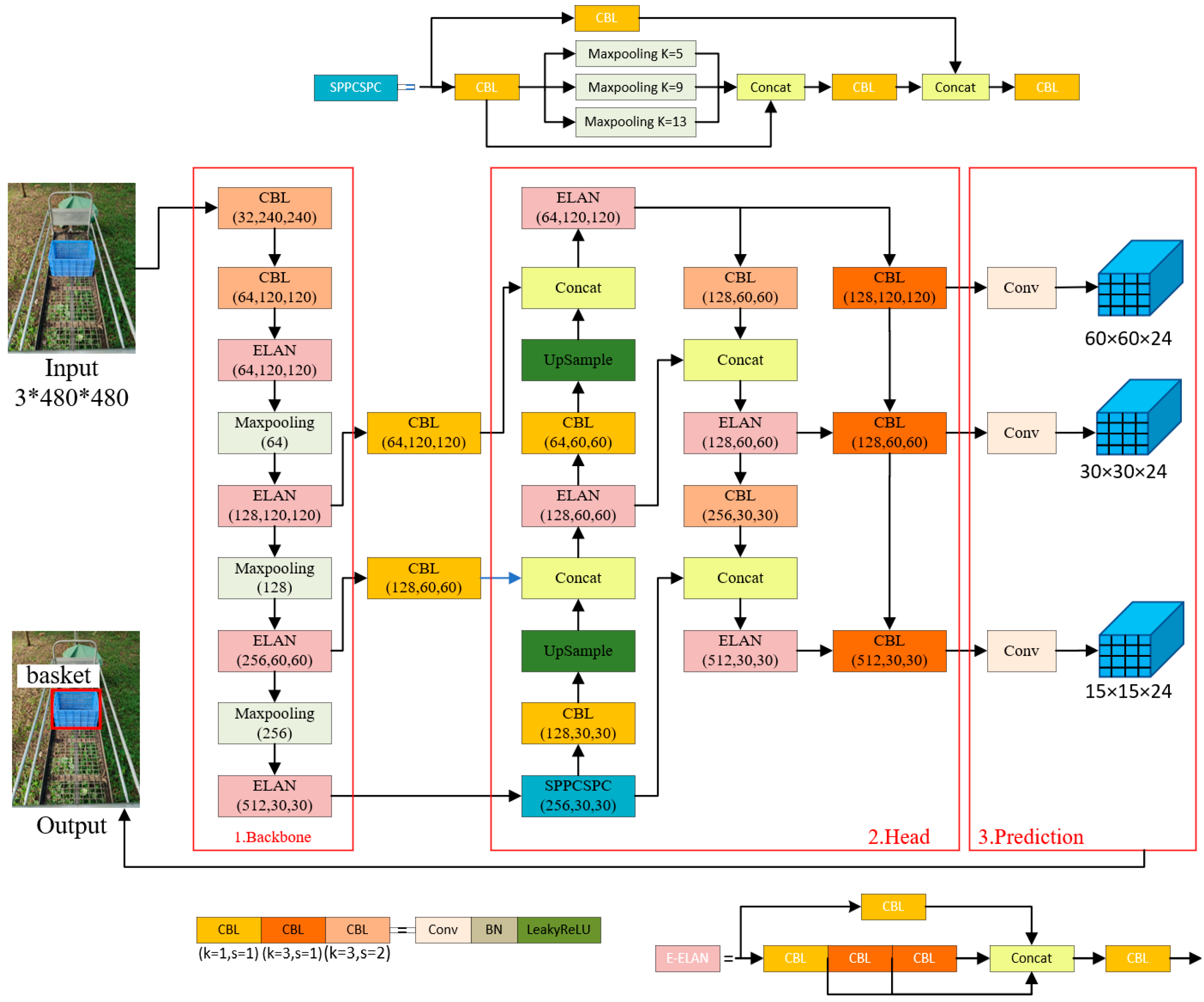

3.1. YOLOv7-Tiny Object Detection Algorithm

3.1.1. Optimization of Loss Function

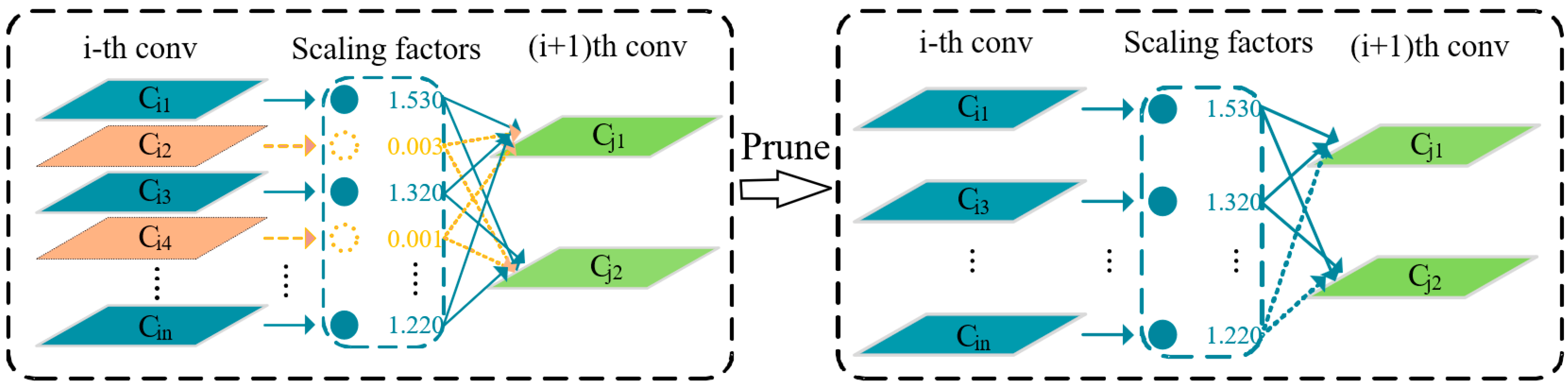

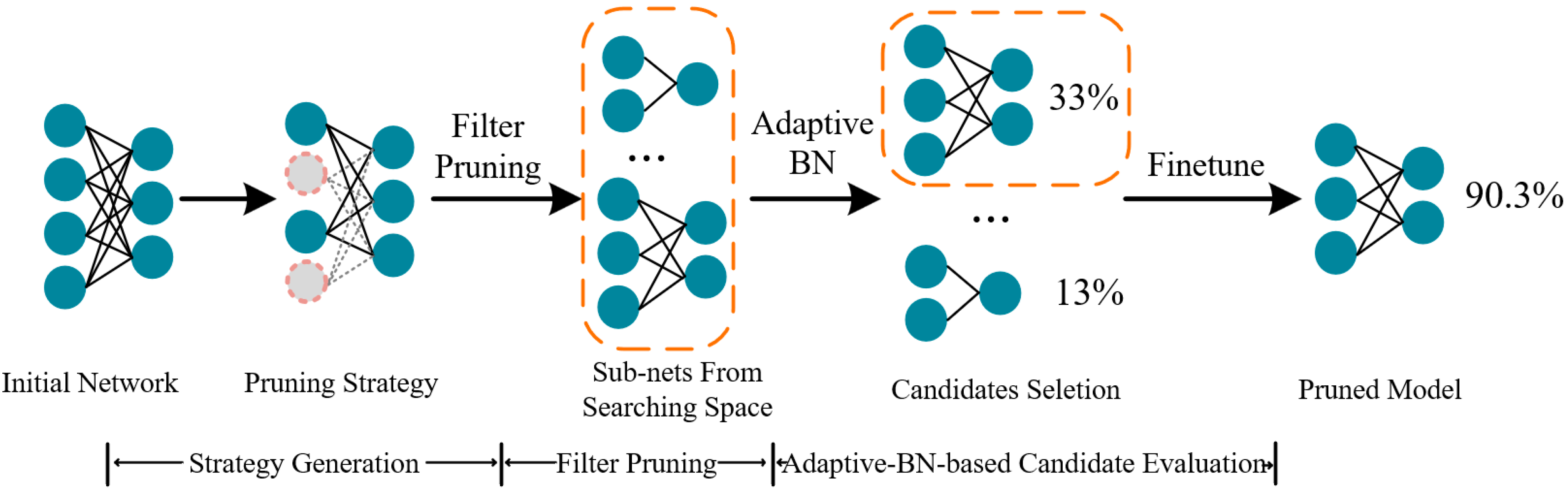

3.1.2. Model Pruning

3.2. Volume Measurement Strategy

- The improved YOLOv7-tiny model was used to obtain the 2D coordinates of each target in the RGB image;

- The ROI for height measurement in the depth map was obtained from the 2D coordinates;

- The depth value and the spatial point height measurement model were combined to obtain the height value of each point in the ROI;

- The height values of the spatial points in the ROI were averaged to obtain the approximate height of the basket;

- The approximate height of the basket was compared to the height threshold to determine the layer in which the basket was located;

- The classification and the number of layers were combined to measure the load volume.
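The steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the function names, the ROI shrink factor, the 400 mm layer threshold, the 50 L basket volume, and the rule that turns class and layer into a volume are all hypothetical, and the height model is passed in as a callable `height_of_point(u, v, depth)` because its formula is not reproduced in this section.

```python
from statistics import mean

# Hypothetical constants: neither value is given in the text.
LAYER_THRESHOLD_MM = 400.0   # height separating layer 1 from layer 2
BASKET_VOLUME_L = 50.0       # nominal volume of one basket, in litres


def roi_from_box(box, shrink=0.25):
    """Shrink a detection box (x1, y1, x2, y2) toward its centre to get the depth ROI."""
    x1, y1, x2, y2 = box
    dx = int((x2 - x1) * shrink)
    dy = int((y2 - y1) * shrink)
    return x1 + dx, y1 + dy, x2 - dx, y2 - dy


def basket_height(depth_map, box, height_of_point):
    """Mean height over the ROI; pixels with zero depth are treated as invalid."""
    x1, y1, x2, y2 = roi_from_box(box)
    heights = [
        height_of_point(u, v, depth_map[v][u])
        for v in range(y1, y2)
        for u in range(x1, x2)
        if depth_map[v][u] > 0
    ]
    return mean(heights) if heights else None


def layer_of(height_mm, threshold_mm=LAYER_THRESHOLD_MM):
    """Layer 1 below the threshold, layer 2 at or above it."""
    return 1 if height_mm < threshold_mm else 2


def load_volume(detections, depth_map, height_of_point):
    """Sum a per-detection volume from class label and layer (assumed rule)."""
    total = 0.0
    for label, box in detections:
        height = basket_height(depth_map, box, height_of_point)
        if height is None:
            continue  # no valid depth in the ROI
        layer = layer_of(height)
        # Assumed rule: baskets below the top one are full; the top one
        # counts only when it is classified as "Fullbasket".
        full_baskets = (layer - 1) + (1 if label == "Fullbasket" else 0)
        total += full_baskets * BASKET_VOLUME_L
    return total
```

Here `detections` is a list of `(label, box)` pairs as a detector might return them, and `depth_map` is indexed as `depth_map[row][column]`. Averaging over a shrunken ROI rather than the full box is a design choice of this sketch, intended to keep background pixels near the box edges out of the mean.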

3.2.1. Construction of 3D Spatial Trailer Model

3.2.2. Spatial Point Height Measurement Model

- To obtain the intrinsic matrix, we calibrated the RGB-D camera using Zhang's method [28];

- We initialized the variables, measured the height from the RGB-D camera to the loading platform, and obtained the 2D pixel coordinates corresponding to the spatial point;

- For coordinate normalization, we normalized the 2D coordinates according to the camera projection model;

- We calculated the angle from the normalized coordinates of the two image points involved;

- We obtained the height value of the spatial point using Formula (11), after determining the two required lengths from the depth map.
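The normalization and angle steps can be illustrated with the standard pinhole camera model. The sketch below is an assumption-laden example rather than the paper's own equations: the intrinsic parameter names `fx`, `fy`, `cx`, `cy` and both function names are chosen here for illustration, lens distortion is ignored, and Formula (11) itself is not reproduced.

```python
import math


def normalize_pixel(u, v, fx, fy, cx, cy):
    """Map pixel (u, v) to normalized image coordinates (pinhole model, no distortion)."""
    return (u - cx) / fx, (v - cy) / fy


def angle_between_rays(p, q):
    """Angle in radians between the camera rays through normalized points p and q."""
    a = (p[0], p[1], 1.0)  # ray direction through p on the plane z = 1
    b = (q[0], q[1], 1.0)  # ray direction through q on the plane z = 1
    dot = sum(i * j for i, j in zip(a, b))
    norms = math.sqrt(sum(i * i for i in a)) * math.sqrt(sum(j * j for j in b))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot / norms)))
```

With intrinsics from a calibration such as Zhang's [28], two pixels would first be passed through `normalize_pixel` and the results handed to `angle_between_rays`. The angle, together with lengths read from the depth map, is the kind of input a geometric height formula would need.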

3.3. Hardware and Deployment

4. Results

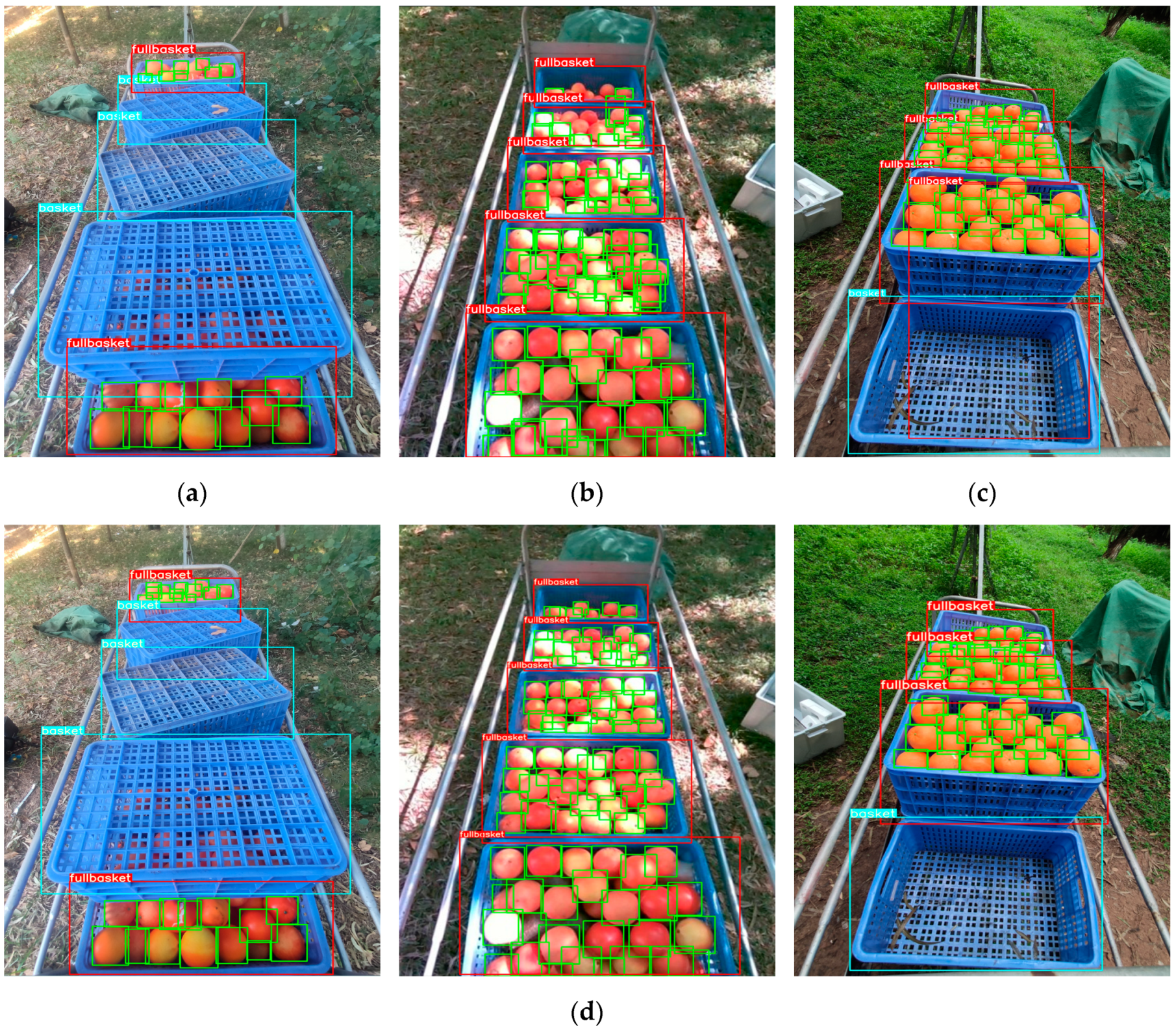

4.1. Training and Evaluation of Object Detection Algorithm

4.1.1. Experimental Training Settings

4.1.2. Model Evaluation

4.2. Ablation Experiment

4.3. Performances of Different Detection Algorithms

4.4. Performance of Height Measurement Model

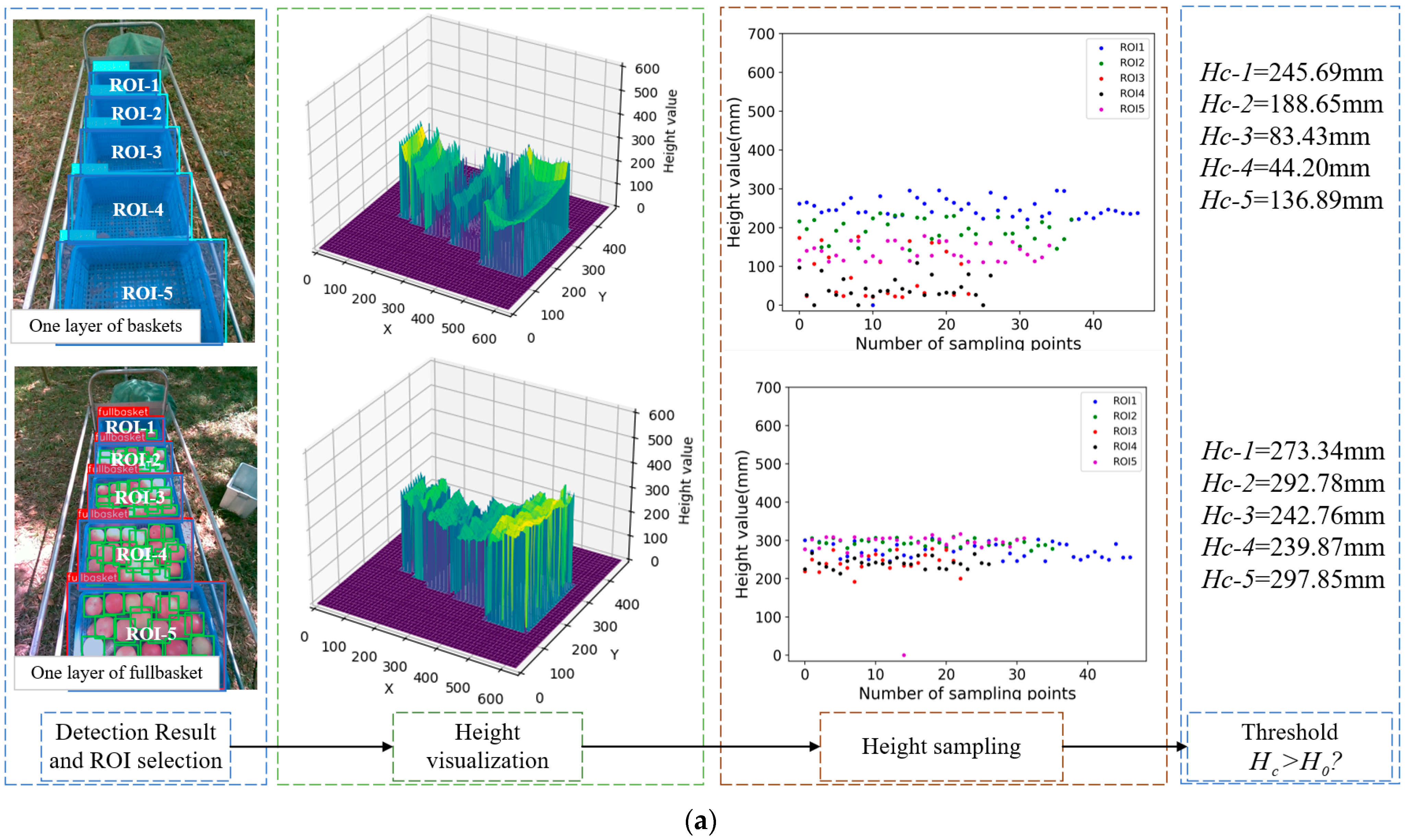

4.5. Experimental Results of Overall Method

5. Discussion

- Methods using infrared, laser, and RFID sensors. Zhao et al. [33] used laser sensors to measure the geometric dimensions of mechanical parts. Mohammed [34] used RFID technology to locate cargo. Kong et al. [14] measured the cargo volumes of train carriages using lidar. These methods have a simple hardware structure and a low cost, but they cannot classify the cargo.

- Methods that use RGB images to obtain the classification and location. For example, Cong et al. [35] used an edge operator to position cargo for a vision robot application. Guan et al. [36] obtained an edge contour using the Canny operator. Jin et al. [12] used Faster-RCNN to detect overlapping cargo. Although these methods can classify cargo, they have difficulty obtaining its height and volume.

- Methods that use multi-sensor information fusion. Although the sensors differ in structure, their output can be summarized as RGB and depth information. Pang et al. [37] detected out-of-gauge railway cargo using vision and lidar technology. Liu [38] obtained the classification and the spatial distance of an object using RGB-D data. Li et al. [39] used stereo vision to obtain classification and localization, and measured the dimensions of logistics materials.

6. Conclusions

- Compared to 3D data, 2D images are easy to acquire and cheap to process. In this study, we applied a lightweight detector to the 2D images to obtain the classification and localization. The 2D detection results then guided the processing of the 3D information, so the load volume was determined using fewer computing resources.

- Focal loss and the EagleEye pruning method were used to improve YOLOv7-tiny, which allowed the model to converge more quickly and sped up detection. The mean average precision (mAP) of the proposed method was 89.8%, which was 8.2, 6.9, and 1.6 percentage points higher than those of Faster-RCNN, RetinaNet-Res50, and YOLOv5s, respectively. The model required 6.7 GFLOPs, and the detection time was 63 ms/img on the embedded platform, a Jetson Nano.

- The spatial-geometry-based height measurement model proposed in this paper had a height measurement error of less than 3 cm at a range of 1.8 m horizontally from the depth camera, which met the requirements for load analysis in mountain orchards.

- The method determined the load volume when the transporter was carrying one or two layers of fruit baskets. The total time required by the method was 75 ms/img. The method provides technical support for achieving the accurate transport of cargo in mountain orchards and is valuable for rationally dispatching transport equipment and for operational safety.
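The conclusions credit focal loss with part of the improvement. As a reminder of what that loss computes, here is a minimal sketch of the binary focal loss as formulated by Lin et al. [22]. The defaults `alpha=0.25` and `gamma=2.0` are the values commonly used in that paper; the settings used in this study, and how the loss was wired into YOLOv7-tiny, are not stated in this section, so the function below is an assumption for illustration only.

```python
import math


def binary_focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-7):
    """Focal loss for one prediction p in (0, 1) with binary label y (0 or 1)."""
    p = min(max(p, eps), 1.0 - eps)          # keep log() finite
    p_t = p if y == 1 else 1.0 - p           # probability assigned to the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha
    # (1 - p_t) ** gamma down-weights examples that are already well classified.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
```

Setting `gamma=0` reduces the expression to alpha-weighted cross-entropy, which makes the effect of the modulating factor easy to compare on a few sample probabilities.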

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Huang, H.; Huang, T.; Li, Z.; Lyu, S.; Hong, T. Design of Citrus Fruit Detection System Based on Mobile Platform and Edge Computer Device. Sensors 2021, 22, 59.

2. Sheng, L.; Song, S.; Hong, T.; Li, Z.; Dai, Q. The Present Situation and Development of Mountainous Orchard Mechanization in Guangdong Province. J. Agric. Mech. Res. 2017, 39, 257–262.

3. Li, Z.; Hong, T.; Sun, T.; Ou, Y.; Luo, Y. Design of battery powered monorail transporter for mountainous orchard. J. Northwest A&F Univ. Nat. Sci. Ed. 2016, 44, 221–227.

4. Li, Z.; Hong, T.; Lu, S.; Wu, W.; Liu, Y. Research Progress of Self-Propelled Electric Monorail Transporters in Mountainous Orchards. Mod. Agric. Equip. 2020, 41, 2–9.

5. Liu, Y.; Hong, T.; Li, Z. Influence of Toothed Rail Parameters on Impact Vibration Meshing of Mountainous Self-Propelled Electric Monorail Transporter. Sensors 2020, 20, 5880.

6. Lu, S.; Wei, Z.; Wu, B.; Li, Z.; Hong, T. Development of on-orbit status sensing system for orchard monorail conveyer. Trans. Chin. Soc. Agric. Eng. 2020, 36, 56–64.

7. Lu, S.; Liang, Y.; Li, Z.; Wang, J.; Wang, W. Orchard monorail conveyer location based on ultra high frequency RFID dual antennas and dual tags. Trans. Chin. Soc. Agric. Eng. 2018, 34, 71–79.

8. Chuang, J.; Li, J.; Hong, T. Design and test of ultrasonic obstacle avoidance system for mountain orchard monorail conveyor. Trans. Chin. Soc. Agric. Eng. 2015, 31, 69–74.

9. Chiaravalli, D.; Palli, G.; Monica, R.; Aleotti, J.; Rizzini, D.L. Integration of a Multi-Camera Vision System and Admittance Control for Robotic Industrial Depalletizing. In Proceedings of the 25th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Vienna, Austria, 8–11 September 2020; pp. 667–674.

10. Zhang, Y.; Wang, Y.; Fu, H.; Shi, J.; Chen, N. Irregular cigarette parcel stacking system coupled with machine vision-based parcel identification. Tob. Sci. Technol. 2019, 52, 105–111.

11. Yang, J.; Wu, S.; Gou, L.; Yu, H.; Lin, C.; Wang, J.; Wang, P.; Li, M.; Li, X. SCD: A Stacked Carton Dataset for Detection and Segmentation. Sensors 2022, 22, 3617.

12. Jin, Q.; Li, T. Object recognition method based on deep learning in storage environment. J. Beijing Inf. Sci. Technol. Univ. 2018, 33, 60–65.

13. Wang, C.; Yuan, Q.; Bai, H.; Li, H.; Zong, W. Lightweight object detection algorithm for warehouse goods. Laser Optoelectron. 2022, 59, 74–80.

14. Kong, D.; Zhang, N.; Huang, Z.; Chen, X.; Shen, Y. Measurement method of volume of freight carriages based on laser radar detection technology. J. Yanshan Univ. 2019, 43, 160–168.

15. Doliotis, P.; McMurrough, C.D.; Criswell, A.; Middleton, M.B.; Rajan, S.T. A 3D Perception-Based Robotic Manipulation System for Automated Truck Unloading. In Proceedings of the IEEE International Conference on Automation Science & Engineering, Fort Worth, TX, USA, 21–25 August 2016.

16. Shengkai, W. Carton Dataset Construction and Research of Its Vision Detection Algorithm. Ph.D. Thesis, Huazhong University of Science and Technology, Wuhan, China, 2021.

17. Wang, C.; Bochkovskiy, A.; Liao, H.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2022, arXiv:2207.02696.

18. Liu, X.; Zhang, B.; Liu, N. CAST-YOLO: An Improved YOLO Based on a Cross-Attention Strategy Transformer for Foggy Weather Adaptive Detection. Appl. Sci. 2023, 13, 1176.

19. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

20. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

21. Wang, Y.; Li, D. Improved YOLO Framework Blood Cell Detection Algorithm. Comput. Eng. Appl. 2022, 58, 191–198.

22. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 99, 2999–3007.

23. Liu, Z.; Li, J.; Shen, Z.; Huang, G.; Yan, S.; Zhang, C. Learning Efficient Convolutional Networks through Network Slimming. arXiv 2017, arXiv:1708.06519.

24. Li, B.; Wu, B.; Su, J.; Wang, G. Eagleeye: Fast Sub-Net Evaluation for Efficient Neural Network Pruning. In Proceedings of the Computer Vision – ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part II 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 639–654.

25. He, J.; Liu, X. Ground Obstacle Detection Technology Based on Fusion of RGB-D and Inertial Sensors. J. Comput.-Aided Des. Comput. Graph. 2022, 34, 254–263.

26. Kneip, L.; Scaramuzza, D.; Siegwart, R. A Novel Parametrization of the Perspective-Three-Point Problem for a Direct Computation of Absolute Camera Position and Orientation. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 2969–2976.

27. Quan, L.; Lan, Z. Linear N-point camera pose determination. IEEE Trans. Pattern Anal. Mach. Intell. 1999, 21, 774–780.

28. Zhang, Z. Flexible Camera Calibration by Viewing a Plane from Unknown Orientations. In Proceedings of the 7th IEEE International Conference on Computer Vision (ICCV’99), Kerkyra, Greece, 20–27 September 1999; pp. 666–673.

29. Xiang, X.; Song, X.; Zheng, Y.; Wang, H.; Fang, Z. Research on embedded face detection based on MobileNet-YOLO. J. Chin. Agric. Mech. 2022, 43, 124–130.

30. Hu, J.; Li, Z.; Huang, H.; Hong, T.; Jiang, S.; Zeng, J. Citrus psyllid detection based on improved YOLOv4-Tiny model. Trans. Chin. Soc. Agric. Eng. 2021, 37, 197–203.

31. Lu, S.; Lu, S.; Li, Z.; Hong, T.; Xue, Y.; Wu, B. Orange recognition method using improved YOLOv3-LITE lightweight neural network. Trans. Chin. Soc. Agric. Eng. 2019, 35, 205–214.

32. Farid, A.; Hussain, F.; Khan, K.; Shahzad, M.; Khan, U.; Mahmood, Z. A Fast and Accurate Real-Time Vehicle Detection Method Using Deep Learning for Unconstrained Environments. Appl. Sci. 2023, 13, 3059.

33. Zhao, Z.; Liu, W.; Liu, L. Geometric dimension measurement of parts based on laser sensor. Las. J. 2018, 39, 55–58.

34. Mohammed, A.M. Modelling and optimization of an RFID-based supply chain network. Ph.D. Thesis, University of Portsmouth, Portsmouth, UK, 2018.

35. Cong, K.; Han, J.; Chang, F. Goods contour detection and positioning by a vision robot. J. Shandong Univ. 2010, 40, 15–18.

36. Guan, X.; Chen, L.; Lyu, Z. Visual Servo Technology Research of Industrial Palletizing Robot. Mach. Des. Res. 2018, 34, 54–56.

37. Pang, T.; He, J. Automatic detection system of railway out of gauge goods based on faster r-cnn. Autom. Instrum. 2021, 8, 72–76.

38. Liu, H. Research on Fast Object Detection and Ranging Algorithm Based on Improved YOLOv4 Model. Master’s Thesis, Zhengzhou University, Zhengzhou, China, 2021.

39. Li, J.; Zhou, F.; Li, Z.; Li, X. Intelligent Geometry Size Measurement System for Logistics Industry. Comput. Sci. 2018, 45, 218–222.

| Model | AP% (Basket) | AP% (Orange) | AP% (Fullbasket) | mAP% | GFLOPs | Speed (ms/img) |
|---|---|---|---|---|---|---|
| YOLOv7-tiny | 97.5 | 76.5 | 92.7 | 88.9 | 13.2 | 119 |
| YOLOv7 + FL | 97.4 | 77.1 | 95.5 | 90.2 | 13.2 | 119 |
| YOLOv7 + FL + Prune | 97.2 | 77.1 | 95.1 | 89.8 | 6.7 | 63 |

| Model | AP% (Basket) | AP% (Orange) | AP% (Fullbasket) | mAP% | Size (MB) | GFLOPs |
|---|---|---|---|---|---|---|
| Faster-RCNN | 88.9 | 72.4 | 83.7 | 81.6 | 165.8 | - |
| RetinaNet-Res50 | 90.7 | 72.8 | 84.3 | 82.9 | 145.8 | 156 |
| YOLOv3-tiny | 95.0 | 44.6 | 89.6 | 76.4 | 17.4 | 12.9 |
| YOLOv5s | 96.4 | 77.1 | 91.3 | 88.2 | 14.4 | 16.4 |
| YOLOv7-tiny | 97.5 | 76.5 | 92.7 | 88.9 | 12.3 | 13.2 |
| Proposed Method | 97.2 | 77.1 | 95.1 | 89.8 | 6.3 | 6.7 |
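The comparison above can be turned into the gaps quoted in the conclusions with a few lines of arithmetic. The short script below only re-derives numbers from the reported table values; the variable and function names are chosen here for illustration.

```python
# mAP (%) values as reported in the comparison table.
reported_map = {
    "Faster-RCNN": 81.6,
    "RetinaNet-Res50": 82.9,
    "YOLOv5s": 88.2,
    "YOLOv7-tiny": 88.9,
    "Proposed Method": 89.8,
}

# Gap of the proposed method over each baseline, in percentage points.
gains = {
    name: round(reported_map["Proposed Method"] - value, 1)
    for name, value in reported_map.items()
    if name != "Proposed Method"
}


def relative_reduction(before, after):
    """Percentage by which a quantity shrinks from `before` to `after`."""
    return (before - after) / before * 100.0


# Pruned model versus the unpruned YOLOv7-tiny baseline.
gflops_cut = relative_reduction(13.2, 6.7)   # about 49%
size_cut = relative_reduction(12.3, 6.3)     # about 49%
```

The gaps are differences between two percentages, so they are percentage points rather than relative improvements. The pruning step roughly halves both the model size and the computation.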

| Group (true height) | Horizontal distance (mm) | Depth value (mm) | Height value (mm) | Height error (mm) |
|---|---|---|---|---|
| Group 1 (H1 = 274 mm) | 200 | 691 | 276.5 | 2.5 |
| Group 1 (H1 = 274 mm) | 500 | 891 | 276.7 | 2.7 |
| Group 1 (H1 = 274 mm) | 800 | 1065 | 278.6 | 4.6 |
| Group 1 (H1 = 274 mm) | 1100 | 1169 | 292.3 | 18.3 |
| Group 1 (H1 = 274 mm) | 1400 | 1379 | 320.2 | 46.2 |
| Group 1 (H1 = 274 mm) | 1800 | 1472 | 289.7 | 15.7 |
| Group 2 (H2 = 536 mm) | 200 | 558 | 534.6 | 1.4 |
| Group 2 (H2 = 536 mm) | 500 | 692 | 528.5 | 7.5 |
| Group 2 (H2 = 536 mm) | 800 | 850 | 556.3 | 20.3 |
| Group 2 (H2 = 536 mm) | 1100 | 1018 | 580.1 | 28.6 |
| Group 2 (H2 = 536 mm) | 1400 | 1167 | 599.2 | 63.2 |
| Group 2 (H2 = 536 mm) | 1800 | 1349 | 552.3 | 16.3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Z.; Zhou, Y.; Zhao, C.; Guo, Y.; Lyu, S.; Chen, J.; Wen, W.; Huang, Y. Design of a Cargo-Carrying Analysis System for Mountain Orchard Transporters Based on RGB-D Data. Appl. Sci. 2023, 13, 6059. https://doi.org/10.3390/app13106059
