Void Detection inside Duct of Prestressed Concrete Bridges Based on Deep Support Vector Data Description

Abstract

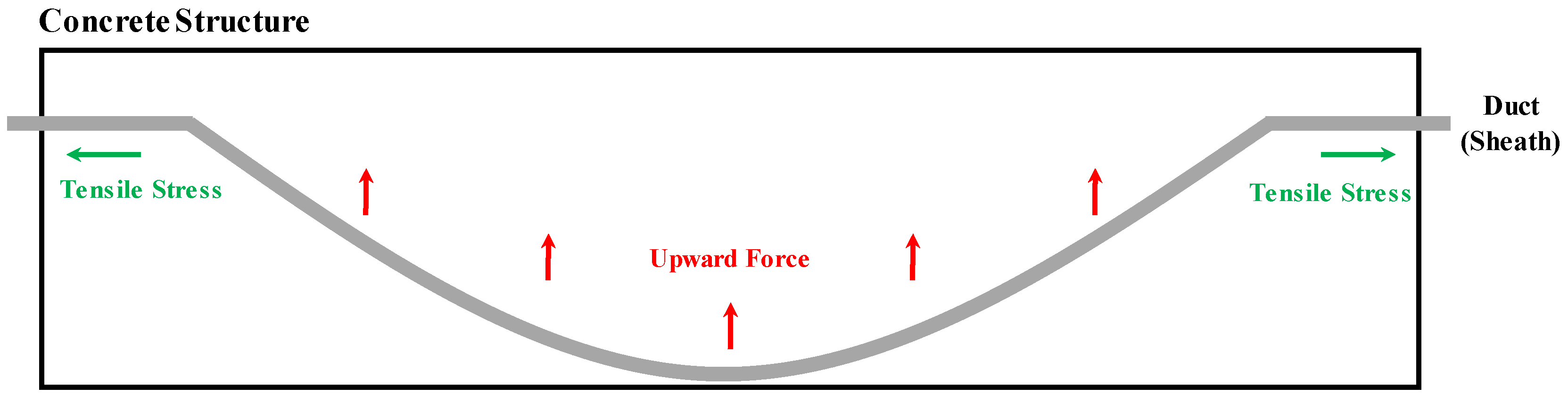

1. Introduction

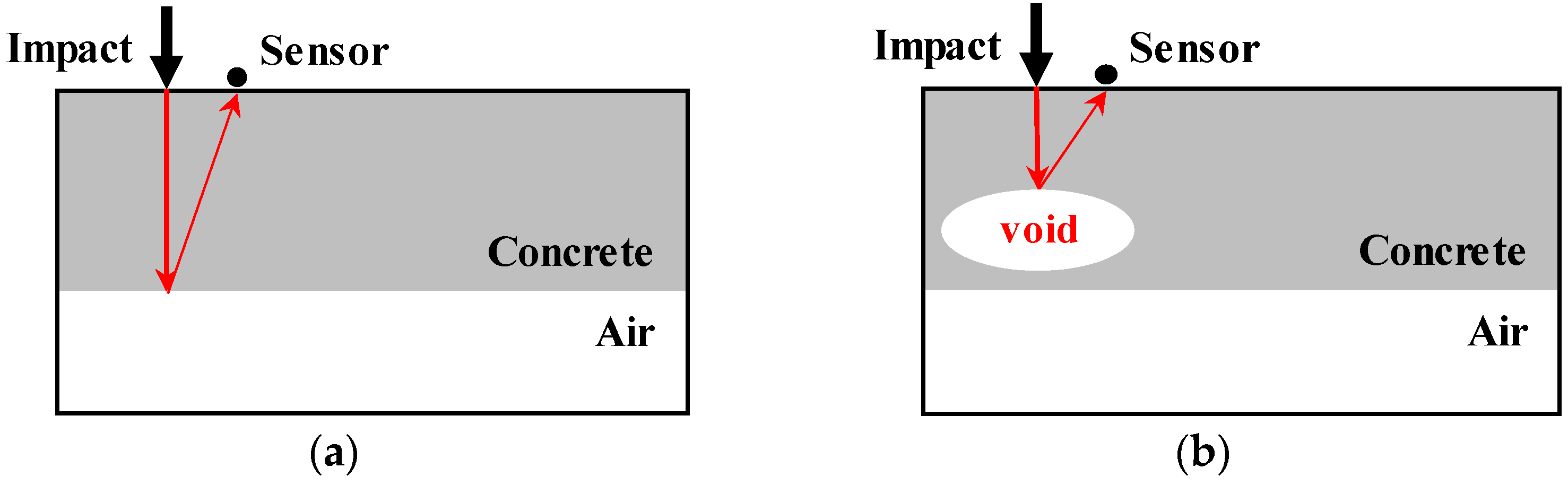

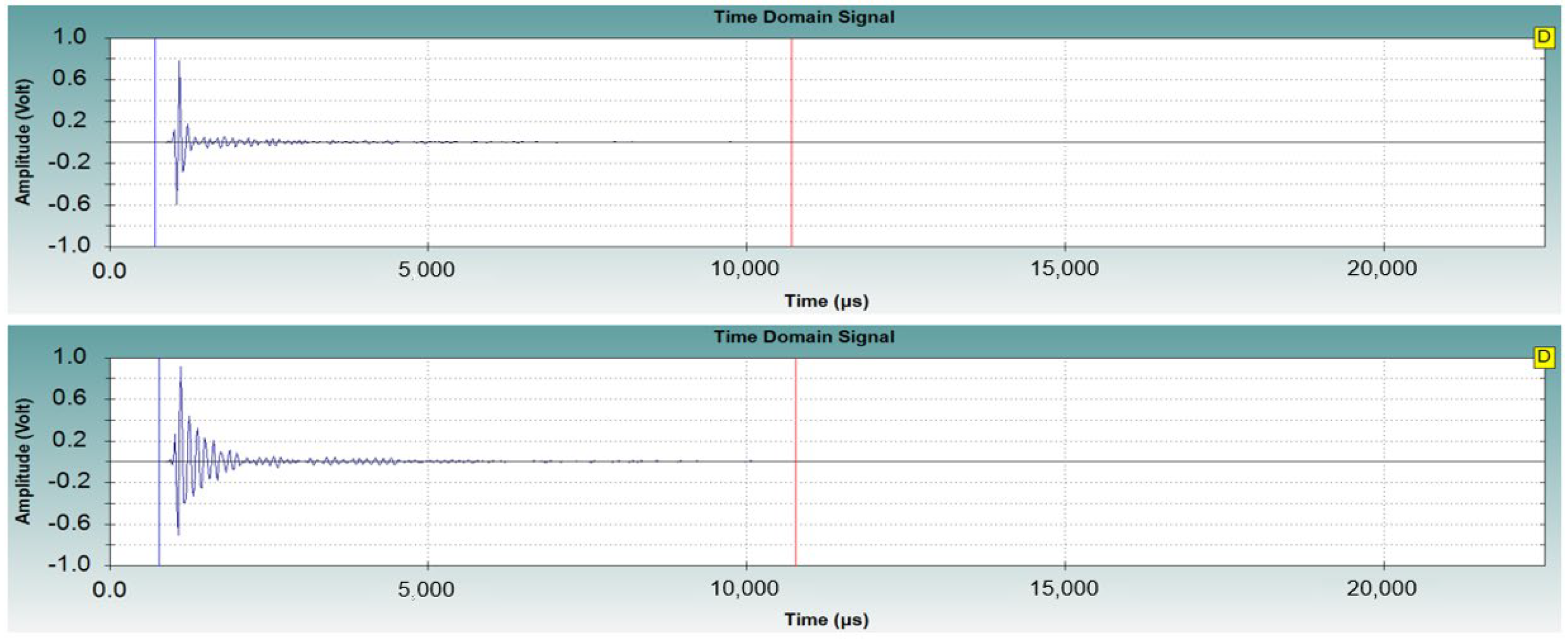

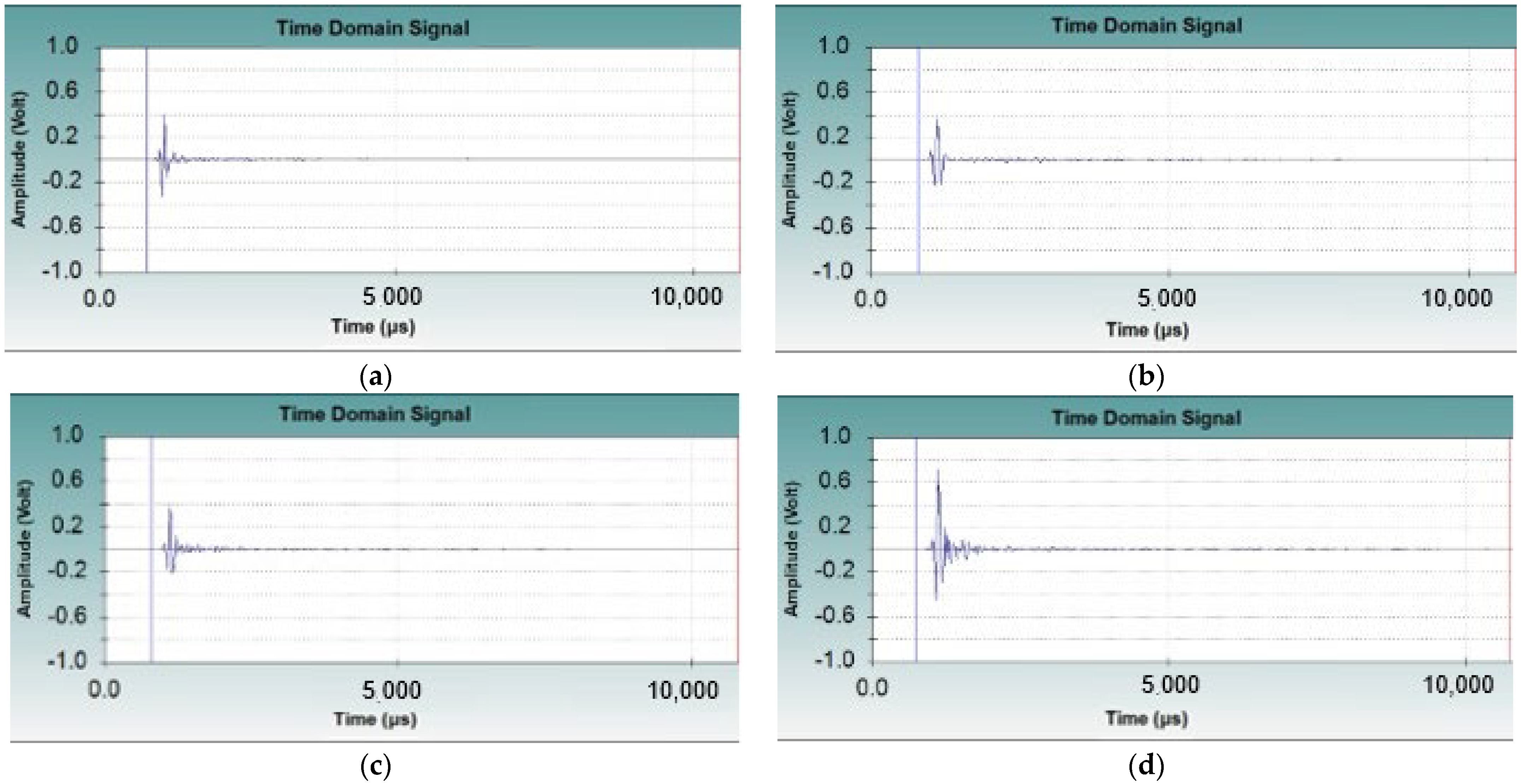

2. Impact–Echo Data

3. Void Detection Based on Deep SVDD

3.1. Standardization

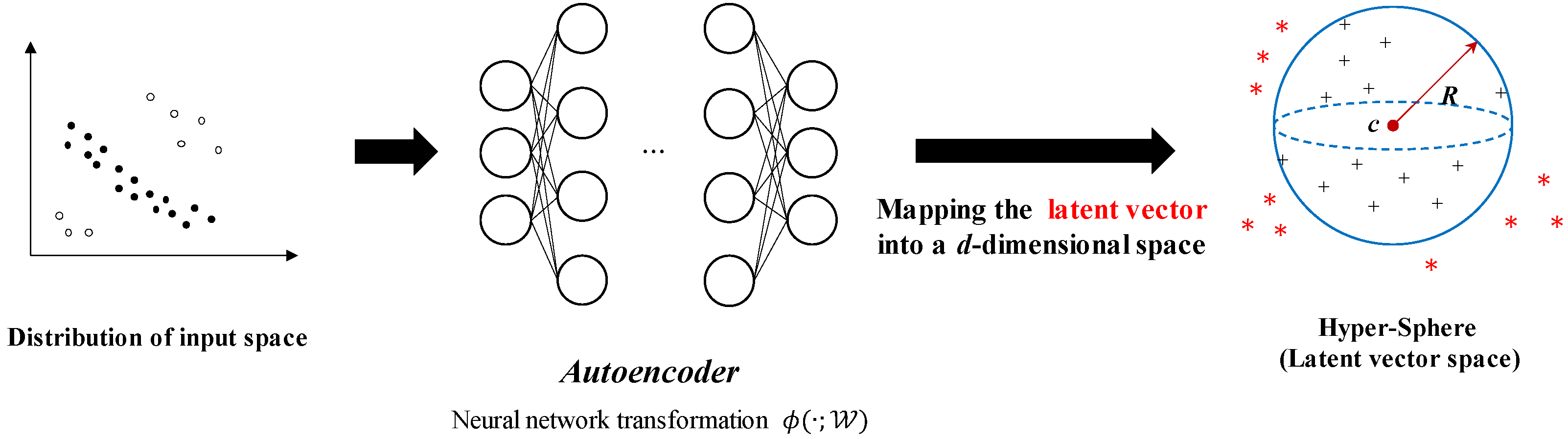

3.2. Deep Support Vector Data Description

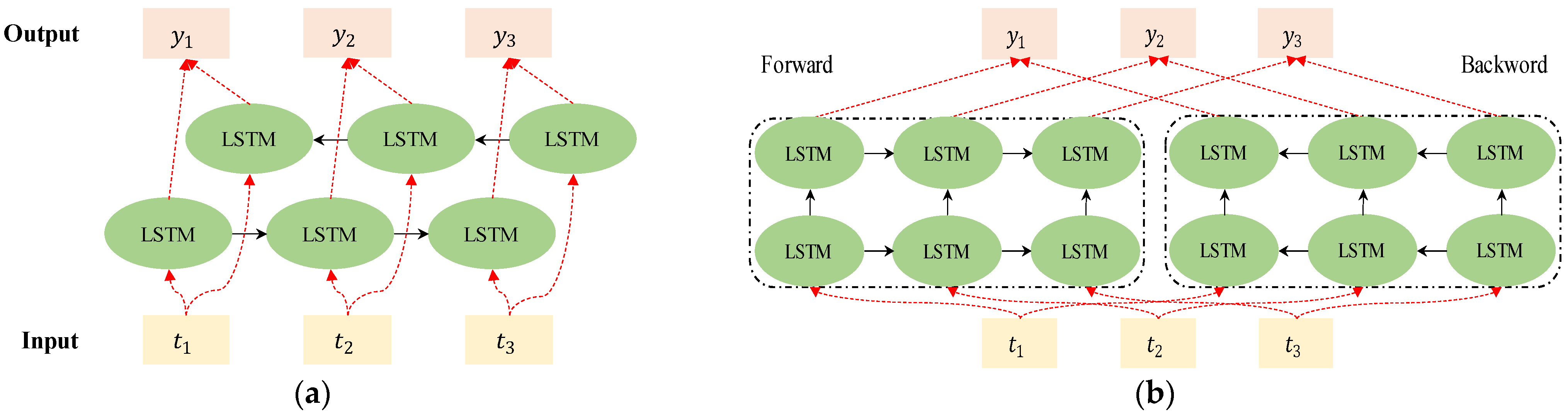

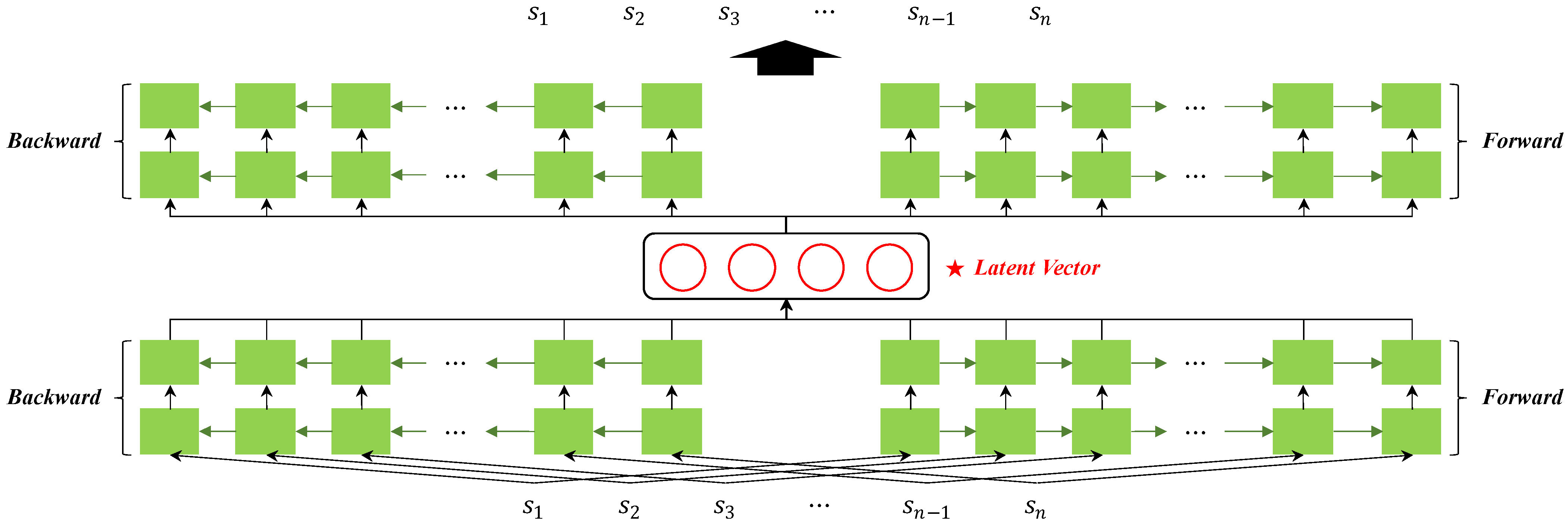

3.3. Autoencoder Based on ELMo

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Guo, T.; Chen, Z.; Liu, T.; Han, D. Time-dependent Reliability of Strengthened PSC Box-girder Bridge Using Phased and Incremental Static Analyses. Eng. Struct. 2016, 117, 358–371.

2. Bazant, Z.P.; Yu, Q.; Li, G.H.; Klein, G.H.; Kristek, V. Excessive Deflections of Record-Span Prestressed Box Girder. Concr. Int. 2010, 32, 44–52.

3. Hertlein, B.H. Stress wave testing of concrete: A 25-year Review and a Peek into the Future. Constr. Build. Mater. 2013, 38, 1240–1245.

4. Wiggenhauser, H. Advanced NDT Methods for the Assessment of Concrete Structures. In Proceedings of the 2nd International Conference on Concrete Repair, Rehabilitation and Retrofitting, Cape Town, South Africa, 24–26 November 2008.

5. McCann, D.M.; Forde, M.C. Review of NDT Methods in the Assessment of Concrete and Masonry Structures. NDT E Int. 2001, 34, 71–84.

6. Hoła, J.; Bień, J.; Schabowicz, K. Non-destructive and Semi-destructive Diagnostics of Concrete Structures in Assessment of their Durability. Bull. Pol. Acad. Sci. Technol. Sci. 2015, 63, 87–96.

7. Azari, H.; Nazarian, S.; Yuan, D. Assessing Sensitivity of Impact Echo and Ultrasonic Surface Waves Methods for Nondestructive Evaluation of Concrete Structures. Constr. Build. Mater. 2014, 71, 384–391.

8. Sansalone, M.; Carino, N.J. Impact-echo Method. Concr. Int. 1988, 10, 38–46.

9. Liang, M.T.; Su, P.J. Detection of the corrosion damage of rebar in concrete using impact-echo method. Cem. Concr. Res. 2001, 31, 1427–1436.

10. Krüger, M.; Grosse, C.U. Impact-Echo-Techniques for crack depth measurement. Sustain. Bridges 2007, 29, 1–9.

11. Chaudhary, M.T.A. Effectiveness of impact echo testing in detecting flaws in prestressed concrete slabs. Constr. Build. Mater. 2013, 47, 753–759.

12. Yeh, P.L.; Liu, P.L. Application of the wavelet transform and the enhanced Fourier spectrum in the impact echo test. NDT E Int. 2008, 41, 382–394.

13. Zhang, R.; Olson, L.D.; Seibi, A.; Helal, A.; Khalil, A.; Rahim, M. Improved impact-echo approach for non-destructive testing and evaluation. In Proceedings of the 3rd WSEAS International Conference on Advances in Sensors, Signals and Materials, Faro, Portugal, 3–5 November 2010.

14. Lin, C.C.; Liu, P.L.; Yeh, P.L. Application of empirical mode decomposition in the impact-echo test. NDT E Int. 2009, 42, 589–598.

15. Huang, N.E.; Shen, Z.; Long, S.R.; Wu, M.C.; Shih, H.H.; Zheng, Q.; Yen, N.C.; Yung, C.C.; Liu, H.H. The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proc. R. Soc. Lond. Ser. A 1998, 454, 903–995.

16. Shokouhi, P.; Gucunski, N.; Maher, A. Time-frequency techniques for the impact echo data analysis and interpretations. In Proceedings of the 9th European NDT Conference, Berlin, Germany, 25–29 September 2006.

17. Spencer, B.F., Jr.; Hoskere, V.; Narazaki, Y. Advances in computer vision-based civil infrastructure inspection and monitoring. Engineering 2019, 5, 199–222.

18. Zhang, L.; Shen, J.; Zhu, B. A research on an improved Unet-based concrete crack detection algorithm. Struct. Health Monit. 2021, 20, 1864–1879.

19. Zhang, J.K.; Yan, W.; Cui, D.M. Concrete condition assessment using impact-echo method and extreme learning machines. Sensors 2016, 16, 447.

20. Huang, G.B.; Zhu, Q.Y.; Siew, C.K. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501.

21. Dorafshan, S.; Azari, H. Deep learning models for bridge deck evaluation using impact echo. Constr. Build. Mater. 2020, 263, 120109.

22. Dorafshan, S.; Azari, H. Evaluation of bridge decks with overlays using impact echo, a deep learning approach. Autom. Constr. 2020, 113, 103133.

23. Lecun, Y.; Bengio, Y. Convolutional networks for images, speech, and time series. In The Handbook of Brain Theory and Neural Networks; Arbib, M.A., Ed.; MIT Press: Cambridge, MA, USA, 1995; p. 3361.

24. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

25. Lin, S.; Meng, D.; Choi, H.; Shams, S.; Azari, H. Laboratory assessment of nine methods for nondestructive evaluation of concrete bridge decks with overlays. Constr. Build. Mater. 2018, 188, 966–982.

26. Oh, B.D.; Choi, H.; Song, H.J.; Kim, J.D.; Park, C.Y.; Kim, Y.S. Detection of Defect Inside Duct Using Recurrent Neural Networks. Sens. Mater. 2020, 32, 171–182.

27. Oh, B.D.; Choi, H.; Kim, Y.J.; Chin, W.J.; Kim, Y.S. Defect Detection of Bridge based on Impact-Echo signals and Long Short-Term Memory. J. KIISE 2021, 48, 988–997. (In Korean)

28. Oh, B.D.; Choi, H.; Kim, Y.J.; Chin, W.J.; Kim, Y.S. Nondestructive Evaluation of Ducts in Prestressed Concrete Bridges Using Heterogeneous Neural Networks and Impact-echo. Sens. Mater. 2022, 34, 121–133.

29. Ruff, L.; Vandermeulen, R.; Goernitz, N.; Deecke, L.; Siddiqui, S.A.; Binder, A.; Müller, E.; Kloft, M. Deep one-class classification. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018.

30. Peters, M.E.; Neumann, M.; Iyyer, M.; Gardner, M.; Clark, C.; Lee, K.; Zettlemoyer, L. Deep contextualized word representations. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, New Orleans, LA, USA, 1–6 June 2018.

31. Yang, S.; Liu, B.; Yang, M.; Li, Y. Long-term development of compressive strength and elastic modulus of concrete. Struct. Eng. Mech. 2018, 66, 263–271.

32. Tufail, M.; Shahzada, K.; Gencturk, B.; Wei, J. Effect of elevated temperature on mechanical properties of limestone, quartzite and granite concrete. Int. J. Concr. Struct. Mater. 2017, 11, 17–28.

33. Bastidas-Arteaga, E. Reliability of reinforced concrete structures subjected to corrosion-fatigue and climate change. Int. J. Concr. Struct. Mater. 2018, 12, 1–13.

34. Tax, D.M.; Duin, R.P. Support vector data description. Mach. Learn. 2004, 54, 45–66.

35. Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

36. Liou, C.Y.; Cheng, W.C.; Liou, J.W.; Liou, D.R. Autoencoder for words. Neurocomputing 2014, 139, 84–96.

| Domain | Feature Type | Number of Features |
|---|---|---|
| Raw IE | 880–2640 | 80 |

| Specimen Type | Normal | Defect |
|---|---|---|
| Specimen-1 | 3200 | 4800 |
| Specimen-2 | 3456 | 7775 |
| Total | 14,390 | |

| Hyper-Parameter | Optimal Value | Candidate Values |
|---|---|---|
| First LSTM output size (Autoencoder) | 8 | 4–10 |
| Second LSTM output size (Autoencoder) | 4 | 4–10 |
| Hidden nodes (Deep SVDD) | 32 | 8–64 |
| Loss function (Deep SVDD) | Hard margin | Soft margin, Hard margin |
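The two candidate loss functions in the table above can be illustrated with a minimal sketch. It assumes that "hard margin" and "soft margin" correspond to the one-class and soft-boundary objectives of Deep SVDD as described by Ruff et al. [29], with the weight-decay term omitted. The function names, the toy embeddings, the center, the radius, and the value of `nu` are all hypothetical and are not taken from the article.

```python
def squared_distance(z, c):
    """Squared Euclidean distance between an embedding z and the center c."""
    return sum((zi - ci) ** 2 for zi, ci in zip(z, c))


def hard_margin_loss(embeddings, center):
    """Mean squared distance to the center (assumed one-class objective)."""
    dists = [squared_distance(z, center) for z in embeddings]
    return sum(dists) / len(dists)


def soft_margin_loss(embeddings, center, radius, nu):
    """R^2 plus the averaged penalty for points outside the sphere, scaled by 1/nu."""
    dists = [squared_distance(z, center) for z in embeddings]
    slack = sum(max(0.0, d - radius ** 2) for d in dists)
    return radius ** 2 + slack / (nu * len(dists))


# Toy 2-D network outputs (hypothetical values, for illustration only).
toy_embeddings = [[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]]
toy_center = [0.0, 0.0]

hard = hard_margin_loss(toy_embeddings, toy_center)
soft = soft_margin_loss(toy_embeddings, toy_center, radius=1.0, nu=0.5)
print(hard, soft)
```

At test time, the squared distance of a sample's embedding from the center can serve as its anomaly score: under this reading, larger distances would indicate a likely void.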

| Model | True Class | Predicted Normal | Predicted Void | Class Acc (%) | Overall Acc (%) |
|---|---|---|---|---|---|
| Oh et al. [26] | Normal | 709 | 8 | 98.88 | 99.47 |
| | Void | 4 | 1526 | 99.74 | |
| Oh et al. [27] | Normal | 685 | 3 | 99.56 | 99.33 |
| | Void | 12 | 1547 | 99.23 | |
| Oh et al. [28] | Normal | 687 | 8 | 98.85 | 99.47 |
| | Void | 4 | 1548 | 99.74 | |

| Model | True Class | Predicted Normal | Predicted Void | Class Acc (%) | Overall Acc (%) |
|---|---|---|---|---|---|
| Oh et al. [26] | Normal | 665 | 2791 | 19.24 | 56.96 |
| | Void | 2043 | 5732 | 73.72 | |
| Oh et al. [27] | Normal | 6 | 3450 | 0.17 | 68.99 |
| | Void | 32 | 7743 | 99.59 | |
| Oh et al. [28] | Normal | 3456 | 0 | 100.0 | 30.77 |
| | Void | 7775 | 0 | 0.0 | |
| Proposed model | Normal | 3456 | 0 | 100.0 | 77.84 |
| | Void | 2489 | 5286 | 67.99 | |
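The accuracy columns in the table above follow directly from the confusion counts: each per-class accuracy is the correctly predicted count divided by that class's row total, and the overall accuracy is the sum of correct predictions divided by all samples. The short sketch below reproduces two rows of the table; the function name `confusion_accuracies` is a hypothetical helper, not something defined in the article.

```python
def confusion_accuracies(normal_as_normal, normal_as_void,
                         void_as_normal, void_as_void):
    """Return (normal acc, void acc, overall acc) in percent, rounded to 2 decimals."""
    n_normal = normal_as_normal + normal_as_void   # all truly normal samples
    n_void = void_as_normal + void_as_void         # all truly void samples
    normal_acc = 100.0 * normal_as_normal / n_normal
    void_acc = 100.0 * void_as_void / n_void
    overall = 100.0 * (normal_as_normal + void_as_void) / (n_normal + n_void)
    return round(normal_acc, 2), round(void_acc, 2), round(overall, 2)


# Counts copied from the "Proposed model" and "Oh et al. [26]" rows of the table.
proposed = confusion_accuracies(3456, 0, 2489, 5286)
baseline_26 = confusion_accuracies(665, 2791, 2043, 5732)
print(proposed)     # (100.0, 67.99, 77.84)
print(baseline_26)  # (19.24, 73.72, 56.96)
```

Reading the rows this way also shows why overall accuracy alone can mislead on this imbalanced set: a model that labels every sample as normal still reaches 30.77% overall while detecting no voids.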

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Oh, B.-D.; Choi, H.; Chin, W.-J.; Park, C.-Y.; Kim, Y.-S. Void Detection inside Duct of Prestressed Concrete Bridges Based on Deep Support Vector Data Description. Appl. Sci. 2023, 13, 5981. https://doi.org/10.3390/app13105981
