Abstract

Business Intelligence and Analytics (BIA) systems play an essential role in organizations, providing actionable insights that enable business users to make more informed, data-driven decisions. However, many Higher Education (HE) institutions lack accessible and usable models to guide them through the incremental development of BIA solutions and realize the full potential value of BIA. The situation is becoming ever more acute as HE operates today in a complex and dynamic environment brought about by globalization and the rapid development of information technologies. This paper proposes a domain-specific BIA maturity model (MM) for HE: the HE-BIA Maturity Model. Following a design science approach, this paper details the design, development, and evaluation of two artifacts: the MM and the maturity assessment method. The evaluation phase comprised three case studies with universities from different countries and two workshops with practitioners from more than ten countries. HE institutions reported that the assessment with the HE-BIA model (i) was useful and adequate for their needs and (ii) contributed to a better understanding of the current status of their BIA landscape, making it explicit that a BIA program is an organizational development as well as a technology endeavor.

1. Introduction

Business Intelligence and Analytics (BIA) systems play an essential role in organizations, providing actionable insights that enable business users to make more informed, data-driven decisions [1,2]. Conceptually, business intelligence (BI) systems combine architectures, databases (or data warehouses), analytical tools, and applications to provide managerial decision support [3,4]. The goal of BI is to provide the right information, to the right business users, at the right time and with the right context.

Traditionally, the BI component was linked to the data exploration layer, also called the BI applications layer [5]. Nowadays, BI is seen as a comprehensive concept, i.e., the complete end-to-end solution, including the methods and processes that enable data collection and transformation into actionable insights used for decision making. The range and sophistication of BI methods and techniques have evolved through the years. Due to the growing emphasis on analytics and big data, the term business intelligence and analytics (BIA) has consistently been used to comprehensively and more accurately describe contemporary data-driven decision support systems [2]. The use of artificial intelligence (AI) techniques has led to a new generation of AI-enabled BI tools [6], enabling prescriptive analysis, in addition to the usual descriptive and predictive analysis.

Just as the term BI has evolved over the years, BI development inside an organization typically progresses iteratively. This development path can be challenging, and several factors need to be considered to ensure the success of this type of system. The literature reports several studies on critical success factors and on the BI capabilities that organizations should seek to achieve in order to leverage the true value and impact of BIA [7,8,9]. An alternative approach to reflecting on the critical success aspects of BIA projects is to consider a maturity model. By design, maturity models (MMs) are iterative and describe a progressive path [10], in which an organization starts at a basic or initial stage of maturity and progresses towards a more mature state. Maturity models are defined by a set of dimensions and a sequence of levels (or stages) mapping the progression path. The selection of dimensions is the foundation of MM design. Typically, these models are used as self-assessment tools to identify the strengths and weaknesses of certain areas in an organization. In other words, an MM enables an assessment of the current maturity level in each dimension, as well as a reflection on the desired maturity level to be achieved in the future. These models are instrumental in defining the AS-IS and TO-BE views of an organization, using the maturity dimensions as key assessment areas.
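To make the AS-IS/TO-BE idea concrete, the following minimal Python sketch records a current and a desired level for each dimension and ranks the dimensions by the size of the gap between them. It assumes a 1 to 5 integer scale (the scale the HE-BIA model adopts later in this paper) and uses hypothetical dimension names; the gap ranking is our own illustration, not an assessment procedure prescribed by any of the cited models.

```python
from __future__ import annotations

from dataclasses import dataclass

MIN_LEVEL, MAX_LEVEL = 1, 5  # assumed five-level scale


@dataclass(frozen=True)
class DimensionAssessment:
    """Current (AS-IS) and desired (TO-BE) maturity level for one dimension."""
    dimension: str
    as_is: int
    to_be: int

    def __post_init__(self) -> None:
        # Reject levels outside the assumed scale.
        for level in (self.as_is, self.to_be):
            if not MIN_LEVEL <= level <= MAX_LEVEL:
                raise ValueError(f"level {level} is outside {MIN_LEVEL}..{MAX_LEVEL}")

    @property
    def gap(self) -> int:
        """Levels still to climb; 0 when the target is already met or exceeded."""
        return max(self.to_be - self.as_is, 0)


def improvement_priorities(assessments: list[DimensionAssessment]) -> list[tuple[str, int]]:
    """Dimensions with a positive gap, largest gap first (ties broken by name)."""
    gaps = [(a.dimension, a.gap) for a in assessments if a.gap > 0]
    return sorted(gaps, key=lambda item: (-item[1], item[0]))


# Hypothetical dimensions and levels, for illustration only.
results = [
    DimensionAssessment("dimension A", as_is=2, to_be=4),
    DimensionAssessment("dimension B", as_is=3, to_be=3),
    DimensionAssessment("dimension C", as_is=1, to_be=2),
]
print(improvement_priorities(results))  # [('dimension A', 2), ('dimension C', 1)]
```

Dimensions whose TO-BE level is already reached drop out of the list, so what remains reads as a candidate improvement roadmap ordered by distance to the target.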

MMs can be generic or domain-specific. A generic model can be used across different industries, enabling benchmarking. However, this approach tends to be complex, with a large number of assessment questions and terminology that does not closely match the vocabulary and definitions of a specific domain. In this paper, we focus on Higher Education (HE). In this sector, maturity models have been used to assess several dimensions of HE institutions (HEIs), such as information technology (IT), process management, online learning, and learning analytics [11,12]. Several BI-related maturity models exist in the literature [10], originating from both academia and practice. These maturity models can be generic, such as [13,14,15], or domain-specific, such as [16] for higher education or [17] for healthcare.

A previous study reported that the use of a generic BI maturity model made it difficult to correctly assess the BI maturity level of initiatives in different European HEIs [18]. The main reasons were a lack of understanding of key BI concepts and the difficulty of locating, in each institution, the right set of experts who could correctly and knowledgeably answer the many diverse questions of the MM. This result led to the decision, in 2019, to develop a new maturity model specific to the assessment of BIA systems in HE. The new MM is the outcome of a research project conducted by two BIA professors in collaboration with the BI Special Interest Group (BI SIG) of EUNIS (the European University Information Systems organization), a non-profit organization aiming to develop the IT landscape of HEIs through collaboration and networking [19]. The end goal of this project is to conduct a European-level survey on the maturity level of BIA systems in Higher Education. The previous study conducted by the EUNIS BI SIG was inconclusive [18]. Therefore, this research project was launched with the purpose of designing a new MM, driven by existing knowledge published in the literature and reflecting the needs and vocabulary of the HE practitioners represented in the EUNIS BI SIG. Specifically, five requirements frame the research design of this project and motivated the decision to design a new BI maturity model rather than use an existing one:

- (R1): The model should enable each HEI to conduct a self-assessment exercise;

- (R2): It should be a domain-specific model that is easy to understand and uses terminology relevant to HE;

- (R3): The model should use a lean approach, i.e., enabling a high-level assessment that can be achieved with few resources (people and time) as opposed to providing an extensive list of questions;

- (R4): The model should capture new analytical aspects, such as the use of AI, Big Data, the Internet of Things, and 5G, which will become increasingly relevant on the campus of the future. Many MMs commonly cited in the literature are now outdated, considering the complexity and novelty of current BIA solutions;

- (R5): The model should be designed following a research methodology in order to ensure a scientific and rigorous approach.

This paper presents version 2.0 of the HE-BIA maturity model, which enables Higher Education Institutions to perform a lean self-assessment of their BIA solutions. The remainder of this paper is structured as follows. We start by discussing related work on existing BIA maturity models, followed by a description of the research design. The next section presents a step-by-step description of the design science research approach. Then, we present the research artifacts: the HE-BIA maturity model v2.0 and the assessment method. In the following section, we discuss the evaluation phase and the feedback received. The final section presents conclusions and avenues for future work.

2. Related Work

Maturity models are an established means of measuring the strengths and weaknesses of BI initiatives. These MMs consist of multiple archetypal levels of maturity in a certain domain and can be used for organizational assessment and development. In the case of BI, many MMs have been proposed by both academia and industry (practice/consulting), and some of them have been in use for almost two decades. Table 1 presents an overview of the existing BI MMs that were critically analyzed in this study, selected according to two criteria: the credibility of the proponent and the availability of documentation. The listed models can be applied to any industry, except the last two [16,17], which were developed for higher education and healthcare, respectively.

Table 1.

Comparative analysis of BIA maturity models.

A comparative analysis was performed on the selected maturity models, using the following properties of a BI maturity model as reference: number of dimensions and levels, origin (academia or industry), and evaluation type. Dimensions are specific capabilities, processes, or objects that make up the field of study. Levels represent the states of maturity in a given dimension; each level has a designation and a detailed description. The evaluation type refers to the method applied, which can be either a self-assessment exercise (e.g., using a questionnaire) or a third-party assisted exercise (with consultants or experts).

An analysis of Table 1 reveals the following for the sample of analyzed models: (1) they mostly originate from industry/practice and typically use a 5-level scale; (2) the number of dimensions varies widely; and (3) the predominant type of evaluation is self-assessment using a questionnaire, although several models require third-party assistance.

The work of [17] was instrumental in the design of the HE-BIA maturity model, which is detailed later in this paper. The ISMETT hospital BI MM differs from the other selected models. First, it was designed by a research task force comprising both researchers and practitioners knowledgeable about BI in healthcare. Second, its number of dimensions is substantially higher than usual: 23 dimensions in total, clustered in four areas (functional, technological, diffusional, and organizational). This comprehensive set of dimensions was considered “to form a model that is enough detailed and simple to suggest effective improvement paths to be pursued by healthcare organizations” [17] (p. 87). Although compliant with requirement R1, the ISMETT hospital BI MM was still overly complex for our purposes, failing to comply with requirement R3. Nonetheless, as a domain-specific model for healthcare, it inspired our study by providing examples of how to adapt terminology to a specific domain, in our case higher education (requirement R2).

Models number 8 and 9 were particularly important in determining which new analytical aspects of modern BIA solutions (requirement R4) should be included in the new MM. In 2014, TDWI proposed an MM for analytics [24] (model 8), acknowledging that many organizations had moved beyond traditional BI systems and were implementing advanced analytics projects, and that self-service BI had become accessible to more users. In this model, TDWI defines analytics as an overarching term including BI and advanced analytics. Model 8 proposes an online assessment questionnaire with 35 questions across five dimensions of analytics maturity: Organization, Infrastructure, Data management, Analytics, and Governance. The Modern DW MM [25] (model 9), proposed by TDWI in 2018, distinguishes new DW requirements from traditional DW requirements (related to the management and reporting of structured data). The new requirements addressed by the model include support for real-time or streaming data, support for multi-structured data and advanced analytics, and a services architecture. This model uses 50 questions across five main dimensions: Data diversity, Infrastructure agility, Analytics support, Sharing and collaboration, and Security and governance. These main dimensions are further divided into 20 sub-dimensions.

Finally, model number 10 [16] is the Institutional Intelligence White Book maturity model, also known as the OCU model, developed by the Oficina de Cooperación Universitaria (OCU) in Spain, in collaboration with Jisc in the UK, two universities from the US, and one university from Germany. This model complies with requirements R1 (self-assessment) and R2 (domain-specific) and aims to serve as a general tool to assess the maturity of the Institutional Intelligence capability in HEIs. The assessment is performed across nine dimensions and five levels, using an Excel spreadsheet. This model was deemed complex to use and outdated, thus failing to comply with requirements R3 and R4.

3. Research Design

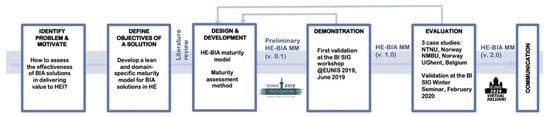

This project followed a design science approach. Design science research (DSR), in the context of Information Systems, aims at designing new and better solutions to existing problems. Figure 1 displays the research design followed, which is an adaptation of the DSR process model [27]. The process starts with the identification of the research problem, from which the objectives of the solution are inferred, given knowledge of what is possible and feasible. The next activity is the design and development of the set of artifacts that compose the solution to the problem. Artifacts can be constructs (i.e., concept vocabulary), models, methods, or instantiations [28]. The fourth activity is the demonstration of the use of the artifact to solve one or more instances of the problem, using, for instance, a case study or simulation. The fifth activity, evaluation, observes and measures the utility and effectiveness of the artifacts as a solution to the identified problem. The sixth and final activity of the DSR process model is communication, in which results are shared, emphasizing the utility, novelty, and rigor of the artifacts’ design. In this project, two iterations of the design and development activity were performed, represented by the feedback loops in Figure 1.

Figure 1.

The HE-BIA maturity model design science research design.

4. Design Science Research Step-by-Step

4.1. Problem and Motivation

HEIs operate today in a very complex and dynamic environment, in which globalization and the rapid development of information technologies have led to strong competition. A considerable amount of data is involved in the daily operations of HE. To illustrate, educational institutions need to handle data related to enrollment, how students perform in their courses, the grades they receive, and their outcomes once they graduate and begin seeking employment. Besides teaching and learning, research and innovation are also key strategic areas for many HEIs. A large number of processes need to be supported in the daily operation and strategic development of HEIs [29]. Increasingly, BI is employed to support these activities by facilitating fast and evidence-based decision-making throughout the HEI. A recent Educause report [30] revealed the top 10 strategic technologies that HEIs in the US are investing in: APIs; institutional support for accessibility technologies; blended data center; mobile devices for teaching and learning; open educational resources; technologies for improving student data analysis; security analytics; integrated student success planning and advising systems; mobile apps for enterprise applications; and predictive analytics for student success. Most of these technological trends rely on BI and analytics solutions. In the US, Higher Education Data Warehousing (HEDW) is a network of practitioners in knowledge management and decision support. This mature community has, since 2013, brought together specialists from IT, institutional research, and functional areas in HEIs. The group’s name reflects its initial focus on data warehousing (DW); however, it is a BIA community of practice. At the European level, the EUNIS Special Interest Group on Business Intelligence has also brought together BIA practitioners and researchers in HE since 2012. BIA is undoubtedly a strategic technological need in higher education worldwide.
In Europe, most HEIs are not directly business-driven; however, there is a clear need to increase the efficiency of public investment in higher education as an economic good [31]. Apart from economic and public accountability goals, HEIs also compete for the most qualified students and the best faculty to excel in the core areas of teaching and research. Worldwide, many universities and colleges have implemented BIA solutions in their operations, with varying degrees of success. BI has been applied in HEIs, for instance, to ensure compliance [32], to analyze student learning [33,34], in current research information systems (CRIS) [35], and to monitor strategic goals [36]. However, for the most part, BI investment in the education sector still lags behind the industrial sector and larger enterprises, partially due to budget limitations [37]. The benefits of BIA systems [38] are attractive to HEIs, whether in the form of increased user autonomy and flexibility in creating reports, quick and simple analyses, improved decision support and operational efficiency, or a range of new analytical functions. Notwithstanding, few studies have examined, at a holistic level, how to assess the effectiveness of BIA solutions in delivering value to HEIs. The present study aims to address this gap.

4.2. Objectives of the Solution

The objective of the project can be specified at the micro (HEI) and macro (BIA community) levels. At the micro level, the objective is to provide HEIs with a maturity model specific to higher education, as a general tool to assess the maturity level of their BIA capability. Specifically, the use of the MM should enable HEIs to:

- Identify their current maturity level;

- Set a desired maturity level for the BIA initiative according to the university's strategic goals;

- Identify the dimensions of potential improvement, and use them to internally devise an adequate path for improvement (roadmap) to reach the desired maturity level;

- Use the model as a strategic tool to raise awareness among university management of the need to invest in BIA.

At the macro level, the goal is to give the European higher education BIA community a shared basis for understanding the critical success factors of BIA deployment, with an inspirational mindset looking towards the campus of the future. An expected outcome, from the EUNIS BI SIG perspective, is to be able to run, in the near future, a more informed survey on the maturity of BIA across European HEIs. Preferably, such a survey would attract wider participation than a previous survey carried out in 2013, which targeted mostly public HEIs (92%) in nine countries (France, Finland, Germany, Ireland, Italy, Portugal, Spain, Sweden, and the United Kingdom) and had 66 respondents [18]. Most importantly, the new survey should be based on a more mature and general understanding of the key components of a BIA solution. This work also aims to train IT and functional/business users in HE, at the European level, on the key concepts of BIA. This way, participants can perform a more informed self-assessment and benchmark their BIA maturity level against other HEIs at a given point in time and over time. The research question that frames this project is the following:

Is a lean and HE-specific MM a useful and relevant instrument for assessing the current status of deployed BIA solutions?

4.3. Design and Development

This phase of the DSR process entails the iterative design and development of two artifacts that compose the solution to the problem. The first artifact, the HE-BIA maturity model, is a domain-specific MM that fulfills the five design requirements. The second artifact is an assessment method comprising a set of recommendations on how to perform the self-assessment exercise.

The design started with a knowledge acquisition phase, which included an extensive literature review on BIA maturity models and several brainstorming sessions among the project’s researchers. Due to the aforementioned deficiencies of current models, we chose to combine the knowledge of existing models and, where applicable, add new knowledge into a new model. We did this by analyzing existing maturity dimensions and levels in order to compose a maturity model for HEIs using domain-specific terminology. A constant design constraint was to avoid overly technical terminology that could make the maturity exercise inaccessible to academic decision-makers, e.g., executive sponsors and business users. Instead of writing generic maturity statements, we decided to instantiate the model as much as possible to the HE context in terms of entities, processes, and situations (requirement R2). Another design constraint was the level of detail of the exercise. To meet requirements R1 and R3, we needed to balance the depth (i.e., level of detail) of the model, not only in terms of the number of maturity dimensions but also in the level descriptions. The first iteration of design and development led to the preliminary version of the HE-BIA maturity model, called v0.1 (see Figure 1). This model was further refined in two iterations, following a typical design approach for maturity models [39]. Two further versions of the HE-BIA model were developed to incorporate the improvement recommendations gathered in the demonstration and evaluation phases, respectively.

The type of assessment exercise we envisaged also constrained the design of the model. Given the requirement to produce a lean approach (R3), the maturity model should be used in a self-assessment exercise in a limited time frame, in a collaborative effort to promote discussion among participants, as opposed to using extensive questionnaires.

4.4. Demonstration

In June 2019, the preliminary version of the HE-BIA MM was presented and initially validated in a workshop with the EUNIS BI SIG community of practice. This half-day workshop involved 30 participants from 12 countries and took place before the EUNIS 2019 annual congress in Trondheim, Norway. The participants included BI practitioners, IT directors, Vice-Rectors, and BI project leaders. In the DSR paradigm, the relevance of the designed artifacts is measured in terms of their utility for practice [28]. Therefore, the goal of this workshop was to evaluate the utility of the proposed maturity model by gathering qualitative feedback on its relevance for the BI SIG community. Following the demonstration phase, a new iteration of the design and development phase took place, leading to a new version of the model, HE-BIA MM v1.0 (see Figure 1). The details of this initial feedback are presented in the Discussion section.

4.5. Evaluation

In the evaluation phase, three case studies were completed with HEIs from different countries: the Norwegian University of Science and Technology (NTNU) and the Norwegian University of Life Sciences (NMBU), both in Norway, and Ghent University (UGhent) in Belgium. The HEIs for the case studies were selected by convenience [40]. All of them are active members of the EUNIS BI SIG. NTNU is the largest university in Norway (42,000 students) and is considered a front-runner in terms of BI, having had a stable BI program since 2011 [41]. The cross-functional BI team at NTNU comprises 19 people, of whom 10 are full-time employees (FTE). The team, led by a full-time BI manager, is organized into different roles, such as scrum developer, product owner, educational analyst, business architect, and information architect. NMBU is a medium-sized university (5200 students) that started its BI initiative in 2017. Its BI team currently consists of one FTE full-stack developer working in close collaboration with stakeholders across the university. UGhent is a large university in Belgium (45,000 students), with a consolidated BI program since 2015. The BI team at UGhent comprises a full-time BI team leader and six FTE (four internal FTE and two FTE external consultants). The first case study, with NTNU, took place in September–October 2019, followed by the NMBU case study in November 2019 and, finally, the UGhent case study in February 2020. Version 1.0 of the HE-BIA MM and a similar research protocol were used in all three case studies.

The final validation of the model was performed at a BI SIG workshop that took place in Ghent, Belgium, in February 2020. The BI SIG Winter Seminar was a 2-day event, with 21 participants from eight countries (Belgium, Denmark, Finland, Germany, the Netherlands, Norway, Portugal, and the United Kingdom). The HE-BIA MM was central to the workshop held on the second day of the event, in which NTNU and NMBU shared their results and experience with the maturity assessment. Several exercises were carried out collaboratively to further test the relevance and usefulness of the HE-BIA MM. A summary of the feedback received is presented in the Discussion section.

4.6. Communication

Preliminary results, focusing on HE-BIA MM version 0.1, were presented at the EUNIS 2019 annual congress in Trondheim, Norway. Later, at the EUNIS 2020 annual congress, an extended abstract was published describing the research design that would lead to the definition of HE-BIA MM version 2.0. More recently, in 2021, a paper detailing the in-depth findings of one of the case studies performed to evaluate HE-BIA MM version 1.0 was published in the International Journal of Business Intelligence Research [41]. The present paper focuses on the design process of the MM, detailing each step of the design science research methodology and the iterations that led to the final version (2.0) of the HE-BIA MM (as stated by requirement R5).

5. The HE-BIA Maturity Model v2.0

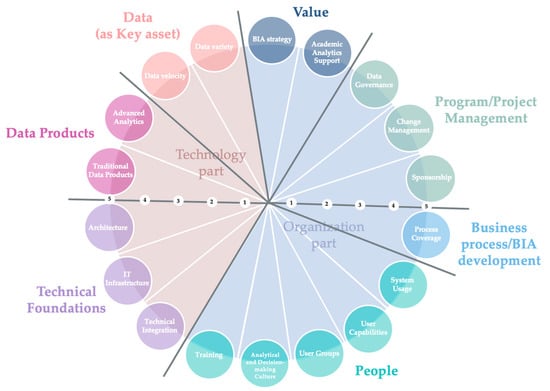

The level of detail of the model was the central design decision. The design was an iterative exercise, starting with the study of the work of [17] and a tentative application of it to the higher education context. That model has 23 dimensions (see Table 1) and an overly complex assessment procedure. Therefore, although inspirational, the work of [17] needed to be adapted to HE and simplified to comply with our design requirements (R1 and R3), i.e., enabling HEIs to perform a lean self-assessment of their BIA solutions. An analysis of older models (e.g., see models no. 5 and 6 in Table 1) reveals a design pattern of using fewer dimensions, each of which encompasses several maturity aspects. In contrast to these older maturity models, we opted to design a model with more dimensions in order to provide users with a sufficient level of detail for the maturity assessment. Additionally, most of the models presented in Table 1 needed to be updated to capture new analytical aspects (requirement R4), such as the use of AI and big data, which called for additional maturity dimensions. We designed a model with a hierarchy of “maturity components” that can be drilled down and drilled up. The hierarchy is defined as part → category → maturity dimension, with the dimension being the lowest level of detail. The HE-BIA MM comprises 18 dimensions, grouped into two parts and seven maturity categories, as displayed in Figure 2. In the proposed model, dimensions describe, at the right level of detail, the important issues that HEIs need to address to obtain a holistic assessment and view of their BIA initiative. As frequently documented in the literature, the implementation of BIA systems involves a combination of technological and organizational issues that need to be secured for it to succeed. This determined the initial categorization of “maturity components” into two parts, technology- and organization-related issues, which are then further detailed into categories (see Figure 2).
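The part → category → dimension hierarchy can be sketched as a simple tree in which drilling down collects the leaf dimensions and drilling up summarizes their assessed levels. The Python sketch below populates only the technology part, using the seven dimensions described in Section 5.1; the levels are hypothetical, and summarizing a node by the (min, max) range of its dimensions is our own assumption, since no aggregation rule is defined here. The class and helper names are likewise illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Component:
    """A maturity component: a part, a category, or (as a leaf) a dimension."""
    name: str
    children: List[Component] = field(default_factory=list)
    level: Optional[int] = None  # only leaf dimensions carry an assessed level

    def dimensions(self) -> List[Component]:
        """Drill down: return every leaf dimension below (or at) this component."""
        if not self.children:
            return [self]
        return [leaf for child in self.children for leaf in child.dimensions()]

    def level_range(self) -> Tuple[int, int]:
        """Drill up: summarize the assessed levels below this component as (min, max)."""
        levels = [d.level for d in self.dimensions() if d.level is not None]
        if not levels:
            raise ValueError(f"no assessed dimensions under {self.name!r}")
        return min(levels), max(levels)


def dim(name: str, level: int) -> Component:
    """Hypothetical helper: build a leaf dimension with an assessed level."""
    return Component(name, level=level)


# Technology part only; the levels are made up for illustration.
technology = Component("Technology", [
    Component("Data", [dim("Data variety", 3), dim("Data velocity", 2)]),
    Component("Data Products", [dim("Traditional data products", 4),
                                dim("Advanced analytics", 1)]),
    Component("Technical Foundations", [dim("Architecture", 3),
                                        dim("Technical integration", 3),
                                        dim("IT infrastructure", 4)]),
])

print(len(technology.dimensions()))          # 7
print(technology.children[0].level_range())  # (2, 3)
print(technology.level_range())              # (1, 4)
```

Reporting a range rather than an average avoids hiding a weak dimension behind strong ones when drilling up, which matters when one dimension is treated as a hygiene factor.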

Figure 2.

The HE-BIA maturity model v.2.0: parts and maturity categories.

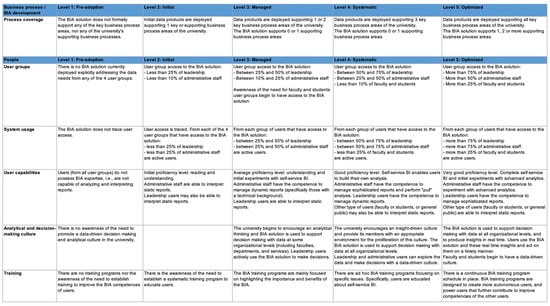

The HE-BIA maturity model comprises 18 dimensions over seven maturity categories, as illustrated in Figure 3. The model uses five maturity levels to specify the progression in each dimension: 1 (Pre-adoption), 2 (Initial), 3 (Managed), 4 (Systematic), and 5 (Optimized). The definition of each dimension is presented in Tables A1 and A2 in Appendix A.
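Because the five levels form an ordered scale, they map naturally onto an ordinal type. The following Python sketch encodes the level names listed above; the class name, the `label` property, and the `next_level` helper are our own hypothetical choices, the latter useful when setting a TO-BE target one step above the current level.

```python
from enum import IntEnum
from typing import Optional


class MaturityLevel(IntEnum):
    """The five HE-BIA maturity levels, from least to most mature."""
    PRE_ADOPTION = 1
    INITIAL = 2
    MANAGED = 3
    SYSTEMATIC = 4
    OPTIMIZED = 5

    @property
    def label(self) -> str:
        """Readable label, e.g. 'Pre-adoption' for PRE_ADOPTION."""
        return self.name.replace("_", "-").capitalize()


def next_level(current: MaturityLevel) -> Optional[MaturityLevel]:
    """Hypothetical helper: the next level up, or None at the top of the scale."""
    if current is MaturityLevel.OPTIMIZED:
        return None
    return MaturityLevel(current + 1)


print(MaturityLevel(1).label)                            # Pre-adoption
print(next_level(MaturityLevel.INITIAL).label)           # Managed
print(MaturityLevel.SYSTEMATIC > MaturityLevel.MANAGED)  # True
```

Using `IntEnum` keeps ordinal comparisons meaningful while still giving each level a readable name.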

Figure 3.

The HE-BIA maturity model v.2.0: maturity dimensions and levels.

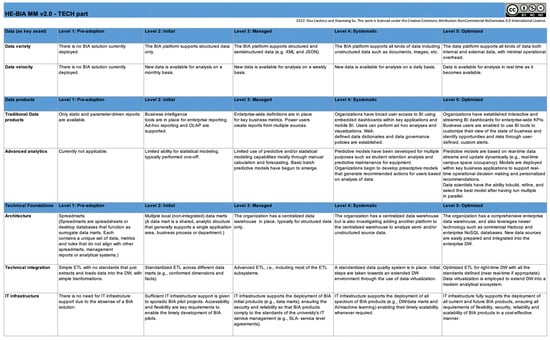

5.1. The Technology Part

The technology part groups the elements in the technical realm that are instrumental in enabling the effective use of BIA solutions in the context of HEIs. The maturity levels of the seven dimensions in the technology part are detailed in Figure A1 in Appendix A.

5.1.1. Data (as a Key Asset)

Data is central to the notion of data-driven or evidence-based decision-making and, therefore, forms the entry point to the assessment. Data is also highly situational: what data can be collected, and what data is relevant, depends on the organization. The data category encompasses two maturity dimensions: data variety and data velocity. We decided to exclude the data volume dimension from the assessment, because what organizations need is insight from data [42], and rapidly processing a smaller amount of data may often benefit the organization more than simply collecting large amounts of data. When confronted with the question of whether to include the volume dimension, practitioners in HEIs, after some reflection, unanimously chose to exclude it. In their own words, “it is the thickness of the data that counts.” Conversely, the ability and the practice to collect and prepare different types of data (variety), and to do so at higher speed (velocity), are considered good indicators of the organization’s ability to enable the more innovative upstream BIA activities.

5.1.2. Data Products

Data products are the direct, tangible BIA products and services provided by the BIA technical platform. The type and sophistication of these products directly determine which upstream analyses and activities are possible. Two maturity dimensions are grouped in this category: traditional data products and advanced analytics. The former focuses on traditional BI products, such as reports and dashboards, while the latter captures recent trends and developments in business analytics, namely machine learning and data mining tasks, such as prediction (aligned with requirement R4). We consider these to be two separate product lines, where neither succeeds nor replaces the other. Both are needed in the foreseeable future, and each follows its own maturity ladder.

5.1.3. Technical Foundations

The Technical foundations category groups the underlying technical backend factors that make the deployment of frontend data products possible. These factors are directly relevant to the production of data products. Three maturity dimensions are grouped here. The first, Architecture, captures practices for storing and organizing the central data repository (with fewer potential problems, less maintenance, and more flexibility to accommodate emerging needs) to best support BIA frontend activities (such as interactive dashboards and ad hoc analytical needs) across the whole organization.

Technical integration captures the best practices of integrating source data into a central repository. Traditionally, ETL (Extract, Transform, Load) processes are used to integrate data into the data warehouse [43]. More recently, the need to source unstructured and real-time data has led to the emergence of data lakes, where ELT (Extract, Load, Transform) is often used to integrate the source data [44].
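The difference between the two integration styles lies in where the transformation happens. The toy Python sketch below, in which plain lists stand in for a data warehouse and a data lake and the record fields and cleaning rule are hypothetical, transforms records before loading them (ETL) in one case, and loads raw records first and transforms them only when they are read (ELT) in the other. Real integration pipelines rely on dedicated tooling; this only illustrates the ordering of the steps.

```python
from typing import Callable, Dict, List

# Hypothetical record shape: field name -> raw string value.
Record = Dict[str, str]
Transform = Callable[[Record], Record]


def etl(source: List[Record], transform: Transform, warehouse: List[Record]) -> None:
    """ETL: each extracted record is transformed before it is loaded."""
    for record in source:                      # Extract
        warehouse.append(transform(record))    # Transform, then Load


def elt(source: List[Record], lake: List[Record]) -> None:
    """ELT: extracted records are loaded as raw copies; no transformation yet."""
    lake.extend(dict(record) for record in source)  # Extract + Load


def transform_on_read(lake: List[Record], transform: Transform) -> List[Record]:
    """The deferred 'T' of ELT, applied to the stored raw data when it is used."""
    return [transform(record) for record in lake]


def normalize_programme(record: Record) -> Record:
    """Hypothetical cleaning rule: trim and upper-case the programme code."""
    cleaned = dict(record)  # copy, so the input record is not modified
    cleaned["programme"] = cleaned["programme"].strip().upper()
    return cleaned


source = [
    {"student": "s1", "programme": " msc-ds "},
    {"student": "s2", "programme": "bsc-cs"},
]
warehouse: List[Record] = []
lake: List[Record] = []

etl(source, normalize_programme, warehouse)
elt(source, lake)

print(warehouse[0]["programme"])                                  # MSC-DS
print(repr(lake[0]["programme"]))                                 # ' msc-ds '
print(transform_on_read(lake, normalize_programme) == warehouse)  # True
```

Both routes end with the same cleaned view; the practical difference is that ELT keeps the raw data available, so new transformations can be applied later without re-extracting from the sources.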

The last dimension in this category is IT infrastructure. This dimension encapsulates the university’s information technology infrastructure capabilities required to support the development of BIA products. Although IT infrastructure is typically not considered a motivating factor for BIA development, we have decided to include it in the maturity model as a hygiene factor: if this element is absent or insufficient, it hampers the development of BIA solutions. For example, a lack of access to, or flexibility with, the infrastructure needed for testing and deploying new BIA products tends to either slow down projects or lead to workarounds (e.g., the BIA team resorting to public cloud infrastructure services instead).

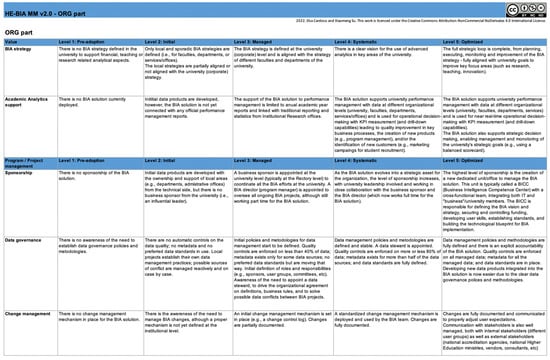

5.2. The Organization Part

The organization part groups the non-technical elements that are key to the development and usage of BIA solutions in higher education. The maturity levels of the 11 dimensions in the organization part are detailed in Figure A2 and Figure A3 in Appendix A.

5.2.1. Value

The Value category measures the actual impact or value that the BIA solution has on the organization. The first dimension of this category is BIA strategy, which refers to defining and managing the strategy of the BIA initiative itself and its alignment with the university’s strategic goals. The greater the degree of alignment between the BIA solution’s strategy and the university’s strategy (enabling improvement in key areas or processes), the greater the value of the BIA solution.

The second dimension in this category is Academic analytics support, which describes the effective value of the BIA solution in supporting academic analytics. Academic analytics is a HE domain-specific concept, similar to business analytics, which is concerned with improving the effectiveness of organizational processes [45]. This dimension refers to the application of data analytics at an institutional level, as well as national and international levels, to provide insights for policymaking. This dimension measures how well the BIA solution supports the performance management needs of academic leadership at different organizational levels (i.e., university/rectory, faculties, departments, services, or offices) with relevant data and insights impacting operational decision-making, leading, for instance, to cost reductions in key business processes or the identification of new customers (e.g., marketing campaigns for student recruitment).

5.2.2. Program/Project Management

The successful development of BIA solutions is an evolving and iterative endeavor. Hence, BIA systems are considered an organizational program (i.e., a collection of projects) rather than an individual project. The program/project management category includes three dimensions related to BIA project success factors, which are pivotal in planning and managing the execution of the BIA program [46]. The Sponsorship dimension is instrumental in ensuring adequate resources for the development and improvement of the BIA solution. This dimension describes the level of support, ownership, and responsibility of top management towards the BIA solution. Maturity progression in this dimension includes the appointment of a business sponsor (a well-respected and influential leader of the university, typically from the rectory or top management), a BIA director, and an organizational unit dedicated to the development and management of the BIA solution. This unit is typically called a Business Intelligence Competence Center (BICC) and has a cross-functional team, including members from IT and from the “business” or academic side [47,48].

The Data governance dimension maps the level of adherence to data governance practices and methodologies that ensure the quality and accuracy of the data in the BIA solution. According to [49], there has been growing interest in, and recognition of, the importance of data governance in HE. Data governance refers to the exercise of authority and control over the management of data [50]. This includes the effective application of principles, policies, roles, and responsibilities, and the use of data standards for data management (for modeling and data exchange) across the university.

The final dimension in this category is Change management. This dimension maps the ability to manage changes within the context of the BIA solution. Change management is a crucial activity in DW/BI systems [5]. Any issue that impacts a BIA project’s schedule, budget, or scope should be considered a change. Industry experts advocate putting a change management mechanism in place to capture and document changes, enabling better communication with BIA users. Given the evolving nature of BIA solutions, as previously discussed, there is a need for continuous support and development. Hence, change management is essential to manage the expectations of BIA users. This dimension captures the need to document and manage changes to the BIA solution; it does not cover organizational change management as in [51].

5.2.3. Business Process/BIA Development

The Business process/BIA development category comprises a single dimension, Process coverage. This dimension maps the coverage of the BIA solution in support of the university’s business processes. The initial design of this dimension considered the original value chain concept [52] to distinguish between primary and support business processes. However, applying the value chain model to higher education is not straightforward. Ref. [53] proposes a reconfigured higher education value chain model, with an interpretation of academic processes that distinguishes between value-driving and other activities (primary and secondary support services). However, this reconfigured value chain is not as well known as Porter’s original model. Given design requirements R2 and R3, we therefore opted for a different interpretation of the chain of activities or processes in HEIs. Four key business process areas are defined (Finance, Academic, Research, and Human Resources), corresponding to the most common areas of BIA usage in universities. The maturity progression in the Process coverage dimension considers the formal support, through specific data products, that the BIA solution provides to these key business process areas as well as to the supporting or secondary business processes (such as information technology, logistics, facility management, etc.). This design choice avoids the complex exercise of mapping the value chain model to HE, opting instead for a lean approach to determining process coverage.

5.2.4. People

The People category groups the important aspects of users: their competence, their decision-making culture, and their engagement with the BIA systems. The User groups dimension measures the types of users that have access to the BIA solutions. In the maturity model, academic users are grouped into four structural groups: leadership (top-level management/rectory, school-level management, etc.), administrative staff (from central university services and local school services/offices), faculty, and students. Some authors argue that, for BI to realize its full potential, one might “need to be thinking about deploying BI to 100 percent of the employees as well as beyond organizational boundaries to customers and suppliers” [54] (p. 89). Including students as a user group may be controversial, but the rationale in the model is to be forward-thinking (i.e., requirement R4); we envisage that, in the future, learning analytics products may be available to students through the university BIA solution.

Gaining access is only a start; the number of active users is another often-cited measure of success. System usage is the dimension that captures the effective use of the BIA solution by counting active users, i.e., users who go beyond merely having access. In the literature, system usage is commonly used to measure the intensity of use of an information system [55] in terms of how long the system is used. In the MM, we opted instead to quantify the number of active users. What counts as “active” is rather situational and is not prescribed by the MM. In fact, discussing what an active user is can surface interesting assumptions about, and understanding of, the value of BIA for each organization.
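Because the MM deliberately leaves the definition of “active” open, it can help to make an institution’s chosen definition explicit. The sketch below counts active users from a simple access log, with the look-back window and the minimum number of sessions as parameters; the log format, the function name, and the default thresholds are hypothetical choices for illustration only.

```python
from datetime import date, timedelta


def count_active_users(logins: dict[str, list[date]], as_of: date,
                       window_days: int = 30, min_sessions: int = 1) -> int:
    """Count users with at least `min_sessions` logins in the `window_days`
    days up to and including `as_of`.

    Both thresholds are hypothetical defaults: the MM leaves the definition
    of an "active" user to each institution.
    """
    start = as_of - timedelta(days=window_days)
    active = 0
    for sessions in logins.values():
        recent = sum(1 for d in sessions if start < d <= as_of)
        if recent >= min_sessions:
            active += 1
    return active


# Fictional access log: user id -> dates on which the user opened the BIA portal.
logins = {
    "ana": [date(2020, 1, 10), date(2020, 1, 20)],
    "ben": [date(2019, 11, 2)],
    "eva": [],  # has access, never used it
}
print(count_active_users(logins, date(2020, 1, 31)))                   # 1
print(count_active_users(logins, date(2020, 1, 31), window_days=120))  # 2
```

Changing `window_days` or `min_sessions` changes the count for the same log, which is exactly the kind of assumption the discussion of “active” users is meant to surface.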

On top of being able to actively use the BIA solution, it is also useful to see how deeply users engage with the BIA systems, ranging from receiving a static report to actively analyzing and interrogating the underlying data to test hypotheses and generate correlations and inferences. This is encapsulated in the User capability dimension, which measures the extent to which users engage with the BIA solution to perform their tasks [55]. We consider the User capability dimension one of the important triggering factors for unleashing the technical potential of BIA solutions.

Ultimately, being able to access data, actively use it, and perform complex analytical tasks on the data are all necessary steps that rely on the analytic and decision-making culture to achieve the ultimate goals of BIA–insight and action. The Analytical and decision-making culture dimension measures the extent to which the university incorporates a shift from intuitive to data-driven decision-making across the organization [56]. This relates to what some authors call the “embeddedness of BI systems” [55], in which the BIA solution becomes part of the organizational activities supporting the decision-making of all user groups in HEIs.

Finally, the People category includes the Training dimension, used to measure the competence improvement of the different sets of users. Establishing a training program to improve the BIA competencies of users is critical to achieving an effective usage of the BIA solution.

6. The Assessment Method

The proposed assessment method comprises two facilitated sessions with key BIA stakeholders and semi-structured interviews. Design requirements R1, R2, and R3 are particularly important for the assessment method. Starting with requirement R3, a lean approach was envisaged to enable a high-level yet relevant assessment exercise. A central decision is the selection of the BIA team members and stakeholders who should take part in the maturity assessment. To this end, we recommend defining two groups of participants for the facilitated sessions: one session targets product owners and user representatives, and the other is conducted with the BIA technical team. Each session should have between five and seven participants and should not exceed two hours. It is recommended that the BIA team leader be present in both sessions to capture the dynamics and discussions of the groups. However, he/she should actively participate in only one of them, on either the technical or the business side.

The HE-BIA Maturity Model was designed to enable each HEI to conduct a self-assessment exercise (requirement R1). Hence, the BIA team leader plays a pivotal role in the assessment procedure. In each of the case studies, the maturity assessment started with a planning session between the BIA team leader and the two researchers. With the help of the BIA team leader, the list of participants for the facilitated sessions was drawn up, and key persons in the organization with insight into the BIA program were also identified. The facilitated sessions took place on-site, in no particular order; the BIA team leader decides which team meets first. It is important to send the participants an email explaining the goal of the maturity assessment. Its content is, of course, situational for each institution, depending on the reasons that led to the assessment. We opted not to send the maturity model in this email. Given R2, the designed model should be easy to understand and use terminology relevant to HE, and, as researchers, we also wanted to test this. Coming to the assessment with an open mind frees participants from prior misconceptions, leading to a collaborative in-session effort to assess the maturity of each dimension. However, the BIA team leader can also send the maturity model to the participants beforehand if he/she believes it is the best course of action for the team.

A similar procedure was followed in each facilitated session, as shown below.

- The facilitator (one of the authors of this paper) introduces the maturity model and the workshop’s plans (10 min);

- Each participant reads the assessment materials: the brochure How to do the assessment? and the Maturity Model (10 min);

- Participants are divided into pre-defined groups of two or three. The local assessment sponsor (typically the BIA team leader) should take into account group dynamics;

- Each group does the assessment independently, scoring each dimension of the maturity model (30 min);

- The facilitator leads the discussion to reach a consensus about the final score of each maturity dimension and presents a visualization of the results (1 h);

- Participants write the feedback form (10 min).
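As a quick arithmetic check, the timed steps of this agenda add up exactly to the two-hour ceiling recommended for each session. The sketch below encodes the agenda as a list of (step, minutes) pairs; the constant names are hypothetical, and the group-formation step is omitted because no time is allotted to it.

```python
# Session agenda (minutes) as listed above; group formation has no allotted time.
AGENDA = [
    ("Introduction by the facilitator", 10),
    ("Individual reading of the materials", 10),
    ("Independent group assessment", 30),
    ("Facilitated consensus discussion", 60),
    ("Feedback form", 10),
]
MAX_SESSION_MINUTES = 120  # a session "should not exceed two hours"

total = sum(minutes for _, minutes in AGENDA)
print(f"Total: {total} min; within limit: {total <= MAX_SESSION_MINUTES}")
# Total: 120 min; within limit: True
```

Since the budget is fully used, any extra time spent forming groups or on a long discussion has to come out of another step.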

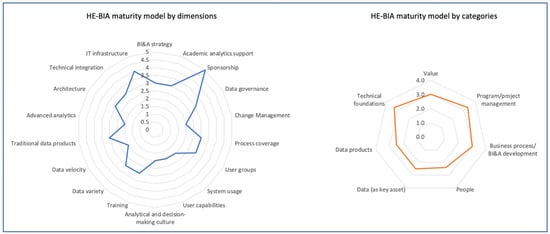

As described, participants first read the materials individually and then start using the maturity model in groups of two (or three if needed). This first round of assessment should be fast (30 min). It is followed by a discussion among all participants in the session (1 h), in which the facilitator leads the group through an analysis of each dimension. Each group reveals its maturity score. A mismatch indicates that there are different perspectives and assumptions in the group, and a consensus needs to be reached on the maturity level. Participants are encouraged to share the arguments behind their scores and the difficulties they had with the maturity levels. This part of the assessment is limited to one hour, so discussions need to stay focused on the dimensions. The facilitator uses an Excel spreadsheet for data collection, storing, for each dimension, the scores from the different groups and the consensus score. Once the scoring is completed, the facilitator shows the group a visualization of their assessment using a radar chart, as displayed in Figure 4. Finally, participants are given some time to reflect individually on the assessment procedure and provide feedback on the issues that were most relevant to them.
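The facilitator’s spreadsheet can be thought of as one row per dimension holding each group’s score and, later, the agreed score. The Python sketch below mirrors that structure and lists the dimensions whose group scores diverge, i.e., the ones that need discussion; the class and function names, group labels, and scores are fictional and only illustrate the bookkeeping, not a tool used in the case studies.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DimensionScore:
    """One row of the facilitator's sheet for a single maturity dimension."""
    dimension: str
    group_scores: dict[str, int] = field(default_factory=dict)  # group -> level
    consensus: Optional[int] = None  # filled in after the plenary discussion

    def has_mismatch(self) -> bool:
        # Diverging group scores point to different perspectives or assumptions.
        return len(set(self.group_scores.values())) > 1


def needs_discussion(rows: list[DimensionScore]) -> list[str]:
    """Dimensions whose group scores diverge, in the order they were assessed."""
    return [row.dimension for row in rows if row.has_mismatch()]


# Fictional first-round scores from three pairs of participants.
sheet = [
    DimensionScore("Data variety", {"G1": 2, "G2": 2, "G3": 3}),
    DimensionScore("Data velocity", {"G1": 1, "G2": 1, "G3": 1}),
]
print(needs_discussion(sheet))  # ['Data variety']
sheet[0].consensus = 2  # fictional level agreed by the whole session
```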

Figure 4.

The output of the maturity assessment with the HE-BIA maturity model (fictional data).

A radar chart is produced for each session. Comparing the results of the technical team with those of the team of product owners and user representatives can be very insightful to the entire BIA team. The results can be used by the team leader to improve the BIA solution.
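One simple way to make the comparison between the two sessions concrete is to subtract, dimension by dimension, the consensus levels of the product-owner session from those of the technical session. The sketch below does this in Python; the function name and all scores are fictional, and a positive gap here simply means the technical team rated that dimension higher.

```python
def score_gaps(technical: dict[str, int], business: dict[str, int]) -> dict[str, int]:
    """Technical-team level minus business-side level, for dimensions scored by both."""
    return {dim: technical[dim] - business[dim] for dim in technical if dim in business}


# Fictional consensus scores from the two facilitated sessions.
technical = {"Architecture": 3, "User groups": 2, "Training": 1}
business = {"Architecture": 2, "User groups": 2, "Training": 2}

gaps = score_gaps(technical, business)
divergent = {dim: gap for dim, gap in gaps.items() if gap != 0}
print(divergent)  # {'Architecture': 1, 'Training': -1}
```

The non-zero entries are natural starting points for the team leader’s follow-up discussions, since they mark where the two groups perceive the BIA solution differently.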

In the case studies, two semi-structured interviews followed the workshops or facilitated sessions, complementing the assessment procedure. We recommend first interviewing the BIA program sponsor, to gather the perspective of top management, and then interviewing the BIA team leader. In both interviews, a summary of the assessment results can be presented and discussed.

The assessment procedure relies on the presence of the facilitator. We recommend that the facilitator be a researcher with deep expertise in BIA, as opposed to a consultant or academic manager. The facilitator needs to lead the group into reflection and an honest self-assessment and should therefore be someone perceived by the group as an impartial player, acting with the sole purpose of encouraging fruitful discussions.

7. Discussion

The HE-BIA maturity model is the result of an iterative development with two design iterations, as illustrated by the feedback loops in Figure 1. In this section, we discuss the design choices and the model revisions according to the feedback received in the demonstration and evaluation phases. The discussion of the maturity assessment results of the three HEIs used as case studies is out of scope. This paper focuses on the DSR cycles leading to the final versions of the designed artifacts: maturity model and assessment procedure.

7.1. Feedback from the Demonstration Phase

HE-BIA MM v1.0 incorporated the initial qualitative feedback received during the 2019 EUNIS BI SIG workshop. In this workshop, participants were divided into six groups of five, and each group performed a maturity assessment using one university as a case study. Each selected case study was an HEI with which one of the group’s participants was affiliated and whose BI initiative that participant knew well. The selected HEIs were from Belgium, Canada, Croatia, Norway, and Portugal.

Participants were given a copy of the maturity model (version 0.1), a two-page document with an introduction to the model and recommendations on how to perform the assessment, and a feedback form. The assessment exercise took 40 min. Groups then gave a five-minute presentation discussing their case and sharing insights on the usefulness of the model and the exercise itself.

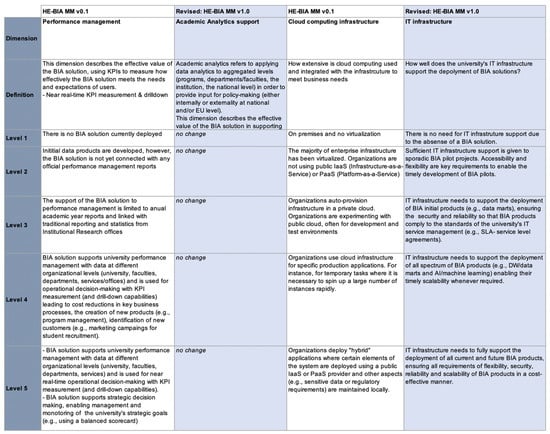

Table 2 summarizes the qualitative feedback received in this workshop, compiled from the feedback forms and oral discussions. The initial feedback was extremely positive. The maturity assessment exercise enabled very fruitful discussions within each group and in the plenary presentation of cases. The maturity model was well received and deemed useful for practitioners. It was considered to have the potential to serve as a benchmarking tool and as a more systematic way to approach university management in order to secure funding and all the required resources for the BIA initiative. Negative feedback was always framed constructively, as suggestions for improvement. Specifically, regarding the maturity dimensions, participants mentioned the ambiguity of some terms that required clarification (e.g., semi-structured data, real-time data streams) and questioned the definition of two dimensions: Performance management and Cloud computing infrastructure. This led to the major revisions in HE-BIA MM v1.0. The Performance management dimension was renamed Academic analytics support, and the Cloud computing infrastructure dimension was revised into the IT infrastructure dimension, as detailed in Figure 5.

Table 2.

Qualitative feedback received on the EUNIS BI SIG 2019 workshop.

Figure 5.

Major dimension revisions in HE-BIA MM v1.0.

The Performance management dimension was well designed, but its name was controversial. Hence, it was renamed using an HE domain-specific concept: academic analytics support. Regarding the Cloud computing dimension, several workshop participants questioned its direct relevance to BIA. Some asked whether moving to the cloud is always an advantage. Others expressed the view that maturity is represented not by a list of technical features but by how well the underlying infrastructure meets and supports the actual organizational needs. These concerns, and the subsequent in-depth discussions between the researchers and the participants, led to the removal of cloud computing as a dimension and its replacement with the new IT infrastructure dimension (see Figure 5).

The Analytical culture dimension was also renamed Analytical and decision-making culture, as suggested by participants. Finally, the comments on the levels led to an improvement in the assessment method, acknowledging upfront to participants that level descriptions were sometimes generic, so perfect matches might be difficult to attain. If the current status falls between two levels due to a partial match, the lower maturity level should be selected. Participants were also informed that the distance between consecutive maturity levels typically varies.
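The “choose the lower level” rule can be stated precisely if one assumes the levels are cumulative, i.e., that reaching a level presupposes meeting the ones below it. Under that assumption, which is ours and is not stated in the model, the sketch below walks up the levels and stops at the first one that is not a full match. The function name, the "full"/"partial"/"none" labels, and the use of 0 for “below level 1” are hypothetical conventions.

```python
def conservative_level(matches: dict[int, str]) -> int:
    """Highest level whose description is fully matched, checking levels in order.

    `matches` maps a level number to "full", "partial" or "none". Assuming the
    levels are cumulative, the first level that is not a full match stops the
    climb, so a status between two levels resolves to the lower one. Returns 0
    (a hypothetical "below level 1" value) if even the first level is not fully met.
    """
    level = 0
    for lvl in sorted(matches):
        if matches[lvl] != "full":
            break
        level = lvl
    return level


print(conservative_level({1: "full", 2: "full", 3: "partial", 4: "none"}))  # 2
```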

7.2. Feedback from the Evaluation Phase

During the evaluation phase, three case studies were performed with three institutions using the same version of the model (v1.0). All interactions with the participants, including workshops and interviews, were recorded. This enabled fine-grained collection of feedback, suggestions, and requests for change. Comments were also gathered from the feedback forms received, as well as from the researchers’ notes. Each comment was stored and annotated in an Excel file.

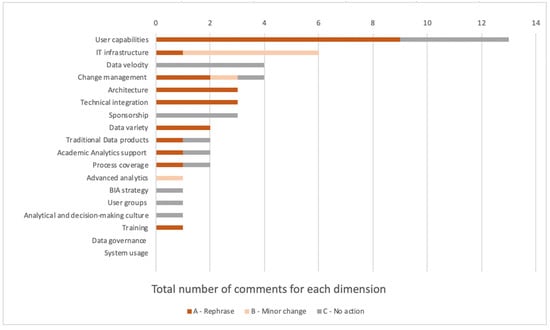

Figure 6 displays the total number of comments received for each dimension of the model. Comments were classified into three categories: A (rephrasing), B (minor change), and C (no action required). The User capabilities dimension received the highest number of comments, 13 in total, of which only nine led to revisions in the model. Of the top 10 dimensions with the most comments, only three belong to the organization part. Since the objective of the workshops and interviews was to identify the aspects that users did not understand, it is unsurprising that the technical aspects generated more discussion and requests for clarification.
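The tallies behind a chart such as Figure 6 amount to counting annotated comments per dimension and per category. The sketch below shows this with `collections.Counter` on a handful of fictional comments; it does not reproduce the study’s data, and treating A and B comments as the ones that trigger a change is an assumption made only for this illustration.

```python
from collections import Counter

# Fictional annotated comments: (dimension, category), where
# A = rephrasing, B = minor change, C = no action required.
comments = [
    ("User capabilities", "A"),
    ("User capabilities", "A"),
    ("User capabilities", "C"),
    ("Data velocity", "C"),
    ("Architecture", "B"),
]

per_dimension = Counter(dim for dim, _ in comments)
per_category = Counter(cat for _, cat in comments)
# Assumption for this sketch: A and B comments are the ones that trigger a change.
actionable = Counter(dim for dim, cat in comments if cat in {"A", "B"})

print(per_dimension.most_common(10))  # ranked list, as in a top-10 chart
print(per_category)
print(actionable["User capabilities"])  # 2
```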

Figure 6.

Summary of comments received on HE-BIA MM v1.0.

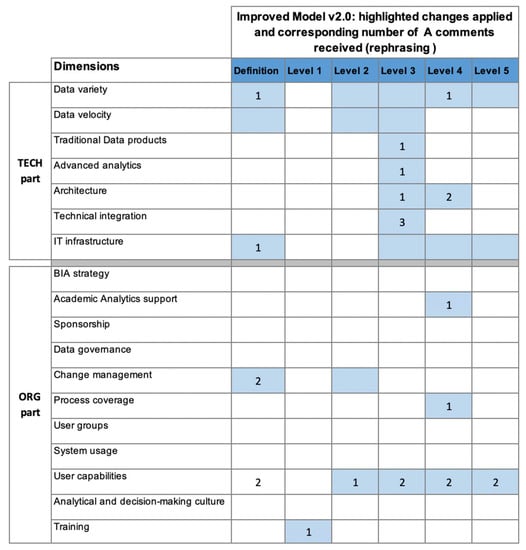

Apart from the absolute number of comments, it is also relevant to analyze their impact on the model’s improvements leading to v2.0. Figure 7 presents a visualization highlighting in blue the parts of the model that were improved (i.e., dimension definitions and level descriptions), as well as the number of A comments received for each part. As expected, the User capabilities dimension was refined to resolve the inconsistencies raised by users. Most comments concerned the level progression, noting that administrative users need to be supported before leaders.

Figure 7.

HE-BIA MM v2.0: improvements made based on the feedback received on v1.0.

The revisions in the Data variety dimension included a clarification in the definition, stating that “variety” relates to the different data types in data sources and a simplification of the level progression.

Most comments about Data velocity focused on whether the dimension covered the need to have analytics updated as close to real time as possible, or whether this was technically feasible. Many participants mentioned that in universities, educational data is generated on a semester or yearly basis (e.g., student questionnaires). As can be seen from Figure 6 and Figure 7, the received comments did not lead to rephrasing and were labeled C (no action). However, we decided to clarify the dimension’s definition, stating that “this dimension refers to the real consumption of existing data for analysis purposes by the BIA users.” Moreover, the level progression was simplified to monthly (since most financial reports have this periodicity), weekly, daily, and real-time. Analytical applications providing close to real-time data are still scarce in higher education. For instance, Ref. [57] reports on the use of analytics to provide updated information about the occupancy and utilization of spaces and how that was instrumental in constructing resilient and adaptive timetables. In March 2020, due to the COVID-19 pandemic, organizations in general, including HEIs, were forced to accelerate their digital transformation strategies. During this period, new data-driven products were developed in which the velocity of data analysis was crucial. For instance, analytics were used to efficiently monitor the usage of learning spaces in real time or to track quarantine and isolation cases [58]. Given requirement R4, we decided to keep the model focused on the innovative use of analytics; hence, the velocity of data analysis is an important aspect. All three case studies were completed before the pandemic. We believe that all three universities would now likely score differently on this dimension.
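The simplified progression can be read as an ordered list of stages, each with a maximum interval between users’ actual consumptions of refreshed data. The sketch below maps an observed interval to the fastest stage it satisfies. The interval bounds, in particular the 5-minute bound for “real-time”, and the function name are illustrative assumptions rather than values from the MM, whose level descriptions remain the authoritative reference.

```python
from datetime import timedelta
from typing import Optional

# Stages of the simplified Data velocity progression, slowest first.
# The interval bounds are illustrative assumptions, not values from the MM;
# in particular, 5 minutes as a "real-time" bound is hypothetical.
VELOCITY_STAGES = [
    ("monthly", timedelta(days=31)),
    ("weekly", timedelta(days=7)),
    ("daily", timedelta(days=1)),
    ("real-time", timedelta(minutes=5)),
]


def velocity_stage(consumption_interval: timedelta) -> Optional[str]:
    """Fastest stage whose bound the users' actual data consumption meets.

    Returns None when data is consumed less often than monthly.
    """
    achieved = None
    for name, bound in VELOCITY_STAGES:
        if consumption_interval <= bound:
            achieved = name
    return achieved


print(velocity_stage(timedelta(days=30)))   # monthly
print(velocity_stage(timedelta(hours=12)))  # daily
```

Note that the input is how often the data is actually consumed for analysis, not how often it is technically refreshed, in line with the revised definition of the dimension.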

Comments received for the Architecture and Technical integration dimensions resulted in a clarification of the level progression stages. For instance, level 3 of the Technical integration dimension was too detailed in v1.0, mentioning some ETL subsystems, which generated confusion, especially among the business users.

The validation process in this study comprised three case studies and two workshops with HE practitioners, which goes beyond the usual surveys and interviews. It is, in a way, less quantitative than other validations; however, we consider it more effective because it yielded very detailed feedback. We reckon that qualitative research provides rich and accessible insights into the real world. It exhibits the experiences and perspectives of practitioners in a way that is both different from, and complementary to, the knowledge we can obtain through quantitative methods. In this study, the opportunity to probe allowed the researchers to go beyond initial responses and rationales. Since we aimed for a model accessible to the community (requirement R2), it was important to engage in participatory design, so that the community has some degree of agency and a sense of ownership of the MM. This should foster the adoption of the model by BIA teams in different HEIs. Only when it is adopted by a larger group can its utility at a macro level be fully realized. Finally, this design choice was also based on the intention to support the HE community. Indeed, participants felt that the assessment through seminars and workshops was useful and applicable, as well as a good trigger for reflection and discussion on their practices.

8. Conclusions

In this paper, we presented a domain-specific BIA maturity model for HEIs validated by practitioners in the field: how it was conceived, the methodological design process that we followed, the model itself, and finally, the demonstrations and validation of its coverage, accuracy, and usability.

The design of the MM was driven by five requirements. R1 established that the model should enable each HEI to conduct a self-assessment exercise. This was validated with three case studies, in which all HEIs reported that the exercise was very useful and relevant to assess their current status of BIA development.

As much as the MM is a product, it is also a process. We documented the MM assessment process in detail. We also consider certain elements and characteristics of this process to be instrumental in ensuring its usefulness. For example, it was considered intuitive and natural to start the assessment with the Data category. As one participant put it:

“You have taken data as the starting point, which I think is most important because if you don’t have data, you don’t have BI at all. You can always have teams, and you could have technical staff, have technical applications, etc., but if you don’t have data, you don’t have anything at all. So I think it’s a great place to start to assess your maturity according to how mature you are in structuring your data, in what kind of data do you have, the access to data, and how you (are) using the master data.”

Similarly, participants considered it advantageous to score the technical dimensions before dealing with the model’s organization part, as the former is somewhat more concrete and scoring it first establishes a shared vocabulary for the later discussions. The team was also positive about the setup of two separate facilitated sessions, one with the technical team and another with the product owners and user representatives. It was apparent that discovering the discrepancies between the two groups is intriguing, and the subsequent discussion of why they arise is even more engaging. The size of the group (6–8 people) and the duration of the assessment (two hours) were considered appropriate. The presence of a facilitator was also considered instrumental. A facilitator helps to make sure everyone listens, stays on topic with the agenda, knows their tasks and roles, and feels included in the process. The proposed lean approach, with its high-level assessment (requirement R3), was considered adequate and very useful in terms of building a more data-driven organization in each HEI.

The validation process also revealed that the MM uses relevant terminology for HE (requirement R2). The comments received enabled us to refine the concepts that were not easily understood by HE practitioners (including business users and the technical team).

Overall, the MM was considered complete and adequate for the needs of HEIs. From a practitioner’s point of view, there are no redundant dimensions or missing components. The forward-thinking approach captured by requirement R4, focusing on new analytical products, proved valid. In a period as turbulent as the COVID-19 pandemic, universities responded with agility, and digital transformation accelerated remarkably. Universities developed new products using analytical models where real-time information became crucial for making decisions to protect the health of the campus community. Finally, the model was designed systematically using a research methodology, with two design and feedback iterations, thus fulfilling requirement R5.

The next step for this study is twofold. Firstly, we plan to roll out the MM to the EUNIS BI SIG community and facilitate the self-assessment of their BIA initiatives. A survey on the maturity level of BIA systems in European HEIs can now be performed based on the HE-BIA MM. The results of such a study will make it possible to benchmark, with some degree of certainty, the levels of BIA development in European HEIs, a result highly anticipated by the EUNIS BI SIG members. The model and the results of such a regional assessment could provide the governing body with useful knowledge to inform the design of policies and continual improvement strategies at a regional level. Additionally, disseminating the final version of the MM within the EUNIS BI SIG will enable us to overcome a limitation of this study, namely the limited number of case studies (three). Increasing the number of case studies to showcase the diversity of HEIs in Europe will certainly be important from a research standpoint.

Secondly, as the MM was well received by the community, practitioners expressed considerable interest in “actionable insights” in the form of road-mapping guidelines. Hence, the next steps will include the design of appropriate and accessible instruments to facilitate strategic road mapping of BIA development in higher education. Such instruments may include inspirational use cases documenting good practices regarding the maturity dimensions (e.g., User groups, using BIA for students and faculty; and Advanced analytics, using real-time BIA applications).

Finally, the maturity of BIA is also connected to the larger area of digital maturity, as illustrated in [59]. In [60], the authors proposed an integrated digital transformation model applied to universities where BIA is one of the 17 components. In this paper, we zoomed in and focused on the improvement of BIA. Our experience with the universities in our cases showed that such a focused and domain-specific model fostered a more precise description and communication during gap analysis, as the terms, situations, and systems were relatable to their daily work situation. In the future, it will be interesting to see if the BIA maturity model can be extended to provide a HEI with a more comprehensive view of its standing on the digital transformation landscape.

Author Contributions

Conceptualization, E.C. and X.S.; methodology, E.C. and X.S.; validation, E.C. and X.S.; investigation, E.C. and X.S.; writing—original draft preparation, E.C. and X.S.; writing—review and editing, E.C. and X.S.; visualization, E.C.; project administration, E.C. and X.S. All authors have read and agreed to the published version of the manuscript.

Funding

This paper was published with the support of the Portuguese Government, through the FCT funding of the R&D Unit UIDB/03126/2020.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

HE-BIA MM v2.0: definition of dimensions of the Technology part.

| Dimension | Definition |
|---|---|
| Data variety | How extensive is the variety of data used in analytics (from the point of view of data sources)? |
| Data velocity | How extensive is the velocity of data used in analytics (the speed at which analysts can access and leverage the data needs to match the speed at which it is created)? This dimension refers to the actual consumption of existing data for analysis purposes by BIA users. |
| Traditional data products | Development and utilization of reports, dashboards, scorecards, OLAP (online analytical processing), and data visualization technologies to display output information in a format readily understood by its users, e.g., managers and other key decision-makers. |
| Advanced analytics | Development and utilization of sophisticated statistical and data mining/machine learning software to explore data, identify useful correlations, patterns, and trends, and extrapolate them to predict what is likely to occur in the future. |
| Architecture | How advanced is the BIA architecture? |
| Technical integration | Techniques and best practices that repurpose data by transforming it as it is moved. ETL (extract, transform, and load) is the most common form of data integration found in data warehousing. |
| IT infrastructure | How well does the university’s IT infrastructure support the deployment of BIA solutions? The rationale is that if this element is absent or insufficient, it hampers the development of BIA solutions. For example, a lack of possibility or flexibility in accessing the infrastructure needed for testing and deploying new BIA products tends to either slow down BIA projects or entail workarounds. |
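The Technical integration dimension names ETL as the most common form of data integration in data warehousing. As a rough illustration only, the following Python sketch runs the three steps on a tiny, hypothetical enrolment export. The source fields (`student_id`, `programme`, `enrolled_on`, `ects`), the `fact_enrolment` table, and the cleaning rules are assumptions made for this example and are not part of the HE-BIA MM; an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source extract: enrolment records exported as CSV.
# Field names and values are illustrative assumptions only.
SOURCE_CSV = """student_id,programme,enrolled_on,ects
S001, Computer Science ,2021-09-15,60
S002,computer science,2021-09-20,30
S003,History,2022-02-01,45
"""


def extract(text: str) -> list[dict]:
    """Extract: read the raw rows from the source system export."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: clean and conform the data while it is being moved."""
    out = []
    for r in rows:
        programme = r["programme"].strip().title()  # harmonise spacing and casing
        year = int(r["enrolled_on"][:4])            # derive a calendar-year attribute
        out.append((r["student_id"], programme, year, int(r["ects"])))
    return out


def load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Load: write the conformed rows into a warehouse-style fact table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS fact_enrolment ("
        "student_id TEXT PRIMARY KEY, programme TEXT, year INTEGER, ects INTEGER)"
    )
    conn.executemany("INSERT INTO fact_enrolment VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(SOURCE_CSV)))
for row in conn.execute(
    "SELECT programme, SUM(ects) FROM fact_enrolment GROUP BY programme ORDER BY programme"
):
    print(row)
```

Running it prints `('Computer Science', 90)` and `('History', 45)`. The two spellings of the programme name in the source are merged only because the transform step conforms them before loading, which is the "repurposing data as it is moved" that the definition describes.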

Table A2.

HE-BIA MM v2.0: definition of dimensions of the Organization part.

| Dimension | Definition |
|---|---|
| BIA strategy | Defining and managing the vision and strategy of the BIA solution and how it supports the university’s strategy. Examples of strategies include being more predictive, aligning BIA with the “business”/academic stakeholders, and researching new opportunities by experimenting with new technologies and methodologies to drive the campus of the future (given the new data sources, technologies, and analysis methods currently available). |
| Academic analytics support | Academic analytics refers to applying data analytics at aggregated levels (programs, departments/faculties, the institution, the national level) to provide input for policymaking (either internally or externally at national and/or EU level). This dimension describes the effective value of the BIA solution in supporting academic analytics. |
| Sponsorship | Level of sponsorship of the BIA solution, including senior leadership support and responsibility. Sponsorship is important to secure adequate resources (e.g., funding, HR, organizational structure) and to set out the vision and strategy of the BIA solution. |
| Data governance | Level of adherence to data governance practices and methodologies, i.e., the effective application of principles, policies, use of data standards (for how data is modeled and exchanged), roles, responsibilities, and operational process definitions for data management across the organization, ensuring the quality and accuracy of the data in the BIA solution. |
| Change management | Ability to manage changes within the context of the BIA solution in terms of user requests, architecture, skills, user experience, etc. Any issue that impacts the schedule, budget, or scope of any product/service of the BIA solution should be considered a change. |
| Process coverage | How broadly does the BIA solution support the university’s process areas? Specifically: (a) how many of the key business process areas are impacted (Financial, Academic, Research, and Human Resources); and (b) how many of the supporting or secondary business process areas are impacted (e.g., IT, logistics, facility management). The key business processes correspond to the most common areas of BIA usage in universities, not to the traditional value-driven key processes of the value chain model. |
| User groups | Type of users that have access to the BIA solution (i.e., accessing users). Users are typically grouped into four structural groups: (1) Leadership: university top-level management/Rectory, school/faculty level management, central university services, local school/faculty services management; (2) Administrative staff: from central university services, local school/faculty services (or offices); (3) Faculty: professors, researchers; and (4) Students: current, alumni. |
| System usage | This dimension measures the effective usage of the BIA solution by determining the number of active users across the four user groups. The definition of an active user needs to be clarified for each organization (for example, “active” may entail at least one interaction with the BIA solution per month). |
| User capabilities | Profile of users in terms of BIA proficiency and data analysis literacy. |
| Analytical and decision-making culture | Includes the promotion of data-driven decision support and an analytical culture across the university, i.e., how BIA contributes to decisions made throughout the university. |
| Training | Competence improvement of the different sets of users. Users of the DW/BI system must be educated on data content, BIA applications, and ad hoc data access tool capabilities (if needed). |
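The System usage dimension counts active users across the four user groups and leaves the definition of "active" to each organization, giving at least one interaction per month as an example; the Process coverage dimension distinguishes the key process areas (Financial, Academic, Research, Human Resources) from secondary ones. The Python sketch below shows one possible way to compute both indicators from an interaction log. The log format, the function names, and the `min_interactions` threshold are hypothetical illustrations, not part of the model or its assessment method.

```python
from collections import Counter
from datetime import date

# The four structural user groups from the User groups dimension.
USER_GROUPS = ("Leadership", "Administrative staff", "Faculty", "Students")
# The key process areas named in the Process coverage dimension.
KEY_AREAS = {"Financial", "Academic", "Research", "Human Resources"}


def active_users_by_group(events, users, year, month, min_interactions=1):
    """Count active users per user group for one calendar month.

    events: iterable of (user_id, date) interactions (hypothetical log format).
    users: mapping user_id -> one of USER_GROUPS.
    min_interactions: hypothetical threshold; 1 matches the example definition.
    """
    interactions = Counter(
        user_id for user_id, day in events if day.year == year and day.month == month
    )
    active = dict.fromkeys(USER_GROUPS, 0)
    for user_id, n in interactions.items():
        if n >= min_interactions and user_id in users:
            active[users[user_id]] += 1
    return active


def process_coverage(supported_areas):
    """Return (number of key areas covered, number of secondary areas covered)."""
    areas = set(supported_areas)
    return len(areas & KEY_AREAS), len(areas - KEY_AREAS)


# Illustrative data only.
users = {"u1": "Leadership", "u2": "Faculty", "u3": "Faculty", "u4": "Students"}
events = [
    ("u1", date(2022, 3, 2)),
    ("u2", date(2022, 3, 10)),
    ("u2", date(2022, 3, 11)),
    ("u3", date(2022, 2, 28)),
    ("u4", date(2022, 3, 30)),
]
print(active_users_by_group(events, users, 2022, 3))
print(process_coverage(["Financial", "Academic", "IT", "Logistics"]))
```

With the sample data, the March count for Faculty is 1 rather than 2 because `u3` only interacted in February. Raising `min_interactions` would let an institution apply a stricter definition of "active" without changing the rest of the calculation.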

Figure A1.

The HE-BIA maturity model v2.0: technology part.

Figure A2.

The HE-BIA maturity model v2.0: organization part.

Figure A3.

The HE-BIA maturity model v2.0: organization part (continued).

References

- Rouhani, S.; Ashrafi, A.; ZareRavasan, A.; Afshari, S. The impact model of business intelligence on decision support and organizational benefits. J. Enterp. Inf. Manag. 2016, 29, 19–50.

- Chen, H.; Chiang, R.; Storey, V. Business Intelligence and Analytics: From Big Data to Big Impact. MIS Q. 2012, 36, 1165–1188.

- Baars, H.; Kemper, H.-G. Management Support with Structured and Unstructured Data—An Integrated Business Intelligence Framework. Inf. Syst. Manag. 2008, 25, 132–148.

- Sharda, R.; Delen, D.; Turban, E. Analytics, Data Science, & Artificial Intelligence: Systems for Decision Support, 11th ed.; Pearson: London, UK, 2020.

- Kimball, R.; Ross, M.; Thornthwaite, W.; Mundy, J.; Becker, B. The Data Warehouse Lifecycle Toolkit, 2nd ed.; Wiley: Hoboken, NJ, USA, 2008.

- Ereth, J.; Eckerson, W. AI: The New BI-How algorithms are transforming Business Intelligence and Analytics. 2018. Available online: https://www.eckerson.com/register?content=ai-the-new-bi-how-algorithms-are-transforming-business-intelligence-and-analytics (accessed on 20 March 2022).

- Işık, Ö.; Jones, M.C.; Sidorova, A. Business intelligence success: The roles of BI capabilities and decision environments. Inf. Manag. 2013, 50, 13–23.

- Yeoh, W.; Koronios, A. Critical success factors for business intelligence systems. J. Comput. Inf. Syst. 2010, 50, 23–32.

- Yeoh, W.; Popovič, A. Extending the understanding of critical success factors for implementing business intelligence systems. J. Assoc. Inf. Sci. Technol. 2015, 67, 134–147.

- Lahrmann, G.; Marx, F.; Winter, R.; Wortmann, F. Business Intelligence Maturity Models: An Overview. In Proceedings of the Annual Hawaii International Conference on System Sciences, Kauai, HI, USA, 4–7 January 2011; pp. 1–12.

- Freitas, E.L.S.X.; Ufpe, U.F.D.P.; Souza, F.D.F.D.; Garcia, V.C.; Falcão, T.P.D.R.; Marques, E.C.M.; Mello, R.F. Avaliação de um Modelo de Maturidade para Adoção de Learning Analytics em Instituições de Ensino Superior. RELATEC Rev. Latinoam. Tecnol. Educ. 2020, 19, 101–113.

- Tocto-Cano, E.; Collado, S.P.; López-Gonzales, J.; Turpo-Chaparro, J. A Systematic Review of the Application of Maturity Models in Universities. Information 2020, 11, 466.

- Raber, D.; Winter, R.; Wortmann, F. Using Quantitative Analyses to Construct a Capability Maturity Model for Business Intelligence. In Proceedings of the 45th Annual Hawaii International Conference on System Sciences (HICSS), Maui, HI, USA, 4–7 January 2012; pp. 4219–4228.

- Sen, A.; Sinha, A.; Ramamurthy, K. Data Warehousing Process Maturity: An Exploratory Study of Factors Influencing User Perceptions. IEEE Trans. Eng. Manag. 2006, 53, 440–455.

- TDWI Research. TDWI Benchmark Guide. Interpreting Benchmark Scores Using TDWI’s Maturity Model. 2012. Available online: https://tdwi.org (accessed on 20 March 2013).

- OCU. Institutional Intelligence White Book Maturity Model. In White Book of Institutional Intelligence; Alcolea, J.J., Ed.; Office for University Cooperation: Tokyo, Japan, 2013.

- Gastaldi, L.; Pietrosi, A.; Lessanibahri, S.; Paparella, M.; Scaccianoce, A.; Provenzale, G.; Corso, M.; Gridelli, B. Measuring the maturity of business intelligence in healthcare: Supporting the development of a roadmap toward precision medicine within ISMETT hospital. Technol. Forecast. Soc. Chang. 2018, 128, 84–103.

- Cardoso, E.; Alcolea, J.J.; Rieger, B.; Schulze, S.; Rivera, M.; Leone, A. Evaluation of the maturity level of BI initiatives in European Higher Education Institutions: Initial report from the BI Task Force @EUNIS. In Proceedings of the 19th International Conference of European University Information Systems (EUNIS 2013), Riga, Latvia, 12–14 June 2013.

- EUNIS. EUNIS Business Intelligence Special Interest Group. Available online: https://www.eunis.org/task-forces/business-intelligence-bi/ (accessed on 16 March 2021).

- Watson, H.; Ariyachandra, T.; Matyska, R.J. Data Warehousing Stages of Growth. Inf. Syst. Manag. 2001, 18, 42–50.

- Spruit, M.; Sacu, C. DWCMM: The data warehouse capability maturity model. J. Univers. Comput. Sci. 2015, 21, 1508–1534.

- HP. The HP Business Intelligence Maturity Model: Describing the BI Journey; Hewlett-Packard: Palo Alto, CA, USA, 2019.

- Hostmann, B.; Hagerty, J. ITScore Overview for Business Intelligence and Performance Management (Issue G00205072). Available online: https://www.gartner.com/en/documents/1433813 (accessed on 20 March 2022).

- Halper, F.; Stodder, D. TDWI Analytics Maturity Model Guide. In TDWI Research; 2014; Available online: https://tdwi.org/~/media/545E06D7CE184B19B269E929B0903D0C (accessed on 20 March 2022).

- Halper, F. TDWI Modern Data Warehousing Maturity Model Guide. Interpreting Your Assessment Score. Available online: https://cloud.google.com/blog/products/data-analytics/how-modern-your-data-warehouse-take-our-new-maturity-assessment-find-out (accessed on 20 March 2022).

- Davenport, T.H.; Harris, J.G.; Morison, R. Analytics at Work: Smarter Decisions, Better Results; Harvard Business Press: Boston, MA, USA, 2010.

- Peffers, K.; Tuunanen, T.; Rothenberger, M.A.; Chatterjee, S. A Design Science Research Methodology for Information Systems Research. J. Manag. Inf. Syst. 2007, 24, 45–77.

- Hevner, A.R.; March, S.T.; Park, J.; Ram, S. Design Science in Information Systems Research. MIS Q. Manag. Inf. Syst. 2004, 28, 75–105.

- Sanchez-Puchol, F.; Pastor-Collado, J.A.; Borrell, B. Towards an Unified Information Systems Reference Model for Higher Education Institutions. Procedia Comput. Sci. 2017, 121, 542–553.

- McCormack, M.; Brooks, D.C.; Shulman, B. Higher Education’s 2020 Trend Watch and Top 10 Strategic Technologies; EDUCAUSE: Boulder, CO, USA, 2020; Available online: https://www.educause.edu/ecar/research-publications/higher-education-trend-watch-and-top-10-strategic-technologies/2020/the-top-10-strategic-technologies-for-2020#TheTop10StrategicTechnologiesfor2020 (accessed on 20 March 2022).

- De Wit, K.; Broucker, B. The Governance of Big Data in Higher Education. In Data Analytics Applications in Education; CRC Press: Boca Raton, FL, USA, 2017; pp. 211–232.

- Niño, H.A.C.; Niño, J.P.C.; Ortega, R.M. Business intelligence governance framework in a university: Universidad de la costa case study. Int. J. Inf. Manag. 2018, 50, 405–412.

- Kabakchieva, D. Business Intelligence Systems for Analyzing University Students Data. Cybern. Inf. Technol. 2015, 15, 104–115.

- Schwendimann, B.; Triana, M.J.R.; Vozniuk, A.; Prieto, L.P.; Boroujeni, M.S.; Holzer, A.; Gillet, D.; Dillenbourg, P. Perceiving Learning at a Glance: A Systematic Literature Review of Learning Dashboard Research. IEEE Trans. Learn. Technol. 2016, 10, 30–41.

- Guillaumet, A.; García, F.; Cuadrón, O. Analyzing a CRIS: From data to insight in university research. Procedia Comput. Sci. 2019, 146, 230–240.

- Guster, D.; Brown, C. The application of business intelligence to higher education: Technical and managerial perspectives. J. Inf. Technol. Manag. 2012, 23, 42–62.

- Agustiono, W. Academic Business Intelligence: Can a Small and Medium-sized University Afford to Build and Deploy it within Limited Resources? J. Inf. Syst. Eng. Bus. Intell. 2019, 5, 1–12.

- Hočevar, B.; Jaklič, J. Assessing benefits of business intelligence systems—A case study. Manag. J. Contemp. Manag. Issues 2010, 15, 87–119. Available online: https://hrcaksrcehr/53608 (accessed on 20 March 2022).

- Becker, J.; Knackstedt, R.; Pöppelbuß, D.-W.I.J. Developing Maturity Models for IT Management. Bus. Inf. Syst. Eng. 2009, 1, 213–222.

- Yin, R.K. Case Study Research: Design and Methods, 3rd ed.; SAGE Publications: New York, NY, USA, 2002.

- Su, X.; Cardoso, E. Measuring the Maturity of the Business Intelligence and Analytics Initiative of a Large Norwegian University. Int. J. Bus. Intell. Res. 2021, 12, 1–26.