1. Introduction

Virtual Reality (VR) is used for training in medical emergency situations [1], mechanical assembly [2,3] and military preparation [4] due to its low cost, rapid setup time, safety, and capability to repeat and track users’ tasks. These tasks involve a series of actions, for example, walking around an area while moving objects from one place to another. VR apps usually implement object grasping by attaching a single object to the controller. This is sufficient for many actions, such as grasping an apple, but does not allow users to grasp multiple objects and release them in a controlled manner. For instance, a nurse placing surgical equipment on a table or an operator sorting shelves will grab multiple objects in each hand. We believe that various VR applications that lack multi-object grasping could benefit from it. For example, virtual stores [5] and physical rehabilitation applications [6] may offer a more realistic and transferable experience with multi-object grasp methods. When visiting a clothes shop, it is common to grab several garments to try different sizes; in a supermarket, one may pick up several yogurts of different flavours. In rehabilitation, several tasks involve grabbing multiple items, such as handling playing cards or moving specifically shaped tokens.

In this paper, we design, implement and evaluate an interaction technique for grasping multiple objects in VR and releasing them in a controlled manner. The technique is designed to resemble the real action of grasping and releasing multiple objects so that the practised skills could transfer to real life. Thus, ray-casting and bounding-box techniques were discarded, since they are not inspired by real-world actions. Moreover, full physical simulations were not considered, since they are impractical for grasping multiple objects.

Three techniques were compared: the use of the hands in real life, a traditional single-object grasp technique and the new multi-object grasp technique. The aim is to understand if using the multi-object grasp technique in VR provides any benefit and if the users are comfortable using it.

Section 2 covers previous techniques for grasping objects. Section 3 explains the implemented single-object and multi-object VR grasp techniques, as well as the procedure followed for selecting some of the options within the design space. Then, a user study (Section 4) was performed to compare single-object and multi-object grasping in Virtual Reality and in real life on a task involving moving and placing coloured tokens. In Section 5, the obtained measurements and their statistical analysis are presented. These results show that the multi-object grasp technique, although seen as the most complex one, leads to significantly less travelled distance and physical effort. Lastly, in Section 6, high-level insights are highlighted, along with the limitations and future work.

2. Related Work

The selection techniques implemented in most VR applications [7] are strongly related to the grasping action: grasping can be seen as a selection automatically followed by a direct manipulation. A common selection technique in VR environments is ray casting [8,9], where a ray extends from the user’s hand and selects the closest element that intersects with it. Several variations have been proposed to address its drawbacks: Vanacken et al. [10] investigated a method for improving selection in dense areas, while Shadow Cone [11] and Bending Ray [12] were designed to address target occlusion.

More realistic selection techniques define a small volume within the virtual hand and select the objects that intersect with it [13]. This technique only allows users to select objects within reach, but it is closer to the real-life action of grasping. Physical simulations of the hand can be used to manipulate the surrounding virtual objects [14]. Full physical simulations of the hand are the closest to real life [15], but without haptic feedback and more accurate hand tracking, it is difficult to grasp even a single object without docking points, and impractical to do so with multiple objects.

Researchers have adapted single-object selection techniques to allow the sequential selection of multiple objects (serial techniques). For instance, ray-based methods were adapted [16] to select the objects that intersect with the ray one by one. Parallel techniques, on the other hand, select the objects within a defined volume, usually a bounding box [17,18,19,20,21]. Lucas et al. [22] showed that parallel techniques can be more effective than serial techniques. However, as the number of objects increases and the distance between them decreases, parallel techniques are more likely to select undesired objects.

Sequential multi-object selection has been applied to ray-based techniques, which are not similar to the real-life action of grasping. Parallel techniques rely on bounding volumes, which are not realistic either. Thus, the aim of this work is to design and evaluate a multi-object grasp technique that is close to the real-life action and yet remains functional.

3. Interaction Techniques

To perform tasks that involve moving objects between places in a virtual environment, two interaction techniques for grasping were developed: a single-object and a multi-object grasp technique.

3.1. Single-Object Grasp

Single-object grasp follows the common VR behaviour for grasping objects with the controller: when the user presses and holds one of the controller buttons (usually the middle-finger trigger), the object in contact with the hand is grasped; when the button is released, the object is released. While the object is grasped, the hand’s translation and orientation are transferred to the object. In the real world, this technique corresponds to grasping an apple from a basket and releasing it onto a table. It is also similar to drag-and-drop on phones and computers.
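As a rough illustration of this behaviour, the sketch below models one hand as a small per-frame state machine. It is a hypothetical Python sketch, not the study’s Unity implementation; the names `Pose`, `Token` and `SingleObjectHand` are illustrative, and for brevity the grasped object simply copies the hand pose instead of preserving the grasp offset (which an engine would typically do by parenting the object’s transform to the controller).

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Pose:
    position: Tuple[float, float, float]
    rotation: Tuple[float, float, float, float]  # quaternion (w, x, y, z)


@dataclass
class Token:
    name: str
    pose: Pose


@dataclass
class SingleObjectHand:
    """Hypothetical single-object grasp: hold the trigger to grab one touched object, release it to drop."""
    held: Optional[Token] = None
    trigger_was_down: bool = False

    def update(self, hand_pose: Pose, trigger_down: bool, touching: List[Token]) -> None:
        """Call once per frame with the controller pose, trigger state and objects in contact."""
        pressed = trigger_down and not self.trigger_was_down
        released = self.trigger_was_down and not trigger_down
        if pressed and self.held is None and touching:
            self.held = touching[0]  # grab a single object in contact with the hand
        elif released:
            self.held = None  # drop the object at its current pose
        if self.held is not None:
            # Transfer the hand's translation and orientation to the held object.
            self.held.pose = hand_pose
        self.trigger_was_down = trigger_down
```

The grasp starts only on the press edge of the trigger, so touching a second object while the trigger is held does not grab it; this is what limits the technique to one object per hand.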

3.2. Multi-Object Grasp

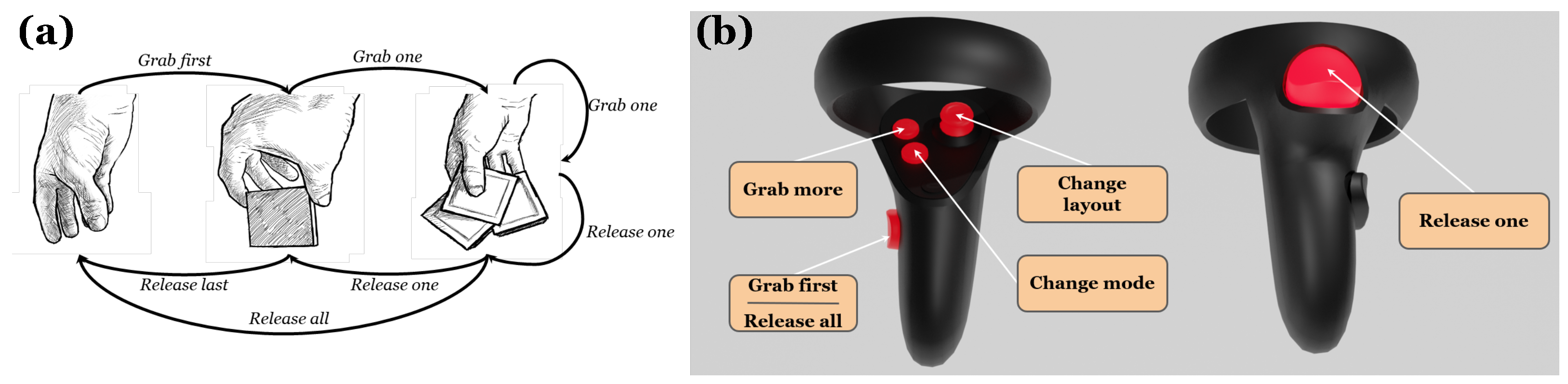

Multi-object grasp combines several buttons of the controller to enable grasping and releasing multiple objects (Figure 1). This technique permits the controlled release of objects, either one by one or all simultaneously. In the real world, this activity corresponds to grasping several screws and releasing them one by one into each hole of a panel, or grasping the paper clips on a desk and releasing all of them into a pencil case.

To develop the multi-object grasp technique, a preliminary study was conducted to analyse possible implementations that would be both suitable and close to reality. The technique needed to: grasp multiple objects that are directly reachable with the hand; keep control over when to release these objects; and make the experience as similar as possible to grasping in real life.

We investigated how to map the finger movements performed when grasping and moving multiple tokens in real life to the buttons of a controller, in this case an Oculus Quest controller. One approach was to use discrete gestures, for example, performing a throwing action with the hand to release all tokens. However, discrete gestures were not as accurate, fast or comfortable as pressing the buttons. Another idea was to employ hand tracking. However, it lacked haptic feedback, was more prone to occlusions during the tasks, forced users to look at their hands while doing the tasks, and provided limited accuracy.

The multi-object grasp technique was elicited through informal interviews. To obtain a natural set of controller interactions for the required actions (grab first, grab one, release all, release last), we gathered six people with backgrounds in computer science, electronics and medicine. First, we asked them to perform the actions with real wooden tokens. Then, we asked them how they would perform those actions with the game controller. Most participants agreed on the grab first action (pressing the ring trigger) and release all (releasing the trigger). For release one, some suggested a throwing gesture, while others suggested pressing one of the top buttons. For grab one, there was no agreement on whether to press the index trigger or to grab automatically when an object was touched while the grab first mode was held.

We designed the multi-object grasp technique using the single-object grasp as a base and adding multi-object support on top. As in the base technique, when the middle-finger trigger is pressed, the objects intersecting with the hand are grasped, and when this trigger is released, all the objects are released. Additionally, users need to be able to grab extra objects or release a single object while the middle-finger trigger is held. For releasing a single object, we used the index trigger, which was the most intuitive option for the users. For grasping an extra object, we used the top button operated with the thumb.
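The button mapping described above can be summarised in the following hypothetical Python sketch for one controller. It follows the mapping in this paragraph (middle-finger trigger to grab and to release all, top thumb button to add one object, index trigger to release one); objects are plain strings and poses are omitted, the method names are illustrative, and releasing the most recently grabbed object first is an assumption, since the text does not state which object is released.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class MultiObjectHand:
    """Hypothetical multi-object grasp for one controller.

    middle-finger trigger: press to grab the touched objects, release to drop everything
    top thumb button:      while the trigger is held, grab one extra touched object
    index trigger:         while the trigger is held, release a single object
    """
    held: List[str] = field(default_factory=list)
    grip_down: bool = False

    def grip_pressed(self, touching: List[str]) -> None:
        self.grip_down = True
        self.held = list(touching)  # same as the single-object base: grab what intersects the hand

    def thumb_pressed(self, touching: List[str]) -> None:
        if not self.grip_down:
            return
        for obj in touching:
            if obj not in self.held:
                self.held.append(obj)  # add exactly one new object per press
                return

    def index_pressed(self) -> Optional[str]:
        if self.grip_down and self.held:
            return self.held.pop()  # assumption: the last grabbed object is released first
        return None

    def grip_released(self) -> List[str]:
        self.grip_down = False
        dropped, self.held = self.held, []
        return dropped  # all remaining objects are released together


hand = MultiObjectHand()
hand.grip_pressed(["red-1"])
hand.thumb_pressed(["red-1", "red-2"])
hand.thumb_pressed(["blue-1"])
print(hand.held)             # ['red-1', 'red-2', 'blue-1']
print(hand.index_pressed())  # blue-1
print(hand.grip_released())  # ['red-1', 'red-2']
```

If only the middle-finger trigger is used while a single object is in contact, the class behaves like the single-object technique, which matches the intention that multi-object grasp extends rather than replaces the base behaviour.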

We tested whether extra objects should be grasped automatically when the user’s hand came into contact with them or whether it was better to actively press a button. Eight participants performed the study described in Section 4 with only the desktop and shelf scenarios and two conditions: using the index trigger of the controller to grab one object, or grabbing it automatically as the virtual hand touched an object while the grab mode was held. The task completion time (TCT) was M = 14.23 s (SD = 5.58 s) for the ‘with-button’ condition and M = 14.00 s (SD = 3.84 s) for the ‘no-button’ condition. A paired t-test yielded p = 0.43, so no significant difference was found between using the button to select each object and automatically attaching it to the hand. Thus, we selected the button option since it is closer to the real action: we actively grab more objects by moving our thumb and index finger, and they do not become automatically added or stacked to our hand as we move it around.
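For reference, a paired t-test compares the per-participant differences between the two conditions. The minimal Python sketch below computes the statistic t = mean(d) / (sd(d) / sqrt(n)) with n - 1 degrees of freedom; the function name is hypothetical and the sample values are placeholders, not the study’s data. Turning t into a p-value requires the t distribution, which `scipy.stats.ttest_rel` provides for the same test.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Return (t, degrees of freedom) for a paired t-test on matched samples a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("differences have zero variance")
    return mean(diffs) / (sd / math.sqrt(n)), n - 1


# Placeholder per-participant TCTs in seconds (illustrative only).
with_button = [15.1, 12.8, 14.0, 16.2]
no_button = [14.7, 13.1, 13.5, 15.9]
t, df = paired_t(with_button, no_button)
print(f"t = {t:.3f}, df = {df}")
```

Pairing matters here because the same participants used both conditions, so between-participant speed differences cancel out in d.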

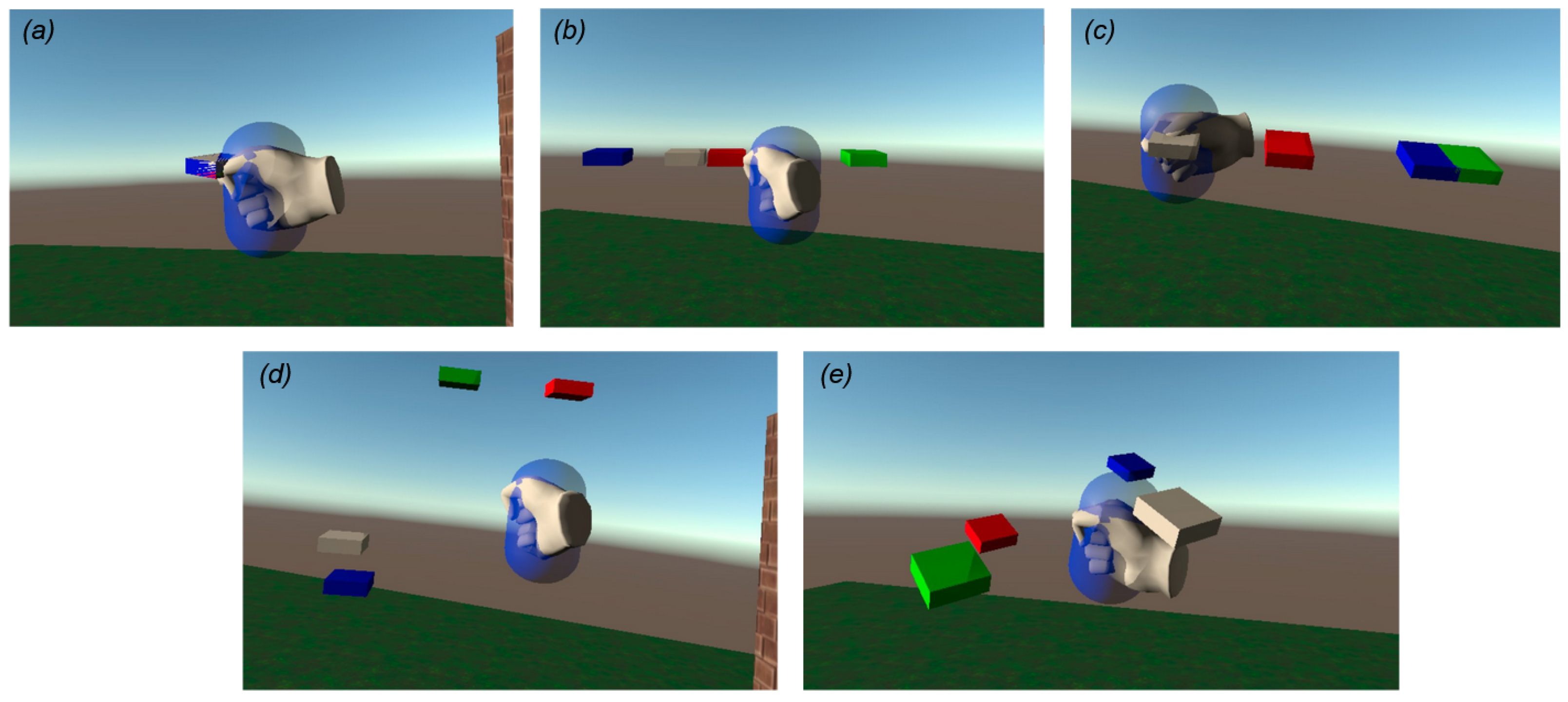

When multiple objects were grasped, we tested several spatial distributions of these objects around the hand. Moreover, we included the option of rearranging the grabbed objects with the other hand. The layouts we explored were: same position, horizontal line, line along the forearm, fan and random (see Figure 2).
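To make the explored layouts concrete, the sketch below computes an offset for each grasped object in the hand’s local frame. It is a hypothetical Python illustration: the axis convention, the 6 cm spacing, the 60° fan angle and the fan radius are assumptions chosen for the example, not values reported in the study.

```python
import math
import random
from typing import List, Tuple

# Offset of a grasped object in the hand's local frame, in metres:
# x = right, y = up, z = forward (assumed convention).
Offset = Tuple[float, float, float]


def layout_offsets(kind: str, n: int, spacing: float = 0.06,
                   fan_angle_deg: float = 60.0, seed: int = 0) -> List[Offset]:
    """Return one local offset per grasped object for the given (hypothetical) layout name."""
    if n <= 0:
        return []
    if kind == "same":
        # All objects share the hand's grasp point.
        return [(0.0, 0.0, 0.0)] * n
    if kind == "horizontal":
        # Centred row along the hand's x axis.
        start = -spacing * (n - 1) / 2
        return [(start + i * spacing, 0.0, 0.0) for i in range(n)]
    if kind == "forearm":
        # Line running back from the hand along the forearm (negative z).
        return [(0.0, 0.0, -i * spacing) for i in range(n)]
    if kind == "fan":
        # Arc in front of the hand, spread symmetrically over fan_angle_deg.
        radius = 2 * spacing
        step = fan_angle_deg / (n - 1) if n > 1 else 0.0
        angles = [math.radians(-fan_angle_deg / 2 + i * step) if n > 1 else 0.0 for i in range(n)]
        return [(radius * math.sin(a), 0.0, radius * math.cos(a)) for a in angles]
    if kind == "random":
        # Uniform jitter around the grasp point; seeded for repeatability.
        rng = random.Random(seed)
        return [(rng.uniform(-spacing, spacing), rng.uniform(-spacing, spacing),
                 rng.uniform(-spacing, spacing)) for _ in range(n)]
    raise ValueError(f"unknown layout: {kind}")
```

Each frame, an engine would add these offsets (rotated by the hand’s orientation) to the hand position to place the held objects; rearranging with the other hand would amount to editing the list of offsets.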

4. User Study

A user study was conducted to determine which grasping techniques perform better under various scenarios and how they compare to the real-world task. A total of 12 participants took part, using three conditions (real world, VR single-object grasp, VR multi-object grasp) in three scenarios (desktop, shelf, room) with 16 trials each. In total, we collected 12 participants × 3 conditions × 3 scenarios × 16 trials = 1728 trials.

4.1. Participants and Apparatus

A total of 12 users participated in the study, aged 23 to 49 years (avg = 30, SD = 5.2) and equally distributed by gender (6 women and 6 men). The participants were staff from the computer science and mathematics department; 4 of them had experience with VR, whereas the others had no previous exposure to it. They had no vision impairments and could walk without problems during the required tasks. A VR headset (Oculus Quest 1) and two controllers were used for this study. The virtual scenes were implemented in Unity 2019.4.14f1.

4.2. Task

A set of 20 square wooden tokens were employed as the objects to move during the task. There were 5 tokens of each of the following colours: red, green, blue and white. The tokens were 5 cm × 5 cm × 1 cm and they were comfortably manipulated by the users.

At the beginning, the tokens were positioned in an initial zone. Then, for each trial, the user was told how many tokens of each colour to place in each of the 4 target zones. Depending on the scenario, the distances between the zones were different. After each trial, the tokens were not reset to their initial positions, so each trial required planning the movements based on the current arrangement. Target zones may need to be filled (Trial #2), rearranged (Trial #8), emptied (Trial #16), or a combination of these actions (Trial #6), as shown in Figure 3.
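Because tokens are not reset between trials, each trial can be viewed as a transition from the current arrangement to a target arrangement. The hypothetical sketch below represents both as colour counts per zone and computes how many tokens of each colour must be added (positive) or removed (negative) in each zone; the zone names and data structures are illustrative, not taken from the study software.

```python
from collections import Counter
from typing import Dict

# Arrangement: zone name -> Counter of token colours in that zone (hypothetical representation).
Arrangement = Dict[str, Counter]


def required_changes(current: Arrangement, target: Arrangement) -> Dict[str, Dict[str, int]]:
    """Per zone and colour: positive = tokens to add, negative = tokens to remove."""
    changes: Dict[str, Dict[str, int]] = {}
    for zone in set(current) | set(target):
        cur = current.get(zone, Counter())
        tgt = target.get(zone, Counter())
        delta = {c: tgt[c] - cur[c] for c in set(cur) | set(tgt) if tgt[c] != cur[c]}
        if delta:
            changes[zone] = delta
    return changes


# Example: a rearrangement between the initial zone and a target zone "A".
current = {"initial": Counter(red=3, blue=5), "A": Counter(red=2)}
target = {"initial": Counter(red=5, blue=3), "A": Counter(blue=2)}
print(required_changes(current, target))
```

A filling trial yields only positive entries for the target zones, an emptying trial only negative ones, and a rearrangement a mix; since tokens are only moved, the changes for each colour sum to zero across all zones.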

4.3. Conditions

There were three conditions for the study: real world, Virtual Reality using single-object grasp and Virtual Reality using multi-object grasp.

Real environment: In the real environment, the users could employ both hands to collect and distribute the tokens. In this condition, the user could pick up objects either serially or in parallel, as they would when completing daily tasks. We explained to the users that they could only use their hands; using a T-shirt or a bag to hold the tokens, for example, was not allowed. The instructions for the trials were shown on a laptop with a 14″ screen, in the format shown in Figure 3. Users reported no issues understanding or seeing them across the different scenarios.

Virtual environment with single-object grasp: For the virtual world, the users employed a VR headset (Oculus Quest 1) and two controllers. These controllers became the representation of the user’s hands in the virtual environment and their actions were controlled with the buttons. Here, the single-object grasp technique was employed; they could grab only one object at a time in each hand. The instructions were shown on a virtual screen in the VR conditions; for different scenarios this screen was placed at different distances, but users reported no problem observing or understanding the instructions.

Virtual environment with multi-object grasp: this was identical to the previous condition, except that the user could grab multiple tokens in each hand and move them to the final location using the multi-object grasp technique described in Section 3.

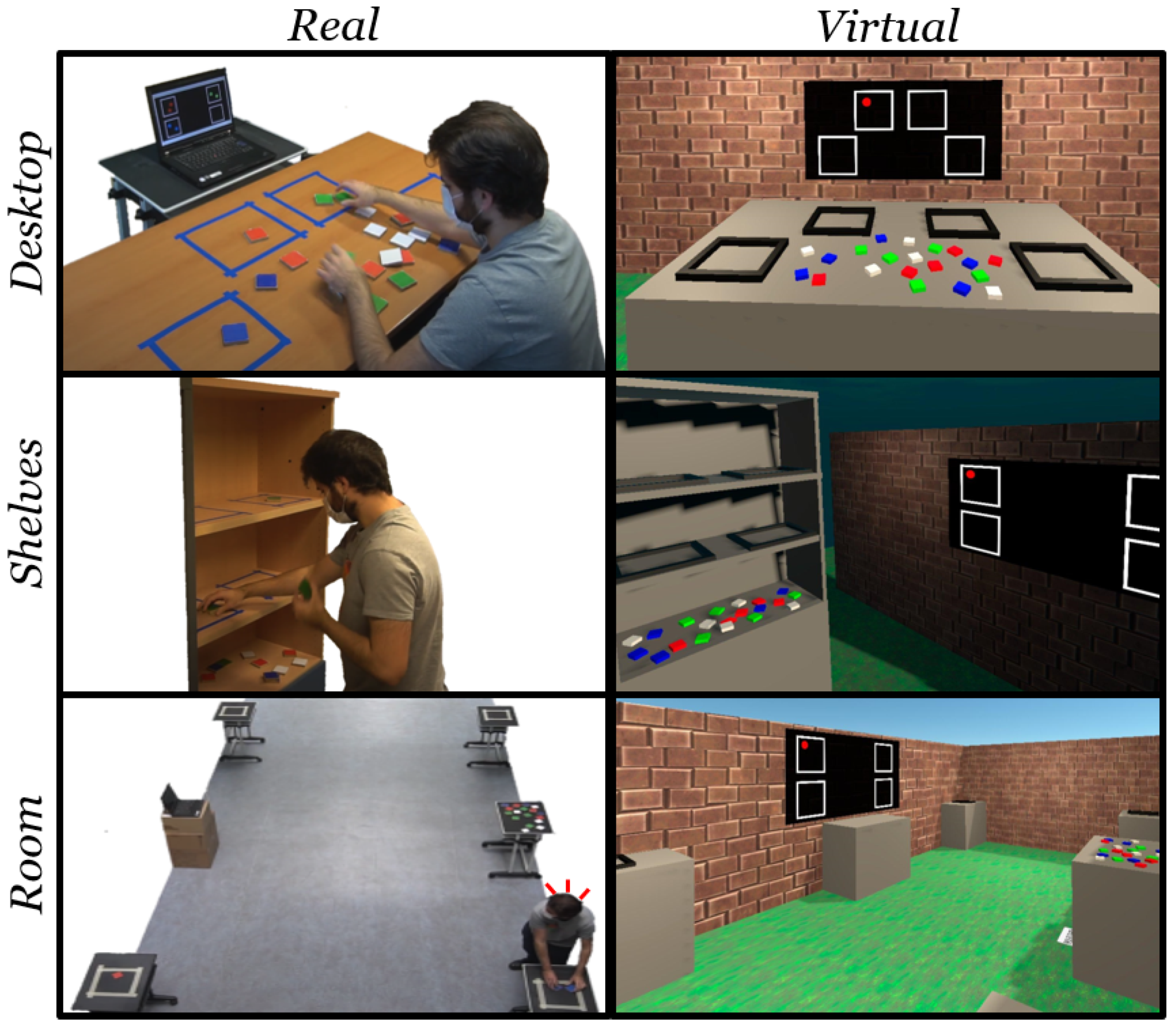

4.4. Scenarios

Each condition was tested in three scenarios. These scenarios were designed to be as similar as possible in the real and virtual environments, as can be seen in Figure 4.

Desktop: The participants are seated in front of a desktop table. There are four different zones on the table. The target trials are shown in front of the user.

Shelves: The participants stand in front of a rack with three shelves. Two target zones are located on the top shelf, two more on the middle shelf, and the bottom shelf holds the initial zone of the tokens. The target trials are shown to the right of the user.

Room: The participants are standing up in the middle of a room. The target zones are at the four corners of the room, on top of small tables; the initial zone is at the side between two corners, and the screen showing the target trials is by the wall in front of the initial zone. In all conditions, the users were instructed to walk at a comfortable pace and never run, to avoid collisions.

4.5. Procedure

Before starting the experiment, the participants received instructions detailing the different scenarios and conditions and the task that they would have to perform. The conditions were counterbalanced using a Latin square to avoid order effects. In the Virtual Reality conditions, users performed a tutorial on how to use the controllers and the techniques. For the real-life condition, the users had some time to move the tokens around and become comfortable with the scenario.
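The exact Latin square is not reported. As a sketch under that caveat, the Python code below builds a cyclic 3 × 3 Latin square over the three conditions and assigns its rows to the 12 participants in turn, so that each condition appears in each position for 4 participants. The function names and the assignment rule are hypothetical.

```python
from typing import List

CONDITIONS = ["Real world", "VR single-object", "VR multi-object"]


def cyclic_latin_square(items: List[str]) -> List[List[str]]:
    """Row r is the item list rotated by r, so each item appears once per row and column."""
    n = len(items)
    return [[items[(row + col) % n] for col in range(n)] for row in range(n)]


def assign_orders(n_participants: int, items: List[str]) -> List[List[str]]:
    """Give participant p the row p mod n of the square (hypothetical assignment rule)."""
    square = cyclic_latin_square(items)
    return [square[p % len(square)] for p in range(n_participants)]


for p, order in enumerate(assign_orders(12, CONDITIONS), start=1):
    print(f"P{p:02d}: {' -> '.join(order)}")
```

A cyclic square balances position but not which condition immediately precedes which; with an odd number of conditions, a fully balanced (Williams) design needs 2 × 3 = 6 orders, which would also divide evenly among 12 participants.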

The experiments were limited to 45 min to avoid visual fatigue or tiredness. They were performed over two sessions: the first session included the desktop and shelves scenarios, while the second session was the room scenario. The order of conditions was maintained across the two sessions.

4.6. Measurements

The objective measures were task completion time (TCT) and the distance travelled by the hands. At the end of the experiment, participants also filled in subjective questionnaires on workload and usability, namely, the NASA TLX [23] and SUS [24].

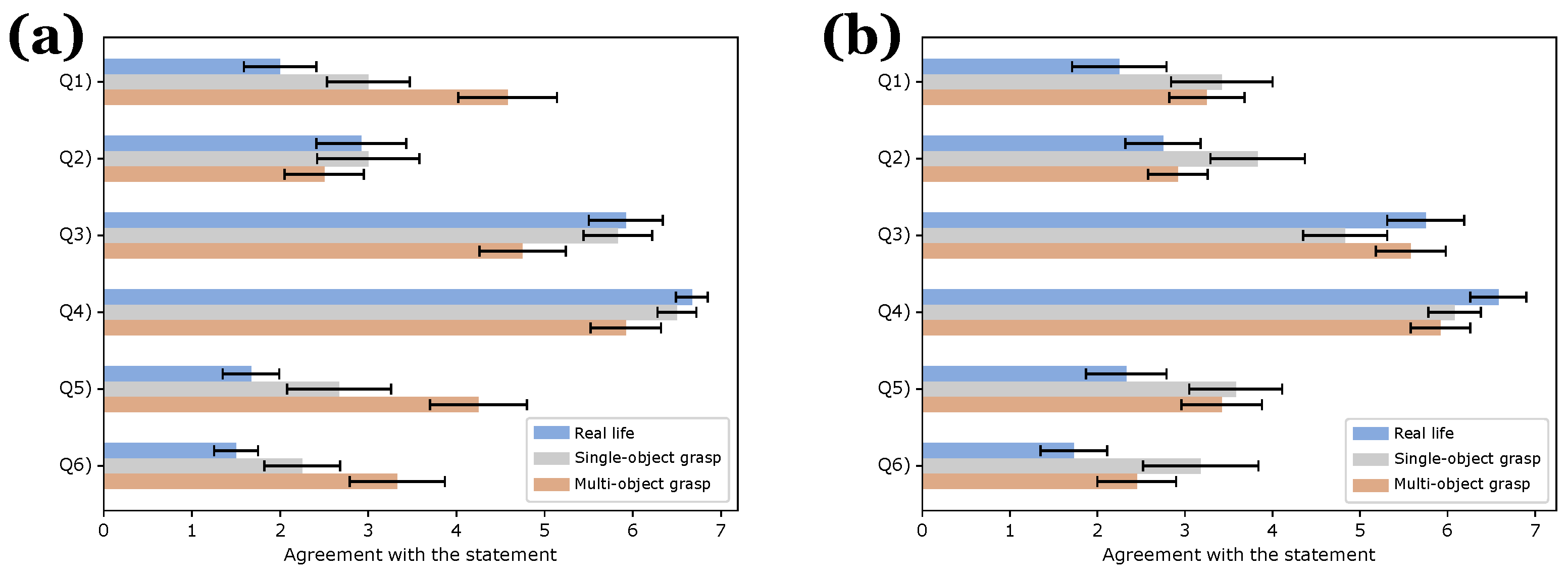

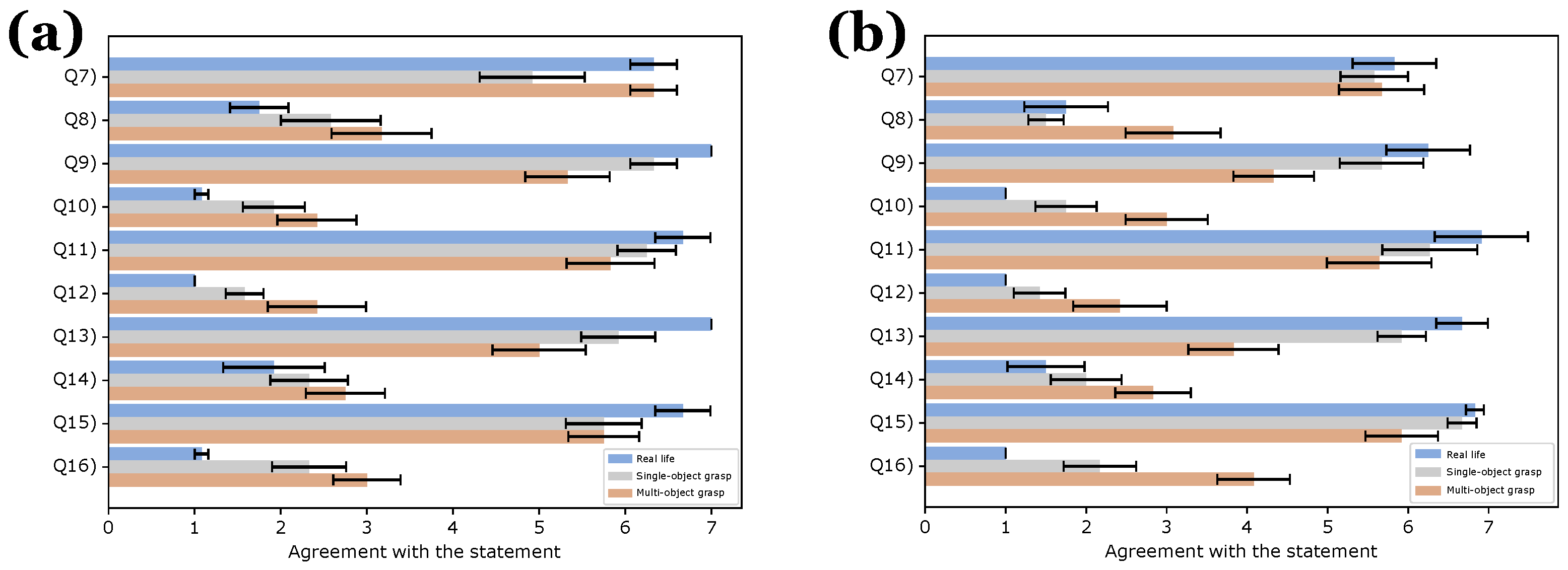

The questions from the NASA TLX questionnaire were as follows: Q1—The mental effort necessary to use this method is huge; Q2—The physical effort necessary to use this method is huge; Q3—The dynamic of the activity has been very fast; Q4—I have felt successful at doing the activities; Q5—I had to put lots of effort in order to do the activities; Q6—I felt frustrated while doing the activities. The questions from the SUS questionnaire were: Q7—I think that I would like to use this system frequently; Q8—I found the system unnecessarily complex; Q9—I thought the system was easy to use; Q10—I think that I would need the support of a technical person to be able to use this system; Q11—I found the various functions in this system were well integrated; Q12—I thought there was too much inconsistency in this system; Q13—I would imagine that most people would learn to use this system very quickly; Q14—I found the system very cumbersome to use; Q15—I felt very confident using the system; Q16—I needed to learn a lot of things before I could get going with this system.
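The SUS results are reported per item, but for reference the standard SUS scoring combines Q7 to Q16 (SUS items 1 to 10) into a single 0 to 100 score: positively worded items contribute the response minus 1, negatively worded items contribute 5 minus the response, and the sum is multiplied by 2.5. The Python sketch below assumes a 1 to 5 response scale, which the text does not state; the function name is hypothetical.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Standard SUS score (0-100) from ten responses on an assumed 1-5 scale, in order Q7..Q16."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("expected ten responses between 1 and 5")
    total = 0
    for i, r in enumerate(responses):
        # Even indices (Q7, Q9, Q11, Q13, Q15) are positively worded: contribute r - 1.
        # Odd indices (Q8, Q10, Q12, Q14, Q16) are negatively worded: contribute 5 - r.
        total += (r - 1) if i % 2 == 0 else (5 - r)
    return total * 2.5


print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))  # 75.0
```

The alternating rule matches the questionnaire above, where Q8, Q10, Q12, Q14 and Q16 are the negatively worded statements.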

In the last part of the questionnaire, participants were asked to rank the conditions according to their preferences: from 1 (preferred one) to 3 (least preferred condition).

5. Results

The measurements from the user study were analysed using repeated-measures ANOVA to detect significant effects of the condition, and post hoc tests with Bonferroni correction were used to determine pairwise differences.
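With three conditions there are three pairwise post hoc comparisons, so a Bonferroni correction either divides the significance level by 3 (0.05 / 3 ≈ 0.0167) or, equivalently, multiplies each raw p-value by 3, capped at 1. The Python sketch below shows both forms; the function names are hypothetical and the p-values are placeholders, not results from the study.

```python
from itertools import combinations
from typing import Dict, Sequence, Tuple

Pair = Tuple[str, str]


def corrected_alpha(conditions: Sequence[str], alpha: float = 0.05) -> float:
    """Per-comparison significance level for all pairwise comparisons."""
    m = len(list(combinations(conditions, 2)))
    return alpha / m


def bonferroni_adjust(p_values: Dict[Pair, float]) -> Dict[Pair, float]:
    """Multiply each raw p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return {pair: min(1.0, p * m) for pair, p in p_values.items()}


conditions = ["Real", "VR single-object", "VR multi-object"]
# Placeholder raw p-values for the three pairs (illustrative only).
raw = dict(zip(combinations(conditions, 2), [0.001, 0.004, 0.3]))
print(corrected_alpha(conditions))
print(bonferroni_adjust(raw))
```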

5.1. Objective Results

The objective results report the average task completion time (TCT) that users needed to complete the trials in each scenario and condition, as well as the average distance travelled by the users’ hands, in metres, while performing the tasks.
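The text does not describe how the hand distance was computed. A common approach, assumed here, is to sum the Euclidean distances between consecutive controller positions sampled each frame; the Python sketch below does this for one hand (the function name is hypothetical), and the per-hand values are then added for both controllers.

```python
import math
from typing import Iterable, Optional, Tuple

Vec3 = Tuple[float, float, float]


def path_length(samples: Iterable[Vec3]) -> float:
    """Total distance (metres) along a sequence of sampled hand positions."""
    total = 0.0
    prev: Optional[Vec3] = None
    for p in samples:
        if prev is not None:
            total += math.dist(prev, p)  # straight-line distance between consecutive samples
        prev = p
    return total


# Illustrative samples for the two controllers (not study data).
left = [(0.0, 1.0, 0.0), (0.3, 1.0, 0.4), (0.3, 1.2, 0.4)]
right = [(0.5, 1.0, 0.0), (0.5, 1.0, 0.0)]
print(path_length(left) + path_length(right))
```

Tracking jitter adds small spurious segments, so in practice the samples are often smoothed, or very small displacements ignored, before summing.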

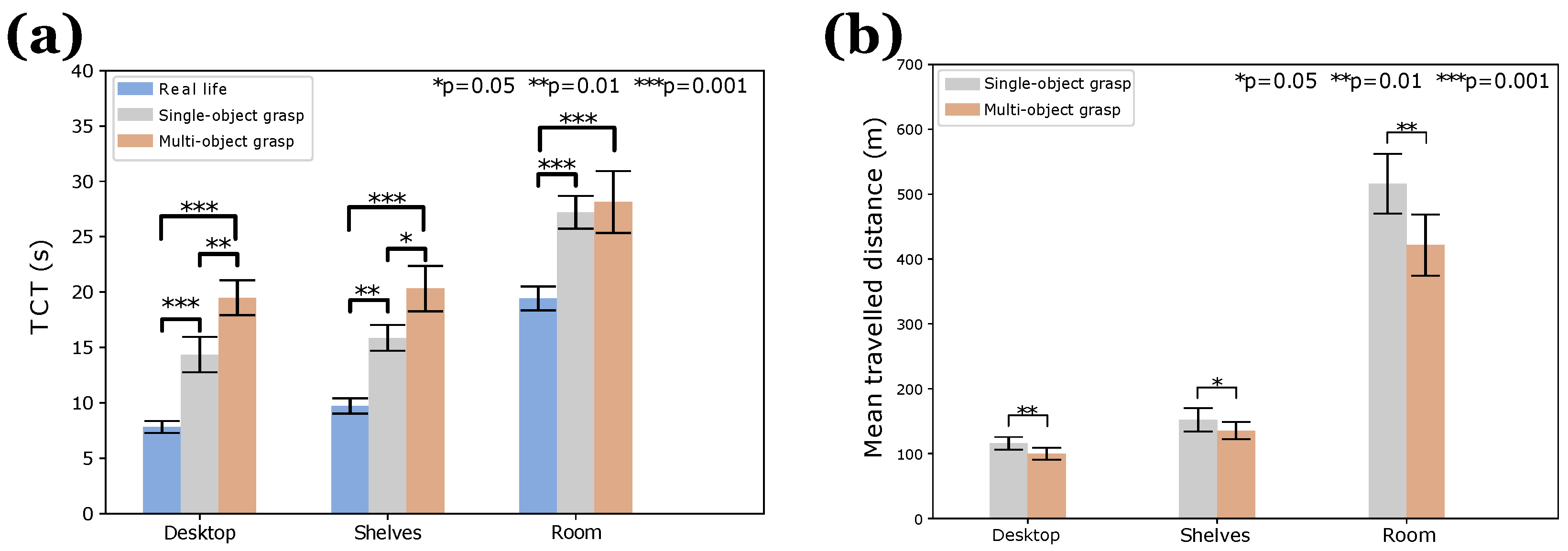

As can be observed for TCT in Figure 5a, users completed the tasks faster in the real environment condition, with a significant difference from both VR conditions. Focusing on the VR techniques, for the Desktop and the Shelf scenarios, the single-object grasp technique was significantly faster than the multi-object grasp technique. For the room scenario, there was no significant difference. Regarding the deviation, when performing the experiments in the real world in the small scenarios (Desktop, Shelf), most users needed a similar amount of time to finish the tasks. This variance increased slightly in the room scenario. In the VR conditions, the deviation was wider, especially in the room scenario.

The travelled distance of the hands was gathered for the VR conditions (Figure 5b). In all the scenarios, the travelled distance was significantly lower when using the multi-object grasp technique. In terms of standard deviation, users travelled similar distances in the desktop and the shelves scenarios. However, in the room scenario, the travelled distance varied more from one user to another.

5.2. Subjective Results

Subjective results are shown by condition and scenario: NASA-TLX in Figure 6, and SUS in Figure 7. Subjective questionnaires for the Desktop and Shelf scenarios were answered at the end of the first session; questionnaires for the Room scenario were gathered in session 2.

NASA-TLX questionnaire: The users felt more comfortable in terms of difficulty and system complexity in the real condition. The required mental effort to complete the tasks under this condition was quantified as the lowest, and so was the physical one. The users also indicated that the real-life condition made the activities more dynamic and they felt successful in finishing the task without putting too much effort into them. They did not experience frustration during the real-world scenarios.

Under the VR conditions, users reported a lower mental effort to complete the tasks successfully with the single-object technique than with the multi-object grasp technique. Conversely, they reported a larger physical effort with the single-object technique; that is, the multi-object grasp technique was perceived as less tiring. The results also show that the users perceived the dynamics of the activity as faster when they were able to use the multi-object grasp technique.

Overall, the users felt capable of completing the activities successfully in all the scenarios. Even though the real-world condition felt the easiest and VR with multi-object grasp the hardest, the differences were not significant. The users felt more frustration when using the single-object grasp technique than the multi-object grasp one. In addition, they felt that the general effort to complete the activities was higher when using the single-object rather than the multi-object technique.

SUS questionnaire: Users preferred the real environment condition, followed by VR with the multi-object grasp technique. They also found the real environment condition less complex than the two VR conditions and did not think they would need technical help to complete the tasks in that situation. The real-life condition was the least cumbersome and the one in which they felt most confident, with no need to learn instructions before executing the tasks.

Focusing on the VR conditions, the users would prefer to use the multi-object grasp technique frequently, even though they perceived it as the most complex and difficult one; nonetheless, they did not find any of the conditions exceedingly complex. The users felt that they would need more support to use the multi-object grasp technique. The users found that the functions were well integrated in all the conditions, with multi-object grasp obtaining the lowest score.

Users did not encounter many inconsistencies, but they found more in the multi-object grasp technique than in the single-object one. Users reported that the single-object technique is faster to learn than the multi-object one. Users did not find any of the conditions cumbersome to use, although the multi-object technique was rated the most cumbersome. They also felt less confident when using the multi-object grasp technique than when using the single-object one.

In conclusion, although users find the multi-object grasp technique more difficult in some aspects and with a steeper learning curve, the physical effort to finish the tasks was lower. They would rather use the multi-object grasp technique more often than the single-object grasp one in the room scenario. The standard deviation between different users is not large in any of the cases but VR conditions seem more varied than real-life.

In terms of the users’ ranking of the conditions, the real environment condition was the preferred choice in all scenarios. Among the VR techniques, users preferred single-object grasp in the Desktop and Shelf scenarios. In the room scenario, users ranked the VR multi-object grasp technique as high as the real-life condition. In summary, even though users felt that the multi-object grasp technique was harder to control and required more mental effort, they experienced lower physical tiredness, a faster pace and lower general effort to complete the tasks.

6. Discussion

Performing the tasks in the real environment provides better results than both the single-object and multi-object grasp techniques; however, in terms of subjective experience, the multi-object technique is better than the single-object technique, and it also reduces the distance that users travelled to complete the tasks. Users walked ~100 m less in the room scenario when using the multi-object grasp technique. For even larger distances, such as in a storage area, the multi-object grasp technique would likely become the only practical option.

Although we did not simulate the physical contacts between the fingers and palm and the tokens, specific physics-based interactions could be added to support VR manoeuvres that people tried to use in real life, such as pushing multiple tokens away by swiping with the hand or forearm, or moving a large number of objects with both hands put together.

A more extensive evaluation of the presented techniques should be conducted to study how well training in virtual environments transfers to the real world. Such a study could involve a control group (training in real life) and an experimental group (training in Virtual Reality), with performance on a more specific task measured before and after the training. From this long-term study, an interesting future line would be to analyse why power users (participants in the 90th percentile) perform well: efficiency of movement, fast thinking time, fast movements or use of both hands.

The evaluations were not performed over an extended period of time (e.g., weeks), but we expect that, with extensive training, people would become more proficient with the multi-object grasp technique. However, we still think that intuitiveness should be an important factor for interaction techniques, and thus the traditional single-object technique should remain the default in most applications. We note that the multi-object grasp technique was designed to be a superset of the single-object one; that is, it can be used as a single-object technique without any additional knowledge. We hope that multi-object grasping can be provided as an option for VR games and applications that may benefit from it.

Author Contributions

Conceptualization, U.J.F. and A.M.; methodology, U.J.F. and A.M.; software, U.J.F.; validation, S.M., A.O. and O.A.; resources, A.O., O.A. and A.M.; data curation, U.J.F.; writing—original draft preparation, S.E., N.I., U.J.F., R.M. and A.M.; writing—review and editing, S.E., N.I., R.M., A.O., S.M., O.A., A.M.; supervision, A.M.; funding acquisition, A.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the EU Horizon 2020 research and innovation programme under grant agreement No 101017746; U.J.F. was funded by UPNA-Beca colaboración.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Ethics Committee of Public University of Navarre (protocol code “Técnicas de agarre para posicionamiento de objetos en tareas logísticas de realidad virtual” and processed on PI-005/22 22 February 2022).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data is provided on request.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| VR | Virtual Reality |
| TCT | Task Completion Time |
| SUS | System Usability Scale |
| TLX | Task Load Index |
| cm | Centimetre |
| m | Metre |

References

1. Kolivand, H.; Tomi, B.; Zamri, N.; Shahrizal Sunar, M. Virtual Surgery, Applications and Limitations. In Medical Imaging Technology; Springer: Singapore, 2015; pp. 169–195.

2. Stone, R.J.; Panfilov, P.B.; Shukshunov, V.E. Evolution of aerospace simulation: From immersive Virtual Reality to serious games. In Proceedings of the RAST 2011—5th International Conference on Recent Advances in Space Technologies, Istanbul, Turkey, 9–11 June 2011.

3. Kamińska, D.; Sapiński, T.; Aitken, N.; Rocca, A.D.; Barańska, M.; Wietsma, R. Virtual reality as a new trend in mechanical and electrical engineering education. Open Phys. 2017, 15, 936–941.

4. Haluck, R.S.; Marshall, R.L.; Krummel, T.M.; Melkonian, M.G. Are surgery training programs ready for virtual reality? A survey of program directors in general surgery. J. Am. Coll. Surg. 2001, 193, 660–665.

5. Schnack, A.; Wright, M.J.; Holdershaw, J.L. Immersive virtual reality technology in a three-dimensional virtual simulated store: Investigating telepresence and usability. Food Res. Int. 2019, 117, 40–49.

6. Tang, H.; Feng, Z.; Xu, T.; Yang, X. VR system for active hand rehabilitation training. In Proceedings of the 2017 4th International Conference on Information, Cybernetics and Computational Social Systems (ICCSS), Dalian, China, 24–26 July 2017; pp. 316–320.

7. Bergström, J.; Dalsgaard, T.S.; Alexander, J.; Hornbæk, K. How to Evaluate Object Selection and Manipulation in VR? Guidelines from 20 Years of Studies. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; Association for Computing Machinery: New York, NY, USA, 2021.

8. Poupyrev, I.; Billinghurst, M.; Weghorst, S.; Ichikawa, T. The Go-Go Interaction Technique: Non-Linear Mapping for Direct Manipulation in VR. In Proceedings of the 9th Annual ACM Symposium on User Interface Software and Technology, Seattle, WA, USA, 6–8 November 1996; Association for Computing Machinery: New York, NY, USA, 1996; pp. 79–80.

9. Bowman, D.A.; Kruijff, E.; LaViola, J.J., Jr.; Poupyrev, I. 3D User Interfaces: Theory and Practice; Addison-Wesley: Redwood City, CA, USA, 2005.

10. Vanacken, L.; Grossman, T.; Coninx, K. Multimodal selection techniques for dense and occluded 3D virtual environments. Int. J. Hum.-Comput. Stud. 2009, 67, 237–255.

11. Steed, A.; Parker, C. 3D Selection Strategies for Head Tracked and Non-Head Tracked Operation of Spatially Immersive Displays. In Proceedings of the 8th International Immersive Projection Technology Workshop, Ames, IA, USA, 13–14 May 2004; pp. 13–14.

12. Olwal, A.; Feiner, S. The flexible pointer: An interaction technique for selection in augmented and virtual reality. Proc. UIST 2003, 3, 81–82.

13. Mine, M.R.; Brooks, F.P.; Sequin, C.H. Moving Objects in Space: Exploiting Proprioception in Virtual-Environment Interaction. In Proceedings of the 24th Annual Conference on Computer Graphics and Interactive Techniques, Los Angeles, CA, USA, 3–8 August 1997; ACM Press: New York, NY, USA, 1997; pp. 19–26.

14. Jörg, S.; Ye, Y.; Mueller, F.; Neff, M.; Zordan, V. Virtual hands in VR: Motion capture, synthesis, and perception. In Proceedings of the SIGGRAPH Asia 2020 Courses, Virtual Event, 17 August 2020; pp. 1–32.

15. Wan, H.; Luo, Y.; Gao, S.; Peng, Q. Realistic virtual hand modeling with applications for virtual grasping. In Proceedings of the VRCAI 2004, ACM SIGGRAPH International Conference on Virtual Reality Continuum and its Applications in Industry, Singapore, 16–18 June 2004.

16. de Haan, G.; Koutek, M.; Post, F.H. IntenSelect: Using Dynamic Object Rating for Assisting 3D Object Selection. In Proceedings of the 11th Eurographics Conference on Virtual Environments, Aalborg, Denmark, 6–7 October 2005; Eurographics Association: Goslar, Germany, 2005; pp. 201–209.

17. Looser, J.; Billinghurst, M.; Grasset, R.; Cockburn, A. An Evaluation of Virtual Lenses for Object Selection in Augmented Reality. In Proceedings of the 5th International Conference on Computer Graphics and Interactive Techniques in Australia and Southeast Asia, Perth, Australia, 1–4 December 2007; Association for Computing Machinery: New York, NY, USA, 2007; pp. 203–210.

18. Ulinski, A.; Zanbaka, C.; Wartell, Z.; Goolkasian, P.; Hodges, L.F. Two Handed Selection Techniques for Volumetric Data. In Proceedings of the 2007 IEEE Symposium on 3D User Interfaces, Charlotte, NC, USA, 10–11 March 2007.

19. Stenholt, R.; Madsen, C.B. Shaping 3-D boxes: A full 9 degree-of-freedom docking experiment. In Proceedings of the 2011 IEEE Virtual Reality Conference, Singapore, 19–23 March 2011; pp. 103–110.

20. Stenholt, R. Efficient Selection of Multiple Objects on a Large Scale. In Proceedings of the 18th ACM Symposium on Virtual Reality Software and Technology, Toronto, ON, Canada, 10–12 December 2012; Association for Computing Machinery: New York, NY, USA, 2012; pp. 105–112.

21. Accot, J.; Zhai, S. More than Dotting the i’s—Foundations for Crossing-Based Interfaces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Minneapolis, MN, USA, 20–25 April 2002; Association for Computing Machinery: New York, NY, USA, 2002; pp. 73–80.

22. Lucas, J.F.; Bowman, D.A.; Chen, J.; Wingrave, C.A. Design and evaluation of 3D multiple object selection techniques. In Proceedings of the UIST05: The 18th Annual ACM Symposium on User Interface Software and Technology, Seattle, WA, USA, 23–26 October 2005.

23. NASA Ames Research Center. NASA Task Load Index (TLX): Paper and Pencil Version; NASA Ames Research Center Aerospace Human Factors Research Division: Moffett Field, CA, USA, 1986.

24. Bangor, A.; Kortum, P.T.; Miller, J.T. An empirical evaluation of the system usability scale. Int. J. Hum.-Comput. Interact. 2008, 24, 574–594.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).