Robot-Agnostic Interaction Controllers Based on ROS

Abstract

1. Introduction

1.1. Related Research

1.2. Research Approach and Contribution

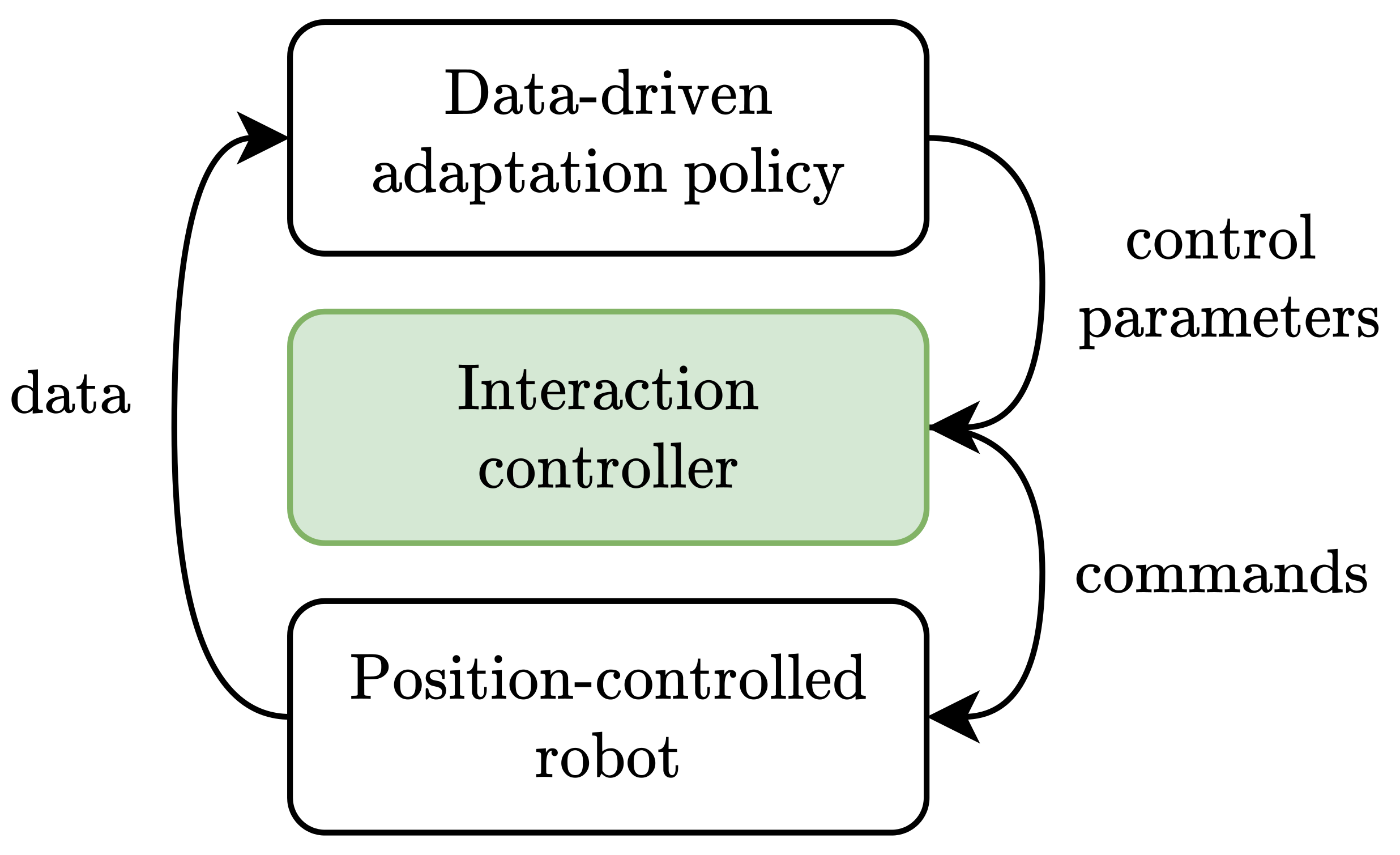

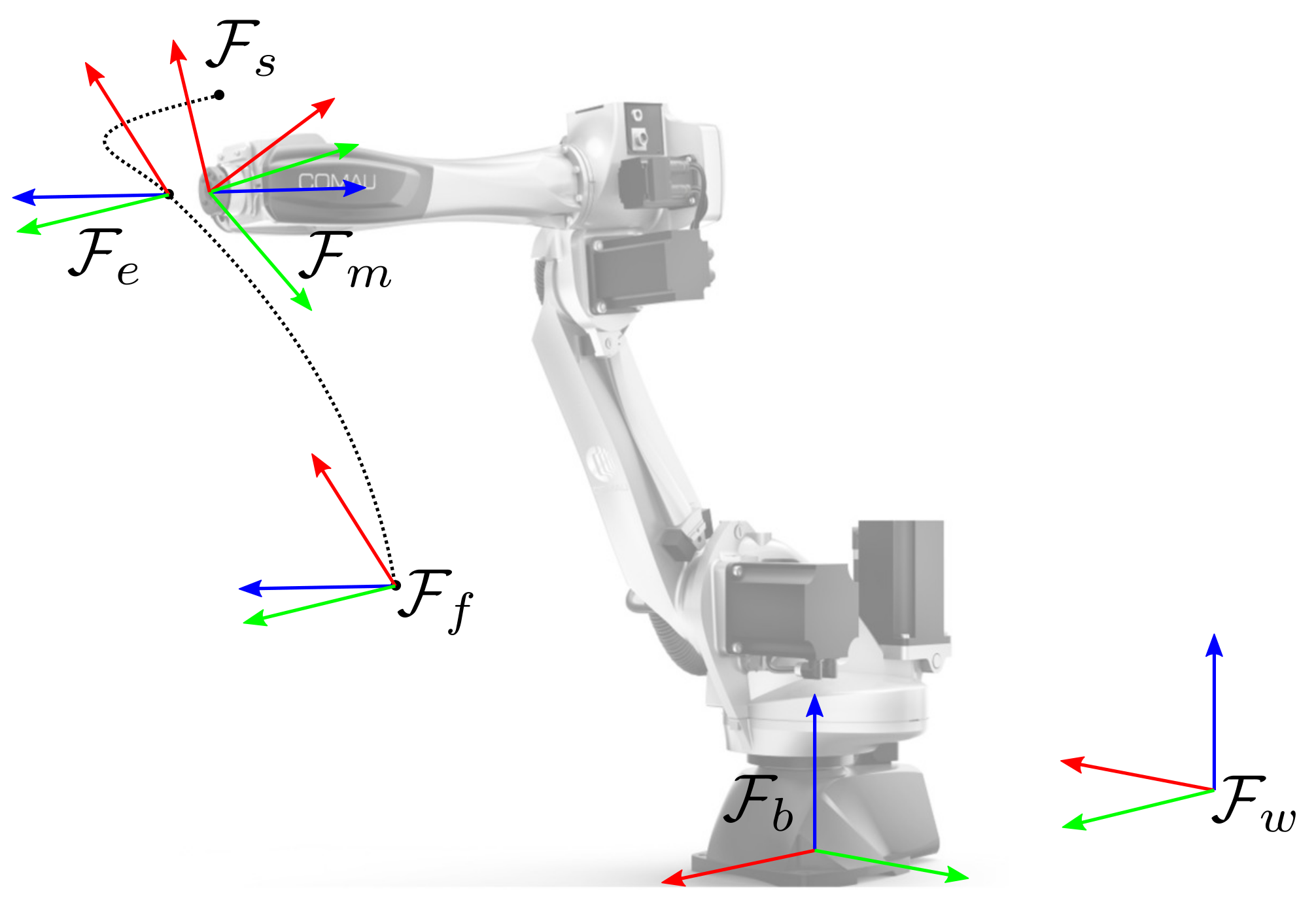

2. Materials and Methods

2.1. Interaction Control

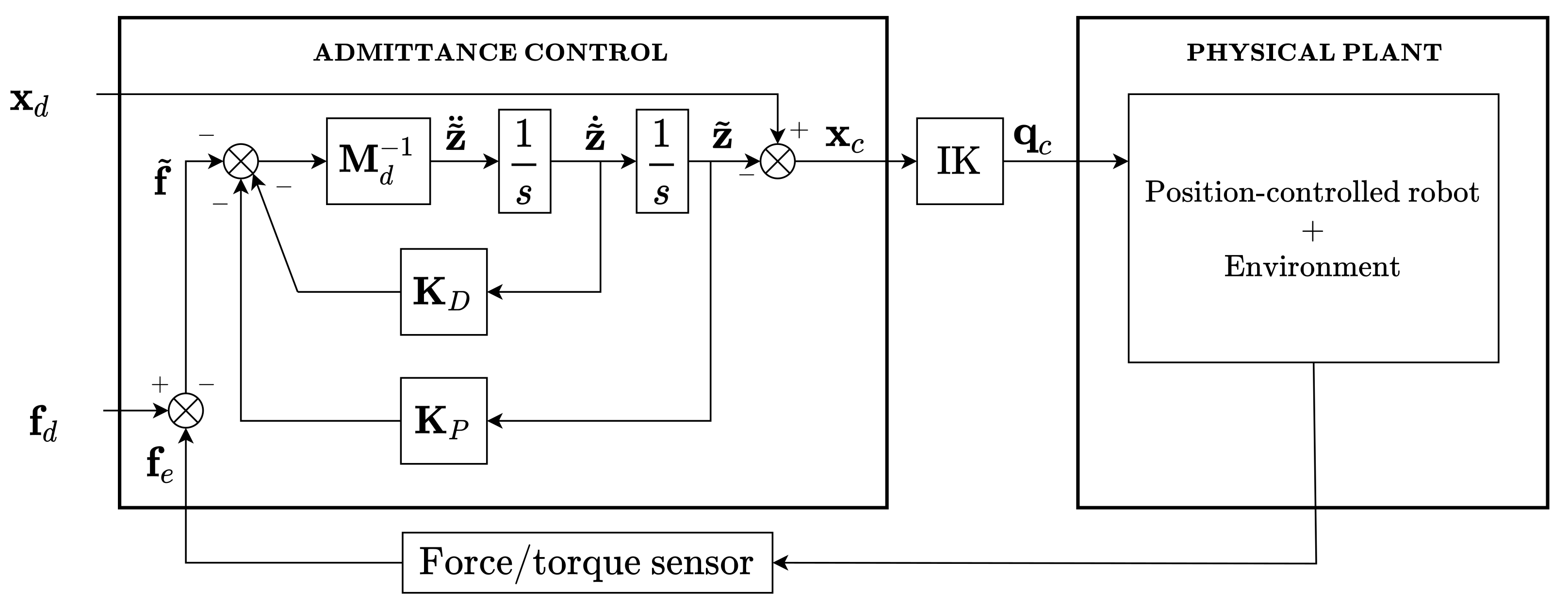

2.1.1. Admittance Control

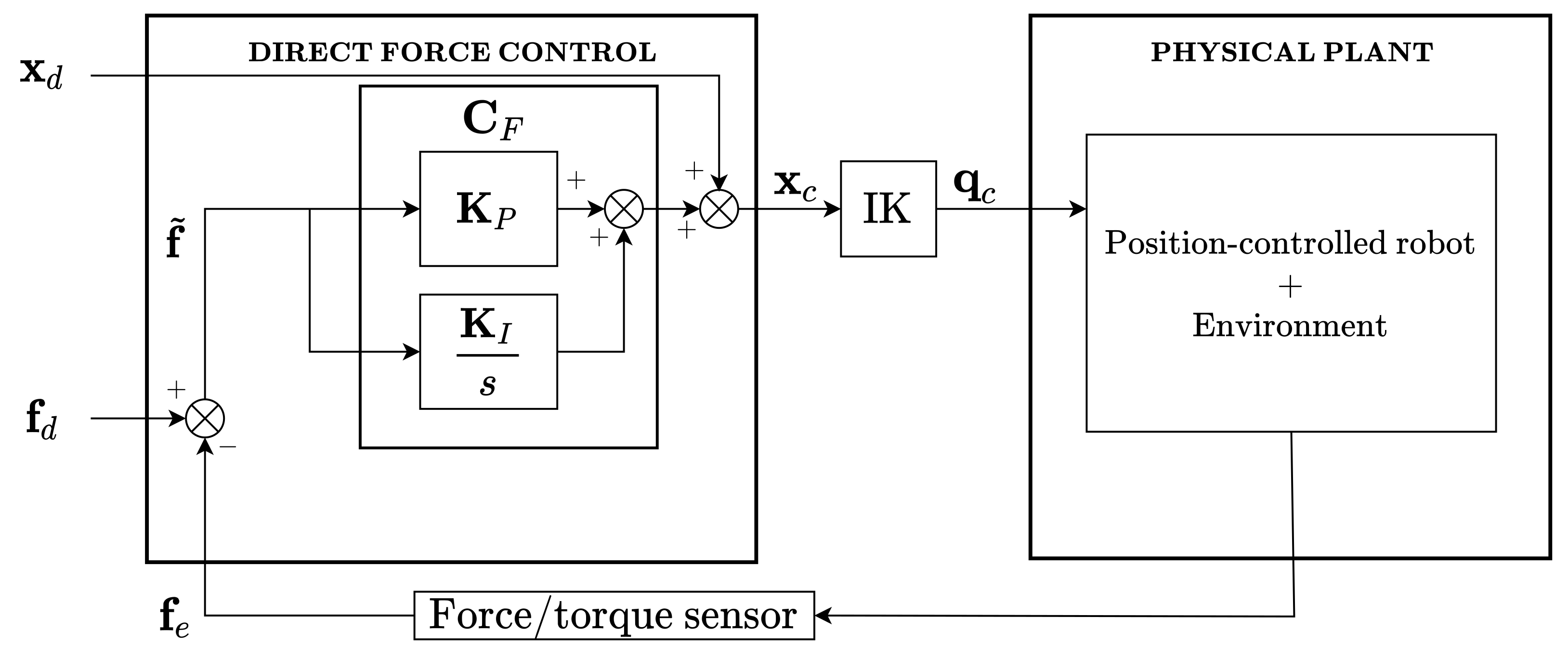

2.1.2. Direct Force Control

2.2. ROS-Based Implementation

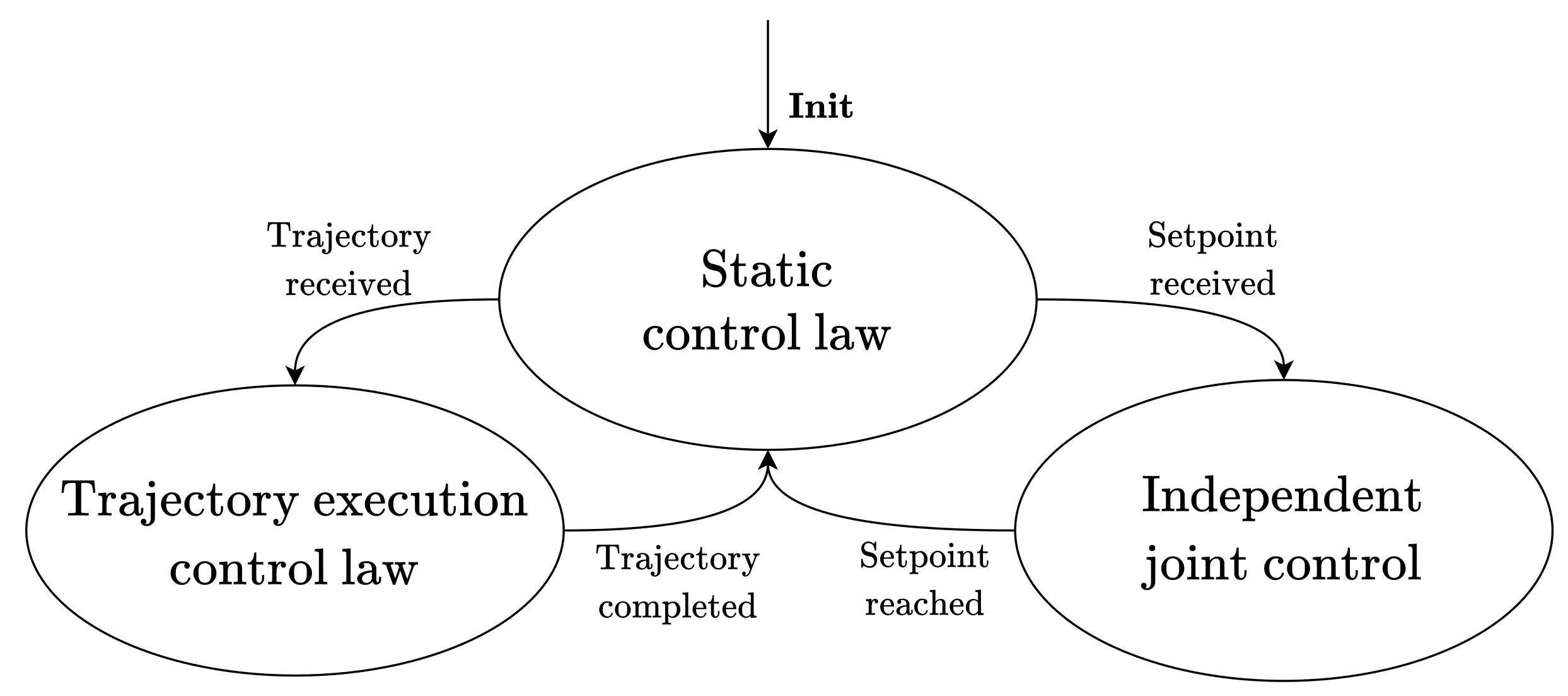

2.2.1. Finite State Machine Architecture

The FSM comprises three states:

- Static control law: the algorithm executes the interaction control law with fixed force and position references;

- Trajectory execution control law: the algorithm executes the interaction control law while tracking the trajectory received as input (tracking may be inaccurate along force-controlled directions because of the control action);

- Independent joint control: the interaction control law is not executed; instead, independent joint control is used to reach the desired position and orientation within an assigned tolerance.

Transitions between states are triggered by the following events:

- Trajectory received: a trajectory is received;

- Setpoint received: a setpoint is received;

- Trajectory completed: the execution of the trajectory is completed;

- Setpoint reached: the setpoint is reached.
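To make the interplay between the states and events listed above concrete, the following is a minimal Python sketch of an event-driven FSM. The transition table is an assumption for illustration: the lists name the states and events but not which event moves the machine out of which state. Starting in the static control law state is likewise assumed, and all class and enum names are hypothetical rather than taken from the authors' ROS implementation.

```python
from enum import Enum, auto


class State(Enum):
    STATIC_CONTROL_LAW = auto()         # control law with fixed force/position references
    TRAJECTORY_EXECUTION = auto()       # control law while tracking an input trajectory
    INDEPENDENT_JOINT_CONTROL = auto()  # interaction control law not executed


class Event(Enum):
    TRAJECTORY_RECEIVED = auto()
    SETPOINT_RECEIVED = auto()
    TRAJECTORY_COMPLETED = auto()
    SETPOINT_REACHED = auto()


# Hypothetical transition table: (current state, event) -> next state.
# Pairs that are not listed leave the state unchanged (the event is ignored).
TRANSITIONS = {
    (State.STATIC_CONTROL_LAW, Event.TRAJECTORY_RECEIVED): State.TRAJECTORY_EXECUTION,
    (State.STATIC_CONTROL_LAW, Event.SETPOINT_RECEIVED): State.INDEPENDENT_JOINT_CONTROL,
    (State.TRAJECTORY_EXECUTION, Event.TRAJECTORY_COMPLETED): State.STATIC_CONTROL_LAW,
    (State.INDEPENDENT_JOINT_CONTROL, Event.SETPOINT_REACHED): State.STATIC_CONTROL_LAW,
}


class InteractionFSM:
    """Minimal event-driven FSM; the initial state is an assumption."""

    def __init__(self) -> None:
        self.state = State.STATIC_CONTROL_LAW

    def handle(self, event: Event) -> State:
        # Look up the transition; keep the current state if none is defined.
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return self.state

    def interaction_control_active(self) -> bool:
        # The interaction control law runs in every state except independent joint control.
        return self.state is not State.INDEPENDENT_JOINT_CONTROL
```

In this sketch, an event with no entry for the current state (for example, a setpoint being reached while a trajectory is being executed) is silently ignored. A real controller might instead reject such an event or log a warning.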

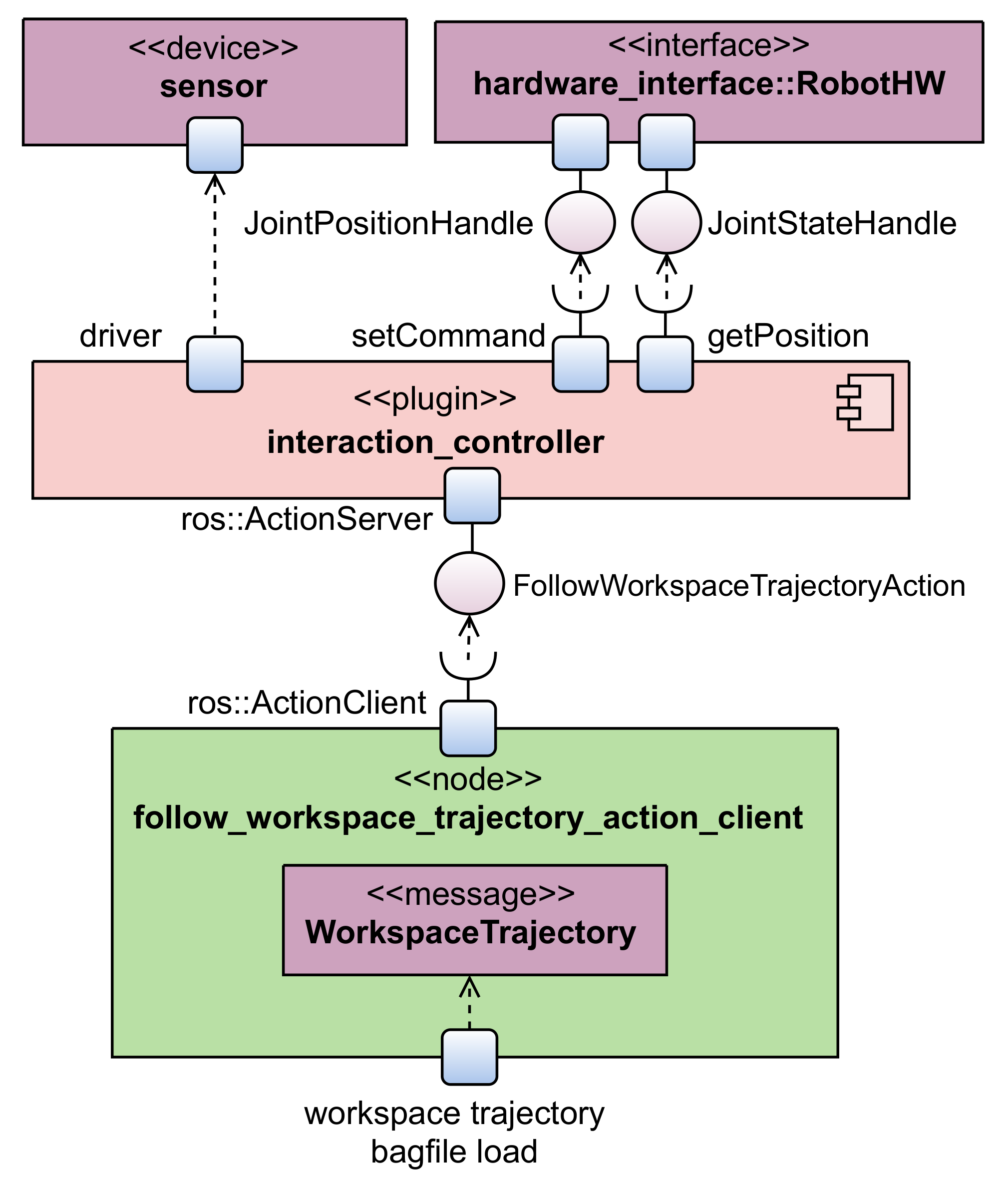

2.2.2. Action Communication Interface

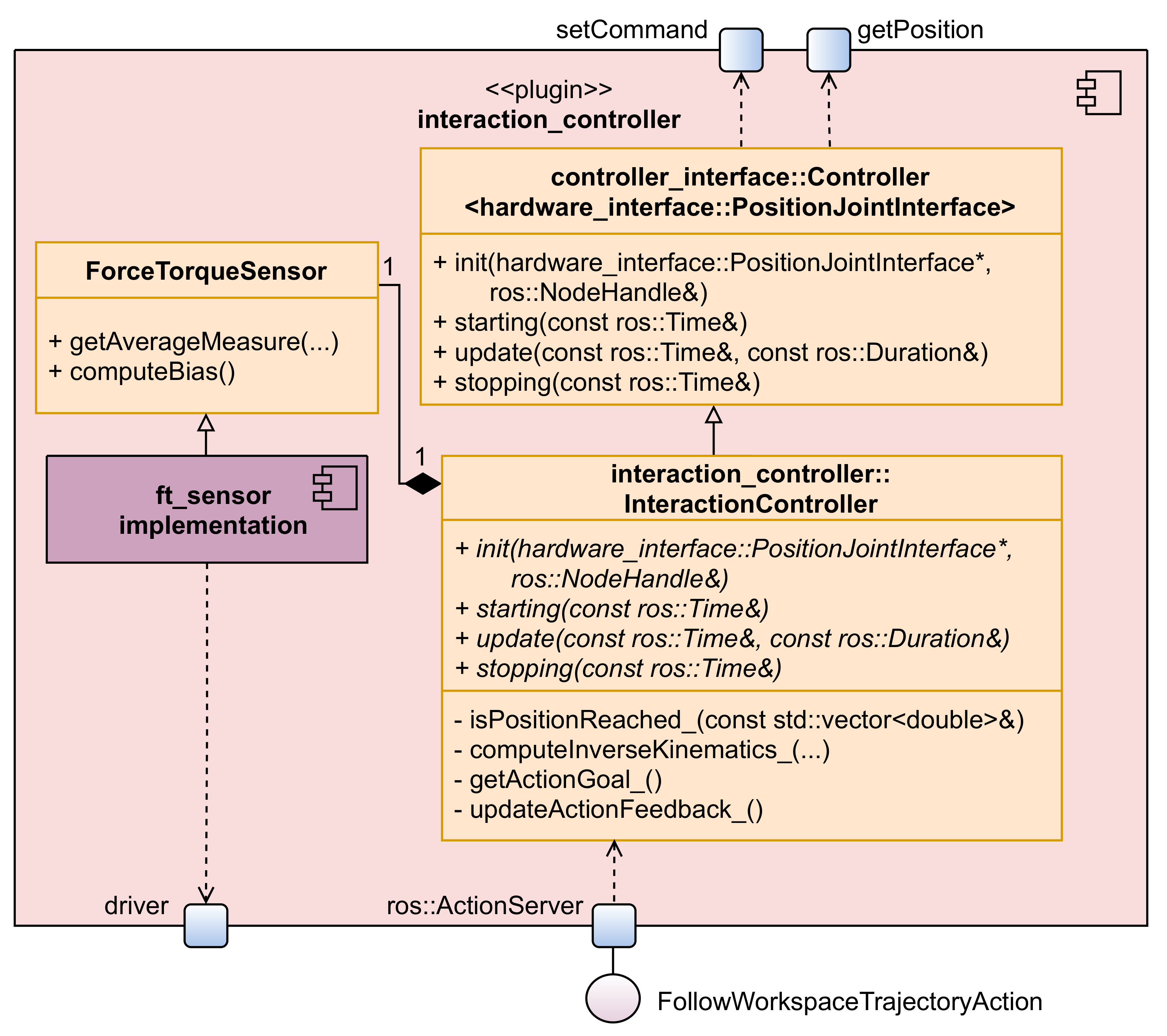

2.2.3. Implementation Details

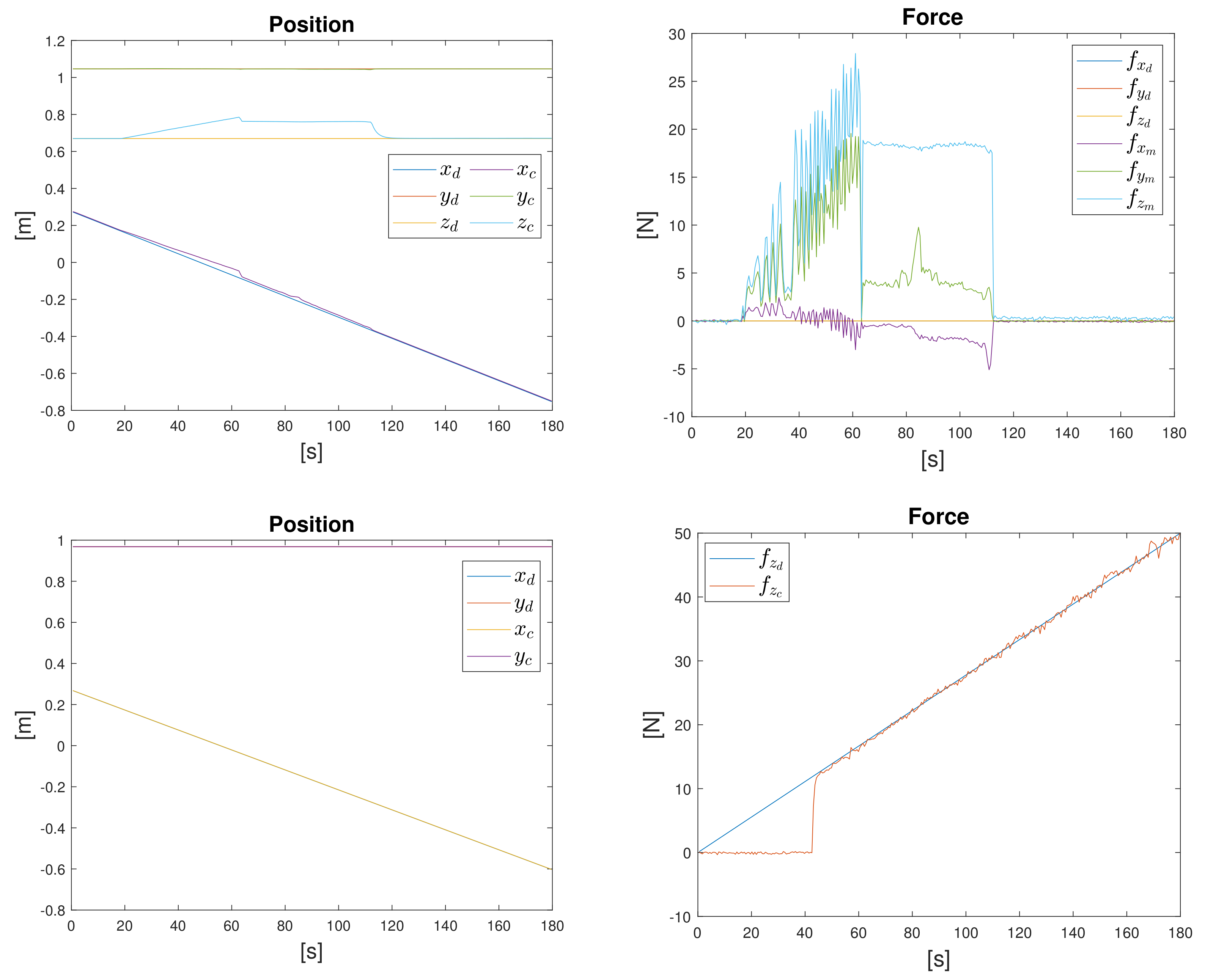

3. Results

3.1. Trajectory Execution

3.2. Human–Robot Interaction

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ROS | robot operating system |
| FSM | finite state machine |
| URDF | unified robot description format |

References

- Siciliano, B.; Khatib, O. Springer Handbook of Robotics; Springer International Publishing: Berlin/Heidelberg, Germany, 2016.

- Siciliano, B.; Sciavicco, L.; Villani, L.; Oriolo, G. Robotics: Modelling, Planning and Control; Springer: London, UK, 2009.

- Vladareanu, L.; Lile, R.; Radulescu, M.; Mitroi, D.; Marin, D.; Ciocirlan, A.; Boscoianu, E.C.; Boscoianu, M. Intelligent control interfaces developed on Versatile Portable Intelligent Platform in order to improving autonomous navigation robots performances. Period. Eng. Nat. Sci. 2019, 7, 324–329.

- Basile, F.; Caccavale, F.; Chiacchio, P.; Coppola, J.; Marino, A.; Gerbasio, D. Automated synthesis of hybrid Petri net models for robotic cells in the aircraft industry. Control Eng. Pract. 2014, 31, 35–49.

- Basile, F.; Caccavale, F.; Chiacchio, P.; Coppola, J.; Curatella, C. Task-oriented motion planning for multi-arm robotic systems. Robot. Comput.-Integr. Manuf. 2012, 28, 569–582.

- ROS. 2008. Available online: http://www.ros.org/ (accessed on 27 February 2022).

- Tavares, P.; Silva, J.; Costa, P.; Veiga, G.; Moreira, A. Flexible Work Cell Simulator Using Digital Twin Methodology for Highly Complex Systems in Industry 4.0. In Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2018; pp. 541–552.

- ROS-Industrial. Available online: https://rosindustrial.org/ (accessed on 27 February 2022).

- Volpe, R.; Nesnas, I.; Estlin, T.; Mutz, D.; Petras, R.; Das, H. The CLARAty architecture for robotic autonomy. In Proceedings of the 2001 IEEE Aerospace Conference Proceedings (Cat. No. 01TH8542), Big Sky, MT, USA, 10–17 March 2001; Volume 1, p. 1.

- OROCOS. Available online: https://orocos.org/ (accessed on 27 February 2022).

- YARP. Available online: https://www.yarp.it/latest/ (accessed on 27 February 2022).

- Lippiello, V.; Villani, L.; Siciliano, B. An open architecture for sensory feedback control of a dual-arm industrial robotic cell. Ind. Robot. Int. J. 2007, 34, 46–53.

- Michieletto, S.; Tosello, E.; Romanelli, F.; Ferrara, V.; Menegatti, E. ROS-I Interface for COMAU Robots. In Proceedings of the International Conference on Simulation, Modeling, and Programming for Autonomous Robots, Bergamo, Italy, 20–23 October 2014.

- Martinez, C.; Barrero, N.; Hernandez, W.; Montaño, C.; Mondragón, I. Setup of the Yaskawa SDA10F Robot for Industrial Applications, Using ROS-Industrial. In Advances in Automation and Robotics Research in Latin America; Chang, I., Baca, J., Moreno, H.A., Carrera, I.G., Cardona, M.N., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2017; pp. 186–203.

- Gašpar, T.; Ridge, B.; Bevec, R.; Bem, M.; Kovač, I.; Ude, A.; Gosar, Ž. Rapid hardware and software reconfiguration in a robotic workcell. In Proceedings of the 18th International Conference on Advanced Robotics (ICAR), Hong Kong, China, 10–12 July 2017; pp. 229–236.

- Gašpar, T.; Deniša, M.; Radanovič, P.; Ridge, B.; Rajeeth Savarimuthu, T.; Kramberger, A.; Priggemeyer, M.; Roßmann, J.; Wörgötter, F.; Ivanovska, T.; et al. Smart hardware integration with advanced robot programming technologies for efficient reconfiguration of robot workcells. Robot. Comput.-Integr. Manuf. 2020, 66, 101979.

- Rajapaksha, U.K.; Jayawardena, C.; MacDonald, B.A. ROS Based Heterogeneous Multiple Robots Control Using High Level User Instructions. In Proceedings of the TENCON 2021 - 2021 IEEE Region 10 Conference (TENCON), Auckland, New Zealand, 7–10 December 2021; pp. 163–168.

- La Mura, F.; Todeschini, G.; Giberti, H. High Performance Motion-Planner Architecture for Hardware-In-the-Loop System Based on Position-Based-Admittance-Control. Robotics 2018, 7, 8.

- Fernandez, S.R.; Olabi, A.; Gibaru, O. Multi-Surface Admittance Control Approach applied on Robotic Assembly of Large-Scale parts in Aerospace Manufacturing. In Proceedings of the 2019 19th International Conference on Advanced Robotics (ICAR), Belo Horizonte, Brazil, 2–6 December 2019; pp. 688–694.

- Xue, X.; Huang, H.; Zuo, L.; Wang, N. A Compliant Force Control Scheme for Industrial Robot Interactive Operation. Front. Neurorobot. 2022, 16.

- Scherzinger, S.; Roennau, A.; Dillmann, R. Forward Dynamics Compliance Control (FDCC): A new approach to cartesian compliance for robotic manipulators. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 4568–4575.

- Chitta, S.; Marder-Eppstein, E.; Meeussen, W.; Pradeep, V.; Rodríguez Tsouroukdissian, A.; Bohren, J.; Coleman, D.; Magyar, B.; Raiola, G.; Lüdtke, M.; et al. ros_control: A generic and simple control framework for ROS. J. Open Source Softw. 2017, 2, 456.

- Ferrein, A.; Schiffer, S.; Kallweit, S. The ROSIN Education Concept: Fostering ROS Industrial-Related Robotics Education in Europe. In Proceedings of the ROBOT’2017: Third Iberian Robotics Conference, Seville, Spain, 22–24 November 2017.

- ROS 2 Documentation. 2022. Available online: https://docs.ros.org/en/foxy/index.html (accessed on 27 February 2022).

- Mayoral-Vilches, V.; Pinzger, M.; Rass, S.; Dieber, B.; Gil-Uriarte, E. Can ROS be used securely in industry? Red teaming ROS-Industrial. arXiv 2020, arXiv:2009.08211.

- Gazebo. Available online: http://gazebosim.org/ (accessed on 27 February 2022).

- Roveda, L.; Testa, A.; Shahid, A.; Braghin, F.; Piga, D. Q-Learning-Based Model Predictive Variable Impedance Control for Physical Human-Robot Collaboration. Preprint Submitted to Elsevier. Available online: https://www.researchgate.net/publication/354569180_Q-Learning-Based_Model_Predictive_Variable_Impedance_Control_for_Physical_Human-Robot_Collaboration (accessed on 27 February 2022).

- Peng, G.; Chen, C.L.P.; Yang, C. Neural Networks Enhanced Optimal Admittance Control of Robot-Environment Interaction Using Reinforcement Learning. IEEE Trans. Neural Netw. Learn. Syst. 2021, 1–11.

- Lee, K.; Buss, M. Force Tracking Impedance Control with Variable Target Stiffness. IFAC Proc. Vol. 2008, 41, 6751–6756.

- Li, C.; Zhang, Z.; Xia, G.; Xie, X.; Zhu, Q. Efficient Force Control Learning System for Industrial Robots Based on Variable Impedance Control. Sensors 2018, 18, 2539.

- Duan, J.; Gan, Y.; Chen, M.; Dai, X. Adaptive variable impedance control for dynamic contact force tracking in uncertain environment. Robot. Auton. Syst. 2018, 102, 54–65.

- De Schutter, J.; Van Brussel, H. Compliant Robot Motion II. A Control Approach Based on External Control Loops. Int. J. Robot. Res. 1988, 7, 18–33.

- Pluginlib. Available online: http://wiki.ros.org/pluginlib (accessed on 27 February 2022).

- Actionlib. Available online: http://wiki.ros.org/actionlib (accessed on 27 February 2022).

- Controller_manager. Available online: http://wiki.ros.org/controller_manager (accessed on 27 February 2022).

- Ferrentino, E.; Salvioli, F.; Chiacchio, P. Globally Optimal Redundancy Resolution with Dynamic Programming for Robot Planning: A ROS Implementation. Robotics 2021, 10, 42.

- Rosbag. Available online: http://wiki.ros.org/rosbag (accessed on 27 February 2022).

- PositionJointInterface. Available online: http://docs.ros.org/melodic/api/hardware_interface/html/c++/classhardware__interface_1_1PositionJointInterface.html (accessed on 27 February 2022).

- JointHandle. Available online: http://docs.ros.org/en/jade/api/hardware_interface/html/c++/classhardware__interface_1_1JointHandle.html (accessed on 27 February 2022).

- Force_torque_sensor_controller. Available online: http://wiki.ros.org/force_torque_sensor_controller (accessed on 27 February 2022).

- Parameter Server. Available online: http://wiki.ros.org/ParameterServer (accessed on 27 February 2022).

- Niu, J.; Wang, H.; Shi, H.; Pop, N.; Li, D.; Li, S.; Wu, S. Study on structural modeling and kinematics analysis of a novel wheel-legged rescue robot. Int. J. Adv. Robot. Syst. 2018, 15, 1729881417752758.

- Storiale, F.; Ferrentino, E. Robot-Agnostic Interaction Controllers Based on ROS. GitHub/Zenodo. Available online: https://zenodo.org/record/6306080#.Yhzz1OjMK3C (accessed on 27 February 2022).

- F/T Sensor Gamma—ATI Industrial Automation. Available online: https://www.ati-ia.com/products/ft/ft_models.aspx?id=gamma (accessed on 27 February 2022).

- FTN-Mini-58 SI-700-30—Schunk. Available online: https://schunk.com/tw_en/gripping-systems/product/39921-ftn-mini-58-si-700-30/ (accessed on 27 February 2022).

- Gazebo Ros Force/Torque Sensor Plugin. Available online: http://docs.ros.org/en/jade/api/gazebo_plugins/html/group__GazeboRosFTSensor.html (accessed on 27 February 2022).

- Comedi. Available online: https://www.comedi.org/ (accessed on 27 February 2022).

- Roveda, L.; Vicentini, F.; Pedrocchi, N.; Braghin, F.; Tosatti, L.M. Impedance shaping controller for robotic applications in interaction with compliant environments. In Proceedings of the 2014 11th International Conference on Informatics in Control, Automation and Robotics (ICINCO), Vienna, Austria, 1–3 September 2014; Volume 2, pp. 444–450.

- Beltran-Hernandez, C.C.; Petit, D.; Ramirez-Alpizar, I.G.; Nishi, T.; Kikuchi, S.; Matsubara, T.; Harada, K. Learning Force Control for Contact-Rich Manipulation Tasks With Rigid Position-Controlled Robots. IEEE Robot. Autom. Lett. 2020, 5, 5709–5716.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Storiale, F.; Ferrentino, E.; Chiacchio, P. Robot-Agnostic Interaction Controllers Based on ROS. Appl. Sci. 2022, 12, 3949. https://doi.org/10.3390/app12083949
