COME-UP: Computation Offloading in Mobile Edge Computing with LSTM Based User Direction Prediction

Abstract

1. Introduction

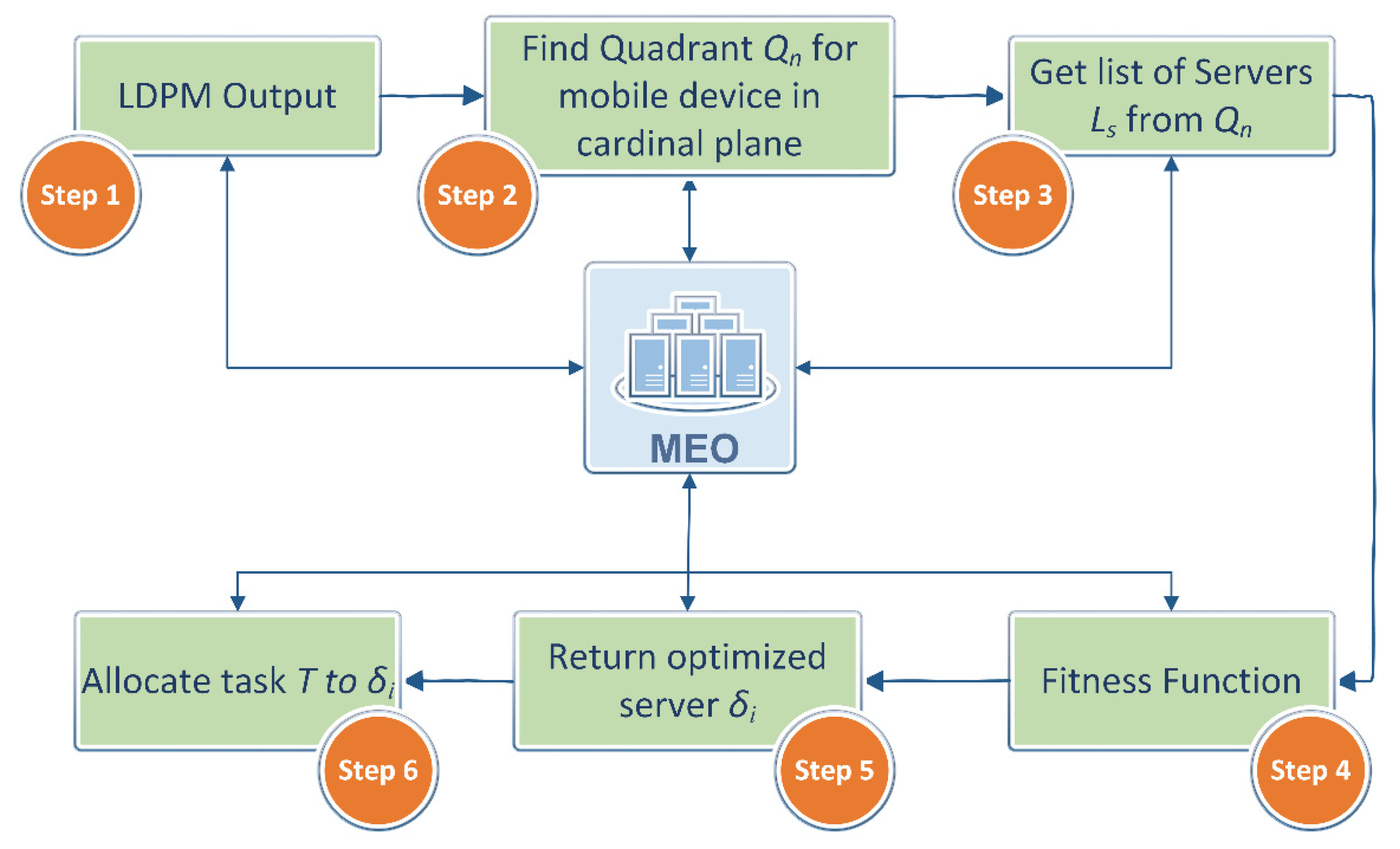

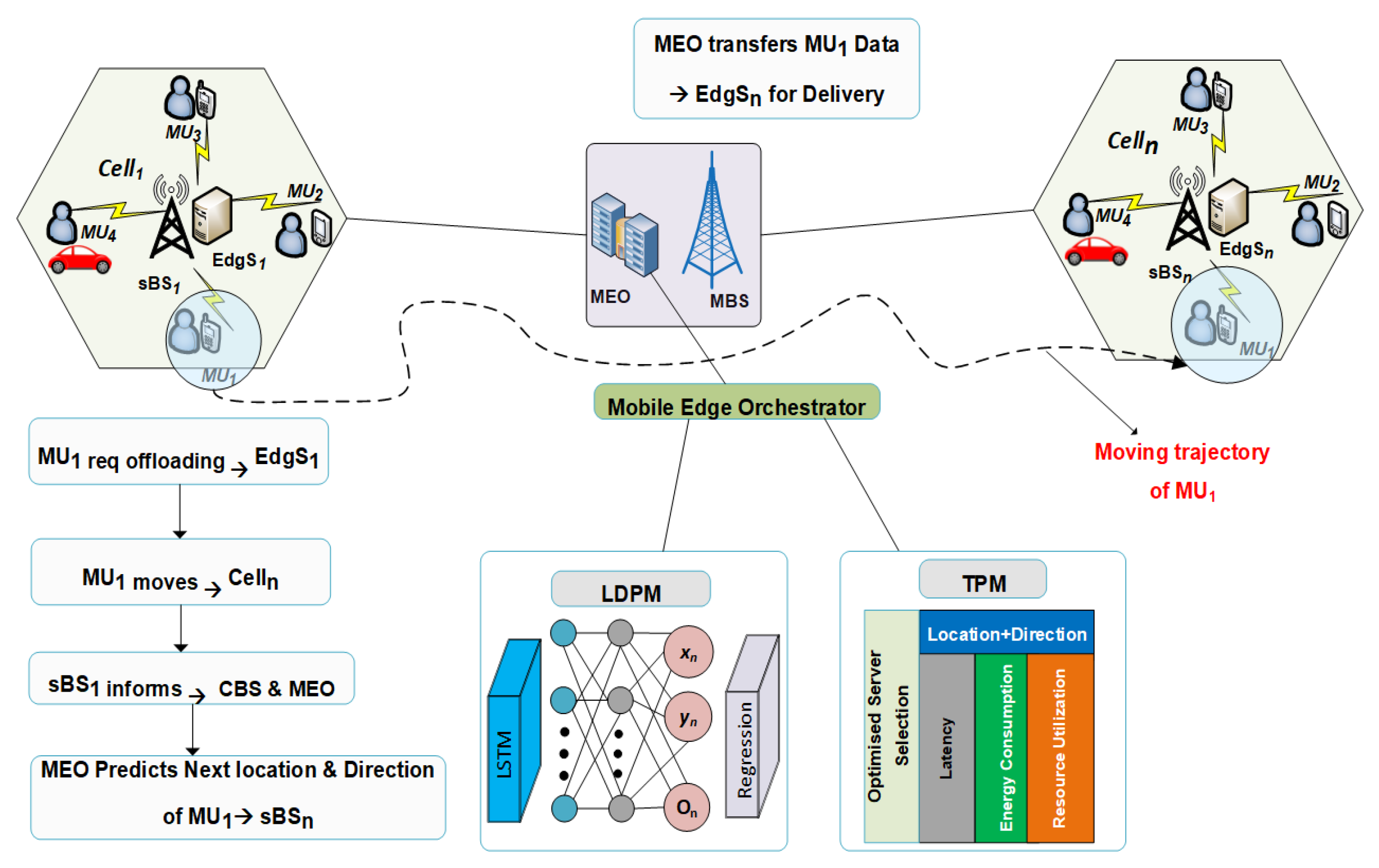

- We propose COME-UP, a novel task offloading framework for MEC composed of two sub-models. The first, a location and direction prediction model, combines time series analysis with an LSTM network;

- The task placement model uses a fitness function based on the weighted sum method to select the most suitable edge server;

- We formulate a fitness function that selects the optimal server by assigning different priority weights to latency, energy, and server load;

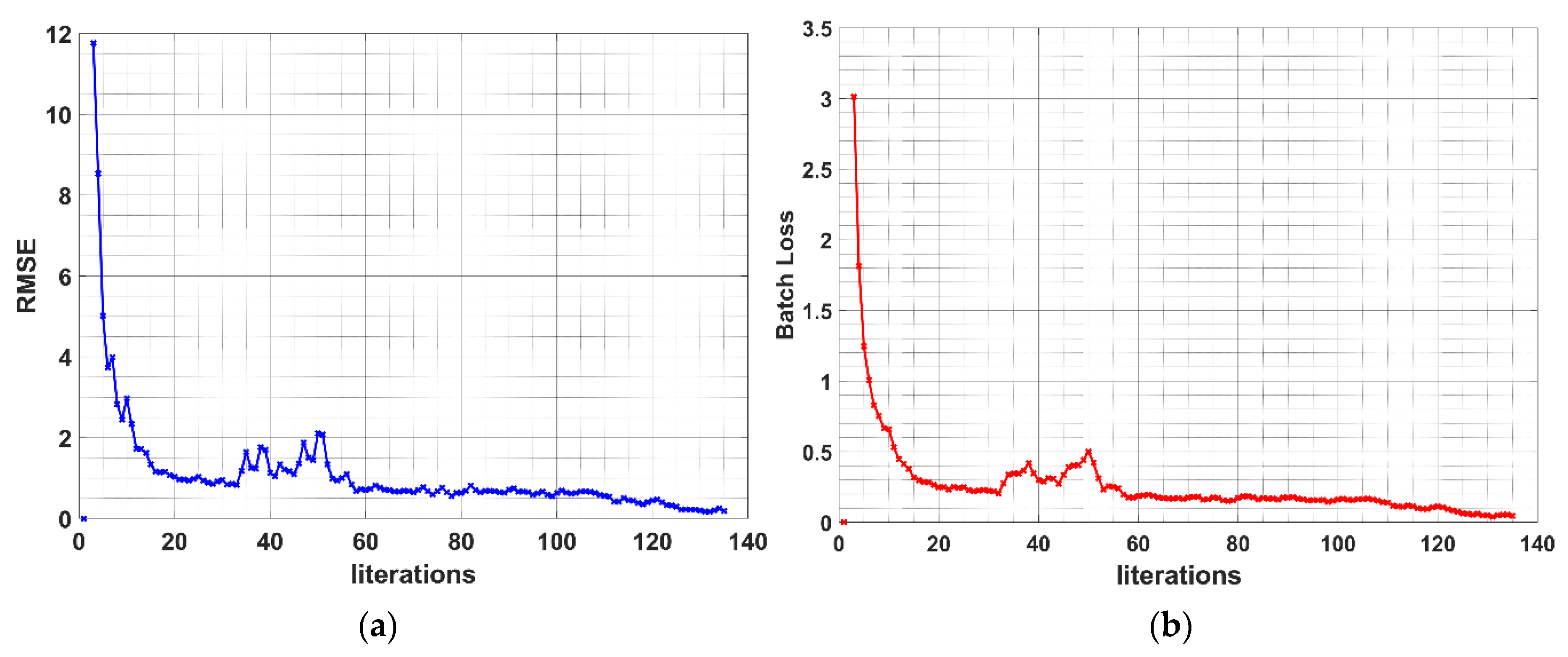

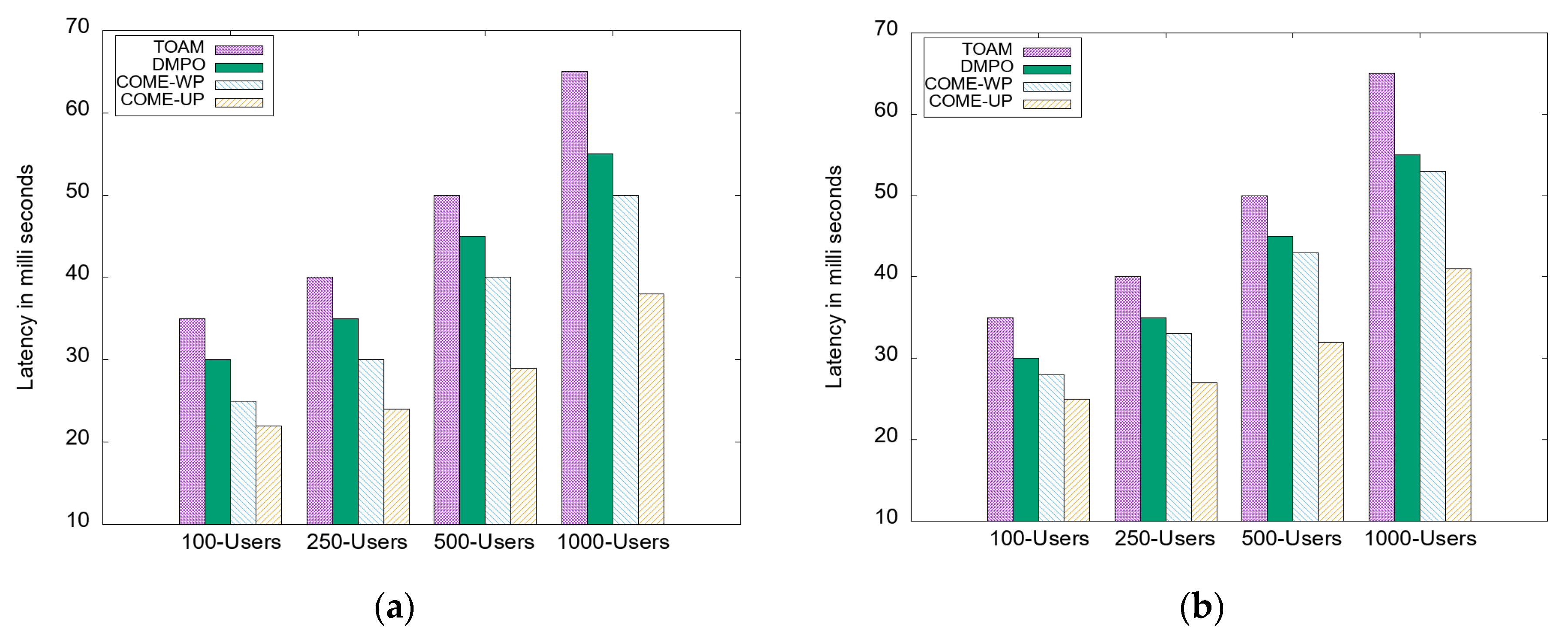

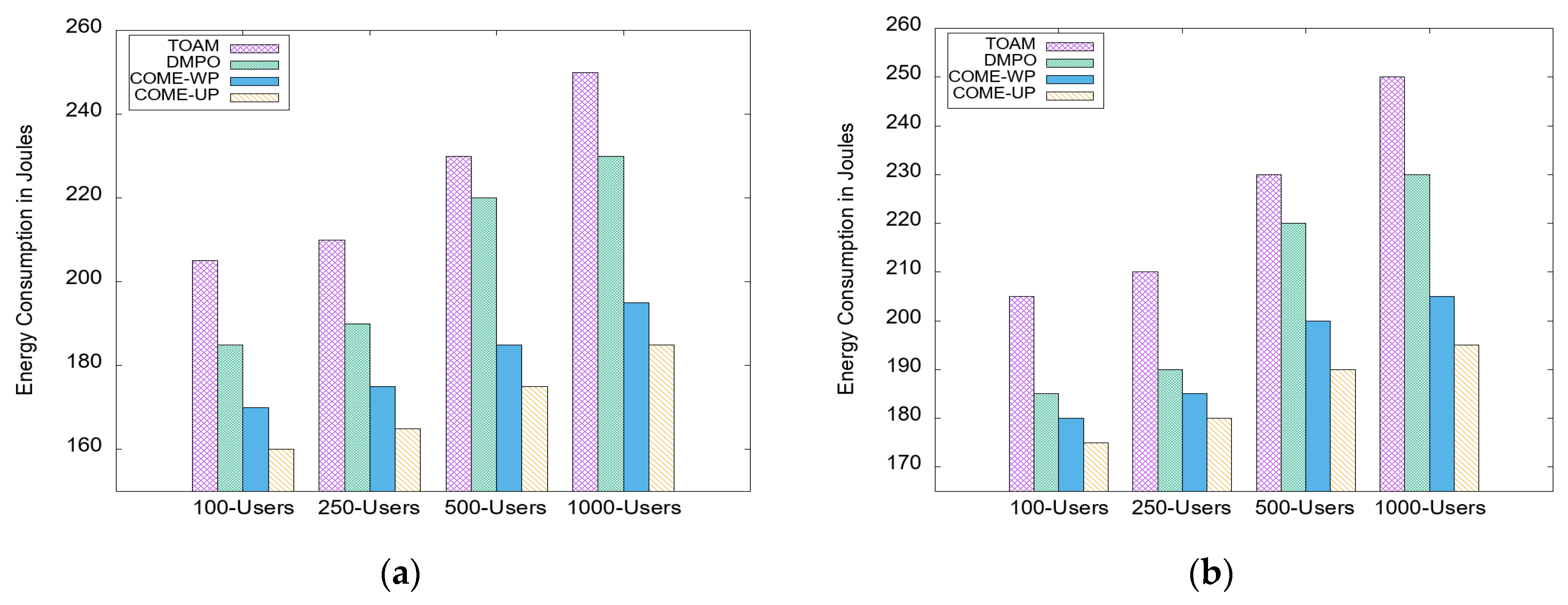

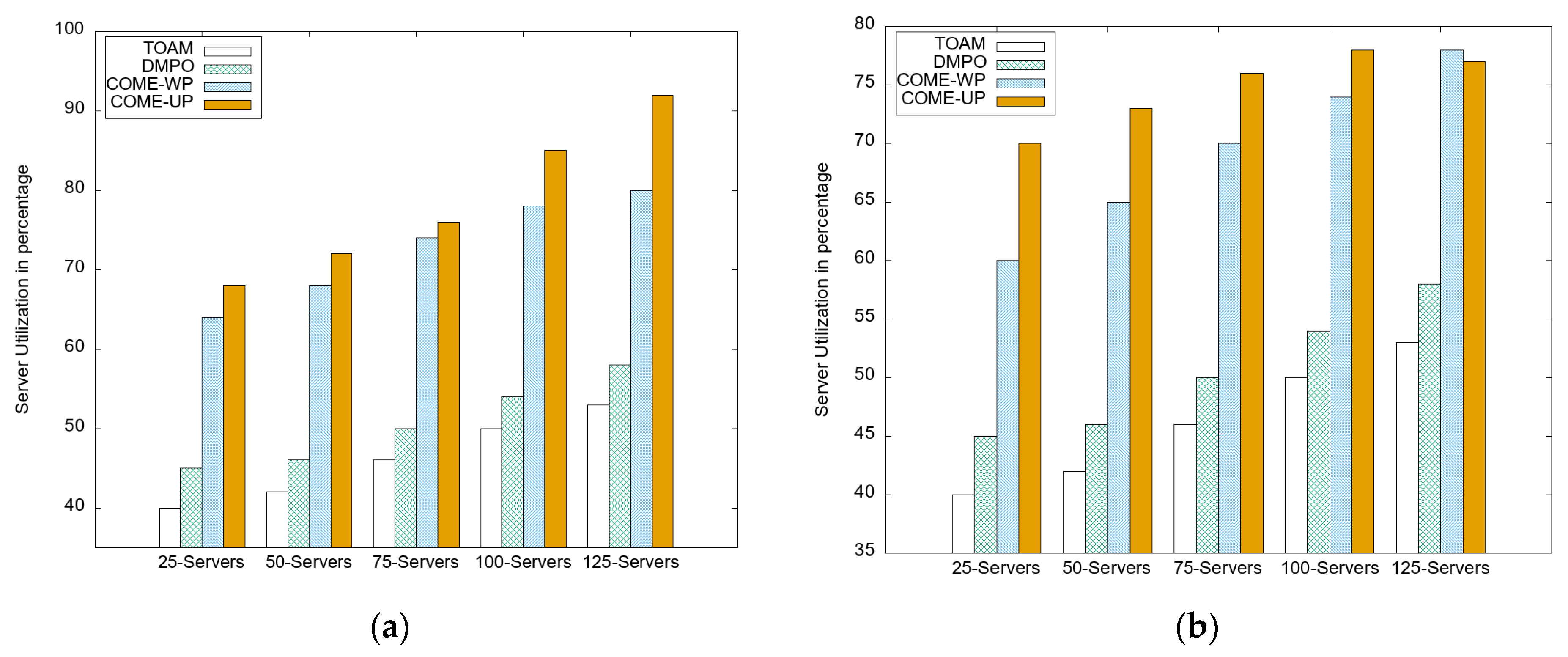

- Performance comparisons in MobFogSim show that COME-UP outperforms the compared schemes DMPO and TAOM, reducing latency by 32%, saving 16% energy, and increasing resource (CPU) utilization by 9%. The LSTM model achieves a root mean square error of 0.5.
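
The contributions above describe feeding a user's location history, as a time series, to an LSTM and report its accuracy as a root mean square error. The sketch below shows only the surrounding plumbing, under stated assumptions: positions are planar (x, y) coordinates, the model consumes fixed-length windows of past positions, and RMSE is taken over the Euclidean distance between predicted and actual positions. A naive constant-velocity extrapolation stands in for the trained LSTM; the window length, the helper names (`make_windows`, `heading_deg`, and so on), and the heading convention are hypothetical, not taken from the paper.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # planar (x, y) position, e.g. in metres (assumption)


def make_windows(trace: Sequence[Point], window: int) -> List[Tuple[List[Point], Point]]:
    """Split a position trace into (history, next_position) pairs.

    Each history holds `window` consecutive positions and the target is the
    position that immediately follows, the usual one-step-ahead setup.
    """
    if window < 2:
        raise ValueError("window must hold at least two positions")
    return [(list(trace[i:i + window]), trace[i + window]) for i in range(len(trace) - window)]


def constant_velocity_predict(history: Sequence[Point]) -> Point:
    """Stand-in for the LSTM (not the paper's model): repeat the last displacement."""
    (x1, y1), (x2, y2) = history[-2], history[-1]
    return (2 * x2 - x1, 2 * y2 - y1)


def heading_deg(start: Point, end: Point) -> float:
    """Direction of travel in degrees, counter-clockwise from the +x axis, in [0, 360)."""
    return math.degrees(math.atan2(end[1] - start[1], end[0] - start[0])) % 360.0


def rmse(predicted: Sequence[Point], actual: Sequence[Point]) -> float:
    """Root mean square of the Euclidean errors between paired points."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    squared = [(px - ax) ** 2 + (py - ay) ** 2 for (px, py), (ax, ay) in zip(predicted, actual)]
    return math.sqrt(sum(squared) / len(squared))


if __name__ == "__main__":
    trace = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 1.0), (4.0, 1.0)]
    pairs = make_windows(trace, window=3)
    predictions = [constant_velocity_predict(history) for history, _ in pairs]
    targets = [target for _, target in pairs]
    print(predictions)  # [(3.0, 0.0), (4.0, 2.0)]
    print(rmse(predictions, targets))  # 1.0
    print(heading_deg(trace[-2], predictions[-1]))  # about 45 degrees
```

Swapping `constant_velocity_predict` for a trained sequence model leaves the windowing and evaluation unchanged, which is why they are kept separate here.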

2. Related Work

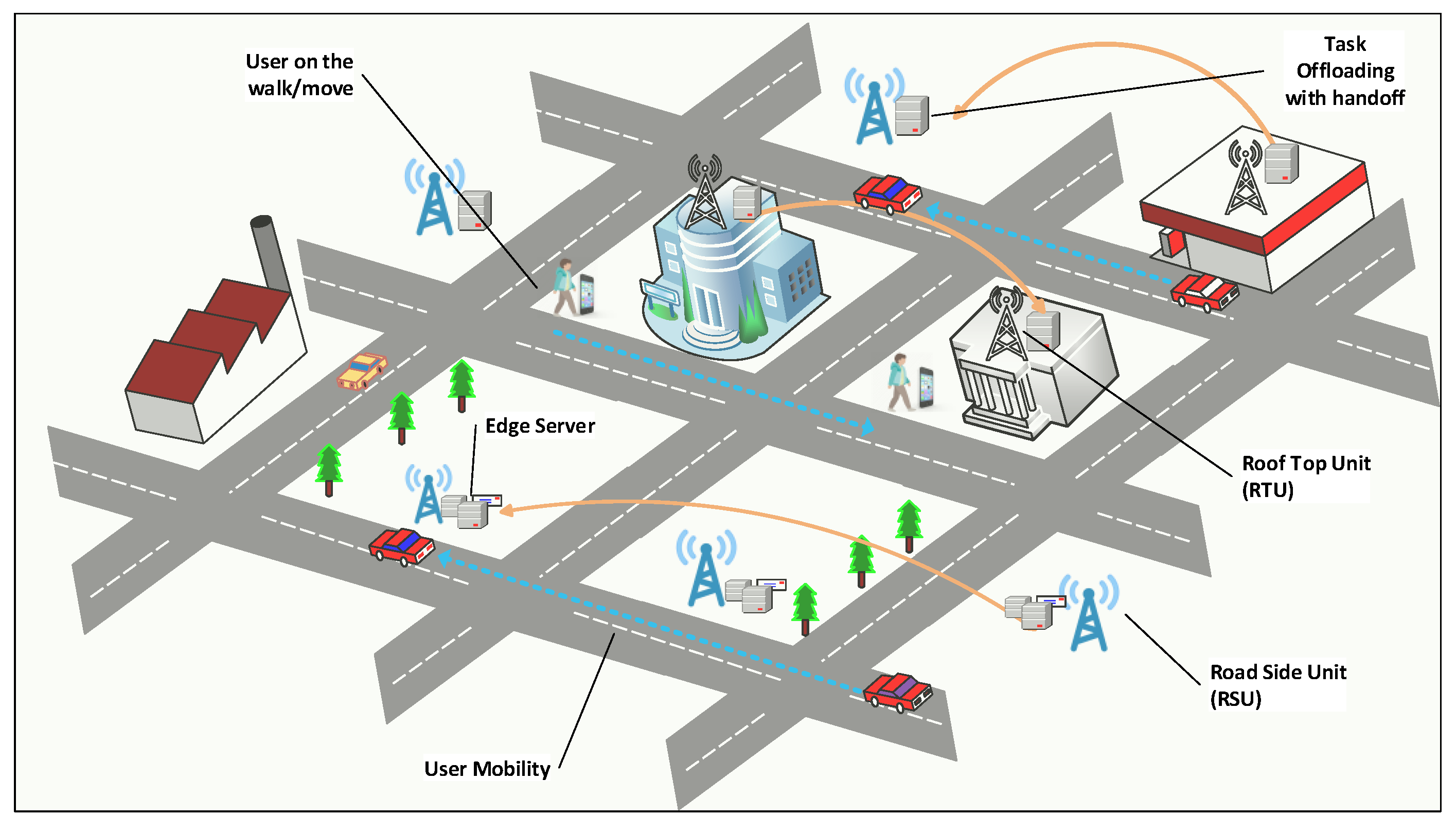

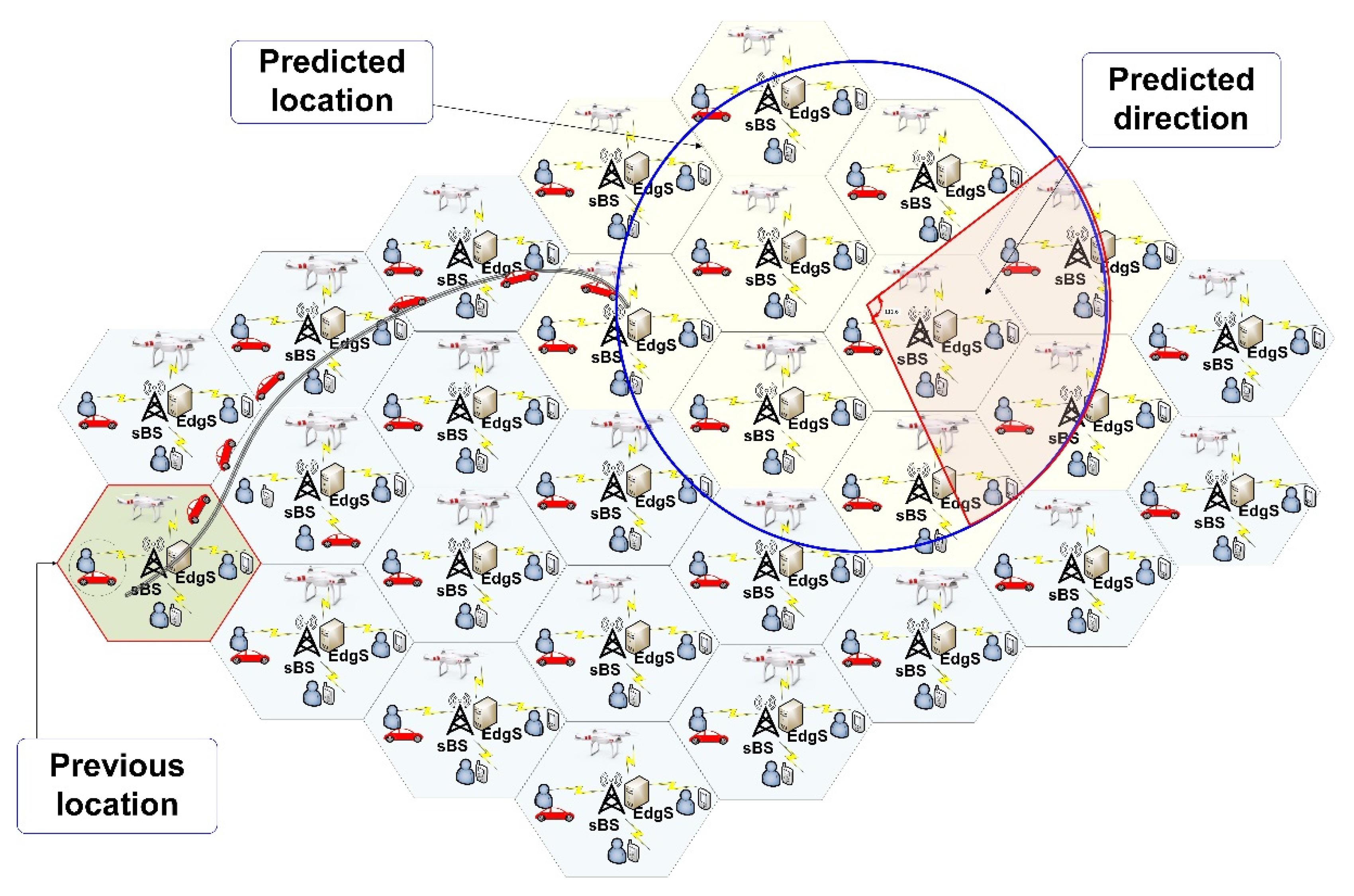

3. System Overview

3.1. Computation Model
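
The notation table defines the cycles Ci required by task ti, the computing capacity Cs of the edge server, the local computation time Ei, and the mobile device transmission time Ri. A minimal sketch of how such quantities are commonly combined, assuming execution time equals cycles divided by clock frequency, transmission time equals input size divided by an uplink rate, and an offloaded task first waits for the tasks already mapped to the server. The paper's exact equations may differ; the uplink rate, the task size, and the queueing delay in the example are hypothetical, while the 3 GHz clock and the 1–30 MB input range come from the simulation parameters.

```python
def execution_time_s(cycles: float, frequency_hz: float) -> float:
    """Time to run `cycles` CPU cycles at `frequency_hz` (Ci / Cs on the server)."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return cycles / frequency_hz


def transmission_time_s(data_bytes: float, uplink_bps: float) -> float:
    """Time to upload `data_bytes` over a link of `uplink_bps` bits per second (assumed Ri)."""
    if uplink_bps <= 0:
        raise ValueError("uplink rate must be positive")
    return data_bytes * 8 / uplink_bps


def offloading_latency_s(cycles: float, data_bytes: float, server_hz: float,
                         uplink_bps: float, queued_s: float = 0.0) -> float:
    """Assumed latency of offloading one task to edge server Sk.

    upload time + waiting for tasks already mapped to Sk + execution time.
    Downloading the result is ignored in this sketch.
    """
    return transmission_time_s(data_bytes, uplink_bps) + queued_s + execution_time_s(cycles, server_hz)


if __name__ == "__main__":
    ci = 1.5e9    # hypothetical task size in CPU cycles
    cs = 3e9      # 3 GHz server, as in the parameter table
    data = 10e6   # 10 MB input (decimal megabytes), inside the 1–30 MB range
    rate = 100e6  # hypothetical 100 Mbit/s uplink
    print(execution_time_s(ci, cs))                       # 0.5 s on the server
    print(offloading_latency_s(ci, data, cs, rate, 0.2))  # about 1.5 s in total
```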

3.2. Energy Model for MEC Servers
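
The notation table lists the resource utilization Us of a server and the power Pk it consumes. One widely used way to relate the two, found in data-centre studies such as Beloglazov et al. in the reference list, is a linear model in which power rises from an idle floor to a peak value with CPU utilization; energy is then power multiplied by busy time. The sketch below assumes that model with hypothetical idle and peak powers; it is not necessarily the energy model used in this paper.

```python
def server_power_w(utilization: float, idle_w: float, peak_w: float) -> float:
    """Linear power model: idle power plus a utilization-proportional share of the dynamic range."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must lie in [0, 1]")
    if peak_w < idle_w:
        raise ValueError("peak power must not be below idle power")
    return idle_w + (peak_w - idle_w) * utilization


def task_energy_j(utilization: float, busy_s: float, idle_w: float, peak_w: float) -> float:
    """Energy drawn while the server runs at `utilization` for `busy_s` seconds."""
    return server_power_w(utilization, idle_w, peak_w) * busy_s


if __name__ == "__main__":
    # Hypothetical server drawing 100 W when idle and 250 W at full load.
    print(server_power_w(0.6, idle_w=100.0, peak_w=250.0))             # about 190 W
    print(task_energy_j(0.6, busy_s=2.0, idle_w=100.0, peak_w=250.0))  # about 380 J
```

Because idle power is paid regardless of load, this model favours consolidating tasks onto fewer, busier servers, which is one reason energy and server load appear as separate terms in a placement objective.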

4. COME-UP: Computation Offloading in Mobile Edge Computing with User Direction Prediction

4.1. Location and Direction Prediction Model (LDMP)

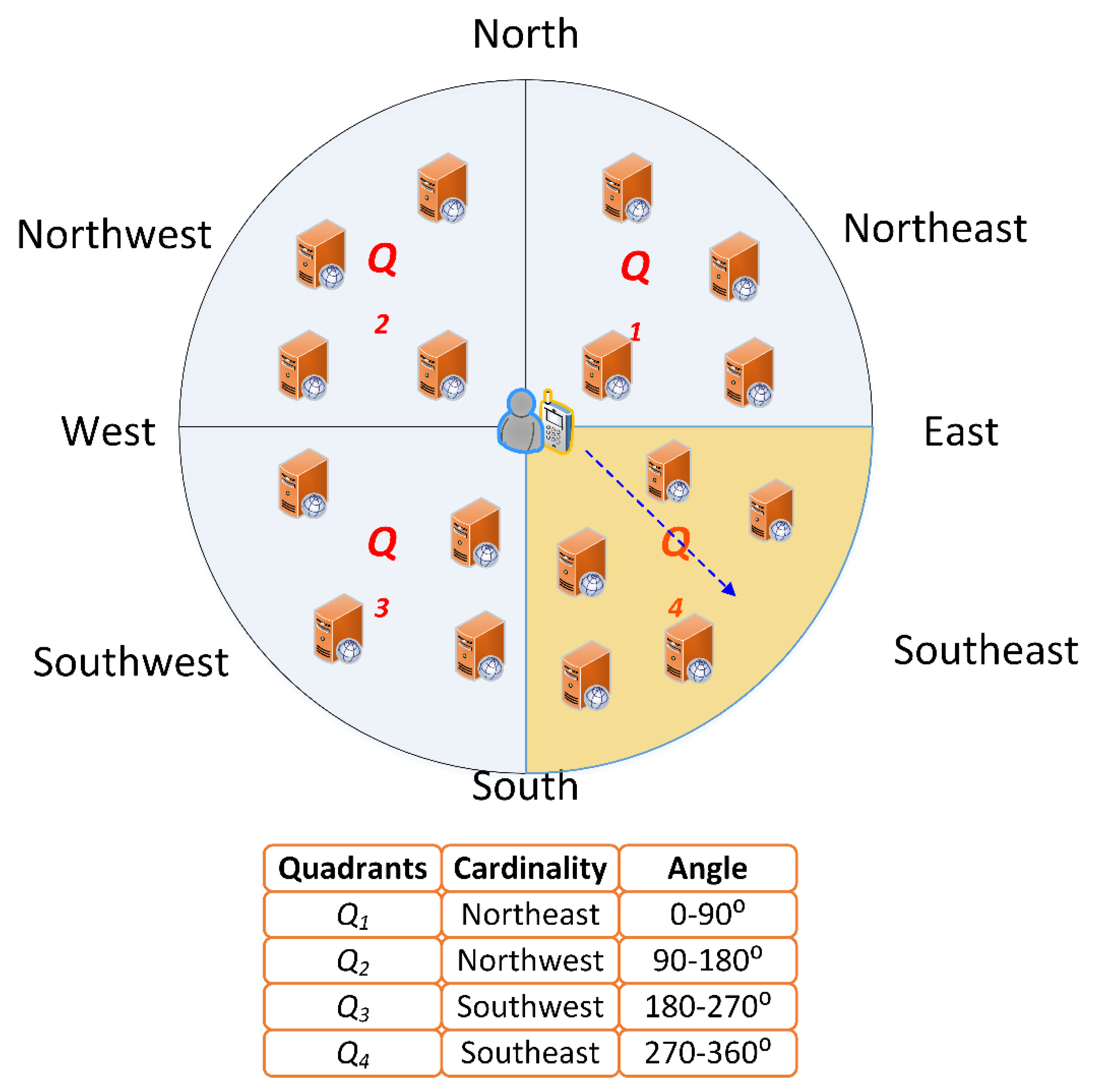
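
The location and direction prediction model builds on an LSTM network. For readers unfamiliar with the architecture, the sketch below implements the forward pass of a standard LSTM cell (input, forget, and output gates plus a tanh candidate) in plain Python and runs it over a short sequence of position features. The weights are random or zero placeholders rather than trained values; the hidden size, the helper names, and the input encoding are hypothetical, and the paper's network, training procedure, and output layer are not reproduced.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


@dataclass
class Gate:
    """Parameters of one gate; its pre-activation is W x + U h + b."""
    W: Matrix  # hidden_size rows, input_size columns
    U: Matrix  # hidden_size rows, hidden_size columns
    b: Vector  # hidden_size entries

    def preactivation(self, x: Sequence[float], h: Sequence[float]) -> Vector:
        return [
            sum(w * xi for w, xi in zip(w_row, x)) + sum(u * hi for u, hi in zip(u_row, h)) + bias
            for w_row, u_row, bias in zip(self.W, self.U, self.b)
        ]


def make_gate(input_size: int, hidden_size: int, rng: random.Random, scale: float) -> Gate:
    """Uniform random weights in [-scale, scale] and zero bias (scale=0 gives all zeros)."""
    return Gate(
        W=[[rng.uniform(-scale, scale) for _ in range(input_size)] for _ in range(hidden_size)],
        U=[[rng.uniform(-scale, scale) for _ in range(hidden_size)] for _ in range(hidden_size)],
        b=[0.0] * hidden_size,
    )


@dataclass
class LSTMCell:
    input_gate: Gate
    forget_gate: Gate
    output_gate: Gate
    candidate: Gate

    @classmethod
    def create(cls, input_size: int, hidden_size: int, seed: int = 0, scale: float = 0.5) -> "LSTMCell":
        rng = random.Random(seed)
        return cls(*(make_gate(input_size, hidden_size, rng, scale) for _ in range(4)))

    def step(self, x: Sequence[float], h: Vector, c: Vector) -> Tuple[Vector, Vector]:
        """One time step; returns the new hidden state h and cell state c."""
        i = [sigmoid(z) for z in self.input_gate.preactivation(x, h)]
        f = [sigmoid(z) for z in self.forget_gate.preactivation(x, h)]
        o = [sigmoid(z) for z in self.output_gate.preactivation(x, h)]
        g = [math.tanh(z) for z in self.candidate.preactivation(x, h)]
        c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
        return h_new, c_new


def encode_sequence(cell: LSTMCell, xs: Sequence[Sequence[float]], hidden_size: int) -> Tuple[Vector, Vector]:
    """Run the cell over a sequence from zero states and return the final (h, c)."""
    h, c = [0.0] * hidden_size, [0.0] * hidden_size
    for x in xs:
        h, c = cell.step(x, h, c)
    return h, c


if __name__ == "__main__":
    # Hypothetical input: normalized (x, y) positions from a short trace.
    trace = [[0.0, 0.0], [0.1, 0.0], [0.2, 0.05], [0.3, 0.1]]
    cell = LSTMCell.create(input_size=2, hidden_size=4, seed=42)
    h, _ = encode_sequence(cell, trace, hidden_size=4)
    print(h)  # a trained output layer would map this state to the next position
```

The forget gate lets the cell state carry information across many steps, which is what makes LSTMs a common choice for movement traces where a user's recent heading persists.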

4.2. Task Placement Model

4.3. Fitness Function
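
The contributions describe the fitness function as a weighted sum of latency, energy, and server load, each with its own priority weight. A minimal sketch of the weighted sum method applied to server selection, assuming each criterion is min-max normalized across the candidate servers so the weights are comparable and that a lower score is better. The field names, metric values, and weights are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass
class ServerMetrics:
    """Predicted cost of placing one task on a candidate edge server (hypothetical fields)."""
    name: str
    latency_ms: float  # expected offloading latency
    energy_j: float    # expected energy for executing the task
    load: float        # current utilization in [0, 1]


def min_max_normalize(values: Sequence[float]) -> List[float]:
    """Rescale values to [0, 1]; a criterion on which all servers tie contributes 0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def fitness_scores(servers: Sequence[ServerMetrics], w_latency: float,
                   w_energy: float, w_load: float) -> Dict[str, float]:
    """Weighted sum of normalized latency, energy and load per server (lower is better)."""
    total = w_latency + w_energy + w_load
    if min(w_latency, w_energy, w_load) < 0 or total <= 0:
        raise ValueError("weights must be non-negative and not all zero")
    lat = min_max_normalize([s.latency_ms for s in servers])
    eng = min_max_normalize([s.energy_j for s in servers])
    ld = min_max_normalize([s.load for s in servers])
    return {
        s.name: (w_latency * a + w_energy * b + w_load * c) / total
        for s, a, b, c in zip(servers, lat, eng, ld)
    }


def select_server(servers: Sequence[ServerMetrics], w_latency: float,
                  w_energy: float, w_load: float) -> str:
    """Name of the server with the lowest weighted-sum score (the first one wins ties)."""
    scores = fitness_scores(servers, w_latency, w_energy, w_load)
    return min(scores, key=scores.get)


if __name__ == "__main__":
    candidates = [
        ServerMetrics("S1", latency_ms=20.0, energy_j=5.0, load=0.9),
        ServerMetrics("S2", latency_ms=30.0, energy_j=4.0, load=0.3),
        ServerMetrics("S3", latency_ms=40.0, energy_j=6.0, load=0.5),
    ]
    print(select_server(candidates, 0.5, 0.3, 0.2))  # S2
    print(select_server(candidates, 1.0, 0.0, 0.0))  # S1 (latency only)
```

Dividing by the weight total keeps scores in [0, 1] even when the weights do not sum to one. Min-max normalization is only one option; it makes each score depend on which servers are in the candidate set, so changing the priority weights can change the winner, as the two calls above show.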

5. Experimental Evaluations

5.1. Experimental Setup

5.2. Results

5.3. Discussion

6. Conclusions

7. Future Research Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References

- Zhao, Y.; Xu, K.; Wang, H.; Li, B.; Qiao, M.; Shi, H. MEC-Enabled Hierarchical Emotion Recognition and Perturbation-Aware Defense in Smart Cities. IEEE Internet Things J. 2021, 8, 16933–16945.

- Wang, J.; Lv, T.; Huang, P.; Mathiopoulos, P.T. Mobility-aware partial computation offloading in vehicular networks: A deep reinforcement learning based scheme. China Commun. 2020, 17, 31–49.

- McClellan, M.; Cervelló Pastor, C.; Sallent, S. Deep Learning at the Mobile Edge: Opportunities for 5G Networks. Appl. Sci. 2020, 10, 4735.

- Huynh, L.N.T.; Pham, Q.V.; Pham, X.Q.; Nguyen, T.D.T.; Hossain, M.D.; Huh, E.N. Efficient Computation Offloading in Multi-Tier Multi-Access Edge Computing Systems: A Particle Swarm Optimization Approach. Appl. Sci. 2020, 10, 203.

- Safavat, S.; Sapavath, N.N.; Rawat, D.B. Recent advances in mobile edge computing and content caching. Digit. Commun. Netw. 2020, 6, 189–194.

- Mustafa, E.; Shuja, J.; Jehangiri, A.I.; Din, S.; Rehman, F.; Mustafa, S.; Maqsood, T.; Khan, A.N. Joint wireless power transfer and task offloading in mobile edge computing: A survey. Clust. Comput. 2021, 1–20.

- Wang, C.; Li, R.; Li, W.; Qiu, C.; Wang, X. SimEdgeIntel: A open-source simulation platform for resource management in edge intelligence. J. Syst. Arch. 2021, 115, 102016.

- Yu, F.; Chen, H.; Xu, J. DMPO: Dynamic mobility-aware partial offloading in mobile edge computing. Future Gener. Comput. Syst. 2018, 89, 722–735.

- Wang, Z.; Zhao, Z.; Min, G.; Huang, X.; Ni, Q.; Wang, R. User mobility aware task assignment for Mobile Edge Computing. Future Gener. Comput. Syst. 2018, 85, 1–8.

- Puliafito, C.; Gonçalves, D.M.; Lopes, M.M.; Martins, L.L.; Madeira, E.; Mingozzi, E.; Rana, O.; Bittencourt, L.F. MobFogSim: Simulation of mobility and migration for fog computing. Simul. Model. Pract. Theory 2020, 101, 102062.

- Shakarami, A.; Ghobaei, A.M.; Shahidinejad, A. A survey on the computation offloading approaches in mobile edge computing: A machine learning-based perspective. Comput. Netw. 2020, 182, 107496.

- Zaman, S.K.U.; Jehangiri, A.I.; Maqsood, T.; Ahmad, Z.; Umar, A.I.; Shuja, J.; Alanazi, E.; Alasmary, W. Mobility-aware computational offloading in mobile edge networks: A survey. Clust. Comput. 2021, 24, 2735–2756.

- Tu, Y.; Chen, H.; Yan, L.; Zhou, X. Task Offloading Based on LSTM Prediction and Deep Reinforcement Learning for Efficient Edge Computing in IoT. Future Internet 2022, 14, 30.

- Moon, S.; Lim, Y. Task Migration with Partitioning for Load Balancing in Collaborative Edge Computing. Appl. Sci. 2022, 12, 1168.

- Chamola, V.; Tham, C.K.; Chalapathi, G.S.S. Latency aware mobile task assignment and load balancing for edge cloudlets. In Proceedings of the 2017 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Kona, HI, USA, 13–17 March 2017.

- Alam, G.R.; Tun, Y.K.; Hong, C.S. Multi-agent and reinforcement learning based code offloading in mobile fog. In Proceedings of the 2016 International Conference on Information Networking (ICOIN), Kota Kinabalu, Malaysia, 13–15 January 2016.

- Xia, X.; Zhou, Y.; Li, J.; Yu, R. Quality-Aware Sparse Data Collection in MEC-Enhanced Mobile Crowdsensing Systems. IEEE Trans. Comput. Soc. Syst. 2019, 6, 1051–1062.

- Deng, S.; Huang, L.; Taheri, J.; Zomaya, A.Y. Computation Offloading for Service Workflow in Mobile Cloud Computing. IEEE Trans. Parallel Distrib. Syst. 2015, 26, 3317–3329.

- Waqas, M.; Niu, Y.; Li, Y.; Ahmed, M.; Jin, D.; Chen, S.; Han, Z. A Comprehensive Survey on Mobility-Aware D2D Communications: Principles, Practice and Challenges. IEEE Commun. Surv. Tutor. 2019, 22, 1863–1886.

- Qi, Q.; Liao, J.; Wang, J.; Li, Q.; Cao, Y. Software Defined Resource Orchestration System for Multitask Application in Heterogeneous Mobile Cloud Computing. Mob. Inf. Syst. 2016, 2016, 2784548.

- Peng, Q.; Xia, Y.; Feng, Z.; Lee, J.; Wu, C.; Luo, X.; Zheng, W.; Liu, H.; Qin, Y.; Chen, P. Mobility-Aware and Migration-Enabled Online Edge User Allocation in Mobile Edge Computing. In Proceedings of the 2019 IEEE International Conference on Web Services (ICWS), Milan, Italy, 8–13 July 2019; pp. 91–98.

- Shi, Y.; Chen, S.; Xu, X. MAGA: A Mobility-Aware Computation Offloading Decision for Distributed Mobile Cloud Computing. IEEE Internet Things J. 2017, 5, 164–174.

- Yao, H.; Bai, C.; Xiong, M.; Zeng, D.; Fu, Z.J. Heterogeneous cloudlet deployment and user-cloudlet association toward cost effective fog computing. Concurr. Comput. Pract. Exp. 2017, 29, e3975.

- Zhan, W.; Luo, C.; Min, G.; Wang, C.; Zhu, Q.; Duan, H. Mobility-Aware Multi-User Offloading Optimization for Mobile Edge Computing. IEEE Trans. Veh. Technol. 2020, 69, 3341–3356.

- Wu, C.L.; Chiu, T.C.; Wang, C.Y.; Pang, A.C. Mobility-Aware Deep Reinforcement Learning with Glimpse Mobility Prediction in Edge Computing. In Proceedings of the ICC 2020—2020 IEEE International Conference on Communications (ICC), Dublin, Ireland, 7–11 June 2020.

- Huang, L.; Feng, X.; Feng, A.; Huang, Y.; Qian, L.P. Distributed Deep Learning-based Offloading for Mobile Edge Computing Networks. Mob. Netw. Appl. 2018, 1–8.

- Tang, M.; Wong, V.W. Deep Reinforcement Learning for Task Offloading in Mobile Edge Computing Systems. IEEE Trans. Mob. Comput. 2020.

- Jang, I.; Kim, H.; Lee, D.; Son, Y.-S.; Kim, S. Knowledge Transfer for On-Device Deep Reinforcement Learning in Resource Constrained Edge Computing Systems. IEEE Access 2020, 8, 146588–146597.

- Chen, X.; Zhang, H.; Wu, C.; Mao, S.; Ji, Y.; Bennis, M. Optimized Computation Offloading Performance in Virtual Edge Computing Systems Via Deep Reinforcement Learning. IEEE Internet Things J. 2019, 6, 4005–4018.

- Song, S.; Fang, Z.; Zhang, Z.; Chen, C.-L.; Sun, H. Semi-Online Computational Offloading by Dueling Deep-Q Network for User Behavior Prediction. IEEE Access 2020, 8, 118192–118204.

- Zhou, L.; Zhang, Z.; Zhao, L.; Yang, P. Attention-based BiLSTM models for personality recognition from user-generated content. Inf. Sci. 2022, 596, 460–471.

- Zhang, Z.; Guo, J.; Zhang, H.; Zhou, L.; Wang, M. Product selection based on sentiment analysis of online reviews: An intuitionistic fuzzy TODIM method. Complex Intell. Syst. 2022, 1–14.

- Zhou, L.; Tang, L.; Zhang, Z. Extracting and ranking product features in consumer reviews based on evidence theory. J. Ambient Intell. Humaniz. Comput. 2022, 1–11.

- Xu, C.; Zheng, G.; Zhao, X. Energy-Minimization Task Offloading and Resource Allocation for Mobile Edge Computing in NOMA Heterogeneous Networks. IEEE Trans. Veh. Technol. 2020, 69, 16001–16016.

- Zaman, S.K.U.; Khan, A.U.R.; Malik, S.U.R.; Khan, A.N.; Maqsood, T.; Madani, S.A. Formal Verification and Performance Evaluation of Task Scheduling Heuristics for Makespan Optimization and Workflow Distribution in Large-scale Computing Systems. Comput. Syst. Sci. Eng. 2017, 32, 227–241.

- Wang, D.; Tian, X.; Cui, H.; Liu, Z. Reinforcement learning-based joint task offloading and migration schemes optimization in mobility-aware MEC network. China Commun. 2020, 17, 31–44.

- Zaman, S.K.U.; Maqsood, T.; Ali, M.; Bilal, K.; Madani, S.A.; Khan, A.u.R. A Load Balanced Task Scheduling Heuristic for Large-Scale Computing Systems. Comput. Syst. Sci. Eng. 2019, 34, 79–90.

- Katal, A.; Dahiya, S.; Choudhury, T. Energy efficiency in cloud computing data center: A survey on hardware technologies. Clust. Comput. 2021, 25, 675–705.

- Beloglazov, A.; Abawajy, J.; Buyya, R. Energy-aware resource allocation heuristics for efficient management of data centers for Cloud computing. Future Gener. Comput. Syst. 2012, 28, 755–768.

- Parrilla, L.; Álvarez-Bermejo, J.A.; Castillo, E.; López-Ramos, J.A.; Morales-Santos, D.P.; García, A. Elliptic Curve Cryptography hardware accelerator for high-performance secure servers. J. Supercomput. 2018, 75, 1107–1122.

- Duong, T.M.; Kwon, S. Vertical Handover Analysis for Randomly Deployed Small Cells in Heterogeneous Networks. IEEE Trans. Wirel. Commun. 2020, 19, 2282–2292.

- Liu, X.; Yu, J.; Qi, H.; Yang, J.; Rong, W.; Zhang, X.; Gao, Y. Learning to Predict the Mobility of Users in Mobile mmWave Networks. IEEE Wirel. Commun. 2020, 27, 124–131.

- Hewamalage, H.; Bergmeir, C.; Bandara, K. Recurrent neural networks for time series forecasting: Current status and future directions. Int. J. Forecast. 2021, 37, 388–427.

- Liu, D.; Li, W. Mobile communication base station traffic forecast. Computing 2021, 5, 52–55.

- Chih-Lin, I.; Han, S.; Bian, S. Energy-efficient 5G for a greener future. Nat. Electron. 2020, 3, 182–184.

- Marler, R.T.; Arora, J.S. The weighted sum method for multi-objective optimization: New insights. Struct. Multidiscip. Optim. 2010, 41, 853–862.

- Hoshino, M.; Yoshida, T.; Imamura, D. Further advancements for E-UTRA physical layer aspects (Release 9), 2010. IEICE Trans. Commun. 2011, 94, 3346–3353.

- Wu, W.; Zhou, F.; Hu, R.Q.; Wang, B. Energy-Efficient Resource Allocation for Secure NOMA-Enabled Mobile Edge Computing Networks. IEEE Trans. Commun. 2020, 68, 493–505.

- Lema, M.A.; Laya, A.; Mahmoodi, T.; Cuevas, M.; Sachs, J.; Markendahl, J.; Dohler, M. Business Case and Technology Analysis for 5G Low Latency Applications. IEEE Access 2017, 5, 5917–5935.

| Symbol | Definition |
|---|---|
| ti | Incoming task for offloading |
| Ei | Computation time of task ti at the user device |
| Ci | Cycles required to complete the task ti |
| Ri | Mobile device transmission time |
| Cs | Computing capacity of the edge server |
|  | Amount of time required to execute task ti at edge server Sk |
|  | Aggregate execution time of tasks mapped at edge server S |
| Us | Resource utilization of server |
| Pk | Power consumed by server |
|  | Selected server with optimized features after prediction |

| Parameter | Value |
|---|---|
| Macrocell radius | 200 m |
| Number of cell sites | 144 |
| Moving velocity of the user | 20–80 km/h |
| Server CPU frequency and instruction set | 3 GHz & CISC |
| Mobile device CPU frequency and instruction set | 3 GHz & RISC |
| Input data size | 1–30 MB |
| Number of users | 100–1000 |
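
The simulation parameters pair a 200 m macrocell radius with user speeds of 20–80 km/h. A quick worked check, assuming a user travels in a straight line through the cell centre so the path length equals the 400 m diameter, shows how long a user stays under one macrocell and therefore how often the serving cell can change. The straight-line path and the function name are simplifying assumptions, not part of the paper's mobility model.

```python
def cell_crossing_time_s(radius_m: float, speed_kmh: float) -> float:
    """Seconds needed to traverse a cell's full diameter at constant speed.

    Assumes a straight path through the cell centre; 1 m/s = 3.6 km/h.
    """
    if speed_kmh <= 0:
        raise ValueError("speed must be positive")
    return 2 * radius_m * 3.6 / speed_kmh


if __name__ == "__main__":
    for speed in (20, 50, 80):  # km/h, spanning the simulated 20–80 km/h range
        print(f"{speed} km/h -> {cell_crossing_time_s(200, speed):.1f} s")
```

This prints 72.0 s at 20 km/h, 28.8 s at 50 km/h, and 18.0 s at 80 km/h, so at the top simulated speed a user may leave a cell within about 18 s.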

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zaman, S.K.u.; Jehangiri, A.I.; Maqsood, T.; Umar, A.I.; Khan, M.A.; Jhanjhi, N.Z.; Shorfuzzaman, M.; Masud, M. COME-UP: Computation Offloading in Mobile Edge Computing with LSTM Based User Direction Prediction. Appl. Sci. 2022, 12, 3312. https://doi.org/10.3390/app12073312

