Investigating the Influence of Feature Sources for Malicious Website Detection

Abstract

1. Introduction

- Conduct an empirical study of how much each feature type contributes to accuracy on this classification problem. Other studies have evaluated and combined features extensively; our contribution lies in the specific sources of the features. These are embeddings of website images (MobileNetV2), URLs (DistilBERT and Longformer), and page content including JavaScript (CodeBERT), together with PCA and chi-squared feature-selection reductions of those embeddings, combined with the lexical, host-based, and content-based features typically seen in the literature. This gives researchers insight into how each feature type, alone and in combination, contributes to classifying malicious websites.

- Provide the open-source community with GAWAIN, a new dataset that makes this combination of feature sources available for future studies. The dataset was constructed from existing real-world URLs that had already been labeled as malicious or benign by multiple sources.
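The embedding reductions described above can be illustrated with a short NumPy sketch; it is not the authors' implementation. It assumes each embedding source is stored as a samples-by-dimensions matrix (768 dimensions is used only as a stand-in size) with binary labels (0 = benign, 1 = malicious). PCA is computed directly via SVD, and the chi-squared score follows the same formulation as scikit-learn's `chi2`. Because that test requires non-negative inputs, the sketch min-max scales the embeddings first, which is an assumption. The selection size `k` is a hypothetical parameter; the PCA sizes 10, 25, and 50 match the variants reported in the results.

```python
import numpy as np


def pca_reduce(X: np.ndarray, n_components: int) -> np.ndarray:
    """Project rows of X onto their top principal components using SVD."""
    Xc = X - X.mean(axis=0)  # center every embedding dimension
    # Rows of Vt are the principal axes, ordered by decreasing singular value.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:n_components].T


def chi2_scores(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Chi-squared statistic per column of a non-negative matrix X against
    binary labels y (same formulation as scikit-learn's chi2)."""
    Y = np.column_stack([y == 0, y == 1]).astype(float)  # one-hot classes
    observed = Y.T @ X                                  # per-class column sums
    expected = np.outer(Y.mean(axis=0), X.sum(axis=0))  # sums if independent of class
    with np.errstate(divide="ignore", invalid="ignore"):
        scores = ((observed - expected) ** 2 / expected).sum(axis=0)
    return np.nan_to_num(scores)  # all-zero columns get a score of 0


def chi2_select(X: np.ndarray, y: np.ndarray, k: int) -> np.ndarray:
    """Sorted indices of the k highest-scoring embedding dimensions."""
    # Embeddings can be negative, so min-max scale each dimension to [0, 1].
    mins, maxs = X.min(axis=0), X.max(axis=0)
    span = np.where(maxs > mins, maxs - mins, 1.0)
    scores = chi2_scores((X - mins) / span, y)
    return np.sort(np.argsort(scores)[::-1][:k])


rng = np.random.default_rng(0)
emb = rng.normal(size=(200, 768))      # stand-in for 768-dimensional URL embeddings
labels = rng.integers(0, 2, size=200)  # 0 = benign, 1 = malicious
for n in (10, 25, 50):
    print(n, pca_reduce(emb, n).shape)
print(chi2_select(emb, labels, 25).shape)
```

The reduced matrices (or the selected columns `emb[:, chi2_select(...)]`) would then be concatenated with the lexical, host-based, and content-based features before training.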

2. Previous Work

3. Preliminary Experiments

3.1. A.K. Singh’s Dataset

3.2. Additional Malicious Samples

3.3. Expanding A.K. Singh’s Dataset

3.3.1. Lexical Features

3.3.2. Host-Based Features

3.3.3. Content-Based Features

3.4. Feature Inspection & Experimental Results

- Original data provided in [21];

- Original data with JavaScript features removed;

- Expanded data with features and malicious samples described in this section;

- Expanded data with JavaScript features removed.
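The four variations amount to two base datasets, each used once as-is and once with the JavaScript-derived columns dropped, mirroring the "with JS" and "w/o JS" columns of the model comparison table. The sketch below represents rows as plain dictionaries. The column names in `JS_FEATURES` are hypothetical placeholders, since the exact set of JavaScript features that was removed is not listed here.

```python
# Hypothetical names for the JavaScript-derived columns; the actual column
# names in the original and expanded datasets may differ.
JS_FEATURES = frozenset({"js_len", "js_deobf_len", "js_func_count", "suspicious_js_func_count"})


def drop_js(rows: list[dict]) -> list[dict]:
    """Return copies of the rows without any JavaScript-derived column."""
    return [{k: v for k, v in row.items() if k not in JS_FEATURES} for row in rows]


def build_variations(original: list[dict], expanded: list[dict]) -> dict[str, list[dict]]:
    """Map each experimental variation to its rows, in the order listed above."""
    return {
        "variation_1_with_js": original,
        "variation_2_without_js": drop_js(original),
        "variation_3_with_js": expanded,
        "variation_4_without_js": drop_js(expanded),
    }


# Toy rows; label 1 = malicious, 0 = benign.
original = [{"url_len": 23, "js_len": 5800, "label": 1}]
expanded = [{"url_len": 41, "js_len": 120, "n_ampersands": 1, "label": 0}]
for name, rows in build_variations(original, expanded).items():
    print(name, sorted(rows[0]))
```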

4. Dataset GAWAIN

Feature List

- JavaScript average and max array length.

- HTML content length.

- Number of JavaScript tag references.

- Number of semicolons, zeros, spaces, hyphens, @ characters, queries, ampersands, and equal signs.

- Domain and domain length.

- Dominant Colors Description (DCD).

- Hex length in content.

- Contains Hex.

- Number of unique characters, numbers, and letters.

- Ratio of letters to characters and ratio of numbers to characters.

- Is in Alexa’s top 1 million.

- URL, content and image embeddings.

- Mean summary statistic for URL, content and image embeddings.
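Several of the lexical URL features in this list can be computed with Python's standard library alone, as in the following minimal sketch. It is an illustration, not the GAWAIN extraction code, and it rests on a few assumptions: the "queries" count is taken as the number of `?` characters, both character ratios use unique counts, and the subdomain count, domain length, and top-level domain are omitted because extracting them reliably needs a public-suffix list.

```python
import ipaddress
from urllib.parse import urlsplit

# One count feature per character; treating "?" as the "queries" count is an assumption.
COUNTED_CHARS = {
    "underscores": "_", "semicolons": ";", "zeros": "0", "spaces": " ",
    "hyphens": "-", "at_signs": "@", "queries": "?", "ampersands": "&", "equals": "=",
}


def _is_ip(host: str) -> bool:
    """True if the hostname is a literal IPv4 or IPv6 address."""
    try:
        ipaddress.ip_address(host)
        return True
    except ValueError:
        return False


def lexical_features(url: str) -> dict:
    """A subset of the lexical URL features, computed with the standard library only."""
    # urlsplit only recognizes the hostname when a scheme is present.
    host = urlsplit(url if "://" in url else "http://" + url).hostname or ""
    feats = {"url_length": len(url), "hostname_length": len(host)}
    for name, ch in COUNTED_CHARS.items():
        feats[f"n_{name}"] = url.count(ch)
    unique_chars = set(url)
    unique_digits = {c for c in unique_chars if c.isdigit()}
    unique_letters = {c for c in unique_chars if c.isalpha()}
    feats.update(
        digits_to_url=sum(c.isdigit() for c in url) / max(len(url), 1),
        digits_to_hostname=sum(c.isdigit() for c in host) / max(len(host), 1),
        ip_in_url=_is_ip(host),
        has_at=("@" in url),
        unique_chars=len(unique_chars),
        unique_digits=len(unique_digits),
        unique_letters=len(unique_letters),
        letters_to_chars=len(unique_letters) / max(len(unique_chars), 1),
        digits_to_chars=len(unique_digits) / max(len(unique_chars), 1),
    )
    return feats


print(lexical_features("http://192.168.0.1/login.php?user=a&id=0"))
```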

5. Experimental Results—GAWAIN

5.1. Feature Categorization

5.2. Methodology

5.3. Model Performance

5.4. Feature Contributions

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. IC3 Internet Crime Report; Internet Crime Complaint Center, FBI: Washington, DC, USA, 2020.

2. Sahoo, D.; Liu, C.; Hoi, S. Malicious URL detection using machine learning: A survey. arXiv 2017, arXiv:1701.07179.

3. Kulkarni, A.; Brown, L.L., III. Phishing Websites Detection using Machine Learning. IJACSA 2019, 10.

4. Lokesh, G.H.; BoreGowda, G. Phishing website detection based on effective machine learning approach. JCST 2021, 5, 1–14.

5. Basnet, R.; Mukkamala, S.; Sung, A. Detection of Phishing Attacks: A Machine Learning Approach. SFSC 2008, 226, 373–383.

6. Yang, P.; Zhao, G.; Zeng, P. Phishing Website Detection Based on Multidimensional Features Driven by Deep Learning. IEEE Access 2019, 7, 15196–15209.

7. Amiri, I.S.; Akanbi, O.A.; Fazeldehkordi, E. A Machine-Learning Approach to Phishing Detection and Defense; Syngress: Rockland, MA, USA, 2014.

8. Ndichu, S.; Ozawa, S.; Misu, T.; Okada, K. A Machine Learning Approach to Malicious JavaScript Detection using Fixed Length Vector Representation. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.

9. Yaokai, Y. Effective Phishing Detection Using Machine Learning Approach; Case Western Reserve University: Cleveland, OH, USA, 2019.

10. Rudd, E.M.; Abdallah, A. Training Transformers for Information Security Tasks: A Case Study on Malicious URL Prediction. arXiv 2020, arXiv:2011.03040.

11. Sahingoz, O.K.; Buber, E.; Demir, O.; Diri, B. Machine learning based phishing detection from URLs. JESA 2019, 117, 345–357.

12. Moubayed, A.; Aqeeli, E.; Shami, A. Ensemble-based Feature Selection and Classification Model for DNS Typo-squatting Detection. CCECE 2020, 44, 456–466.

13. Dalgic, F.C.; Bozkir, A.S.; Aydos, M. Phish-IRIS: A New Approach for Vision Based Brand Prediction of Phishing Web Pages via Compact Visual Descriptors. In Proceedings of the 2018 2nd International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT), Ankara, Turkey, 19–21 October 2018; pp. 1–8.

14. Shahrivari, V.; Darabi, M.M.; Izadi, M. Phishing Detection Using Machine Learning Techniques. arXiv 2020, arXiv:2009.11116.

15. Malicious Website Feature Study. GitHub Repository. Available online: https://github.com/AhmadChaiban/Malicious-Website-Feature-Study (accessed on 10 August 2021).

16. Apruzzese, G.; Colajanni, M.; Ferretti, L.; Guido, A.; Marchetti, M. On the effectiveness of machine and deep learning for cyber security. In Proceedings of the 10th International Conference on Cyber Conflict (CyCon), Tallinn, Estonia, 30 May–1 June 2018; pp. 371–390.

17. Gu, J.; Oelke, D. Understanding bias in machine learning. arXiv 2019, arXiv:1909.01866.

18. Lin, Y.; Liu, R.; Divakaran, D.; Ng, J.; Chan, Q.; Lu, Y.; Si, Y.; Zhang, F.; Dong, J. Phishpedia: A Hybrid Deep Learning Based Approach to Visually Identify Phishing Webpages. In Proceedings of the 30th USENIX Security Symposium (USENIX Security 21), Vancouver, BC, Canada, 11–13 August 2021.

19. Yi, P.; Guan, Y.; Zou, F.; Yao, Y.; Wang, W.; Zhu, T. Web Phishing Detection Using a Deep Learning Framework. Wirel. Commun. Mob. Comput. 2018, 2018, 4678746.

20. McGahagan, J.; Bhansali, D.; Pinto-Coelho, C.; Cukier, M. Discovering features for detecting malicious websites: An empirical study. Comput. Secur. 2021, 109, 102374.

21. Singh, A.K. Malicious and Benign Webpages Dataset. Data Brief 2020, 32, 106304.

22. Singh, A.; Goyal, N. Malcrawler: A crawler for seeking and crawling malicious websites. In Proceedings of the International Conference on Distributed Computing and Internet Technology, Bhubaneswar, India, 7–10 January 2021; pp. 210–223.

23. Choi, H.; Zhu, B.; Lee, H. Detecting Malicious Web Links and Identifying Their Attack Types. WebApps 2011, 11, 218.

24. Canfora, G.; Mercaldo, F.; Visaggio, C. Malicious javascript detection by features extraction. E-Inform. Softw. Eng. J. 2014, 8, 65–78.

25. Gupta, S.; Gupta, B.B. Enhanced XSS Defensive Framework for Web Applications Deployed in the Virtual Machines of Cloud Computing Environment. Procedia Technol. 2016, 24, 1595–1602.

26. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

27. Feng, Z.; Guo, D.; Tang, D.; Duan, N.; Feng, X.; Gong, M.; Shou, L.; Qin, B.; Liu, T.; Jiang, D.; et al. CodeBERT: A pre-trained model for programming and natural languages. arXiv 2020, arXiv:2002.08155.

28. Hess, S.; Satam, P.; Ditzler, G.; Hariri, S. Malicious HTML File Prediction: A Detection and Classification Perspective with Noisy Data. In Proceedings of the 2018 IEEE/ACS 15th International Conference on Computer Systems and Applications (AICCSA), Aqaba, Jordan, 28 October–1 November 2018; pp. 1–7.

29. Sanh, V.; Debut, L.; Chaumond, J.; Wolf, T. DistilBERT, a distilled version of BERT: Smaller, faster, cheaper and lighter. arXiv 2019, arXiv:1910.01108.

30. Simple Transformers. GitHub Repository. Available online: https://github.com/ThilinaRajapakse/simpletransformers (accessed on 15 December 2021).

31. Beltagy, I.; Peters, M.; Cohan, A. Longformer: The long-document transformer. arXiv 2020, arXiv:2004.05150.

32. Joshi, A.; Lloyd, L.; Westin, P.; Seethapathy, S. Using Lexical Features for Malicious URL Detection–A Machine Learning Approach. arXiv 2019, arXiv:1910.06277.

33. Rozi, M.; Ban, T.; Kim, S.; Ozawa, S.; Takahashi, T.; Inoue, D. Detecting Malicious Websites Based on JavaScript Content Analysis. In Proceedings of the Computer Security Symposium 2021, Dubrovnik, Croatia, 21–25 June 2021; pp. 727–732.

34. Schütt, K.; Bikadorov, A.; Kloft, M.; Rieck, K. Early Detection of Malicious Behavior in JavaScript Code. In Proceedings of the 5th ACM Workshop on Security and Artificial Intelligence, Raleigh, NC, USA, 19 October 2012; pp. 15–24.

35. Curtsinger, C.; Livshits, B.; Zorn, B.; Seifert, C. ZOZZLE: Fast and Precise In-Browser JavaScript Malware Detection. In Proceedings of the 20th USENIX Security Symposium (USENIX Security 11), San Francisco, CA, USA, 8–12 August 2011.

36. Aldwairi, M.; Al-Salman, R. MALURLS: A lightweight malicious website classification based on URL features. J. Emerg. Technol. Web Intell. 2012, 4, 128–133.

37. JS Auto DeObfuscator. GitHub Repository. Available online: https://github.com/lucianogiuseppe/JS-Auto-DeObfuscator (accessed on 15 December 2021).

38. Fass, A.; Krawczyk, R.; Backes, M.; Stock, B. JaSt: Fully Syntactic Detection of Malicious (Obfuscated) JavaScript. In Detection of Intrusions and Malware, and Vulnerability Assessment; SpringerLink: Berlin, Germany, 2018; pp. 303–325.

39. JaSt—JS AST-Based Analysis. GitHub Repository. Available online: https://github.com/Aurore54F/JaSt (accessed on 15 December 2021).

| Category | Feature | Description |

|---|---|---|

| Raw | URL of the website | String format of the URL of the website. |

| HTML page content | The HTML page code of the homepage of the website in string format. | |

| Hostname | The extracted hostname of the website in string format. | |

| Domain name | The extracted domain name of each website in string format. | |

| Label of the website | A binary label that states whether the website is malicious or benign. | |

| Lexical | Length of the URL * | The number of characters in the URL. |

| Number of underscores, semicolons, subdomains, zeros, spaces, hyphens, @ symbols, queries, ampersands, and equal signs † | The number of each of these characters counted in the URL. Note that all these characters are considered as separate features. | |

| Hostname length † | The number of characters in the hostname extracted from the given website. | |

| Ratio of digits to URL † | The number of digits divided by the length of the URL. | |

| Ratio of digits to hostname † | The number of digits divided by length of hostname. | |

| IP address in URL † | A binary label that states if an IP address exists in the URL. | |

| Existence of @ symbol in URL † | A label that states if the URL contains any @ characters. | |

| Domain length | The number of characters in the extracted domain name. | |

| Unique URL characters, numbers and letters | Taking the total number of unique characters, numbers and letters in a URL. Note that each of these is their own feature. | |

| Ratio of letters to chars | The unique letter count divided by the total unique character count in a URL. | |

| Ratio of numbers to chars | Count of numbers in a URL divided by the total unique character in a URL. | |

| Top-level domain * | The top level domain of a website as a category label. | |

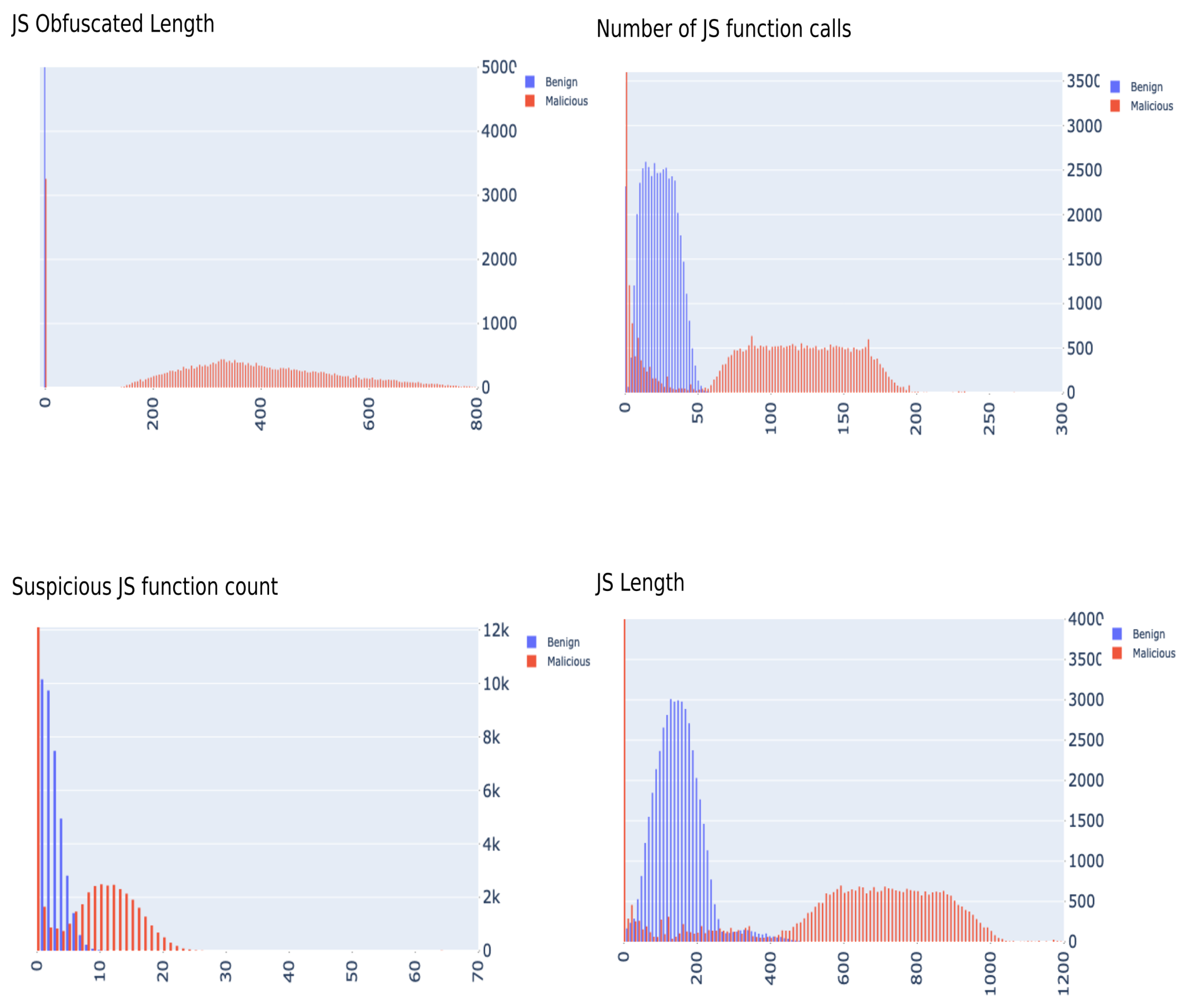

| Content | Length of JavaScript code * | Total number of characters of JavaScript code present in HTML content. |

| Length of deobfuscated JS code *, ⋄ | Length of the JavaScript code put through deobfuscation tool. | |

| URL count in content † | The total number of URLs found in the HTML page content [23,24]. | |

| Unique URL count in content † | The unique number of URLs found in the HTML page content [23,24]. | |

| Suspicious JS function count † | The number of suspicious functions found in the JavaScript code [25]. | |

| JavaScript function count † | The total number of JavaScript functions called in the code [23,24]. | |

| Content length | The total number of characters of the HTML content page. | |

| Script tag references | A count of the total number of script tags in the HTML page content. | |

| Contains HEX | Binary indicator about webpage’s HTML content containing any hexadecimal characters. | |

| HEX length | The number of Hexadecimal characters in the HTML content page. | |

| DCD MPEG-7 † | The 5 dominant colors of an image. They are extracted as 5 different columns. | |

| Average length of JS arrays | The average length of arrays in the JS code present in HTML content. | |

| Maximum length of JS arrays | The maximum length of arrays in the JS code present in HTML content. | |

| Host | IP address * | String format of the IP address of the website. |

| Geographic location | The name of the country the website is hosted in. | |

| WHOIS information* | A binary label that states whether the WHOIS information for the website is complete or incomplete. | |

| HTTPS * | A binary label that states whether the website is secure HTTP protocol or not. | |

| Is in Alexa’s top 1 million | A binary label that describes if the given domain name of a URL exists in Alexa’s top 1 million domains. | |

| Embedding | Image embeddings | The embeddings of the website’s image. They are extracted using MobileNetV2 [26]. |

| Content embeddings | The embeddings of the HTML page content. Extracted using CodeBert [27,28]. | |

| URL embeddings (Distilbert) | URL embeddings extracted using the Distilbert Tranformer [29,30]. | |

| URL embeddings (Longformer) | URL embeddings extracted using the Longformer Tranformer [30,31]. | |

| Mean statistic for embeddings | URL and image embeddings, as well as chi-squared feature selected embedding dimensions, are summarized as a single representative number—the mean of the embedding vector (or parts of the vector). |
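Several content-based features from the table can be approximated with the standard library, as the sketch below shows. It is not the extraction code used for GAWAIN. Two assumptions are made: "hexadecimal characters" is taken to mean `\xNN` escape sequences and `0x`-prefixed literals, and the JavaScript array lengths come from a crude regular expression over flat array literals rather than from a real JavaScript parser.

```python
import re
from html.parser import HTMLParser
from statistics import fmean

# Assumed definition of "hex": \xNN escape sequences or 0x-prefixed literals.
HEX_PATTERN = re.compile(r"\\x[0-9a-fA-F]{2}|0x[0-9a-fA-F]+")
# Crude match for flat array literals that follow =, (, "," or ":".
# Nested arrays and brackets or commas inside strings are not handled.
ARRAY_PATTERN = re.compile(r"[=(,:]\s*\[([^\[\]]*)\]")


class ScriptCollector(HTMLParser):
    """Counts <script> tags and collects the text of inline scripts."""

    def __init__(self) -> None:
        super().__init__()
        self.script_tags = 0
        self.scripts: list[str] = []
        self._in_script = False

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self.script_tags += 1
            self.scripts.append("")
            self._in_script = True

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_script = False

    def handle_data(self, data):
        if self._in_script:
            self.scripts[-1] += data  # script text may arrive in several chunks


def content_features(html: str) -> dict:
    parser = ScriptCollector()
    parser.feed(html)
    parser.close()
    hex_tokens = HEX_PATTERN.findall(html)
    array_lengths = [
        len([item for item in body.split(",") if item.strip()])
        for script in parser.scripts
        for body in ARRAY_PATTERN.findall(script)
    ]
    return {
        "content_length": len(html),
        "script_tag_references": parser.script_tags,
        "js_length": sum(len(s) for s in parser.scripts),
        "contains_hex": bool(hex_tokens),
        "hex_length": sum(len(t) for t in hex_tokens),
        "avg_js_array_length": fmean(array_lengths) if array_lengths else 0.0,
        "max_js_array_length": max(array_lengths, default=0),
    }


page = r'<html><script src="a.js"></script><script>var a = [1, 2, 3]; f([4]); var s = "\x41\x42";</script></html>'
print(content_features(page))
```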

| Model | Variation 1 (with JS) | Variation 2 (w/o JS) | Variation 3 (with JS) | Variation 4 (w/o JS) |
|---|---|---|---|---|
| N. Bayes | | | | |
| SVM | | | | |
| KNN | | | | |
| XGBoost | | | | |
| AdaBoost | | | | |

| Parameter Name | Assigned Value |
|---|---|
| Max Depth | {} |
| Minimum child weight | {1} |
| Number of estimators | {} |
| Colsample by tree | 1.0} |
| Learning rate | |
| Tree method | Auto, Exact, Approx, Hist, GPU-hist |
| Booster | Gbtree, Gblinear, Dart |
| Gamma | } |
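The categorical options in the hyperparameter table can be expanded into a search grid, as in the sketch below, which plays the same role as scikit-learn's `ParameterGrid`. The values are written in the lowercase form that XGBoost's `tree_method` and `booster` parameters expect (for example `gpu_hist` for "GPU-hist"). The numeric ranges are left out because their values are not recoverable from the table. Each resulting dictionary could then be passed as keyword arguments to an XGBoost classifier.

```python
from itertools import product

# Only the categorical options from the table; numeric ranges for max_depth,
# n_estimators, learning_rate, gamma, etc. would be added as further keys.
PARAM_GRID = {
    "tree_method": ["auto", "exact", "approx", "hist", "gpu_hist"],
    "booster": ["gbtree", "gblinear", "dart"],
}


def expand_grid(grid: dict[str, list]) -> list[dict]:
    """Cartesian product of all parameter options, one dict per configuration."""
    keys = list(grid)
    return [dict(zip(keys, combo)) for combo in product(*(grid[k] for k in keys))]


configs = expand_grid(PARAM_GRID)
print(len(configs))  # 15
print(configs[0])    # {'tree_method': 'auto', 'booster': 'gbtree'}
```

Every additional parameter multiplies the number of configurations, so the cost of an exhaustive search grows quickly as numeric ranges are added.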

| Feature Category | Individual | Combined |
|---|---|---|
| Lexical | 75.48 | N/A |
| Host-based | 65.70 | 80.02 |
| Content-based (Baseline) | 76.30 | 83.11 |
| URL embeddings (Longformer) | | |
| all | 76.38 | 83.23 |
| PCA 10 | 75.41 | 83.73 |
| PCA 25 | 75.24 | 82.41 |
| PCA 50 | 75.25 | 82.09 |
| Chi-sq | 76.43 | 83.29 |
| Image embeddings | | |
| all | 60.27 | 82.24 |
| PCA 10 | 62.57 | 83.69 |
| PCA 25 | 61.73 | 83.46 |
| PCA 50 | 61.16 | 82.82 |
| Chi-sq | 61.29 | 83.62 |
| Content embeddings | | |
| all | 67.63 | 81.89 |
| PCA 10 | 67.71 | 83.74 |
| PCA 25 | 68.11 | 83.58 |
| PCA 50 | 67.76 | 83.06 |
| Chi-sq | 66.57 | 83.59 |
| URL embeddings (DistilBERT) | | |
| all | 76.62 | 82.47 |
| PCA 10 | 72.97 | 83.79 |
| PCA 25 | 73.24 | 83.57 |
| PCA 50 | 74.08 | 82.99 |
| Chi-sq | 75.12 | 83.64 |
| Best score (feature selection) | N/A | 84.27 |

| Feature | Contribution (%) |
|---|---|
| WHOIS | 13.97 |
| Top-level domain | 4.53 |
| Unique URL Chars count | 4.36 |
| Having @ in URL | 4.29 |
| HTTPS | 4.13 |
| Unique Nums in URL count | 3.75 |
| Location | 2.84 |
| Number of @’s in URL | 2.79 |
| Number of ampersands in URL | 2.74 |

| Feature | Contribution (%) |
|---|---|
| URL (DistilBERT) PCA embedding 1 | 6.21 |
| Number of ampersands in URL | 2.89 |
| URL (Longformer) PCA embedding 4 | 2.79 |
| DCD Color 1 | 2.74 |
| WHOIS | 2.56 |
| URL (Longformer) PCA embedding 2 | 2.56 |
| HTTPS | 2.47 |
| Top-level domain | 2.33 |
| Is in Alexa’s top 1 million | 2.33 |

| Feature | Contribution (%) |
|---|---|
| URL (Longformer) embedding 2 | 4.76 |
| Number of Semicolons in URL | 4.14 |
| URL (DistilBERT) embedding 20 | 3.86 |
| Mean of URL (DistilBERT) embeddings | 3.69 |
| Mean of URL (Longformer) embeddings | 3.18 |
| WHOIS | 3.02 |
| HTTPS | 2.92 |
| Number of ampersands in URL | 2.82 |
| Mean of feature-selected Longformer embeddings | 2.80 |
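The mean summary statistic behind the "Mean of ... embeddings" entries above reduces an embedding, or a subset of its dimensions such as the chi-squared-selected ones, to a single number. A minimal sketch, in which `selected_dims` is a hypothetical list of indices assumed to come from a prior feature-selection step:

```python
from statistics import fmean
from typing import Optional, Sequence


def embedding_mean(vector: Sequence[float], selected_dims: Optional[Sequence[int]] = None) -> float:
    """Mean of a whole embedding vector, or of the selected dimensions only."""
    values = vector if selected_dims is None else [vector[i] for i in selected_dims]
    return fmean(values)


url_embedding = [0.5, -1.0, 2.0, 0.5]         # toy 4-dimensional embedding
print(embedding_mean(url_embedding))          # 0.5
print(embedding_mean(url_embedding, [2, 3]))  # 1.25
```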

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chaiban, A.; Sovilj, D.; Soliman, H.; Salmon, G.; Lin, X. Investigating the Influence of Feature Sources for Malicious Website Detection. Appl. Sci. 2022, 12, 2806. https://doi.org/10.3390/app12062806
