Building a Production-Ready Multi-Label Classifier for Legal Documents with Digital-Twin-Distiller

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

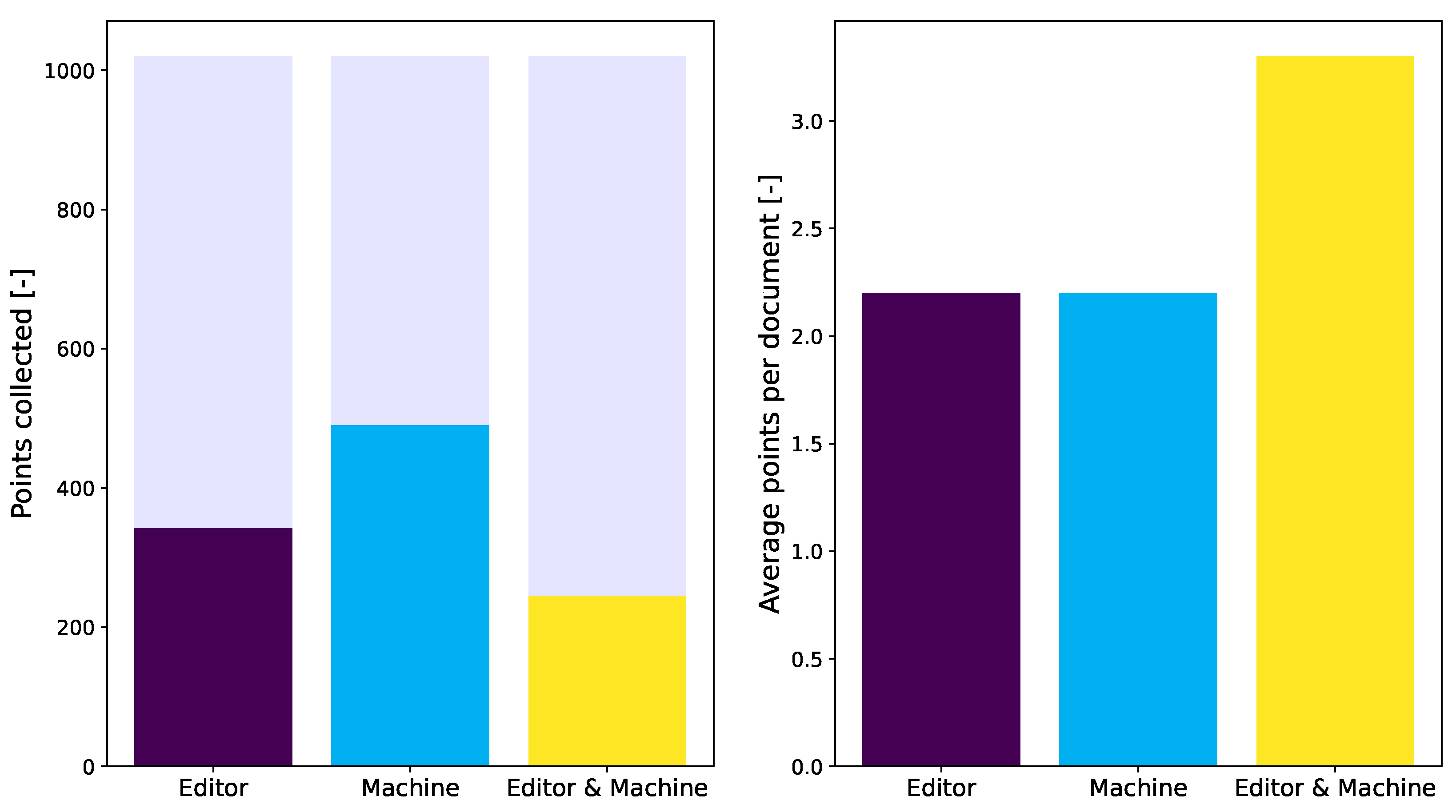

2.2. Labeling Process

3. Applied Methodologies

3.1. Vectorization

3.2. Preprocessing

3.3. Dimension Reduction

3.4. Negative Filtering

3.5. Training

3.6. Iterative Labeling

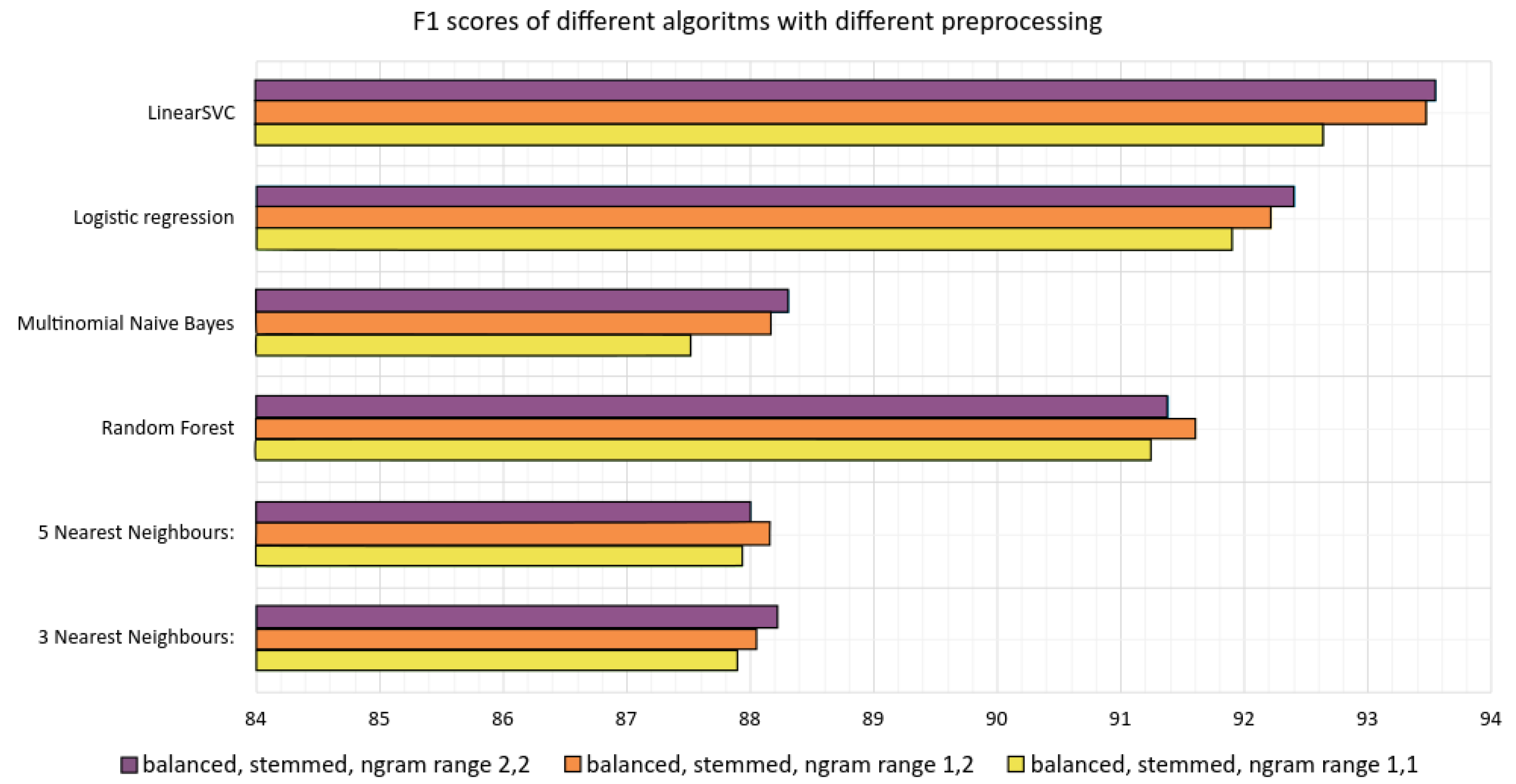

4. Results

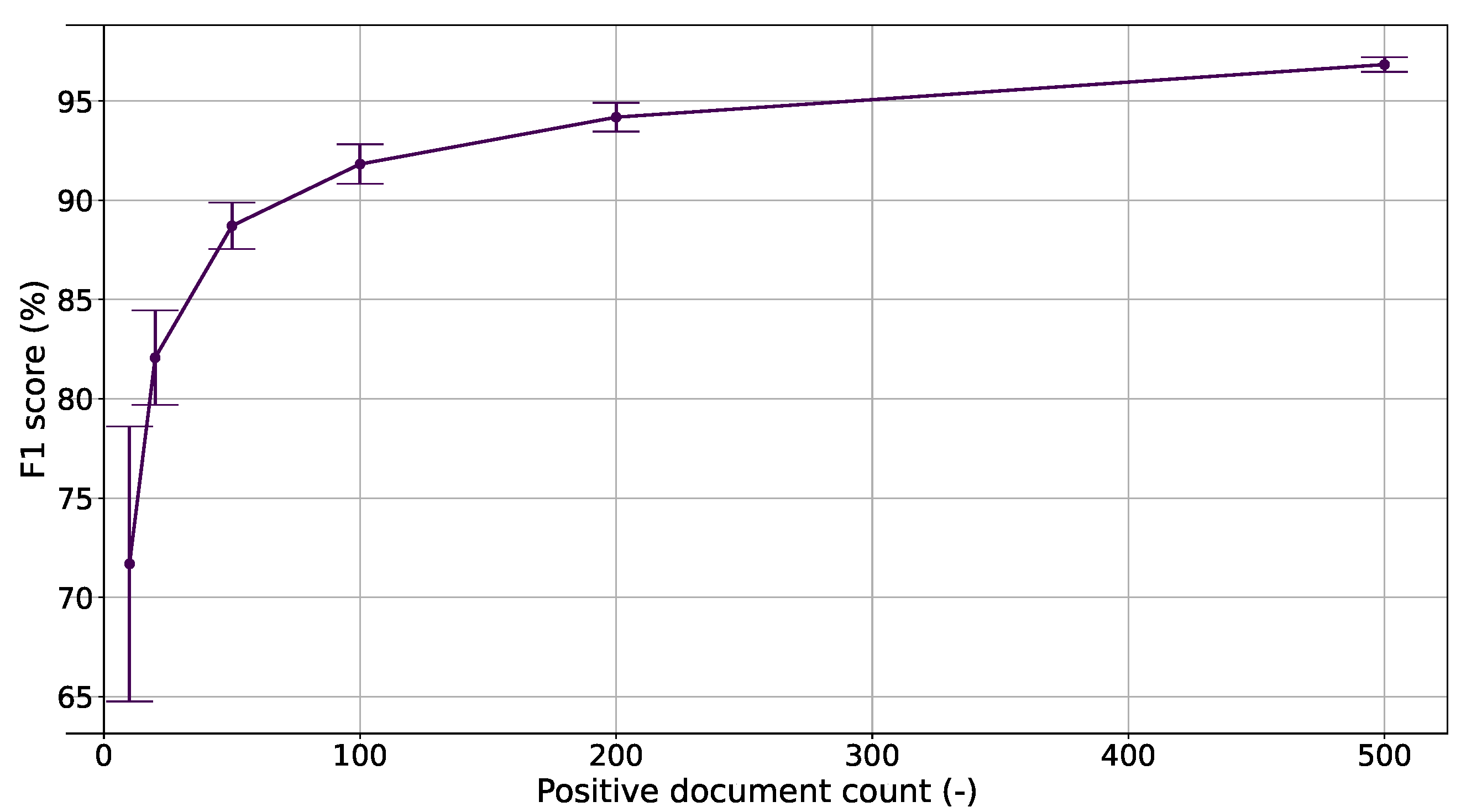

4.1. Determining the Minimum Number of Documents Needed for Classification

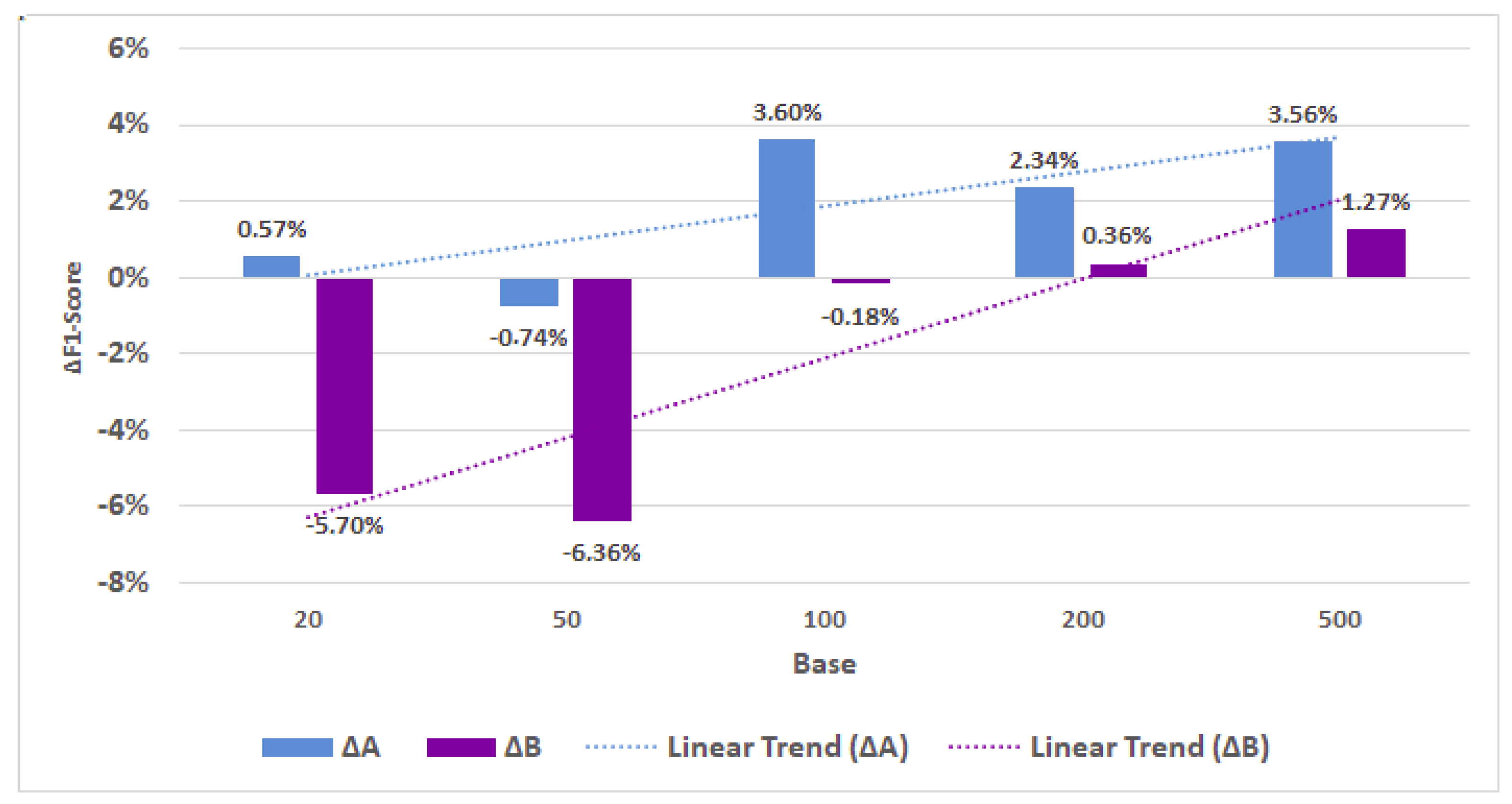

4.2. Data Augmentation

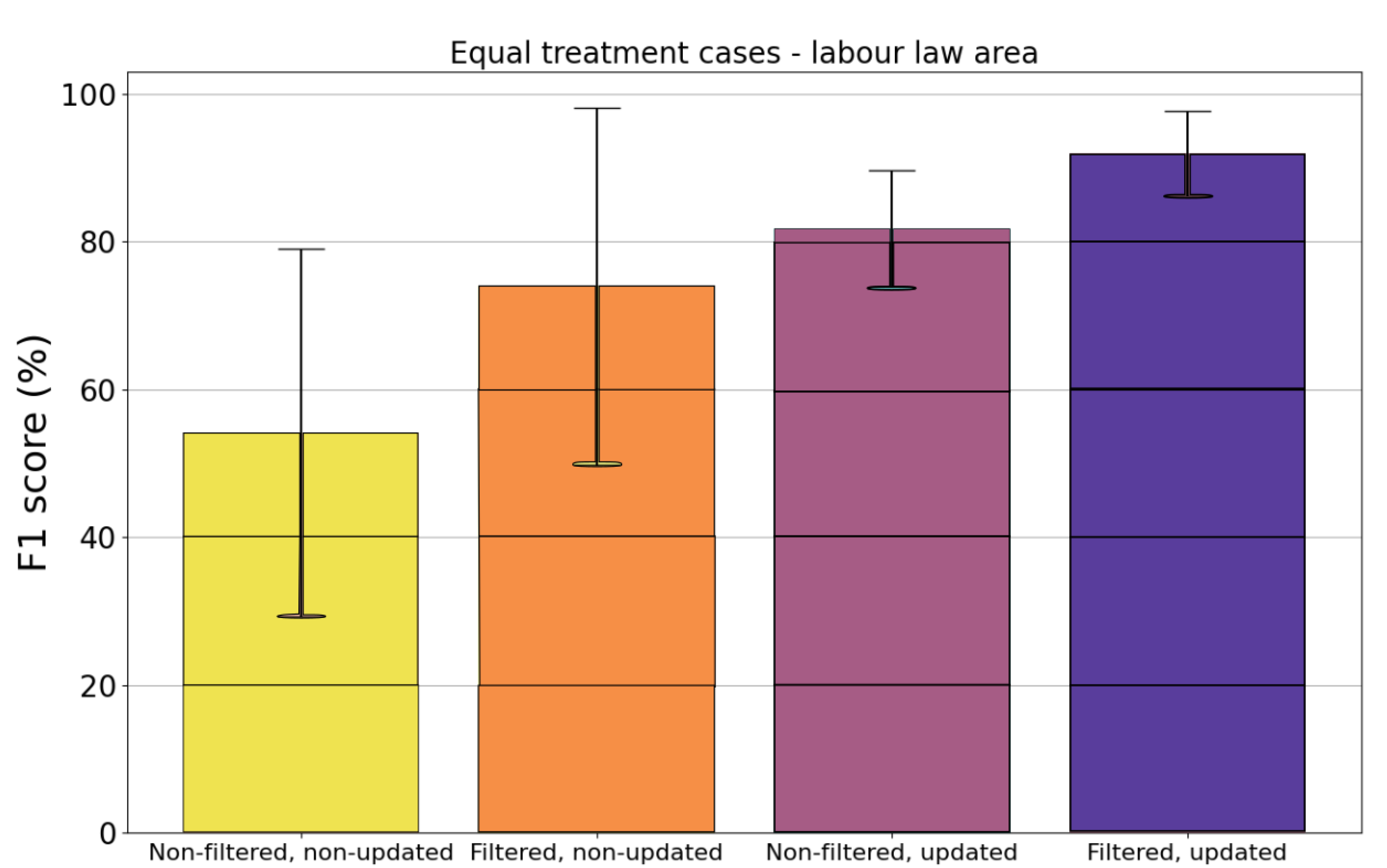

4.3. Effect of the Negative Filtering and Addition of Validated Positive Training Data

4.4. Validation on Benchmark Set

Correlation between Categories

4.5. Experimental Validation

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| Dataset | Nr. of Classes | Nr. of Docs | Avg. Word Count per Document |
|---|---|---|---|
| Reuters | 90 | 10,789 | 144 |
| IMDB | 10 | 135,669 | 393 |
| Subject matter | 167 | 173,892 | 3354 |

| Law Area | Administrative | Civil | Criminal | Economic | Labor | Not Stated |
|---|---|---|---|---|---|---|
| Training set | 30,891 | 72,525 | 29,751 | 21,125 | 15,063 | 19 |
| Test set | 1207 | 1602 | 591 | 729 | 369 | 20 |

| APD | NF | Positive Count | Negative Count | Average (%) | Std (%) | Min (%) | Max (%) |
|---|---|---|---|---|---|---|---|
| No | No | 32 | 10,012 | 54.15 | 24.82 | 0 | 100 |
| No | Yes | 32 | 9072 | 73.95 | 24.14 | 0 | 100 |
| Yes | No | 102 | 10,012 | 81.71 | 7.87 | 60.87 | 94.74 |
| Yes | Yes | 102 | 9072 | 91.97 | 5.77 | 82.35 | 100 |
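
The effect of each setting in the table above can be read off as a difference between rows. The following is a minimal sketch of that arithmetic, assuming that APD denotes the addition of validated positive training data and NF denotes negative filtering (as the heading of Section 4.3 suggests); the dictionary layout and the helper name `average_gain` are illustrative and not part of the original work.

```python
# Rows copied from the table above:
# (APD, NF) -> (positive count, negative count, average %, std %)
results = {
    (False, False): (32, 10012, 54.15, 24.82),
    (False, True): (32, 9072, 73.95, 24.14),
    (True, False): (102, 10012, 81.71, 7.87),
    (True, True): (102, 9072, 91.97, 5.77),
}


def average_gain(baseline, improved):
    """Difference in the Average (%) column, in percentage points."""
    return round(results[improved][2] - results[baseline][2], 2)


# Gain from negative filtering alone, without and with added positives.
nf_gain_without_apd = average_gain((False, False), (False, True))
nf_gain_with_apd = average_gain((True, False), (True, True))

# Gain from added positive data alone, and from both settings combined.
apd_gain_without_nf = average_gain((False, False), (True, False))
combined_gain = average_gain((False, False), (True, True))

# Number of negative examples dropped by the filter.
negatives_removed = results[(False, False)][1] - results[(False, True)][1]

print(nf_gain_without_apd, nf_gain_with_apd, apd_gain_without_nf, combined_gain)
print(negatives_removed)
```

Under these assumptions, the two settings together raise the average by 37.82 percentage points, while the standard deviation falls from 24.82 to 5.77.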

| | Did Not Match | Partly Matched | Completely Matched | Sum |
|---|---|---|---|---|
| Count | 64 | 40 | 46 | 150 |
| Percentage (%) | 42.67% | 26.67% | 30.67% | 100% |
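
The percentage row follows directly from the counts. As a quick check, the sketch below recomputes it from the values in the table above; the variable names are illustrative only.

```python
# Counts copied from the table above.
counts = {
    "Did Not Match": 64,
    "Partly Matched": 40,
    "Completely Matched": 46,
}

total = sum(counts.values())

# Share of each outcome, rounded to two decimals as in the table.
percentages = {
    outcome: round(100 * count / total, 2) for outcome, count in counts.items()
}

# Share of documents with at least a partial match.
at_least_partial = round(
    100 * (counts["Partly Matched"] + counts["Completely Matched"]) / total, 2
)

print(total, percentages, at_least_partial)
```

Because each share is rounded independently, the three rounded percentages add up to 100.01 rather than exactly 100.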

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Csányi, G.M.; Vági, R.; Nagy, D.; Üveges, I.; Vadász, J.P.; Megyeri, A.; Orosz, T. Building a Production-Ready Multi-Label Classifier for Legal Documents with Digital-Twin-Distiller. Appl. Sci. 2022, 12, 1470. https://doi.org/10.3390/app12031470
