Robotic Assistance in Medication Intake: A Complete Pipeline

Abstract

1. Introduction

1.1. Scope

- The development of an integrated vision system able to perform object and human detection and tracking suitable for monitoring of medication activities;

- The integration of novel object-grasping strategies that use environmental contact, enabling object manipulation from challenging spots, accompanied by situation-awareness mechanisms;

- The development of safe manipulation and navigation strategies suitable for robotic agents that target operation in domestic environments with non-expert robot users;

- The identification of the robot skills required for assistance in the medication adherence activity, their development in a modular manner, and their organization under a task planner framework that covers all the corner cases that can be identified within the examined assistive scenario;

- The integration and assessment of all the developed skills in multiple realistic scenarios with various users.

1.2. State-of-the-Art Robotic Applications in Medication Adherence Activities

1.3. Paper Layout

- the user requirements during medication adherence activities;

- the hardware architecture and the physical implementation of the robotic manipulator;

- the adopted software components that address the user requirements;

- the safety features incorporated within the developed software;

- the experimental results that demonstrate operation in various environments with real users.

2. System Architecture

2.1. Medication Adherence: Use Case Requirements

- be aware of the medication schedule of the user;

- provide reminders to the user through the communication modalities before a medication session;

- be able to locate, detect and fetch the pill box, especially when it is placed in high places that are difficult for the user to reach;

- monitor and assess the progress of the medication adherence activity;

- be able to return the pill box to its storage position;

- establish communication with an external person in cases where the medication process has not been completed successfully;

- complete the assistance provision for the medication adherence scenario in a coherent and structured manner, with sufficient repeatability.

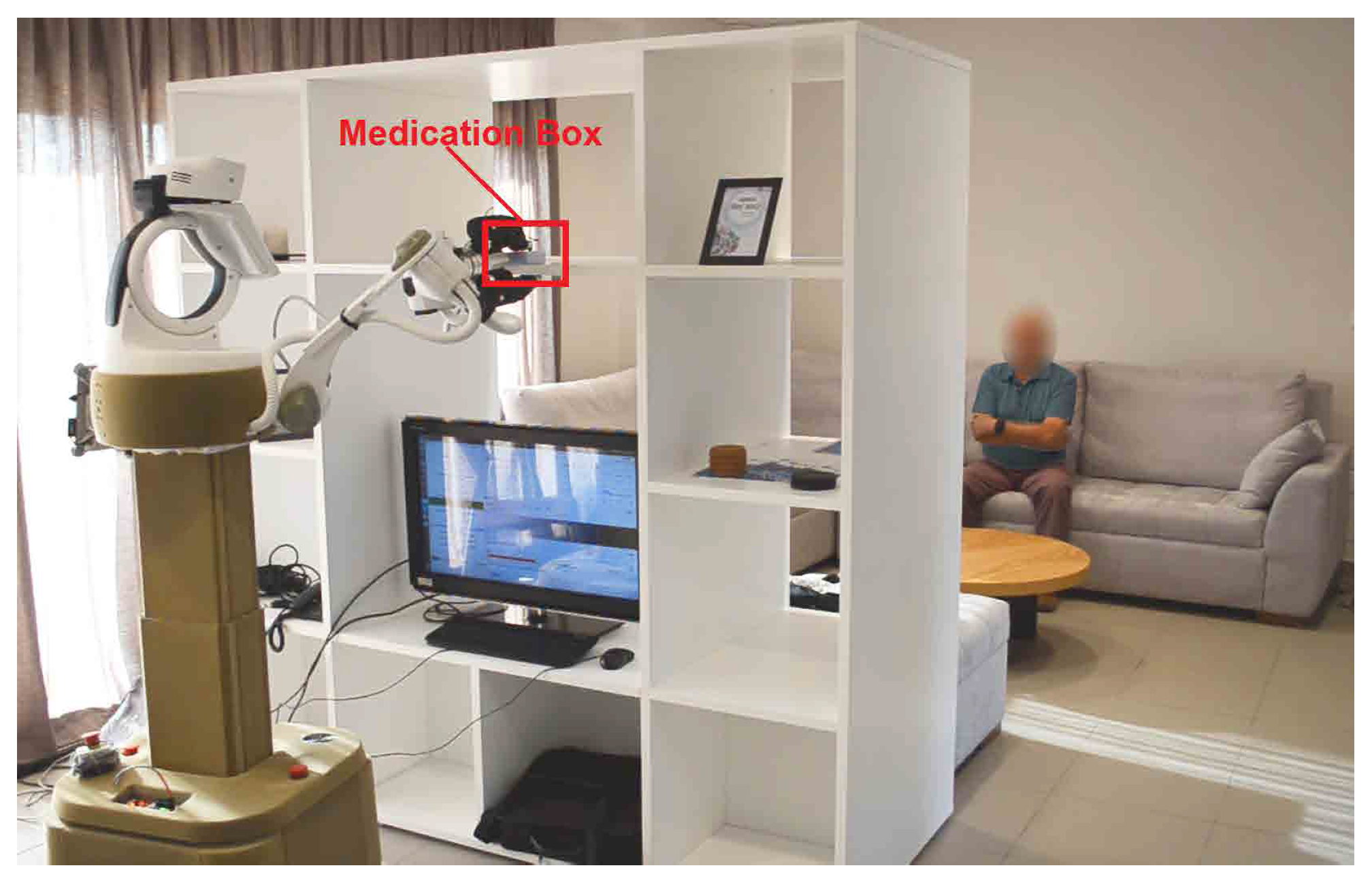

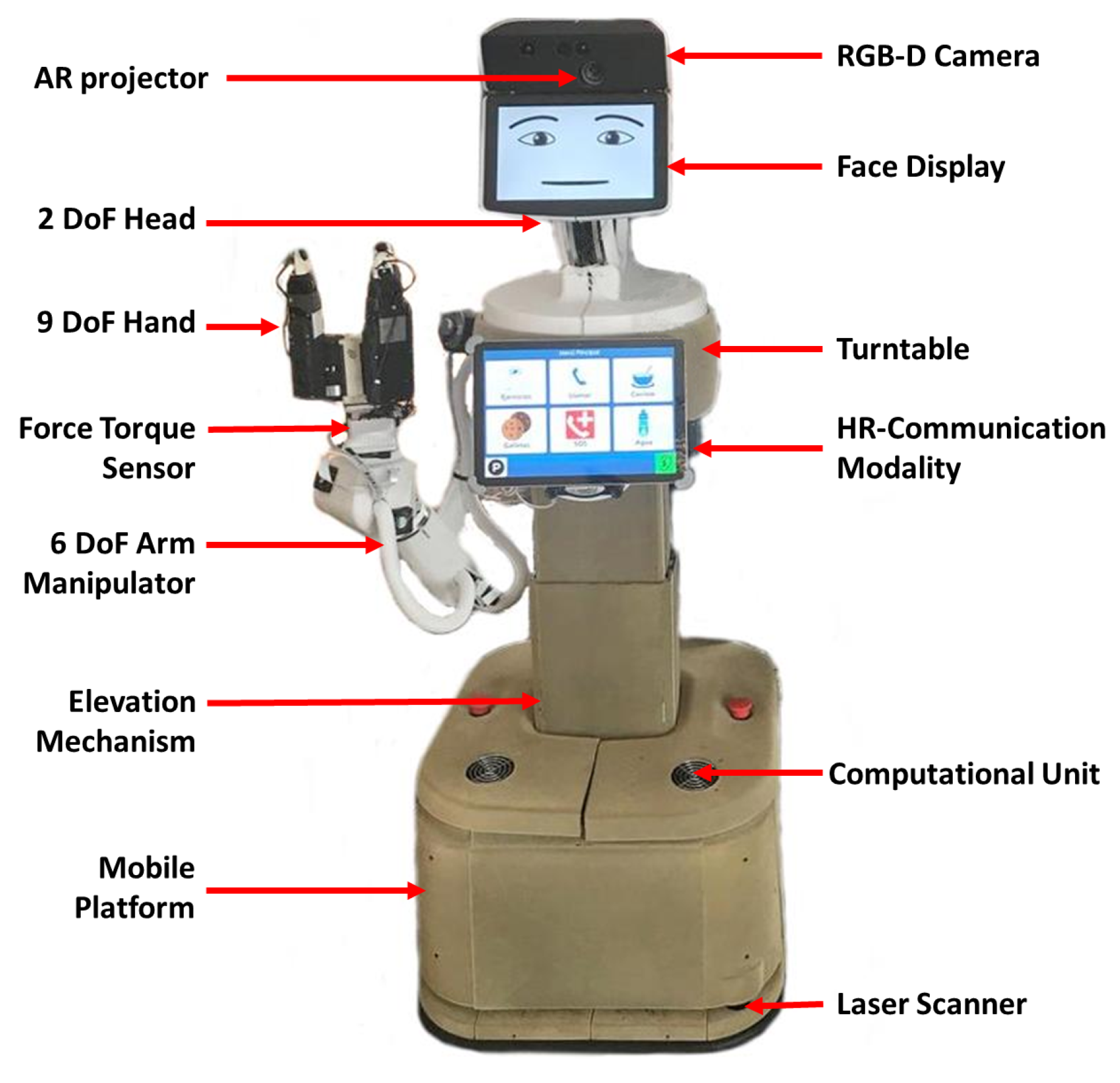

2.2. Hardware Specifications and Setup

3. Robot Perception Skills

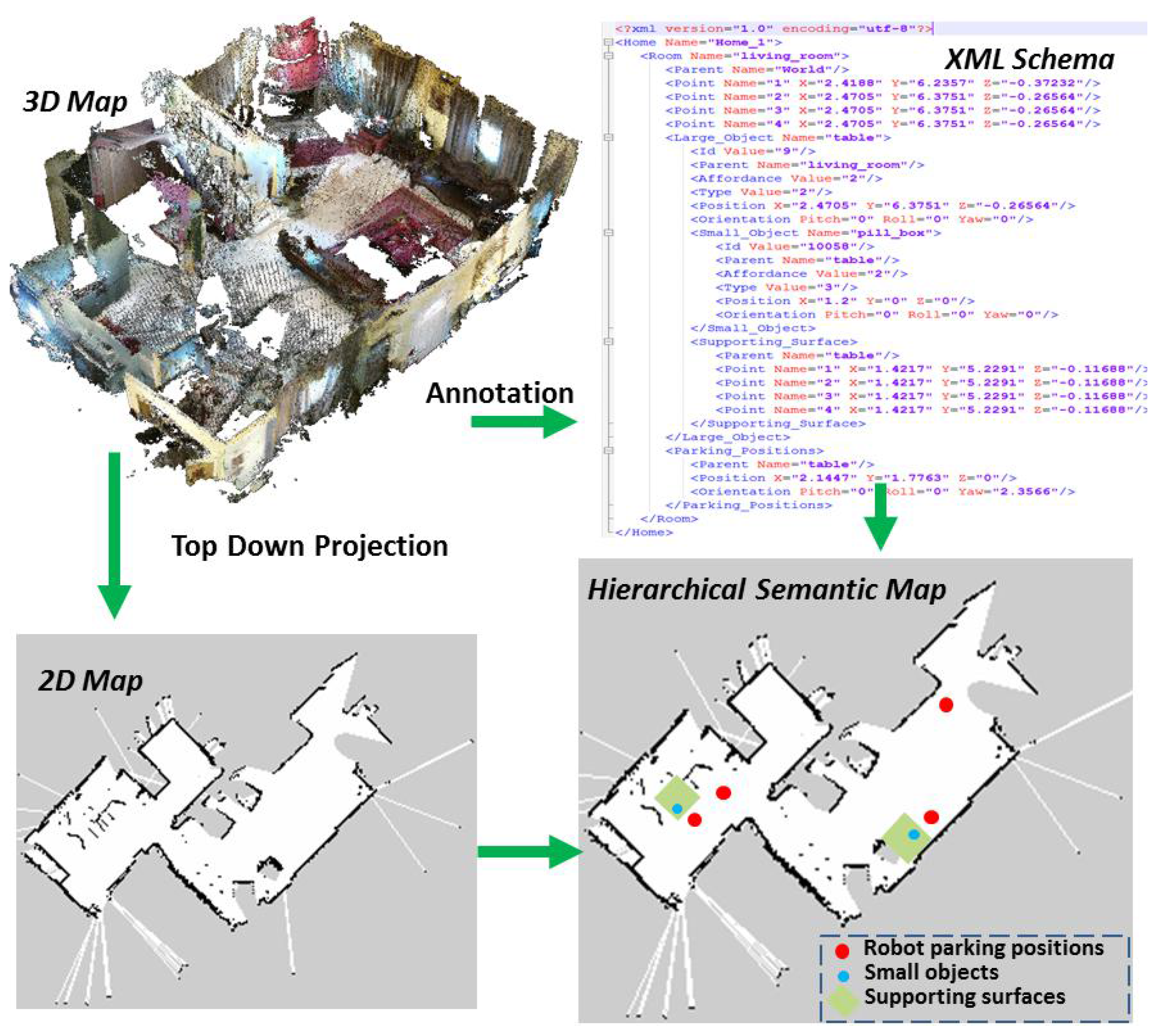

3.1. Hierarchical Semantic Map

3.2. Object Detection and Monitoring

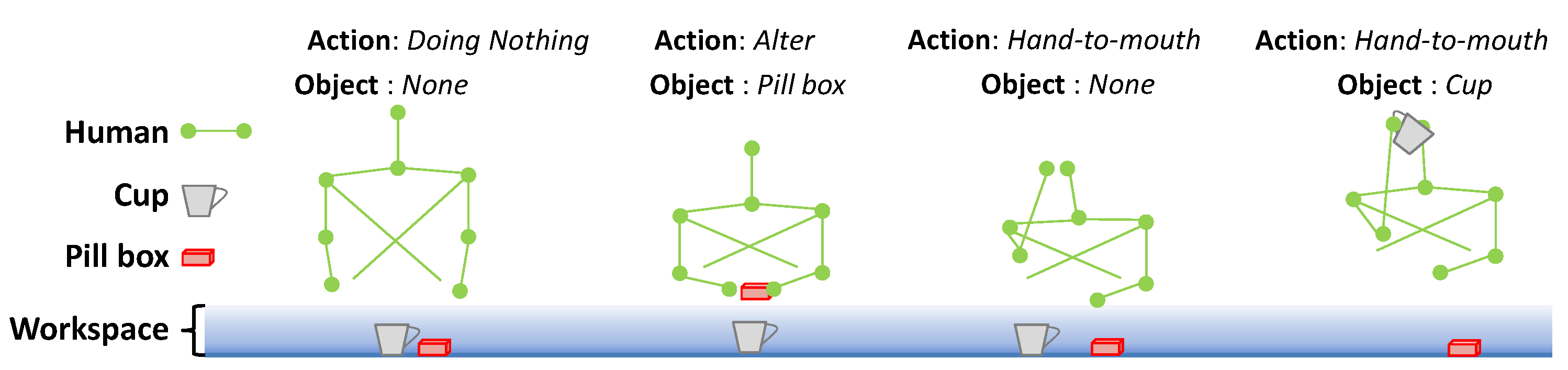

3.3. Human Understanding in the Scene

4. Robot Action Skills

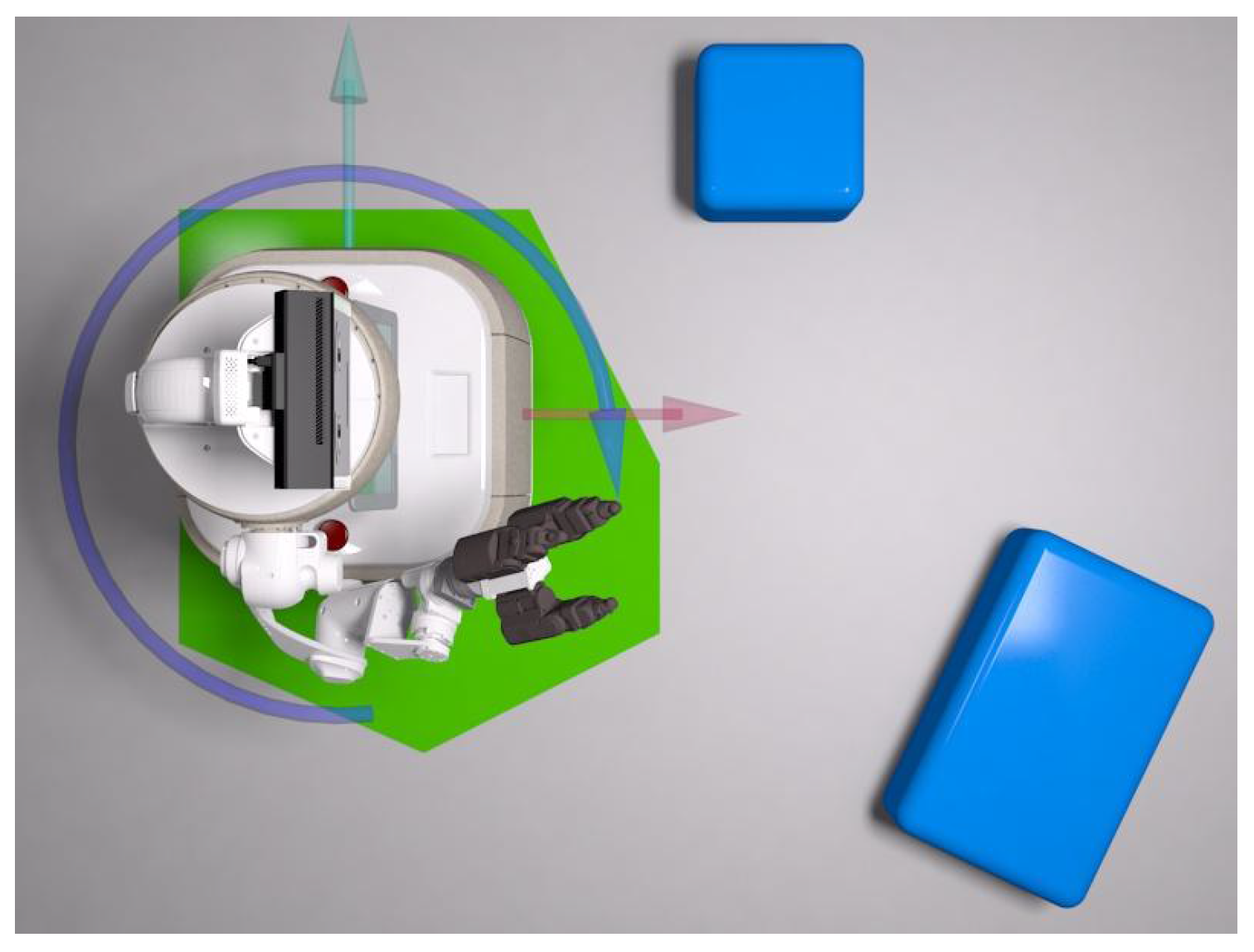

4.1. Navigation

4.1.1. Path Planning and Parking Position Selection

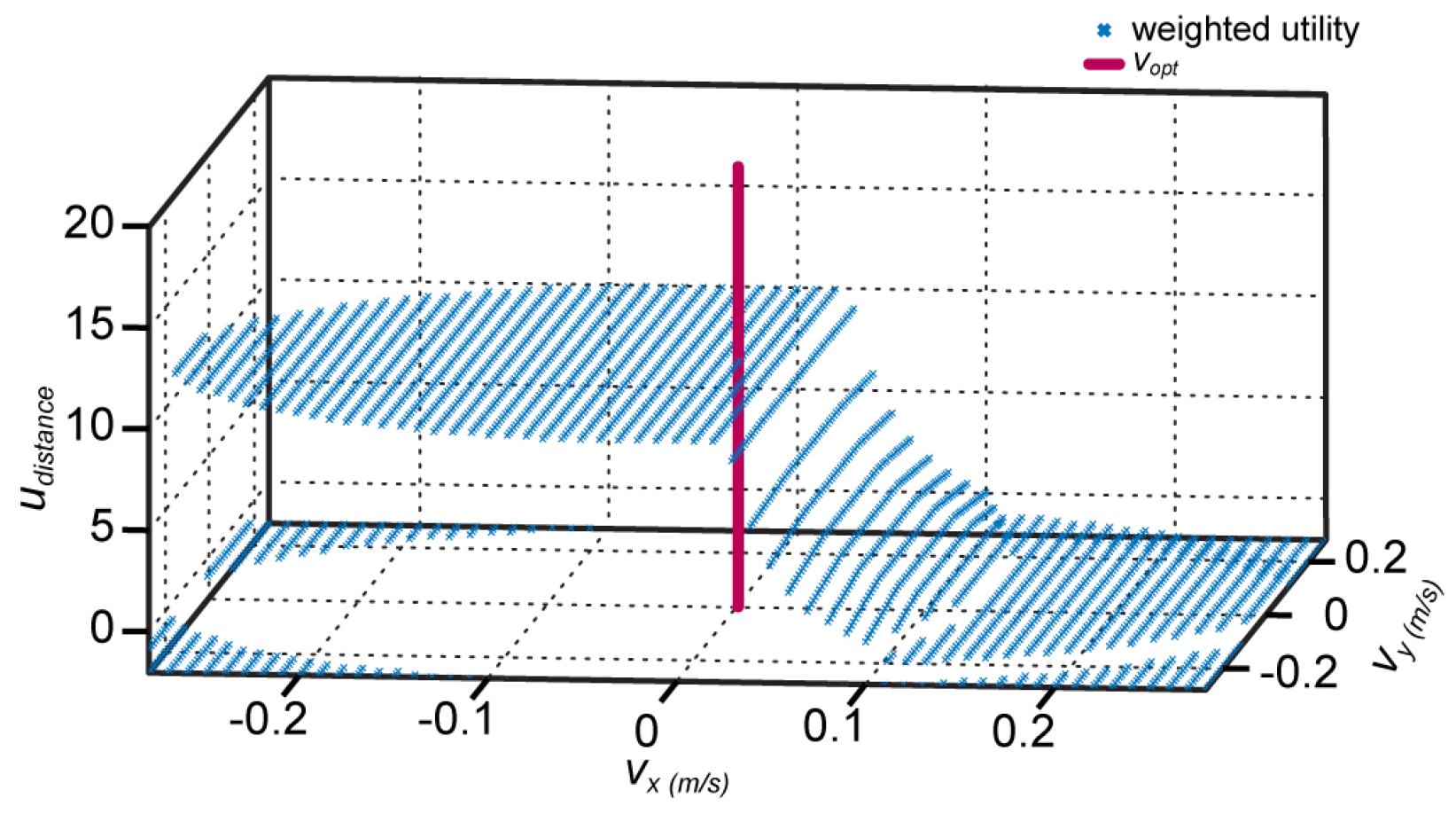

4.1.2. Local Planning

Algorithm 1: Pseudo code of the dynamic window procedure.

- heading: rewards goal-directed motions

- speed: rewards high linear velocities to enforce fast goal-directed motions whenever possible

- distance: rewards long predicted times until collision
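
The three terms listed above can be combined into a single score that is evaluated for every velocity pair inside the dynamic window. The following is a minimal sketch of that idea, not the authors' implementation: the unicycle motion model, the `Limits` values, the weights, the robot radius, and the function names `trajectory_score` and `best_command` are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Limits:
    # All limits are assumed values, chosen only for this example.
    v_max: float = 1.0   # maximum linear velocity [m/s]
    w_max: float = 1.0   # maximum angular velocity [rad/s]
    a_v: float = 1.0     # maximum linear acceleration [m/s^2]
    a_w: float = 2.0     # maximum angular acceleration [rad/s^2]


def linspace(lo: float, hi: float, n: int) -> List[float]:
    """Return n evenly spaced samples in [lo, hi] (n >= 2)."""
    step = (hi - lo) / (n - 1)
    return [lo + i * step for i in range(n)]


def trajectory_score(pose, v, w, goal, obstacles, lim,
                     horizon=2.0, dt=0.1, radius=0.3,
                     weights=(1.0, 0.2, 0.5)) -> float:
    """Weighted sum of the heading, speed and distance terms for one (v, w)."""
    x, y, theta = pose
    t_collision = horizon  # stays at the horizon if no collision is predicted
    for k in range(1, int(round(horizon / dt)) + 1):
        theta += w * dt                      # forward-simulate a unicycle model
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        if any(math.hypot(x - ox, y - oy) <= radius for ox, oy in obstacles):
            t_collision = k * dt
            break
    # heading: 1 when the last simulated pose faces the goal, 0 when it faces away
    bearing = math.atan2(goal[1] - y, goal[0] - x)
    err = abs(math.atan2(math.sin(bearing - theta), math.cos(bearing - theta)))
    heading = 1.0 - err / math.pi
    speed = v / lim.v_max                    # rewards high linear velocities
    distance = t_collision / horizon         # rewards long time until collision
    a, b, c = weights
    return a * heading + b * speed + c * distance


def best_command(pose, v0, w0, goal, obstacles, lim, dt=0.1, n=5):
    """Pick the best (v, w) among velocities reachable within one control step."""
    vs = linspace(max(0.0, v0 - lim.a_v * dt), min(lim.v_max, v0 + lim.a_v * dt), n)
    ws = linspace(max(-lim.w_max, w0 - lim.a_w * dt), min(lim.w_max, w0 + lim.a_w * dt), n)
    return max(((v, w) for v in vs for w in ws),
               key=lambda c: trajectory_score(pose, c[0], c[1], goal, obstacles, lim, dt=dt))
```

For example, `best_command((0.0, 0.0, 0.0), 0.5, 0.0, (5.0, 0.0), [], Limits())` selects the fastest straight-ahead command in the window, because the goal lies directly ahead and no obstacle shortens the predicted time until collision. A full implementation would additionally discard velocities from which the robot cannot stop before the nearest obstacle.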

4.2. Manipulation and Admittance Control

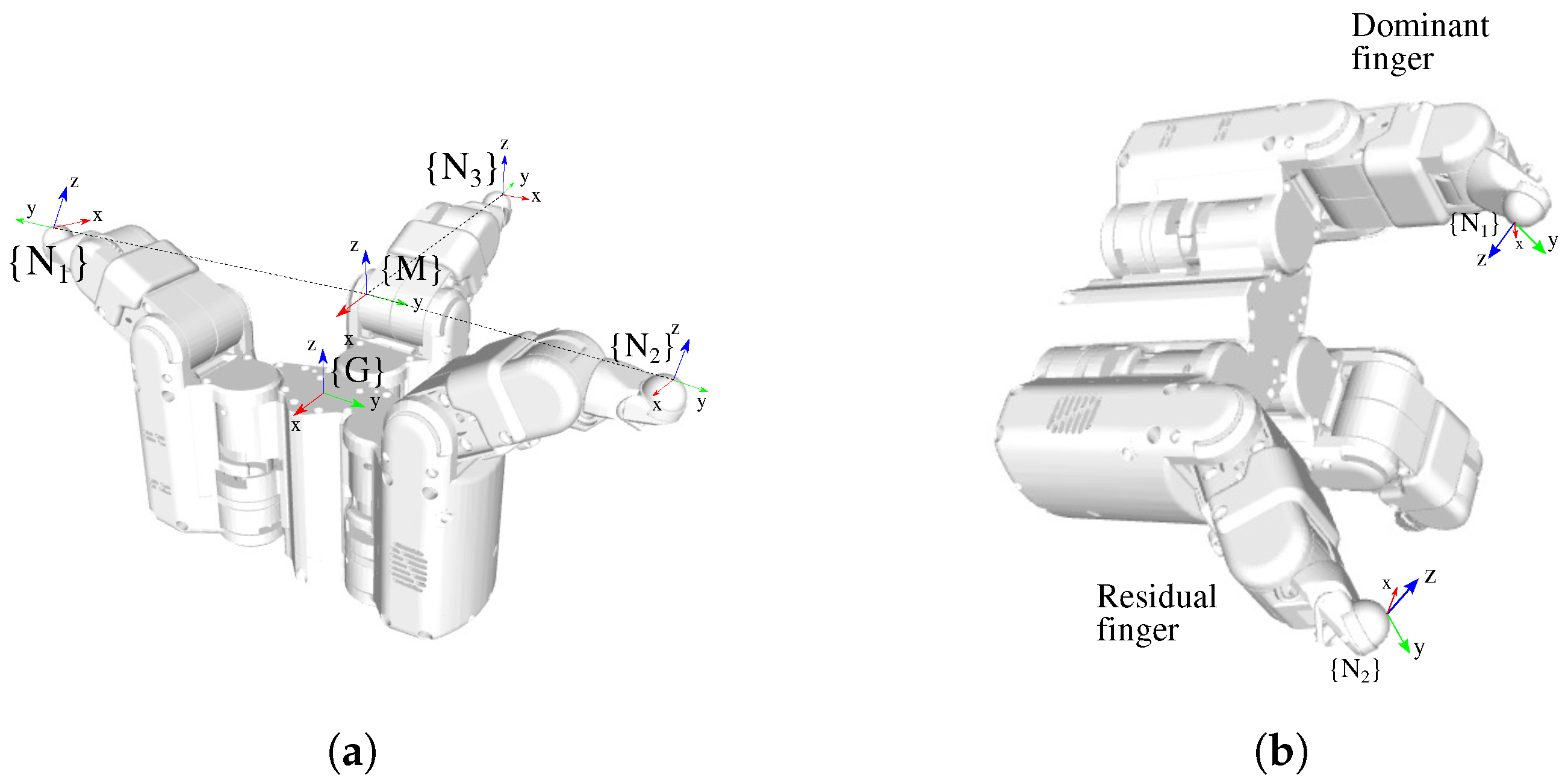

4.3. Grasping

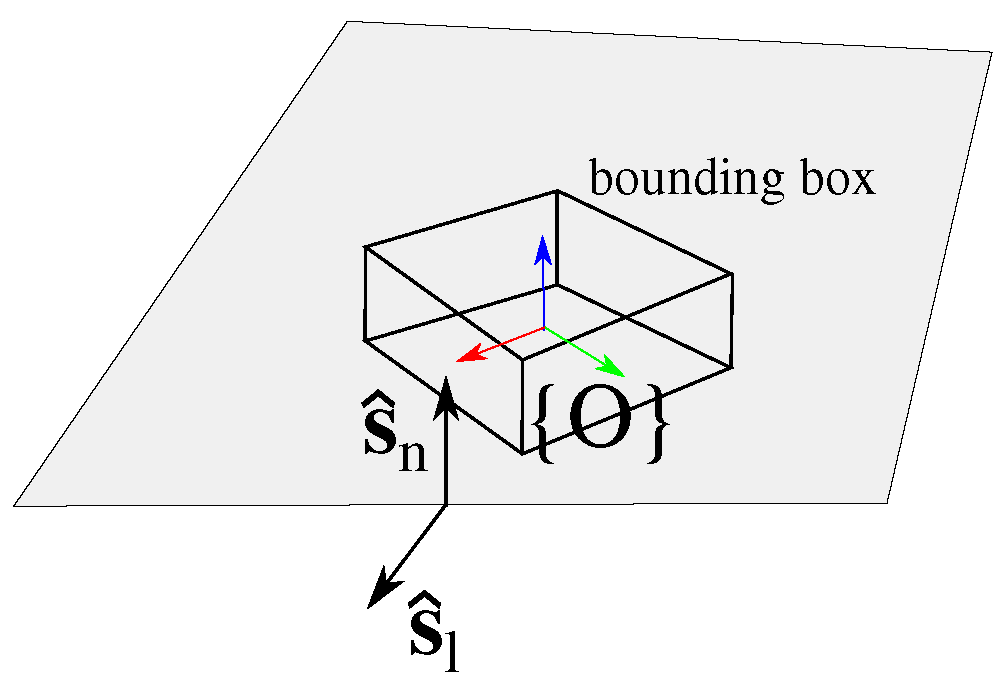

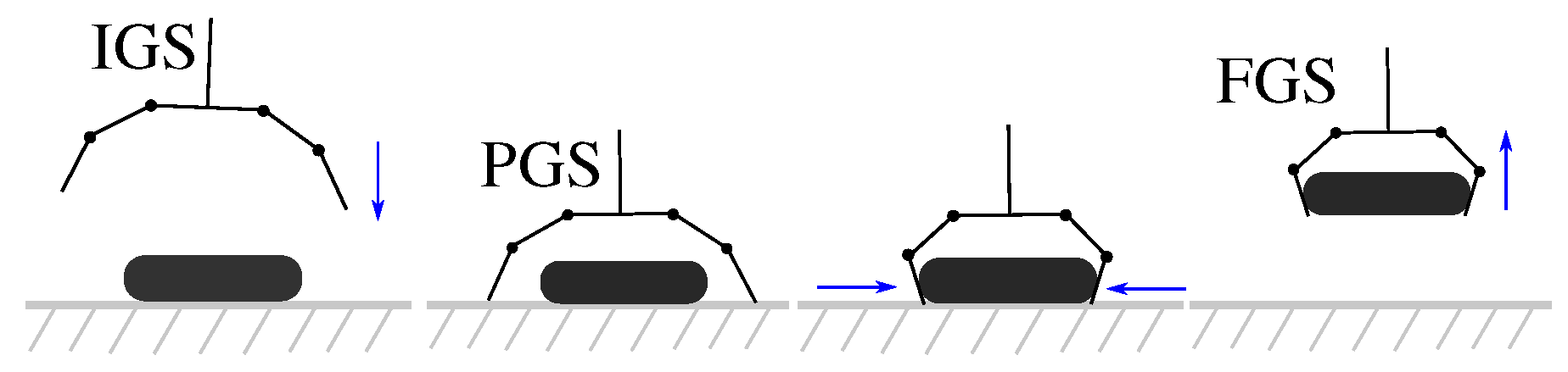

4.3.1. Grasping the “Pill Box” from a Table

4.3.2. Grasping the “Pill Box” from a High Shelf

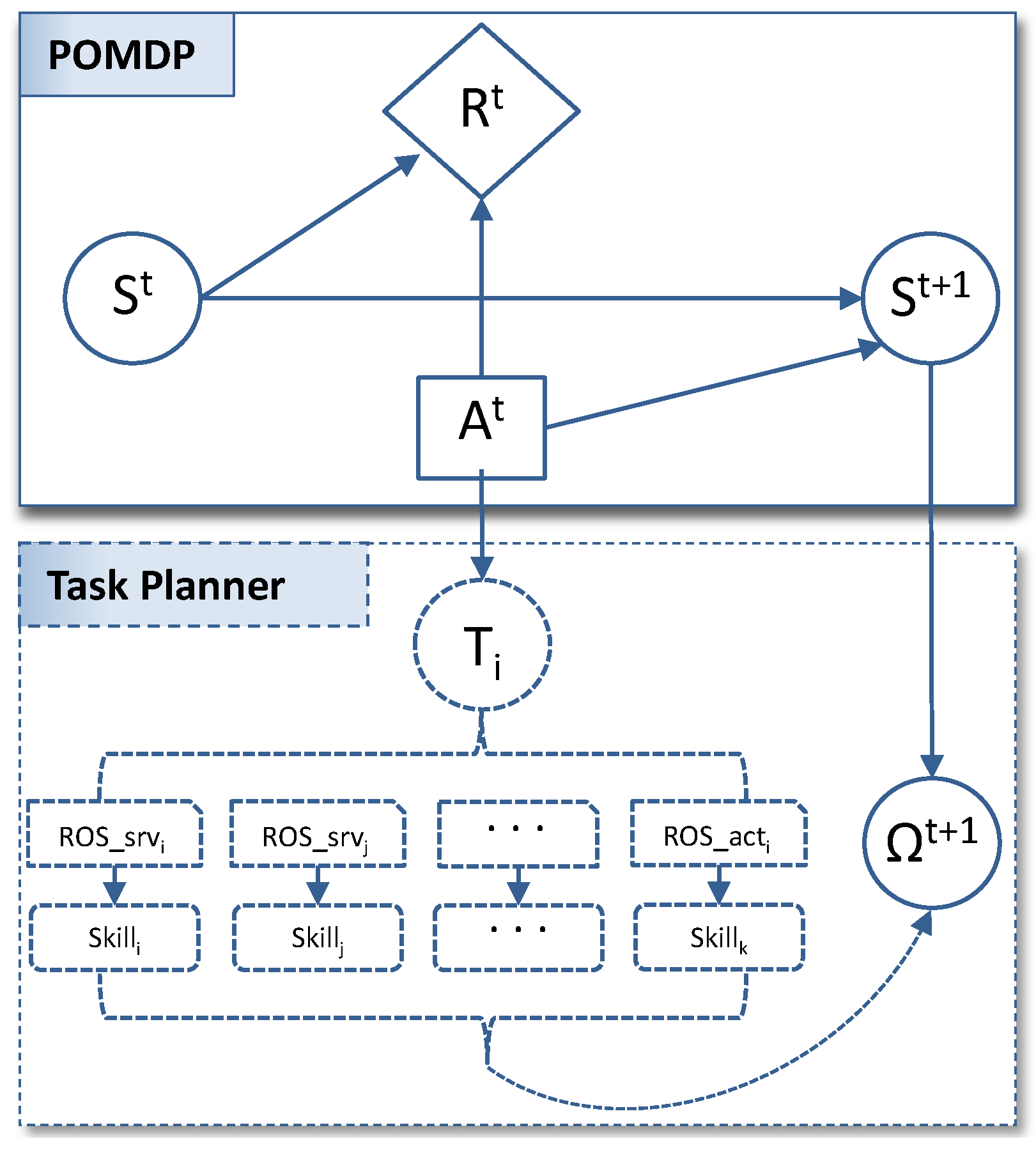

4.3.3. Slippage Detection and Reaction

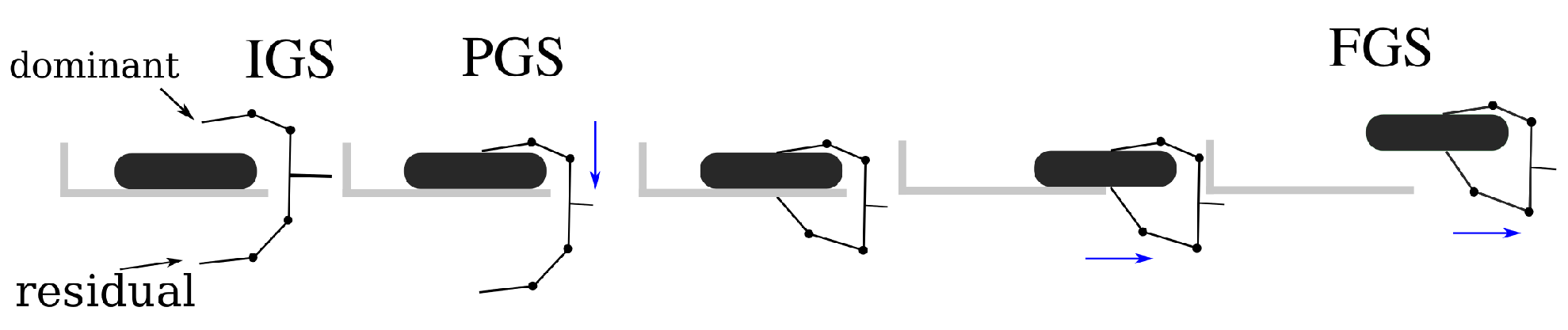

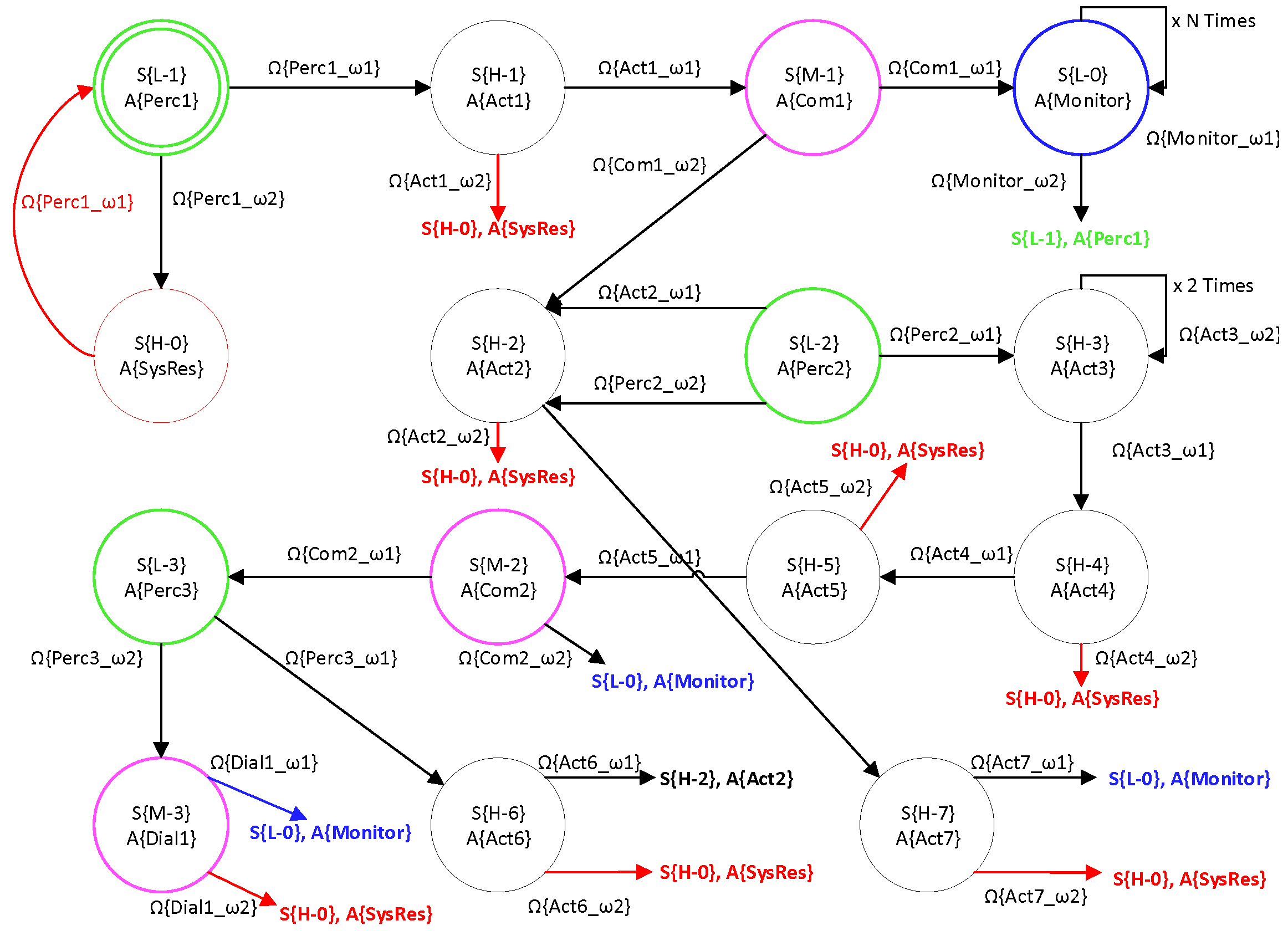

5. Robot Decision-Making and Task Planning

5.1. POMDP Decision-Making

5.2. Task Planner

6. Experimental Evaluation and Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| State S{Hi, Mi, Li} | Robot Action (Ai) | Observation |
|---|---|---|
| S{L-1} | A{Perc1}: Robot looks for the user | {Perc1_1}: Robot successfully detected the user; {Perc1_2}: Robot failed to detect the user |
| S{H-1} | A{Act1}: Robot navigates close to the user | {Act1_1}: Robot successfully navigated towards the user; {Act1_2}: Robot failed to navigate successfully |
| S{M-1} | A{Com1}: Robot reminds the user about the medication | {Com1_1}: User has already taken the medication; {Com1_2}: User has not taken the medication |
| S{H-2} | A{Act2}: Robot navigates to the shelf | {Act2_1}: Robot navigated and parked successfully with respect to the shelf; {Act2_2}: Robot failed to navigate and park with respect to the shelf |
| S{L-2} | A{Perc2}: Robot detects the pill box | {Perc2_1}: Robot detected the pill box and inferred a grasp pose; {Perc2_2}: Robot failed to detect the pill box or has not found a graspable pose |
| S{H-3} | A{Act3}: Robot grasps the pill box | {Act3_1}: Robot grasped the pill box successfully; {Act3_2}: Robot failed to grasp the pill box successfully |
| S{H-4} | A{Act4}: Robot navigates towards the table | {Act4_1}: Robot navigated towards the table successfully; {Act4_2}: Robot failed to navigate towards the table successfully |
| S{H-5} | A{Act5}: Robot releases the pill box onto the table | {Act5_1}: Robot released the pill box onto the table correctly; {Act5_2}: Robot failed to release the pill box onto the table |
| S{M-2} | A{Com2}: Robot asks the user to take the medication | {Com2_1}: The user agreed to take the medication after the robot's notification; {Com2_2}: The user stated that no further assistance is needed and dismissed the robot |
| S{L-3} | A{Perc3}: Robot recognizes the medication adherence activity | {Perc3_1}: Robot detected the medication intake activity; {Perc3_2}: Robot failed to detect the medication intake activity |
| S{M-3} | A{Dial1}: Robot informs an external person through the communication channel | {Dial1_1}: Robot established communication with a relative and reported the situation; {Dial1_2}: Robot failed to establish external communication |
| S{H-6} | A{Act6}: Robot grasps the pill box from the table | {Act6_1}: Robot grasped the pill box from the table successfully; {Act6_2}: Robot failed to grasp the pill box from the table |
| S{H-7} | A{Act7}: Robot releases the pill box at the shelf | {Act7_1}: Robot released the pill box at the shelf successfully; {Act7_2}: Robot failed to release the pill box at the shelf |
| S{L-0} | A{Monitor}: Robot navigates to parking position and performs monitoring | {Monitor_1}: Robot monitors the user and nothing triggers the medication intake scenario; {Monitor_2}: The medication intake scenario has been triggered |
| S{H-0} | A{SysRes}: Task re-initialized due to internal error | {SysRes_1}: Robot parses the ROS diagnostics and, if possible, re-initializes the task; {SysRes_2}: Robot parses the ROS diagnostics and, if re-initialization is not possible, returns to S{L-0} |
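
The states, actions and observations in the table above are the ingredients of a POMDP, in which the robot maintains a belief over states and revises it after every action and observation. The sketch below shows the standard Bayesian belief update on a two-state fragment of the table. The transition and observation probabilities in `T` and `O` are hypothetical numbers chosen only for illustration, and the function name `update_belief` is an assumption; none of them come from the paper.

```python
from typing import Dict, Tuple

Belief = Dict[str, float]
Model = Dict[Tuple[str, str], Dict[str, float]]

# Hypothetical transition model P(s' | s, a) for the "look for the user" step.
T: Model = {
    ("S{L-1}", "A{Perc1}"): {"S{H-1}": 0.8, "S{L-1}": 0.2},
    ("S{H-1}", "A{Perc1}"): {"S{H-1}": 1.0},
}

# Hypothetical observation model P(o | s', a).
O: Model = {
    ("S{H-1}", "A{Perc1}"): {"Perc1_1": 0.9, "Perc1_2": 0.1},
    ("S{L-1}", "A{Perc1}"): {"Perc1_1": 0.1, "Perc1_2": 0.9},
}


def update_belief(belief: Belief, action: str, observation: str,
                  T: Model, O: Model) -> Belief:
    """Return b'(s') proportional to P(o | s', a) * sum_s P(s' | s, a) * b(s)."""
    predicted: Belief = {}
    for s, p in belief.items():                      # prediction step
        for s_next, p_t in T.get((s, action), {}).items():
            predicted[s_next] = predicted.get(s_next, 0.0) + p * p_t
    weighted = {                                     # correction step
        s_next: p * O.get((s_next, action), {}).get(observation, 0.0)
        for s_next, p in predicted.items()
    }
    total = sum(weighted.values())
    if total == 0.0:
        raise ValueError("observation has zero probability under the model")
    return {s_next: p / total for s_next, p in weighted.items()}


belief = update_belief({"S{L-1}": 1.0}, "A{Perc1}", "Perc1_1", T, O)
```

With these assumed numbers, observing `Perc1_1` (user detected) moves almost all of the probability mass to `S{H-1}`, while observing `Perc1_2` would keep more of it on `S{L-1}`.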

| No. Participants | No. Total Repetitions | No. Successful Executions | Overall Performance (%) |
|---|---|---|---|
| 12 | 84 | 68 | 80.95 |

| Localization Error | Action Recognition Error | Planning Error | Communication Error | Other Error |
|---|---|---|---|---|
| 4/16 | 3/16 | 4/16 | 3/16 | 2/16 |
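
The figures in the two tables above are mutually consistent: 84 repetitions with 68 successes leave 16 failed executions, which is the denominator used in the error breakdown. A short sketch of the arithmetic follows; the function names and dictionary keys are illustrative only.

```python
from typing import Dict


def success_rate(successes: int, total: int) -> float:
    """Percentage of successful executions."""
    return 100.0 * successes / total


def error_shares(errors: Dict[str, int]) -> Dict[str, float]:
    """Percentage of failed executions attributed to each error type."""
    failed = sum(errors.values())
    return {name: 100.0 * count / failed for name, count in errors.items()}


repetitions, successes = 84, 68
errors = {
    "localization": 4,
    "action recognition": 3,
    "planning": 4,
    "communication": 3,
    "other": 2,
}

# The error counts must add up to the number of failed executions (84 - 68 = 16).
assert sum(errors.values()) == repetitions - successes

print(f"{success_rate(successes, repetitions):.2f}")  # 80.95
print(error_shares(errors))
```

Localization and planning errors each account for 25% of the failures, action recognition and communication errors for 18.75% each, and the remaining 12.5% fall under other errors.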

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kostavelis, I.; Kargakos, A.; Skartados, E.; Peleka, G.; Giakoumis, D.; Sarantopoulos, I.; Agriomallos, I.; Doulgeri, Z.; Endo, S.; Stüber, H.; Janjoš, F.; Hirche, S.; Tzovaras, D. Robotic Assistance in Medication Intake: A Complete Pipeline. Appl. Sci. 2022, 12, 1379. https://doi.org/10.3390/app12031379